Limitations of the Use of Mobile Devices and Smart Environments for the Monitoring of Ageing People

Ivan Miguel Pires (1,2,3), Nuno M. Garcia (1,3,4), Nuno Pombo (1,3,4) and Francisco Flórez-Revuelta (5)

(1) Instituto de Telecomunicações, Universidade da Beira Interior, Covilhã, Portugal

(2) Altranportugal, Lisbon, Portugal

(3) ALLab - Assisted Living Computing and Telecommunications Laboratory, Computer Science Department, Universidade da Beira Interior, Covilhã, Portugal

(4) Universidade Lusófona de Humanidades e Tecnologias, Lisbon, Portugal

(5) Department of Computer Technology, Universidad de Alicante, Spain

Keywords: Activities of Daily Living, Elderly People, Recognition, Mobile Devices, Smart Environments.

Abstract: The monitoring of the daily life of ageing people is a research topic widely explored by several authors, who have presented different points of view. Research studies on this topic have used mobile devices and smart environments, combining several sensors and techniques to recognize Activities of Daily Living (ADL), which may be used to monitor the lifestyle and improve the quality of life of ageing people. However, mobile devices have several limitations, including limited processing power and battery life. This paper presents different points of view about these limitations, combined with a study of the use of a mobile application for the recognition of activities. We conclude that lightweight methods with local processing on mobile devices are the best approach for recognizing the ADL of ageing people, as they provide fast feedback about their lifestyle. Finally, for the recognition of activities in a restricted space with a constant network connection, smart environments are more reliable than mobile devices.

1 INTRODUCTION

Over the last few years, research on recognizing activities using the sensors available in technological devices has grown because of new techniques and new devices. According to He, Goodkind, and Kowal (2016), the number of older people in the world is growing, with 8.5% of the world's population aged 65 or older, and technology can promote independent living and reduce solitude and isolation, among other benefits (Age, 2010). The promotion of independent living may include recognizing the activities of the elderly with artificial intelligence methods in day-to-day care systems, health-related systems, social assistance systems and telecare systems, among others (Age, 2010). Due to the increase in the number of elderly people, the development of care systems is of great importance for improving their quality of life (Jin, Simpkins, Ji, Leis, and Stambler, 2015), and such care systems fall within the development of Ambient Assisted Living (AAL) and Enhanced Living Environments (ELE) systems (Botia, Villa, and Palma, 2012; Dobre, Mavromoustakis, Garcia, Goleva, and Mastorakis, 2016; Garcia, 2016; Garcia, Rodrigues, Elias, and Dias, 2014; Goleva et al., 2017; Huch et al., 2012; Siegel, Hochgatterer, and Dorner, 2014).

However, these systems may have limitations in recognizing the activities performed, including the positioning of the sensors in smart environments, environmental noise, the implementation of the developed methods, the large number of activities performed by older people, the limited resources of mobile devices, and other software and hardware limitations. This paper explores these limitations and presents possible solutions for each of them, concluding with an analysis of some of these limitations in a real environment.

This section introduces the topic of the paper. The remainder of the paper is organized as follows. Section 2 presents the background on recent developments in this topic. Section 3 presents our view of the topic and the validation of the problem. Section 4 presents the discussion and the results obtained. Finally, Section 5 presents the conclusions of this study.

Pires, I., Garcia, N., Pombo, N. and Flórez-Revuelta, F. Limitations of the Use of Mobile Devices and Smart Environments for the Monitoring of Ageing People. In Proceedings of the 4th International Conference on Information and Communication Technologies for Ageing Well and e-Health (ICT4AWE 2018), pages 269-275. DOI: 10.5220/0006817802690275. ISBN: 978-989-758-299-8.

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

2 BACKGROUND

The activities performed by ageing people may be monitored in controlled or uncontrolled environments. The controlled environments considered in this study are smart environments (e.g., smart homes) where ageing people live, equipped with several sensors for the recognition of activities. The uncontrolled environments considered in this study are the different environments of real life, where mobile devices are used for data acquisition and the subsequent recognition of activities.

Smart environments used for recognizing the activities of ageing people may be equipped with cameras, temperature sensors, altimeters, accelerometers, contact switches, pressure sensors and Radio-frequency identification (RFID) sensors. Activity recognition in these environments is performed with server-side processing methods. Botia et al. (2012) used cameras to recognize the presence of ageing people in the home office, kitchen, living room and outdoor spaces, as well as several activities, including making coffee, walking on stairs and working on a computer.

Chernbumroong, Cang, Atkins, and Yu (2013) used altimeter, accelerometer and temperature sensors to recognize brushing teeth, feeding, dressing, sleeping, walking, lying, ironing, walking on stairs, sweeping, washing dishes and watching TV. Kasteren and Krose (2007) implemented a method that used pressure sensors, accelerometers and contact switches to recognize bathing, eating and toileting activities.
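To illustrate how binary sensors such as contact switches and pressure mats can feed a Bayesian classifier, the sketch below implements a static naive Bayes model over binary sensor states (each sensor is either triggered or not during a time slice), with Laplace smoothing. It is a simplified illustration of the general idea, not a reproduction of the model of Kasteren and Krose (2007); the sensor names and training examples are hypothetical.

```python
import math
from collections import defaultdict


class BinarySensorNaiveBayes:
    """Naive Bayes over binary sensor states (1 = triggered), with Laplace smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha                                      # smoothing strength
        self.class_counts = defaultdict(int)                    # label -> examples seen
        self.on_counts = defaultdict(lambda: defaultdict(int))  # label -> sensor -> times on
        self.sensors = set()

    def fit(self, examples):
        """examples: iterable of (states, label), where states maps sensor -> 0/1."""
        for states, label in examples:
            self.class_counts[label] += 1
            for sensor, value in states.items():
                self.sensors.add(sensor)
                self.on_counts[label][sensor] += int(bool(value))
        return self

    def predict(self, states):
        """Return the label with the highest log posterior; missing sensors count as 0."""
        total = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.class_counts.items():
            score = math.log(n / total)  # log prior
            for sensor in self.sensors:
                p_on = (self.on_counts[label][sensor] + self.alpha) / (n + 2 * self.alpha)
                score += math.log(p_on if states.get(sensor, 0) else 1.0 - p_on)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical training data: sensor states observed in one time slice per example.
train = [
    ({"bathroom_door": 1, "toilet_flush": 0, "chair_pressure": 0}, "bathing"),
    ({"bathroom_door": 1, "toilet_flush": 0, "chair_pressure": 0}, "bathing"),
    ({"bathroom_door": 1, "toilet_flush": 1, "chair_pressure": 0}, "toileting"),
    ({"bathroom_door": 1, "toilet_flush": 1, "chair_pressure": 0}, "toileting"),
    ({"bathroom_door": 0, "toilet_flush": 0, "chair_pressure": 1}, "eating"),
    ({"bathroom_door": 0, "toilet_flush": 0, "chair_pressure": 1}, "eating"),
]
model = BinarySensorNaiveBayes().fit(train)
print(model.predict({"bathroom_door": 1, "toilet_flush": 1}))  # toileting
```

The independence assumption between sensors keeps training and inference cheap, which suits server-side processing of many sensors, but it ignores the temporal order of sensor events.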

Accelerometers and RFID sensors may be used to recognize pushing a shopping cart, sitting, standing, walking, phone calling, taking a picture, running, lying, wiping, switching on skin conditioner, hand shaking, reading, jumping and hair brushing (Hong, Kim, Ahn, and Kim, 2008).

Other studies use only one type of sensor available in smart environments. First, some authors used only accelerometers to recognize making coffee, brushing teeth and boiling water (Liming, Hoey, Nugent, Cook, and Zhiwen, 2012). Second, other authors used only RFID sensors to recognize phone calling, preparing a tea, preparing a meal, making soft-boiled eggs, using the bathroom, taking out the trash, setting the table, eating, drinking, preparing orange juice, cleaning the table, cleaning a toilet, cleaning the kitchen, making coffee, sleeping, getting a drink, getting a snack, using a dishwasher, using a microwave, taking a shower, adjusting the thermostat, using a washing machine, using the toilet, vacuuming, leaving the house, reading, receiving a guest, boiling a pot of tea, doing laundry, boiling water, brushing hair, shaving face, washing hands, watching TV and brushing teeth (Cheng, Tsai, Liao, and Byeon, 2009; Danny, Matthai, and Tanzeem, 2005; Hoque and Stankovic, 2012). Finally, other authors used ZigBee wireless sensors to recognize watching TV, preparing a meal and preparing a tea (Suryadevara, Quazi, and Mukhopadhyay, 2012).

Regarding data acquired from mobile devices, activity recognition methods may run locally on the device, as a mobile application, or on a server, which requires a constant network connection. Another challenge in using mobile devices for activity recognition is the position of the device, which affects the reliability of the recognition methods. In addition, the methods should be adapted to the hardware constraints of these devices, such as limited processing, battery and storage capabilities.

The most commonly used sensor for activity recognition is the accelerometer embedded in mobile devices, which enables the recognition of several activities, including rowing, walking, walking on stairs, jumping, jogging, running, lying, standing, getting up, cycling, sitting, falling and travelling with different means of transport (Büber and Guvensan, 2014; Cardoso, Madureira, and Pereira, 2016; Ivascu, Cincar, Dinis, and Negru, 2017; Khalifa, Lan, Hassan, Seneviratne, and Das, 2017; Tsai, Yang, Shih, and Kung, 2015).
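Accelerometer-based recognition usually starts by summarizing a short window of samples into features before classification. The sketch below computes a few simple time-domain features of the acceleration magnitude; this feature set is a common choice but is our assumption, not the feature set of any of the cited studies, and the 50 Hz sampling rate in the example is hypothetical.

```python
import math
from statistics import mean, pstdev


def magnitude(sample):
    """Euclidean norm of one (x, y, z) accelerometer sample, in m/s^2."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def time_domain_features(window):
    """Simple time-domain features over one window of (x, y, z) samples.

    The magnitude does not depend on how the device is rotated, which may
    partly reduce the effect of device orientation on the features.
    """
    if not window:
        raise ValueError("empty window")
    mags = [magnitude(s) for s in window]
    return {
        "mean": mean(mags),
        "std": pstdev(mags),
        "min": min(mags),
        "max": max(mags),
        "range": max(mags) - min(mags),
    }


# A phone lying still measures only gravity (about 9.81 m/s^2) on one axis.
still = [(0.0, 0.0, 9.81)] * 250  # e.g. 5 s at a hypothetical 50 Hz rate
print(time_domain_features(still))
```

Such features are cheap to compute on the device, which is consistent with the lightweight local processing discussed later in this paper.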

Combining data from the accelerometer and the Global Positioning System (GPS) receiver embedded in mobile devices can increase both the number of recognized activities and the recognition accuracy, covering sitting, standing, walking, lying, walking on stairs, cycling, falling, jogging, running, playing football and rowing (Ermes, Parkka, Mantyjarvi, and Korhonen, 2008; Fortino, Gravina, and Russo, 2015; Zainudin, Sulaiman, Mustapha, and Perumal, 2015).
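One simple way to combine the two sensors is feature-level fusion: a speed estimate derived from consecutive GPS fixes is appended to the accelerometer features, which may help separate activities with similar motion patterns but different speeds. The sketch below is our illustration, not the method of the cited studies; it assumes fixes given as hypothetical (time in seconds, latitude, longitude) tuples and uses the haversine formula with a mean Earth radius.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def mean_speed_mps(fixes):
    """Average speed over (t_seconds, lat, lon) fixes sorted by time."""
    if len(fixes) < 2:
        return 0.0
    dist = sum(haversine_m(a[1], a[2], b[1], b[2]) for a, b in zip(fixes, fixes[1:]))
    elapsed = fixes[-1][0] - fixes[0][0]
    return dist / elapsed if elapsed > 0 else 0.0


def fuse(accel_features, fixes):
    """Feature-level fusion: append the GPS speed to the accelerometer features."""
    return list(accel_features) + [mean_speed_mps(fixes)]


accel = [9.9, 1.2]  # e.g. mean and std of the acceleration magnitude
fixes = [(0, 40.2800, -7.5000), (5, 40.2801, -7.5000)]  # hypothetical fixes, 5 s apart
print(round(fuse(accel, fixes)[-1], 2))  # 2.22 (m/s)
```

GPS fixes are often unavailable indoors, so a fused model should tolerate a missing speed feature.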

HSP 2018 - Special Session on Healthy and Secure People


Table 1. Relation between the activities recognized in smart environments and with mobile devices.

| Activities | Smart environments | Mobile devices |
|---|---|---|
| Adjusting the thermostat; brushing hair; cleaning a toilet; cleaning the kitchen; doing laundry; getting a drink; getting a snack; receiving a guest; setting the table; shaving face; taking a shower; taking out the trash; using a dishwasher; using a microwave; using a washing machine; vacuuming; washing hands; preparing orange juice; making soft-boiled eggs | RFID sensors | - |
| Bathing | Pressure sensors; accelerometers; contact switches; RFID sensors | - |
| Boiling water; hair brushing; hand shaking; phone calling; pushing a shopping cart; switching on skin conditioner; taking a picture; wiping | Accelerometer; RFID sensors | - |
| Brushing teeth; dressing; feeding; washing dishes; ironing; sweeping | Altimeter; accelerometer; temperature sensor | - |
| Cleaning the table | RFID sensors | Accelerometer; microphone |
| Cooking; driving; shopping; using a smartphone | - | Accelerometer; microphone |
| Cycling | - | Accelerometer; microphone; GPS receiver |
| Drinking; leaving the house | RFID sensors; cameras | Accelerometer; microphone; GPS receiver |
| Eating; toileting | Pressure sensors; accelerometers; contact switches; RFID sensors; cameras | Accelerometer; microphone; GPS receiver |
| Falling; jogging; playing football; rowing | - | Accelerometer; GPS receiver |
| Getting up; travelling | - | Accelerometer |
| Jumping | Accelerometer; RFID sensors | Accelerometer |
| Lying | Altimeter; accelerometer; temperature sensor | Accelerometer; GPS receiver |
| Making coffee | Cameras; accelerometer; RFID sensors | - |
| Preparing a meal; preparing a tea | RFID sensors; ZigBee sensors | - |
| Reading | Accelerometer; RFID sensors | Accelerometer; microphone |
| Running; sitting; standing | Accelerometer; RFID sensors | Accelerometer; GPS receiver |
| Sleeping | Altimeter; accelerometer; temperature sensor; RFID sensors; cameras | Accelerometer; microphone; GPS receiver |
| Walking | Altimeter; accelerometer; temperature sensor; RFID sensors | Accelerometer; GPS receiver |
| Walking on stairs | Cameras; altimeter; accelerometer; temperature sensor | Accelerometer; GPS receiver |
| Watching TV | Altimeter; accelerometer; temperature sensor; RFID sensors; ZigBee sensors | Accelerometer; microphone |
| Using a computer | Cameras | Accelerometer; microphone |


Combining data from the accelerometer and the microphone embedded in mobile devices allows the recognition of cycling, cleaning the table, shopping, toileting, cooking, watching TV, eating, working on a computer, reading, using a smartphone, driving, sleeping and nursing activities (Inoue, Ueda, Nohara, and Nakashima, 2015; Nishida, Kitaoka, and Takeda, 2014).

Finally, combining the sensors available in smart environments, i.e., cameras and RFID sensors, with the sensors available in mobile devices, i.e., accelerometer, GPS receiver and microphone, may increase the recognition accuracy of activities such as leaving the house, toileting, sleeping, eating and drinking (Ordonez, de Toledo, and Sanchis, 2013).

Table 1 summarizes the activities recognized with the sensors presented in this section, as examples of activities that may be recognized in smart environments and/or with mobile devices.

According to several studies (Alam, Reaz, and Ali, 2012; Arif, El Emary, and Koutsouris, 2014; Jakkula, 2007; Montoro-Manrique, Haya-Coll, and Schnelle-Walka; Poslad, 2011), the main problems of using smart environments for monitoring the activities of ageing people are:

- The positioning of the sensors in the smart environment may affect the correct identification of objects, environments and/or people;
- The sensors should cooperate with each other and, if any of them fails, the system will return incorrect results;
- These environments require a constant connection to a server and, when sensors fail, the activities are not recognized or the results are invalid;
- The number of available sensors, which varies between environments, may affect the recognition of the activities;
- Due to the use of distributed systems, the security and resilience of the data are important for the recognition of the activities.
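The second and third problems suggest that a smart environment should know which sensors are alive before trusting a recognition result. A minimal sketch, assuming each sensor periodically reports to the server and that silence longer than a timeout (60 s here, an arbitrary value) means failure: an activity is only recognized when enough of the sensors it depends on are healthy, instead of silently returning an incorrect result. The sensor names and the `min_fraction` policy are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SensorStatus:
    sensor_id: str
    last_seen_s: float  # time of the last message from this sensor, in seconds


def split_by_health(statuses, now_s, timeout_s=60.0):
    """Split sensors into (healthy, failed) ids; silent for > timeout_s means failed."""
    healthy, failed = [], []
    for status in statuses:
        if now_s - status.last_seen_s <= timeout_s:
            healthy.append(status.sensor_id)
        else:
            failed.append(status.sensor_id)
    return healthy, failed


def can_recognise(required_ids, healthy_ids, min_fraction=1.0):
    """Only attempt recognition if enough of the required sensors are healthy."""
    if not required_ids:
        return False
    healthy = set(healthy_ids)
    ok = sum(1 for sid in required_ids if sid in healthy)
    return ok / len(required_ids) >= min_fraction


statuses = [SensorStatus("kitchen_rfid", 100.0), SensorStatus("bed_pressure", 10.0)]
healthy, failed = split_by_health(statuses, now_s=120.0)
print(healthy, failed)                                           # ['kitchen_rfid'] ['bed_pressure']
print(can_recognise(["kitchen_rfid", "bed_pressure"], healthy))  # False
```

Lowering `min_fraction` trades reliability for availability; the backup sensors discussed in Section 4 would raise the chance that enough sensors remain healthy.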

According to several studies (Arif et al., 2014; Bert, Giacometti, Gualano, and Siliquini, 2014; Choudhury et al., 2008; Montoro-Manrique et al.; Poslad, 2011; Santos et al., 2016), the main problems of using the sensors available in mobile devices for monitoring the activities of ageing people are:

- The use of multiple sources for data acquisition (i.e., smartphone and smartwatch sensors) allows the acquisition of more physical and physiological parameters, but it requires a constant Bluetooth connection between the devices;
- The use of the sensors and of Bluetooth and/or Wi-Fi connections increases the battery drain rate;
- Executing the data processing on the mobile devices may decrease their performance;
- Due to the limited resources of these devices, the accuracy of the sensors may not be constant during data acquisition;
- Users may not place their devices in the correct position during data acquisition;
- Some studies present methods that require a constant data connection for further processing of the acquired data;
- The number of sensors embedded in mobile devices, which varies between models, may affect the recognition of the activities;
- Due to the use of multiple devices, the security and resilience of the data are important for the recognition of the activities;
- Finally, ageing people commonly do not use these devices and need to become familiar with them.

3 METHODS AND MATERIALS

In our research on the limitations of mobile device and smart environment technologies, we identified several limitations in their regular use for recognizing the daily activities of ageing people, but these limitations may be reduced with lightweight methods. The limitations of mobile devices are mainly related to their low resources, whereas the limitations of smart environments are mainly related to the positioning of the sensors.

Using a mobile application that implements the framework described in (I. Pires, N. Garcia, N. Pombo, and F. Flórez-Revuelta, 2016; Pires, Garcia, and Flórez-Revuelta, 2015; I. M. Pires, N. M. Garcia, N. Pombo, and F. Flórez-Revuelta, 2016), we ran the application on four mobile devices (Sony Ericsson Xperia Neo, Sony Ericsson Xperia Live Walkman, BQ Aquarius 5.7 and Samsung Galaxy J3) to verify the restrictions on the use of such applications, focusing on the battery drain rate, the performance of the mobile devices while the application is running, and the adaptation of the application to the number of sensors available in each device. This mobile application captures 5 seconds of sensor data every 5 minutes and processes the acquired data with machine learning methods to recognize the activities performed. During these experiments, the mobile devices were continuously used for other tasks, including receiving and making calls and/or sending text messages, accessing the Internet and others.
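The acquisition schedule described above corresponds to a small duty cycle. The arithmetic is sketched below, assuming the sensors are idle outside the acquisition windows; the processing time of the application and the other tasks running on the device are not included.

```python
WINDOW_S = 5           # seconds of sensor data captured per acquisition
PERIOD_S = 5 * 60      # one acquisition every 5 minutes
DAY_S = 24 * 60 * 60   # seconds in a day


def duty_cycle(window_s, period_s):
    """Fraction of the time during which the sensors are being sampled."""
    return window_s / period_s


def windows_per_day(period_s):
    """Number of acquisition windows started in 24 hours."""
    return DAY_S // period_s


def sampled_seconds_per_day(window_s, period_s):
    """Total time per day spent acquiring sensor data."""
    return windows_per_day(period_s) * window_s


print(f"{duty_cycle(WINDOW_S, PERIOD_S):.2%}")           # 1.67%
print(windows_per_day(PERIOD_S))                         # 288
print(sampled_seconds_per_day(WINDOW_S, PERIOD_S) / 60)  # 24.0 (minutes)
```

Under this assumption, the sensors are sampled for about 24 minutes per day rather than continuously.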

The recognition of activities does not require constant data acquisition and processing, and a technique that enables and disables the acquisition and processing of sensor data over time may reduce the effects on battery consumption and processing capabilities. The effects of the number of available sensors can be avoided by building mobile applications whose methods are a function of the sensors available at the moment of data acquisition and processing. The only limitation that is difficult to control is the position of the mobile device relative to the user's body; however, it is possible to stop the data acquisition when the data appear to be inconsistent.
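The adaptation to the available sensors and the rejection of inconsistent data can be sketched as follows. This is our illustration under stated assumptions, not the implementation of the framework: the pipeline names, their priority order, the sensor sets (taken from the combinations listed in Section 2) and the plausibility bound on the acceleration magnitude are all hypothetical.

```python
# Hypothetical pipelines, ordered from the richest to the simplest sensor set.
PIPELINES = [
    (frozenset({"accelerometer", "microphone", "gps"}), "accel+audio+gps"),
    (frozenset({"accelerometer", "microphone"}), "accel+audio"),
    (frozenset({"accelerometer", "gps"}), "accel+gps"),
    (frozenset({"accelerometer"}), "accel"),
]


def choose_pipeline(available):
    """Return the first (richest) pipeline whose required sensors are all available."""
    for required, name in PIPELINES:
        if required <= set(available):
            return name
    return None  # no usable sensor set: skip this acquisition window


def window_is_consistent(magnitudes, expected_len, max_mps2=80.0):
    """Reject windows with dropped samples or implausible magnitudes (m/s^2).

    max_mps2 (about 8 g) is an assumed plausibility bound, not a device specification.
    """
    if len(magnitudes) < expected_len:
        return False
    return all(0.0 <= m <= max_mps2 for m in magnitudes)


print(choose_pipeline({"accelerometer", "gps", "magnetometer"}))  # accel+gps
print(window_is_consistent([9.8] * 250, expected_len=250))        # True
```

Checking the available sensors at each acquisition, rather than once at installation, also covers sensors that become temporarily unavailable, such as a GPS receiver without a fix.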

4 DISCUSSION AND RESULTS

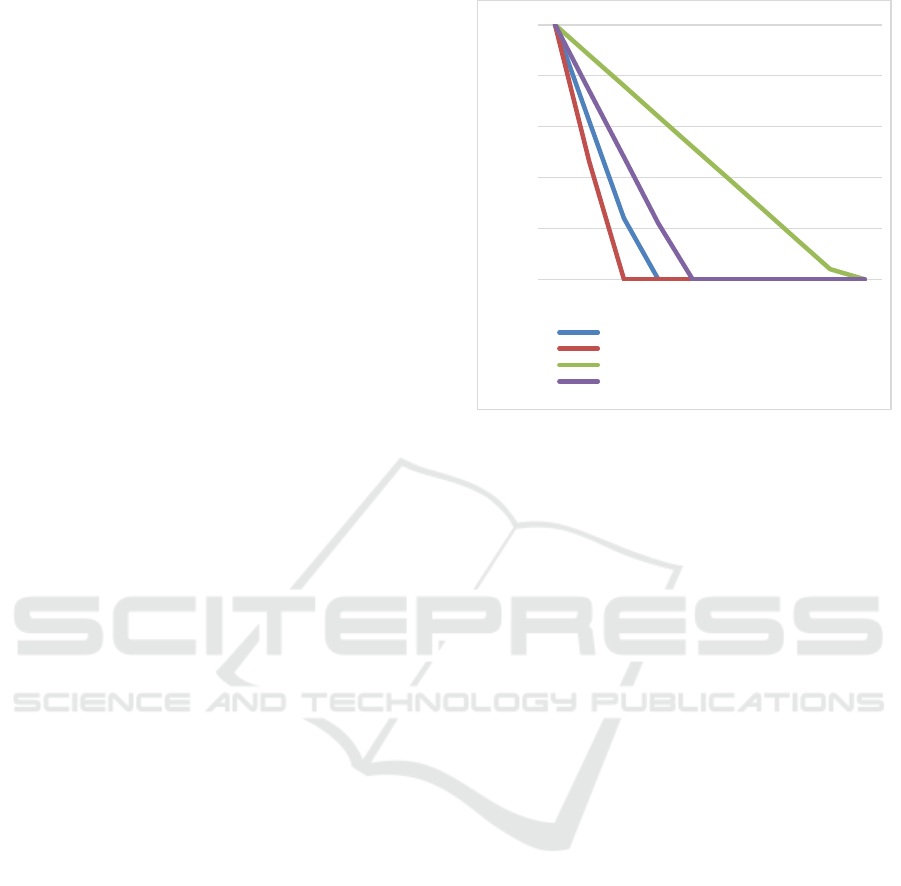

In the experiments performed, we verified that the performance of the mobile devices is only affected during data acquisition and processing. Battery consumption is generally affected, as shown in Figure 1, but even the shortest time from fully charged to empty battery was 16 hours, obtained with the Sony Ericsson Xperia Live Walkman (2011), an older device. The best performance and longest battery life, approximately 68 hours, were achieved with the BQ Aquarius 5.7 (2013), which is more recent than the Sony Ericsson Xperia Live Walkman (2011).
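The two extremes reported above can be turned into average discharge rates. The sketch assumes a full discharge from 100% to 0% and averages over the whole run, so it hides any non-linearity in the curves of Figure 1 and includes the consumption of the other tasks running on the devices; the 16-hour daily usage interval between overnight recharges is also an assumption.

```python
def mean_drain_rate(hours_full_to_empty, capacity_pct=100.0):
    """Average discharge rate, in percentage points per hour, over a full discharge."""
    return capacity_pct / hours_full_to_empty


def covers(hours_full_to_empty, hours_between_charges):
    """True if one full charge lasts the whole interval between recharges."""
    return hours_full_to_empty >= hours_between_charges


AWAKE_HOURS = 16  # assumed daily usage between two overnight recharges

for device, hours in [("Sony Ericsson Xperia Live Walkman", 16), ("BQ Aquarius 5.7", 68)]:
    print(f"{device}: {mean_drain_rate(hours):.2f} %/h, "
          f"lasts a {AWAKE_HOURS} h day: {covers(hours, AWAKE_HOURS)}")
```

The worst case thus corresponds to about 6.25% per hour (100% / 16 h) and the best case to about 1.47% per hour (100% / 68 h).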

As verified, our study confirms the findings that the acquisition and processing of sensor data affect battery life and processing capabilities, but the minimum battery life we observed was 16 hours. Thus, mobile devices may be used for the recognition of activities, because these devices are currently expected to be recharged daily. Different implementations of the methods can reduce this impact, and the methods implemented in mobile devices should be lightweight. Server-side processing and the use of multiple devices for data acquisition may cause many connectivity issues, as they need a constant connection to the Internet or between devices. Regarding mobile devices, the most stable solution consists of local processing with methods that require few resources. Finally, regarding smart environments, the implementation of backup systems and of more sensors in strategic placements may increase the reliability of these systems. However, systems that use network connections should implement methods to minimize security and privacy problems.

Figure 1. Battery consumption using the mobile application for the recognition of activities on the Sony Ericsson Xperia Neo, Sony Ericsson Xperia Live Walkman, BQ Aquarius 5.7 and Samsung Galaxy J3. The horizontal axis represents the time between fully charged and empty battery (h, from 0 to 68). The vertical axis represents the level of battery charge (%, from 0 to 100).

5 CONCLUSIONS

Currently, ageing people increasingly use technological equipment to maintain contact with other people, and this equipment may also be used to monitor them, promoting their well-being and independent living.

This paper confirms that these technologies have several restrictions. In smart environments, the main problems are related to connectivity issues and the positioning of the sensors. In mobile devices, the problems are related to their low resources, the placement of the device and connectivity issues.

We performed experiments with different devices for the recognition of activities using a mobile application with local processing methods, verifying that the battery drains at different rates, lasting between 16 and 68 hours. The performance is affected, but the impact is reduced by acquiring a small window of sensor data at each defined time interval.

Technology can promote the independent living of ageing people by helping in emergency situations, monitoring their lifestyle and improving their quality of life.

ACKNOWLEDGEMENTS

This work was supported by FCT project UID/EEA/50008/2013.

The authors would also like to acknowledge the contribution of the COST Action IC1303 – AAPELE – Architectures, Algorithms and Protocols for Enhanced Living Environments.

REFERENCES

Age, U. (2010). Technology and older people evidence review. Age UK, London.

Alam, M. R., Reaz, M. B. I., and Ali, M. A. M. (2012). A Review of Smart Homes—Past, Present, and Future. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews), 42(6), 1190-1203. doi:10.1109/tsmcc.2012.2189204

Arif, M. J., El Emary, I. M., and Koutsouris, D. D. (2014). A review on the technologies and services used in the self-management of health and independent living of elderly. Technol Health Care, 22(5), 677-687. doi:10.3233/THC-140851

Bert, F., Giacometti, M., Gualano, M. R., and Siliquini, R. (2014). Smartphones and health promotion: a review of the evidence. J Med Syst, 38(1), 9995. doi:10.1007/s10916-013-9995-7

Botia, J. A., Villa, A., and Palma, J. (2012). Ambient Assisted Living system for in-home monitoring of healthy independent elders. Expert Systems with Applications, 39(9), 8136-8148. doi:10.1016/j.eswa.2012.01.153

Büber, E., and Guvensan, A. M. (2014, 21-24 April 2014). Discriminative time-domain features for activity recognition on a mobile phone. Paper presented at the 2014 IEEE Ninth International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP).

Cardoso, N., Madureira, J., and Pereira, N. (2016). Smartphone-based Transport Mode Detection for Elderly Care. 2016 IEEE 18th International Conference on E-Health Networking, Applications and Services (Healthcom), 261-266. doi:10.1109/HealthCom.2016.7749465

Cheng, B.-C., Tsai, Y.-A., Liao, G.-T., and Byeon, E.-S. (2009). HMM machine learning and inference for Activities of Daily Living recognition. The Journal of Supercomputing, 54(1), 29-42. doi:10.1007/s11227-009-0335-0

Chernbumroong, S., Cang, S., Atkins, A., and Yu, H. (2013). Elderly activities recognition and classification for applications in assisted living. Expert Systems with Applications, 40(5), 1662-1674. doi:10.1016/j.eswa.2012.09.004

Choudhury, T., Borriello, G., Consolvo, S., Haehnel, D., Harrison, B., Hemingway, B., . . . Wyatt, D. (2008). The Mobile Sensing Platform: An Embedded Activity Recognition System. IEEE Pervasive Computing, 7(2), 32-41. doi:10.1109/mprv.2008.39

Danny, W., Matthai, P., and Tanzeem, C. (2005). Unsupervised activity recognition using automatically mined common sense. In Proceedings of the 20th National Conference on Artificial Intelligence - Volume 1 (pp. 21-27). Pittsburgh, Pennsylvania: AAAI Press. ISBN 1-57735-236-x.

Dobre, C., Mavromoustakis, C. X., Garcia, N., Goleva, R. I., and Mastorakis, G. (2016). Ambient Assisted Living and Enhanced Living Environments: Principles, Technologies and Control. Butterworth-Heinemann.

Ermes, M., Parkka, J., Mantyjarvi, J., and Korhonen, I. (2008). Detection of Daily Activities and Sports With Wearable Sensors in Controlled and Uncontrolled Conditions. Trans. Info. Tech. Biomed., 12(1), 20-26. doi:10.1109/titb.2007.899496

Fortino, G., Gravina, R., and Russo, W. (2015, 6-8 May 2015). Activity-aaService: Cloud-assisted, BSN-based system for physical activity monitoring. Paper presented at the 2015 IEEE 19th International Conference on Computer Supported Cooperative Work in Design (CSCWD).

Garcia, N. M. (2016). A Roadmap to the Design of a Personal Digital Life Coach. In ICT Innovations 2015. Springer.

Garcia, N. M., Rodrigues, J. J. P. C., Elias, D. C., and Dias, M. S. (2014). Ambient Assisted Living. Taylor and Francis.

Goleva, R. I., Garcia, N. M., Mavromoustakis, C. X., Dobre, C., Mastorakis, G., Stainov, R., . . . Trajkovik, V. (2017). AAL and ELE Platform Architecture.

He, W., Goodkind, D., and Kowal, P. R. (2016). An aging world: 2015. United States Census Bureau.

Hong, Y.-J., Kim, I.-J., Ahn, S. C., and Kim, H.-G. (2008). Activity Recognition Using Wearable Sensors for Elder Care. Paper presented at the Future Generation Communication and Networking, 2008. FGCN '08. Second International Conference on, Hainan Island.

Hoque, E., and Stankovic, J. (2012, 21-24 May 2012). AALO: Activity recognition in smart homes using Active Learning in the presence of Overlapped activities. Paper presented at the Pervasive Computing Technologies for Healthcare (PervasiveHealth), 2012 6th International Conference on.

Huch, M., Kameas, A., Maitland, J., McCullagh, P. J., Roberts, J., Sixsmith, A., and Augusto, R. W. J. C. (2012). Handbook of Ambient Assisted Living: Technology for Healthcare, Rehabilitation and Well-being - Volume 11 of Ambient Intelligence and Smart Environments. IOS Press.

Inoue, S., Ueda, N., Nohara, Y., and Nakashima, N. (2015). Mobile activity recognition for a whole day: recognizing real nursing activities with big dataset. Paper presented at the Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Osaka, Japan.

Ivascu, T., Cincar, K., Dinis, A., and Negru, V. (2017, 22-24 June 2017). Activities of daily living and falls recognition and classification from the wearable sensors data. Paper presented at the 2017 E-Health and Bioengineering Conference (EHB).

Jakkula, V. (2007). Predictive Data Mining to Learn Health Vitals of a Resident in a Smart Home. Paper presented at the Seventh IEEE International Conference on Data Mining - Workshops.

Jin, K., Simpkins, J. W., Ji, X., Leis, M., and Stambler, I. (2015). The Critical Need to Promote Research of Aging and Aging-related Diseases to Improve Health and Longevity of the Elderly Population. Aging Dis, 6(1), 1-5. doi:10.14336/AD.2014.1210

Kasteren, T. v., and Krose, B. (2007, 24-25 Sept. 2007). Bayesian activity recognition in residence for elders. Paper presented at the Intelligent Environments, 2007. IE 07. 3rd IET International Conference on.

Khalifa, S., Lan, G., Hassan, M., Seneviratne, A., and Das, S. K. (2017). HARKE: Human Activity Recognition from Kinetic Energy Harvesting Data in Wearable Devices. IEEE Transactions on Mobile Computing, PP(99), 1-1. doi:10.1109/TMC.2017.2761744

Liming, C., Hoey, J., Nugent, C. D., Cook, D. J., and Zhiwen, Y. (2012). Sensor-Based Activity Recognition. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews), 42(6), 790-808. doi:10.1109/tsmcc.2012.2198883

Montoro-Manrique, G., Haya-Coll, P., and Schnelle-Walka, D. Internet of Things: From RFID Systems to Smart Applications. Upgrade: European Journal for the Informatics Professional, XII(1).

Nishida, M., Kitaoka, N., and Takeda, K. (2014, 9-12 Dec. 2014). Development and preliminary analysis of sensor signal database of continuous daily living activity over the long term. Paper presented at the Signal and Information Processing Association Annual Summit and Conference (APSIPA), 2014 Asia-Pacific.

Ordonez, F. J., de Toledo, P., and Sanchis, A. (2013). Activity recognition using hybrid generative/discriminative models on home environments using binary sensors. Sensors (Basel), 13(5), 5460-5477. doi:10.3390/s130505460

Pires, I., Garcia, N., Pombo, N., and Flórez-Revuelta, F. (2016). From Data Acquisition to Data Fusion: A Comprehensive Review and a Roadmap for the Identification of Activities of Daily Living Using Mobile Devices. Sensors, 16(2), 184.

Pires, I. M., Garcia, N. M., and Flórez-Revuelta, F. (2015). Multi-sensor data fusion techniques for the identification of activities of daily living using mobile devices. Paper presented at the Proceedings of the ECMLPKDD 2015 Doctoral Consortium, European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases, Porto, Portugal.

Pires, I. M., Garcia, N. M., Pombo, N., and Flórez-Revuelta, F. (2016). Identification of Activities of Daily Living Using Sensors Available in off-the-shelf Mobile Devices: Research and Hypothesis. Paper presented at the Ambient Intelligence-Software and Applications–7th International Symposium on Ambient Intelligence (ISAmI 2016).

Poslad, S. (2011). Ubiquitous computing: smart devices, environments and interactions. John Wiley and Sons.

Santos, J., Rodrigues, J. J. P. C., Silva, B. M. C., Casal, J., Saleem, K., and Denisov, V. (2016). An IoT-based mobile gateway for intelligent personal assistants on mobile health environments. Journal of Network and Computer Applications, 71, 194-204. doi:10.1016/j.jnca.2016.03.014

Siegel, C., Hochgatterer, A., and Dorner, T. E. (2014). Contributions of ambient assisted living for health and quality of life in the elderly and care services--a qualitative analysis from the experts' perspective of care service professionals. BMC Geriatr, 14, 112. doi:10.1186/1471-2318-14-112

Suryadevara, N. K., Quazi, M. T., and Mukhopadhyay, S. C. (2012, 26-29 June 2012). Intelligent Sensing Systems for Measuring Wellness Indices of the Daily Activities for the Elderly. Paper presented at the Intelligent Environments (IE), 2012 8th International Conference on.

Tsai, P. Y., Yang, Y. C., Shih, Y. J., and Kung, H. Y. (2015, 6-9 Sept. 2015). Gesture-aware fall detection system: Design and implementation. Paper presented at the 2015 IEEE 5th International Conference on Consumer Electronics - Berlin (ICCE-Berlin).

Zainudin, M. N. S., Sulaiman, M. N., Mustapha, N., and Perumal, T. (2015). Activity Recognition based on Accelerometer Sensor using Combinational Classifiers. 2015 IEEE Conference on Open Systems (ICOS), 68-73. doi:10.1109/icos.2015.7377280
