Breast Cancer Detection using Deep Convolutional Neural Network

Hana Mechria¹, Mohamed Salah Gouider¹ and Khaled Hassine²

¹ SMART Laboratory, University of Tunis, Tunis, Tunisia

² IResCoMath, Faculty of Science Gabes, University of Gabes, Gabes, Tunisia

Keywords: Breast Cancer, Deep Learning, Deep Convolutional Neural Network, AlexNet, Mammography, Digital Database for Screening Mammography, Stacked AutoEncoders.

Abstract: The Deep Convolutional Neural Network (DCNN) is a popular and powerful deep learning algorithm for image classification. However, DCNN applications in medical imaging remain few, because large medical image datasets are not always available. In this paper, we present two DCNN architectures, a shallow DCNN and a pre-trained DCNN model (AlexNet), to detect breast cancer from 8000 mammographic images extracted from the Digital Database for Screening Mammography. To validate the performance of DCNN in breast cancer detection on a large dataset, we carried out a comparative study with a second deep learning algorithm, Stacked AutoEncoders (SAE), in terms of accuracy, sensitivity and specificity. The DCNN method achieved the best results, with 89.23% accuracy, 91.11% sensitivity and 87.75% specificity.

1 INTRODUCTION

According to World Health Organization (WHO) reports, breast cancer is the most common cancer among women. It is also the leading cause of cancer death among women: 570000 women died from breast cancer in 2015 (World Health Organisation). Early detection of breast cancer is needed for effective diagnosis and treatment. Currently, mammography is the most widely used imaging modality for the detection and diagnosis of breast tumors. A large number of mammograms are performed every day, which makes image analysis difficult, because a radiologist cannot analyze hundreds of images with the same accuracy in minimal time.

Therefore, the development of Computer-Aided Diagnosis (CAD) systems (Tang et al., 2009), which can assist medical personnel with the early detection of cancer, is a crucial alternative.

Deep learning (Hinton et al., 2006; Ranzato et al., 2006) is a new area of machine learning. In recent years, deep learning has attracted attention in various research areas such as computer vision, image classification and big data analysis. It has achieved record results in many challenges, such as the ImageNet Large Scale Visual Recognition Competition (ILSVRC) (Russakovsky et al., 2015).

The DCNN (Lecun et al., 1998) is one of the most successful deep learning techniques. It has achieved outstanding performance on challenging tasks such as image classification (Rawat and Wang, 2017), visual object recognition (Radovic et al., 2017) and segmentation (Long et al., 2017).

In this study, we aim to use DCNN to detect breast cancer from a large number of mammographic images (8000 mammograms). We implemented two different DCNN models within a CAD system based on mammographic image features. To assess the performance of our method on this large dataset, we compared the results of our models with a second deep learning algorithm, Stacked AutoEncoders (SAE) (Vareka and Mautner, 2017).

The paper is organized as follows. Section 2 reviews previous studies of breast cancer detection and classification using deep convolutional neural network architectures. Section 3 describes the deep convolutional neural network. Section 4 presents our approach, including the architecture of the DCNN models. Section 5 reports our experiments and results. Section 6 compares the DCNN models with SAE. Finally, Section 7 concludes the work and presents some possibilities for further research.

2 RELATED WORKS

DCNN has achieved interesting results in image processing. Recently, this network has begun to prove its performance in medical tasks, particularly the analysis of medical imaging.

Mechria, H., Gouider, M. and Hassine, K.

Breast Cancer Detection using Deep Convolutional Neural Network.

DOI: 10.5220/0007386206550660

In Proceedings of the 11th International Conference on Agents and Artificial Intelligence (ICAART 2019), pages 655-660

ISBN: 978-989-758-350-6

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In the context of automatic early-stage breast cancer detection, several studies address the detection and classification of breast masses based on DCNN. Posada et al. (2015) used two DCNN models, AlexNet and VGGNet, as feature extractors and an SVM as a classifier to detect and diagnose breast cancer with 64.52% accuracy. Their system was applied to a dataset of 600 mammograms, 360 for training and 240 for testing. Zejmo et al. (2017) classified breast microscopic images of 50 patients using GoogLeNet and AlexNet, which achieved 83% and 80% accuracy respectively. Zhou et al. (2016) analyzed the efficiency of DCNN in determining the existence of breast masses using 322 mammographic images, obtaining an accuracy of 60.9%. Jadoon et al. (2017) classified 2796 mammograms into three classes (normal, benign and malignant) using DCNN and obtained 83.74% accuracy.

Although research in this context has used deep DCNN models and achieved interesting results, the datasets used for evaluation are small despite the large number of mammograms performed every day. Our work therefore consists mainly of creating a computer-aided breast cancer diagnosis system using a large number of mammographic images (8000 images).

3 DEEP CONVOLUTIONAL NEURAL NETWORK

The deep convolutional neural network is the most popular kind of deep learning model, as it is used in large-scale image recognition tasks and especially in medical imaging analysis. The DCNN architecture is a stack of three main layer types: the convolutional layer, the pooling layer and the fully connected layer.

• The convolutional layer is the principal building block of the DCNN. The layer parameters are a set of weights called a filter or kernel. The input feature map is divided into small regions called receptive fields, and each receptive field is multiplied element-wise by the filter and summed to produce one value of the output feature map. The stride is the distance between successive applications of the filter; if this hyperparameter is smaller than the filter size, the convolution is applied in overlapping windows.

• The pooling layer is responsible for downsampling the spatial dimensions of the input. The main objectives of this layer type are to progressively reduce the spatial size of the representation and to reduce the number of parameters and computations required by the network. Although various pooling functions are available, such as average pooling and L2-norm pooling, max pooling is the most widely used; it computes the maximum of each input patch.

• The fully connected layer is a traditional Multi-Layer Perceptron that uses a softmax activation function in the output layer. The neurons of this layer have full connections to all activations in the previous layer. The purpose of the fully connected layer is to classify the input image using the high-level features extracted by the convolutional and pooling layers.
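The convolution and max-pooling operations described above can be illustrated with a minimal pure-Python sketch. It is not the implementation used in this work: the function names `conv2d` and `max_pool2d`, the toy 6*6 input and the 2*2 filter are all hypothetical, and square inputs without padding are assumed.

```python
def conv2d(image, kernel, stride=1):
    """Unpadded 2-D convolution (cross-correlation form) on a square image."""
    k = len(kernel)
    out_size = (len(image) - k) // stride + 1
    out = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            # Multiply one receptive field by the filter and sum the products.
            acc = 0
            for a in range(k):
                for b in range(k):
                    acc += image[i * stride + a][j * stride + b] * kernel[a][b]
            row.append(acc)
        out.append(row)
    return out


def max_pool2d(feature_map, size=2, stride=2):
    """Keep the maximum of each size*size patch of a square feature map."""
    out_size = (len(feature_map) - size) // stride + 1
    return [
        [
            max(
                feature_map[i * stride + a][j * stride + b]
                for a in range(size)
                for b in range(size)
            )
            for j in range(out_size)
        ]
        for i in range(out_size)
    ]


# Toy data (hypothetical): a 6*6 input and a 2*2 filter.
image = [[r * 6 + c for c in range(6)] for r in range(6)]
kernel = [[1, 0], [0, 1]]

# Stride 1 is smaller than the filter size, so the windows overlap.
features = conv2d(image, kernel, stride=1)
pooled = max_pool2d(features, size=2, stride=2)
print(len(features), "x", len(features[0]), "->", len(pooled), "x", len(pooled[0]))
```

With a stride equal to the filter size (here `stride=2`), the same `conv2d` call would visit non-overlapping windows and return a 3*3 map.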

4 METHODS

In this section, we describe our approach to breast cancer detection from a large number of mammography images.

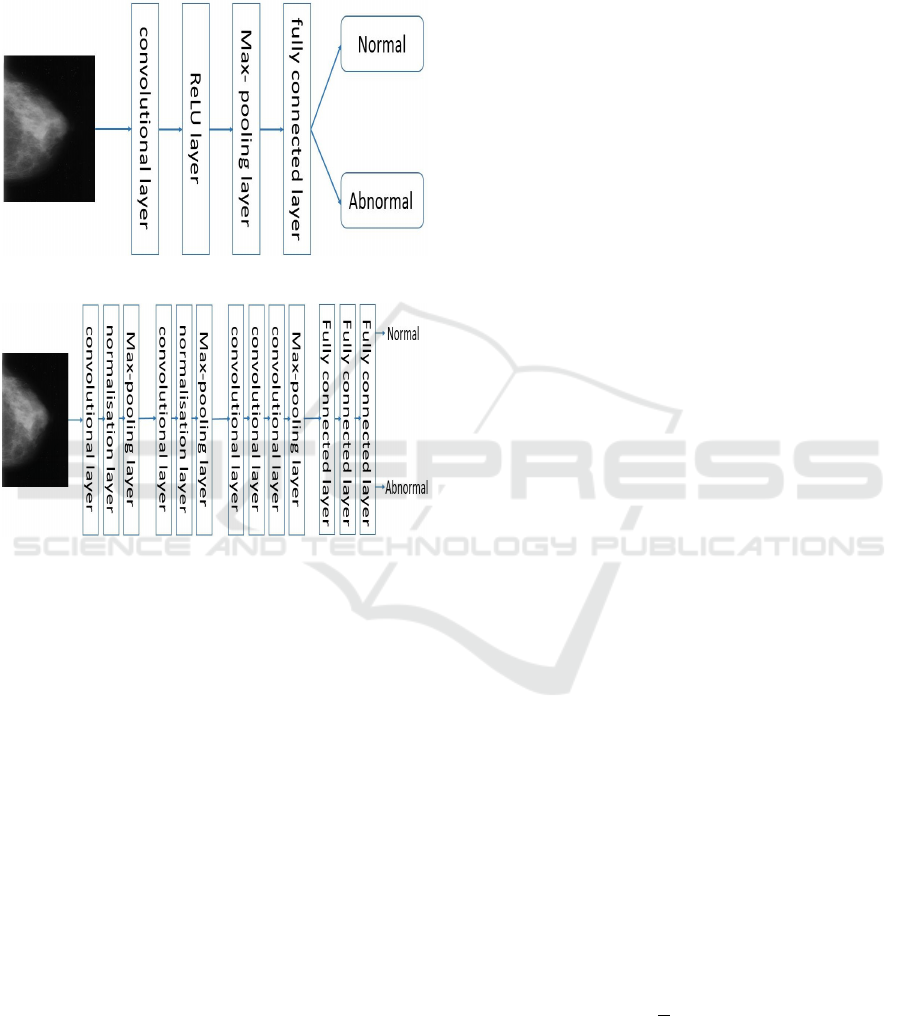

4.1 DCNN Architecture

We train two different DCNN architectures for breast cancer detection, shown in Figures 1 and 2, and analyze the effect of the model choices described below. We evaluate two models: a shallow DCNN (the baseline model) and a pre-trained model, AlexNet (a deeper model) (Krizhevsky et al., 2012).

The baseline model architecture includes a convolutional layer, a max-pooling layer, a fully connected layer and a softmax classifier for binary classification. The convolutional layer is composed of 20 filters of size 5*5 with a stride of 2, where the receptive fields do not overlap. The final layer contains two units fully connected to the previous (fully connected) layer and activated by softmax regression, which produces values between 0 and 1 that are interpreted as cancer or not.
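As a worked check of the layer dimensions, the standard output-size relation for a convolution can be applied to the baseline model. This is only a sketch under two assumptions: the input width is W = 28 (the smaller of the two sizes given in Section 4.2) and the padding is P = 0, which the text does not state. F is the filter size and S the stride.

$$O = \left\lfloor \frac{W - F + 2P}{S} \right\rfloor + 1 = \left\lfloor \frac{28 - 5 + 0}{2} \right\rfloor + 1 = 12$$

Under these assumptions the convolutional layer would output 20 feature maps of 12*12. Note that, by the rule given in Section 3, a stride of 2 with a 5*5 filter would make neighbouring windows overlap, so the non-overlap statement may refer to the pooling windows instead.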

AlexNet was designed in the context of ILSVRC 2012 and won this challenge, with 57% top-1 accuracy and 80.3% top-5 accuracy. The network takes a 227*227*3 image as input and outputs a distribution of predicted probabilities across the 1000 classes of the ImageNet classification task. The AlexNet architecture is a stack of 5 convolutional layers followed by 3 fully connected layers, ending with a softmax layer. Each of the first two convolutional layers is followed by a normalization layer and a max-pooling layer. The last convolutional layer is followed by a max-pooling layer, and the last fully connected layer has two outputs in our adapted version of AlexNet (equal to the number of classes in our dataset). This DCNN model uses the Rectified Linear Unit (ReLU) as its neural activation function and dropout (Srivastava et al., 2014) as a regularization technique.
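To make the AlexNet layer stack more concrete, the following sketch traces the spatial size of the feature maps through the five convolutional and three max-pooling layers. The kernel sizes, strides, paddings and channel counts are those of the original AlexNet (Krizhevsky et al., 2012) and are assumed to be unchanged in the adapted version; the helper names `out_size` and `trace_shapes` are hypothetical.

```python
def out_size(width, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (width + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, output channels); None means the layer
# is a pooling layer and keeps the channel count unchanged.
# Values follow the original AlexNet paper and are assumed unchanged here.
ALEXNET_LAYERS = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, None),
]


def trace_shapes(width=227, channels=3):
    """Return (name, width, channels) for the input and every layer."""
    shapes = [("input", width, channels)]
    for name, kernel, stride, padding, out_channels in ALEXNET_LAYERS:
        width = out_size(width, kernel, stride, padding)
        if out_channels is not None:
            channels = out_channels
        shapes.append((name, width, channels))
    return shapes


shapes = trace_shapes()
for name, width, channels in shapes:
    print(f"{name}: {width}x{width}x{channels}")

# Size of the flattened vector fed to the fully connected layers
# (4096 -> 4096 -> 2 outputs in the two-class adaptation).
_, final_width, final_channels = shapes[-1]
flattened = final_width * final_width * final_channels
print("flattened features:", flattened)
```

Only the last fully connected layer changes in the two-class adaptation, so the traced sizes are the same as for the 1000-class network.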

Figure 1: The shallow DCNN architecture.

Figure 2: The AlexNet architecture.

4.2 Preprocessing Images

Simple preprocessing of the mammographic images is performed. First, we resized the images to 28*28 and 227*227 pixels, the input image sizes of the shallow DCNN and AlexNet respectively. Second, the images were converted to RGB images.
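The two preprocessing steps can be sketched as follows. The text does not say which interpolation method or which grayscale-to-RGB conversion was used, so nearest-neighbour resizing and simple channel replication are assumptions made for illustration, and the function names are hypothetical.

```python
def resize_nearest(image, new_height, new_width):
    """Nearest-neighbour resize of a 2-D grayscale image (list of rows)."""
    height, width = len(image), len(image[0])
    return [
        [
            image[r * height // new_height][c * width // new_width]
            for c in range(new_width)
        ]
        for r in range(new_height)
    ]


def gray_to_rgb(image):
    """Replicate the single gray channel into three identical channels."""
    return [[(p, p, p) for p in row] for row in image]


def preprocess(image, size):
    """Resize to size*size, then convert to a three-channel image."""
    return gray_to_rgb(resize_nearest(image, size, size))


# Toy 80*100 grayscale image standing in for a mammogram (hypothetical data).
toy = [[(r + c) % 256 for c in range(100)] for r in range(80)]

shallow_input = preprocess(toy, 28)    # input for the shallow DCNN
alexnet_input = preprocess(toy, 227)   # input for AlexNet
print(len(shallow_input), len(shallow_input[0]), len(shallow_input[0][0]))
print(len(alexnet_input), len(alexnet_input[0]), len(alexnet_input[0][0]))
```

Replicating the gray channel adds no information; it only matches the three-channel input shape that a network pre-trained on colour images expects.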

4.3 Data Augmentation

We study the performance of DCNN in breast cancer detection from a large number of mammographic images (8000 images). Because the available dataset is small, we use rotation to increase its size. Each image was rotated by 45, 90 and 180 degrees. This augmentation is justified because masses have no inherent orientation and their diagnosis is invariant to the transformation.
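A minimal sketch of this rotation-based augmentation is given below. It assumes square images, nearest-neighbour sampling and zero fill for pixels that fall outside the source image (relevant for the 45 degree rotation); none of these details is stated in the text. It also assumes that each original is kept alongside its three rotated copies, which is consistent with 2000 source images yielding 8000. The names `rotate` and `augment` are hypothetical.

```python
import math


def rotate(image, degrees, fill=0):
    """Rotate a square 2-D image about its centre (nearest-neighbour)."""
    n = len(image)
    centre = (n - 1) / 2
    theta = math.radians(degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = [[fill] * n for _ in range(n)]
    for r in range(n):
        for c in range(n):
            # Map each output pixel back to its position in the source image.
            y, x = r - centre, c - centre
            src_r = round(cos_t * y - sin_t * x + centre)
            src_c = round(sin_t * y + cos_t * x + centre)
            if 0 <= src_r < n and 0 <= src_c < n:
                out[r][c] = image[src_r][src_c]
    return out


def augment(images, angles=(45, 90, 180)):
    """Return every original image followed by its rotated copies."""
    augmented = []
    for image in images:
        augmented.append(image)
        augmented.extend(rotate(image, angle) for angle in angles)
    return augmented


# Five toy 4*4 images standing in for mammograms (hypothetical data).
originals = [[[r * 4 + c for c in range(4)] for r in range(4)] for _ in range(5)]
augmented = augment(originals)
print(len(originals), "->", len(augmented))
```

Each original contributes four images, so applying the same scheme to 2000 mammograms gives 2000 * 4 = 8000 images.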

5 EXPERIMENTS AND RESULTS

5.1 Dataset Description

The mammograms used in this work are extracted from the Digital Database for Screening Mammography (DDSM) (Digital Database for Screening Mammography). This database contains 2620 studies, each containing both the Cranio-Caudal (CC) and Medio-Lateral Oblique (MLO) views of each breast. Each image is grayscale. The database also includes information about the patient (age at time of study, ACR breast density rating...) and the image (spatial resolution...).

In this work, we used only 2000 CC mammographic images, which are either normal or abnormal (containing a tumor). The images of our dataset are randomly split into training and testing sets, comprising respectively 70% and 30% of the full dataset.

5.2 Performances Metrics

To evaluate the performance of DCNN in breast cancer detection, we compared the experimental results in terms of accuracy, sensitivity and specificity, which are defined in terms of TP, TN, FP and FN.

• True Positive (TP): the condition is positive and the prediction is positive (sick people with a positive test).

• True Negative (TN): the condition is negative and the prediction is negative (healthy people with a negative test).

• False Positive (FP): the condition is negative and the prediction is positive (healthy people with a positive test).

• False Negative (FN): the condition is positive and the prediction is negative (sick people with a negative test).

• Accuracy, sensitivity and specificity are the main metrics for the performance evaluation of a system. N is the number of tests performed.

• Accuracy is the percentage of mammograms correctly classified.

$$\text{Accuracy} = \frac{TP + TN}{TP + FN + FP + TN}, \qquad \text{Overall Accuracy} = \frac{1}{N}\sum_{i=1}^{N} \text{Accuracy}_i \tag{1}$$

• Sensitivity is the percentage of abnormal mammograms (with cancer) correctly classified.

$$\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Overall Sensitivity} = \frac{1}{N}\sum_{i=1}^{N} \text{Sensitivity}_i \tag{2}$$

• Specificity is the percentage of normal mammograms (without cancer) correctly classified.

$$\text{Specificity} = \frac{TN}{TN + FP}, \qquad \text{Overall Specificity} = \frac{1}{N}\sum_{i=1}^{N} \text{Specificity}_i \tag{3}$$
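The three metrics and their averages over N tests can be computed directly from the confusion counts, as in the short sketch below. The counts are hypothetical values chosen only to illustrate the formulas on two test sets of 2400 images; they are not results from this study.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all mammograms that are correctly classified."""
    return (tp + tn) / (tp + fn + fp + tn)


def sensitivity(tp, fn):
    """Fraction of abnormal mammograms that are correctly classified."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Fraction of normal mammograms that are correctly classified."""
    return tn / (tn + fp)


def overall(values):
    """Average of a per-test metric over the N tests."""
    return sum(values) / len(values)


# Hypothetical confusion counts (tp, tn, fp, fn), one tuple per test.
tests = [(1100, 1050, 150, 100), (1080, 1070, 130, 120)]

overall_accuracy = overall([accuracy(tp, tn, fp, fn) for tp, tn, fp, fn in tests])
overall_sensitivity = overall([sensitivity(tp, fn) for tp, _, _, fn in tests])
overall_specificity = overall([specificity(tn, fp) for _, tn, fp, _ in tests])

print(f"accuracy={overall_accuracy:.4f}")
print(f"sensitivity={overall_sensitivity:.4f}")
print(f"specificity={overall_specificity:.4f}")
```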

5.3 Experiment Description

DCNN is widely used for image classification because of its outstanding performance compared to other classification techniques, and it has become an emerging alternative in the CAD field.

Our work consists of creating a computer-aided breast cancer diagnosis system based on DCNN using a large number of mammographic images (big data). The main goal of this system is to distinguish between two classes of mammographic images: normal (without cancer) and abnormal (with cancer).

In this work, two experiments are carried out. First, two DCNN models are used: a shallow model and the pre-trained AlexNet model, whose architectures were described in Section 4.1. Second, the results of the two DCNN models are compared to a second deep learning algorithm, SAE, using accuracy, sensitivity and specificity.

To evaluate our methodology, the 8000 mammograms produced by the data augmentation operation are used. We performed 10 tests for both DCNN models. In each test, our dataset is randomly divided into a training set (5600 images) and a test set (2400 images), so that different training and test sets are used in each test. This technique is a form of cross validation (repeated random sub-sampling), which evaluates how well a machine learning algorithm makes predictions on new data that it has not been trained on.
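The evaluation protocol above can be sketched as a repeated random 70/30 split over image indices. The function name `repeated_splits` and the fixed seed are hypothetical; the text does not describe how the random partition was generated.

```python
import random


def repeated_splits(n_images=8000, n_train=5600, n_tests=10, seed=0):
    """Draw n_tests independent random train/test partitions of the indices."""
    rng = random.Random(seed)  # fixed seed (assumption) for reproducibility
    indices = list(range(n_images))
    splits = []
    for _ in range(n_tests):
        shuffled = indices[:]
        rng.shuffle(shuffled)
        # First n_train shuffled indices train the model, the rest test it.
        splits.append((shuffled[:n_train], shuffled[n_train:]))
    return splits


splits = repeated_splits()
for test_number, (train, test) in enumerate(splits, start=1):
    print(f"test {test_number}: {len(train)} training, {len(test)} test images")
```

A stricter variant would split the original images before augmentation, so that rotated copies of one mammogram never fall on both sides of the partition; the text does not say which of the two was done.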

5.4 Results

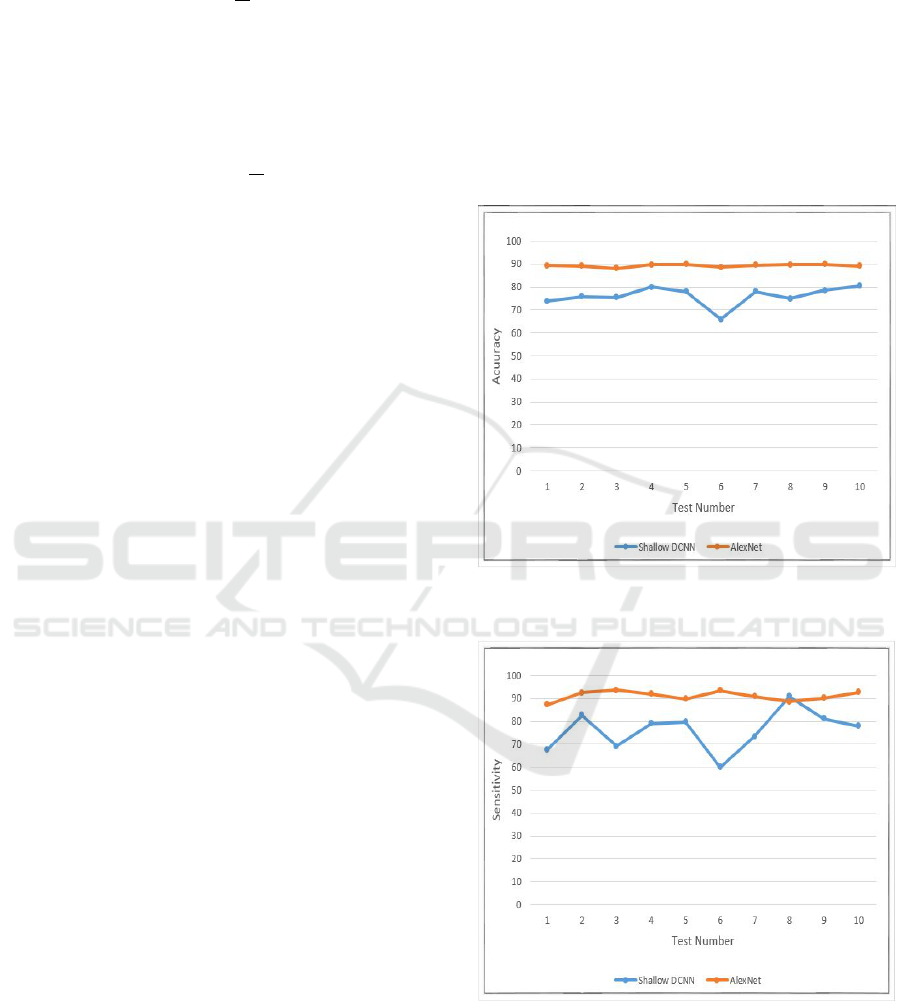

Figure 3 compares the accuracy of the two DCNN models over the 10 tests; AlexNet has the best results. The AlexNet accuracy results are very close across all tests, varying between 88.04% and 89.83%, while the maximum accuracy of the shallow DCNN does not exceed 80.47%.

Figure 4 compares the sensitivity of the two DCNN models over the 10 tests, where AlexNet outperformed the shallow DCNN. AlexNet gives sensitivity results between 87.37% and 93.68%, whereas the shallow DCNN gives sensitivity results in the interval [60.02%, 90.83%].

Figure 5 compares the specificity of both DCNN models over the 10 tests: the AlexNet specificity results are between 83.68% and 91.23%, and the shallow DCNN specificity results are in the interval [61.37%, 87.95%]. These results demonstrate that the deeper DCNN model performed better than the shallow model in all the tests in terms of accuracy, sensitivity and specificity.

Figure 3: The accuracy comparison of the shallow DCNN

and AlexNet.

Figure 4: The sensitivity comparison of the shallow DCNN

and AlexNet.

Table 1 shows the overall accuracy, overall sensitivity and overall specificity of the two DCNN models. The deeper model, AlexNet, achieved the best results, with 89.23% overall accuracy, 91.11% overall sensitivity and 87.75% overall specificity.


Figure 5: The specificity comparison of the shallow DCNN

and AlexNet.

These results show the importance of the number of layers in breast cancer detection, especially when a large dataset is used for analysis (8000 mammographic images).

Table 1: Comparison of Results of DCNN Models.

| Metric | Shallow DCNN | AlexNet |
|---|---|---|
| Accuracy | 76.05% | 89.23% |
| Sensitivity | 76.19% | 91.11% |
| Specificity | 80.28% | 87.75% |

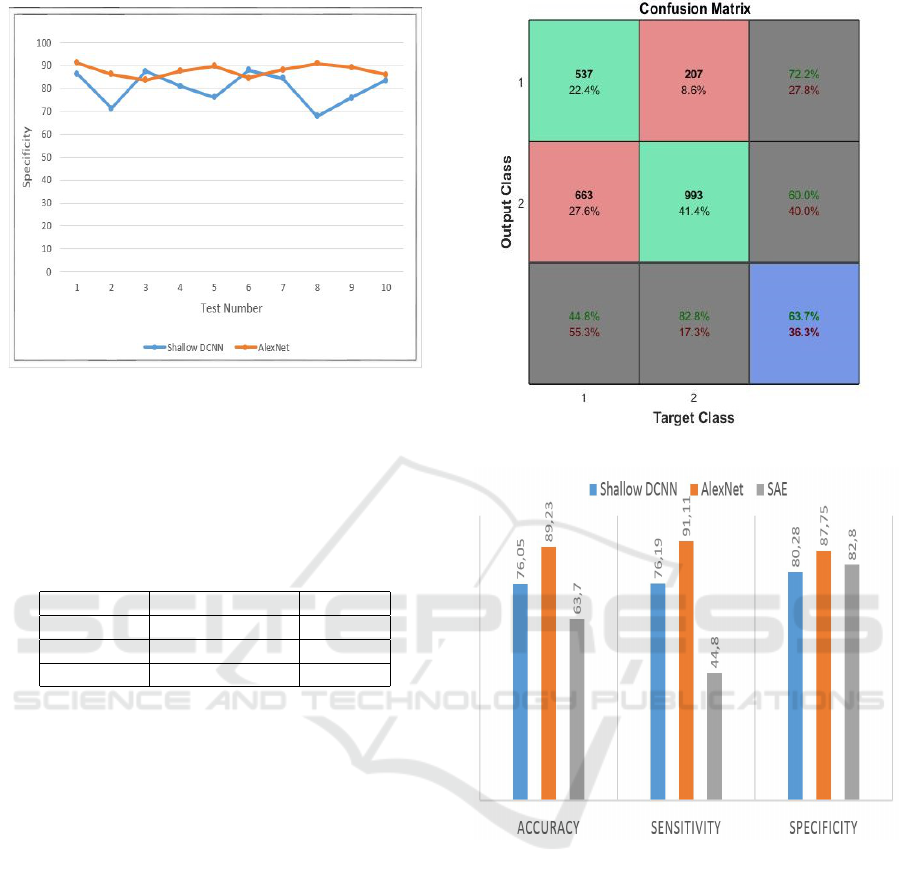

6 COMPARISON WITH SAE

To validate the performance of DCNN in a computer-aided breast cancer diagnosis system using a large number of mammograms, we carried out a comparative study with the SAE algorithm.

The SAE model consists of two autoencoders stacked on top of each other, with 300 hidden units in each autoencoder. This model is applied to the same dataset.

Figure 6 shows the confusion matrix of SAE, which gives 63.7% accuracy, 44.8% sensitivity and 82.8% specificity.

Figure 7 compares the accuracy, sensitivity and specificity of the three classifiers: the two DCNN models and SAE. The difference between the results of DCNN and SAE is large in terms of the three metrics: DCNN, with its simple architecture, easy learning and shared weights, achieved better results than SAE, especially with AlexNet.

Figure 6: The confusion matrix of the SAE model.

Figure 7: The comparison of DCNN and SAE models.

According to these results, DCNN is a promising methodology for a computer-aided breast cancer diagnosis system using a large number of mammograms: the features automatically extracted by DCNN are more effective for mammographic image analysis than those of SAE.

7 CONCLUSION

The performance of DCNN in object recognition and image classification has made tremendous progress in the past few years. Recently, many studies have applied DCNN to medical imaging analysis, such as breast cancer detection from mammography images, and have achieved interesting results.

Big data embodies the philosophy of measuring all sorts of things, and today a large number of mammograms are performed every day. We therefore expanded our dataset to 8000 mammograms using data augmentation, in order to test the feasibility of using DCNN for breast cancer detection on big data.

In this study, we presented the performance of DCNN for a computer-aided breast cancer diagnosis system using a large number of mammograms (8000 mammographic images). We implemented and compared the performance of two different deep learning algorithms, DCNN (a shallow model and AlexNet) and SAE; the highest results obtained are 89.23% accuracy, 91.11% sensitivity and 87.75% specificity.

The comparison results demonstrate the great potential of DCNN and computer-learned features in the medical imaging area. DCNN is therefore a promising methodology for mammographic CAD systems, especially the deeper model AlexNet.

Since the reliability of the system is critical, it is desirable to increase accuracy beyond 89.23%. To this end, we propose to use deeper DCNN models such as GoogLeNet (Szegedy, 2015) and ResNet (He et al., 2015), which have achieved very high accuracy for image recognition in ILSVRC. In addition, we propose to increase the number of mammograms, to use another type of classifier, such as SVM, for the classification task in DCNN, and to test other deep learning algorithms such as the Deep Belief Network and the Deep Boltzmann Machine.

REFERENCES

World Health Organisation, http://www.who.int/cancer/prevention/diagnosisscreening/breastcancer/en/

J. Tang, R. M. Rangayyan, J. Xu, I. E. Naqa and Y. Yang. (2009) "Computer Aided Detection and Diagnosis of Breast Cancer With Mammography: Recent Advances." IEEE Journal of Biomedical and Health Informatics 13 (2): 236-251.

G. E. Hinton, S. Osindero and Y. W. Teh. (2006) "A fast learning algorithm for deep belief nets." Journal of Neural Computation 18 (7): 1527-1554.

M. A. Ranzato, C. Poultney, S. Chopra and Y. LeCun. (2006) "Efficient learning of sparse representations with an energy-based model." Neural Information Processing Systems 19 (NIPS): 1137-1144.

O. Russakovsky, J. Deng, H. Su et al. (2015) "ImageNet Large Scale Visual Recognition Challenge." International Journal of Computer Vision 115 (3): 211-252.

Y. Lecun, L. Bottou, Y. Bengio and P. Haffner. (1998) "Gradient-based learning applied to document recognition." Proceedings of the IEEE 86 (11).

W. Rawat and Z. Wang. (2017) "Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review." Journal of Neural Computation 29 (9): 2352-2449.

M. Radovic, O. Adarkwa and Q. Wang. (2017) "Object Recognition in Aerial Images Using Convolutional Neural Networks." Journal of Imaging 3 (2), 21.

J. Long, E. Shelhamer and T. Darrell. (2017) "Fully convolutional networks for semantic segmentation." IEEE Transactions on Pattern Analysis and Machine Intelligence 39 (4).

L. Vareka and P. Mautner. (2017) "Stacked Autoencoders for the P300 Component Detection." Frontiers in Neuroscience 11.

J. D. Gallego Posada et al. (2015) "Detection and Diagnosis of Breast Tumors using Deep Convolutional Neural Networks."

M. Zejmo, M. Kowal, J. Korbicz and R. Monczak. (2017) "Classification of breast cancer cytological specimen using convolutional neural network." Journal of Physics: Conference Series 783 (conference 1).

H. Zhou, Y. Zaninovich and C. M. Gregory. (2016) "Mammogram Classification Using Convolutional Neural Networks."

M. M. Jadoon, Q. Zhang, I. U. Haq, S. Butt and A. Jadoon. (2017) "Three Class Mammogram Classification Based on Descriptive CNN Features." BioMed Research International 2017.

A. Krizhevsky, I. Sutskever and G. E. Hinton. (2012) "ImageNet Classification with Deep Convolutional Neural Networks." Proceedings of the 25th International Conference on Neural Information Processing Systems (NIPS) 1: 1097-1105.

N. Srivastava, G. E. Hinton, A. Krizhevsky, I. Sutskever and R. Salakhutdinov. (2014) "Dropout: A simple way to prevent neural networks from overfitting." Journal of Machine Learning Research 15: 1929-1958.

DDSM: Digital Database for Screening Mammography, http://marathon.csee.usf.edu/Mammography/Database.html

C. Szegedy. (2015) "Going deeper with convolutions." In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition.

K. He, X. Zhang, S. Ren and J. Sun. (2015) "Deep Residual Learning for Image Recognition." IEEE Conference on Computer Vision and Pattern Recognition.
