Multimodal Sentiment and Gender Classification for Video Logs

Sadam Al-Azani and El-Sayed M. El-Alfy

College of Computer Sciences and Engineering,

King Fahd University of Petroleum and Minerals, Dhahran 31261, Saudi Arabia

Keywords:

Multimodal Recognition, Sentiment Analysis, Opinion Mining, Gender Recognition, Machine Learning.

Abstract:

Sentiment analysis has recently attracted considerable attention from the social media research community. Until recently, the focus was mainly on textual features; new directions have since been proposed to integrate other modalities. Moreover, combining gender classification with sentiment recognition is a more challenging problem and enables new business models for targeted decision making. This paper explores a sentiment and gender classification system for Arabic speakers using audio, textual, and visual modalities. A video corpus is constructed and processed. Different features are extracted for each modality and then evaluated individually and in different combinations using two machine learning classifiers: support vector machines and logistic regression. Promising results are obtained, with more than 90% accuracy achieved when using support vector machines with audio-visual or audio-text-visual features.

1 INTRODUCTION

Social media platforms have become a very attractive environment for people to share and express their opinions on all aspects of life. People use various forms of content, including text, audio, and video, to express their attitudes, beliefs, and opinions. Flourishing business models with great social impact have emerged in education, filmmaking, advertisement, marketing, public media, etc. Since the advent of YouTube in 2005, the volume of online videos has increased dramatically, and video blogging has become very prevalent, with millions of daily YouTube views. Video blogging is overtaking other forms of blogging owing to its captivating visual expression. Smartphones with high-quality video cameras have also encouraged people to produce more user-generated content. Nowadays, YouTube supports more than 75 languages. Other online services that allow video sharing include Flickr, Facebook, Instagram, DailyMotion, GoodnessTv, Metacafe, SchoolTube, TeacherTube, and Vimeo.

Analyzing, understanding, and evaluating social media content can provide valuable knowledge in different applications and domains, such as reviews of products, hotels, hospitals, visited places, and obtained services (Garrido-Moreno et al., 2018; Misirlis and Vlachopoulou, 2018). Sentiment analysis (SA) is defined as the field of study that analyzes people's opinions, sentiments, evaluations, appraisals, attitudes, and emotions towards entities such as products, services, organizations, individuals, issues, events, topics, and their attributes, in order to understand the public mood and support decision making and recommendation (Liu, 2012). It incorporates several tasks, among them subjectivity determination, polarity detection, affect analysis, opinion extraction and summarization, sentiment mining, emotion detection, and review mining, at different levels, namely document, sentence, and feature/aspect (Ravi and Ravi, 2015).

Several approaches and methodologies have been proposed to address sentiment analysis tasks, and many resources and tools have been developed. However, most sentiment analysis techniques have focused on textual reviews and blogs. Recently, some researchers have been motivated to explore other communication modalities, and combinations of modalities, for sentiment analysis (Soleymani et al., 2017). Unimodal analysis and recognition systems suffer from several drawbacks and limitations, such as noisy sensor data, non-universality, and lack of individuality. Additionally, each modality has its own challenges. For example, voice-based recognition systems might be affected by factors such as low voice quality, background noise, and the placement of voice-recording devices. Text-based recognition systems also suffer from several issues related to morphological analysis, multiple dialects, ambiguity, temporal dependency, domain dependency, etc. The same is true for the visual modality, which suffers from illumination conditions, posture, cosmetics, resolution, etc. Consequently, unimodal systems may produce inaccurate and insufficient representations of discriminating patterns.

Al-Azani, S. and El-Alfy, E.
Multimodal Sentiment and Gender Classification for Video Logs.
DOI: 10.5220/0007711409070914
In Proceedings of the 11th International Conference on Agents and Artificial Intelligence (ICAART 2019), pages 907-914
ISBN: 978-989-758-350-6
Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Another interesting problem of growing research interest is gender recognition, driven by the need for personalized, reliable, and secure systems (Alexandre, 2010; Cellerino et al., 2004; Li et al., 2013; Shan, 2012). It has several promising applications, e.g. human-computer interaction, surveillance, computer forensics, demographic studies, and consumer behavior monitoring (Abouelenien et al., 2017). Consequently, many studies have been carried out and several methodologies have been proposed to address this task.

Combining sentiment with gender recognition can provide more valuable segmentation information that may improve both individual systems. It can also help overcome the real-world gender bias of current sentiment analysis systems (Thelwall, 2018). The proposed method is based on three modalities: text, audio, and visual. Incorporating different modalities for a subject can be more reliable and can considerably improve performance, since it provides evidence from different perspectives that can overcome the limitations of unimodal systems. Additionally, the proposed system targets domain-, topic-, and time-independent sentiment analysis.

Determining the polarity of users' opinions alongside their gender is important and has several interesting applications. For example, large companies can capitalize on it by extracting user sentiment, suggestions, and complaints about their products from video reviews, and then improve their products and services to meet customers' expectations. Reviews of shavers by males, for instance, may be more relevant than those by females, whereas reviews of women-specific products such as make-up may be more valuable when they come from females. This can also help governments explore issues related to citizens according to their gender. Another application is criminal and forensic investigation, where the information obtained can be used as evidence. Further applications include educational and training systems, such as adaptive and interactive educational systems in which the content is tailored according to the learners' gender and emotional expressions.

The rest of this paper is organized as follows. Section 2 describes the proposed method. Section 3 presents the experimental work and results. Section 4 concludes the paper.

2 PROPOSED METHOD

Our research aims at exploring a new method for the joint classification of sentiment and the corresponding gender using multimodal analysis. Thus, the proposed model analyzes sentiments and recognizes opinions per gender. The task is formulated as a multi-class problem with four classes: negative opinions by females (F Neg), negative opinions by males (M Neg), positive opinions by females (F Pos), and positive opinions by males (M Pos). The proposed system is evaluated on a video corpus of Arabic speakers.
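As a minimal sketch of this label design, assuming string labels for gender ("F"/"M") and polarity ("Neg"/"Pos"), the two binary annotations can be folded into a single four-class target and split back after prediction. The label strings and the helper names `joint_label` and `split_label` are hypothetical, not part of the described system.

```python
# Hypothetical encoding of the four joint sentiment-gender classes.
CLASSES = ("F_Neg", "M_Neg", "F_Pos", "M_Pos")


def joint_label(gender: str, polarity: str) -> str:
    """Combine a gender ("F"/"M") and a polarity ("Neg"/"Pos") into one class."""
    label = f"{gender}_{polarity}"
    if label not in CLASSES:
        raise ValueError(f"unknown combination: {gender!r}, {polarity!r}")
    return label


def split_label(label: str) -> tuple:
    """Recover (gender, polarity) from a predicted joint class."""
    gender, polarity = label.split("_")
    return gender, polarity


print(joint_label("M", "Pos"))  # M_Pos
print(split_label("F_Neg"))     # ('F', 'Neg')
```

A single four-class classifier then predicts gender and polarity together, rather than chaining two binary classifiers.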

Figure 1 outlines the general framework of the proposed multimodal analysis. After the video corpus is collected and prepared, the audio and visual channels are separated, the audio is converted to a text transcript, and some preprocessing steps are conducted. Each audio input is in WAV format (256 bits, 48 kHz sampling frequency, mono channel). Preprocessing operations, including normalizing certain Arabic letters that are written in different forms (e.g. Alefs and Tah Marbotah), are carried out on the associated text transcript. Each video input is resized to 240 × 320 after detecting faces. A feature extractor is developed for each modality. The acoustic feature extractor constructs a feature vector of 68 dimensions for each instance. A textual feature extractor is implemented to extract features based on Word2Vec word embeddings; for each instance, 300 textual features are extracted, i.e. the same dimensionality as the vectors in the pretrained Word2Vec models. The visual feature extraction module extracts 800 features for each input. The features are then concatenated to form three bimodal systems (audio-text, text-visual, and audio-visual) and one trimodal system (audio-text-visual). The resulting feature vectors, individually and in combination, are used to train two machine learning classifiers: Support Vector Machine (SVM) and Logistic Regression (LR). The following subsections provide more details about the main phases of the system: video corpus preparation, feature extraction, and classification.

2.1 Video Corpus Preparation

A video corpus is collected from YouTube. It is composed of 63 opinion videos expressed by 37 males and 26 females. The videos are segmented into 524 opinions, distributed as 168 negative opinions expressed by males, 140 positive opinions expressed by males, 82 negative opinions expressed by females, and 134 positive opinions expressed by females. Topics covered in the videos belong to different domains, including reviews of products, movies, cultural views, etc. The opinions are expressed in six different Arabic dialects (Egyptian, Levantine, Gulf, Iraqi, Moroccan, and Yemeni) using various settings. The collected videos were recorded by users in real environments, including houses, studios, offices, cars, or outdoors, and the opinions were expressed over different time periods.

Figure 1: The proposed system pipeline describing the multimodal features combined using machine learning classifiers for classifying sentiment and gender.
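The class counts above can be checked, and turned into a majority-class reference point for the accuracies reported in Section 3, with a few lines of Python. This is only arithmetic on the stated counts; the label strings are hypothetical.

```python
# Opinion counts per joint class, as reported for the corpus.
counts = {"M_Neg": 168, "M_Pos": 140, "F_Neg": 82, "F_Pos": 134}

total = sum(counts.values())
proportions = {c: n / total for c, n in counts.items()}

# Accuracy of a trivial classifier that always predicts the most frequent class.
majority = max(counts, key=counts.get)
baseline = counts[majority] / total

males = counts["M_Neg"] + counts["M_Pos"]
females = counts["F_Neg"] + counts["F_Pos"]

print(total, majority, round(100 * baseline, 2))  # 524 M_Neg 32.06
print(males, females)                             # 308 216
```

Always predicting M_Neg would reach only about 32% accuracy, which gives a trivial lower reference for the four-class results.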

2.2 Feature Extraction

In the following subsections, the textual, acoustic, and visual feature extraction processes are described in sequence.

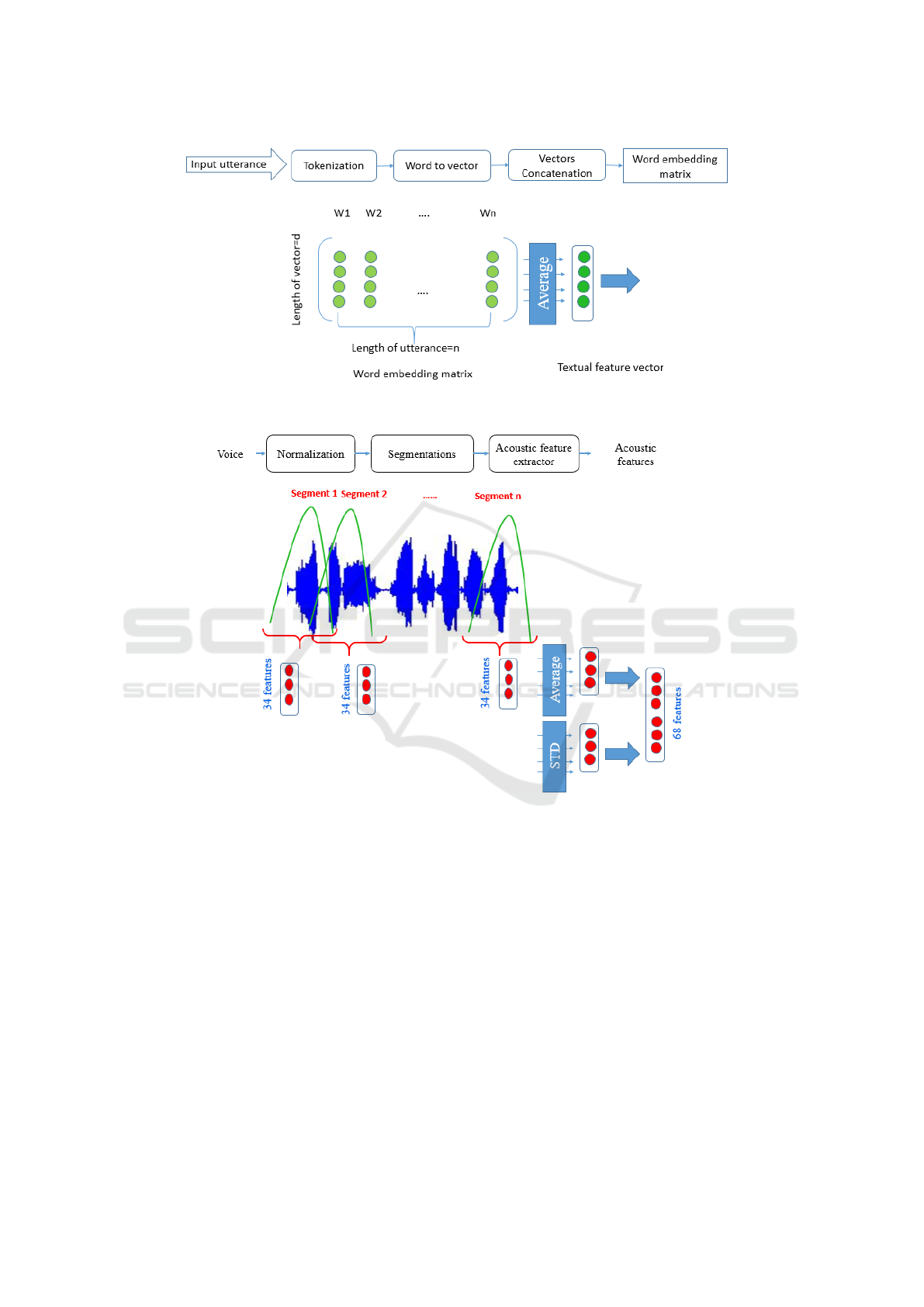

2.2.1 Textual Features

For textual features, word-embedding-based features are employed. Word embedding techniques are recognized as an efficient method for learning high-quality vector representations of words from large amounts of unstructured text data. They map words from the vocabulary to real-valued vectors such that words with similar meanings have similar representations. Word2Vec word embedding methods (Mikolov et al., 2013a; Mikolov et al., 2013b) efficiently compute word vector representations in a high-dimensional vector space. Word vectors are positioned in the vector space such that words sharing common contexts and having similar semantics are mapped near each other. Word2Vec has two neural network architectures: continuous bag-of-words (CBOW) and skip-gram (SG). CBOW and SG have similar algorithms, but the former is trained to predict a word given its context, whereas the latter is trained to predict the context given a word. Word-embedding-based features have been adopted for different Arabic natural language processing tasks and achieved the highest results compared to traditional features such as bag of words (Al-Azani and El-Alfy, 2018).
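To make the CBOW/SG distinction concrete, the sketch below generates the training examples each architecture would see for a fixed context window. It is a simplification: it ignores the dynamic window sampling, frequent-word subsampling, and negative sampling (or hierarchical softmax) that Word2Vec implementations use in practice, and the function names are hypothetical.

```python
def skipgram_pairs(tokens, window=2):
    """SG: each (center, context) pair asks the model to predict context from center."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_examples(tokens, window=2):
    """CBOW: each (context words, target) example asks the model to predict the target."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


sentence = ["the", "movie", "was", "great"]
print(skipgram_pairs(sentence, window=1))
print(cbow_examples(sentence, window=1))
```

The same windows feed both models; only the direction of prediction differs.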

In this study, a skip-gram model with a dimensionality of 300, trained on a Twitter dataset (Soliman et al., 2017), is used to derive textual features. As illustrated in Figure 2, a feature vector is generated for each sample by averaging the embeddings of the words in that sample (Al-Azani and El-Alfy, 2017).
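A minimal sketch of the averaging step follows, assuming the sample is already tokenized. Skipping out-of-vocabulary words, and returning a zero vector when no word is known, are our assumptions, since the text does not say how unknown words are handled. The lookup object only needs `in` and `[]`, so a plain dict works, as would (we assume) the `wv` attribute of a Gensim `Word2Vec` model.

```python
import numpy as np


def average_embedding(tokens, vectors, dim=300):
    """Mean of the vectors of in-vocabulary tokens (zeros if none are known).

    `vectors` is any mapping from word to a length-`dim` array.
    """
    known = [np.asarray(vectors[t], dtype=float) for t in tokens if t in vectors]
    if not known:
        return np.zeros(dim)
    return np.mean(known, axis=0)


# Toy 3-dimensional vectors stand in for the 300-dimensional pretrained model.
toy = {"good": np.array([1.0, 0.0, 0.0]), "movie": np.array([0.0, 1.0, 0.0])}
vec = average_embedding(["good", "movie", "unseen"], toy, dim=3)
print(vec)
```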

2.2.2 Acoustic Features

Audio is another important component. The input

audio is segmented into short-term frames or win-

dows of length 0.05 millisecond, and each frame is

split into sub-frames. For each generated frame, a set

of 34 features is computed utilizing pyAudioAnalysis

Python package (Giannakopoulos, 2015). These ex-

tracted features are: (1) Zero Crossing Rate (ZCR)

which is the rate of sign changes of the audio sig-

nal during the frame time, (2) Energy which is the

sum of squares of the signal values normalized by

the frame length, (3) Entropy of sub-frames’ normal-

ized energies, which measures the abrupt changes,

(4) Spectral Centroid which is the center of gravity

of the frame spectrum, (5) Spectral Spread which is

the second central moment of the frame spectrum,

(6) Spectral Entropy of the normalized spectral ener-

gies of a set of sub-frames, (7) Spectral Flux which is

the squared difference between the normalized mag-

nitudes of the spectra of two successive frames, (8)

Spectral Rolloff which is the frequency below which

90% of the magnitude distribution of the spectrum is

concentrated, (9-21) Mel Frequency Cepstral Coeffi-

Multimodal Sentiment and Gender Classification for Video Logs

909

Figure 2: Textual feature extractor.

Figure 3: Audio feature extraction.

cients (MFCCs), (22-33) Chroma Vector which is a

12-element histogram representation of the spectral

energy, and (34) Chroma Deviation which is the stan-

dard deviation of the 12 chroma vector elements. Sub-

sequently, statistics are computed from each audio’s

segments to represent the whole audio using one de-

scriptor such as the mean and standard deviation in

our study. Thus, each input audio is represented by

68 (34 × 2) features (as illustrated in Figure 3).

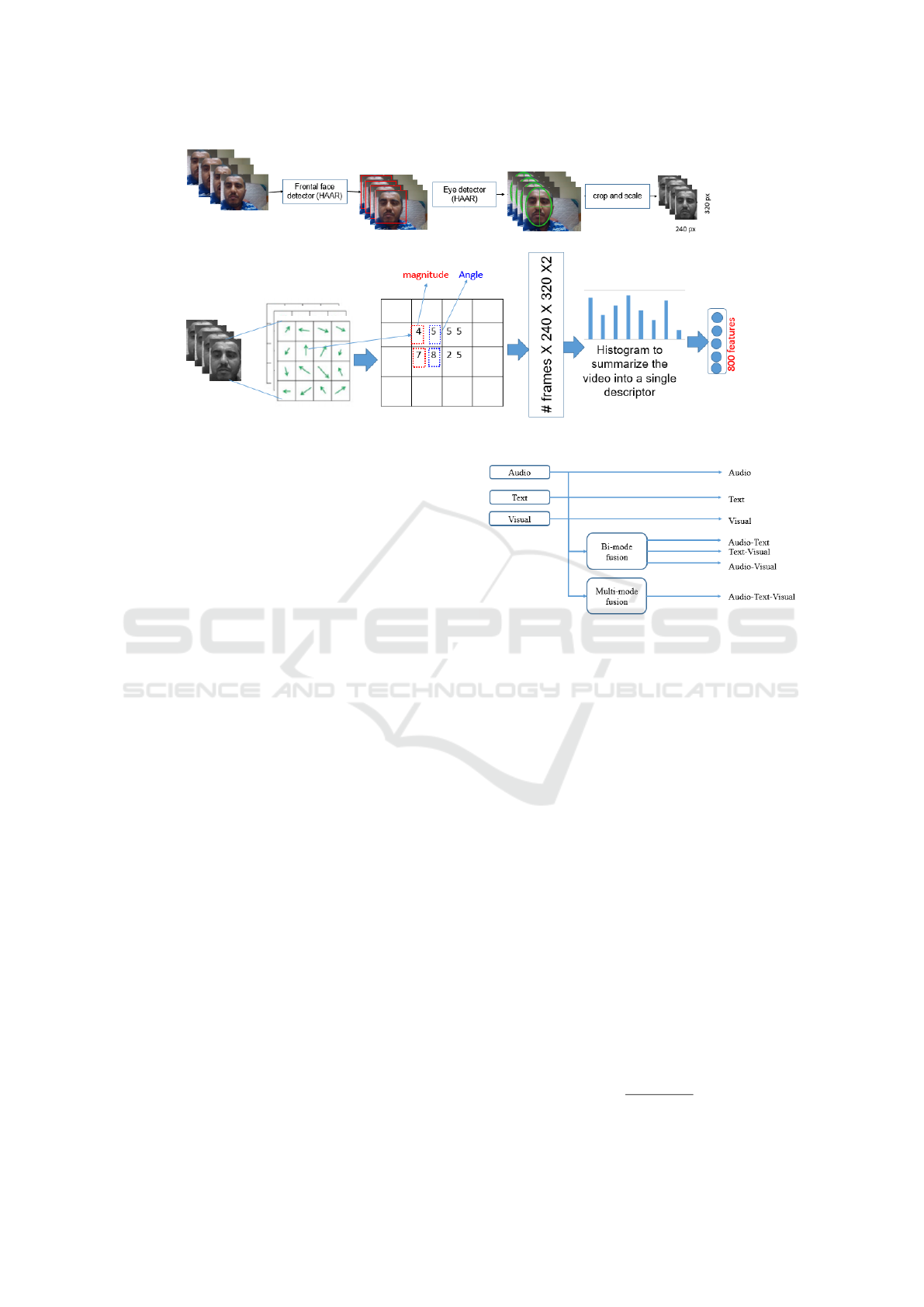
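The frame-then-pool scheme can be sketched with NumPy for two of the 34 features, ZCR and energy, following the definitions above. The paper relies on pyAudioAnalysis for the full set; this hand-written version, the assumed 50 ms non-overlapping frames, and the function names are illustrative assumptions and are not guaranteed to reproduce that package's values exactly.

```python
import numpy as np


def frame_signal(x, fs, win_s=0.05, step_s=0.05):
    """Split a mono signal into frames of win_s seconds taken every step_s seconds."""
    win, step = int(round(win_s * fs)), int(round(step_s * fs))
    n_frames = 1 + (len(x) - win) // step  # assumes len(x) >= win
    return np.stack([x[i * step: i * step + win] for i in range(n_frames)])


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose sign changes."""
    return np.sum(np.abs(np.diff(np.sign(frame)))) / 2.0 / (len(frame) - 1)


def energy(frame):
    """Sum of squared samples normalized by the frame length."""
    return np.sum(frame ** 2) / len(frame)


def segment_descriptor(x, fs):
    """Per-frame [ZCR, energy], summarized by their mean and standard deviation."""
    frames = frame_signal(x, fs)
    per_frame = np.array([[zero_crossing_rate(f), energy(f)] for f in frames])
    return np.concatenate([per_frame.mean(axis=0), per_frame.std(axis=0)])


fs = 1000
x = np.tile([1.0, -1.0], 500)          # 1 s of a maximally oscillating test signal
desc = segment_descriptor(x, fs)
print(frame_signal(x, fs).shape, desc)  # (20, 50) [1. 1. 0. 0.]
```

With all 34 per-frame features instead of two, the same mean/std pooling yields the 68-dimensional descriptor.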

2.2.3 Visual Features

Figure 4 depicts the general process of visual feature extraction. Facial expressions play a very important role in reducing language ambiguity. Recently, it has been shown that facial expressions can be used to recognize human emotional states with promising results using a hybrid deep learning model (Jain et al., 2018). A main step in our study to mine opinions and genders from video is to detect the speaker's face and segment it from the rest of a given frame. To this end, the general frontal face and eye detectors (Viola and Jones, 2004) are utilized. The frontal face detector is based on Haar features (Viola and Jones, 2001) and combines successively more complex classifiers in a cascade to detect the face. Haar-feature-based face detectors are widely used and achieve a high detection rate (Padilla et al., 2012). Additionally, an eye detector is adopted to locate the eye positions, which are used to crop and scale the frontal face to 240 × 320 pixels in our case.


Figure 4: Face localization and histogram of optical flow features extraction.

After the whole face is segmented in successive frames, the optical flow can be computed to capture the evolution of complex motion patterns for the classification of facial expressions. Optical flow is used to extract the sentiment visual features from the videos processed in the previous step. Optical flow measures the apparent motion, relative to an observer, between two frames at each point. At each point in the image, the magnitude and phase values describing the motion vector between the two frames are obtained. This results in a descriptor of NoF × 240 × 320 × 2 dimensions to represent each video, where NoF is the number of frames in the video and 240 × 320 is the size of one frame. For example, a video of 30 frames is represented by a descriptor of 30 × 240 × 320 × 2 dimensions. To describe each video with a single feature vector (descriptor), the generated NoF × 240 × 320 × 2 descriptor must be summarized. Several statistical methods can be used for this purpose, such as the average, standard deviation, minimum, and maximum. Histograms have been shown to be a good technique for summarizing such descriptors in previous works (Carcagnì et al., 2015; Dalal et al., 2006). In our study, the histogram of the optical flows per video is calculated to summarize the high-dimensional descriptor as a single feature vector.
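For the 30-frame example, the sizes below show why summarization is needed: the raw descriptor holds millions of values, whereas a 10 × 10 grid with eight flow directions (the histogram layout described next) has 800 entries.

$$
30 \times 240 \times 320 \times 2 = 4{,}608{,}000
\qquad \text{vs.} \qquad
10 \times 10 \times 8 = 800
$$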

The scene is split into a grid of s × s bins, where s = 10. The location of each feature is recorded, and the direction of the flow is categorized as one of eight directions, {0, 45, 90, 135, 180, 225, 270, 315} degrees (360 coincides with 0). The number of flows belonging to each direction is then counted, yielding 10 × 10 × 8 = 800 bins for each frame. Average and max pooling are considered to combine the grid histograms of all frames in each video.
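The histogram step can be sketched in NumPy as follows, assuming a dense per-pixel flow field of shape (H, W, 2), such as the output of OpenCV's `cv2.calcOpticalFlowFarneback`. Snapping each flow vector to the nearest of the eight directions, and counting only pixels whose flow magnitude exceeds a hypothetical threshold `min_mag`, are our assumptions; the text does not specify either detail. With s = 10 and eight directions the vector has 800 entries, matching the 800 visual features per input mentioned in Section 2.

```python
import numpy as np


def flow_histogram(flow, grid=10, n_dirs=8, min_mag=0.0):
    """Grid histogram of flow directions for one frame pair.

    flow: array of shape (H, W, 2) holding per-pixel (dx, dy) displacements.
    Returns grid * grid * n_dirs counts (800 for the defaults).
    """
    h, w, _ = flow.shape
    dx, dy = flow[..., 0], flow[..., 1]
    mag = np.hypot(dx, dy)
    ang = np.degrees(np.arctan2(dy, dx)) % 360.0  # phase in [0, 360)
    # Snap to the nearest of 0, 45, ..., 315 degrees; 360 wraps back to 0.
    direction = np.rint(ang / (360.0 / n_dirs)).astype(int) % n_dirs
    # Grid cell (row, col) of every pixel for an s x s split of the frame.
    cell_r = np.broadcast_to((np.arange(h) * grid // h)[:, None], (h, w))
    cell_c = np.broadcast_to((np.arange(w) * grid // w)[None, :], (h, w))
    moving = mag > min_mag  # ignore static pixels
    hist = np.zeros((grid, grid, n_dirs))
    np.add.at(hist, (cell_r[moving], cell_c[moving], direction[moving]), 1)
    return hist.ravel()


def pool_video(frame_histograms, mode="avg"):
    """Combine per-frame histograms into one video descriptor (average or max)."""
    stacked = np.stack(frame_histograms)
    return stacked.mean(axis=0) if mode == "avg" else stacked.max(axis=0)


right = np.zeros((240, 320, 2))
right[..., 0] = 1.0                 # every pixel moves to the right (0 degrees)
still = np.zeros((240, 320, 2))     # no motion at all
h1, h2 = flow_histogram(right), flow_histogram(still)
video_avg = pool_video([h1, h2], "avg")
video_max = pool_video([h1, h2], "max")
print(h1.shape)  # (800,)
```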

Figure 5: The evaluated models.

2.2.4 Fusion and Classification

For the three modalities (audio, textual, and visual), there are four main possibilities to combine them: audio-textual, textual-visual, audio-visual, and audio-textual-visual. In this work, feature-level fusion is carried out by simply concatenating the extracted features of the textual, audio, and visual modalities. Mathematically, let $T = \{t_1, t_2, \ldots, t_n\}$, $T \in \mathbb{R}^n$, represent the textual feature vector of length $n$; $A = \{a_1, a_2, \ldots, a_m\}$, $A \in \mathbb{R}^m$, the acoustic feature vector of length $m$; and $V = \{v_1, v_2, \ldots, v_k\}$, $V \in \mathbb{R}^k$, the visual feature vector of length $k$. $T$, $A$, and $V$ are combined in various ways (e.g. the trimodal vector is the concatenation $[T, A, V] \in \mathbb{R}^{n+m+k}$), and their ability to detect the sentiment-gender classes is evaluated. Two inherent issues arise when fusing features and need to be addressed, namely scaling and the curse of dimensionality. The former arises because features extracted by different methods have different scales; the latter arises because the concatenated vectors are high-dimensional and some features might be redundant or noisy. These two issues are handled through normalization and feature reduction, respectively. The features are normalized using the min-max scheme:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x'$ is the normalized value corresponding to $x$, and $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of the feature, so that $x' \in [0, 1]$. For feature reduction, principal component analysis (PCA) is applied, with the number of components chosen such that the fraction of explained variance is greater than 0.99. Each individual modality and each combination of modalities is evaluated using (1) an SVM with a linear kernel (LibSVM) and (2) LR with L2-norm regularization and the Liblinear solver.

Table 1: Unimodal and multimodal sentiment-gender joint analysis results using a support vector machine (Rec, Prc, F1, GM, and Acc in %).

| Modality | Rec | Prc | F1 | GM | Acc | MCC |
|---|---|---|---|---|---|---|
| Audio | 80.73 | 80.65 | 80.62 | 86.62 | 80.73 | 0.7375 |
| Text | 68.32 | 67.65 | 67.83 | 77.82 | 68.32 | 0.5673 |
| Visual | 81.68 | 81.95 | 81.70 | 87.09 | 81.68 | 0.7505 |
| Audio-Text | 83.02 | 82.94 | 82.96 | 88.42 | 83.02 | 0.7690 |
| Text-Visual | 84.54 | 84.89 | 84.59 | 89.23 | 84.54 | 0.7901 |
| Audio-Visual | 91.60 | 91.68 | 91.61 | 94.13 | 91.60 | 0.8859 |
| Audio-Text-Visual | 90.65 | 90.71 | 90.63 | 93.51 | 90.65 | 0.8729 |

Table 2: Unimodal and multimodal sentiment-gender joint analysis results using a logistic regression classifier (Rec, Prc, F1, GM, and Acc in %).

| Modality | Rec | Prc | F1 | GM | Acc | MCC |
|---|---|---|---|---|---|---|
| Audio | 75.19 | 75.05 | 75.04 | 82.84 | 75.19 | 0.6628 |
| Text | 67.18 | 67.23 | 67.19 | 77.23 | 67.18 | 0.5542 |
| Visual | 83.40 | 83.59 | 83.44 | 88.40 | 83.40 | 0.7742 |
| Audio-Text | 82.63 | 82.52 | 82.54 | 88.04 | 82.63 | 0.7635 |
| Text-Visual | 85.11 | 85.30 | 85.14 | 89.68 | 85.11 | 0.7977 |
| Audio-Visual | 89.50 | 89.52 | 89.49 | 92.71 | 89.50 | 0.8572 |
| Audio-Text-Visual | 90.27 | 90.37 | 90.21 | 93.21 | 90.27 | 0.8677 |

3 EXPERIMENTS AND RESULTS

Experiments are conducted on the developed video corpus using 10-fold cross-validation. Sentiment models are built using the extracted features with the aforementioned classifiers. The main components of the proposed system, including the preprocessing, feature extraction, fusion, and classification modules, are developed in Python. The Gensim package is used for textual feature extraction, the pyAudioAnalysis package for acoustic feature extraction, and the OpenCV package for visual feature extraction. The Scikit-learn package is used for feature reduction and classification.
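The normalization, PCA, and classifier settings described in Section 2.2.4 can be sketched as scikit-learn pipelines evaluated with 10-fold cross-validation. Fitting the scaler and PCA inside each training fold (to avoid leaking test statistics) is our assumption, as is wrapping the binary Liblinear solver in a one-vs-rest scheme for the four classes. The random features below are synthetic stand-ins for the 68-dimensional acoustic and 800-dimensional visual vectors, not the corpus data.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.multiclass import OneVsRestClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVC


def make_models():
    """Min-max scaling -> PCA (99% explained variance) -> classifier."""
    def reduce():
        return [MinMaxScaler(), PCA(n_components=0.99, svd_solver="full")]
    return {
        # SVC is built on LibSVM; linear kernel as described in the text.
        "SVM": make_pipeline(*reduce(), SVC(kernel="linear")),
        # Liblinear LR (L2 penalty is the default), one-vs-rest over 4 classes.
        "LR": make_pipeline(*reduce(),
                            OneVsRestClassifier(LogisticRegression(solver="liblinear"))),
    }


rng = np.random.default_rng(0)
y = np.repeat(np.arange(4), 25)                   # 100 synthetic instances, 4 classes
audio = rng.normal(size=(100, 68)) + y[:, None]   # synthetic, class-dependent
visual = rng.normal(size=(100, 800))              # synthetic noise features
X = np.hstack([audio, visual])                    # feature-level fusion (audio-visual)

scores = {name: cross_val_score(model, X, y, cv=10, scoring="accuracy")
          for name, model in make_models().items()}
for name, s in scores.items():
    print(name, round(s.mean(), 3))
```

Placing the scaler and PCA inside the pipeline means `cross_val_score` refits them on each training split, so every fold is evaluated on data the preprocessing never saw.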

Precision (Prc), Recall (Rec), F1, Geometric Mean (GM), percentage accuracy (Acc), and Matthews Correlation Coefficient (MCC) are adopted to evaluate the proposed models. These measures are computed using 10-fold cross-validation. Fitting independent models and averaging the results over 10 partitions reduces the variance of the estimates; this is preferable to a hold-out estimator for obtaining reliable measures to compare various models when the amount of data is limited.
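A sketch of the per-fold measures with scikit-learn is shown below. Weighted averaging over classes is our assumption; it is consistent with the identical Rec and Acc columns in Tables 1 and 2, because weighted recall, $\sum_c \frac{n_c}{N} \cdot \frac{TP_c}{n_c} = \frac{\sum_c TP_c}{N}$, always equals accuracy. GM is omitted because its exact multi-class definition is not given in the text. The labels are a toy example.

```python
from sklearn.metrics import (accuracy_score, matthews_corrcoef,
                             precision_recall_fscore_support)


def summarize(y_true, y_pred):
    """Weighted Prc/Rec/F1 plus accuracy and multi-class MCC for one fold."""
    prc, rec, f1, _ = precision_recall_fscore_support(
        y_true, y_pred, average="weighted", zero_division=0)
    return {"Prc": prc, "Rec": rec, "F1": f1,
            "Acc": accuracy_score(y_true, y_pred),
            "MCC": matthews_corrcoef(y_true, y_pred)}


y_true = ["F_Neg", "F_Pos", "M_Neg", "M_Pos", "M_Neg", "F_Pos"]
y_pred = ["F_Neg", "M_Pos", "M_Neg", "M_Pos", "F_Neg", "F_Pos"]
m = summarize(y_true, y_pred)
print({k: round(v, 4) for k, v in m.items()})
```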

Since we deal with a multimodal recognition system, several models are generated from the considered modalities: standalone modalities, bimodal combinations, or the trimodal combination. In this study, seven main models are generated for each classifier, as illustrated in Figure 5. Three models are generated for the audio, text, and visual modalities. Three other models are generated for the bimodal approaches of audio-textual, textual-visual, and audio-visual modalities. The last model is for the trimodal case combining the audio, text, and visual modalities.
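The seven models correspond to the non-empty subsets of the three modalities, and their fused (pre-PCA) input sizes follow from the per-modality dimensions given in Section 2 (68 acoustic, 300 textual, 800 visual). A short enumeration, with hypothetical variable names:

```python
from itertools import combinations

# Per-modality feature sizes stated in Section 2 (before PCA).
DIMS = {"Audio": 68, "Text": 300, "Visual": 800}


def modality_subsets(names=("Audio", "Text", "Visual")):
    """All non-empty combinations: 3 unimodal, 3 bimodal, 1 trimodal."""
    return [c for r in range(1, len(names) + 1) for c in combinations(names, r)]


models = {"-".join(s): sum(DIMS[m] for m in s) for s in modality_subsets()}
for name, dim in models.items():
    print(f"{name}: {dim}")
# Audio: 68, Text: 300, Visual: 800, Audio-Text: 368,
# Audio-Visual: 868, Text-Visual: 1100, Audio-Text-Visual: 1168
```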

Table 1 shows the results of the different models using SVM. Among the standalone modalities, the visual modality achieves the highest results with an accuracy of 81.68%, followed by the audio modality with an accuracy of 80.73%. The lowest performance is achieved by the textual modality, with an accuracy of 68.32%. Combining modalities improves the results over each of the constituent standalone modalities in all bimodal cases. For example, in the best bimodal case, the accuracies of the audio and visual modalities rise from 80.73% and 81.68%, respectively, to 91.60% for the bimodal audio-visual recognition system. The same holds for the trimodal recognition system: combining the three modalities yields a remarkable improvement over the single modalities (90.65% accuracy), although it is slightly below the audio-visual system.

In the case of the LR classifier and standalone modalities, the visual modality again reports the highest results, with an accuracy of 83.40%, followed by the audio modality with an accuracy of 75.19%. The lowest results are obtained with the textual modality, with an accuracy of 67.18%. Combining the standalone modalities considerably improves the results in all cases, for both bimodal and trimodal fusion. The highest results of LR are obtained from the combination of the three modalities, with an accuracy of 90.27%.

Overall, the highest accuracy of 91.6% is obtained with the audio-visual SVM-based recognition system.

4 CONCLUSIONS

Joint recognition of gender and sentiment polarity is addressed in this paper as a multi-class classification problem. A video corpus of Arabic speakers is collected and processed. Two machine learning classifiers are evaluated using various modalities. Features are extracted and evaluated individually and after fusion. The experimental work using 10-fold cross-validation showed that considerable improvements can be achieved when combining modalities and using a support vector machine classifier. As future work, we suggest exploring parameter optimization and deep learning approaches to further improve the results.

ACKNOWLEDGEMENTS

The authors would like to acknowledge the support provided by the Deanship of Scientific Research at King Fahd University of Petroleum & Minerals (KFUPM) during this work.

REFERENCES

Abouelenien, M., Pérez-Rosas, V., Mihalcea, R., and Burzo, M. (2017). Multimodal gender detection. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, pages 302–311.

Al-Azani, S. and El-Alfy, E.-S. M. (2017). Using word embedding and ensemble learning for highly imbalanced data sentiment analysis in short Arabic text. Procedia Computer Science, 109:359–366.

Al-Azani, S. and El-Alfy, E.-S. M. (2018). Combining emojis with Arabic textual features for sentiment classification. In 9th IEEE International Conference on Information and Communication Systems (ICICS), pages 139–144.

Alexandre, L. A. (2010). Gender recognition: A multiscale decision fusion approach. Pattern Recognition Letters, 31(11):1422–1427.

Carcagnì, P., Coco, M., Leo, M., and Distante, C. (2015). Facial expression recognition and histograms of oriented gradients: a comprehensive study. SpringerPlus, 4(1):645.

Cellerino, A., Borghetti, D., and Sartucci, F. (2004). Sex differences in face gender recognition in humans. Brain Research Bulletin, 63(6):443–449.

Dalal, N., Triggs, B., and Schmid, C. (2006). Human detection using oriented histograms of flow and appearance. In European Conference on Computer Vision, pages 428–441. Springer.

Garrido-Moreno, A., Lockett, N., and García-Morales, V. (2018). Social media use and customer engagement. In Encyclopedia of Information Science and Technology, Fourth Edition, pages 5775–5785. IGI Global.

Giannakopoulos, T. (2015). pyAudioAnalysis: An open-source Python library for audio signal analysis. PLoS ONE, 10(12).

Jain, N., Kumar, S., Kumar, A., Shamsolmoali, P., and Zareapoor, M. (2018). Hybrid deep neural networks for face emotion recognition. Pattern Recognition Letters.

Li, M., Han, K. J., and Narayanan, S. (2013). Automatic speaker age and gender recognition using acoustic and prosodic level information fusion. Computer Speech & Language, 27(1):151–167.

Liu, B. (2012). Sentiment analysis and opinion mining. Synthesis Lectures on Human Language Technologies, 5(1):1–167.

Mikolov, T., Chen, K., Corrado, G., and Dean, J. (2013a). Efficient estimation of word representations in vector space. In Proceedings of Workshop at International Conference on Learning Representations.

Mikolov, T., Sutskever, I., Chen, K., Corrado, G. S., and Dean, J. (2013b). Distributed representations of words and phrases and their compositionality. In Advances in Neural Information Processing Systems, pages 3111–3119.

Misirlis, N. and Vlachopoulou, M. (2018). Social media metrics and analytics in marketing–S3M: A mapping literature review. International Journal of Information Management, 38(1):270–276.

Padilla, R., Costa Filho, C., and Costa, M. (2012). Evaluation of Haar cascade classifiers designed for face detection. World Academy of Science, Engineering and Technology, 64:362–365.

Ravi, K. and Ravi, V. (2015). A survey on opinion mining and sentiment analysis: Tasks, approaches and applications. Knowledge-Based Systems, 89:14–46.

Shan, C. (2012). Learning local binary patterns for gender classification on real-world face images. Pattern Recognition Letters, 33(4):431–437.

Soleymani, M., Garcia, D., Jou, B., Schuller, B., Chang, S.-F., and Pantic, M. (2017). A survey of multimodal sentiment analysis. Image and Vision Computing, 65:3–14.

Soliman, A. B., Eissa, K., and El-Beltagy, S. R. (2017). AraVec: A set of Arabic word embedding models for use in Arabic NLP. In Proceedings of the 3rd International Conference on Arabic Computational Linguistics (ACLing 2017), volume 117, pages 256–265.

Thelwall, M. (2018). Gender bias in sentiment analysis. Online Information Review, 42(1):45–57.

Viola, P. and Jones, M. (2001). Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), volume 1, pages I–I.

Viola, P. and Jones, M. J. (2004). Robust real-time face detection. International Journal of Computer Vision, 57(2):137–154.
