Workflows for Virtual Reality Visualisation and Navigation Scenarios in Earth Sciences

Krokos Mel (1,3; a), Bonali Fabio Luca (2; b), Vitello Fabio (3; c), Antoniou Varvara (4; d), Becciani Ugo (3), Russo Elena (2; e), Marchese Fabio (2; f), Fallati Luca (2; g), Nomikou Paraskevi (4; h), Kearl Martin (1; i), Sciacca Eva (3; j) and Malcolm Whitworth (5; k)

1 University of Portsmouth, School of Creative Technologies, Eldon Building, Winston Churchill Ave, Portsmouth, PO1 2UP, U.K.

2 University of Milano-Bicocca, Department of Earth and Environmental Sciences, Piazza della Scienza 4 – Ed. U04, 20126 Milan, Italy

3 Italian National Institute for Astrophysics (INAF), Astrophysical Observatory of Catania, Italy

4 National and Kapodistrian University of Athens, Department of Geology and Geoenvironment, Panepistimioupoli Zografou, 15784 Athens, Greece

5 University of Portsmouth, School of Earth and Environmental Sciences, Burnaby Road, Portsmouth, PO1 3QL, U.K.

{fabio.vitello, ugo.becciani, eva.sciacca}@inaf.it, {vantoniou, evinom}@geol.uoa.gr, {e.russo11, l.fallati}@campus.unimib.it

Keywords: Virtual Reality, Workflows, Visual Exploration and Discovery, Video Game Engines, Structure from Motion, Digital Terrain and Bathymetric Models.

Abstract: This paper presents generic guidelines for constructing customised workflows that exploit game engine technologies to allow scientists to navigate and interact with their own virtual environments. We have deployed Unity, a cross-platform game engine that is freely available for educational and research purposes. Our guidelines are applicable to both onshore and offshore areas (either separately or merged together) reconstructed from a variety of input datasets, such as digital terrain, bathymetric and structure from motion models, starting from either freely available sources or ad-hoc produced datasets. The deployed datasets span a wide range of resolutions, from a few hundred metres down to a few centimetres. We outline workflows that create virtual scenes not only from digital elevation models but also from real 3D models derived from structure from motion techniques, e.g. in OBJ or COLLADA format. Our guidelines can be transferred to other scientific domains to support virtual reality exploration, e.g. 3D models in archaeology or digital elevation models in astroplanetary sciences.

ORCID iDs:
a https://orcid.org/0000-0001-5149-6091
b https://orcid.org/0000-0003-3256-0793
c https://orcid.org/0000-0003-2203-3797
d https://orcid.org/0000-1111-2222-3333
e https://orcid.org/0000-0001-7744-1224
f https://orcid.org/0000-0001-8384-3019
g https://orcid.org/0000-0002-5816-6316
h https://orcid.org/0000-0001-8842-9730
i https://orcid.org/0000-0003-2016-7601
j https://orcid.org/0000-0002-5574-2787
k https://orcid.org/0000-0003-3702-9694

Mel, K., Luca, B., Fabio, V., Varvara, A., Ugo, B., Elena, R., Fabio, M., Luca, F., Paraskevi, N., Martin, K., Eva, S. and Whitworth, M. Workflows for Virtual Reality Visualisation and Navigation Scenarios in Earth Sciences. DOI: 10.5220/0007765302970304. In Proceedings of the 5th International Conference on Geographical Information Systems Theory, Applications and Management (GISTAM 2019), pages 297-304. ISBN: 978-989-758-371-1. Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

1 INTRODUCTION

Scientific visualisation can be an important aid for the effective analysis and communication of complex information that may otherwise be difficult to convey (Vitello et al., 2018; Dykes et al., 2018). Visualisation is often a fundamental part of the research process, used for understanding, interpreting and exploring, and it can help guide the direction of research, from fine-tuning individual parameters to posing entirely new research questions (Mitasova et al., 2012; Sciacca et al., 2015). Furthermore, virtual reality (VR) can provide scientists with novel navigation mechanisms for exploring outcrops in a fully immersive way, also offering the possibility to interact with them and collect measurements, thus replicating real-world field activities (Fig. 1). The steadily decreasing cost of new VR devices makes immersive VR environments easily accessible in a variety of contexts, e.g. for outreach, research, training, education and dissemination purposes (e.g. Nayyar et al., 2018; Freina and Ott, 2015; Oprean et al., 2018).

Recently, Gerloni et al. (2018) presented a VR platform enabling the survey of geological environments and the assessment of related geo-hazards, targeting a wide audience ranging from early career scientists and civil planning organisations to academics (e.g. students and teachers) and society at large for public outreach. The underlying idea is to provide users with a holistic view of particular geo-hazards by allowing the exploration of specific features from several points of view and at different scales, and to provide rich user experiences through exploration scenarios that may not even be feasible in the field. Such VR platforms can be valuable tools in Earth-related sciences for developing spatial awareness skills, thus helping users to comprehend complex geological features more easily. This paper reports our experiences in creating virtual scenes from different sources for reconstructing onshore and offshore 3D environments.

Owing to rapid technological advances, there is currently an explosion in both the amount and the complexity of scientific datasets. This is manifested by several freely available global Digital Elevation Models (DEMs)/Digital Terrain Models (DTMs) (e.g. http://srtm.csi.cgiar.org/), but also by high-quality DTMs/DEMs/Digital Surface Models (DSMs) derived from airborne acquisition such as LIDAR (e.g. Liu, 2008) and Aerial Structure from Motion (ASfM) approaches (e.g. Westoby et al., 2012; Turner et al., 2012). Freely available DTMs usually cover larger areas at a lower resolution, whereas LIDAR and ASfM approaches are generally used to create smaller DTMs, often characterised by higher resolutions and rich textures. Using dedicated Structure from Motion (SfM) software packages, it is also possible to reconstruct VR environments directly, exploiting 3D model formats such as OBJ (http://paulbourke.net/dataformats/obj/) or COLLADA (https://www.khronos.org/collada/).

Figure 1: Exploration of Icelandic volcanoes through VR.

Freely available offshore datasets are provided by the General Bathymetric Chart of the Oceans (GEBCO, https://www.gebco.net/), supporting pixel sizes of 250 m, as well as by EMODnet-Bathymetry (http://www.emodnet-bathymetry.eu/), which provides harmonised DTMs for the European sea regions with a pixel size of 100 m. To achieve finer scales (e.g. 1 m), scientists normally perform dedicated surveys based on customised multibeam echosounders or even small ROVs (Remotely Operated Vehicles, e.g. Savini et al., 2014).

In the following sections we introduce all the steps necessary to produce VR-enabled scenarios with modern game engines, focusing on Unity, the backbone on which we have developed two workflows for onshore and offshore scenarios.

2 GAME ENGINES FOR VR

Game engines provide sets of tools for building video games for consoles, mobile devices and personal computers. They typically include a rendering module for 2D or 3D graphics, a physics engine or collision detection (and collision response), sound, scripting and animation, but also functionality for artificial intelligence, networking, streaming, memory management, threading, localisation support and scene graphs, and may also include video support for cinematics. A game engine provides mechanisms to control the course of the game and is responsible for its visual presentation according to the game rules. Examples of game engines available for free are Unity (https://unity3d.com) from Unity Technologies, CryEngine (https://www.cryengine.com/) from the German development studio Crytek, and Unreal (https://www.unrealengine.com/) from Epic Games. Overviews and comparisons of different game engines can be found e.g. in O'Flanagan (2014) and Lawson (2016). In the context of this paper, Unity was selected for creating virtual scenarios applicable to Earth Sciences. This game engine is best suited to our development purposes because its cross-platform integration reduces the development effort needed to support different platforms, such as desktops (Windows, macOS, Linux), mobile devices (Android, iOS) and WebGL-based applications. Unity supports full object orientation in C#, which favours modularity and extensibility for building scalable assets that are easily expanded and sustained in the long term. Furthermore, Unity supports several file formats used by industry-leading 3D applications, e.g. 3ds Max or Blender. Finally, Unity is well documented and is supported by large communities of software developers.

3 WORKFLOW MODELS

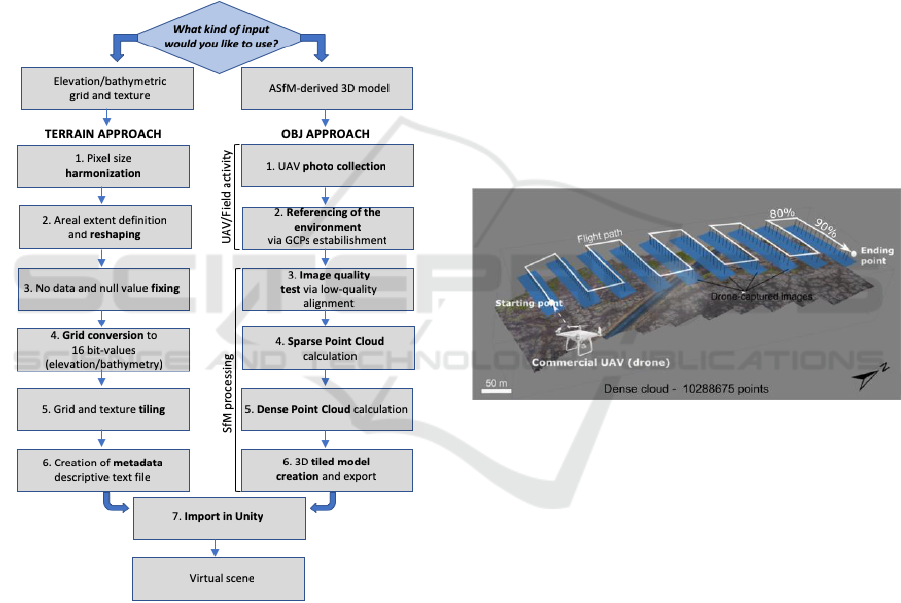

This section describes the two workflows (Fig. 2) developed to generate VR scenes of onshore and offshore environments from different sources.

3.1 VR Scenes from DTM

Onshore and offshore data in DTM/DEM/DSM format can be imported into the game engine using Unity's terrain system, which is based on 16-bit greyscale heightmaps. To prepare the input files, the following are needed: i) an elevation/bathymetric grid for the user-selected area (with the elevation/depth value z in metres); ii) a raster/image file representing the texture for the selected area. These datasets must be georeferenced in metric units. The steps required to merge and process the datasets are detailed below.

STEP 1. Harmonisation: the texture and the elevation grid must have the same pixel size. If necessary, both must be resampled to the best (i.e. finest) available pixel size. This is a critical and mandatory step for managing the assets so that they can be imported into Unity.
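Step 1 can be sketched in Python with NumPy as below. This is a minimal illustration, assuming a 2D array of square cells whose ground extent stays fixed; `resample_bilinear` is a hypothetical helper, and bilinear interpolation is our choice here, not necessarily what Global Mapper or another GIS package uses. For an RGB texture it would be applied to each colour band separately.

```python
import numpy as np


def resample_bilinear(grid: np.ndarray, src_pixel: float, dst_pixel: float) -> np.ndarray:
    """Resample a 2D grid from src_pixel to dst_pixel (metres), keeping its extent.

    Hypothetical helper: bilinear interpolation between the four nearest source
    cell centres; output centres outside the source centres are clamped.
    """
    rows, cols = grid.shape
    out_rows = int(round(rows * src_pixel / dst_pixel))
    out_cols = int(round(cols * src_pixel / dst_pixel))
    scale = dst_pixel / src_pixel
    # Output cell centres expressed as fractional source-cell indices.
    y = np.clip((np.arange(out_rows) + 0.5) * scale - 0.5, 0, rows - 1)
    x = np.clip((np.arange(out_cols) + 0.5) * scale - 0.5, 0, cols - 1)
    y0 = np.floor(y).astype(int)
    x0 = np.floor(x).astype(int)
    y1 = np.minimum(y0 + 1, rows - 1)
    x1 = np.minimum(x0 + 1, cols - 1)
    wy = (y - y0)[:, None]  # vertical weights, one per output row
    wx = (x - x0)[None, :]  # horizontal weights, one per output column
    g = grid.astype(float)
    top = g[np.ix_(y0, x0)] * (1 - wx) + g[np.ix_(y0, x1)] * wx
    bottom = g[np.ix_(y1, x0)] * (1 - wx) + g[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bottom * wy


# Example: a 2 x 2 grid at 100 m resampled to the finer 50 m pixel size.
dem = np.array([[0.0, 10.0], [20.0, 30.0]])
fine = resample_bilinear(dem, src_pixel=100.0, dst_pixel=50.0)
print(fine.shape)  # (4, 4)
```

Resampling the coarser dataset up to the finer pixel size keeps all of the finer data; the cost is a larger grid for the coarser one.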

STEP 2. Reshaping: the elevation grid and the texture must have the same extent. Furthermore, the target area must be square, as it will be divided into square tiles in a later step.
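The square common extent required by Step 2 reduces to a little bounding-box arithmetic. The sketch below assumes both datasets already share the same pixel size in projected metric coordinates (e.g. UTM) and anchors the square at the lower-left (south-west) corner of their overlap; `common_square_extent` and the `Bounds` layout are hypothetical.

```python
from typing import Tuple

# (xmin, ymin, xmax, ymax) in projected metric coordinates, e.g. UTM metres.
Bounds = Tuple[float, float, float, float]


def common_square_extent(a: Bounds, b: Bounds, pixel: float) -> Bounds:
    """Largest square inside the overlap of a and b, anchored at the overlap's
    lower-left corner, whose side is a whole number of pixels."""
    xmin, ymin = max(a[0], b[0]), max(a[1], b[1])
    xmax, ymax = min(a[2], b[2]), min(a[3], b[3])
    if xmin >= xmax or ymin >= ymax:
        raise ValueError("elevation grid and texture do not overlap")
    side = min(xmax - xmin, ymax - ymin)
    side = (side // pixel) * pixel  # round down to whole pixels
    return (xmin, ymin, xmin + side, ymin + side)


# Elevation grid and texture with slightly different extents, 30 m pixels.
print(common_square_extent((0, 0, 1000, 800), (100, -50, 1200, 900), 30))
# (100, 0, 880, 780)
```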

STEP 3. Value Fixing: every cell of both the elevation grid and the texture must hold a valid (non-null, non-empty) value. Missing values must be filled either by interpolation or with a default value lying within the range of the elevation/depth values or RGB colours.
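A minimal sketch of Step 3, assuming missing cells are encoded as NaN: the hypothetical `fill_nodata` repeatedly replaces each empty cell with the mean of its valid up/down/left/right neighbours (a crude interpolation) and falls back to a caller-supplied default, which should lie within the valid value range. Dedicated GIS interpolation tools would usually give smoother results.

```python
from typing import Optional

import numpy as np


def fill_nodata(grid: np.ndarray, default: Optional[float] = None, max_iter: int = 100) -> np.ndarray:
    """Fill NaN cells by repeatedly averaging their valid 4-neighbours;
    any cells still empty afterwards receive `default`."""
    g = grid.astype(float).copy()
    for _ in range(max_iter):
        missing = np.isnan(g)
        if not missing.any():
            return g
        p = np.pad(g, 1, constant_values=np.nan)
        # Up, down, left and right neighbours of every cell.
        nb = np.stack([p[:-2, 1:-1], p[2:, 1:-1], p[1:-1, :-2], p[1:-1, 2:]])
        counts = (~np.isnan(nb)).sum(axis=0)
        sums = np.nansum(nb, axis=0)
        fillable = missing & (counts > 0)
        if not fillable.any():
            break  # no valid neighbours left to interpolate from
        g[fillable] = sums[fillable] / counts[fillable]
    if np.isnan(g).any():
        if default is None:
            raise ValueError("unfilled cells remain and no default value was given")
        g[np.isnan(g)] = default
    return g


filled = fill_nodata(np.array([[1.0, np.nan], [3.0, 5.0]]))
print(filled.tolist())  # [[1.0, 3.0], [3.0, 5.0]]
```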

STEP 4. Grid Conversion: the elevation/depth grid must be adapted for use with the Unity terrain, which is based on a heightmap, i.e. a greyscale image that stores elevation data. Since Unity expects 16-bit unsigned integers (ranging from black to white to represent height, where black is the lowest point and white the highest point), the elevation grid must be scaled to the range 0 to 2^16 - 1 = 65535. The scaled data are then exported into a new elevation grid suitable for further processing.
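The scaling in Step 4 maps z_min linearly to 0 (black) and z_max to 65535 (white). The sketch below uses hypothetical helper names; it also returns z_min and z_max, which are needed later (Step 5, item iii) and, presumably, to set the terrain height in Unity to z_max - z_min. The little-endian byte order of the RAW file is an assumption and must match the byte-order setting chosen when the RAW heightmap is imported into Unity.

```python
import numpy as np


def to_unity_heightmap(z) -> tuple:
    """Scale elevations (metres) linearly to 0..65535 (2^16 - 1): lowest point
    black (0), highest point white (65535), as 16-bit unsigned integers."""
    z = np.asarray(z, dtype=float)
    z_min, z_max = float(z.min()), float(z.max())
    if z_max <= z_min:
        raise ValueError("flat grid: elevation range is zero")
    scaled = np.rint((z - z_min) / (z_max - z_min) * 65535.0)
    return scaled.astype(np.uint16), z_min, z_max


def write_raw(path: str, heights: np.ndarray) -> None:
    """Write a headerless 16-bit RAW file (little-endian byte order assumed)."""
    heights.astype("<u2").tofile(path)


h, z_min, z_max = to_unity_heightmap([[-1400.0, 0.0], [700.0, 2100.0]])
print(h.tolist(), z_min, z_max)
# [[0, 26214], [39321, 65535]] -1400.0 2100.0
```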

STEP 5. Tiling Processing: due to the high resolution and large extent of the areas that are usually managed, we recommend producing a tiled set of the elevation/depth grid-texture. The Global Mapper software package (https://tinyurl.com/y62w52ao) has a function (Elevation Grid Format → UNITY RAW TERRAIN/TEXTURE) that tiles and exports both the texture and the elevation grid data at the same time. Users must set values for a number of parameters, such as the terrain size and the texture image size in pixels, e.g. 4096 for the texture and 4097 for the terrain (Unity requires one additional pixel). Global Mapper then exports the texture in .jpg format and the elevation/depth grid (called terrain in Unity) as a 16-bit RAW file. Both are rotated and ready for the subsequent import steps (Steps 6 and 7). To facilitate the import process into Unity, the following information must be given: i) x and y limits of the model in metric coordinates (e.g. UTM); ii) areal extent of the model; iii) minimum and maximum altitudes of the elevation/depth grid; iv) pixel size; v) number of tiles along x and y; and vi) number of pixels per tile.
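The 4096/4097 convention of Step 5 means that neighbouring terrain tiles share their boundary row or column of height samples, while texture tiles do not overlap. The sketch below mimics that layout with NumPy slicing; it is an illustration under that assumption, not a description of Global Mapper's exporter (which also rotates its outputs), and both helper names are hypothetical.

```python
import numpy as np


def tile_heightmap(h: np.ndarray, tile_px: int) -> dict:
    """Split a square heightmap of n*tile_px + 1 samples per side into n x n tiles
    of tile_px + 1 samples; neighbouring tiles share their edge row/column."""
    size = h.shape[0]
    if h.shape != (size, size) or (size - 1) % tile_px:
        raise ValueError("heightmap must be square with n * tile_px + 1 samples per side")
    n = (size - 1) // tile_px
    return {
        (r, c): h[r * tile_px:(r + 1) * tile_px + 1, c * tile_px:(c + 1) * tile_px + 1]
        for r in range(n)
        for c in range(n)
    }


def tile_texture(tex: np.ndarray, tile_px: int) -> dict:
    """Split a square texture of n*tile_px pixels per side into non-overlapping tiles."""
    n = tex.shape[0] // tile_px
    return {
        (r, c): tex[r * tile_px:(r + 1) * tile_px, c * tile_px:(c + 1) * tile_px]
        for r in range(n)
        for c in range(n)
    }


# Toy example: 2 x 2 tiles of 4 pixels (5 heightmap samples) each.
hm = np.arange(81, dtype=np.uint16).reshape(9, 9)
tiles = tile_heightmap(hm, 4)
print(len(tiles), tiles[(0, 0)].shape)  # 4 (5, 5)
print((tiles[(0, 0)][:, -1] == tiles[(0, 1)][:, 0]).all())  # True
```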

STEP 6. Metadata Association: the tiled elevation/depth grid-texture produced in Step 5 is finally associated with descriptive text files that add information about the real environment depicted in the data. The essential information these files must contain is the easting, northing and elevation. The files are formatted as JSON strings and are used to calculate the position of the player in real-world coordinates.
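A sketch of how the JSON metadata of Step 6 might be used, assuming hypothetical keys `easting`, `northing` and `elevation` for each tile's south-west corner and lowest elevation, 1 Unity unit = 1 m, and Unity's convention of x pointing east, y up and z north, with no further rotation or scaling of the tile.

```python
import json


def tile_metadata(easting: float, northing: float, elevation: float) -> str:
    """JSON string describing one tile (hypothetical keys): real-world easting and
    northing of its south-west corner and the elevation of its lowest point."""
    return json.dumps({"easting": easting, "northing": northing, "elevation": elevation})


def player_world_position(meta_json: str, x: float, y: float, z: float) -> tuple:
    """Convert a position inside a tile, in Unity metres (x east, y up, z north,
    origin at the tile's south-west corner and lowest point), into real-world
    easting, northing and elevation."""
    meta = json.loads(meta_json)
    return (meta["easting"] + x, meta["northing"] + z, meta["elevation"] + y)


meta = tile_metadata(350000.0, 4000000.0, -1400.0)
print(player_world_position(meta, 10.0, 5.0, 20.0))  # (350010.0, 4000020.0, -1395.0)
```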

STEP 7. Tiles Importing: this step is required for large areas and/or high resolutions, where the number of resulting tiles can be prohibitively large for manual import. As an example, in Gerloni et al. (2018) importing a high-resolution terrain and texture at 2 cm/pixel resulted in a total of 1681 tiles of 512x512 pixels each. To automate the import and alignment of such large numbers of tiles, the Unity editor functionality has been extended with ad-hoc code made available on GitHub (https://tinyurl.com/y268jsg2).

Figure 2: Conceptual overview summarising the workflows.
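For the Gerloni et al. (2018) example, 1681 tiles form a 41 x 41 grid if the layout is square (an assumption), and each 512-pixel tile at 2 cm/pixel spans 512 x 0.02 = 10.24 m, i.e. roughly 420 m per side overall. The sketch below computes where each tile would be placed and which tiles are its neighbours; the (left, top, right, bottom) order mirrors Unity's `Terrain.SetNeighbors`, but `tile_layout` itself is hypothetical and is not the GitHub code referenced above.

```python
import math


def tile_layout(n_tiles: int, tile_px: int, pixel_m: float) -> dict:
    """Hypothetical helper: place n_tiles square tiles on an n x n grid.

    Returns, for each (row, col), the tile's south-west corner position
    (x east, z north, in metres) and its (left, top, right, bottom) neighbours,
    with None where the tile lies on the edge of the grid.
    """
    n = math.isqrt(n_tiles)
    if n * n != n_tiles:
        raise ValueError("expected a square number of tiles")
    tile_m = tile_px * pixel_m  # ground size of one tile

    def neighbour(r: int, c: int):
        return (r, c) if 0 <= r < n and 0 <= c < n else None

    layout = {}
    for r in range(n):  # rows grow northwards
        for c in range(n):  # columns grow eastwards
            layout[(r, c)] = {
                "position": (c * tile_m, r * tile_m),
                "neighbours": (neighbour(r, c - 1), neighbour(r + 1, c),
                               neighbour(r, c + 1), neighbour(r - 1, c)),
            }
    return layout


layout = tile_layout(1681, 512, 0.02)
print(len(layout), layout[(0, 0)]["neighbours"])
# 1681 (None, (1, 0), (0, 1), None)
```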

3.2 VR Scenes from ASfM 3D Models

This section describes the main steps needed to reconstruct and process the real environment and render it into the VR scene as a tiled 3D OBJ model. The environment can be reconstructed using the ASfM approach (e.g. Westoby et al., 2012), which provides a centimetric texture pixel size. The SfM technique identifies matching features in different images captured along suitable UAV flight paths (Fig. 3) and combines them to create sparse and dense point clouds, a mesh, a texture and, most importantly for VR, a high-resolution tiled 3D model (e.g. Westoby et al., 2012).

STEP 1. Photo Collection: UAV-captured photos have been collected with an overlap of 90% along the flight path and 80% in the lateral direction, which helps to achieve a good alignment of the images and to reduce distortions in the resulting orthomosaics; they have been processed with Agisoft PhotoScan (http://www.agisoft.com/), a commercial Structure from Motion (SfM) software package. We used a quadcopter (DJI Phantom 4 Pro) acquiring an image every 2 seconds (equal time interval mode) at a constant velocity of 3 m/s, to minimise motion blur and to achieve well-balanced camera settings (exposure time, ISO, aperture), ensuring sharp, sufficiently exposed images with low noise. The UAV flight path has been planned and managed using the DJI Ground Station Pro software (https://www.dji.com/ground-station-pro).
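The acquisition parameters of Step 1 are linked by simple arithmetic: at 3 m/s with one photo every 2 s, exposures are 6 m apart, so 90% forward overlap requires an along-track ground footprint of at least 6 / (1 - 0.9) = 60 m. The helpers below (hypothetical names) assume a nadir-pointing pinhole camera over flat ground; sensor size and focal length must be taken from the camera's specifications.

```python
def ground_footprint(height_m: float, sensor_mm: float, focal_mm: float) -> float:
    """Ground length covered by one image dimension (nadir pinhole camera, flat ground)."""
    return height_m * sensor_mm / focal_mm


def forward_overlap(footprint_m: float, speed_ms: float, interval_s: float) -> float:
    """Fraction of along-track overlap between consecutive photos."""
    return 1.0 - (speed_ms * interval_s) / footprint_m


def max_interval(footprint_m: float, speed_ms: float, overlap: float) -> float:
    """Longest time between photos (s) that still gives the requested overlap."""
    return (1.0 - overlap) * footprint_m / speed_ms


# 3 m/s and one photo every 2 s: exposures are 6 m apart.
print(forward_overlap(60.0, 3.0, 2.0))  # 0.9 (90%) for a 60 m along-track footprint
```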

Figure 3: UAV flight path (white line) at a flight height of 50 m. The dense point cloud resulting from SfM is shown.

STEP 2. Environment Referencing: in order to allow the co-registration of datasets and the calibration of the 3D model, World Geodetic System (WGS84) coordinates have been established for at least four artificial Ground Control Points (GCPs) near the corners of each surveyed area, plus one in the central part to reduce the so-called doming effect resulting from SfM processing (e.g. James et al., 2017).

STEP 3. Image Quality Test: first, an initial low-quality image alignment was obtained, considering only the measured camera locations (Reference preselection mode). Images with a quality value below 0.5, or out of focus, were then disabled and thus excluded from further photogrammetric processing, as suggested in the software's user manual.
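The quality filter of Step 3 amounts to a threshold on per-image scores. A trivial sketch, assuming the scores have already been exported from the SfM software into a dictionary keyed by (hypothetical) file names; out-of-focus images would still need to be flagged separately.

```python
def select_images(quality: dict, threshold: float = 0.5) -> tuple:
    """Split images into those kept and those disabled by an image-quality
    threshold (scores below `threshold` are disabled)."""
    kept = sorted(name for name, q in quality.items() if q >= threshold)
    disabled = sorted(name for name, q in quality.items() if q < threshold)
    return kept, disabled


# Hypothetical file names and scores.
scores = {"IMG_0001.JPG": 0.82, "IMG_0002.JPG": 0.41, "IMG_0003.JPG": 0.67}
kept, disabled = select_images(scores)
print(kept, disabled)  # ['IMG_0001.JPG', 'IMG_0003.JPG'] ['IMG_0002.JPG']
```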


STEP 4. Sparse Point Cloud Calculation: after this first quality check, ground control points were marked in all images where available, in order to: i) scale and georeference the point cloud (and thus the resulting model); ii) optimise extrinsic parameters, such as estimated camera locations and orientations; iii) improve the accuracy of the final model. The photos were then realigned using high-accuracy settings, resulting in a better estimate of camera locations and orientations, and the sparse point cloud was computed.
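Item i) of Step 4, scaling and georeferencing the model with GCPs, can be illustrated with a least-squares similarity (Helmert) transform estimated by Umeyama's SVD method. This is a swapped-in, simplified technique: PhotoScan's GCP-based optimisation also refines camera parameters in a bundle adjustment, which the sketch does not attempt. It assumes at least three non-collinear GCPs with known model and projected world (e.g. UTM) coordinates; `similarity_from_gcps` is hypothetical.

```python
import numpy as np


def similarity_from_gcps(model_pts: np.ndarray, world_pts: np.ndarray):
    """Least-squares scale s, rotation R and translation t such that
    world ~ s * R @ model + t (Umeyama's SVD method), from N x 3 arrays of
    matching GCP coordinates (at least 3 non-collinear points)."""
    n = model_pts.shape[0]
    mu_m, mu_w = model_pts.mean(axis=0), world_pts.mean(axis=0)
    xm, xw = model_pts - mu_m, world_pts - mu_w
    cov = xw.T @ xm / n  # cross-covariance between world and model points
    U, S, Vt = np.linalg.svd(cov)
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0  # enforce a proper rotation (no reflection)
    R = U @ D @ Vt
    s = np.trace(np.diag(S) @ D) / ((xm ** 2).sum() / n)
    t = mu_w - s * R @ mu_m
    return s, R, t


# Synthetic check with hypothetical GCPs: rotate 30 degrees about z,
# scale by 2.5 and shift into UTM-like coordinates.
a = np.radians(30.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0],
                   [np.sin(a), np.cos(a), 0.0],
                   [0.0, 0.0, 1.0]])
model = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 1.0], [0.0, 12.0, 2.0],
                  [9.0, 11.0, 0.5], [5.0, 6.0, 4.0]])
world = 2.5 * model @ R_true.T + np.array([350000.0, 4000000.0, 100.0])
s, R, t = similarity_from_gcps(model, world)
print(round(float(s), 6))  # 2.5
```

With real GCPs the residuals `world - (s * model @ R.T + t)` give a quick check of control-point consistency.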

STEP 5. Dense Point Cloud Calculation: the dense point cloud (e.g. Fig. 3) is then calculated from the sparse point cloud using mild depth filtering and high-quality settings.

STEP 6. Tiled Model Creation: a very high-resolution tiled model representing all 3D objects and vertical surfaces (Fig. 5B) can be built and exported in the Wavefront OBJ format, which is compatible with Unity. We suggest a tile size of 4096x4096 pixels as a compromise between performance and quality.
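To define the scene dimensions in metres (needed in Step 7), the extent of each exported OBJ tile can be read from its geometric vertex records (`v x y z`). A minimal sketch, assuming model coordinates in metres; `obj_bounds` is hypothetical, and texture (`vt`), normal (`vn`) and face (`f`) records are ignored.

```python
def obj_bounds(obj_text: str) -> tuple:
    """Minimum and maximum x, y, z over the geometric vertices ('v' lines)
    of a Wavefront OBJ tile; any extra values after x y z are ignored."""
    mins = [float("inf")] * 3
    maxs = [float("-inf")] * 3
    for line in obj_text.splitlines():
        parts = line.split()
        if len(parts) >= 4 and parts[0] == "v":
            for i in range(3):
                value = float(parts[i + 1])
                mins[i] = min(mins[i], value)
                maxs[i] = max(maxs[i], value)
    if mins[0] == float("inf"):
        raise ValueError("no vertices found")
    return tuple(mins), tuple(maxs)


sample = "v 0 0 0\nv 4.5 1.2 -3\nvt 0.5 0.5\nv -1 2 1\nf 1/1 2/1 3/1\n"
lo, hi = obj_bounds(sample)
print(lo, hi)  # (-1.0, 0.0, -3.0) (4.5, 2.0, 1.0)
print(tuple(b - a for a, b in zip(lo, hi)))  # size along x, y, z: (5.5, 2.0, 4.0)
```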

STEP 7. Model Rendering Optimisation: to optimise the rendering performance of the scene, we suggest using Levels of Detail (LODs), which reduce the amount of detail shown when the model is far away from the camera.

To make the model robust for navigation purposes, each tile must have its own collider. To match north between the real and the virtual world, the 3D objects must be rotated by 180° about the y axis. Finally, the virtual scene has to be properly referenced and scaled using information derived from SfM processing: the scene dimensions (in metres) and the corresponding geographic coordinates must be defined, as well as the altitude of the reconstructed 3D model. After these steps, the scene is ready to be navigated (e.g. Fig. 5B).
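The 180° rotation of Step 7 maps (x, y, z) to (-x, y, -z), since cos 180° = -1 and sin 180° = 0 in the y-axis rotation matrix. The LOD part of the sketch picks a level from hypothetical distance thresholds; this is a simplification, since Unity's LOD Group component switches levels on the object's relative screen height rather than on raw distance.

```python
def rotate_180_about_y(x: float, y: float, z: float) -> tuple:
    """Rotate a point by 180 degrees about the vertical y axis.

    The rotation matrix [[cos a, 0, sin a], [0, 1, 0], [-sin a, 0, cos a]]
    with a = 180 degrees reduces to negating x and z.
    """
    return (-x, y, -z)


def lod_level(distance_m: float, thresholds_m: tuple = (50.0, 150.0, 400.0)) -> int:
    """Pick a level of detail from camera distance: 0 is the full-resolution
    tile, higher indices are coarser. The thresholds are hypothetical."""
    for level, limit in enumerate(thresholds_m):
        if distance_m < limit:
            return level
    return len(thresholds_m)  # beyond the last threshold: coarsest level


print(rotate_180_about_y(1.0, 2.0, 3.0))  # (-1.0, 2.0, -3.0)
print([lod_level(d) for d in (10.0, 100.0, 1000.0)])  # [0, 1, 3]
```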

4 USE CASES

This section describes the application of the two workflows to two different use cases.

4.1 VR Scene from DTM

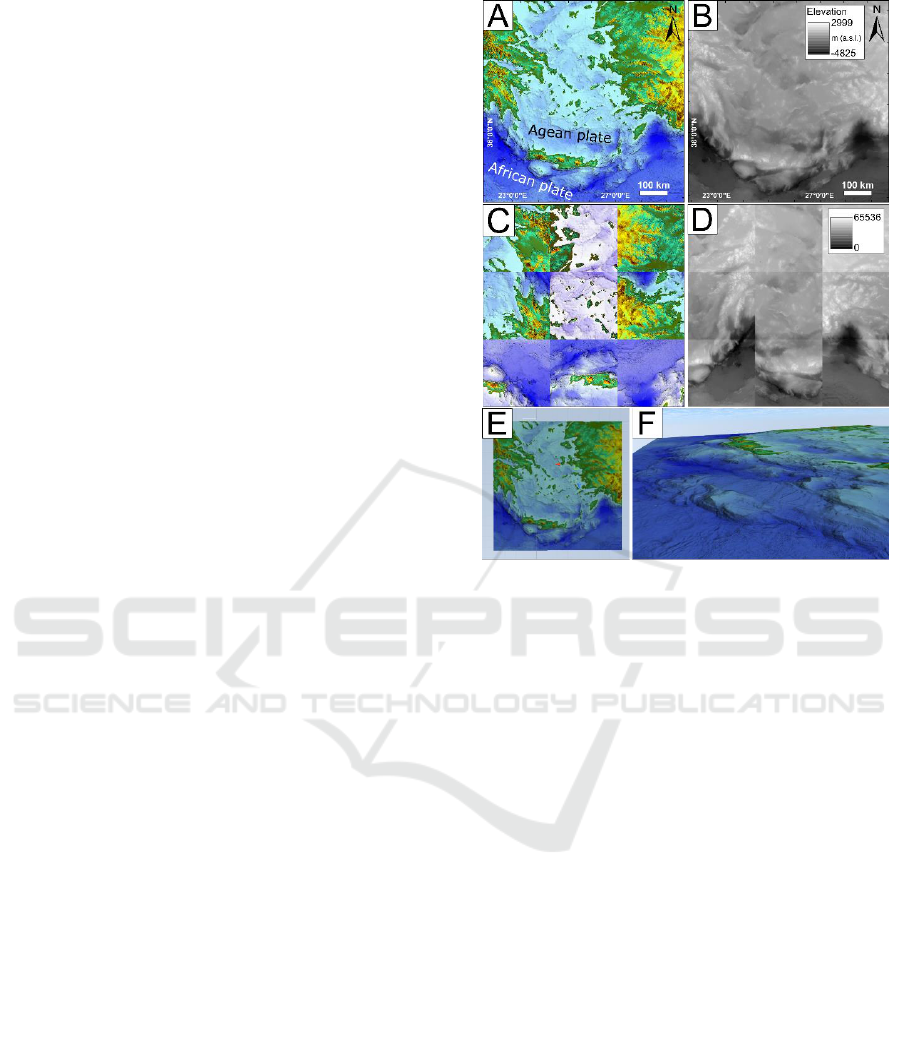

In this use case we demonstrate the workflow discussed in Section 3.1 (Fig. 2) on a large area of the Hellenic arc, using the WGS 84 / UTM zone 35N spatial reference system (Fig. 4).

Figure 4: (A) texture; (B) elevation grid; (C) tiled texture; (D) raw tiled terrain ready to be imported in Unity; (E) tiled model in Unity and (F) VR navigation.

The bathymetry comes from EMODnet-Bathymetry and the DEM from the SRTM v4.1 DEM database (http://srtm.csi.cgiar.org/); the two datasets have different areal extents and pixel sizes. They have been harmonised to the same (best) pixel size, merged and reshaped to a square area, and incorrect values have been fixed (Steps 1, 2 and 3; Fig. 4B). The texture (Fig. 4A) has been created from the resulting elevation grid and represents elevation. Grid conversion and tiling (Steps 4-5) are depicted in Figures 4C-D. Finally, Figure 4E shows the resulting tiled model in Unity, whereas VR navigation is depicted in Figure 4F.
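The merge and the elevation-derived texture of this use case can be sketched as follows, assuming both grids are already harmonised (same pixel size and extent) and that the onshore DEM marks sea cells as NaN (no data). The colour ramp (blue shades below sea level, green to brown above) is a hypothetical choice, not the palette used for Fig. 4A, and all helper names are hypothetical.

```python
import numpy as np


def merge_dem_bathymetry(dem: np.ndarray, bathy: np.ndarray) -> np.ndarray:
    """Use the onshore DEM where it has data and the bathymetry elsewhere.
    Both grids are assumed harmonised, with NaN marking DEM cells without data."""
    return np.where(np.isnan(dem), bathy, dem)


def lerp_colour(t: np.ndarray, c0: tuple, c1: tuple) -> np.ndarray:
    """Linear blend from colour c0 (t = 0) to colour c1 (t = 1), per cell."""
    t = np.clip(t, 0.0, 1.0)[..., None]
    return (1.0 - t) * np.asarray(c0, dtype=float) + t * np.asarray(c1, dtype=float)


def elevation_texture(z: np.ndarray) -> np.ndarray:
    """Hypothetical colour ramp: light to dark blue with depth below sea level,
    green to brown with height above it. Returns an 8-bit RGB image."""
    z = np.asarray(z, dtype=float)
    z_min, z_max = float(z.min()), float(z.max())
    depth_t = z / z_min if z_min < 0 else np.zeros_like(z)   # 1 at the deepest cell
    height_t = z / z_max if z_max > 0 else np.zeros_like(z)  # 1 at the highest cell
    sea = lerp_colour(depth_t, (173, 216, 230), (0, 0, 139))
    land = lerp_colour(height_t, (34, 139, 34), (139, 69, 19))
    rgb = np.where((z < 0)[..., None], sea, land)
    return np.rint(rgb).astype(np.uint8)


merged = merge_dem_bathymetry(np.array([[np.nan, 120.0], [np.nan, 300.0]]),
                              np.array([[-50.0, -10.0], [-80.0, -5.0]]))
print(merged.tolist())  # [[-50.0, 120.0], [-80.0, 300.0]]
texture = elevation_texture(merged)
print(texture.shape)  # (2, 2, 3)
```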

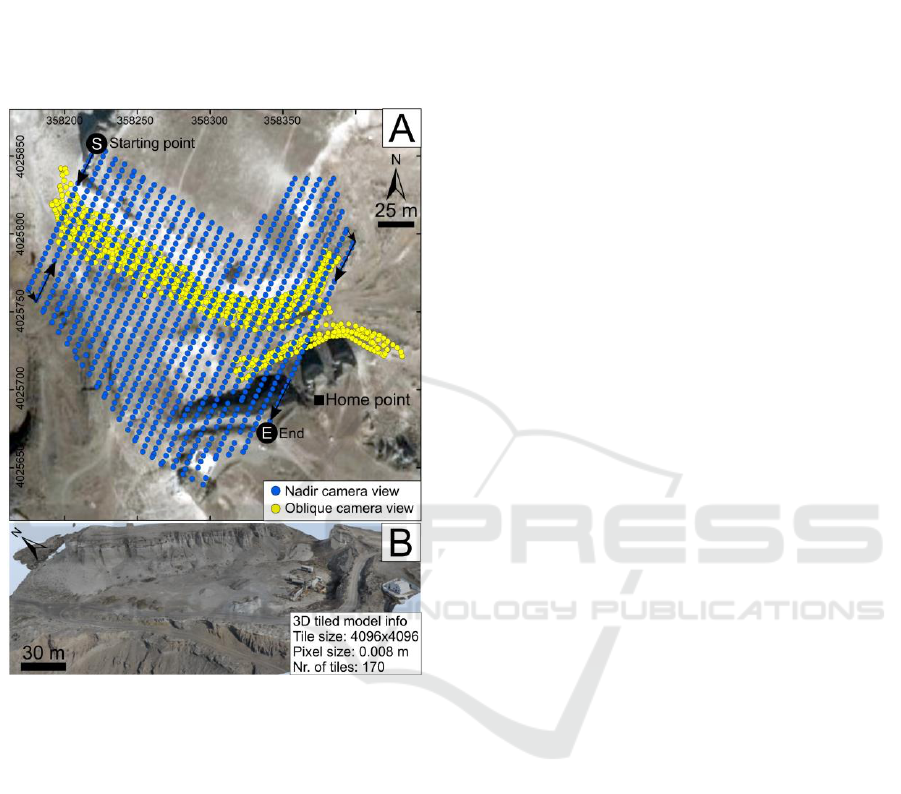

4.2 VR Scene from OBJ Model

In this use case we demonstrate the workflow discussed in Section 3.2, focusing on the western part of the Metaxa Mine, Santorini, Greece. The first UAV flight was dedicated to capturing a set of photos in nadir camera view (Fig. 5A) with the suggested overlap (Bonali et al., 2019). The photos were captured every 2 seconds, at an altitude of 20 m above the highest point of the ground (Home point) and at a constant velocity of 2 m/s. The second flight collected photos of the vertical outcrops, with the camera oriented orthogonally to the vertical cliff, in order to add detail to the model. A set of 20 GCPs was also included to co-register the 3D model to the WGS84 reference system. The resulting 3D model covers 349 x 383 m and is composed of 170 tiles of 4096x4096 pixels each, with a pixel resolution of 8 mm; it has been imported into the Unity engine (Fig. 5B).

Figure 5: (A) Location of UAV-captured images, spatial reference: WGS 84 / UTM zone 35N. (B) 3D tiled model of the western part of the mine, explored in virtual reality.

5 DISCUSSION

We deployed the workflows presented in the earlier sections (Fig. 2) to create Unity scenes for visual exploration through immersive VR. Both approaches (terrain and OBJ) enable immersive user interaction scenarios based on previously reconstructed 3D models. This section reports on our experiences with various models using both approaches and briefly discusses possible improvements and directions for future work. The terrain approach is suitable for DTM/DSM-textures from both freely available sources and ad-hoc created models of onshore/offshore environments, individually or combined. Specifically, this approach works within a wide range of resolutions, from hundreds of metres down to about one metre. Although other works (e.g. Gerloni et al., 2018) have applied a similar approach down to centimetre scale, this may require rescaling within Unity, which imposes excessive memory requirements and results in prohibitively low frame rates. Figure 6A shows an example of the Cratered Cones Near Hephaestus Fossae (on Mars) based on the publicly available HiRISE Digital Terrain Models (https://www.uahirise.org/). The resolution of the area shown is 1 m, whereas its areal extent is 4800 x 4800 m. Figure 6B shows an ad-hoc bathymetric model created from data collected during two oceanographic cruises with a Reson Seabat 8160 Multibeam Echosounder (Savini and Corselli, 2010; Savini et al., 2014). The resulting DTM has a resolution of 40 m and covers depths ranging from 120 m to 1400 m.
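A back-of-the-envelope estimate supports the memory concern above: counting only the 16-bit height samples of a square heightmap, the 4800 x 4800 m HiRISE area needs about 46 MB at 1 m, but about 115 GB at 2 cm. The sketch ignores textures, meshes, colliders and any engine overhead, so real requirements are higher; `heightmap_bytes` is a hypothetical helper.

```python
def heightmap_bytes(extent_m: float, pixel_m: float, bytes_per_sample: int = 2) -> int:
    """Size in bytes of the height samples alone for a square area of
    extent_m x extent_m at pixel_m resolution (16-bit samples by default)."""
    samples_per_side = int(round(extent_m / pixel_m))
    return samples_per_side ** 2 * bytes_per_sample


for pixel in (1.0, 0.02):
    print(pixel, heightmap_bytes(4800.0, pixel) / 1e9, "GB")
# 1.0 0.04608 GB
# 0.02 115.2 GB
```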

The 3D OBJ approach was also tested with several models of different areal extents and resolutions (see Figs. 6C-D), ranging from 8 mm to 1 m. This approach allows improved representations of geometry, especially around features that are vertical with respect to the terrain, as shown in Figure 6D, where an outcrop at Vlychada beach, Santorini, Greece, shows several volcanic layers related to the Minoan eruption (e.g. Nomikou et al., 2016). In our experience so far, both approaches work well in creating VR scenes. A number of optimisations are planned for future developments. Firstly, we would like to avoid any dependencies on proprietary software packages (such as Agisoft PhotoScan, Global Mapper or ArcGIS). Currently, open-source alternatives such as QGIS (https://www.qgis.org/it/site/) and VisualSFM (http://ccwu.me/vsfm/) suffer from a number of limitations, e.g. a non-streamlined user interface for data processing in the former and low-quality point clouds in the latter (e.g. Burns and Delparte, 2017). Additionally, VisualSFM requires further post-processing with other third-party software (Falkingham, 2013). Regarding the use of VR in Earth Sciences, this technology offers a series of benefits for geological and geo-hazard studies.

Firstly, it facilitates the survey of geological environments and the assessment of related geo-hazards: i) the travel time and associated costs of studying remote areas are cut, for example from Europe to South America or Asia (Lanza et al., 2013; Tibaldi et al., 2015); ii) the logistical issues that must be taken into account during mission preparation are overcome, and VR allows access to remote or dangerous areas (e.g. Tibaldi et al., 2008).

Figure 6: VR scenes from elevation/bathymetric grid-texture format (A-B) and 3D OBJ models (C-D).

This technology is also innovative and can be used for research, teaching and outreach activities, reaching a wide audience that spans from early career scientists and civil planning organisations to academic and non-academic people. Finally, it is possible to establish a virtual geological lab where the reconstructed key sites are permanently available for geological exploration and study, thus also opening geological exploration to people affected by motor disabilities.

6 FUTURE DEVELOPMENTS

Our future vision is to build fully open-source workflows that avoid any dependencies on proprietary software. To this end, we will work on integrating existing open-source packages, adding any further functionality they require. Specifically, to streamline the terrain approach for end users, procedures that are as automatic as possible are needed. We envisage the development of a collection of Python scripts to handle tasks such as areal extent definition and pixel homogenisation of both elevation grids and textures, or the conversion to RAW format and the subsequent tiling process. Although the development of our workflows was based on the Unity game engine, we have focused on the generic functionalities of game engines rather than on specific realisations, following a modular approach so that other engines can be supported in future. Our future development plan also includes a formal assessment of user interaction mechanisms under various scenarios, in order to identify VR experiences fully tailored to specific teaching, training and research activities, including scenarios coming from geophysical and lab-scale studies (Tortini et al., 2014; Russo et al., 2017).

7 CONCLUSIONS

We have outlined generic workflow approaches for creating virtual reality scenes based on a variety of sources. We have demonstrated onshore and offshore (including combined) examples using different sources and deploying different workflows. The vision is to build fully automated workflows without any dependencies on proprietary software packages. The work presented in this paper has followed a generic approach applicable to creating virtual scenarios in other disciplines such as geophysics, astroplanetary sciences and archaeology.

ACKNOWLEDGEMENTS

This work is supported by i) 3DTeLC Erasmus+ Project 2017-1-UK01-KA203-036719; ii) MIUR Argo3D projects; iii) MIUR – Dipartimenti di Eccellenza 2018–2022 (http://3dtelc.lmv.uca.fr/; http://www.argo3d.unimib.it/).

REFERENCES

Bonali, F.L., Tibaldi, A., Marchese, F., Fallati, L., Russo, E., Corselli, C. and Savini, A. (2019). UAV-based surveying in volcano-tectonics: An example from the Iceland rift. Journal of Structural Geology, pp.46-64.

Burns, J. and Delparte, D. (2017). Comparison of commercial structure-from-motion photogrammetry software used for underwater three-dimensional modeling of coral reef environments. ISPRS - International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, XLII-2/W3, pp.127-131.

Dykes, T., Hassan, A., Gheller, C., Croton, D. and Krokos, M. (2018). Interactive 3D Visualization for Theoretical Virtual Observatories. MNRAS, 477, pp.1495-1507.

Falkingham, P.L. (2013). Generating a photogrammetric model using Visual SFM, and post-processing with meshlab. Brown University, Tech. Rep.

Freina, L. and Ott, M. (2015). A Literature Review on Immersive Virtual Reality in Education: State of The Art and Perspectives. eLearning & Software for Education, 1.

Gerloni, I.G., Carchiolo, V., Vitello, F.R., Sciacca, E., Becciani, U., Costa, A., ... and Marchese, F. (2018). Immersive Virtual Reality for Earth Sciences. In 2018 Federated Conference on Computer Science and Information Systems (FedCSIS), IEEE, pp.527-534.

James, M.R., Robson, S., d'Oleire-Oltmanns, S. and Niethammer, U. (2017). Optimising UAV topographic surveys processed with structure-from-motion: ground control quality, quantity and bundle adjustment. Geomorphology, 280, pp.51-66.

Lanza, F., Tibaldi, A., Bonali, F.L. and Corazzato, C. (2013). Space–time variations of stresses in the Miocene–Quaternary along the Calama–Olacapato–El Toro Fault Zone, Central Andes. Tectonophysics, 593, pp.33-56.

Lawson, E. (2016). Game engine analysis. Dublin, available at: www.gamesparks.com/blog/game-engine-analysis/

Liu, X. (2008). Airborne LiDAR for DEM generation: some critical issues. Progress in Physical Geography, 32(1), pp.31-49.

Mitasova, H., Harmon, R.S., Weaver, K.J., Lyons, N.J. and Overton, M.F. (2012). Scientific visualization of landscapes and landforms. Geomorphology, 137(1), pp.122-137.

Nayyar, A., Mahapatra, B., Le, D. and Suseendran, G. (2018). Virtual Reality (VR) & Augmented Reality (AR) technologies for tourism and hospitality industry. International Journal of Engineering & Technology, 7(2.21), pp.156-160.

Nomikou, P., Druitt, T., Hübscher, C., Mather, T., Paulatto, M., Kalnins, L., Kelfoun, K., Papanikolaou, D., Bejelou, K., Lampridou, D., Pyle, D., Carey, S., Watts, A., Weiß, B. and Parks, M. (2016). Post-eruptive flooding of Santorini caldera and implications for tsunami generation. Nature Communications, 7(1), p.13332.

O'Flanagan, J. (2014). Game engine analysis and comparison. Dublin, available at: www.gamesparks.com/blog/game-engine-analysis-and-comparison/

Oprean, D., Simpson, M. and Klippel, A. (2018). Collaborating remotely: an evaluation of immersive capabilities on spatial experiences and team membership. International Journal of Digital Earth, 11(4), pp.420-436.

Russo, E., Waite, G.P. and Tibaldi, A. (2017). Evaluation of the evolving stress field of the Yellowstone volcanic plateau, 1988 to 2010, from earthquake first-motion inversions. Tectonophysics, 700, pp.80-91.

Savini, A. and Corselli, C. (2010). High-resolution bathymetry and acoustic geophysical data from Santa Maria di Leuca Cold Water Coral province (Northern Ionian Sea–Apulian continental slope). Deep Sea Research Part II: Topical Studies in Oceanography, 57(5-6), pp.326-344.

Savini, A., Vertino, A., Marchese, F., Beuck, L. and Freiwald, A. (2014). Mapping cold-water coral habitats at different scales within the Northern Ionian Sea (Central Mediterranean): an assessment of coral coverage and associated vulnerability. PLoS One, 9(1), p.87108.

Sciacca, E., Becciani, U., Costa, A., Vitello, F., Massimino, P., Bandieramonte, M., Krokos, M., Riggi, S., Pistagna, C. and Taffoni, G. (2015). An integrated visualization environment for the virtual observatory: Current status and future directions. Astronomy and Computing, 11, pp.146-154.

Tibaldi, A., Pasquarè, F.A., Papanikolaou, D. and Nomikou, P. (2008). Discovery of a huge sector collapse at the Nisyros volcano, Greece, by on-land and offshore geological-structural data. Journal of Volcanology and Geothermal Research, 177(2), pp.485-499.

Tibaldi, A., Corazzato, C., Rust, D., Bonali, F.L., Mariotto, F.P., Korzhenkov, A.M., Oppizzi, P. and Bonzanigo, L. (2015). Tectonic and gravity-induced deformation along the active Talas–Fergana Fault, Tien Shan, Kyrgyzstan. Tectonophysics, 657, pp.38-62.

Tortini, R., Bonali, F.L., Corazzato, C., Carn, S.A. and Tibaldi, A. (2014). An innovative application of the Kinect in Earth sciences: quantifying deformation in analogue modelling of volcanoes. Terra Nova, 26(4), pp.273-281.

Turner, D., Lucieer, A. and Watson, C. (2012). An automated technique for generating georectified mosaics from ultra-high resolution unmanned aerial vehicle (UAV) imagery, based on structure from motion (SfM) point clouds. Remote Sensing, 4(5), pp.1392-1410.

Vitello, F., Sciacca, E., Becciani, U., Costa, A., Bandieramonte, M., Benedettini, M., Brescia, M., Butora, R., Cavuoti, S., Di Giorgio, A.M. and Elia, D. (2018). Vialactea Visual Analytics Tool for Star Formation Studies of the Galactic Plane. Publications of the Astronomical Society of the Pacific, 130(990), p.084503.

Westoby, M.J., Brasington, J., Glasser, N.F., Hambrey, M.J. and Reynolds, J.M. (2012). 'Structure-from-Motion' photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology, 179, pp.300-314.
