Differential Privacy meets Verifiable Computation: Achieving Strong Privacy and Integrity Guarantees

Georgia Tsaloli and Aikaterini Mitrokotsa

Department of Computer Science and Engineering, Chalmers University of Technology, Gothenburg, Sweden

Keywords:

Verifiable Computation, Differential Privacy, Privacy-preservation.

Abstract:

Often service providers need to outsource computations on sensitive datasets and subsequently publish statistical results over a population of users. In this setting, service providers want guarantees about the correctness of the computations, while individuals want guarantees that their sensitive information will remain private. Encryption mechanisms are not sufficient to prevent all leakage of information, since querying a database about individuals or requesting summary statistics can itself leak information. Differential privacy addresses the paradox of learning nothing about an individual while learning useful information about a population. Verifiable computation addresses the challenge of proving the correctness of computations. Although verifiable computation and differential privacy are important tools in this context, their interconnection has received limited attention. In this paper, we address the following question: How can we design a protocol that provides both differential privacy and verifiable computation guarantees for outsourced computations? We formally define the notion of verifiable differentially private computation (VDPC) and identify the minimal requirements needed to achieve VDPC. Furthermore, we propose a protocol that provides verifiable differentially private computation guarantees and discuss its security and privacy properties.

1 INTRODUCTION

Recent progress in ubiquitous computing has allowed data to be collected by multiple heterogeneous devices, stored and processed by remote, untrusted servers (cloud) and, subsequently, used by third parties. Although this cloud-assisted environment is very attractive and has important advantages, it is accompanied by serious security and privacy concerns for all parties involved. Individuals whose data are stored in cloud servers want privacy guarantees on their data. Because cloud servers are untrusted, they often need to perform computations on encoded data, while service providers want integrity guarantees about the correctness of the outsourced computations.

Encryption techniques can guarantee the confidentiality of information. Nevertheless, querying a database about individuals or requesting summary statistics can lead to leakage of information. In fact, by observing the responses to different statistical queries, an adversary may draw conclusions about individual users and mount differencing and reconstruction attacks (Dwork and Roth, 2014) against a database. Differential privacy (Dwork et al., 2006) addresses the paradox of learning nothing about an individual while learning useful information about a population. Verifiable delegation of computation (Gennaro et al., 2010) provides reliable methods to verify the correctness of outsourced computations. Although differential privacy and verifiable computation have received significant attention separately, their interconnection remains largely unexplored.

In this paper, we investigate the problem of verifiable differentially private computation (VDPC), where we want not only integrity guarantees on the outsourced results but also differential privacy guarantees about them. This problem mainly involves the following parties: (i) a curator who has access to a private database, (ii) an analyst who wants to perform some computations on the private dataset and then publish the computed results, and (iii) one or more readers (verifiers) who would like to verify both that the computations were performed correctly and that the computed results are differentially private.

(Narayan et al., 2015) introduced the concept of verifiable differential privacy and proposed a system (VerDP) that provides verifiable differential privacy guarantees by combining VFuzz (Narayan et al., 2015), a query language for computations, with Pantry (Braun et al., 2013), a system for proof-based verifiable computation. Although VerDP satisfies important privacy and integrity guarantees, it is vulnerable to malicious analysts, who may intentionally leak private information.

Tsaloli, G. and Mitrokotsa, A. Differential Privacy meets Verifiable Computation: Achieving Strong Privacy and Integrity Guarantees. DOI: 10.5220/0007919404250430

In Proceedings of the 16th International Joint Conference on e-Business and Telecommunications (ICETE 2019), pages 425-430

ISBN: 978-989-758-378-0

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In this paper, we formalize the notion of verifiable differentially private computation, providing its formal definition as well as the requirements that need to be satisfied in order to achieve it. Furthermore, we propose a detailed protocol that can be employed to provide verifiable differential privacy guarantees, and we discuss the security and privacy properties it satisfies and how it resolves the identified weaknesses of VerDP (Narayan et al., 2015).

Paper Organization: The paper is organized as follows. In Section 2, we describe the related work. In Section 3, we provide an overview of the general principles of the main building blocks on which verifiable differentially private computation (VDPC) is based: verifiable computation and differential privacy. In Section 4, we describe the minimal requirements needed to achieve verifiable differentially private computations, provide the formal definition of VDPC and describe our proposed publicly verifiable VDPC protocol. In Section 5, we discuss the security and privacy properties that our protocol satisfies and how it resolves open issues in the state of the art. Finally, Section 6 concludes the paper.

2 RELATED WORK

Verifiable computation (VC) is inherently connected to the problem of verifiable differential privacy. VC schemes based on fully homomorphic encryption (Gennaro et al., 2010; Chung et al., 2010) naturally offer input-output privacy because the inputs, and correspondingly the outputs, are encrypted. However, they do not provide public verifiability, which is needed when multiple readers want to verify the correctness of outsourced computations. VC schemes based on homomorphic authenticators restrict the class of supported functions; nevertheless, some of them offer input privacy. For instance, (Fiore et al., 2014) proposed a VC scheme for multivariate polynomials of degree 2 that offers input privacy, yet provides no public verifiability. (Parno et al., 2012) showed how to construct a VC scheme with public verifiability from any attribute-based encryption (ABE) scheme. Since the function's input is encoded in the attribute, the resulting VC scheme is input-private whenever the underlying ABE scheme is attribute-hiding. However, existing VC schemes largely ignore the problem of VDPC.

(Narayan et al., 2015) proposed the VerDP system, which provides important privacy and integrity guarantees based mainly on the Pantry (Braun et al., 2013) VC scheme and the VFuzz (Narayan et al., 2015) language, which can be employed to guarantee the use of differentially private functions. However, in the VerDP system, the analyst has access to the dataset and thus jeopardizes the privacy of the data. We address this challenge by ensuring that analysts may access only encoded data. Equally important is the random noise used to guarantee differentially private computations. We ensure that this noise is generated from a randomness u on which the reader and the curator mutually agree; therefore, no single party can control the random noise term on its own.

3 PRELIMINARIES

In this section, we briefly overview the general principles and recall the definitions of the main building blocks on which verifiable differentially private computation is based: verifiable computation and differential privacy.

3.1 Verifiable Computation

A verifiable computation (VC) scheme can be employed when a client wants to outsource the computation of a function f to an external untrusted server. The client should be able to verify the correctness of the result returned by the server at a lower computational cost than performing the computation itself. Below we provide the definition of a verifiable computation (VC) scheme as introduced by (Parno et al., 2012) and (Fiore and Gennaro, 2012).

Definition 1 (Verifiable Computation (Parno et al., 2012; Fiore and Gennaro, 2012)). A verifiable computation scheme VC is a 4-tuple of PPT algorithms (KeyGen, ProbGen, Compute, Verify), which are defined as follows:

• $\mathsf{KeyGen}(f, 1^{\lambda}) \to (PK_f, EK_f)$: This randomized key generation algorithm takes the security parameter λ and the function f as inputs and outputs a public key $PK_f$ to be used for encoding the function input and a public evaluation key $EK_f$ to be used for evaluating the function f.

• $\mathsf{ProbGen}_{PK_f}(m) \to (\sigma_m, VK_m)$: This randomized problem generation algorithm takes as input the key $PK_f$ and the function input m and outputs $\sigma_m$, which is the encoded version of the function input, and $VK_m$, which is the verification key used in the verification algorithm.

SECRYPT 2019 - 16th International Conference on Security and Cryptography


• $\mathsf{Compute}_{EK_f}(\sigma_m) \to \sigma_y$: Given the key $EK_f$ and the encoded input $\sigma_m$, this deterministic algorithm encodes the function value $y = f(m)$ and returns the result, $\sigma_y$.

• $\mathsf{Verify}_{VK_m}(\sigma_y) \to y \cup \perp$: The deterministic verification algorithm takes as inputs the verification key $VK_m$ and $\sigma_y$ and returns either the function value $y = f(m)$, if $\sigma_y$ represents a valid evaluation of the function f on m, or ⊥ otherwise.

We note here that a publicly verifiable computation scheme is a VC scheme in which the verification key $VK_m$ is public, so that anyone can verify the outsourced computation.
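To make the four algorithms of Definition 1 concrete, here is a minimal Python sketch for a linear function f(m) = Σ a_i·m_i mod P, built on a one-time homomorphic MAC. It is an illustrative toy under our own assumptions, not the construction of (Parno et al., 2012) or (Fiore and Gennaro, 2012): verification is secret ($VK_m$ must be hidden from the server, so the scheme is not publicly verifiable), the encoding $\sigma_m$ reveals m, and for a linear f the client saves no work. The modulus P and all function names are hypothetical.

```python
import secrets
from dataclasses import dataclass

P = 2**61 - 1  # hypothetical prime modulus (a Mersenne prime)


@dataclass
class VerificationKey:
    alpha: int  # secret MAC key, fresh for every input
    rho: int    # sum_i a_i * beta_i mod P, precomputed by the client


def keygen(coeffs):
    """KeyGen: in this toy, PK_f and EK_f both consist of f's coefficients a_i."""
    return list(coeffs), list(coeffs)


def probgen(pk_f, m):
    """ProbGen: tag each m_i as t_i = alpha * m_i + beta_i (mod P)."""
    alpha = secrets.randbelow(P)
    betas = [secrets.randbelow(P) for _ in m]
    sigma_m = [(mi % P, (alpha * mi + bi) % P) for mi, bi in zip(m, betas)]
    rho = sum(a * b for a, b in zip(pk_f, betas)) % P
    return sigma_m, VerificationKey(alpha, rho)


def compute(ek_f, sigma_m):
    """Compute: evaluate f on the values and, homomorphically, on the tags."""
    y = sum(a * mi for a, (mi, _) in zip(ek_f, sigma_m)) % P
    t = sum(a * ti for a, (_, ti) in zip(ek_f, sigma_m)) % P
    return y, t  # sigma_y


def verify(vk_m, sigma_y):
    """Verify: return y if t == alpha * y + rho (mod P), else None (standing for ⊥)."""
    y, t = sigma_y
    return y if t == (vk_m.alpha * y + vk_m.rho) % P else None


pk, ek = keygen([1, 1, 1, 1])            # f = sum of four inputs
sigma_m, vk = probgen(pk, [3, 0, 5, 2])
sigma_y = compute(ek, sigma_m)
print(verify(vk, sigma_y))               # 10
y, t = sigma_y
print(verify(vk, (y + 1, t)))            # None, except with probability 1/P
```

A forged result passes Verify only if the server guesses the secret α, which happens with probability 1/P, because every tag t_i is masked by a fresh uniform β_i and therefore reveals nothing about α.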

3.2 Differential Privacy

Differential privacy is a framework which can be employed in order to achieve strong privacy guarantees. Intuitively, a differentially private mechanism makes it hard to distinguish two neighboring databases (i.e., databases that differ in a single record) storing private data when the same query is performed on both, regardless of the adversary's prior information. For instance, differential privacy implies that, given the result of the queries, one cannot reliably determine whether an individual's record is included in the database or not. (Dwork et al., 2006) formally introduced differential privacy as follows:

Definition 2 (ε-Differential Privacy (Dwork et al., 2006)). A randomized mechanism $\mathcal{M} : \mathcal{D} \to \mathcal{R}$ with domain $\mathcal{D}$ and range $\mathcal{R}$ satisfies ε-differential privacy if for any two adjacent inputs $d, d' \in \mathcal{D}$ and for any subset of outputs $S \subseteq \mathcal{R}$ it holds that:

$$\Pr[\mathcal{M}(d) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(d') \in S]$$

In this notation, ε denotes the privacy parameter, which is a positive real number. A privacy guarantee can be considered strong when ε is close to zero.

Let us consider a deterministic real-valued function $f : \mathcal{D} \to \mathbb{R}$. A common method to approximate the function f with a differentially private mechanism is to add noise calibrated to f's sensitivity $S_f$. The sensitivity $S_f$ of a function f is defined as the maximum possible distance between the replies to the same query addressed to two neighboring databases d and d':

$$S_f = \max_{d, d' \text{ neighboring}} |f(d) - f(d')|.$$

Intuitively, a larger sensitivity demands a stronger countermeasure, i.e., more noise. A commonly employed differentially private mechanism, the Laplace noise mechanism, is defined as follows:

$$\mathcal{M}(d) \triangleq f(d) + \mathrm{Laplace}(\lambda),$$

where Laplace(λ) denotes noise drawn from the zero-mean Laplace distribution with scale λ = S_f/ε, which yields ε-differential privacy (Dwork et al., 2006). Here λ denotes the noise scale, not the security parameter λ of Section 3.1.
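A minimal Python sketch of the Laplace mechanism for a counting query follows. A counting query has sensitivity S_f = 1, since adding or removing one record changes the count by at most one; the sketch assumes the standard scale λ = S_f/ε and draws Laplace noise as the difference of two independent exponential variables with mean λ. The helper names and the toy dataset are hypothetical. The final lines check Definition 2 pointwise on a grid of outputs: for the neighboring databases d and d', the ratio of the two output densities stays at or below e^ε.

```python
import math
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def laplace_mechanism(f, d, sensitivity: float, epsilon: float, rng: random.Random) -> float:
    """M(d) = f(d) + Laplace(lambda), with lambda = S_f / epsilon."""
    return f(d) + laplace_noise(sensitivity / epsilon, rng)


def laplace_pdf(z: float, mu: float, scale: float) -> float:
    return math.exp(-abs(z - mu) / scale) / (2 * scale)


def max_density_ratio(f_d: float, f_d_prime: float, scale: float, grid) -> float:
    """Largest ratio of the output densities of M(d) and M(d') over a grid of outputs z."""
    return max(laplace_pdf(z, f_d, scale) / laplace_pdf(z, f_d_prime, scale) for z in grid)


def count_positive(db) -> int:
    """Hypothetical counting query: number of records flagged as positive (S_f = 1)."""
    return sum(1 for record in db if record["positive"])


d = [{"positive": True}, {"positive": False}, {"positive": True}]
d_prime = d[:-1]          # neighboring database: the last record removed
epsilon = 0.5
rng = random.Random(2019)

print(laplace_mechanism(count_positive, d, 1.0, epsilon, rng))  # a noisy version of the true count 2

grid = [x / 10 for x in range(-100, 101)]
ratio = max_density_ratio(count_positive(d), count_positive(d_prime), 1.0 / epsilon, grid)
print(ratio <= math.exp(epsilon) + 1e-12)  # True
```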

We should note here that, besides providing strong privacy guarantees, it is important to consider the utility of the data when employing a differentially private mechanism. Indeed, each query may leak information about the data stored in the queried database. By increasing the noise, we can provide stronger privacy guarantees, but this also lowers the utility of the released information. Thus, an efficient differentially private mechanism needs to achieve a good trade-off between utility and privacy.
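The trade-off can be made quantitative for the Laplace mechanism, assuming the standard scale λ = S_f/ε: the expected absolute error added to the true answer equals λ, so halving ε doubles the expected error.

```latex
\mathbb{E}\bigl[\,|\mathcal{M}(d) - f(d)|\,\bigr]
  = \int_{-\infty}^{\infty} |z| \, \frac{1}{2\lambda} e^{-|z|/\lambda} \, dz
  = \frac{1}{\lambda} \int_{0}^{\infty} z \, e^{-z/\lambda} \, dz
  = \lambda
  = \frac{S_f}{\varepsilon}
```

For example, a counting query (S_f = 1) answered with ε = 0.1 has an expected absolute error of 10, whereas ε = 1 brings it down to 1.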

4 VERIFIABLE DIFFERENTIALLY PRIVATE COMPUTATION

We consider the problem of verifiable differentially private computations where a curator (e.g., the National Institutes of Health (NIH)) has access to a private database m (e.g., medical information). An analyst (e.g., a researcher) wants to perform some computations on the private dataset and publish the results. One or more readers would like to verify not only the correctness of the computations but also that the computations were performed with a differentially private algorithm. To guarantee differential privacy, the analyst has to execute a differentially private version of a query f on the dataset m, resulting in an output y, without having access to m. The reader should then be convinced that y is the correct result.

In differential privacy (DP), the algorithm is randomized; we model this randomness as a uniformly distributed input u to a deterministic function g(f, ε, u, m). More precisely, the reader should be able to verify that:

$$y = g(f, \varepsilon, u, m) = f(m) + \tau(f, \varepsilon, u),$$

where $u \sim \mathcal{U}$ ($\mathcal{U}$ denotes the uniform distribution) and ε determines the level of differential privacy provided. On the right-hand side, we have decomposed the differentially private (DP) computation into two parts: f(m), which produces the exact query response, and τ, which transforms the uniform noise appropriately in order to achieve the required differential privacy guarantees. For any given query f, the noise distribution described by τ is fixed (e.g., Laplace or Gaussian noise). In summary, the reader needs to verify that all of the following requirements hold:

1. g is an ε-DP computation for the query f. How this is verified is outside the scope of this paper; it could be achieved using the VFuzz language (Narayan et al., 2015).


2. u is from a high-quality pseudo-random source.

3. y is correctly computed from g, which is evaluated on the dataset m with the randomness u.
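One concrete choice of τ for Laplace noise (an illustrative assumption; the requirements above do not prescribe it) is inverse-transform sampling: apply the inverse cumulative distribution function of the zero-mean Laplace distribution with scale S_f/ε to u ∈ (0, 1).

```latex
\tau(f, \varepsilon, u) =
\begin{cases}
  \dfrac{S_f}{\varepsilon} \ln(2u), & 0 < u < \tfrac{1}{2}, \\[6pt]
  -\dfrac{S_f}{\varepsilon} \ln\bigl(2(1 - u)\bigr), & \tfrac{1}{2} \le u < 1,
\end{cases}
\qquad
y = f(m) + \tau(f, \varepsilon, u).
```

If u is uniform on (0, 1), then τ(f, ε, u) is Laplace-distributed with scale S_f/ε, so y has the same distribution as the Laplace mechanism of Section 3.2, while g itself stays deterministic once u is fixed; this determinism is what makes requirement 3 checkable by a VC scheme.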

We first propose the definition of a publicly verifiable differentially private computation scheme $\mathrm{VDPC}_{\mathrm{Pub}}$, which is based on the publicly verifiable computation (VC) definition of Parno et al. (Parno et al., 2012). Subsequently, we describe a concrete protocol that can be employed in order to provide public verifiability (i.e., all readers are able to verify the correctness of the computations) as well as the privacy and integrity guarantees required in this setting.

Definition 3. (Publicly Verifiable Differentially Private Computation) A publicly verifiable differentially private computation scheme $\mathrm{VDPC}_{\mathrm{Pub}}$ is a 5-tuple of PPT algorithms ($\mathsf{Gen}_{\mathsf{DP}}$, KeyGen, ProbGen, Compute, Verify), which are defined as follows:

• $\mathsf{Gen}_{\mathsf{DP}}(f, \varepsilon, u) \to g(f, \varepsilon, u, \cdot)$: Given the function f, the randomness u and the value ε, this algorithm computes $g(f, \varepsilon, u, \cdot) = f(\cdot) + \tau(f, \varepsilon, u)$, with τ denoting the noise used.

• $\mathsf{KeyGen}(g, 1^{\lambda}) \to (PK_g, EK_g)$: Given the security parameter λ and the function g, the algorithm outputs a public key $PK_g$ to be used for encoding the function input and a public evaluation key $EK_g$ to be used for evaluating the function g.

• $\mathsf{ProbGen}_{PK_g}(u \| m) \to (\sigma_{u\|m}, VK_{u\|m})$: This algorithm takes as input the public key $PK_g$ and the function input m as well as the randomness u, and outputs $\sigma_{u\|m}$, which is the encoded version of the function input, and $VK_{u\|m}$, which is used for the verification.

• $\mathsf{Compute}_{EK_g}(\sigma_{u\|m}) \to \sigma_y$: Given the evaluation key $EK_g$ and the encoded input $\sigma_{u\|m}$, this algorithm encodes the function value $y = g(f, \varepsilon, u, m)$ and returns the result, $\sigma_y$.

• $\mathsf{Verify}_{VK_{u\|m}}(\sigma_y) \to y \cup \perp$: Given the verification key $VK_{u\|m}$ and the encoded result $\sigma_y$, this algorithm returns either the function value $y = g(f, \varepsilon, u, m)$, if $\sigma_y$ represents a valid evaluation of the function g on m, or ⊥ otherwise.
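The following Python sketch shows one way $\mathsf{Gen}_{\mathsf{DP}}$ could look, under the illustrative assumptions that τ produces Laplace noise by inverse-transform sampling from u ∈ (0, 1) and that the caller supplies the sensitivity S_f of f. It returns g(f, ε, u, ·) as a closure with u already bound, so the returned function only needs the m part of the input u∥m. The names gen_dp and laplace_tau are hypothetical.

```python
import math
from typing import Callable, Sequence


def laplace_tau(sensitivity: float, epsilon: float, u: float) -> float:
    """tau(f, eps, u): inverse Laplace CDF with scale S_f/eps, evaluated at u in (0, 1)."""
    if not 0.0 < u < 1.0:
        raise ValueError("u must lie strictly between 0 and 1")
    scale = sensitivity / epsilon
    if u < 0.5:
        return scale * math.log(2 * u)          # negative noise
    return -scale * math.log(2 * (1 - u))       # non-negative noise


def gen_dp(f: Callable[[Sequence[float]], float], sensitivity: float,
           epsilon: float, u: float) -> Callable[[Sequence[float]], float]:
    """Gen_DP(f, eps, u) -> g(f, eps, u, .) = f(.) + tau(f, eps, u)."""
    noise = laplace_tau(sensitivity, epsilon, u)

    def g(m: Sequence[float]) -> float:
        return f(m) + noise

    return g


# Hypothetical example: a sum over values in [0, 1] has sensitivity 1.
g = gen_dp(sum, sensitivity=1.0, epsilon=0.5, u=0.8)
m = [0.2, 0.9, 0.4]
print(round(g(m), 4))  # 3.3326 = 1.5 + (-2 * ln 0.4)
```

Note that this closure holds the noise derived from u in the clear, so it is a description for the curator rather than something to hand to the analyst; in the protocol, the analyst only receives $EK_g$ and the encoding $\sigma_{u\|m}$.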

We assume that the reader has verified that g is an ε-DP computation for the query f when u is uniformly distributed, using for instance the VFuzz language (Narayan et al., 2015). We suggest employing a zero-knowledge protocol to prove that u indeed comes from a high-quality pseudo-random source, and a $\mathrm{VDPC}_{\mathrm{Pub}}$ scheme to prove that y is the correct computation of g on the underlying data m. We should note here that, in our definition, we focus on publicly verifiable computation. The definition can, however, be adjusted to the case where a single verifier (reader) is required; a verifiable computation scheme with secret verification can then be incorporated accordingly.

4.1 A Publicly Verifiable Differentially Private Protocol

We consider three main parties in our protocol: (i) the curator, (ii) the analyst, and (iii) one or more readers (verifiers). The reader should obtain the desired result y, a proof of its correctness, and differential privacy guarantees about the performed computation.

The curator has access to some data generated by multiple data subjects (e.g., patients). As soon as the curator collects the data, he chooses the randomness u that will be used to generate the stochasticity in the ε-DP function g. The randomness u is not revealed to any other party, but the curator commits to it so that he cannot change it later. In addition, the curator should convince the reader that the randomness he selected is indistinguishable from a value selected from a uniform distribution, and then use this value u to compute the ε-DP function g(f, ε, u, m).

Although the analyst has no access to the dataset m, only to the encoding $\sigma_{u\|m}$ of the dataset and the randomness u, he is still able to generate the encoded value $\sigma_y$ of y = g(f, ε, u, m). We should note that computing $\sigma_y$ is computationally expensive, which is why the analyst, rather than the curator, performs this operation. Eventually, the reader, having the encoded result $\sigma_y$ and the public verification key $VK_{u\|m}$, is able to verify that what he has received from the analyst is correct and to obtain the ε-DP function value y.

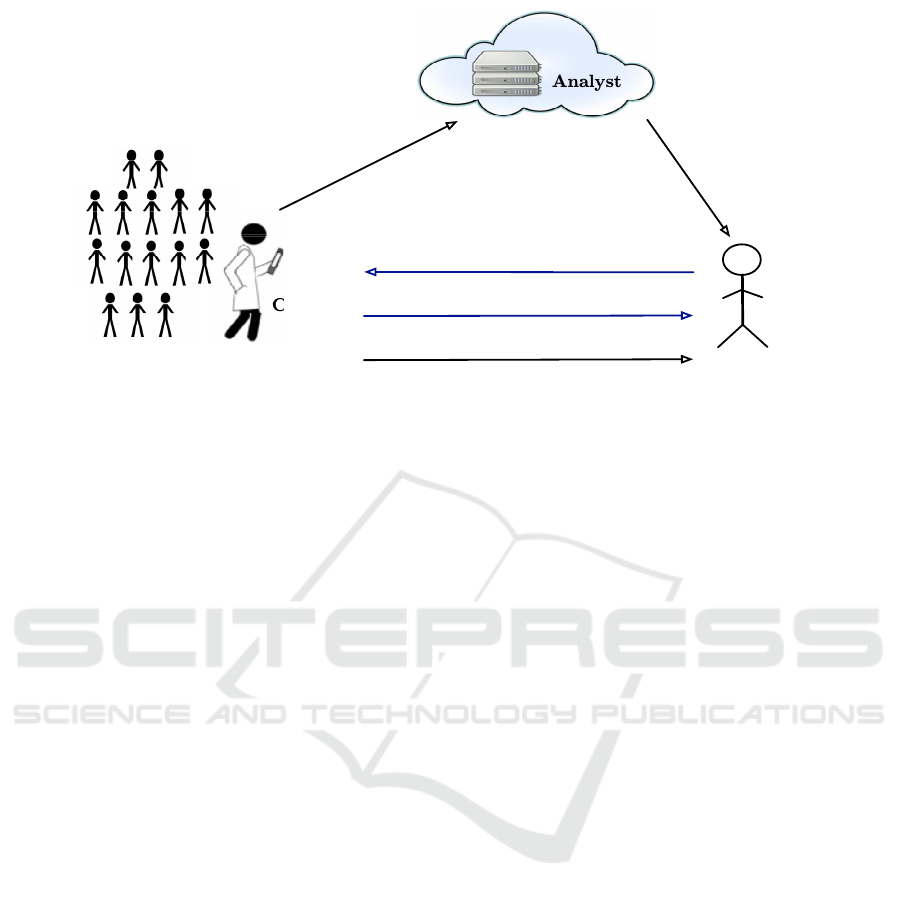

The workflow of our protocol (depicted in Fig. 1) consists of two phases. The first phase focuses on the differential privacy requirements, while the second phase is related to the verifiable computation of the function g.

We stress that, in the first phase, the main issue we want to address is to make sure that the random noise used to compute the differentially private function g from the query function f is generated with a good source of randomness u. This corresponds to the second requirement listed in Section 4 for achieving verifiable differentially private computations. To meet this requirement, we incorporate into our protocol a zero-knowledge protocol that allows us to guarantee that the random noise is generated based on a randomness u on which the reader and the curator mutually agree. We describe the two phases in detail:

• PHASE 1: We are interested in computing an ε-DP function g that depends on the query f. In our model, we do not want to place all the trust in the curator. Thus, the curator should agree with the reader on the randomness u that will be used in computing the (Laplace) noise transformation. However, only the curator should know the value of u. To achieve this, a protocol is run between the reader and the curator. More precisely, (i) the reader chooses an input $x \in \mathcal{X}$, where $\mathcal{X}$ is the input space, and sends it to the curator, and (ii) the latter picks a secret key $k \in \mathcal{K}$ of a pseudorandom function PRF, where $\mathcal{K}$ is the key space of the PRF, and computes the randomness $u = \mathrm{PRF}(k, x)$ and the commitments $p_1 = \mathrm{com}(k; r_1)$ and $p_2 = \mathrm{com}(u; r_2)$, where $r_1, r_2$ denote the randomness of the commitments. After sending $p_1, p_2$ to the reader, the curator runs an interactive zero-knowledge protocol to convince the reader that the statement $(x, p_1, p_2)$ is true. The statement $(x, p_1, p_2)$ is true when there exist $k, r_1, u, r_2$ such that:

$$p_1 = \mathrm{com}(k; r_1) \;\wedge\; p_2 = \mathrm{com}(u; r_2) \;\wedge\; u = \mathrm{PRF}(k, x).$$

In this way, the reader and the curator agree on the randomness u, while its value is available only to the curator.

Figure 1: The workflow of achieving publicly verifiable differential privacy.

• PHASE 2: We now describe how the publicly verifiable differentially private computation scheme $\mathrm{VDPC}_{\mathrm{Pub}}$ is employed in our system.

(a) The curator collects the data from the data subjects (e.g., patients) into a dataset $m = \{m_1, \ldots, m_k\}$.

(b) The curator runs $\mathsf{KeyGen}(g, 1^{\lambda}) \to (PK_g, EK_g)$ to get a short public key $PK_g$ that will be used for input delegation and the public evaluation key $EK_g$ that will be used by the analyst to evaluate the function g. The curator sends $EK_g$ to the analyst.

(c) The curator runs $\mathsf{ProbGen}_{PK_g}(u \| m) \to (\sigma_{u\|m}, VK_{u\|m})$ to get a public encoded value of u and m, called $\sigma_{u\|m}$, and a public value $VK_{u\|m}$ that will be used for the verification. The curator sends $\sigma_{u\|m}$ to the analyst and publishes $VK_{u\|m}$. We note that the curator can send $\sigma_{u\|m}$ to the analyst directly after computing it (i.e., there is no need to store the value). Note also that neither the analyst nor the reader can retrieve any information about the dataset m from $\sigma_{u\|m}$.

(d) The analyst runs the $\mathsf{Compute}_{EK_g}(\sigma_{u\|m}) \to \sigma_y$ algorithm using the evaluation key $EK_g$ and $\sigma_{u\|m}$ in order to obtain the encoded version of $g(f, \varepsilon, u, m_1, \ldots, m_k) = y$. After performing the evaluation, he publishes $\sigma_y$.

(e) Finally, the reader uses $VK_{u\|m}$ and $\sigma_y$ to run the algorithm $\mathsf{Verify}_{VK_{u\|m}}(\sigma_y) \to y \cup \perp$, which indicates whether $\sigma_y$ represents the valid ε-DP output of f or not.
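Phase 1 of the protocol can be sketched in Python under explicit assumptions that are ours, not the paper's: HMAC-SHA256 plays the role of the PRF, com(v; r) is the common hash commitment SHA-256(r ∥ v) with a 32-byte random r, and u is mapped to a float in (0, 1) from its top 52 bits so that it can feed a noise transformation τ. The interactive zero-knowledge proof is not implemented; instead, the hypothetical function relation_holds spells out the statement (x, p1, p2) that such a proof would establish, checked here directly against the witness (k, r1, u, r2).

```python
import hashlib
import hmac
import secrets


def prf(k: bytes, x: bytes) -> bytes:
    """PRF(k, x), instantiated here (an assumption) with HMAC-SHA256."""
    return hmac.new(k, x, hashlib.sha256).digest()


def com(value: bytes, r: bytes) -> bytes:
    """Hash commitment com(value; r) = SHA-256(r || value); r is always 32 bytes."""
    return hashlib.sha256(r + value).digest()


def to_unit_interval(u: bytes) -> float:
    """Map u to a float strictly inside (0, 1) using its top 52 bits."""
    top = int.from_bytes(u, "big") >> (8 * len(u) - 52)
    return (top + 0.5) / 2**52


def relation_holds(x: bytes, p1: bytes, p2: bytes,
                   k: bytes, r1: bytes, u: bytes, r2: bytes) -> bool:
    """Statement (x, p1, p2) with witness (k, r1, u, r2):
    p1 = com(k; r1) and p2 = com(u; r2) and u = PRF(k, x)."""
    return (hmac.compare_digest(p1, com(k, r1))
            and hmac.compare_digest(p2, com(u, r2))
            and hmac.compare_digest(u, prf(k, x)))


# Reader: choose x from the input space and send it to the curator.
x = secrets.token_bytes(16)

# Curator: pick a PRF key k, derive u = PRF(k, x), and commit to k and u.
k = secrets.token_bytes(32)
r1, r2 = secrets.token_bytes(32), secrets.token_bytes(32)
u = prf(k, x)
p1, p2 = com(k, r1), com(u, r2)  # (p1, p2) are sent to the reader

# Stand-in for the ZK proof: check the relation directly with the witness.
print(relation_holds(x, p1, p2, k, r1, u, r2))  # True
print(0.0 < to_unit_interval(u) < 1.0)          # True
```

In the actual protocol the curator never reveals (k, r1, u, r2); an interactive zero-knowledge proof for this same relation convinces the reader without disclosing them, and u stays hidden from the reader as long as k remains secret and the commitments are hiding.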


5 DISCUSSION

By employing our proposed protocol, the readers (verifiers) can obtain strong guarantees about the integrity and correctness of the computed result. To provide strong privacy guarantees, we need to make sure that the random noise used to compute the differentially private function g is generated with a good source of randomness. To address this, we incorporate a zero-knowledge protocol into the $\mathrm{VDPC}_{\mathrm{Pub}}$ protocol. The latter allows us to guarantee that the random noise is generated based on a randomness u on which the reader and the curator mutually agree and, thus, no single party can control the random noise term on its own. Of course, the DP level achieved via our solution depends on the differentially private mechanism employed and can be tuned through the selected ε parameter in order to achieve a good balance between utility and privacy. Curious readers who want to recover private data cannot obtain any information beyond the result of the computation. Moreover, our protocol is secure against malicious analysts, since analysts have access only to encoded information about the dataset m and obtain neither the dataset itself nor the randomness u. Our security requirement is that the computations performed by the analysts should be correct and that the analysts should not be able to obtain any information beyond what is required to perform the computation. This is achieved assuming that the employed (publicly) VC scheme is secure. In contrast to our approach, in the VerDP system the analyst can access the dataset and thus poses a privacy risk. Our approach addresses this by allowing the analysts to access only an encoded form of the data.

6 CONCLUSION

Often, when receiving an output, we want to confirm that it is computed correctly and that it does not leak any sensitive information. Thus, we require not only confidentiality of the data used but also differential privacy guarantees on the computed result, while at the same time being able to verify its correctness. In this paper, we formally define the notion of verifiable differentially private computations (VDPC) and we present a protocol ($\mathrm{VDPC}_{\mathrm{Pub}}$) that can be employed to compute the value of a differentially private function g (i.e., the ε-DP computation for a function f), as well as to check the correctness of the computation.

VDPC is an important security notion that has received limited attention in the literature. We believe that verifiable differentially private computation can have an important impact in a broad range of application scenarios in the cloud-assisted setting that require strong privacy and integrity guarantees. However, a rather challenging point for any verifiable differentially private computation protocol is enabling the end user to verify that a VDPC scheme is used, and making sure that he understands the meaning of the ε parameter. More precisely, we are interested in further investigating how a link can be made between the formal verification process and a human verification of the whole process, especially at the user level.

ACKNOWLEDGEMENTS

This work was partially supported by the Wallenberg AI, Autonomous Systems and Software Program (WASP) funded by the Knut and Alice Wallenberg Foundation.

REFERENCES

Braun, B., Feldman, A. J., Ren, Z., Setty, S., Blumberg, A. J., and Walfish, M. (2013). Verifying computations with state. In Proceedings of SOSP, pages 341–357.

Chung, K.-M., Kalai, Y. T., and Vadhan, S. P. (2010). Improved delegation of computation using fully homomorphic encryption. In CRYPTO, volume 6223, pages 483–501.

Dwork, C., McSherry, F., Nissim, K., and Smith, A. D. (2006). Calibrating noise to sensitivity in private data analysis. In Proceedings of TCC, pages 265–284.

Dwork, C. and Roth, A. (2014). The algorithmic foundations of differential privacy. Foundations and Trends in Theoretical Computer Science, 9(3-4):211–407.

Fiore, D. and Gennaro, R. (2012). Publicly verifiable delegation of large polynomials and matrix computations, with applications. In Proceedings of CCS, pages 501–512.

Fiore, D., Gennaro, R., and Pastro, V. (2014). Efficiently verifiable computation on encrypted data. In Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security, pages 844–855.

Gennaro, R., Gentry, C., and Parno, B. (2010). Non-interactive verifiable computing: Outsourcing computation to untrusted workers. In Advances in Cryptology–CRYPTO 2010, pages 465–482.

Narayan, A., Feldman, A., Papadimitriou, A., and Haeberlen, A. (2015). Verifiable differential privacy. In Proceedings of the Tenth European Conference on Computer Systems, page 28.

Parno, B., Raykova, M., and Vaikuntanathan, V. (2012). How to delegate and verify in public: Verifiable computation from attribute-based encryption. In Proceedings of TCC, pages 422–439.
