Development of an Experimental Strawberry Harvesting Robotic System

Dimitrios S. Klaoudatos¹ᵃ, Vassilis C. Moulianitis²,³ᵇ and N. A. Aspragathos³ᶜ

¹ Department of Electrical and Computer Engineering, University of Patras, Patra, Greece

² Department of Product and Systems Design Engineering, University of the Aegean, Ermoupolis, Syros, Greece

³ Mechanical Engineering and Aeronautics Department, University of Patras, Patra, Greece

Keywords:

Agriculture Robotics, Harvesting Robot, Robot Vision, Robotic Gripper, Robot Operating System, Occluded Crops.

Abstract:

This paper presents the development of an integrated strawberry harvesting robotic system, tested in lab conditions, as a contribution to the automation of strawberry harvesting. The developed system consists of three main subsystems: the vision system, the manipulator and the gripper. The vision-based procedure for strawberry identification and localization is presented in detail. The performance of the robotic system is assessed through lab experiments on the recognition of occluded strawberries, the inspection of the strawberries for bruises after grasping, and the accuracy of detecting the strawberries' location. The results show that the developed vision algorithm correctly recognizes every single (non-occluded) strawberry and recognizes occluded strawberries with high accuracy when the largest part of each of them is visible. The localization error is small enough to allow a correct grasp and cut without damaging the fruit.

1 INTRODUCTION

The agriculture sector is changing due to the use of new technologies such as automation, which provide significant benefits to farmers. This paper deals with the automation of the strawberry harvest; the strawberry is one of the most popular and profitable berries. Strawberry farmers around the world face serious labor shortages because of the tedious working conditions and general social and financial conditions. Today, table-top cultivation of strawberries (see Figure 1) is very common, which facilitates robotic harvesting because the berries are more accessible and are separated from the leaves. In this way, harvesting robots offer quality, higher productivity, since they can operate throughout the whole day, and higher profits to producers, without requiring changes to the layout and size of the cultivation.

The actions involved in harvesting are the detection, the approach, the grasp and the placement of the strawberry in a small box. To automate this procedure, these actions must be accomplished by a robotic system, which should contain at least a computer vision system for the detection and localization of the mature fruit, a manipulator that moves the gripper towards the fruit, and a gripper that grasps it.

ᵃ https://orcid.org/0000-0002-5831-3363

ᵇ https://orcid.org/0000-0003-1822-5091

ᶜ https://orcid.org/0000-0002-7662-5942

A suitable robot vision system must include methods for recognizing and localizing the strawberry. Recognition of the strawberry is also used for quality control of the fruit, such as checking its maturity and the presence of diseases and damage. Methods implemented for other fruits can also be used for strawberries with some modifications. Mature fruits are usually recognized using color-based methods (Slaughter and Harrell, 1987) or by measuring the ratio of the mature region of the strawberry to the immature region (Hayashi et al., 2009) and (Feng et al., 2008). Recognizing diseases is very useful because defective strawberries must be separated from healthy ones; this is implemented with image segmentation methods (Narendra and Hareesha, 2010).

Precisely locating the strawberries is the most challenging step in automated harvesting, because strawberries can be occluded by other strawberries, leaves and other objects. Methods with various success rates have been developed to solve this problem. (Stajnko et al., 2004) used a thermal camera (LWIR) combined with an image segmentation method to analyze apple crops, taking advantage of the difference in infrared radiation between the fruit and the leaves. The success rate of this method increased when the pictures were taken in the evening, when the temperature difference between the apples and the environment is high. (Yang et al., 2007) proposed a 3D stereo vision system and color-based image segmentation to distinguish tomato clusters effectively, but they did not achieve the separation of each tomato from its cluster because the tomatoes had the same depth. (Nguyen et al., 2014) used the redness of each pixel in the color image of an RGB-D camera to distinguish the apples from the background, applied the RANSAC algorithm, and obtained the center of each detected apple with an iterative estimation method applied to the partially visible target object data.

Figure 1: Strawberries in table-top cultivation.

Klaoudatos, D., Moulianitis, V. and Aspragathos, N. Development of an Experimental Strawberry Harvesting Robotic System. DOI: 10.5220/0007934004370445. In Proceedings of the 16th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2019), pages 437-445. ISBN: 978-989-758-380-3. Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

Object classification methods have been applied on top of image segmentation to cope with occlusions. These methods build a model of the object to be detected from training image samples in different variations. The Viola-Jones algorithm (Viola and Jones, 2001) is very efficient for real-time object detection because its cascade structure makes the classifier extremely fast. (Puttemans et al., 2016) applied a semi-supervised fruit detector for strawberries and apples using these object classification techniques. Detection accuracy improved compared to previous methods, but only for the fruits used as training samples, and precisely labeling a sample as positive or negative remains a problem. (Li et al., 2018) used a deep learning method to recognize elevated mature strawberries: a neural network was trained to recognize overlapping and occluded strawberries. This achieves very high detection accuracy, and the low average recognition time makes it suitable for real-time machine picking, but training the deep network requires many iterations to reach high accuracy, as well as capturing and processing a huge number of strawberry training images. The cutting points on the peduncles of double overlapping grape clusters are detected in (Luo et al., 2018). The edges of the clusters are extracted and the intersection points of the contours of the two overlapping grape clusters are calculated based on a geometric model, but the method cannot handle more than two overlapping grape clusters.

In previous works, various types of grippers have been developed to ensure a sufficient grasp of the strawberry without damaging it. A common type of gripper consists of scissors that cut and hold the strawberry by its peduncle to avoid damaging the fruit. In addition, (Hayashi et al., 2009) used a suction device that holds the fruit before it is separated from the peduncle, provided the localization error is small. (Hemming et al., 2014) developed a gripper whose fingers adapt to the fruit; it also contained a mechanism for cutting the fruit. These types of grippers have some drawbacks: scissors leave a piece of stem on the strawberry, which is undesirable, and the suction device may seriously damage the fruit. (Dimeas et al., 2015) designed and constructed a system that grasps and cuts the strawberry the way a laborer would. The strawberry is localized with respect to the fingers using a haptic sensor, and a fuzzy control system regulates the force applied to the strawberry for a correct hold. The fruit is separated from the peduncle by rotating the grasped strawberry by 45°.

In this paper, a robotic system for strawberry harvesting is presented, with emphasis on the visual recognition and localization of the crop. The vision system is based on a modified version of the approach presented in (Luo et al., 2018), adapted to strawberries. The Kinect V2 sensor is used and the system is integrated in ROS. The methods for object detection, namely image segmentation based on a color model, feature detection and object classification, are presented. The system is tested on occluded crops and, for single crops, on position accuracy, removal success and crop damage.

The remainder of the paper is organised as follows. The proposed approach is briefly presented in the next section. The visual identification and localisation of the strawberry are analysed in Section 3, the robotic manipulator and gripper in Section 4, the experimental robotic system in Section 5 and the experimental results in Section 6. Finally, concluding remarks close the paper in Section 7.

2 THE PROPOSED APPROACH

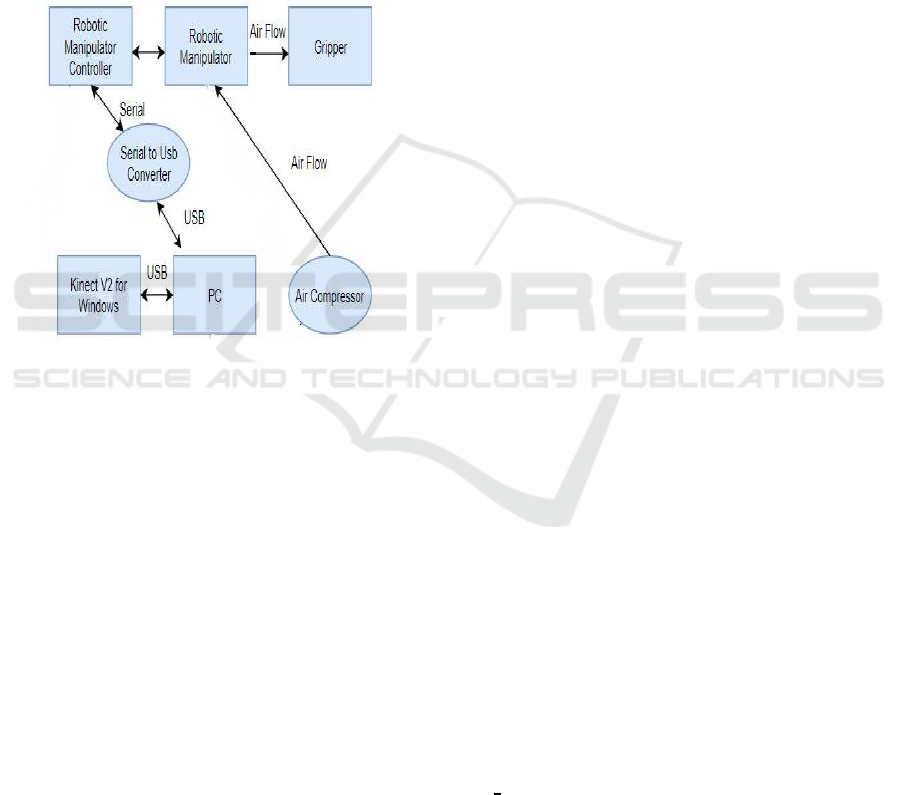

The structure of the system is illustrated in Figure

2. The sensor Kinect V2 for Windows depicts the

robot vision system, the robotic manipulator represent

the strawberry approach system and the gripper is the

grasping system. These subsystems are connected to

a PC where runs the software that is developed for the

purpose of this work.

Figure 2: Hardware structure of the developed robotic sys-

tem.

As mentioned in the introduction, the main approaches to object detection are image segmentation based on a color model, feature detection and object classification. Since the strawberry has a uniform red color and many similar seeds on its surface, feature detection methods achieve too low an accuracy. Taking into account these limitations, and the huge number of samples that object classification needs to build a reliable model, an image segmentation method based on a color model is applied. To this end, among the various available color models, one that clearly represents the color of the strawberries is used. After image pre-processing, a threshold that separates the pixels of the strawberries from the background pixels is selected. Furthermore, an algorithm based on the geometry of strawberry clusters is developed to separate the strawberries that belong to the same cluster.

After a strawberry has been recognized and its center of mass computed, the distance of the detected strawberry from the robot end-effector is estimated. This can be done either with a depth sensor or by capturing images from two different viewpoints. The performance of depth sensors is strongly influenced by the lighting conditions, which vary significantly outdoors. Moreover, the depth error of these sensors grows considerably as the distance of the object from the camera increases. The second method requires either two cameras or one moving camera. For strawberries this technique is difficult to implement, because their surfaces carry many similar features, so it is hard to find the position of a given point in both images. Since the experiments are carried out in the lab, where lighting is steady and controllable and the strawberries can be placed at distances where the depth sensor's error is low, the Kinect for Windows v2 sensor, mounted on a fixed base, is used to estimate the strawberries' positions in 3D space. The OpenCV library is used to develop the computer vision algorithms.
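For the second option, the standard triangulation relation for a rectified camera pair (a textbook result, not a model taken from this paper) makes both difficulties explicit. With focal length $f$ in pixels, baseline $B$ and disparity $d = x_{left} - x_{right}$ of a point matched in both images, the depth and its sensitivity to a matching error $\delta d$ are

$$Z = \frac{f B}{d}, \qquad |\delta Z| \approx \left|\frac{\partial Z}{\partial d}\right| |\delta d| = \frac{Z^2}{f B}\,|\delta d|$$

so the method depends on locating the same point in both images, which the repetitive surface of a strawberry makes unreliable, and, for a fixed matching error, the depth error of triangulation-based measurement grows quadratically with distance.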

In a previous paper (Dimeas et al., 2013), the movement of the laborers' arm was studied, and the design of the gripper's movement was based on it. The robotic manipulator used in the experiments is the MITSUBISHI RV-A4, which has 6 DoF. To grasp the strawberry, an open-source three-fingered robot gripper design is adapted, manufactured with a 3D printer and mounted on the manipulator's arm.

In terms of software, the system uses the Robot Operating System (ROS) framework on a Linux operating system, with C++ as the programming language.

3 VISUAL IDENTIFICATION AND LOCALISATION OF THE STRAWBERRY

The main sensor for the visual identification and localisation of the strawberry is the Kinect, which has a monochrome CMOS sensor capable of observing infrared light. The Kinect v2 measures depth with the time-of-flight principle: infrared light emitted by the device is reflected by the scene, the depth at each pixel is computed from the time the light takes to return, and the depth image is registered to the pixels of the RGB camera. The open-source 'libfreenect2' driver for the Kinect for Windows v2 device is used (Xiang et al., 2016), together with iai_kinect2, which includes tools and libraries for the ROS interface of this sensor (Wiedemeyer, 2015). The visual identification and localisation of the strawberry are implemented with the OpenCV software library.

The following algorithm for the robot vision sys-

tem is developed:

Development of an Experimental Strawberry Harvesting Robotic System

439

• The vision sensor is initialized and a color image

and a depth image are obtained by using a camera

grabber software package that is developed in the

ROS framework.

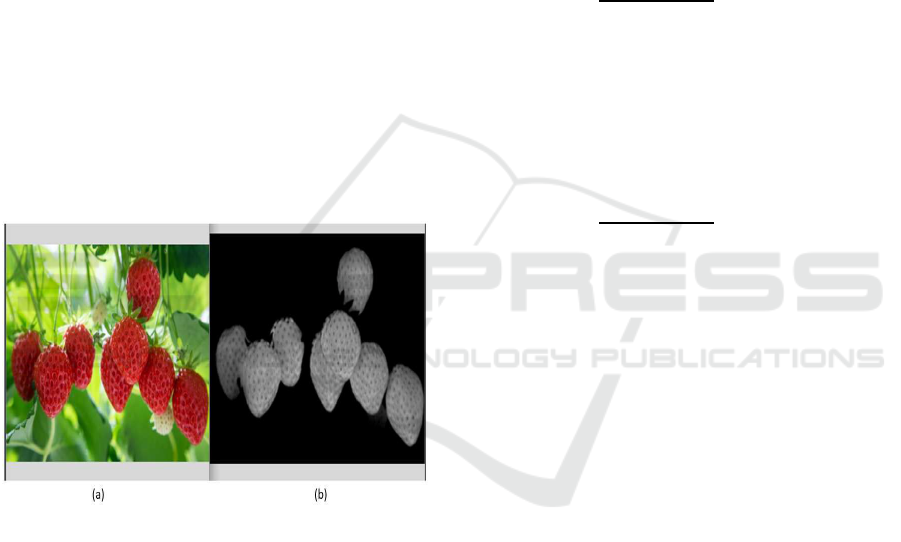

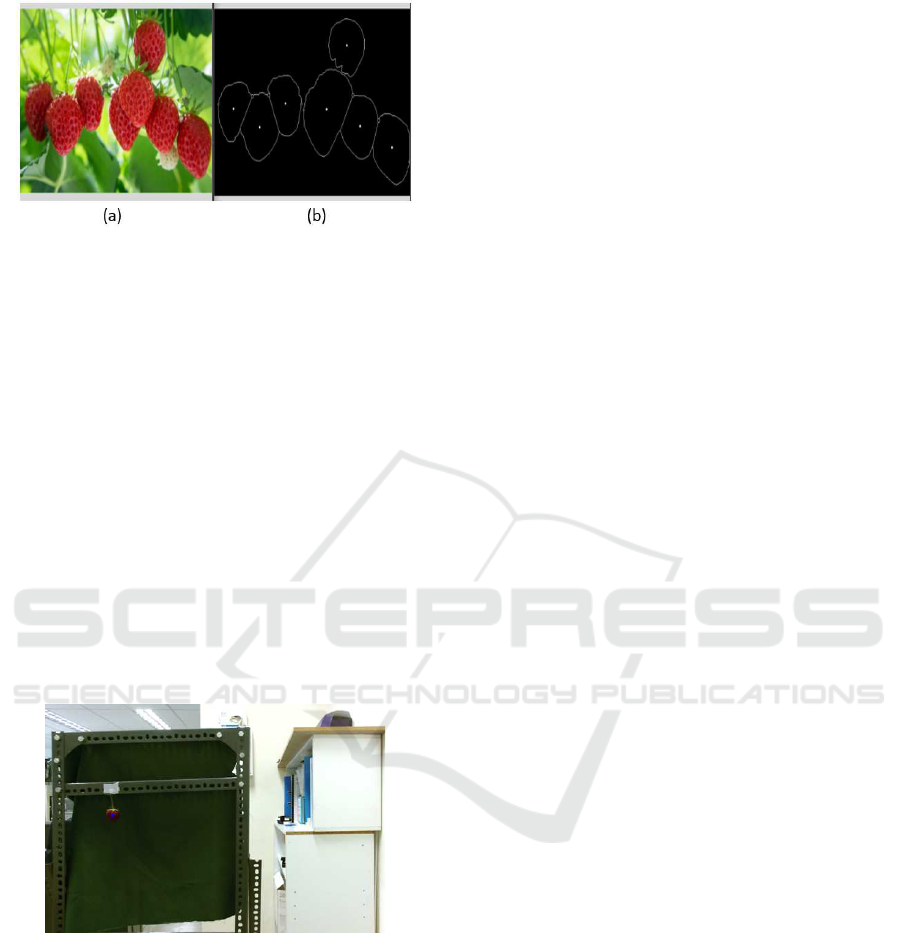

• The RG image (see Figure 3) is obtained using the

following formula:

I

rg

=

I

r

− I

g

, if I

r

≥ I

g

0, otherwise

(1)

where I

r

, I

g

∈ 0, ..., 255 are the intensity of the

pixels at the red and the green channel respec-

tively. These two channels are chosen as the ma-

ture strawberry is red and the leaves are green.

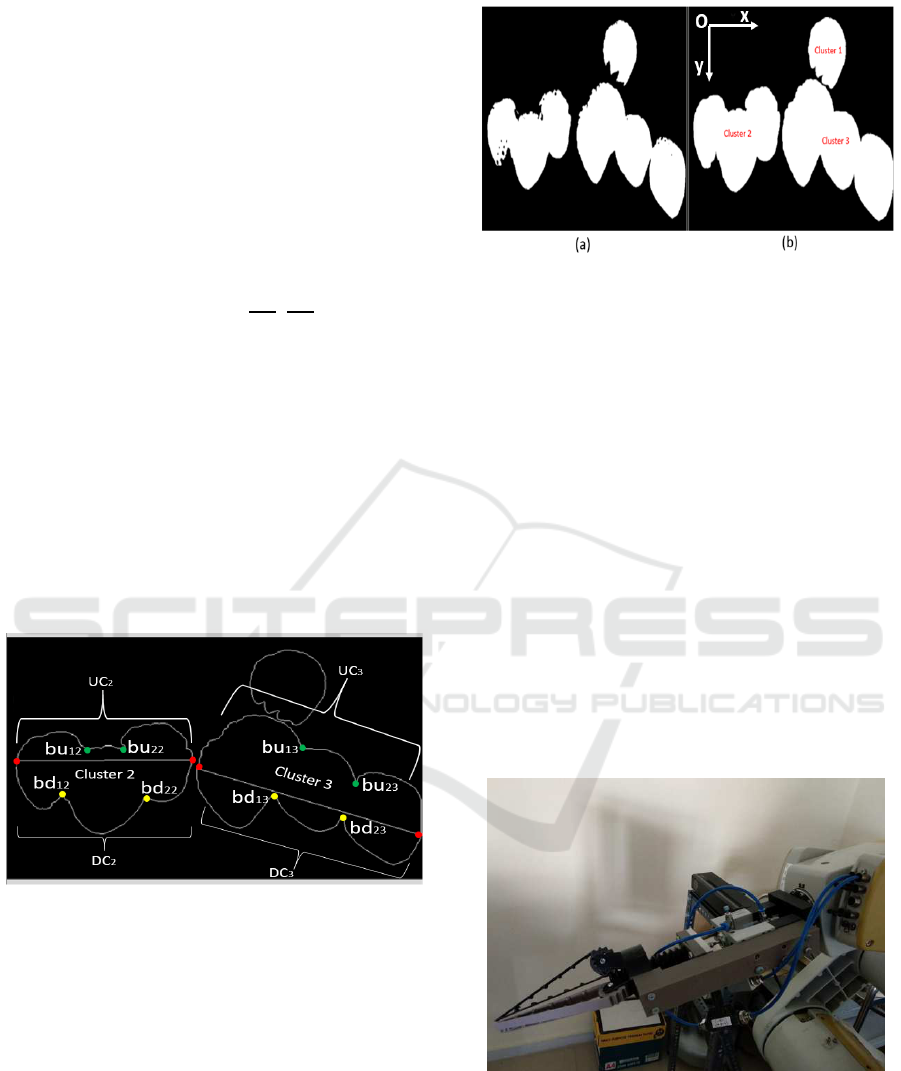

• To select an optimal threshold, the RG image is segmented using Otsu's method (Otsu, 1979), which yields a binary image. Morphological transformations such as dilation and erosion are applied in a suitable sequence to fill holes in the identified areas and to reduce noise in the binary image. Figures 5(a) and 5(b) show the binary image after the segmentation and after the morphological transformations, respectively.

Figure 3: Result of applying the RG formula to an image. (a) Original image (b) Image after the RG formula.

• The contours of the strawberry clusters are determined using the findContours function of OpenCV (Bradski, 2000), which implements a border-following algorithm (Suzuki et al., 1985).

• For the j-th cluster, where $j \in \{1, \dots, n_c\}$ and $n_c$ is the number of clusters in the image, the far-left and far-right points in the x-direction are determined and connected with a line. The positive x and y directions and the origin of the coordinate frame are illustrated in the right image of Figure 5. The space of the binary image $D_{bin}$ is defined as

$$D_{bin} = \{(x, y) \in \mathbb{R}^2 : x \in (0, w),\ y \in (0, h),\ I_{bin}(x, y) = 0 \text{ or } 255\} \tag{2}$$

where $w$ and $h$ are the width and height of the image, respectively, and $I_{bin}(x, y)$ is the intensity of the binary image at pixel $(x, y)$.

Therefore, for each cluster the far-left point $(x_l, y_l)$ and the far-right point $(x_r, y_r)$ are:

$$\begin{aligned} (x_l, y_l) &\in \{(x, y) \in D_{bin} : x_l = \min x \ \ \forall x \in \text{cluster } j\} \\ (x_r, y_r) &\in \{(x, y) \in D_{bin} : x_r = \max x \ \ \forall x \in \text{cluster } j\} \end{aligned} \tag{3}$$

Then the diagonal line of each cluster is found, in order to classify the contour points of each cluster into upper and lower points. Its equation is the following, where $-y$ is used because the image y axis points downwards:

$$(-y) = \frac{(-y_r) - (-y_l)}{x_r - x_l}\,(x - x_r) + (-y_r) \tag{4}$$

• For each cluster, the points of its contour are separated into upper and lower points by comparing their coordinates with the line found in the previous step. A point $(x_u, y_u)$ belongs to the upper part of the cluster, denoted by the set $UC_j$, if the following inequality holds:

$$(-y_u) > \frac{(-y_r) - (-y_l)}{x_r - x_l}\,(x_u - x_r) + (-y_r) \tag{5}$$

Otherwise it belongs to the lower part of the cluster, the set $DC_j$.

• For each cluster j, the k local points of the upper contour, $(x_{tukj}, y_{tukj})$, which are candidate locations where the contours of two strawberries intersect, are found by comparing the y value of each point of $UC_j$ with the y values of its neighboring points in the x-direction; these points are defined as breaking points $(x_{bukj}, y_{bukj})$. If several breaking points lie within a narrow interval in the x-direction because the detected contour is not smooth, the breaking point is taken as the median point among them; these points cannot be far apart, given the geometry of the strawberries' shape at the breaking points. If a cluster j has i breaking points, it contains i + 1 strawberries.

• The lower breaking point corresponding to each upper breaking point is the point of $DC_j$ with the minimum Euclidean distance from that upper breaking point, i.e.

$$(x_{bdij}, y_{bdij}) = \arg\min_{(x, y) \in DC_j} \operatorname{dist}\big((x, y), (x_{buij}, y_{buij})\big) \tag{6}$$

The corresponding upper and lower breaking points are then connected with a line, and afterwards the contours of each cluster of strawberries are found again using the findContours function of OpenCV (Bradski, 2000). These steps are illustrated in Figure 4.

• The new contours are accepted as strawberries if their area satisfies a constraint defined from the geometry of the strawberry's shape. The strawberries are considered conical, but since the image is two-dimensional, the formula for the area of a triangle is used.

• The center of each contour that corresponds to a strawberry is determined from its zeroth- and first-order moments, $(x_c, y_c) = (M_{10}/M_{00},\ M_{01}/M_{00})$.

• The orientation of the strawberry is computed using Principal Component Analysis (PCA) (Bradski, 2000). The angle of the eigenvector with the largest eigenvalue corresponds to the main (longer) axis and gives the orientation of the strawberry in the image plane.

• The created point cloud is stored in memory as a one-dimensional array. The value of the array at the index that corresponds to the center $(x_c, y_c)$ of the strawberry gives the depth of the strawberry, i.e. its distance with respect to the camera frame.
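The first steps of the list (Eq. (1), the Otsu threshold and the morphological clean-up) can be sketched in plain C++ as follows, so that the sketch compiles without OpenCV. It is a minimal sketch under stated assumptions, not the authors' code: the input is assumed to be an interleaved 8-bit RGB buffer (OpenCV's default channel order is BGR), the helper names `rgImage`, `otsuThreshold`, `binarize`, `morph3x3` and `closing` are hypothetical, and because the paper does not give the exact morphological sequence, a single 3x3 closing (dilation followed by erosion) is shown as one plausible choice for filling holes. With OpenCV, the thresholding would typically be `cv::threshold` with the `cv::THRESH_BINARY | cv::THRESH_OTSU` flags, and the clean-up `cv::morphologyEx`.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Image = std::vector<std::uint8_t>;  // single-channel, row-major

// Eq. (1): I_rg = I_r - I_g where I_r >= I_g, otherwise 0.
// Assumes an interleaved 8-bit RGB buffer (OpenCV stores BGR by default).
Image rgImage(const std::vector<std::uint8_t>& rgb) {
    assert(rgb.size() % 3 == 0);
    Image out(rgb.size() / 3);
    for (std::size_t i = 0; i < out.size(); ++i) {
        const int r = rgb[3 * i], g = rgb[3 * i + 1];
        out[i] = static_cast<std::uint8_t>(r >= g ? r - g : 0);
    }
    return out;
}

// Otsu (1979): the threshold t that maximises the between-class variance
// of the two classes {I <= t} and {I > t}.
int otsuThreshold(const Image& img) {
    std::array<double, 256> hist{};
    for (std::uint8_t v : img) hist[v] += 1.0;
    const double total = static_cast<double>(img.size());
    double sumAll = 0.0;
    for (int t = 0; t < 256; ++t) sumAll += t * hist[t];

    double wB = 0.0, sumB = 0.0, bestVar = -1.0;
    int best = 0;
    for (int t = 0; t < 256; ++t) {
        wB += hist[t];
        sumB += t * hist[t];
        if (wB == 0.0) continue;   // lower class still empty
        const double wF = total - wB;
        if (wF == 0.0) break;      // upper class empty from here on
        const double mB = sumB / wB, mF = (sumAll - sumB) / wF;
        const double between = wB * wF * (mB - mF) * (mB - mF);
        if (between > bestVar) { bestVar = between; best = t; }
    }
    return best;
}

// 255 where I > t, 0 elsewhere (same convention as OpenCV's THRESH_BINARY).
Image binarize(const Image& img, int t) {
    Image out(img.size());
    for (std::size_t i = 0; i < img.size(); ++i) out[i] = img[i] > t ? 255 : 0;
    return out;
}

// 3x3 dilation (max filter) or erosion (min filter); out-of-image pixels are skipped.
Image morph3x3(const Image& img, int w, int h, bool dilate) {
    assert(img.size() == static_cast<std::size_t>(w) * static_cast<std::size_t>(h));
    Image out(img.size());
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            std::uint8_t v = dilate ? 0 : 255;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx) {
                    const int xx = x + dx, yy = y + dy;
                    if (xx < 0 || yy < 0 || xx >= w || yy >= h) continue;
                    const std::uint8_t p = img[yy * w + xx];
                    v = dilate ? std::max(v, p) : std::min(v, p);
                }
            out[y * w + x] = v;
        }
    return out;
}

// Closing (dilation followed by erosion) fills small holes inside blobs.
Image closing(const Image& bin, int w, int h) {
    return morph3x3(morph3x3(bin, w, h, true), w, h, false);
}
```

On a red pixel such as (200, 30, 30), Eq. (1) gives 170, while a green leaf pixel gives 0, so a bimodal RG histogram is exactly the case Otsu's criterion is designed for.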

Figure 4: Explanation of the geometric model. The red dots show the far-left and far-right points, the green dots show the breaking points of the upper part and the yellow dots show the lower part of each of clusters 2 and 3. The diagonal line of Eq. (4) for each of clusters 2 and 3 is also shown.
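The geometric part of the algorithm, Eqs. (3) to (6) together with the median rule for nearby breaking points, is sketched below in plain C++ on a contour given as a list of integer pixel points (such as the output of `cv::findContours`). The dip test is one possible reading of "comparing the y value of each point with its neighbours in the x-direction": a point of $UC_j$ is kept if no upper neighbour within `window` pixels lies further down the image and the contour rises by at least `minDepth` pixels on both sides. The parameters `window`, `minDepth` and `mergeDist`, as well as the names `isUpper` and `splitCluster`, are hypothetical; the final drawing of the cut lines and the second `findContours` call are omitted.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <vector>

struct Pt { int x, y; };  // image pixel, y grows downwards

struct SplitResult {
    std::vector<Pt> upperBreaks;  // (x_bu, y_bu): dips on the upper contour
    std::vector<Pt> lowerBreaks;  // (x_bd, y_bd): closest lower-contour points, Eq. (6)
    int strawberries = 1;         // i breaking points -> i + 1 strawberries
};

// Eq. (5) multiplied through by (x_r - x_l) > 0, so the test stays in integers.
bool isUpper(Pt p, Pt l, Pt r) {
    const long long lhs = -static_cast<long long>(p.y) * (r.x - l.x);
    const long long rhs = static_cast<long long>((-r.y) - (-l.y)) * (p.x - r.x)
                        - static_cast<long long>(r.y) * (r.x - l.x);
    return lhs > rhs;
}

SplitResult splitCluster(const std::vector<Pt>& contour, int window, int minDepth, int mergeDist) {
    assert(!contour.empty());
    const auto byX = [](Pt a, Pt b) { return a.x < b.x; };
    // Eq. (3): far-left and far-right contour points (first one found on ties).
    const Pt l = *std::min_element(contour.begin(), contour.end(), byX);
    const Pt r = *std::max_element(contour.begin(), contour.end(), byX);
    assert(r.x > l.x);

    // Sets UC_j and DC_j; l lies exactly on the line, so DC_j is never empty.
    std::vector<Pt> up, down;
    for (Pt p : contour) (isUpper(p, l, r) ? up : down).push_back(p);

    // Candidate dips: no upper neighbour within +/- window lies lower in the
    // image, and the contour rises by at least minDepth on both sides.
    std::vector<Pt> cand;
    for (Pt p : up) {
        if (p.x - window < l.x || p.x + window > r.x) continue;  // needs both sides
        bool dip = true;
        int leftTop = p.y, rightTop = p.y;
        for (Pt q : up) {
            const int dx = q.x - p.x;
            if (dx == 0 || std::abs(dx) > window) continue;
            if (q.y > p.y) { dip = false; break; }
            if (dx < 0) leftTop = std::min(leftTop, q.y);
            else rightTop = std::min(rightTop, q.y);
        }
        if (dip && p.y - leftTop >= minDepth && p.y - rightTop >= minDepth) cand.push_back(p);
    }

    // Candidates closer than mergeDist in x are merged into their median point.
    std::sort(cand.begin(), cand.end(), byX);
    SplitResult res;
    for (std::size_t i = 0; i < cand.size();) {
        std::size_t j = i + 1;
        while (j < cand.size() && cand[j].x - cand[j - 1].x <= mergeDist) ++j;
        res.upperBreaks.push_back(cand[i + (j - i) / 2]);
        i = j;
    }

    // Eq. (6): the lower breaking point is the closest point of DC_j.
    for (Pt b : res.upperBreaks) {
        const auto d2 = [b](Pt q) {
            const long long dx = q.x - b.x, dy = q.y - b.y;
            return dx * dx + dy * dy;
        };
        res.lowerBreaks.push_back(*std::min_element(
            down.begin(), down.end(), [&](Pt a, Pt c) { return d2(a) < d2(c); }));
    }
    res.strawberries = static_cast<int>(res.upperBreaks.size()) + 1;
    return res;
}
```

For a synthetic two-lobed cluster whose upper edge dips at x = 20, the sketch returns one upper breaking point at the dip, pairs it with the nearest lower-contour point, and reports two strawberries.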

4 ROBOTIC MANIPULATOR AND GRIPPER

Once the strawberries are detected and their locations in 3D space are determined, the robotic manipulator moves the gripper to the position of each strawberry, with an orientation defined by the strawberry's orientation. The manipulator has 6 DoF and a maximum payload of 3 kg, so it can bear the weight of the mounted gripper, and its workspace is sufficient to reach the strawberries. The manipulator's controller receives the extracted coordinates and orientation of a strawberry and uses them as the reference signal for moving the gripper to grasp that strawberry.

Figure 5: (a) Segmenting the RG image (b) Morphological transformations in the binary image.
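The centre and orientation that the controller receives come from the moment and PCA steps of Section 3. A minimal sketch of those steps in plain C++ is given below, together with the triangle-based area check. It assumes the strawberry is available as the list of its filled pixels (OpenCV's `cv::moments` can also work directly on a contour polygon), computes the principal axis from the 2x2 covariance of the pixel coordinates, which is what PCA reduces to in two dimensions, and uses hypothetical base and height bounds in pixels for the area gate, since the paper does not give them. The names `regionPose` and `plausibleArea` are illustrative only.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Px { int x, y; };  // pixel coordinates, y grows downwards

struct Pose2D {
    double xc, yc;  // centroid (M10/M00, M01/M00)
    double theta;   // principal-axis angle from +x towards +y, in (-pi/2, pi/2]
    double area;    // M00 = number of pixels
};

Pose2D regionPose(const std::vector<Px>& pixels) {
    assert(!pixels.empty());
    // Zeroth- and first-order raw moments, each pixel with unit mass.
    double m00 = 0.0, m10 = 0.0, m01 = 0.0;
    for (Px p : pixels) { m00 += 1.0; m10 += p.x; m01 += p.y; }
    const double xc = m10 / m00, yc = m01 / m00;

    // Second-order central moments = (unnormalised) covariance of the coordinates.
    double sxx = 0.0, syy = 0.0, sxy = 0.0;
    for (Px p : pixels) {
        const double dx = p.x - xc, dy = p.y - yc;
        sxx += dx * dx;
        syy += dy * dy;
        sxy += dx * dy;
    }
    // Angle of the eigenvector of [[sxx, sxy], [sxy, syy]] with the largest
    // eigenvalue, i.e. the first principal component of the pixel coordinates.
    const double theta = 0.5 * std::atan2(2.0 * sxy, sxx - syy);
    return {xc, yc, theta, m00};
}

// Area gate using the triangle formula A = b * h / 2 for the smallest and
// largest expected strawberry silhouettes (bounds are hypothetical, in pixels).
bool plausibleArea(double area, double minBase, double minHeight,
                   double maxBase, double maxHeight) {
    return area >= 0.5 * minBase * minHeight && area <= 0.5 * maxBase * maxHeight;
}
```

A vertically elongated blob yields theta close to pi/2, which a controller would then map to the gripper's approach orientation.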

A gripper is designed and 3D printed (Figure 6). The design files of the main part of the gripper are open source (Bieber, 2016). The fingers of the gripper adapt to the strawberry because they are quite flexible. The outer part of each finger is more compact than the inner part, so that it offers resistance during the cutting of the fruit, and the gap between the three fingers is such that the fruit does not slip after grasping. The gripper is actuated pneumatically with simple open-loop ON-OFF control logic.

Figure 6: Gripper of the robotic system.


5 EXPERIMENTAL ROBOTIC

SYSTEM

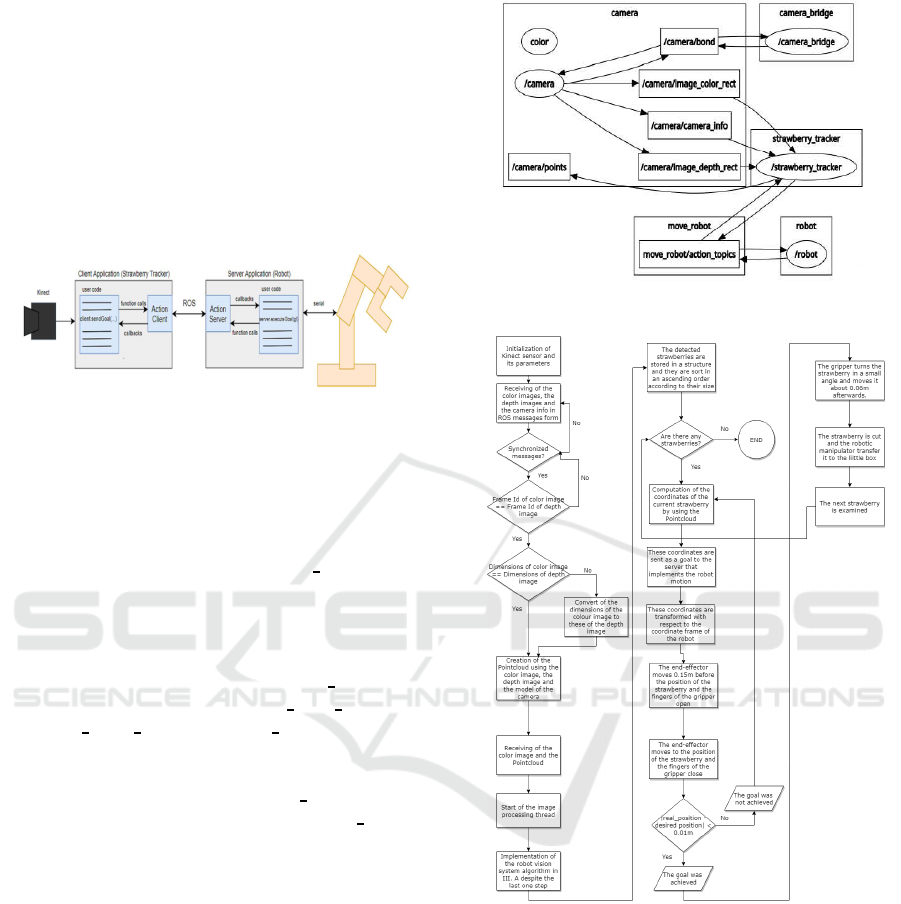

The software for the experimental system is implemented on Linux, specifically Xubuntu 16.04, using ROS. Apart from the developed software packages, some available ROS packages are used, e.g. the actionlib package. The use of the actionlib package in our system is shown in Figure 7.

Figure 7: Overview of ROS client-server interaction in the robotic system.

The ROS operation graph is shown in Figure 8. In this work, the main developed ROS package is StrawberryHarvester. The ROS nodes included in the StrawberryHarvester package are shown inside the ellipses. The camera_bridge node starts the Kinect sensor, defines the topics for the camera info, the color image and the depth image, and sets camera parameters such as the image resolution. The strawberry_tracker node subscribes to the topics /camera/image_color_rect, /camera/image_depth_rect and /camera_info to obtain the color and depth images and to create the point cloud, which it then publishes to the topic /camera/points. The strawberry_tracker and robot nodes communicate through the move_robot action.

The functional diagram of the strawberry harvesting robotic system is shown in Figure 9. Once the strawberries are located and their positions with respect to the sensor's coordinate frame are computed, these positions are transformed into the coordinate frame of the robot and sent to the robotic manipulator's controller.
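The chain from the strawberry's centre pixel to a target in the robot frame can be sketched as below. This is a sketch under stated assumptions rather than the system's code: the depth image is taken to be a row-major 1-D array (the layout the text describes for the point cloud), the camera follows a pinhole model with intrinsics fx, fy, cx, cy (for example as published on a camera-info topic), and the camera-to-robot rotation R and translation t are assumed to come from a prior calibration. All numeric values and the names `flatIndex`, `backProject` and `toRobotFrame` are hypothetical. Since the point cloud published on /camera/points already stores 3-D coordinates per pixel, in practice only the indexing and the rigid transform may be needed.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

struct Intrinsics { double fx, fy, cx, cy; };  // pinhole model, in pixels

// Row-major 1-D storage: pixel (u, v) of a width-wide image is at v * width + u.
std::size_t flatIndex(int u, int v, int width) {
    assert(u >= 0 && v >= 0 && u < width);
    return static_cast<std::size_t>(v) * static_cast<std::size_t>(width) +
           static_cast<std::size_t>(u);
}

// Back-project pixel (u, v) with depth z into the camera frame (same unit as z).
Vec3 backProject(double u, double v, double z, const Intrinsics& k) {
    return {(u - k.cx) * z / k.fx, (v - k.cy) * z / k.fy, z};
}

// Rigid transform from the camera frame to the robot base frame: p' = R p + t.
Vec3 toRobotFrame(const Vec3& p, const Mat3& R, const Vec3& t) {
    Vec3 out{};
    for (int i = 0; i < 3; ++i)
        out[i] = R[i][0] * p[0] + R[i][1] * p[1] + R[i][2] * p[2] + t[i];
    return out;
}
```

Keeping the rotation and translation separate, rather than as a 4x4 homogeneous matrix, keeps the sketch short; both forms express the same transform.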

6 EXPERIMENTAL RESULTS

Two types of experiments were carried out to evaluate the developed system: (a) recognition of separate and occluded mature strawberries, and (b) evaluation of the harvesting process for single strawberries.

The developed recognition algorithm has a high success rate. For single strawberries, the success rate is 100%, since it correctly recognized all the strawberries in 30 images. In the case of occlusion, the algorithm distinguishes the strawberries only if their largest part is visible, i.e. at least 60% of their area is not occluded by another object. It also fails when the dip between the upper points of adjacent fruits is not large enough to be detected as a breaking point, as illustrated in Figure 10: the leftmost strawberry of the right cluster is not separated from the adjacent strawberry located in front of it, because the upper contour of these two strawberries does not show a dip.

Figure 8: ROS operation graph.

Figure 9: Flowchart of function of the robotic system.

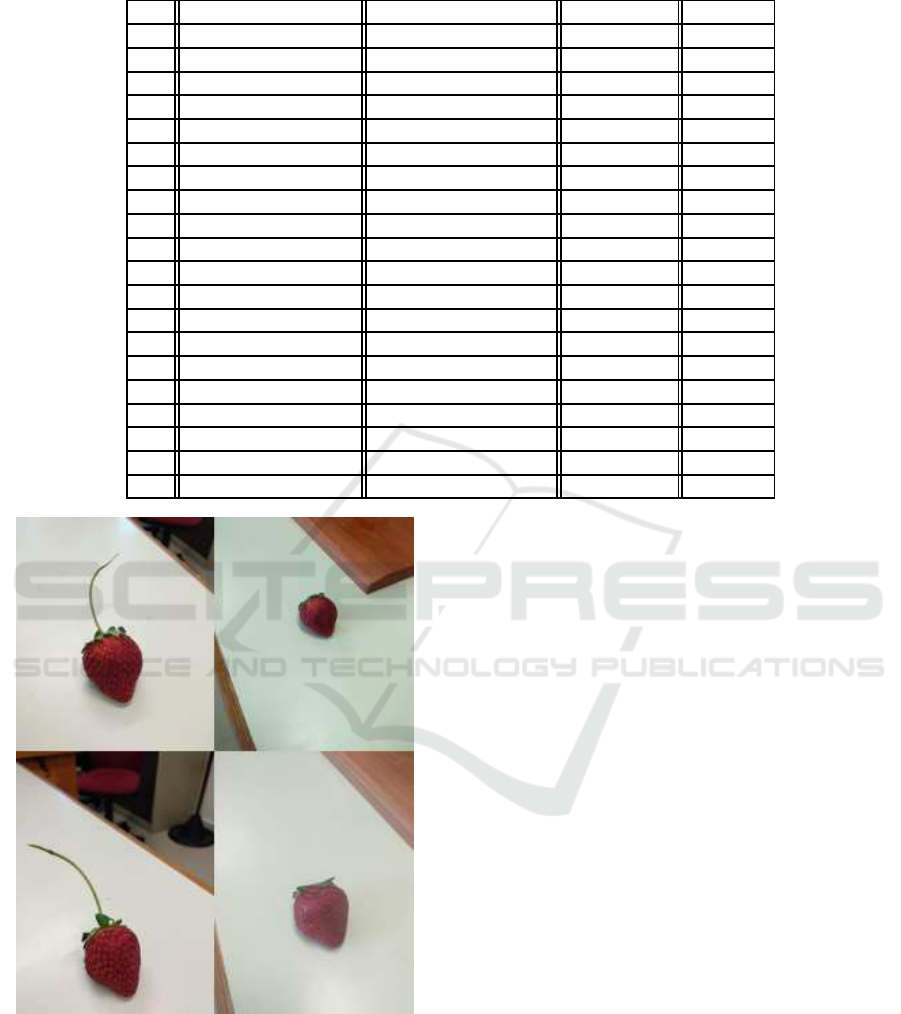

The recognition and the detection of a strawberry

ICINCO 2019 - 16th International Conference on Informatics in Control, Automation and Robotics

442

Figure 10: (a) Occluded strawberries (b) Result of applying

the vision algorithm in occluded strawberries.

during the experiment is represented in figure 11. The

harvesting experiment was set up by hanging fresh

strawberries with their peduncles on a metal structure

and putting some green cloth in order to simulate the

leaves and the immature strawberries. The calibration

of the sensor took place several times before the ex-

periment until achieving a good result in the 1

st

exper-

iment. Finally, the developed strawberry harvesting

robotic system is evaluated by repeating the harvest-

ing process a lot of times, in which the strawberries

are located in different positions around the field of

view of the camera and by checking the correctness

of the recognition, the accuracy of the localization,

the way of gripping the strawberry, the correctness of

the crop removal from the peduncle and the existence

of damages in the strawberry after the harvest. The

results are listed in Table 1.

Figure 11: Recognition and detection of a strawberry during the experiment.

According to Table 1, the mean error along the X, Y and Z directions of the robot base coordinate frame is 0.475 cm, 0.335 cm and 0.11 cm, respectively, which is within the acceptable limit of 1 cm in each direction. The accuracy of recognition and localization is 90%, i.e. 18 out of the 20 cases. In the 4th experiment the error is high because of the field of view and lens distortion of the sensor and the accuracy of the calibration: the strawberry was placed far from the center of the image, where lens distortion is generally larger. In the 8th experiment, the error along the x direction is high because the strawberry was far from the robot base and outside its workspace. In the 18th experiment, the gripper moved slightly to the right of the strawberry's position, so it did not remove it correctly.
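The per-axis mean errors and the correct-cut rate can be recomputed directly from Table 1. The sketch below does this in C++; the row data are transcribed from the table (the "Correct Cut" column is mapped to true for "yes" and false for "no grasp"), the 1 cm limit is the acceptance threshold stated above, and the names `Trial`, `kTable1`, `meanAbsError`, `correctCutRate` and `withinLimit` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

struct Trial {
    std::array<double, 3> errorCm;  // |error| along x, y, z of the robot base frame
    bool correctCut;                // "Correct Cut" column of Table 1 is "yes"
};

// The 20 rows of Table 1, in order.
const std::vector<Trial> kTable1 = {
    {{0, 0, 0}, true},        {{0, 0.3, 0}, true},      {{0, 0.3, 0}, true},
    {{0.4, 1.3, 0.8}, false}, {{1, 1, 0}, true},        {{1, 1, 0}, true},
    {{0.5, 0, 0.3}, true},    {{2, 0, 0.3}, false},     {{0, 0.3, 0}, true},
    {{1, 0.3, 0}, true},      {{0.5, 0, 0}, true},      {{1, 0.8, 0.4}, true},
    {{1, 0, 0}, true},        {{0, 0, 0}, true},        {{0, 0.2, 0.3}, true},
    {{0.3, 0.1, 0.1}, true},  {{0.3, 0, 0}, true},      {{0.1, 1, 0}, false},
    {{0.4, 0.1, 0}, true},    {{0, 0, 0}, true},
};

// Mean absolute error per axis over all trials.
std::array<double, 3> meanAbsError(const std::vector<Trial>& trials) {
    assert(!trials.empty());
    std::array<double, 3> m{};
    for (const Trial& t : trials)
        for (int i = 0; i < 3; ++i) m[i] += std::fabs(t.errorCm[i]);
    for (double& v : m) v /= static_cast<double>(trials.size());
    return m;
}

// Fraction of trials in which the fruit was correctly cut from the peduncle.
double correctCutRate(const std::vector<Trial>& trials) {
    assert(!trials.empty());
    int ok = 0;
    for (const Trial& t : trials) ok += t.correctCut ? 1 : 0;
    return static_cast<double>(ok) / static_cast<double>(trials.size());
}

// True if every per-axis mean error is within the given limit.
bool withinLimit(const std::array<double, 3>& m, double limitCm) {
    return m[0] <= limitCm && m[1] <= limitCm && m[2] <= limitCm;
}
```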

In summary, the experimental results show that the robotic system identifies occluded strawberries and localizes separate mature strawberries with high accuracy (90%) and a high correct harvesting rate (85%). The recognition and localization accuracy is slightly higher than the 87% true positive rate in sweet-pepper detection of (Hemming et al., 2014), the 88% recognition accuracy for overlapping grape clusters (Luo et al., 2018) and the 85% correct detection rate for occluded apples (Nguyen et al., 2014). However, it is lower than the 95% average recognition rate obtained with a deep learning method for recognizing strawberries (Li et al., 2018). As regards harvesting success, our system shows a slightly higher correct harvesting rate of 85%, compared to the 80% of (Hayashi et al., 2009).

Regarding the condition of the strawberries after the harvest, the results showed that the gripper grasped and cut the strawberries without damaging their outer surface or deteriorating the quality of the fruit. In a sample of 20 strawberries, only one had some bruises, which is attributed to the fact that some strawberries had remained detached from the plant for a long time. The images in Figure 12 show both sides of a strawberry before and after the harvest. A video of the recorded harvesting process is available online (Klaoudatos et al., ).

7 CONCLUSIONS

In this paper, a robotic system for strawberry harvesting is developed and tested in laboratory conditions. A new method for the recognition and localisation of occluded mature strawberries is developed. A flexible gripper is built for grasping the strawberry and is tested with good success in grasping and removing strawberries without damaging them. The laboratory experimental results show that the developed system is able to recognize occluded crops and to localize separate mature strawberries with high accuracy and a high successful harvesting rate. Future work will focus on using a larger sample of strawberries, since the current experiments with 20 strawberries are initial ones, on achieving a higher recognition rate for heavily occluded strawberries using machine learning algorithms, and on incorporating an autonomous mobile robot in order to harvest strawberries in real conditions.

Table 1: Results of the experiment.

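| No | Position error (x, y, z) in cm | Correct grasp | Correct cut | Damage |
|---|---|---|---|---|
| 1 | (0, 0, 0) | yes | yes | no |
| 2 | (0, 0.3, 0) | yes | yes | no |
| 3 | (0, 0.3, 0) | yes | yes | no |
| 4 | (0.4, 1.3, 0.8) | wrong position | no grasp | no grasp |
| 5 | (1, 1, 0) | yes | yes | no |
| 6 | (1, 1, 0) | at the edge of fingers | yes | no |
| 7 | (0.5, 0, 0.3) | yes | yes | no |
| 8 | (2, 0, 0.3) | out of workspace | no grasp | no grasp |
| 9 | (0, 0.3, 0) | yes | yes | no |
| 10 | (1, 0.3, 0) | at the edge of fingers | yes | no |
| 11 | (0.5, 0, 0) | yes | yes | no |
| 12 | (1, 0.8, 0.4) | a bit sideways | yes | no |
| 13 | (1, 0, 0) | yes | yes | no |
| 14 | (0, 0, 0) | yes | yes | no |
| 15 | (0, 0.2, 0.3) | yes | yes | no |
| 16 | (0.3, 0.1, 0.1) | yes | yes | no |
| 17 | (0.3, 0, 0) | yes | yes | no |
| 18 | (0.1, 1, 0) | sideways | no grasp | no grasp |
| 19 | (0.4, 0.1, 0) | yes | yes | yes |
| 20 | (0, 0, 0) | yes | yes | no |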

Figure 12: The left column shows both sides of the strawberry before the harvest, and the right column shows the same sides after the harvest.

ACKNOWLEDGEMENTS

We would like to thank the staff of Georion Ltd., and especially Mr. F. Papanikolopoulos and Dr. E. Tsormpatsidis, for providing fresh strawberries to conduct the experiments.

REFERENCES

Bieber, A. (2016). Adaptive claw/gripper evolution.

Bradski, G. (2000). The OpenCV Library. Dr. Dobb's Journal of Software Tools.

Dimeas, F., Sako, D., Moulianitis, V., and Aspragathos, N. (2013). Towards designing a robot gripper for efficient strawberry harvesting.

Dimeas, F., Sako, D. V., Moulianitis, V. C., and Aspragathos, N. A. (2015). Design and fuzzy control of a robotic gripper for efficient strawberry harvesting. Robotica, 33(5):1085–1098.

Feng, G., Qixin, C., and Masateru, N. (2008). Fruit detachment and classification method for strawberry harvesting robot. International Journal of Advanced Robotic Systems, 5(1):4.

Hayashi, S., Shigematsu, K., Yamamoto, S., Kobayashi, K., Kohno, Y., Kamata, J., and Kurita, M. (2009). Evaluation of a strawberry-harvesting robot in a field test. 105:160–171.

Hemming, J., Bac, C. W., van Tuijl, B. A. J., Barth, R., Bontsema, J., and Pekkeriet, E. (2014). A robot for harvesting sweet-pepper in greenhouses.

Klaoudatos, D., Moulianitis, V., and Aspragathos, N. Strawberry harvesting robotic system. https://youtu.be/S8lzHjZTQl4.

Li, X., Li, J., and Tang, J. (2018). A deep learning method for recognizing elevated mature strawberries. In 2018 33rd Youth Academic Annual Conference of Chinese Association of Automation (YAC), pages 1072–1077.

Luo, L., Tang, Y., Lu, Q., Chen, X., Zhang, P., and Zou, X. (2018). A vision methodology for harvesting robot to detect cutting points on peduncles of double overlapping grape clusters in a vineyard. Computers in Industry, 99:130–139.

Narendra, V. G. and Hareesha, S. (2010). Quality inspection and grading of agricultural and food products by computer vision - a review. International Journal of Computer Applications, 2.

Nguyen, T. T., Vandevoorde, K., Kayacan, E., Baerdemaeker, J. D., and MeBioS, W. S. (2014). Apple detection algorithm for robotic harvesting using a RGB-D camera.

Otsu, N. (1979). A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man, and Cybernetics, 9(1):62–66.

Puttemans, S., Vanbrabant, Y., Tits, L., and Goedemé, T. (2016). Automated visual fruit detection for harvest estimation and robotic harvesting. In 2016 Sixth International Conference on Image Processing Theory, Tools and Applications (IPTA), pages 1–6.

Slaughter, D. C. and Harrell, R. C. (1987). Color vision in robotic fruit harvesting.

Stajnko, D., Lakota, M., and Hočevar, M. (2004). Estimation of number and diameter of apple fruits in an orchard during the growing season by thermal imaging. Computers and Electronics in Agriculture, 42(1):31–42.

Suzuki, S. et al. (1985). Topological structural analysis of digitized binary images by border following. Computer Vision, Graphics, and Image Processing, 30(1):32–46.

Viola, P. and Jones, M. (2001). Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, volume 1, pages I–I.

Wiedemeyer, T. (2014–2015). IAI Kinect2. https://github.com/code-iai/iai_kinect2. Accessed June 12, 2015.

Xiang, L., Echtler, F., Kerl, C., Wiedemeyer, T., Lars, hanyazou, Gordon, R., Facioni, F., laborer2008, Wareham, R., Goldhoorn, M., alberth, gaborpapp, Fuchs, S., jmtatsch, Blake, J., Federico, Jungkurth, H., Mingze, Y., vinouz, Coleman, D., Burns, B., Rawat, R., Mokhov, S., Reynolds, P., Viau, P., Fraissinet-Tachet, M., Ludique, Billingham, J., and Alistair (2016). libfreenect2: Release 0.2.

Yang, L., Dickinson, J., Wu, Q. M. J., and Lang, S. (2007). A fruit recognition method for automatic harvesting. In 2007 14th International Conference on Mechatronics and Machine Vision in Practice, pages 152–157.