A Supervised Autonomous Approach for Robot Intervention with Children with Autism Spectrum Disorder

Vinícius Silva¹ᵃ, Sandra Queirós¹, Filomena Soares¹ᵇ, João Sena Esteves¹ᶜ and Demétrio Matos²ᵈ

¹ Center Algoritmi, University of Minho, Campus de Azurém, Guimarães, Portugal

² ID+ – IPCA-ESD, Campus do IPCA, Barcelos, Portugal

Keywords: Playware, Human Robot Interaction, Autism Spectrum Disorders.

Abstract: Technological solutions such as social robots and Objects based on Playware Technology (OPT) have been used in the context of interventions with children with Autism Spectrum Disorder (ASD). Very often in these systems, the social robot is fully controlled using the Wizard of Oz (WoZ) method. Although reliable, this method increases the cognitive workload of the human operator, who has to pay attention to the child and ensure that the robot responds correctly to the child’s actions. To mitigate this, researchers have recently proposed introducing some autonomy into these systems. Following this trend, the present work targets a supervised behavioural system architecture using a novel hybrid approach with a humanoid robot and OPT to detect the child’s behaviour and adapt the robot to the child’s actions accordingly, enabling a more natural interaction. The system was designed for emotion recognition activities with children with ASD. Additionally, this paper provides an overview of the experimental design, in which the interventions will be carried out in school environments in a triadic setup (child-robot-researcher/therapist).

1 INTRODUCTION

Autism Spectrum Disorder (ASD) is a developmental disability defined by diagnostic criteria that include deficits in social communication and social interaction, and the presence of restricted, repetitive patterns of behaviour, interests, or activities that can persist throughout life (American Psychiatric Association 2013; Mazzei et al. 2012). Although the diagnosis can nowadays be made reliably at around 36 months of age, intervention is still a relatively unexplored field. Due to the diversity and specificity of symptoms, professionals have had difficulty developing effective interventions.

a https://orcid.org/0000-0003-0082-343X

b https://orcid.org/0000-0002-4438-6713

c https://orcid.org/0000-0002-3492-1786

d https://orcid.org/0000-0003-4417-6115

Some interventions performed in recent years use robots, mechanical components, and computers. Studies using these tools show that children with ASD have a great affinity with them (Tapus et al. 2012; Dautenhahn & Werry 2004). It has been demonstrated that subjects diagnosed with ASD show improvements in social behaviours such as imitation, eye gaze, and motor ability while interacting with robots. Based on these previous works, robots appear very promising for interventions/therapies. Most of these works have explored the interaction between children and robots, focusing on tasks such as imitation and collaborative interaction (Dautenhahn et al. 2006; Wainer et al. 2010).

Although the results have been interesting, some of the studies in the literature use non-humanoid robots or systems with no (or at least low) adaptation to the activity. As a result, interactions with robots are often rigid, ambiguous, and confusing. Additionally, the interaction is triadic (child-therapist-robot), with the therapist/experimenter interacting alongside the child. Thus, it is important to introduce some adaptation to these

Silva, V., Queirós, S., Soares, F., Esteves, J. and Matos, D.

A Supervised Autonomous Approach for Robot Intervention with Children with Autism Spectrum Disorder.

DOI: 10.5220/0007958004970503

In Proceedings of the 16th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2019), pages 497-503

ISBN: 978-989-758-380-3

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


platforms in order to enrich the interaction with the user and lighten the cognitive burden on the human operator. In addition to robots, researchers have been using objects with playware technology (OPT). These devices are tangible interfaces developed to provide children with play and playful experiences (Lund et al. 2005). In general, these technologies have been very useful in interventions with these children (Dautenhahn & Billard 2002). However, most of them do not adapt to the child’s behaviour. Others try to introduce some adaptation by using wearable devices, which can be invasive. Furthermore, none explores the use of an adaptive hybrid approach, using a social robot and an OPT, to interact with children with ASD.

Following this idea, the present work proposes a supervised behavioural system architecture using a hybrid approach to detect the child’s behaviour and adapt the robot to the child’s actions accordingly, enabling a more natural interaction. The goal of this approach is to introduce some level of automation in a supervised manner. Additionally, the present work involves the development of an OPT to be used as an add-on to the human-robot interaction with children with ASD in emotion recognition activities.

The present paper is organized as follows: section

2 presents the related work; section 3 shows the

proposed approach; the experimental design is

described in section 4; the final remarks and future

work are addressed in section 5.

2 RELATED WORK

Nowadays, distinct technological strategies have

been used in the intervention process with children

with ASD, mainly through the use of Objects based

on Playware Technology (OPT) and social robots

(Lund 2009; Pennisi et al. 2016). Different social robots have already been used successfully in robot-assisted therapy (Pennisi et al. 2016), helping children to develop their skills. Many of these systems help to deliver a standard and effective treatment to children with ASD by using the Wizard of Oz (WoZ) method, where the therapist or researcher fully controls the robot. Despite being a successful method (Huijnen et al. 2016), it requires an additional operator other than the therapist who is engaging with the child in the triadic setup. However, some configurations (Costa et al. 2015; Costa et al. 2019) use a keypad with which the researcher/therapist controls

the interaction. Although it does not need an

additional operator, this approach imposes a cognitive

load on the researcher/therapist during the

intervention. Additionally, systems that employ the WoZ method usually do not record the child’s behaviour (body posture, facial expressions, eye gaze, among others), which might make them unsuitable for live Human Robot Interaction (HRI) scenarios (Zaraki et al. 2018). Therefore, it is paramount to introduce some degree of autonomy, enabling the robot to perform some autonomous behaviours whilst keeping track of the interaction data.

Following this trend, there has been growing interest in developing more adaptive approaches to interact with children with ASD.

Some of the works in the literature (Mazzei et al. 2011; Bekele et al. 2014; Bekele et al. 2013) use a combination of hardware, wearable devices and software algorithms to measure the affective states (e.g. eye gaze attention, facial expressions, vital signs, skin temperature, and skin conductance signals) of the child in order to adapt the robot behaviour. Bekele et al. (2013; 2014) developed and later evaluated a humanoid robotic system capable of intelligently managing joint attention prompts and adaptively responding based on measurements of gaze and attention. They concluded that the children with ASD directed their gaze towards the robot when prompted with a question by the robot. Furthermore, the authors suggested that robotic systems endowed with enhancements for successfully captivating the child’s attention might be capable of meaningfully enhancing skills related to coordinated attention. However, the completion rate of the activity was 60% for the ASD group, mainly because not all of these participants were willing to wear the LED cap even for a brief interval of time (i.e. less than 15 min). This wearable device was a crucial part of the system since it allowed the children’s gaze to be tracked during the activity. Thus, there is a need to develop non-invasive systems for use with children with ASD, who commonly have sensory sensitivities (Rogers & Ozonoff 2005).

In order to avoid the use of wearable devices, other projects (Esteban et al. 2017; Koutras et al. 2018) use an array of cameras and depth sensors that allow the robot to perform tasks autonomously. These sensory devices are usually precisely fixed, taking into account the background and the lighting conditions, in a static structure around a table where the robot is placed, and are therefore limited to a controlled environment. Although this approach minimizes the noise in the robot perception system, laboratory settings are usually not suitable for children with ASD, since they can be stressful, taking a considerable


time for them to adapt to a new environment. Therefore, a recent approach (Zaraki et al. 2018) proposes using social robots with some autonomy in non-clinical environments. The authors use the Kaspar robot with a robotic system called Sense-Think-Act that allows the robot to operate with some autonomy (under human supervision) with children in real-world school settings. The system was successful in providing the robot with appropriate control signals to operate in a semi-autonomous manner. They further concluded that the architecture appears to have promising potential.

Similar to the use of social robots, researchers

have been using OPT to interact with children with

ASD. The term “playware” is suggested as a

combination of intelligent hardware and software that

aims at producing play and playful experiences

among users (Lund et al. 2005). This technology

emphasizes the role of interplay between morphology

and control using processing, input, and output. These

objects can take up different embodiments such as

modular buttons, coloured puzzle tiles (Lund 2009),

Lego-like building blocks (Barajas et al. 2017),

among others. Although there are some works concerning the use of OPT with children, only a few research projects have explored the use of this technology as an intervention tool with children with ASD. Lund (2009) used the developed tiles in a game with ASD participants which consisted in mixing the tiles in order to produce new colours. It was observed that OPT can be playful tools for cognitively challenged children. Since both these objects and social robots have presented positive outcomes with children with ASD, the authors proposed and evaluated in (Silva et al. 2018) the use of a hybrid approach (a social robot and an OPT) in an intervention process with children with ASD. The preliminary results demonstrated the positive outcomes that this child-OPT-robot interaction may produce.

3 PROPOSED APPROACH

In order to conduct an effective child-robot interaction in a supervised manner, it is important for the system to be able to infer the participant’s psychological disposition and adapt the intervention process accordingly. Therefore, it is paramount to have a framework able to extract different sensory data. Taking this into account, the following section presents the proposed framework.

3.1 Framework

The proposed system, depicted in Figure 1, is

composed of a humanoid robot capable of displaying

facial expressions, a computer, two OPT devices, an

RGB camera, and a 3D sensor.

Figure 1: The proposed system. Starting from the bottom left: PlayBrick, PlayCube, Intel RealSense D435, HP RGB camera, computer, and the humanoid robot ZECA.

The Zeno R50 RoboKind humanoid child-like

robot ZECA (a Portuguese name that stands for Zeno

Engaging Children with Autism) is a robotic platform

that has 34 degrees of freedom: 4 are located in each

arm, 6 in each leg, 11 in the head, and 1 in the waist.

The robot is capable of expressing facial cues thanks

to the servo motors mounted on its face and a special

material, Frubber, which looks and feels like human

skin, being a major feature that distinguishes Zeno

R50 from other robots.

Concerning the OPT, two devices were developed: the PlayCube and the PlayBrick. The PlayCube (Silva et al. 2019) (7 cm × 7 cm × 7 cm) has an OLED RGB display with a touch-sensitive surface, an Inertial Measurement Unit (IMU), a small development board (ESP32) with built-in Bluetooth and Wi-Fi communication, an RGB LED ring, a Linear Resonant Actuator (LRA), and a Li-Po battery. Interacting with the PlayCube simply means touching the physical object and manipulating it via natural gestures (e.g. rotation, shake, tilt, among others). The PlayBrick (20 cm × 11 cm × 3 cm) shares the same internal components as the PlayCube, but instead of the small 1.5-inch display of the cube, the brick has a 5.0-inch resistive touch display, which allows more information to be displayed.

Feedback is a key feature in guiding the children

through the play activity, especially children with


ASD. Therefore, both OPT devices provide visual and haptic feedback through RGB LEDs and LRA motors. In both devices, the type of feedback is

configurable.

Regarding the capture of sensing information, two different sensors were used: an RGB camera and an Intel RealSense. The camera is a full high definition RGB HP camera. It is used with the OpenFace library (Baltrusaitis et al. 2016) to track the user’s facial action units and head motion data in order to infer possible distraction patterns.

The Intel RealSense 3D sensor (90 mm × 25 mm × 25 mm) (Intel 2019) is a USB-powered device that contains a conventional RGB full HD camera, an infrared laser projector, and a pair of depth cameras. The present work uses the Intel RealSense model D435 along with the Intel RealSense SDK and the Nuitrack SDK (Nuitrack 2019) to track the user’s joints. This sensor was mainly chosen due to its small size, which allows the final framework to be portable. It will be placed on the robot’s chest.

3.2 The Behavioural Control Architecture

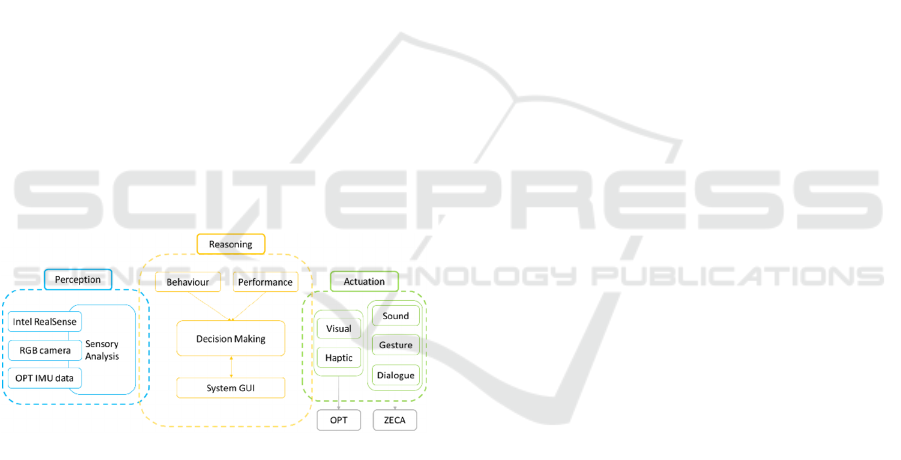

The software architecture (Figure 2) includes three main subsystems, two of which are interconnected via a TCP/IP network and Bluetooth.

Figure 2: The behavioural architecture is composed of three

main layers – the Perception (blue), Reasoning (yellow),

and Actuation (green) layers.

The perception layer is responsible for sensing

and processing of the data received from the sensors.

Since the activities target the recognition of emotions,

it is necessary to extract and analyse features that are

required by these game scenarios such as: gaze, the

frequency and duration of possible movements done

by the participant, emotional cues, object tracking,

and stereotypical behaviours (such as hands and head

shaking).

Regarding gaze estimation and emotional cues, the open-source OpenFace library is used with the RGB camera. It detects the user’s face and outputs the facial landmarks, the head pose and eye gaze data, and the facial action units. To detect the child’s facial expressions, a Support Vector Machine (SVM) model with a Radial Basis Function (RBF) kernel was trained. This model (adapted from previous work of the research group, Silva et al. 2016) can detect the six basic emotions plus neutral, achieving an accuracy of 89%.
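To illustrate how an RBF-kernel SVM scores a feature vector once it has been trained, the following minimal Python sketch evaluates a binary decision function. It is not the authors’ model: the classifier described above distinguishes seven classes and its features and parameters are not reported here, so the two-element action-unit vectors, the support vectors, the coefficients, the bias, and `gamma` below are all hypothetical values chosen for illustration.

```python
import math


def rbf_kernel(x, z, gamma):
    """Radial Basis Function kernel: exp(-gamma * ||x - z||^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)


def svm_decision(x, support_vectors, dual_coefs, bias, gamma):
    """Signed score of a trained binary SVM for the feature vector x."""
    weighted = (c * rbf_kernel(sv, x, gamma)
                for sv, c in zip(support_vectors, dual_coefs))
    return sum(weighted) + bias


# Hypothetical "trained" parameters (assumption, not taken from the paper).
# Each vector holds two made-up action-unit intensities.
SUPPORT_VECTORS = [(3.0, 2.5), (0.2, 0.1)]
DUAL_COEFS = [1.0, -1.0]  # positive -> "happiness", negative -> "neutral"
BIAS = 0.0
GAMMA = 0.5


def classify(features):
    """Map the sign of the decision score to one of two illustrative labels."""
    score = svm_decision(features, SUPPORT_VECTORS, DUAL_COEFS, BIAS, GAMMA)
    return "happiness" if score > 0 else "neutral"
```

A multi-class classifier such as the one described above is typically built by combining several binary decision functions of this form (for example one-vs-one or one-vs-rest); which scheme the authors used is not stated.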

Concerning the automatic recognition of the user’s movements, the Intel RealSense is used with the Nuitrack Software Development Kit (SDK). A 3D sensor is used because the depth information it acquires adds another dimension of information and is less sensitive to illumination and subject texture (Esteban et al. 2017). The Nuitrack SDK is able to simultaneously detect up to six people and the 3D positions of 19 joints. The position coordinates are normalized so that the motion is invariant to the initial body orientation and size. Using this information, the user’s movement trend will be computed and a supervised classifier will be trained using a dataset that contains 600 minutes of child-robot interaction (Costa et al. 2019). Additionally, it is also possible to extract the user’s proximity to the robot.
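One possible way to obtain the invariance described above is sketched below in Python. The paper does not give the normalization procedure, so this is an assumption: joints are re-centred on a root joint, rotated about the vertical axis so that the shoulder line points along +x, and scaled by the shoulder width. The joint names are hypothetical and are not Nuitrack identifiers.

```python
import math


def normalize_skeleton(joints, root="torso",
                       left="left_shoulder", right="right_shoulder"):
    """Return joints made invariant to position, heading, and body size.

    joints maps a joint name to an (x, y, z) tuple, with y as the vertical axis.
    """
    rx, ry, rz = joints[root]
    # 1. Translation invariance: express every joint relative to the root.
    centred = {n: (x - rx, y - ry, z - rz) for n, (x, y, z) in joints.items()}

    # 2. Orientation invariance: heading of the shoulder line in the x-z plane.
    lx, _, lz = centred[left]
    sx, _, sz = centred[right]
    yaw = math.atan2(sz - lz, sx - lx)
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate by -yaw about the vertical axis so the shoulders align with +x.
    rotated = {n: (c * x + s * z, y, -s * x + c * z)
               for n, (x, y, z) in centred.items()}

    # 3. Size invariance: divide by the shoulder width.
    width = math.dist(rotated[left], rotated[right])
    if width == 0:
        raise ValueError("shoulder joints coincide; cannot scale skeleton")
    return {n: (x / width, y / width, z / width)
            for n, (x, y, z) in rotated.items()}
```

With this choice, two recordings of the same pose taken at different distances, headings, or body sizes map to the same normalized coordinates, which is the property the classifier relies on.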

In order to track the OPT, a Histogram-of-Oriented-Gradient (HOG) based object detector was trained. The Dlib correlation tracker (King 2009) is also used, allowing the detection and tracking of the OPT in real time during an intervention session. This approach is described in detail in (Silva et al. 2019).

Additionally, the OPT IMU data is used to detect

if the child is interacting with the system.

Furthermore, the IMU information can be used to

detect some stereotypical behaviours (such as hand

shaking) contributing to the human action

recognition.
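As a rough illustration of how IMU data might separate no interaction, ordinary handling, and shaking, the Python sketch below thresholds the variability of the acceleration magnitude over a short window. The paper does not describe the detection method; the window length, the thresholds, the unit (g), and the three labels are assumptions made for this example.

```python
import math
from statistics import pstdev


def classify_motion(samples, window=20,
                    interact_threshold=0.05, shake_threshold=0.5):
    """Label the most recent window of accelerometer samples.

    samples is a list of (ax, ay, az) tuples in g. Returns "idle",
    "interacting", or "shaking" based on the standard deviation of
    the acceleration magnitude over the last `window` samples.
    """
    if len(samples) < window:
        return "idle"  # not enough data yet to decide
    recent = samples[-window:]
    magnitudes = [math.sqrt(ax * ax + ay * ay + az * az)
                  for ax, ay, az in recent]
    spread = pstdev(magnitudes)
    if spread > shake_threshold:
        return "shaking"
    if spread > interact_threshold:
        return "interacting"
    return "idle"
```

In practice the thresholds would have to be tuned on recorded sessions, and a learned classifier could replace the fixed thresholds.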

The reasoning layer is influenced by the child

behaviour and performance. Using the information

acquired and computed by the perception layer, the

system will decide the interaction flow of the

intervention. The decided action is displayed on the

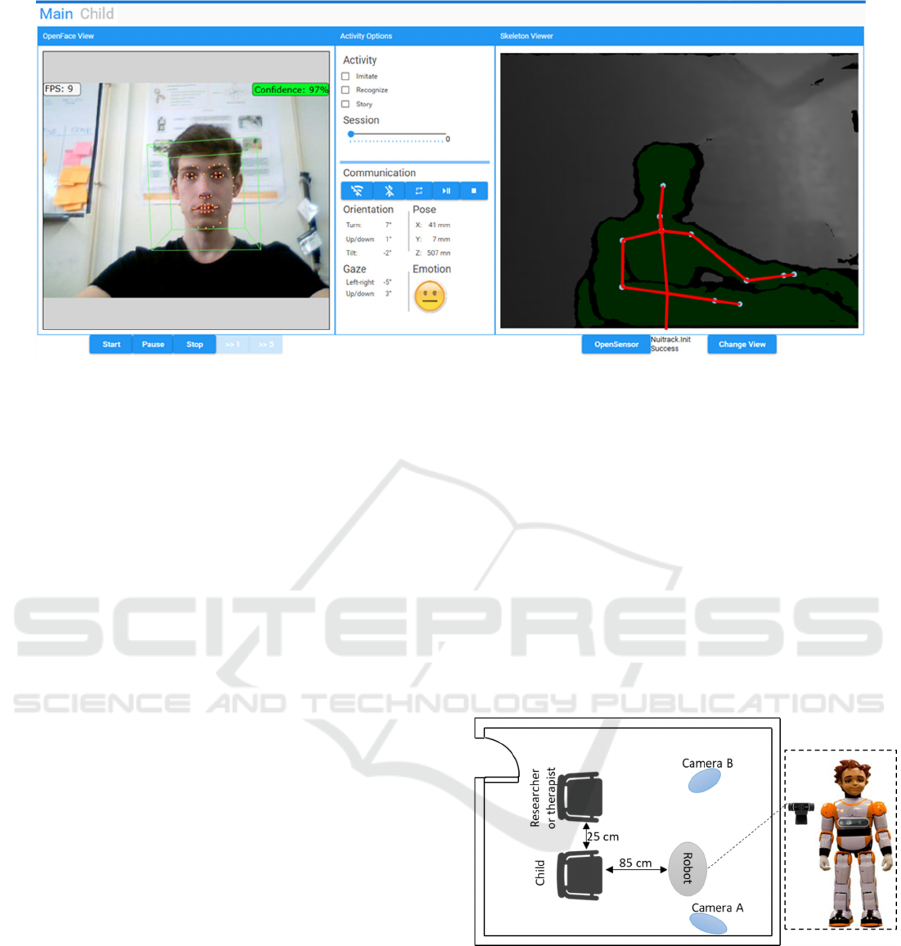

Graphical User Interface (GUI), Figure 3. The

interaction flow will be designed using a sate machine

approach. A state machine is a simple model to track

the events triggered by external inputs. This is done

by assigning intermediate states to decide what

happens when a specific input comes, and which

event is triggered. The output of this layer will

influence the dynamics of the next layer, the Action

layer. Therefore, the behaviour displayed by the robot

will be influenced by the interaction flow of the

session.


Figure 3: The graphical user interface. The user can easily control the overall system and receive feedback from each

subsystem. It is possible to see the participant’s facial data (such as gaze, head orientation, facial expression) as well as the

skeleton data.

Additionally, the feedback given by the OPT (visual and haptic) will also depend on the output of the reasoning layer.
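The state machine approach mentioned for the reasoning layer could be sketched in Python as a transition table. The states and events below are hypothetical: the paper does not list them, and they are chosen only to show how perception events (for example a detected distraction) could drive the interaction flow.

```python
from enum import Enum, auto


class State(Enum):
    PROMPT = auto()       # robot presents the emotion or story
    WAIT_ANSWER = auto()  # waiting for the child to select an answer
    REENGAGE = auto()     # robot tries to regain the child's attention
    REINFORCE = auto()    # robot and OPT give feedback on the answer
    END = auto()          # session finished


# (current state, event) -> next state; all names are illustrative.
TRANSITIONS = {
    (State.PROMPT, "prompt_done"): State.WAIT_ANSWER,
    (State.WAIT_ANSWER, "answer_selected"): State.REINFORCE,
    (State.WAIT_ANSWER, "distracted"): State.REENGAGE,
    (State.REENGAGE, "attention_regained"): State.WAIT_ANSWER,
    (State.REINFORCE, "next_round"): State.PROMPT,
    (State.REINFORCE, "session_over"): State.END,
}


def step(state, event):
    """Return the next state; events with no transition leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def run(events, state=State.PROMPT):
    """Feed a sequence of events through the machine and return the final state."""
    for event in events:
        state = step(state, event)
    return state
```

Keeping the transitions in a table makes the interaction flow easy to inspect and to extend with new states without changing the stepping logic.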

Since it is a supervised architecture, at any time,

the researcher/therapist can pause/resume and

start/stop the activity on the GUI.

The GUI will display the detected behaviours and recommend possible actions that will be carried out automatically, unless the researcher/therapist intervenes. It is possible to record the session data such as child performance, head orientation, facial cues, IMU data from the OPTs, and skeleton (joints) data. The TCP/IP connection with the robot (ZECA) and the Bluetooth connection with the OPTs (PlayCube or PlayBrick) are established through the GUI. Additionally, the GUI makes it possible to visualize the children’s data (e.g. the child’s performance in all sessions). Furthermore, if desired, the researcher/therapist can extend the session time. The progress of the session will also be displayed in the GUI.
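The supervised behaviour described above, where a recommended action runs automatically unless the researcher/therapist intervenes, could be modelled as in the following Python sketch. The class, its fields, and the action names are hypothetical and do not correspond to the actual GUI code.

```python
from dataclasses import dataclass, field


@dataclass
class Supervisor:
    """Chooses between the system's recommendation and the therapist's override."""
    paused: bool = False
    log: list = field(default_factory=list)  # recorded decisions for later analysis

    def decide(self, detected_behaviour, recommended_action, override=None):
        """Return the action to execute, or None while the activity is paused."""
        if self.paused:
            chosen, source = None, "paused"
        elif override is not None:
            chosen, source = override, "therapist"
        else:
            chosen, source = recommended_action, "automatic"
        self.log.append((detected_behaviour, recommended_action, chosen, source))
        return chosen
```

For example, `Supervisor().decide("distracted", "call_child_name")` returns the recommended action, while passing `override="repeat_prompt"` returns the therapist’s choice instead; every decision is appended to `log` together with its source.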

4 EXPERIMENTAL DESIGN

In order to evaluate the proposed framework, a study

will be conducted in child-friendly environments

(such as schools) where the experiments will be performed individually in a triadic setup, i.e., child-robot-researcher/therapist.

The experimental set-up (Figure 4) consists of the child sitting in front of the robot, which is placed in the child’s line of sight at approximately 85 cm. Two cameras are positioned behind the robot to video record the sessions: camera A records only the child and camera B records the overall session. Next to the child is the researcher/therapist, who is responsible for keeping the child’s attention on the task. The PlayBrick or PlayCube is in the child’s hands from the beginning of the session. Since the tests will be conducted in an environment that is known and comfortable for the child, the setting is also unconstrained; this layout is therefore proposed in order to provide a basis for comparison between the participants across the sessions.

Figure 4: The proposed experimental design with a triadic

configuration, where the child is approximately 25 cm from the therapist and 85 cm from the robot.

The experimental configuration includes eight individual sessions in the children’s school: a Pre-test, six Practice sessions, and a Post-test. The Pre-test measures the children’s skills; the Practice phase implements the activities; and the Post-test evaluates whether the competence was acquired. The Pre- and Post-tests are performed without the proposed system. The first time the activity is performed, the researcher explains the objective of the session and how the


system works. The sessions last approximately 10 minutes, except the first, which can last 15 minutes due to its training period. The researcher/therapist can extend the default time of the session if needed. However, the session can be interrupted if the child demonstrates irritability, fussiness or lack of interest in the session. The sessions are video recorded for further analysis, using cameras A and B (Figure 4).

The activities focus on emotion recognition. Two game scenarios aimed at improving the children’s emotion recognition skills were therefore developed: the Recognize and Storytelling activities. In the Recognize game scenario, ZECA randomly performs a facial expression and its associated gestures, representing one of the five basic emotions (happiness, sadness, anger, surprise, and fear). The child has to choose the correct facial expression matching the emotion. In the Storytelling activity, ZECA randomly tells one of the fifteen available stories that are associated with an emotion and the child has to choose the correct facial expression matching the emotion. In parallel, as a visual cue, an image is shown representing the social context of the story. The goal of this game scenario is to evaluate the affective state of a character at the end of a story. In order to select the answer, in both activities, the children have to manipulate the cube or brick by tilting it back or forward in order to scroll through the facial expressions displayed by the OPTs. When the child selects an answer, ZECA verifies if the answer is correct and prompts a reinforcement. Simultaneously, the OPTs provide visual and/or haptic feedback according to the child’s answer.
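The answer-selection mechanism described above can be sketched in Python as follows. This is an illustrative model only: the order of the emotions, the mapping of forward/back tilts to scroll direction, the wrap-around at the ends of the list, and the function names are assumptions not stated in the paper.

```python
EMOTIONS = ["happiness", "sadness", "anger", "surprise", "fear"]


def scroll(index, tilt, count=len(EMOTIONS)):
    """Move the highlighted expression; assumes the list wraps around."""
    if tilt == "forward":
        return (index + 1) % count
    if tilt == "back":
        return (index - 1) % count
    return index  # any other gesture leaves the selection unchanged


def play_round(target_emotion, tilts, start_index=0):
    """Apply a sequence of tilts, then check the selected expression.

    Returns (selected_emotion, is_correct); the correctness flag is what
    would drive the robot's reinforcement and the OPT feedback.
    """
    index = start_index
    for tilt in tilts:
        index = scroll(index, tilt)
    selected = EMOTIONS[index]
    return selected, selected == target_emotion
```

For instance, if ZECA performs anger and the child tilts the device forward twice before selecting, `play_round("anger", ["forward", "forward"])` yields `("anger", True)`.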

The participants are children with ASD aged between 6 and 10 years old with no associated comorbidities. Since the work involves typically developing children and children with ASD, the following ethical concerns were met: the research work was approved by the ethical committee of the university, collaboration protocols were signed between the university and the schools, and informed consent forms were signed by the parents/tutors of the children that will participate in the studies.

5 FINAL REMARKS AND FUTURE WORK

The present paper concerns the development of a

supervised autonomous system to promote social

interactions with children with ASD. These

individuals are described as having impairments in

social interactions and communications, usually

accompanied by restricted interests and repetitive

behaviour. Technological devices (such as social

robots and OPT) are increasingly being used in

intervention processes with children with ASD.

However, in general, they do not adapt to the child’s behaviour. Others try to introduce some adaptation by

using wearable devices which can be invasive.

Furthermore, none explores the use of an adaptive

hybrid approach, using a social robot and an OPT, to

interact with children with ASD.

Therefore, the present work proposes a supervised

behavioural system architecture using a hybrid

approach to allow the detection of the child behaviour

and consequently adapt the robot to the child’s action,

enabling a more natural interaction. A fully autonomous system is not desired due to ethical concerns (Esteban et al. 2017).

The supervised behavioural framework has three

main layers – Perception, Reasoning, and Actuation.

The perception layer is responsible for sensing and

analysis of the data received from the sensors. The

interaction flow is defined in the reasoning layer.

Finally, the robot will display the different behaviours and the OPT will give different feedback according to the output of the Reasoning layer.

Since it is a supervised approach, the system GUI will

prompt the detected behaviours and depending on the

interaction state, it will recommend possible actions

that will be carried out automatically, unless the

researcher/therapist intervenes.

Future work includes continuous improvement of the framework and testing the proposed system in a school environment in a triadic setup (child-robot-researcher/therapist), following the proposed experimental design, with the goal of evaluating how this hybrid adaptive concept (OPT and social robot) can be used as a valuable tool to promote emotion skills in children with ASD.

The system’s level of autonomy should also be

increased by adding interactive machine learning,

enabling it to learn on the fly.

ACKNOWLEDGEMENTS

The authors thank COMPETE: POCI-01-0145-FEDER-007043 and FCT – Fundação para a Ciência e Tecnologia within the Project Scope: UID/CEC/00319/2019. Vinícius Silva also thanks FCT for the PhD scholarship SFRH/BD/133314/2017.


REFERENCES

American Psychiatric Association, 2013. DSM 5. Available at: http://ajp.psychiatryonline.org/article.aspx?articleID=158714%5Cnhttp://scholar.google.com/scholar?hl=en&btnG=Search&q=intitle:DSM-5#0.

Baltrusaitis, T., Robinson, P. & Morency, L. P., 2016.

OpenFace: An open source facial behavior analysis

toolkit. In 2016 IEEE Winter Conference on

Applications of Computer Vision, WACV 2016.

Barajas, A. O., Osman, H. Al & Shirmohammadi, S., 2017.

A Serious Game for Children with Autism Spectrum

Disorder as a Tool for Play Therapy.

Bekele, E. et al., 2014. Pilot clinical application of an adaptive robotic system for young children with autism. Autism: the international journal of research and practice, 18(5), pp.598–608. Available at: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=3980197&tool=pmcentrez&rendertype=abstract.

Bekele, E. T. et al., 2013. A step towards developing

adaptive robot-mediated intervention architecture

(ARIA) for children with autism. IEEE Transactions on

Neural Systems and Rehabilitation Engineering, 21(2),

pp.289–299.

Costa, S. et al., 2019. Social-Emotional Development in

High Functioning Children with Autism Spectrum

Disorders using a Humanoid Robot. Interaction

Studies, accepted for publication.

Costa, S. et al., 2015. Using a Humanoid Robot to Elicit

Body Awareness and Appropriate Physical Interaction

in Children with Autism. International Journal of

Social Robotics, 7(2), pp.265–278.

Dautenhahn, K. et al., 2006. How May I Serve You? A

Robot Companion Approaching a Seated Person in a

Helping Context. Human-Robot Interaction.

Dautenhahn, K. & Billard, A., 2002. Games children with autism can play with Robota, a humanoid robotic doll. Universal access and assistive technology, pp.179–190. Available at: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.66.7741&rep=rep1&type=pdf.

Dautenhahn, K. & Werry, I., 2004. Towards interactive robots in autism therapy: Background, motivation and challenges. Pragmatics & Cognition, 12(1), pp.1–35. Available at: http://www.jbe-platform.com/content/journals/10.1075/pc.12.1.03dau.

Esteban, P. G. et al., 2017. How to build a supervised

autonomous system for robot-enhanced therapy for

children with autism spectrum disorder. Paladyn, 8(1),

pp.18–38.

Huijnen, C. A. G. J. et al., 2016. Mapping Robots to

Therapy and Educational Objectives for Children with

Autism Spectrum Disorder. Journal of Autism and

Developmental Disorders.

Intel, 2019. Intel® RealSense™ Technology. Available at: https://www.intel.com/content/www/us/en/architecture-and-technology/realsense-overview.html [Accessed April 8, 2019].

King, D. E., 2009. Dlib-ml: A Machine Learning Toolkit.

Journal of Machine Learning Research.

Koutras, P. et al., 2018. Multi3: Multi-Sensory Perception

System for Multi-Modal Child Interaction with

Multiple Robots.

Lund, H.H., 2009. Modular playware as a playful diagnosis

tool for autistic children. 2009 IEEE International

Conference on Rehabilitation Robotics, ICORR 2009,

pp.899–904.

Lund, H.H., Klitbo, T. & Jessen, C., 2005. Playware

technology for physically activating play. Artificial Life

and Robotics, 9(4), pp.165–174.

Mazzei, D. et al., 2011. Development and evaluation of a

social robot platform for therapy in autism. Engineering

in Medicine, Proceedings of the Annual International

Conference of the IEEE, 2011, pp.4515–8.

Mazzei, D. et al., 2012. Robotic social therapy on children with autism: Preliminary evaluation through multi-parametric analysis. In Proceedings - 2012 ASE/IEEE International Conference on Privacy, Security, Risk and Trust and 2012 ASE/IEEE International Conference on Social Computing, SocialCom/PASSAT 2012.

Nuitrack, 2019. Nuitrack Full Body Skeletal Tracking

Software - Kinect replacement for Android, Windows,

Linux, iOS, Intel RealSense, Orbbec. Available at:

https://nuitrack.com/ [Accessed April 8, 2019].

Pennisi, P. et al., 2016. Autism and social robotics: A

systematic review. Autism Research, 9(2), pp.165–183.

Rogers, S.J. & Ozonoff, S., 2005. Annotation: What do we know about sensory dysfunction in autism? A critical review of the empirical evidence. Journal of Child Psychology and Psychiatry, 46(12), pp.1255–1268. Available at: http://doi.wiley.com/10.1111/j.1469-7610.2005.01431.x [Accessed March 31, 2019].

Silva, V. et al., 2018. Building a hybrid approach for a game

scenario using a tangible interface in human robot

interaction. In Lecture Notes in Computer Science

(including subseries Lecture Notes in Artificial

Intelligence and Lecture Notes in Bioinformatics).

Silva, V. et al., 2019. PlayCube: Designing a Tangible

Playware Module for Human-Robot Interaction. In

Advances in Intelligent Systems and Computing.

Silva, V. et al., 2016. Real-time Emotions Recognition

System. In 8th International Congress on Ultra Modern

Telecommunications and Control Systems and

Workshops (ICUMT). Lisboa, pp. 201–206.

Tapus, A. et al., 2012. Children with autism social

engagement in interaction with Nao, an imitative robot.

Interaction Studies.

Wainer, J. et al., 2010. Collaborating with Kaspar: Using an

autonomous humanoid robot to foster cooperative

dyadic play among children with autism. In 2010 10th

IEEE-RAS International Conference on Humanoid

Robots, Humanoids 2010.

Zaraki, A. et al., 2018. Development of a Semi-Autonomous Robotic System to Assist Children with Autism in Developing Visual Perspective Taking Skills. In RO-MAN 2018 - 27th IEEE International Symposium on Robot and Human Interactive Communication. pp. 969–976.
