The Estimation of Occupants’ Emotions in Connected and Automated Vehicles

Juan-Manuel Belda-Lois 1a, Sofía Iranzo 2b, Javier Silva 2c, Begoña Mateo 2d, Nicolás Palomares 2e, José Laparra-Hernández 2f and José S. Solaz 2g

1 Grupo de Tecnología Sanitaria del IBV, CIBER de Bioingeniería, Biomateriales y Nanomedicina (CIBER-BBN), Valencia, Spain

2 Instituto de Biomecánica de Valencia, Universitat Politècnica de València, València, Spain

jose.laparra@ibv.org, jose.solaz@ibv.org

Keywords: Connected and Automated Vehicles, Emotion, Dimensional Model, Physiological Signals, Human Centered Artificial Intelligence.

Abstract: Trust in technology is a critical factor for the success and user acceptance of connected and automated vehicles (CAVs), and it remains the main obstacle from a customer’s perspective. Trust in automated systems is based on feelings of safety and acceptance, with the emotional process being the most influential aspect. One of the main ambitions of the SUaaVE project (SUpporting acceptance of automated VEhicle) is to develop an emotional model that understands the passenger’s state during the trip in real time, based on body biometrics, so that vehicle features can be adapted to enhance the in-vehicle user experience while increasing trust and, therefore, acceptance. This research presents initial experiments to identify changes in the emotional state of the occupants during different driving experiences (in a driving simulator and in real conditions) by measuring and analysing the participants’ physiological signals, as a basis for developing the emotional model. The results showed that it is possible to estimate the participants’ levels of Arousal and Valence during the journey from the analysis of ECG, EMG and GSR signals. These results have positive implications for the automobile industry, facilitating a better integration of the human factor in the deployment of CAVs.

1 INTRODUCTION

Many researchers, experts and companies in the automobile industry (Sensum, 2020) agree that future automated vehicles will be entirely focused on the passenger experience and on understanding passenger requirements. Research is needed on introducing the passenger’s emotional state into the artificial intelligence (AI) of connected and automated vehicles (CAVs) to make every service empathic. Yet most current development focuses on the technological feasibility of such vehicles without sufficiently considering the human factor, particularly the anticipated vehicle-user interactions that take the occupants’ emotions into account. However, both the science and the technology of emotion are still relatively young (Sensum, 2020), so this research topic needs to be tackled from the perspective of Human Centered Artificial Intelligence (HAI).

a https://orcid.org/0000-0002-7648-799X
b https://orcid.org/0000-0003-2579-7135
c https://orcid.org/0000-0001-5115-1392
d https://orcid.org/0000-0003-2633-8993
e https://orcid.org/0000-0002-4523-341X
f https://orcid.org/0000-0002-7121-5418
g https://orcid.org/0000-0002-2058-9591

Emotional state can be obtained through questionnaires, behaviour analysis and physiological response. Questionnaires are the most used technique because of their simplicity, but they are not suited to real-time interpretation (Harmon-Jones & Winkielman, 2007). Behavioural analysis of body posture, gaze and facial gestures can determine some emotions, but other emotions, such as happiness, do not have a clear behavioural pattern or are recognised only at late stages, as with drowsiness caused by fatigue.

Belda-Lois, J., Iranzo, S., Silva, J., Mateo, B., Palomares, N., Laparra-Hernández, J. and Solaz, J.
The Estimation of Occupants’ Emotions in Connected and Automated Vehicles.
DOI: 10.5220/0010214802620267
In Proceedings of the 4th International Conference on Computer-Human Interaction Research and Applications (CHIRA 2020), pages 262-267
ISBN: 978-989-758-480-0
Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The emotional response and many cognitive processes are partially processed unconsciously, and physiological response analysis gives access to this unconscious response (galvanic skin response or skin conductivity, heart rate, electroencephalography, facial electromyography, breathing and blood pressure). The study conducted by Holzinger et al. shows that physiological data can be used for stress recognition (Holzinger et al., 2013). In the automotive field, driver stress has been detected with galvanic skin response, somnolence with breathing, fatigue with facial gestures and blinking, and distraction with eye tracking (Tan & Zhang, 2006). Additionally, there have recently been great improvements resulting from the shrinking costs and growing accuracy of biometric sensors, such as smart and wearable devices, and of non-intrusive solutions (which do not require attaching sensors), such as recording breathing with a camera (Laparra Hernández et al., 2019).

Existing work focuses on directly estimating human emotions from a number of measures (Eyben et al., 2010). However, this approach on its own is not reliable enough to provide a picture of a person’s state.

Given this context, the SUaaVE project (SUpporting acceptance of automated VEhicle), funded by the European Union’s Horizon 2020 Research and Innovation Programme, aims to develop an emotional model to monitor and interpret the passenger state in CAVs based on the emotional response. In particular, this model will be based on the circumplex model of emotion (Russell, 1980). In this theory, emotions are differentiated by their location in a two-dimensional space defined by pleasantness-unpleasantness (Valence) and activation (Arousal). The variation among emotions is continuous, ranging from negative to positive for Valence and from passive to active for Arousal. Different emotions can be plotted in this two-dimensional space, as shown in Figure 1. For example, happiness has positive Valence and medium Arousal, while sadness has negative Valence and low Arousal. Different studies have revealed that Valence and Arousal can be estimated with psychophysiological methods, such as Skin Conductance Level (SCL), Heart Rate Variability (HRV) or Electroencephalography (EEG) (Chanel et al., 2007; Stickel et al., 2009).
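To make the geometry of the circumplex model concrete, the sketch below places a (Valence, Arousal) pair in the two-dimensional space and reports its quadrant, its angle and its distance from the neutral origin. It is a minimal illustration only, assuming both axes have been rescaled to [-1, 1] with 0 as neutral; the function names and quadrant labels are hypothetical and are not part of the SUaaVE model.

```python
import math

def quadrant(valence: float, arousal: float) -> str:
    """Label the circumplex quadrant of a point; both axes assumed scaled to [-1, 1]."""
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must lie in [-1, 1]")
    v = "positive" if valence >= 0 else "negative"
    a = "high" if arousal >= 0 else "low"
    return f"{v} valence / {a} arousal"

def to_polar(valence: float, arousal: float) -> tuple[float, float]:
    """Angle in degrees (0 = pure positive Valence, counter-clockwise towards
    high Arousal) and distance from the neutral origin (emotional intensity)."""
    angle = math.degrees(math.atan2(arousal, valence)) % 360.0
    return angle, math.hypot(valence, arousal)

# Hypothetical sadness-like point: negative Valence, low Arousal
print(quadrant(-0.6, -0.5))   # negative valence / low arousal
print(to_polar(-0.6, -0.5))
```

Because the circumplex is continuous, the angle and distance pair carries more information than the quadrant label alone: a happiness-like point with medium Arousal would lie close to the positive Valence axis rather than deep inside a quadrant.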

In this regard, emotion recognition through the analysis of heartbeat dynamics has been investigated to characterize the emotional state of subjects, estimating four possible emotional states based on the circumplex model (Valenza et al., 2014).

Under this approach, the ambition of SUaaVE is to build an emotion prediction framework for the automated vehicle based on estimating the occupants’ state (their values of Arousal and Valence) by monitoring their physiological responses on board, measuring cardiovascular signals as well as electrodermal and respiratory activity. All these biometrics have been identified as capable of recognising emotions (Shu et al., 2018).

Figure 1: Two-dimensional emotion model based on

Arousal-Valence space and basic emotions

(Jirayucharoensak et al., 2014).

The aim of this article is to identify changes in the state of the occupants in response to different driving conditions, events and traffic environments (through the analysis of the measured physiological signals) and to interaction with HMI devices and the CAV, as well as to obtain an estimation of the values of Arousal and Valence. This paper presents the results of the first two experiments carried out in SUaaVE: one in a driving simulator and one in a manually driven car in real conditions.

2 METHODS

2.1 Experiment 1: Measures in a Driving Simulator

The physiological signals in a driving simulator were measured in the dynamic simulator of IDIADA (DiM250 from VI-Grade), which generates longitudinal, transversal and rotational acceleration forces up to 2.5 g and is characterised by low latency and high frequency, replicating a wide range of vehicle dynamics manoeuvres. The scenario reproduced on the screens was a circuit around a big city with fluent traffic, as shown in Figure 2. The participant was a male aged 30-35 years with no cardiovascular pathologies. He drove manually throughout the whole experiment. During the test, a co-driver was also in the simulator to annotate the timing of the scenario events.

Figure 2: Dynamic simulator of IDIADA where the

experiment 1 was performed.

The participant was instrumented with Biosignals Plux© equipment to acquire the following physiological signals:

• Electrocardiogram (ECG).

• Electromyography (EMG) of the facial

muscles: Zygomatic and corrugator.

• Galvanic skin response (GSR).

The physiological signals were then processed to extract a set of key parameters that characterize their main features. After this, the values of Arousal were estimated by a Principal Components Analysis (PCA) of the parameters of GSR and of the HRV in the Low Frequency band (0.04 – 0.15 Hz). GSR reflects the activity of the sweat glands, which respond to changes in the sympathetic nervous system: an increase in emotional activation causes an increase in GSR. HRV is inversely related to the intensity of emotional activation. When there is a high cognitive or emotional demand, the heart maintains a steady rhythm to optimize performance, reducing heart rate variability. In contrast, when the person is relaxed or in a state of low activity, the heart does not need to optimize the body's performance, so its rhythm is more variable and variability increases.
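The Arousal estimation step can be sketched as a two-feature PCA. This is a minimal illustration under stated assumptions, not the authors' implementation: the paper does not specify which GSR parameter or windowing was used, so `gsr` and `hrv_lf` are hypothetical per-window feature values, and the sign of the first component is fixed so that higher GSR maps to higher Arousal, in line with the relationships described above.

```python
import numpy as np

def arousal_pca(gsr, hrv_lf):
    """Project standardised [GSR, HRV-LF] features onto their first principal
    component and use the scores as a relative Arousal index (one row per window)."""
    X = np.column_stack([np.asarray(gsr, dtype=float), np.asarray(hrv_lf, dtype=float)])
    Z = (X - X.mean(axis=0)) / X.std(axis=0)   # z-score each feature
    cov = np.cov(Z, rowvar=False)              # 2 x 2 covariance of the z-scores
    eigvals, eigvecs = np.linalg.eigh(cov)     # eigh: symmetric matrix, ascending eigenvalues
    pc1 = eigvecs[:, np.argmax(eigvals)]       # loadings of the first principal component
    if pc1[0] < 0:                             # orient so that higher GSR -> higher Arousal
        pc1 = -pc1
    return Z @ pc1, pc1

# Hypothetical per-window features: GSR rising while LF-band HRV falls
scores, loadings = arousal_pca([1.0, 2.0, 3.0, 4.0, 5.0], [5.0, 4.0, 3.5, 2.0, 1.0])
print(loadings)
print(scores)
```

With GSR rising and LF-band HRV falling, as the text predicts for increasing activation, the first component loads positively on GSR and negatively on HRV-LF, so the scores increase from window to window. The scores are relative (zero mean); mapping them onto an absolute Arousal scale would need an additional calibration step.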

To estimate Valence, the EMG key parameter “normalised average value of activated frames” was used, taking into account that zygomatic activation is mainly related to positive Valence, whereas corrugator activation corresponds to negative Valence.
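The paper does not define how frames are marked as activated, so the following is only one plausible reading of the “normalised average value of activated frames”: the EMG is cut into non-overlapping frames, a frame counts as activated when its RMS amplitude exceeds a threshold, and the activated fraction of the corrugator is subtracted from that of the zygomatic. The frame length, thresholds and function names are hypothetical (in practice the thresholds might come from a resting baseline).

```python
import numpy as np

def activated_fraction(emg, frame_len, threshold):
    """Fraction of non-overlapping frames whose RMS amplitude exceeds `threshold`."""
    emg = np.asarray(emg, dtype=float)
    n_frames = len(emg) // frame_len
    if n_frames == 0:
        raise ValueError("signal shorter than one frame")
    frames = emg[: n_frames * frame_len].reshape(n_frames, frame_len)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))   # RMS amplitude per frame
    return float(np.mean(rms > threshold))        # share of activated frames, 0..1

def valence_index(zygomatic, corrugator, frame_len, thr_zyg, thr_corr):
    """Zygomatic activity raises the index and corrugator activity lowers it,
    giving a value in [-1, 1] (hypothetical combination rule)."""
    return (activated_fraction(zygomatic, frame_len, thr_zyg)
            - activated_fraction(corrugator, frame_len, thr_corr))

zyg = np.r_[np.zeros(50), 2.0 * np.ones(50)]   # hypothetical: smiling in the second half
corr = np.zeros(100)                           # hypothetical: no frowning
print(valence_index(zyg, corr, frame_len=10, thr_zyg=1.0, thr_corr=1.0))  # 0.5
```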

2.2 Experiment 2: Measures in Real Conditions

This experiment consisted of measuring the physiological signals of a co-driver, the role most similar to that of a passenger travelling in a CAV. The participant was a female aged 30-35 years with no cardiovascular pathologies. She made an urban journey by car in the city of València (Spain) on a weekday morning with variable weather.

In this case, the physiological signals were acquired with an Empatica E4© wristband (allowing unobtrusive monitoring of the participant), which measures the Blood Volume Pulse (BVP), from which heart rate variability can be derived (Figure 3).

Figure 3: Empatica E4© wristband.
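Frequency-domain HRV analysis needs an evenly sampled series, whereas a pulse signal such as BVP yields beats at irregular times. A common preparation step, sketched here under assumptions, is to take the beat times (peak detection on the BVP is not shown), compute the inter-beat intervals and interpolate them onto a uniform grid. The 4 Hz resampling rate and the function name are illustrative choices, not details reported in the paper.

```python
import numpy as np

def resample_ibi(beat_times_s, fs=4.0):
    """Convert beat times (s) into an evenly sampled inter-beat-interval series.

    Each interval is time-stamped at the beat that closes it and linearly
    interpolated onto a uniform grid at `fs` Hz.
    """
    t = np.asarray(beat_times_s, dtype=float)
    if t.size < 3 or np.any(np.diff(t) <= 0):
        raise ValueError("need at least three strictly increasing beat times")
    ibi = np.diff(t)                             # seconds between consecutive beats
    t_ibi = t[1:]                                # timestamp of each interval
    grid = np.arange(t_ibi[0], t_ibi[-1], 1.0 / fs)
    return grid, np.interp(grid, t_ibi, ibi)

beats = np.arange(0.0, 11.0, 1.0)                # hypothetical: steady 60 bpm for 10 s
grid, ibi = resample_ibi(beats)
print(len(grid), ibi[:3])
```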

During the experiment, the participant annotated the timing of every event on the road. Furthermore, an online questionnaire (using Google Forms©) was developed so that the co-driver could report, for each event, her emotional state through values of Valence and Arousal. The questionnaire follows the Self-Assessment Manikin (SAM) scale (Bradley & Lang, 1994), chosen as the most appropriate instrument for gathering the subjects' emotional perception. The scale used consists of five points that relate directly to Valence (the positive or negative impact of the event) and Arousal (the level of excitement reached because of the event). Figure 4 shows the questionnaire used to assign values and characterize the emotion in each event.

SUaaVE 2020 - Special Session on Reliable Estimation of Passenger Emotional State in Autonomous Vehicles

The data acquired in real conditions were processed following the same methodology used for the data gathered in Experiment 1 with the dynamic simulator. The HRV was split into three components, Very Low Frequency (VLF), Low Frequency (LF) and High Frequency (HF), by three third-order Butterworth band-pass filters with the common cut-off frequencies (0.04 Hz, 0.12 Hz and 0.40 Hz). For each component, the 1-minute moving average of the squared signal was analysed.
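The band decomposition and the 1-minute moving power can be sketched with SciPy's Butterworth design. This is an illustrative sketch, not the authors' code: it assumes the HRV is available as an evenly sampled series (4 Hz here), reads the listed cut-offs as LF = 0.04 to 0.12 Hz and HF = 0.12 to 0.40 Hz, and adds an assumed lower VLF edge of 0.003 Hz because a band-pass filter needs two edges. Zero-phase filtering (`sosfiltfilt`) is a design choice so that band power is not shifted in time relative to the annotated events.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

# Band edges in Hz: 0.04, 0.12 and 0.40 Hz are taken from the text,
# the 0.003 Hz lower VLF edge is an assumption.
BANDS = {"VLF": (0.003, 0.04), "LF": (0.04, 0.12), "HF": (0.12, 0.40)}

def band_power_series(hrv, fs=4.0, window_s=60.0, order=3):
    """Return, per band, the moving average of the squared band-passed HRV."""
    x = np.asarray(hrv, dtype=float)
    x = x - x.mean()                                   # remove the mean (DC) level
    win = int(round(window_s * fs))                    # samples in the 1-minute window
    kernel = np.ones(win) / win
    out = {}
    for name, (lo, hi) in BANDS.items():
        sos = butter(order, [lo, hi], btype="bandpass", fs=fs, output="sos")
        band = sosfiltfilt(sos, x)                     # zero-phase band-pass filtering
        # mode="same" keeps alignment with the input; the first and last
        # half-window are biased low because the window runs past the data.
        out[name] = np.convolve(band ** 2, kernel, mode="same")
    return out

fs = 4.0
t = np.arange(0.0, 600.0, 1.0 / fs)                    # 10 minutes of synthetic data
hrv = 0.05 * np.sin(2 * np.pi * 0.3 * t)               # hypothetical pure HF oscillation
power = band_power_series(hrv, fs=fs)
print({name: float(p.mean()) for name, p in power.items()})
```

For the synthetic 0.3 Hz oscillation nearly all of the power ends up in the HF band, which is a quick sanity check that the band edges are wired correctly.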

Figure 4: Google forms questionnaire (following SAM

scale) used to evaluate the Valence and Arousal

respectively of the co-driver in each event.

3 RESULTS

3.1 Experiment 1

The values of Arousal and Valence, and their combination, were examined with respect to the events of the simulated scenario. Figure 5 shows these results represented in the circumplex model. The data show that different values of Arousal and Valence are obtained depending on the type of event on the road.

In general, Arousal levels are higher for negative Valence. This effect was expected because people are more reactive to negative stimuli in virtual reality. In detail, the “Fog” and “Risky overtaking” events elicited the highest Arousal, whereas the event in which the vehicle passed through a section of road with guardrails obtained the lowest values.

Concerning Valence, taking a curve elicited the highest Valence. This can be explained because the driver liked sporty driving and took the curve appropriately. On the other hand, the lowest Valence value corresponds to the “First braking” event, possibly due to lack of experience driving in a simulator at the beginning of the experiment.

Figure 5: Values of Arousal and Valence obtained from the

physiological signals acquired in the experiment 1 with the

dynamic simulator.

3.2 Experiment 2

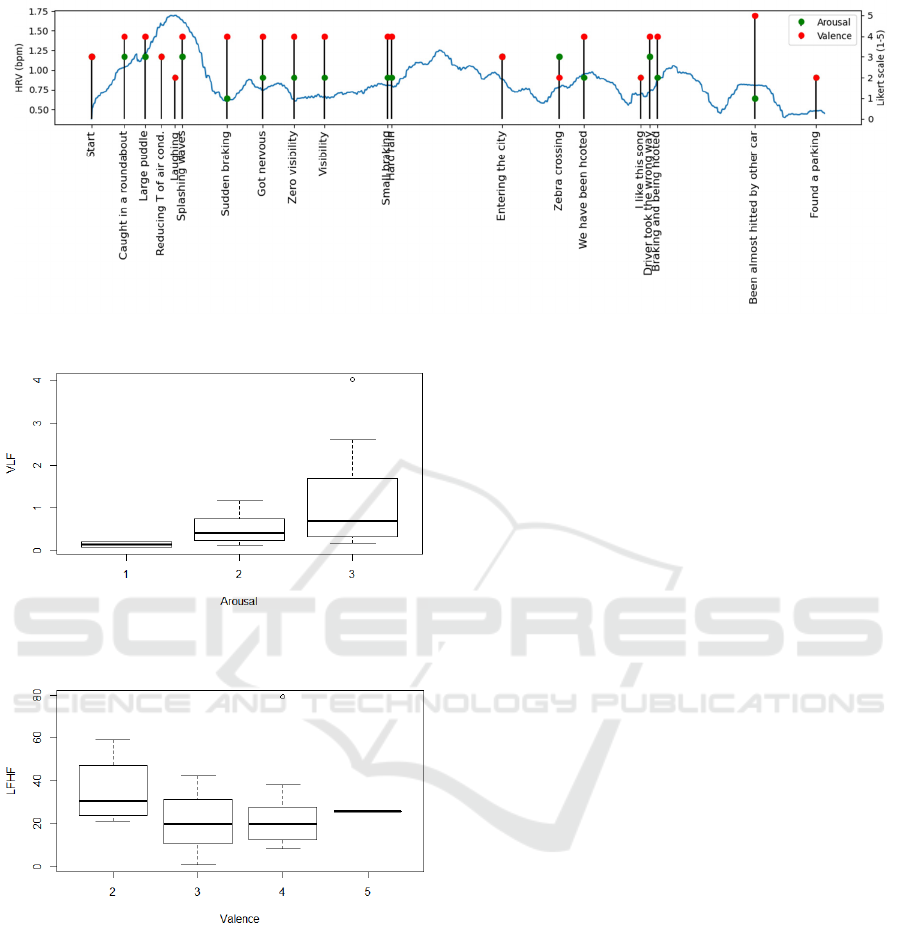

Figure 6 shows the values of HRV for the whole trip. The first result is that HRV varies noticeably with each event on the road. In general, HRV decreases when an event occurs, and so does the declared Arousal score, which on the SAM scale used here (1 = high Arousal) corresponds to higher Arousal. This is especially remarkable for the events of low visibility, heavy rain or risk of collision with another car, all of which show a decrease in the Arousal score.

The 1-minute moving average of each HRV component followed the Arousal and Valence declared by the user. In particular, the VLF band is in good agreement with Arousal (Figure 7), and the ratio between the HF and LF bands is in good agreement with Valence (Figure 8).
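Once the moving band powers are available, reading the two indicators described above at each annotated event is a simple lookup. In this hypothetical sketch, `band_power` is a dictionary of equal-length arrays sampled at `fs` Hz, and event times are measured in seconds from the start of those arrays; both conventions are assumptions rather than details from the paper.

```python
import numpy as np

def event_features(band_power, event_times_s, fs=4.0):
    """Return VLF power and the HF/LF ratio at each annotated event.

    `band_power` maps "VLF", "LF" and "HF" to equal-length arrays sampled at
    `fs` Hz; event times are in seconds from the start of those arrays.
    """
    n = len(band_power["VLF"])
    idx = np.round(np.asarray(event_times_s, dtype=float) * fs).astype(int)
    idx = np.clip(idx, 0, n - 1)                             # keep indices inside the series
    vlf = band_power["VLF"][idx]
    ratio = band_power["HF"][idx] / band_power["LF"][idx]    # inf or nan if LF power is 0
    return vlf, ratio

# Hypothetical toy series (10 samples at 4 Hz) and two events
toy = {"VLF": np.arange(10.0), "LF": np.full(10, 2.0), "HF": np.arange(10.0)}
vlf, ratio = event_features(toy, [0.5, 1.0])
print(vlf, ratio)
```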

As shown in the SAM questionnaire (Figure 4), the Arousal scale runs from high Arousal (value 1) to low Arousal (value 5). Therefore, the relationship with HRV is that VLF decreases as Arousal increases, as expected from previous results in the scientific literature.

The Valence scale in SAM (Figure 4) runs from positive Valence (value 1) to negative Valence (value 5). Therefore, the relationship is direct: a higher HF/LF ratio implies a more positive Valence (Figure 8).
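Because SAM scores 1 as high Arousal and positive Valence, comparing the ratings with physiological indicators is easier after reverse-coding them so that larger numbers mean more aroused or more positive. The sketch below does this and computes a Pearson correlation; the helper names and example values are hypothetical, and with one participant and a handful of events such a coefficient only describes the trend.

```python
import math

def reverse_sam(score, points=5):
    """Recode a SAM rating so that larger values mean more aroused / more positive.

    On the scale described here 1 = high Arousal or positive Valence and
    5 = low Arousal or negative Valence, so the recoded value is (points + 1) - score.
    """
    if not 1 <= score <= points:
        raise ValueError(f"SAM score must be between 1 and {points}")
    return points + 1 - score

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)

arousal = [reverse_sam(s) for s in (1, 3, 5)]   # hypothetical ratings -> [5, 3, 1]
vlf_power = [0.2, 0.5, 0.9]                     # hypothetical VLF power per event
print(pearson(vlf_power, arousal))              # negative: VLF falls as Arousal rises
```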

[Figure 5 axes: Valence, from Negative (low) to Positive (high); Arousal, from Passive (low) to Active (high). Events plotted: Fog, Risky overtaking, Darkness, Curve, First braking, Other car approaches, Guardrail, Braking.]

Figure 6: HRV along the experiment and emotional components (Arousal and Valence) declared by the user in each event.

Figure 7: Relationship between the Very Low Frequency

Component and the Arousal declared by the user.

Figure 8: Relationship between Valence and the Ratio of

HF and LF bands.

4 DISCUSSION

These experiments aimed to find out whether it is possible to identify changes in the state of the occupants in response to different driving conditions, events and traffic environments through the analysis of physiological signals. The results obtained in both experiments showed that key parameters of ECG, EMG and GSR can detect variations in the occupant's state and therefore estimate their emotional state by calculating the values of Arousal and Valence.

However, it is worth mentioning that these results are only preliminary and have limitations in sample size and in the types of road scenarios. A larger sample of participants, gender balance, driver experience, and personal factors and preferences will be considered in the tests with subjects to be conducted in the next stage of SUaaVE in the Human Autonomous Vehicle (HAV) at IBV, a dynamic driving simulator that offers highly immersive on-board experiences. This will allow a reliable model to be obtained that is capable of providing an accurate estimation of the values of Arousal and Valence.

The results of these initial experiments have positive implications for the automotive field, especially for the CAV experience. By measuring and analysing the occupants' biometric data, the emotional model will be able to estimate the emotions and reactions of the people in a vehicle, opening the possibility of analysing travellers' emotions throughout the journey in real time. In short, the approach set out will enable automated vehicles to understand how we feel and to use that information to make the system more empathic, responding to the occupants' emotions in real time.

The development of the empathic module will provide OEMs and Tier 1 suppliers with a detailed characterization of passenger needs, enabling them to develop strategies to enhance in-cabin experiences. This opens multiple possibilities to tailor the travel experience, such as:

• Intelligent adjustment of vehicle movements.

• Offering services such as the personalization of entertainment content.

• Improving the interaction with interfaces and virtual assistants on board.

Therefore, the empathic approach presented here aims to transform transportation through the analysis of physiological (biometric) and contextual (environmental) data, fused together to improve the understanding of the passenger's emotional state. This will allow the vehicle to respond to the user appropriately, establishing trust between people and automated vehicles and enhancing the personalized in-cabin experience. Furthermore, the empathic module can be applied not only to cars but also to other means of transport, such as buses, trucks, planes and ships, to understand the occupants' experience and provide tailored services accordingly.

5 CONCLUSIONS

The results of the current experiments indicate that it is possible to detect changes in the state of the occupants on board from physiological signals. The extraction and analysis of key parameters of ECG and EMG allows the values of Arousal and Valence to be obtained and, therefore, the occupants' emotional state to be estimated.

These results have positive implications for the automobile industry, enabling CAVs to understand how we feel and to use that information to make the system more empathic, responding to the occupants' emotions in real time and therefore enhancing CAV acceptance.

Future tests with subjects in the immersive Human Autonomous Vehicle (HAV) will allow the SUaaVE project to generate a reliable emotional model that is more sensitive to differences in gender, driving experience and personal profile.

ACKNOWLEDGEMENTS

The paper presents the overall objective and the methodology of the project SUaaVE (SUpporting acceptance of automated VEhicle), funded by the European Union’s Horizon 2020 Research and Innovation Programme under Grant Agreement No 814999.

REFERENCES

Bradley, M. M., & Lang, P. J. (1994). Measuring emotion:

The self-assessment manikin and the semantic

differential. Journal of behavior therapy and

experimental psychiatry, 25(1), 49–59.

Chanel, G., Ansari-Asl, K., & Pun, T. (2007). Valence-

arousal evaluation using physiological signals in an

emotion recall paradigm. 2007 IEEE International

Conference on Systems, Man and Cybernetics, 2662–

2667.

Eyben, F., Wöllmer, M., Poitschke, T., Schuller, B.,

Blaschke, C., Färber, B., & Nguyen-Thien, N. (2010).

Emotion on the road—Necessity, acceptance, and

feasibility of affective computing in the car. Advances

in human-computer interaction, 2010.

Harmon-Jones, E., & Winkielman, P. (2007). Social

neuroscience: Integrating biological and psychological

explanations of social behavior. Guilford Press.

Holzinger, A., Bruschi, M., & Eder, W. (2013). On

interactive data visualization of physiological low-cost-

sensor data with focus on mental stress. International

Conference on Availability, Reliability, and Security,

469–480.

Jirayucharoensak, S., Pan-Ngum, S., & Israsena, P. (2014).

EEG-based emotion recognition using deep learning

network with principal component based covariate shift

adaptation. The Scientific World Journal, 2014.

Laparra Hernández, J., Izquierdo Riera, M. D., Medina

Ripoll, E., Palomares Olivares, N., & Solaz Sanahuja,

J. S. (2019). Dispositivo Y Procedimiento De Vigilancia

Del Ritmo Respiratorio De Un Sujeto.

https://patentscope.wipo.int/search/es/detail.jsf?docId=WO2019145580&tab=PCTBIBLIO

Russell, J. A. (1980). A circumplex model of affect. Journal

of personality and social psychology, 39(6), 1161.

Sensum. (2020, September 21). Empathic AI for smart mobility, media & technology. https://sensum.co

Shu, L., Xie, J., Yang, M., Li, Z., Li, Z., Liao, D., Xu, X.,

& Yang, X. (2018). A review of emotion recognition

using physiological signals. Sensors, 18(7), 2074.

Stickel, C., Ebner, M., Steinbach-Nordmann, S., Searle, G.,

& Holzinger, A. (2009). Emotion detection:

Application of the valence arousal space for rapid

biological usability testing to enhance universal access.

International Conference on Universal Access in

Human-Computer Interaction, 615–624.

Tan, H., & Zhang, Y.-J. (2006). Detecting eye blink states

by tracking iris and eyelids. Pattern Recognition

Letters, 27(6), 667–675.

Valenza, G., Citi, L., Lanatá, A., Scilingo, E. P., & Barbieri,

R. (2014). Revealing real-time emotional responses: A

personalized assessment based on heartbeat dynamics.

Scientific reports, 4, 4998.
