Solving Linear Programming While Tackling Number Representation

Issues

Adrien Chan-Hon-Tong

a

ONERA, Universit

´

e Paris Saclay, France

Keywords:

Linear Programming, Polynomial Time Complexity, Number Representation.

Abstract:

Gaussian elimination is known to be exponential when done naively. Indeed, theoretically, it is required to

take care of the intermediary numbers encountered during an algorithm, in particular of their binary sizes.

However, this point is weakly tackled for linear programming in state of the art. Thus, this paper introduces

a new polynomial times algorithm for linear programming focusing on this point: this algorithm offers an

explicit strategy to deal with all number representation issues. One key feature which makes this Newton

based algorithm more compliant with binary considerations is that the optimization is performed in the so-

called first phase of Newton descent and not in the so-called second phase like in the state of the art.

1 INTRODUCTION

Linear programming is a central optimization prob-

lem which aims to solve:

min

x∈Q

N

, Ax≥b

c

T

x (1)

where A ∈ Z

M×N

is a matrix and b ∈Z

M

, c ∈ Z

N

two

vectors with M being the number of constraints/rows

of A and N the number of variables/columns of A.

Today, the state of the art is central-path log-

barrier (Nesterov and Nemirovskii, 1994) and/or

path-following (Renegar, 1988) algorithms which

solves linear programs with total binary size L in less

than

e

O(

√

ML) Newton steps (assuming N = O(M)).

As each Newton step is mainly the resolution of an

M ×M linear system, the arithmetic time complex-

ity of those algorithms is

e

O(M

ω

√

ML) (

e

O(.) notation

will be used instead of O(.) to express the fact that log

factors are omitted) where ω is the coefficient of ma-

trix multiplication i.e. 3 with simple algorithm but

2.38 with (Ambainis et al., 2015). Faster random-

ized algorithms like (Cohen et al., 2021) are not in

the scope of this paper.

However, considering that matrix inversion can be

done in

e

O(M

ω

) operations is only half of the story. It

is true by considering operation on Z or Q as 1 oper-

ation. But, from theoretical point of view, either op-

erations are realized with fixed precision (opening the

a

https://orcid.org/0000-0002-7333-2765

door to numerical instabilities), or, all numbers have

to be represented either by an arbitrary large integer

or a fraction of arbitrary large integer. But, in this

setting, it is required to count the number of binary

operations. Even more, it is required to take care of

the binary sizes of the number that appear during the

algorithm to avoid bad binary time complexity like in

naive Gaussian elimination which is exponential for

this reason (Fang and Havas, 1997).

Precisely, a binary-compliant algorithm for linear

programming should have all the 3 following features:

• a good arithmetic time complexity

• an explicit rounding strategy for the intermediary

numbers

• which maintains the binary size of those numbers

bounded by

e

O(L)

Typically, Simplex-like algorithms (Dantzig, 1955)

have no binary issues because the intermediary points

are related to a linear subsystem of the input con-

straints but they have not a good arithmetic time com-

plexity. Inversely, most state of art methods rely on

scaling, i.e. at some point in the algorithm, there is

an operation like A = A(I + xx

T

) in (Pe

˜

na and So-

heili, 2016), or column(A,k) =

1

2

×column(A,k) in

(Chubanov, 2015) or µ =

1

2

×µ in (Anderson et al.,

1996) (a classical implementation for central path log

barrier). Yet, structurally, even if one could set up an

explicit rounding strategy, those algorithms will not

respect the property of having intermediary numbers

40

Chan-Hon-Tong, A.

Solving Linear Programming While Tackling Number Representation Issues.

DOI: 10.5220/0010812300003117

In Proceedings of the 11th International Conference on Operations Research and Enterprise Systems (ICORES 2022), pages 40-47

ISBN: 978-989-758-548-7; ISSN: 2184-4372

Copyright

c

2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

bounded by

e

O(L) as one variable scaled twice each of

the

√

ML steps is finally scaled by 2

√

ML

.

Currently, from state of the art algorithms, only

(Renegar, 1988) has both the first and the third fea-

tures because in (Renegar, 1988) scaling is performed

with a factor (1 +

O(1)

√

M

) ( (1 +

O(1)

√

M

)

√

ML

≈ 2

log(e)L

so this scaling still leads to a binary size of

e

O(L)).

Yet, (Renegar, 1988) only offers a sketch of strategy

to round intermediary numbers, and, admits that it

sketch depends on several implicit constants. Thus,

there is not known algorithm with the 3 features al-

lowing to be fully binary-compliant.

Yet, this paper introduces a new algorithm related

to interior point algorithms but with those 3 features.

Currently, it requires

e

O(ML) steps against

e

O(

√

ML)

for (Renegar, 1988; Nesterov and Nemirovskii, 1994).

But, it is scaling free with variable sizes naturally

bounded by

e

O(L). And, the internal variables can be

explicitly rounded to integer at the end of each New-

ton steps (potentially a final phase is required but this

final phase only last at most

e

O(log(L)) so it is negli-

gible).

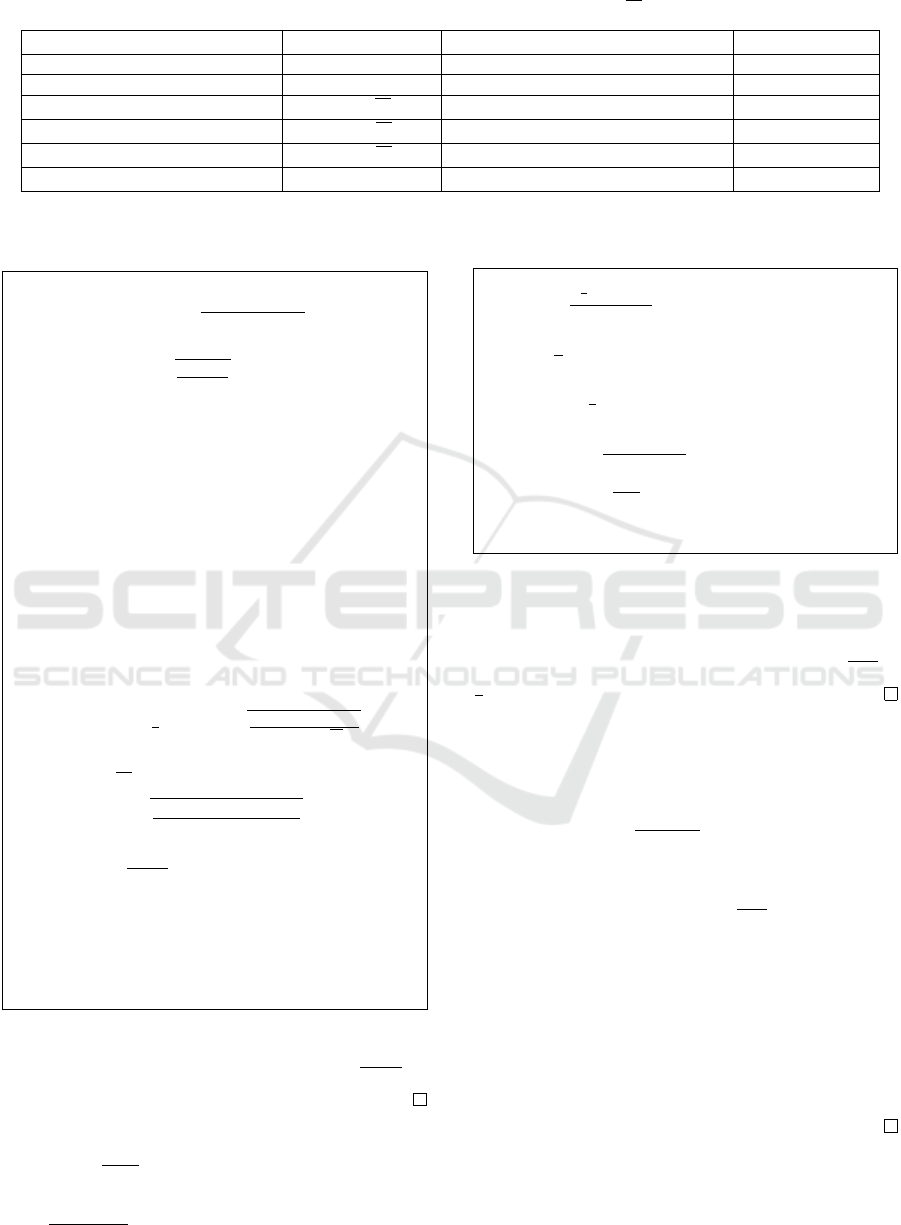

This situation is summarized by Table 1.

Finally, this algorithm also takes care of round-

ing/approximating operations which could lead to ir-

rational numbers (typically

√

2 has no binary num-

ber representation, so rounding should be used in-

stead), as, the main interest of this paper is to tackle

number representation issues. Independently, the of-

fered algorithm can be seen as a self concordant ver-

sion of Perceptron (Rosenblatt, 1958) and/or (Pe

˜

na

and Soheili, 2016), it is faster than (Pe

˜

na and Soheili,

2016) which requires

e

O(M

2

√

ML) Perceptron steps

i.e.

e

O(M

4

√

ML) arithmetic operations.

2 SELF CONCORDANT

PERCEPTRON

The offered algorithm is described in table 2.

Currently, this algorithm solves linear feasibility

a simpler form of linear programming which consists

to solve:

find x ∈X

A

= {χ ∈ Q

N

, Aχ > 0} (2)

for a given matrix A ∈ Z

M×N

such that X

A

6=

/

0 and

0 is the vector with all 0. Trivially, this problem is

equivalent to find x such that Ax ≥1 with 1 the vector

filled by 1. Importantly, not all matrix A could be

encountered when solving linear feasibility because

the problem assumes X

A

6=

/

0. Typically, the algorithm

offered in table 2 may have an undefined behavior if

the input matrix A does not verify X

A

6=

/

0.

But, despite this linear feasibility problem may

seem very specific, it is theoretically equiva-

lent with general linear programming: some well

known strongly polynomial times pre/post processing

(mainly based on duality theory) allows to solve any

linear programming instance by solving a linear fea-

sibility instance with equivalent size and total binary

size and X

A

6=

/

0. For completeness, these well known

equivalences are recalled in appendix.

Finally, a simplified version of the algorithm

which will be useful for proving the convergence is

provided in table 3 (yet it does not verify the binary-

compliant features). This simplified version allows to

see that the offered self concordant Perceptron is just

Newton descent on the function

F

A

(v) =

1

2

v

T

AA

T

v −

∑

m∈{1,...,M}

log(v

m

) (3)

3 PROOFS

3.1 Convergence

Theorem from (Nemirovski, 2004):

If Ψ(x) is a self concordant function (mainly sum

of quadratic, linear, constant and −log term), with a

minimum Ψ

∗

, then, the Newton descent starting from

x

start

allows to compute x

ε

such that Ψ(x

ε

) −Ψ

∗

≤ ε

in

e

O(Ψ(x

start

) − Ψ

∗

+ log log(

1

ε

)) damped Newton

steps. Each step simply relies on two linear algebra

operations: λ

Ψ

(x) ←

p

(∇

x

Ψ)

T

(∇

2

x

Ψ)

−1

(∇

x

Ψ) and

x ←x −

1

1+λ

Ψ

(x)

(∇

2

x

Ψ)

−1

(∇

x

Ψ). Precisely,

• While λ

Ψ

(x) ≥

1

4

, each damped Newton step de-

creases Ψ of at least

1

4

−log(

5

4

) ≥

1

50

. This so

called first phase can not last more than 50 ×

(Ψ(x

start

) −Ψ

∗

) damped Newton steps.

• As soon as one has computed any x

phase

with

λ

Ψ

(x

phase

) ≤

1

4

, then,

e

O(loglog(

1

ε

)) additional

steps are required to get x

ε

such that Ψ(x

ε

) −

Ψ

∗

≤ ε. This is the so called second phase with

quadratic convergence (i.e. log log(ε) steps lead

to a precision ε).

Lemma 1:

∀A ∈ Q

M×N

, x ∈ Q

N

such that Ax ≥ 1, and, v ≥ 0,

then,

||v||

2

2

||x||

2

2

≤ ||A

T

v||

2

2

.

Proof. Cauchy inequality applied to x

T

(A

T

v) gives:

x

T

(A

T

v) ≤ ||x||

2

×||A

T

v||

2

.

But, x

T

(A

T

v) = (Ax)

T

v ≥1

T

v as v ≥0 and Ax ≥1.

Thus, 1

T

v ≤ ||x||

2

×||A

T

v||

2

.

Solving Linear Programming While Tackling Number Representation Issues

41

Table 1: Self concordant Perceptron offers both a mastered internal binary size, and, an explicit rounding strategy while

having an arithmetic time complexity only higher than the state of the art by a factor

√

M.

Algorithm Q time complexity internal binary size bounded by

e

O(L) explicit rounding

(Rosenblatt, 1958) exponential no no

(Dantzig, 1955) exponential yes yes

(Pe

˜

na and Soheili, 2016)

e

O(M

4

√

ML) no no

(Anderson et al., 1996)

e

O(M

ω

√

ML) no no

(Renegar, 1988)

e

O(M

ω

√

ML) yes no

Self concordant Perceptron (this)

e

O(M

ω

ML) yes yes

Table 2: Self concordant Perceptron solves linear feasibility

in

e

O(ML) steps with mainly integer computations.

Algorithm(A)

1. Γ ← int(1000M

q

Mmax

m

A

m

A

T

m

) + 1

2. w ←

int

Γ

q

M

1

T

AA

T

1

+ 1

×1

3. if AA

T

w > 0 return w

4. H ←

w

2

1

0 ...

0 ... 0

... 0 w

2

M

AA

T

+ Γ

2

I

5. h ←Γ

2

×w

−

w

2

1

0 ...

0 ... 0

... 0 w

2

M

AA

T

w

6. solve the integer linear system HN = h

//N may be fractional

7. λ

2

← |N

T

h|

8. compute θ,

1

2

θ ≤ 1 +

q

λ

2

(1000)

3

M

4

√

Mϒ

3

≤ θ

9. if λ

2

>

Γ

3

16

go to 10 else go to 13

10. q ←int

q

(w+θN )

T

AA

T

(w+θN )

MΓ

2

+ 1

11. w ←int(

v+θN

q

) + 1

12. go to 3

13. w ←v + θN

// no rounding for the so-called second phase

14. go to 3

As each side is positive, one could take the square

(and push ||x||

2

to the left), this gives

(1

T

v)

2

||x||

2

2

≤

||A

T

v||

2

2

. Yet, as v ≥0, v

T

v ≤ (1

T

v)

2

.

Lemma 2:

Let f (t) =

1

2||x||

2

2

t

2

−log(t) with any vector x with

||x|| ≥ 1, then, f is lower bounded with a minimum

f

∗

=

1−log(||x||

2

)

2

≥ −log(||x||

2

).

Table 3: A simplified version of the self concordant Percep-

tron algorithm.

Simplified Algorithm(A)

ϒ =

q

max

m

A

m

A

T

m

v ←

1

ϒ

1

while ¬(AA

T

v > 0) do

F ←

1

2

v

T

AA

T

v −

∑

m

log(v

m

)

N ← (∇

2

v

F)

−1

(∇

v

F)

λ ←

p

(∇

v

F)

T

N

v ← v −

1

1+λ

N

end while

return v

Proof. f is a continuous function from ]0,∞[ to R.

f (t) →

t→∞

∞ due to the −log, and, f (t) →

t→∞

∞ due to

the t

2

. So, f is lower bounded with a minimum. As f

is smooth, this minimum is solution of f

0

(t) =

t

||x||

2

2

−

1

t

= 0 i.e. t

∗

= ||x||

2

and f

∗

= f (||x||

2

).

Lemma 3:

Assume X

A

6=

/

0, F

A

is lower bounded - with F

A

the

function defined in equation (3) i.e.

F

A

(v) =

v

T

AA

T

v

2

−

∑

m

log(v

m

)

Proof. If X

A

6=

/

0, then, ∃x,Ax ≥ 1. But, following

lemma 1, it holds that F

A

(v) ≥

v

T

v

2x

T

x

−

∑

m

log(v

m

) =

∑

m

f (v

m

) (with the function f introduced in lemma 2).

So, F

A

(v) ≥

∑

m

f

∗

≥ −M log(||x||

2

) following lemma

2. Finally, as for all m, F

A

(v) ≥ f (v

m

) + (M −1) f

∗

and f (t) →∞ in 0 or ∞, then, it means F can not admit

an infimum on the border of ]0,∞[

M

. So the property

of being lower bounded (by M f

∗

) without infimum at

the border implies that F

A

has a minimum F

∗

A

, and so

F

∗

A

≥ M f

∗

.

From now, the assumption that X

A

6=

/

0 will be omitted.

Remark: As F

A

(defined in eq.(3) has a minimum

when X

A

6=

/

0 (lemma 3), then, theorem from (Ne-

ICORES 2022 - 11th International Conference on Operations Research and Enterprise Systems

42

mirovski, 2004) holds in this case: the damped New-

ton descent on F

A

from any v

start

will provide v such

that F

A

(v) − F

∗

A

≤ ε in less than F

A

(v

start

) − F

∗

A

+

loglog(

1

ε

).

Lemma 4:

F

A

(v) −F

∗

A

≤ min

m

1

v

2

m

A

m

A

T

m

+1

⇒ AA

T

v > 0

Proof. Let assume that there exists k such that

A

k

A

T

v ≤0, and, let introduce w = v+t1

k

i.e. w

m

= v

m

if m 6= k and w

k

= v

k

+t.

F

A

(w

k

) =

1

2

(v + t1

k

)

T

AA

T

(v + t1

k

) −

∑

m

log(v

m

) +

log(v

k

) −log(v

k

+ t) = F

A

(v) + tA

k

A

T

v +

1

2

t

2

A

k

A

T

k

−

log(v

k

+ t) + log(v

k

). But, A

k

A

T

v ≤ 0, so F

A

(w

k

) ≤

F

A

(v) +

1

2

t

2

A

k

A

T

k

− log(v

k

+ t) + log(v

k

), and, it is

clear that for 0 ≤t 1, F

A

(w

k

) < F

A

(v) (because this

is −log(v

k

+t) at first order).

Precisely, one could define Φ(t) = F

A

(v) +

1

2

t

2

A

k

A

T

k

− log(v

k

+ t) + log(v

k

). Then, Φ

0

(t) =

A

k

A

T

k

t −

1

t+v

k

and Φ

00

(t) = A

k

A

T

k

+

1

(t+v

k

)

2

and

Φ

000

(t) = −

2

(t+v

k

)

3

. As, Φ

000

(t) ≤ 0 and t ≥ 0, Φ(t) ≤

Φ(0) +tΦ

0

(0) +

t

2

2

Φ

00

(0) i.e.

Φ(t) ≤ −

t

v

k

+

t

2

2

(A

k

A

T

k

+

1

v

2

k

)

In particular, for t =

v

k

v

2

k

×A

k

A

T

k

+1

, F

A

(w) ≤ F

A

(v) −

1

2

1

v

2

k

×A

k

A

T

k

+1

. But, this is not possible if F

A

(v) is closer

than F

∗

A

by this value.

Lemma 5:

Assume Ax ≥1, then F

A

(v) ≤F

A

(v

start

) ⇒v ≤||x||

2

×

(1 + ||x||

2

(F

A

(v

start

) + M log(||x||

2

)) ×1.

Proof. From lemma 2, F

A

(v) ≥

∑

m

f (v

m

) with f (t) =

t

2

2||x||

2

2

−log(t). Thus, for all k, F

A

(v) ≥ f (v

k

) + (M −

1) f

∗

(and f

∗

= −log(||x||

2

2

) see lemma 2). So F(v) ≤

F

A

(v

start

) ⇒ f (v

k

) ≤ F(v

start

) + M log(||x||

2

2

).

But, ∀t ≥ t

∗

,∃θ, f (t) = f (t

∗

) + (t −t

∗

) f

0

(t

∗

) +

1

2

(t −t

∗

)

2

f

00

(t

∗

)+

1

6

(t −t

∗

)

3

f

000

(θ). Yet f

000

(t) < 0 and

f

0

(t

∗

) = 0, so it holds that f (t) ≥

1

2

(t −t

∗

)

2

f

00

(t

∗

) =

(t−t

∗

)

2

||x||

2

2

.

So f (t) ≤ F

A

(v

start

) + M log(||x||

2

) ⇒ t ≤ t

∗

+

||x||

2

p

F

A

(v

start

) + M log(||x||

2

). Applying this last

inequality to each component of v provide the ex-

pected inequality.

Theorem 1:

Damped Newton descent on F

A

starting from

any v

start

will terminate eventually return-

ing v such that AA

T

v > 0 - precisely this

will happen at least when F

A

(v) − F

∗

A

≤

min

m

1

(||x||

2

2

×(1+||x||(F(v

start

)+M log(||x||

2

)))

2

A

m

A

T

m

+1

Proof. This is just lemma 4 + lemma 5

3.2 Complexity

Definition for the rest of this paper,

ϒ

A

=

q

max

m

A

m

A

T

m

(4)

In particular, F

A

(

1

ϒ

A

1) ≤ M

2

+ M log(ϒ

A

) (from

Cauchy inequality).

Theorem from (Khachiyan, 1979):

There are standard complexity results about the link

between the total binary size L and the determinant

of a matrix since (Khachiyan, 1979). Typically, as-

suming X

A

6=

/

0, there exists x such that Ax ≥ 1. Even

more, one such x can be expressed as linear system

extracted from A. So, log(||x||

2

2

) =

e

O(L) where L is

the total binary size of input matrix A. Also, trivially,

log(ϒ

A

) =

e

O(L).

Theorem 2:

The simplified self concordant Perceptron presented

in table 3 solves linear feasibility in less than

e

O(ML)

Newton steps.

Importantly, the so-called second phase of the

Newton descent is negligible.

Proof. The proof is mainly the theorem from (Ne-

mirovski, 2004) with the correct value for v

start

, F

∗

A

,ε.

First, theorem 1 provides the correct value for ε

(such that the algorithm outputs v with AA

T

v > 0).

Now, the ε is into a double log resulting in negligible

e

O(log(L)) complexity.

Thus, contrary to the state of the art, almost all

the algorithm takes place in the so called first phase

which lasts

e

O(F

A

(v

start

) − F

∗

A

) =

e

O(ML) damped

Newton steps. Now, v

start

=

1

ϒ

A

1, so F

A

(v

start

) =

M

2

+M log(ϒ

A

) =

e

O(ML) and −F

∗

≤M log(||x||

2

2

) =

e

O(ML) from definition of ϒ

A

, and, lemma 2, and,

from standard complexity results.

At this point, this paper proves that the simpli-

fied self concordant Perceptron (table 3) solves linear

feasibility in

e

O(ML) damped Newton steps. How-

ever, this result is not really interesting by itself: it

is almost a corollary of self concordant theory from

(Nemirovski, 2004), and, better algorithms exists (re-

quiring only

e

O(

√

ML) steps e.g. (Nesterov and Ne-

mirovskii, 1994)). Yet the interesting point of the pa-

per is the binary property of the complete self concor-

dant Perceptron (table 2), and, proven bellow.

Solving Linear Programming While Tackling Number Representation Issues

43

3.3 Binary Property

Lemma 6:

In damped Newton step,

1

1+λ

can be replace by a 2

approximation.

Proof. F

A

is convex so F

A

(v −θ(∇

2

v

F

A

)

−1

(∇

v

F

A

)) ≤

1

2

(F(v) + F

A

(v −

1

1+λ(v)

(∇

2

v

F

A

)

−1

(∇

v

F

A

))) if

1

2

1

1+λ(v)

≤ θ ≤

1

1+λ(v)

Lemma 7:

F

A

(

q

M

v

T

AA

T

v

× v) < F

A

(v) - or with integer -

F

A

1

int(

q

v

T

AA

T

v

M

)+1

v

!

≤ F

A

(v)

Proof. Considering the function t → F

A

(t ×v) triv-

ially proves that F

A

(v) decreases when v is normalized

such that v

T

AA

T

v goes closer to M.

The rounded version

1

int(

q

v

T

AA

T

v

M

)+1

only ma-

nipulate integer and guarantees that v

T

AA

T

v ∈

[

M

4

,4M].

Definition for the rest of this paper,

Γ

A

= 1000M

√

Mϒ

A

(5)

Theorem 3:

Assume that v

T

AA

T

v ≤ 4M, then:

∀ϖ ∈

0,

1

Γ

A

M

, F(v + ϖ) ≤ F(v) +

1

200

In particular, ∀v,

F

int(Γ

A

×v

1

)+1

Γ

A

...

int(Γ

A

×v

M

)+1

Γ

A

≤ F(v) +

1

200

Proof. First, the log part only decreases when adding

ϖ ≥ 0, thus, only the quadratic part should be consid-

ered. So F(v + ϖ) ≤ F(v) +

1

2

ϖ

T

AA

T

ϖ + ϖ

T

AA

T

v.

But, A

T

ϖ =

∑

m

ϖ

m

A

T

m

so ||A

T

ϖ|| ≤

∑

m

ϖ

m

||A

T

m

|| ≤

||ϖ||

∞

Mϒ ≤

1

500

√

M

and ||A

T

ϖ||

2

= ϖ

T

AA

T

ϖ ≤

1

(1000)

2

M

.

So, ϖ

T

AA

T

v ≤

√

ϖ

T

AA

T

ϖ ×v

T

AA

T

v ≤

q

1

(500)

2

M

×4M ≤

1

250

(from Cauchy). And,

1

2

ϖ

T

AA

T

ϖ =≤

1

2×(1000)

2

M

≤

50

1000

. Thus, it holds that

F(v + ϖ) ≤ F(v) +

1

200

.

Then, int(t) +1 is a special case of t +τ,τ ∈ [0, 1],

so the offered rounding scheme correspond to add ϖ ∈

h

0,

1

Γ

A

i

M

.

Theorem 4:

The self concordant Perceptron presented in table 2 is

consistent with the simplified version (table 3), and,

adds:

• normalizing of v such that v

T

AA

T

v ≤ 4M

• 2 approximation of

1

1+λ

• rounding all components of v with a common de-

nominator of Γ

A

• and with w being the numerator after rounding

(could be seen as substitution w = Γ

A

×v)

and, thus, this algorithm converges like the simplified

version presented in table 3.

Proof. First, normalizing v is not an issue as it de-

creases F

A

as proven in lemma 7.

Then, as recalled in theorem from (Nemirovski,

2004), each damped Newton step (during the so called

first phase) decreases F

A

by at least

1

50

. Approximat-

ing of

1

1+λ

adds a factor

1

2

(see lemma 6).

Yet, as pointed in theorem 3, rounding on a com-

mon denominator of Γ

A

only increases F

A

by

1

200

.

So, combining damped Newton step with approx-

imation of

1

1+λ

+ normalization + rounding decreases

F

A

by at least

1

200

=

1

50

×

1

2

−

1

200

(

1

50

for the origi-

nal damped Newton step,

1

2

due to the approximation,

−

1

200

due to the rounding). From complexity point of

view, the conclusion is that the complete step of self

concordant Perceptron decreases F

A

by O(1).

Finally, to give an explanation of the steps

4 and 5 of algorithm table 2, ∇

v

F

A

= AA

T

v −

1

v

1

...

1

v

M

So (Γ

A

)

3

v

1

... 0

0 ... 0

0 ... v

M

2

(∇

v

F

A

) =

−h And, ∇

2

v

F

A

= AA

T

+

1

v

1

... 0

0 ... 0

0 ...

1

v

M

2

, so,

(Γ

A

)

2

v

1

... 0

0 ... 0

0 ... v

M

2

(∇

2

v

F

A

) = H

This way, N computed in step 6 of algorithm table

2 is the Newton direction adapted to w = Γ ×v.

So, the self concordant Perceptron from table 2

has exactly the same property than the simplified ver-

sion of table 3.

4 CONCLUSIONS

This paper introduces an algorithm for linear pro-

gramming with arithmetic complexity of

e

O(ML)

ICORES 2022 - 11th International Conference on Operations Research and Enterprise Systems

44

Newton steps (higher from the state of the art by a

factor

√

M). But, this algorithm has good binary prop-

erty: it keeps the binary size of intermediary numbers

bounded by

e

O(L), and, offers an explicit strategy for

rounding all intermediary numbers (see table 1).

REFERENCES

Ambainis, A., Filmus, Y., and Le Gall, F. (2015). Fast

matrix multiplication: limitations of the coppersmith-

winograd method. In Proceedings of the forty-seventh

annual ACM symposium on Theory of Computing,

pages 585–593.

Anderson, E. D., Gondzio, J., M

´

esz

´

aros, C., and Xu, X.

(1996). Implementation of interior-point methods for

large scale linear programs. In Interior Point Meth-

ods of Mathematical Programming, pages 189–252.

Springer.

Chubanov, S. (2015). A polynomial projection algorithm

for linear feasibility problems. Mathematical Pro-

gramming, 153(2):687–713.

Cohen, M. B., Lee, Y. T., and Song, Z. (2021). Solving

linear programs in the current matrix multiplication

time. Journal of the ACM (JACM), 68(1):1–39.

Dantzig, G. B. e. a. (1955). The generalized simplex method

for minimizing a linear form under linear inequality

restraints. In Pacific Journal of MathematicsAmerican

Journal of Operations Research.

Fang, X. G. and Havas, G. (1997). On the worst-case com-

plexity of integer gaussian elimination. In Proceed-

ings of the 1997 international symposium on Symbolic

and algebraic computation, pages 28–31.

Khachiyan, L. (1979). A polynomial algorithm for linear

programming. Doklady Akademii Nauk SSSR.

Nemirovski, A. (2004). Interior point polynomial time

methods in convex programming. Lecture notes,

42(16):3215–3224.

Nesterov, Y. and Nemirovskii, A. (1994). Interior-point

polynomial algorithms in convex programming. Siam.

Pe

˜

na, J. and Soheili, N. (2016). A deterministic rescaled

perceptron algorithm. Mathematical Programming,

155(1-2):497–510.

Renegar, J. (1988). A polynomial-time algorithm, based on

newton’s method, for linear programming. Mathemat-

ical programming, 40(1):59–93.

Rosenblatt, F. (1958). The perceptron: a probabilistic model

for information storage and organization in the brain.

Psychological review, 65(6):386.

APPENDIX

Equivalence of Linear Programming and

Linear Feasibility

This paper provides an algorithm algo

0

which re-

turns v such that AA

T

v > 0 on an input A assum-

ing ∃x, Ax ≥ 1 (undefined behaviour otherwise - v

is positive but this does not matter). Trivially, it is

thus possible to form algo

1

which returns x such that

Ax > 0 on input A assuming such x exists by returning

A

T

algo

0

(A) (undefined behaviour otherwise).

• Thank to algo

1

, one could form algo

2

(A,b) which

returns x such that Ax > b assuming such x ex-

ists (undefined behaviour otherwise). Indeed, let

consider any A,b such that ∃x, Ax > b, finding

such x is equivalent to find a pair x,t such that

Ax −t ×b > 0 and t > 0, because

x

t

is then a solu-

tion of the original problem. Formally, let A

1

the

matrix A plus 1 as additional column and (0 1)

as additional row. Thus, one can get (x

1

t

1

) by

computing algo

1

(A) and returning

x

1

t

1

as output of

algo

2

(A,b).

Importantly, only constant number of vari-

ables/constraints are added, and, binary size is not

increased. So complexity of algo

2

(A,b) is the

same than algo

1

(A,b).

• Thank to algo

2

, one could form algo

3

(A,b) which

returns x such that Ax ≥b assuming such x exists.

Indeed, if ∃x/Ax ≥ b, then a fortiori ∃x,t such

that Ax + t1× > b, 0 < t <

1

Ω(A)

(with Ω(A) the

maximal subdeterminant of A). So, one could call

algo

1

on A

2

,b

2

with A

2

being A plus 1 column

plus a row with 0 and Ω(A) and b

2

being b plus

two 1. Thus, algo

2

(A

2

,b

2

) = x

2

,t

2

.

Now, one could consider greedy improvement

of min t

x,t, Ax+t1≥b,t≥0

initialized from (x

2

,t

2

). Such

greedy improvement can be performed by project-

ing (x,t) on {(x,t), Ax + t1 ≥ b} while minimiz-

ing t. One such greedy step can simply be done

by looking for χ, τ such that A

S

χ + t1

S

= 0 and

τ = −1 with S the saturated rows in Ax + t1 ≥ b.

If no such χ,τ exists, the greedy improvement

has terminated otherwise one could do (x,t) ←

(x +µχ,t +µτ) with µ such that Ax +t1 ≥b,t ≥0.

There will be no more than M such greedy purifi-

cation because one row enter the saturated ones at

each step.

When this greedy process terminates, this leads to

ˆx,

ˆ

t with A ˆx +

ˆ

t1 ≥ b, 0 ≤

ˆ

t ≤ t

2

<

1

Ω(A)

but ˆx,

ˆ

t is

a vertex of A. So Cramer rule applies, and so

ˆ

t =

Solving Linear Programming While Tackling Number Representation Issues

45

Det(S

t

)

Det(S)

with S a sub matrix of A and S

t

the Cramer

partial submatrix related to t. But

ˆ

t ≤ t

2

≤

1

Ω(A)

,

so

ˆ

t = 0, and thus, A ˆx ≥ b. So, this projection of

x

2

,t

2

gives x

3

= algo

3

(A,b).

Importantly, the binary size of A

2

,b

2

is just twice

the binary size of A,b because log(Ω(A)) ≤L(A),

so L(A

2

) = L(A) + log(Ω(A)) ≤ 2L(A) and only

a constant number of variables constraints are

added. so complexity of algo

3

(A,b) is the same

than algo

2

(A,b).

• Let any A,b - without assumption - solving Ax ≥

b (or producing a certificate that no solution ex-

ists) is equivalent to solve min

z /Az+t1≥b,t≥0

t (there is

a solution if the minimum is 0). Yet, this last lin-

ear program is structurally feasible (x = 0 and a

sufficiently large t provided a feasible point) and

bounded because t ≥ 0. Thus, primal dual theory

gives a system A

primal−dual

(x y) ≥ b

primal−dual

whose solution contains solution of the linear pro-

gram min

z /Az+t1≥b,t≥0

t.

Applying algo

3

(A

primal−dual

,b

primal−dual

) pro-

vides thus such x

primal−dual

, y

primal−dual

from

which one could restore x

3

,t

3

with either t

3

= 0

and so Ax

3

≥ b or t

3

6= 0 (this is a certificate).

This leads to an algorithm algo

4

which is able to

find x such that Ax ≥b (or to produce a certificate

that no solution exists) without assumption on A, b

about the existence or not of such x.

Importantly, the number of variables-constraints

is only scaled two folds when computing the pri-

mal dual, so from theoretical point of view, it

does not change the complexity between algo

4

and algo

3

.

• Finally, for any A,b,c without any assumption

solving min

x /Ax≥b

c

T

x can be done with 2 algo

4

calls

and one algo

3

call:

– one to know if the problem is feasible i.e.

algo

4

(A,b)

– one on the dual to known if it is bounded

algo

4

(A

dual

,b

dual

)

– and one call to algo

3

on the primal dual to get

the optimal solution (if previous two compu-

tations certify that the problem is feasible and

bounded).

Again, from theoretical point of view, the com-

plexity does not change: it only does 3 calls on

instances only scaled 2 times. At the end, it re-

turns the optimal solution or a certificate that the

problem is not feasible or not bounded.

Thus, an algorithm algo

0

which returns v such that

AA

T

v > 0 on input A if there exists such v (undefined

behaviour otherwise) allows to build with same com-

plexity algo

5

(A,b,c) which solves general linear pro-

gramming.

The opposite way is trivial algo

5

(AA

T

,1,0) is a

correct implementation of algo

1

(A) for any A.

ICORES 2022 - 11th International Conference on Operations Research and Enterprise Systems

46

Table 4: The sequences of pre/post processing connecting

linear feasibility and linear programming.

Assume algo

1

(A) takes A and returns one x such that

Ax > 0 if one exists, then:

algo

2

(A,b)

xt ← algo

1

A −b

0 1

return xt[: −1]/xt[−1] //python convention

takes A,b and returns one x with Ax > b if one exists.

algo

3

(A,b)

Γ ← Hadamard bound on A

xt = algo

2

A 1

0 t

0 −Γ

,

b

0

−1

x

2

,t

2

← xt[: −1],xt[−1]

S ←{m,A

m

x

2

+t

2

= b

m

}

while ∃χ,A

S

χ = 1 do

x

2

← x

2

+ λχ , t

2

← x

2

−λ

with λ maximal such that Ax

2

+t

2

1 ≥ b

S ←{m,A

m

x

2

+t

2

= b

m

}

end while

return x

2

takes A,b and returns one x with Ax ≥ b if one exists.

algo

4

(A,b)

A

p

←

A 1

0 1

, b

p

←

b

0

, c

p

← (0 1)

compute A

dual

,b

dual

,c

dual

with duality theory

χ ← algo

3

A

p

0

0 A

dual

c

p

−c

dual

−c

p

c

dual

,

b

p

b

dual

0

0

x ←χ[: M], t ← χ[M]

return x,t

takes A, b returns one x,t such that t > 0 means that

there is no Ax ≥b, and, t = 0 means that Ax ≥ b.

algo

5

(A,b,c)

compute A

dual

,b

dual

,c

dual

with duality theory

x,t ← algo

4

(A,b)

y,τ ← algo

4

(A

dual

,b

dual

)

if t > 0 or τ > 0 then

return infeasible (t > 0) or unbounded (τ > 0)

else

χ ←algo

3

A 0

0 A

dual

c −c

dual

−c c

dual

,

b

b

dual

0

0

return χ[: M]

end if

is a standard linear programming solver.

Solving Linear Programming While Tackling Number Representation Issues

47