INTERACTIVITY WITHIN IMS LEARNING DESIGN AND QUESTION AND TEST INTEROPERABILITY

Onjira Sitthisak, Lester Gilbert, Mohd T Zalfan and Hugh C. Davis

Learning Technologies Group, School of Electronics and Computing Science

University of Southampton, Highfield

Southampton, SO17 1BJ, United Kingdom

Keywords: Interactivity, Formative assessment, Learning design, IMS, QTI.

Abstract: We examine the integration of IMS Question and Test Interoperability (QTI) and IMS Learning Design (LD) in implementations of E-learning from both pedagogical and technological points of view. We propose the use of interactivity as a parameter to evaluate the quality of assessment and E-learning, and assess various cases of individual and group study for their interactivity, ease of coding, flexibility, and reusability. We conclude that presenting assessments using IMS QTI provides flexibility and reusability within an IMS LD Unit Of Learning (UOL) for individual study. For group study, however, the use of QTI items may involve coding difficulties if group members need to wait for their feedback until all students have attempted a question, and QTI items may not be usable at all if the QTI services are implemented within a service-oriented architecture.

1 INTRODUCTION

E-learning can be viewed as the process of web-based or online learning within an open, flexible, and distributed learning environment (Westera et al., 2005). Although several web-based educational systems have been developed, they are ineffective at facilitating the reuse and sharing of educational content or activities (Sampson et al., 2006).

The IMS Learning Design (LD) specification was introduced (IMS LD, 2003) to promote the exchange and interoperability of E-learning materials and to support pedagogical diversity. It offers a standardized way to associate educational content, activities, and actors in the design of any teaching-learning process. Educational developers can use IMS LD to model who does what, when, and with which content and services in order to achieve the intended learning objectives.

The IMS QTI specification is used for exchanging assessment information such as questions, tests, and results. Like IMS LD, it aims to promote the exchange and interoperability of assessment materials and services (IMS QTI, 2006).

We examine current practice in integrating IMS LD and IMS QTI. IMS QTI can be integrated with IMS LD in a number of ways, which raises questions about the pedagogical effectiveness of each approach. In particular, an implementation may not sufficiently promote or control the interactivity that learners experience, or may produce ineffective interactivity within the teaching-learning process.

In this paper, we consider the presentation of assessment through IMS QTI within an IMS LD Unit Of Learning (UOL), and the interactivity that results. First, the role of assessment and interactivity in the teaching-learning process is explored. Second, the basic ideas of IMS QTI and IMS LD are described. Third, various implementation cases of assessment in IMS LD are explained and their problems identified. Finally, the joint use of IMS QTI and IMS LD is evaluated in terms of the improvement it offers in flexibility, reusability, and other parameters relevant to providing the best interactivity expressible within an LD UOL.


Sitthisak O., Gilbert L., T Zalfan M. and C. Davis H. (2007). INTERACTIVITY WITHIN IMS LEARNING DESIGN AND QUESTION AND TEST INTEROPERABILITY. In Proceedings of the Third International Conference on Web Information Systems and Technologies - Society, e-Business and e-Government / e-Learning, pages 440-445

DOI: 10.5220/0001269304400445

Copyright © SciTePress

2 INTERACTIVITY IN THE TEACHING AND LEARNING PROCESS

The level of interactivity, in terms of communication, participation, activity, and feedback, has a major impact on the quality of technology-enhanced learning. Consequently, “interactivity does not simply occur but must be intentionally designed” (Berge, 1999, p.5) into an E-learning system.

In the context of E-learning systems, a cycle of interactivity occurs when students are presented with a number of choices that require them to actively process the course information and materials, and are then given prompt, contingent, and specific feedback about their particular choice.

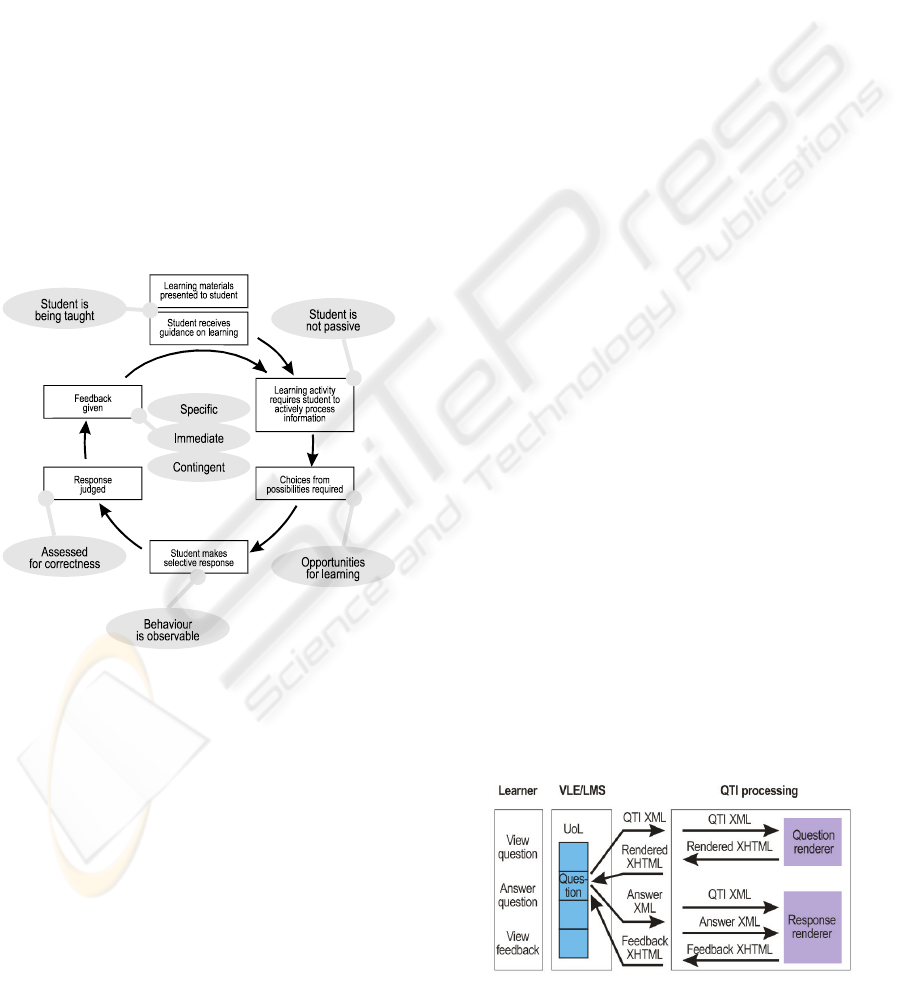

This view of interactivity is based upon principles from the psychology of learning. Figure 1 illustrates these key characteristics of the interactivity cycle (Gilbert and Gale, 2007).

Figure 1: Characteristics of the interactivity cycle.

Interactivity begins when the student is required to actively process the materials and information. To ensure this active processing, the student is posed a problem or question, or is asked to undertake an activity, that offers a number of options or choices. The student makes a choice and receives feedback about that choice. The interactivity cycle is then complete, and the student continues with the next learning activity.
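The cycle just described can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Question` class, the `interactivity_cycle` function, and the use of a callback to stand in for the student's choice are all hypothetical. What it shows is that the feedback returned is looked up from the particular choice made, so it follows from, and is specific to, that choice.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Question:
    """A hypothetical multiple-choice problem with per-choice feedback."""
    prompt: str
    choices: dict[str, str]   # choice id -> text shown to the student
    feedback: dict[str, str]  # choice id -> feedback written for that choice


def interactivity_cycle(question: Question,
                        choose: Callable[[str, list[str]], str]) -> str:
    """Run one pass of the interactivity cycle and return the feedback."""
    # 1. Pose a problem that offers a number of options, so the student
    #    has to process the material actively.
    options = list(question.choices)
    # 2. The student makes a choice (supplied here by a callback).
    choice = choose(question.prompt, options)
    if choice not in question.feedback:
        raise ValueError(f"no feedback defined for choice {choice!r}")
    # 3. Feedback is selected by that particular choice; the cycle then
    #    completes and the caller moves on to the next activity.
    return question.feedback[choice]


q = Question(
    prompt="2 + 2 = ?",
    choices={"a": "3", "b": "4"},
    feedback={"a": "Not quite: count again.", "b": "Correct."},
)
print(interactivity_cycle(q, lambda prompt, options: "a"))  # Not quite: count again.
```

Keying feedback by choice identifier is one simple way to make feedback contingent by construction; a choice with no feedback defined is treated as an error rather than silently returning a generic message.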

Rolfe and McPherson (1995, p. 837) note that “feedback or knowledge of results is the life-blood of learning”. Appropriate feedback from assessment can motivate students and redirect their learning towards areas of deficiency, and can help teachers improve their coursework and instructional methods.

Assessments may be categorized as diagnostic, formative, or summative (McMillan, 2006). Formative assessment should be followed by feedback and remedial guidance so that learners know where their understanding, knowledge, or competence is deficient (Rolfe and McPherson, 1995).

Feedback is most effective and usable by the student when it is immediate, specific, and contingent (McKendree, 1990; King, 1999). Generally, feedback should be given immediately, or as soon as possible. Delayed feedback usually becomes less useful the longer it is delayed, and is of course completely useless if it never arrives. Feedback must also be specific if it is to be optimally effective: its specificity is what allows students to focus on exactly those aspects of their learning that need improvement.

Most importantly, feedback must be contingent. This technical term means that the feedback must be functionally dependent upon, must follow, and must be linked to the student’s selective response. Effective feedback should incorporate all three factors in order to support a well-designed unit of learning.
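The three factors can be written down as checks on a single feedback event. This is a hedged sketch, not a measurement instrument from the paper: the `FeedbackEvent` record, the `feedback_qualities` function, and especially the two-second cut-off used for "immediate" are assumptions chosen for illustration. Contingency is modelled as feedback that follows the response and was written for that response.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FeedbackEvent:
    """Hypothetical record of one piece of feedback given to a student."""
    response: str                   # the option the student selected
    written_for: str                # the response this feedback was written for
    delay_seconds: Optional[float]  # None means the feedback never arrived
    same_for_all_responses: bool    # True for generic "try again" messages


def feedback_qualities(event: FeedbackEvent,
                       immediate_within: float = 2.0) -> dict[str, bool]:
    """Check the three properties of effective feedback for one event."""
    if event.delay_seconds is None:
        # Feedback that never arrives is useless on every count.
        return {"immediate": False, "specific": False, "contingent": False}
    return {
        # Assumed cut-off: "immediate" means within a few seconds.
        "immediate": event.delay_seconds <= immediate_within,
        # Specific: the message differs depending on what the student chose.
        "specific": not event.same_for_all_responses,
        # Contingent: it follows the response and is linked to that response.
        "contingent": event.delay_seconds >= 0
                      and event.written_for == event.response,
    }


print(feedback_qualities(FeedbackEvent("b", "b", 0.5, False)))
# {'immediate': True, 'specific': True, 'contingent': True}
```

The threshold is a parameter precisely because the text gives no fixed figure; only "as soon as possible".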

In this paper we implement and evaluate interactivity using two specifications, IMS QTI and IMS LD, by expressing a UOL that provides feedback in formative assessment.

3 THE IMS QTI SPECIFICATION

The IMS QTI specification (IMS QTI, 2006) is part of the same family of specifications as IMS LD. It describes an information model for representing questions, tests, and results. The specification enables the exchange of item, test, and results data between authoring tools, item banks, and test construction tools, as well as learning systems and assessment delivery systems. QTI version 2.0 processing is illustrated in Figure 2.

Figure 2: QTI version 2.0 processing.


When a learner accesses a Virtual Learning Environment or Learning Management System (VLE/LMS) to view and respond to a QTI question, the system first sends a QTI XML file to a QTI processing service, where a Question renderer renders the question; the rendered question is then sent back to the VLE/LMS for display to the learner. The learner’s answer is sent to a QTI Response renderer, which marks the answer and provides feedback. The rendered feedback is sent back to the VLE/LMS for display to the learner.
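The two services in Figure 2 can be sketched with Python's standard `xml.etree.ElementTree`. This is a hedged illustration, not the IMS reference implementation or a real QTI engine: `render_question` and `process_response` are hypothetical names, the item uses QTI 2.0 element names but has not been validated against the QTI schema, and the `responseProcessing` step a real item would carry is replaced by a hard-coded rule that sets the `FEEDBACK` outcome to the chosen identifier. Note that `process_response` returns the mark and the feedback in the same call, as in the flow just described.

```python
import xml.etree.ElementTree as ET

QTI_NS = {"qti": "http://www.imsglobal.org/xsd/imsqti_v2p0"}

# A simplified, QTI 2.0-style single-choice item (not schema-validated; a
# real item would also carry a responseProcessing element).
ITEM_XML = """\
<assessmentItem xmlns="http://www.imsglobal.org/xsd/imsqti_v2p0"
    identifier="q1" title="Addition" adaptive="false" timeDependent="false">
  <responseDeclaration identifier="RESPONSE" cardinality="single" baseType="identifier">
    <correctResponse><value>B</value></correctResponse>
  </responseDeclaration>
  <outcomeDeclaration identifier="FEEDBACK" cardinality="single" baseType="identifier"/>
  <itemBody>
    <choiceInteraction responseIdentifier="RESPONSE" shuffle="false" maxChoices="1">
      <prompt>2 + 2 = ?</prompt>
      <simpleChoice identifier="A">3</simpleChoice>
      <simpleChoice identifier="B">4</simpleChoice>
    </choiceInteraction>
  </itemBody>
  <modalFeedback outcomeIdentifier="FEEDBACK" identifier="A" showHide="show">3 is one short: count again.</modalFeedback>
  <modalFeedback outcomeIdentifier="FEEDBACK" identifier="B" showHide="show">Correct.</modalFeedback>
</assessmentItem>
"""


def render_question(item_xml: str) -> dict:
    """Question renderer: extract what the VLE/LMS needs to display."""
    root = ET.fromstring(item_xml)
    interaction = root.find(".//qti:choiceInteraction", QTI_NS)
    return {
        "prompt": interaction.find("qti:prompt", QTI_NS).text,
        "choices": {c.get("identifier"): c.text
                    for c in interaction.findall("qti:simpleChoice", QTI_NS)},
    }


def process_response(item_xml: str, response: str) -> dict:
    """Response renderer: mark the answer and return its feedback at once."""
    root = ET.fromstring(item_xml)
    correct = root.find(
        "qti:responseDeclaration/qti:correctResponse/qti:value", QTI_NS).text
    feedback_outcome = response  # stands in for responseProcessing: FEEDBACK := RESPONSE
    feedback = [fb.text for fb in root.findall("qti:modalFeedback", QTI_NS)
                if fb.get("outcomeIdentifier") == "FEEDBACK"
                and fb.get("showHide") == "show"
                and fb.get("identifier") == feedback_outcome]
    return {"score": 1 if response == correct else 0, "feedback": feedback}


print(render_question(ITEM_XML)["choices"])  # {'A': '3', 'B': '4'}
print(process_response(ITEM_XML, "A"))
# {'score': 0, 'feedback': ['3 is one short: count again.']}
```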

4 THE IMS LD SPECIFICATION

IMS LD (IMS LD, 2003) is based on the following principles: in a learning process, each person has a role (learner or teacher) and achieves learning outcomes by carrying out learning activities within a supportive environment. The central concept of IMS LD, the Method, is an element that coordinates the activities of each role within the designated environment in order to achieve the learning objectives.

The learning process is modeled on a theatrical play from a structural point of view. A Method consists of one or more concurrent Play(s); a Play consists of one or more sequential Act(s); an Act consists of one or more concurrent Role-Part(s); and each Role-Part associates exactly one Role with one Activity or Activity-Structure.
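The nesting described above can be expressed as a small data model. This is a sketch only: the class names mirror the IMS LD element names, but these Python classes, the `activities_for` helper, and the activity identifiers are hypothetical and are not the IMS LD XML binding. Concurrency and sequence are recorded only as comments here; an LD player would be responsible for actually scheduling them.

```python
from dataclasses import dataclass


@dataclass
class RolePart:
    role: str      # exactly one Role ...
    activity: str  # ... associated with one Activity or Activity-Structure


@dataclass
class Act:
    role_parts: list[RolePart]  # Role-Parts within an Act run concurrently

    def __post_init__(self):
        if not self.role_parts:
            raise ValueError("an Act needs at least one Role-Part")


@dataclass
class Play:
    acts: list[Act]  # Acts within a Play run in sequence

    def __post_init__(self):
        if not self.acts:
            raise ValueError("a Play needs at least one Act")


@dataclass
class Method:
    plays: list[Play]  # Plays within a Method run concurrently

    def __post_init__(self):
        if not self.plays:
            raise ValueError("a Method needs at least one Play")


def activities_for(method: Method, role: str) -> list[list[list[str]]]:
    """Per Play, the activities a role performs in each successive Act."""
    return [[[rp.activity for rp in act.role_parts if rp.role == role]
             for act in play.acts]
            for play in method.plays]


method = Method([Play([
    Act([RolePart("learner", "question-1")]),
    Act([RolePart("learner", "feedback-1"), RolePart("teacher", "review-answers")]),
])])
print(activities_for(method, "learner"))  # [[['question-1'], ['feedback-1']]]
```

The "exactly one" constraint on a Role-Part falls out of using single-valued fields, while the "one or more" constraints are checked when each element is constructed.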

In this study, we construct an IMS LD UOL that provides questions, checks the answers, and gives feedback. We use Learning Design to orchestrate these processes according to the interactivity cycle of Figure 1.

Activities in LD are associated with a Role in a Role-Part, and they contain the actual instructions for a person in that role. If an activity is directed at a learner and aims to achieve a specific learning outcome, it is referred to as a learning activity.

An LD Method may contain conditions, i.e., If-Then-Else rules that further refine the assignment of activities and environment entities to persons and roles. The ‘If’ part of a condition is a Boolean expression on the properties that are defined for persons and roles in the LD UOL. Properties are containers that can store information about persons, roles, and the UOL itself, e.g. user profiles, progression data (completion of activities), results of tests (e.g. prior knowledge, competencies, learning styles), or learning objects added during the teaching-learning process (e.g. reports, essays, or new learning materials).
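An If-Then-Else condition over properties can be sketched as a function from the current property values to the set of visible activities. This is a simplified, hypothetical model rather than the IMS LD condition syntax: the `Condition` class, the `visible_activities` function, the property name `q1-answer`, and the activity identifiers are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable

Properties = dict[str, object]


@dataclass
class Condition:
    """An If-Then-Else rule over UOL properties (simplified)."""
    if_: Callable[[Properties], bool]  # Boolean expression on properties
    then_show: list[str]               # activities shown when it holds
    else_show: list[str]               # activities shown otherwise


def visible_activities(conditions: list[Condition], props: Properties) -> set[str]:
    """Re-evaluate every condition against the current property values."""
    shown: set[str] = set()
    for cond in conditions:
        shown.update(cond.then_show if cond.if_(props) else cond.else_show)
    return shown


# Hypothetical property 'q1-answer', set when the learner answers question 1.
# Simplified: a fuller rule would also check that question 1 is completed.
q1_feedback = Condition(
    if_=lambda p: p.get("q1-answer") == "B",
    then_show=["feedback-q1-correct"],
    else_show=["feedback-q1-incorrect"],
)
print(visible_activities([q1_feedback], {"q1-answer": "A"}))  # {'feedback-q1-incorrect'}
```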

5 ASSESSMENT CASES USING LEARNING DESIGN

To explore assessments implemented using IMS LD, a small UOL incorporating question and feedback activities was developed. Students could see all question activities and could access each question in turn. Interactivity was implemented as follows. First, a question with multiple answer choices was presented to each student. Second, the student responded to the question by selecting one of the choices. Third, the student’s response was evaluated. Finally, the student received immediate, specific feedback relating to his or her particular answer. The student then moved on to the second question, where the pattern was repeated. This implementation may be considered ‘individual’ study. In the ‘group’ study implementation, a student was given feedback on a question only after all students had finished answering it.
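The difference between the two scenarios is one of timing, which the following sketch makes explicit. The function name, the student identifiers, and the answer times are hypothetical. It shows only that in the ‘group’ case every student except the last one to answer has to wait for feedback, whereas in the ‘individual’ case nobody waits.

```python
def feedback_release_times(answer_times: dict[str, float], mode: str) -> dict[str, float]:
    """When each student can see the feedback for one question.

    answer_times maps a student to the time (in seconds) at which they answered.
    """
    if mode == "individual":
        # Feedback follows each student's own answer straight away.
        return dict(answer_times)
    if mode == "group":
        # Feedback is withheld until the last student has answered.
        release = max(answer_times.values())
        return {student: release for student in answer_times}
    raise ValueError(f"unknown mode {mode!r}")


answers = {"student-1": 10.0, "student-2": 25.0}
for mode in ("individual", "group"):
    released = feedback_release_times(answers, mode)
    waits = {s: released[s] - answers[s] for s in answers}
    print(mode, waits)
# individual {'student-1': 0.0, 'student-2': 0.0}
# group {'student-1': 15.0, 'student-2': 0.0}
```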

A number of different UOL cases were developed for the individual and group assessment scenarios, using different mechanisms of Play, Act, condition elements, and activity conditions within IMS LD to control the interactivity. Because IMS QTI can encapsulate both the question and its feedback, each UOL case in which a question activity is immediately followed by a feedback activity can alternatively be implemented as an IMS QTI item rather than within IMS LD.

Figure 3 shows the implementation structure of the assessment case for individual study using IMS LD alone. Figure 4 shows the implementation structure for individual study with the assessment implemented as IMS QTI items.

Figure 3: The structure of ‘individual’ study implementation using IMS LD (illustrated with two students).

WEBIST 2007 - International Conference on Web Information Systems and Technologies

Figure 5 shows the implementation structure for group study with the assessment implemented using IMS LD alone.

Figure 4: The structure of ‘individual’ study implementation using IMS LD + QTI (illustrated with two students).

Figure 5: The structure of group study implementation

using IMS LD (illustrated with two students).

6 EVALUATION

In this study, the criteria for evaluating the ‘individual’ and ‘group’ IMS LD UOL implementations are as follows:

• Interactivity quality

The four criteria of interactivity quality are the control of interactivity, and the specificity, immediacy, and contingency of feedback.

• Ease of coding

Ease of coding refers to the ease of providing the functionality needed to implement each UOL.

• Flexibility and reusability

Flexibility and reusability refer to the ease with which the properties of the UOL can be changed and the UOL re-used in other contexts.

First, we analyze the simple ‘individual’ UOL implementation with one Play, one Act, and one Role-Part.

In Figure 3, when the question activity is completed, the feedback activity is displayed immediately, based on the answer given to the question. Hence, the IMS LD-only ‘individual’ implementation (Figure 3) provides full support for the specificity, immediacy, and contingency of feedback.

When IMS QTI is used to encapsulate the question activity and the feedback activity (Figure 4), the feedback message in the QTI activity is displayed as soon as learners answer the question. As with the LD-only structure, the LD + QTI ‘individual’ UOL implementation provides effective interactivity, since feedback is immediate, specific, and contingent.

Because IMS LD and IMS QTI provide mechanisms for controlling interactivity through activity conditions, sequence/selection properties, and QTI mechanisms, both ‘individual’ implementations (Figure 3 and Figure 4) fully support ease of coding.

With regard to flexibility and reusability, the LD-only structure (Figure 3) provides only partial support, because changing, adding, or deleting a question and/or its feedback requires re-coding the UOL. This is due to the dependency of the feedback activity on the answer given in the question activity. However, this limitation may be addressed by implementing the assessments as IMS QTI items (Figure 4), which increases the flexibility and reusability of the UOL. The IMS QTI features allow simpler coding within the UOL and enhance its reusability.
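To make the dependency concrete, the sketch below counts the UOL elements that refer to one multiple-choice question under a hypothetical modelling of each structure. It is an illustration, not a measurement from the study: the element names and the one-feedback-activity-per-choice layout are assumptions. In the LD-only structure every feedback activity and condition names the question, so changing that question touches several elements; with QTI the question and its feedback are carried inside one item referenced from a single activity.

```python
def ld_only_elements(question: str, choices: list[str]) -> list[str]:
    """UOL elements that mention one question when feedback lives in LD."""
    elements = [f"learning-activity {question}",
                f"property {question}-answer"]
    for choice in choices:
        # One feedback activity and one condition per answer choice.
        elements.append(f"learning-activity {question}-feedback-{choice}")
        elements.append(f"condition if {question}-answer = {choice} "
                        f"show {question}-feedback-{choice}")
    return elements


def ld_qti_elements(question: str, choices: list[str]) -> list[str]:
    """With QTI, the choices and their feedback live inside the item itself."""
    return [f"learning-activity {question} -> QTI item {question}.xml"]


choices = ["A", "B", "C", "D"]
print(len(ld_only_elements("q3", choices)), len(ld_qti_elements("q3", choices)))  # 10 1
```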

Second, we analyze the ‘group’ UOL implementations. Due to the nature of ‘group’ study, all group members need to complete the question activity before starting the feedback activity. Therefore, learners may not get their feedback immediately after answering the question. However, they can still get specific and contingent feedback on their answers. As a result, a ‘group’ UOL implementation offers only partial support for immediate feedback, but full support for specific and contingent feedback.

The LD-only ‘group’ study UOL implementation (Figure 5) provides ease of coding through the use of the LD ‘Act’ structure to control interactivity. However, when the assessment items are implemented as QTI items, coding within the UOL may be difficult, because IMS QTI may not provide sufficient support for controlling group interactivity.

As in the LD-only ‘individual’ study UOL implementation, the separation of question and feedback activities in the IMS LD-only ‘group’ study UOL implementation (Figure 5) may cause difficulties in changing and re-using this UOL in other contexts. The ‘group’ study implementation of Figure 5 therefore provides only partial support for flexibility and reusability. The LD + QTI ‘group’ implementation also provides only partial support for flexibility and reusability, but for a different reason: if group members need to wait for their feedback until all students have attempted a question, QTI items may not be appropriate. According to QTI processing (Figure 2), feedback is sent to the learner immediately after the answer is received. Hence, it may not be possible to implement this version of ‘group’ study with QTI version 2.0 items using rendering and response services within a service-oriented architecture.

Table 1 shows the analysis of the ‘individual’ study assessment implementations, and Table 2 shows the analysis of the ‘group’ study assessment implementations, using IMS LD alone and with IMS QTI.

Table 1: Analysis of ‘individual’ study assessment implementation.

| Assessment implementation | LD-only (individual) | LD + QTI (individual) |
|---|---|---|
| Figure | Figure 3 | Figure 4 |
| Approach for controlling interactivity | Activity condition, sequence/selection property | QTI mechanism |
| Immediate feedback | Full support | Full support |
| Contingent feedback | Full support | Full support |
| Ease of coding | Full support | Full support |
| Flexibility and reusability | Partial support | Full support |

Table 2: Analysis of ‘group’ study assessment implementation.

| Assessment implementation | LD-only (group) | LD + QTI (group) |
|---|---|---|
| Figure | Figure 5 | Not illustrated |
| Approach for controlling interactivity | Act mechanism | May not be feasible, depending upon QTI service implementation |
| Immediate feedback | Partial support | Partial support |
| Contingent feedback | Full support | Full support |
| Ease of coding | Full support | Partial support |
| Flexibility and reusability | Partial support | Partial support |
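The ratings in Tables 1 and 2 can be transcribed as data to compare the two scenarios side by side. This sketch only re-encodes the support levels from the tables (the figure and approach rows are left out); the dictionary and function names are illustrative. It shows that adding QTI changes exactly one criterion in each scenario, in opposite directions: flexibility and reusability improve for individual study, while ease of coding worsens for group study.

```python
# Support levels transcribed from Table 1 and Table 2: (LD-only, LD + QTI).
INDIVIDUAL = {
    "Immediate feedback": ("Full", "Full"),
    "Contingent feedback": ("Full", "Full"),
    "Ease of coding": ("Full", "Full"),
    "Flexibility and reusability": ("Partial", "Full"),
}
GROUP = {
    "Immediate feedback": ("Partial", "Partial"),
    "Contingent feedback": ("Full", "Full"),
    "Ease of coding": ("Full", "Partial"),
    "Flexibility and reusability": ("Partial", "Partial"),
}


def effect_of_adding_qti(table: dict[str, tuple[str, str]]) -> dict[str, tuple[str, str]]:
    """Criteria whose support level changes when QTI items are added to LD."""
    return {criterion: levels for criterion, levels in table.items()
            if levels[0] != levels[1]}


print(effect_of_adding_qti(INDIVIDUAL))  # {'Flexibility and reusability': ('Partial', 'Full')}
print(effect_of_adding_qti(GROUP))       # {'Ease of coding': ('Full', 'Partial')}
```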

7 DISCUSSION

Table 1 and Table 2 illustrate two important issues. First, in measuring the pedagogical effectiveness of any assessment, the model of interactivity shown in Figure 1 provides key indicators. These include the specificity, immediacy, and contingency of the feedback given to the student upon completion of the assessment. An instructional designer may evaluate an implementation of IMS LD and IMS QTI against these measures.

Second, the IMS QTI specification can be considered an integrative layer in implementing IMS LD UOLs. However, as discussed in the evaluation section, there are some shortcomings when integrating IMS QTI and IMS LD implementations. Instructional designers should take these shortcomings into account when integrating IMS QTI items within an IMS LD UOL.

8 CONCLUSION

The features of IMS QTI help the instructional designer to implement an assessment within an IMS LD UOL for individual study, solving the problems that we found: ineffective interactivity, difficulty of learning design coding, inflexibility, and poor reusability. Teachers are increasingly expected to create or adapt online activities without technical support from specialists, and the use of the IMS LD and QTI standards should help them meet these expectations. Future developments in IMS LD aim to improve the quality of e-learning for both educators and learners, and to increase the adaptation and reuse of UOLs (De Vries et al., 2006).

Our study suggests that interactivity may be used as a parameter for the pedagogical evaluation of assessment and E-learning. Using this parameter, instructional designers can talk in terms of pedagogy rather than technology, making explicit pedagogical choices that are subject to review, inspection, and critique.

Integrating IMS LD and IMS QTI would increase the value of UOLs, but attention needs to be paid to the usability of QTI items within ‘group’ study UOLs. The study and classification of group activities and typical interactivities will provide guidelines for developers implementing QTI and LD authoring and run-time tools that allow instructional designers to realize pedagogically informed UOLs.

REFERENCES

Berge, Z. L., 1999. Interaction in Post-Secondary Web-Based Learning. Educational Technology, Vol.39, No.1, pp 5-11.

De Vries, F., Tattersall, C. and Koper, R., 2006. Future developments of IMS Learning Design tooling. Educational Technology & Society, Vol.9, No.1, pp 9-12.

Gilbert, L. and Gale, V., 2007. Principles of E-learning Systems Engineering, Chandos.

IMS LD, 2003. IMS Learning Design Best Practice and Implementation Guide. Available from http://www.imsglobal.org/learningdesign/ldv1p0/imsld_bestv1p0.html

IMS QTI, 2006. IMS Question and Test Interoperability Overview. Available from http://www.imsproject.org/question/qti_v2p0/imsqti_oviewv2p0.html

King, J., 1999. Giving feedback. British Medical Journal, Vol.318, No.7200, pp 1-6.

McAndrew, P., Nadolski, R. and Little, A., 2005. Developing an approach for Learning Design Players. Journal of Interactive Media in Education 2005(14). Available from http://www-jime.open.ac.uk/2005/14/

McKendree, J., 1990. Effective Feedback Content for Tutoring Complex Skills. Human-Computer Interaction, Vol.5, pp 381-413.

McMillan, J. H., 2006. Classroom Assessment: Principles and Practice for Effective Instruction, Pearson Technology Group.

Rolfe, I. and McPherson, J., 1995. Formative assessment: how am I doing? The Lancet, Vol.345, No.8953, pp 837-839.

Sampson, D., Karampiperis, P. and Zervas, P., 2006. Authoring web-based learning scenarios based on the IMS Learning Design: Preliminary Evaluation of the ASK Learning Design Toolkit. IEEE, pp 1003-1010. Available from http://ieeexplore.ieee.org/iel5/10748/33913/01618475.pdf

Westera, W., Brouns, F., Pannekeet, K., Janssen, J. and Manderveld, J., 2005. Achieving E-learning with IMS Learning Design - Workflow Implications at the Open University of the Netherlands. Educational Technology & Society, Vol.8, No.3, pp 216-225. Available from http://dspace.ou.nl/bitstream/1820/443/1/eLearning+withIMSLD.pdf
