BUILDING AN ONTOLOGY THAT HELPS IDENTIFY

CRIMINAL LAW ARTICLES THAT APPLY TO A CYBERCRIME

CASE

El Hassan Bezzazi

IREENAT, Faculté de Droit de Lille 2, Lille, France

Keywords: Ontologies, Description logics, Nonmonotoic logics, Law, Cybercrime, Counterfactuals, Concept fitting.

Abstract: We present in this paper a small formal cybercrime ontolog

y by using concrete tools. The purpose is to

show how law articles and legal cases could be defined so that the problem of case resolution is reduced to a

classification problem as long as cases are seen as subclasses of articles. Secondly, we show how

counterfactual reasoning may be held over it. Lastly, we investigate the implementation of an hybrid system

which is based both on this ontology and on a non-monotonic rule based system which is used to execute, in

a rule based way, an external ontology dealing with a technical domain in order to clarify some of the

technical concepts.

1 INTRODUCTION

We investigate in this paper the implementation of a

formal ontology for criminal law dealing with

cybercrime which is both functional and applicative.

Our objective is twofold. First, we wish to present

the ontology and an example of counterfactual

reasoning it may support in a less abstract way than

usual by using concrete tools. We use Protégé

(Protégé, 2007) which is an ontology editor

supporting the OWL language and Racer (Racer,

2007) which is a reasoning system based on

description logic. We wish also to depict in the case

of an interdisciplinary collaboration the clarification

of some technical concepts through the use of a

nonmonotonic inference engine. This clarification

allows the enrichment of the ontology in a way that

may have consequences in the judge decision.

Having worked on law texts related to computer

security, we have chosen cybercrime as a subfield of

criminal law as long as it constitutes a relatively

small closed field. This paper is structured as

follows. In the following section remind some of the

basic ideas related to formal ontologies, typically

Protégé ontologies, and to description logics. In

section 3, the corpus of interest is described and

structured into classes. Section 4 is devoted to the

reasoning mechanism that allows us solving a case

by identifying the law articles covering it. Issues

related to concept fitting are pointed out in section 5

and a technique to achieve such an operation is

presented in section 6.

2 PRELIMINARIES

The use of ontologies in legal domains is an issue

which has been intensively investigated (Asaro et

al., 2003; Bench-Capon & Visser, 1997; Breuker et

al.,2002; Valente, 1995). A formal ontology

describes the concepts and the relations relating

them in a given domain. The relations define the

semantics. Building a formal ontology is especially

recommended for domains expressed in natural

language as documents and corpus. An immediate

benefit from the definition of such a formal ontology

is the normalization of the semantics materialized by

a structured terminology. This normalization is most

relevant in the case of an interdisciplinary

collaboration where a given term may carry real

ambiguity according to one field or another. Indeed,

natural language is characterized by its contextual

nature which may lead to different interpretations.

Think, in a forensic context, of how computer data

suppression might be understood by a judge with no

special knowledge in computer science. Expressing

179

Hassan Bezzazi E. (2007).

BUILDING AN ONTOLOGY THAT HELPS IDENTIFY CRIMINAL LAW ARTICLES THAT APPLY TO A CYBERCRIME CASE.

In Proceedings of the Second International Conference on Software and Data Technologies - PL/DPS/KE/WsMUSE, pages 179-185

DOI: 10.5220/0001327801790185

Copyright

c

SciTePress

the concepts in a formal language such as OWL

helps stabilizing the interpretation of these terms.

Besides, expressing a formal ontology in OWL

makes it machine consumable.

In computer science, mainly three kinds of

ontologies are to be distinguished (Sowa, 2007).

Terminological ontologies in which concepts are

named and are structured using mainly relations of

the sub-type/super-type kind. As a matter of fact,

such an ontology which is sometimes referred to as

taxonomy can be expressed by using rules as we will

further do it in the case of the ontology of computer

data suppression. Ontologies of the second kind are

those of which the concepts are built by enumerating

the instances which compose them on the basis of

some metric which defines their similarity. These

concepts come usually as a result of a classification

and are not named beforehand. The third type of

ontology is the most sophisticated. The concepts are

defined by axioms generally expressed in a

decidable fragment of first order logic, namely

Description Logic. Logical inferences can then be

implemented for the classification of new instances.

Incontestably, Description Logic is currently without

the standard for expressing formal ontologies on the

basis of the OWL language for example. Efforts are

carried out to extend it to a system able to handle

knowledge expressed in the form of rules. This way,

requests could be sent to existing rule bases within

the semantic Web (Eiter et al., 2004). Another

advantage that we outline in this extension is the

possibility of supplementing a knowledge

representation based on Description Logic by a rule

based representation when this is more adequate.

The use of rules is all the more relevant when it

comes to take into account certain exceptions which

characterize nonmonotonic reasoning.

2.1 Classes and Properties

Classes are concrete representation for concepts.

Different classes may be identified for representing a

given domain knowledge. They must afterwards be

structured by linking them with relations which can

be subsumption relations or Protégé-OWL relations

called properties.

Properties are relationships between individuals and

an inverse property may be defined for a given

property.

Classes are interpreted as sets of individuals of

similar structure. Classes can be organized in

subclass-superclass hierarchy. The graphical

representation of a hierarchy uses nodes for concepts

and arcs for subsumption relations.

Concretely, a class is defined by describing the

conditions to be satisfied by individuals for they

belong to the class. Note that classes may overlap

and can be made explicitly distinct. .

2.2 Description Logics

A knowledge base using description logic as a

knowledge representation tool has two components :

- the TBox which contains the terminology of the

domain of interest.

- the ABox which contains assertions on individuals

named through the defined terminology.

The vocabulary is composed by concepts which

denote individual sets and roles which denote binary

relations between individuals.

The description language which is specific to each

description logic system has a well defined

semantic: each TBox or ABox declaration may be

identified to a formula of first order logic or a slight

extension of it.

Description logic provides also reasoning tools to

decide for example if a description is consistent or

not or if it is more general than another.

Elementary descriptions are atomic concepts and

atomic roles. These allow more complex

descriptions to be built with concept constructors.

The description logic language we shall use is

defined by the following assertions where C and D

are concepts, A an atomic concept and R a role.

A (atomic concept)

T and ⊥ (universal concept and empty concept)

¬C (concept negation)

C∩D (concept intersection)

C∪D (concept union)

∀R.C (value restriction)

∃R.C (limited existential quantification)

A formal ontology is defined by a set of structured

concepts and a number of inclusions between these

concepts.

The semantics of the concepts and roles is defined

with respect to a domain of interpretation O which

defines the interpretation of each constant A: ι(A)=a.

Concepts are interpreted as subsets of O and roles

are interpreted as binary relations over O satisfying :

ICSOFT 2007 - International Conference on Software and Data Technologies

180

ι(T)=O, ι(⊥)=∅

ι(¬C)= ι-ι(C)

ι(C∩D)= ι(C)∩ι(D), ι(C∪D)= ι(C)∪ι(D)

ι(∀R.C)={d∈O|(d,e)∈ι(R)⇒e∈ι(C) for all e in O}

ι(∃R.C)= {d∈O| there exists e in O s.t. (d,e)∈ι(R)

and e∈ι(C)}

Two frameworks are mainly referred to in practical

logics: logic programming and first order logic. An

important difference between these two frameworks

is the close world assumption (CWA) admitted in

the former and the open world assumption (OWA)

admitted in the latter.

Even if OWL admits primarily the OWA, CWA

may be admitted if stated explicitly. CWA is very

useful for dealing for example with the application

of forward chaining. If in a rule base, only the rule

“IF offence OR crime THEN infringement” infers

the fact infringement, CWA allows inferring that

there is no infringement if none of the facts offence

or crime is established.

3 THE CORPUS

We list in this subsection the French criminal law

articles that are of interest to us and from which

irrelevant metadata has been removed (Légifrance,

2007).

Article 323-1

Fraudulently accessing or remaining within all or

part of an automated data processing system is

punished by one year’s imprisonment and a fine of €

15,000.

Where this behaviour causes the suppression or

modification of data contained in that system, or any

alteration of the functioning of that system, the

sentence is two years’ imprisonment and a fine of €

30,000.

Article 323-2

Obstruction or interference with the functioning of

an automated data processing system is punished by

three years’ imprisonment and a fine of € 45,000.

Article 323-3

The fraudulent introduction of data into an

automated data processing system or the fraudulent

suppression or modification of the data that it

contains is punished by three years’ imprisonment

and a fine of € 45,000.

Article 323-4

The participation in a group or conspiracy

established with a view to the preparation of one or

more offences set out under articles 323-1 to 323-3,

and demonstrated by one or more material actions,

is punished by the penalties prescribed for offence in

preparation or the one that carries the heaviest

penalty.

We shall consider in what follows three concepts:

Malicious actions which are punished by criminal

law, responsibilities related to an action and the

criminal law articles. Other classes of our ontology

such as Sanction and Infringement are of less

interest in what we shall expose.

Several actions may be qualified as being malicious

in computer security and put in classes like privacy

or hacking which in its turn covers classes like

intrusion, denial of service….etc.

The class Malicious_Act depicts a classification for

a sample of malicious actions:

Figure 1: The class of malicious actions.

Criminal law makes a distinction between two types

of responsibilities, objective responsibility which

may be commission, omission or attempt and the

subjective responsibility which describes the

intentional nature of the act. Of course the classes

BUILDING AN ONTOLOGY THAT HELPS IDENTIFY CRIMINAL LAW ARTICLES THAT APPLY TO A

CYBERCRIME CASE

181

and subclasses defining these concepts are

exclusives.

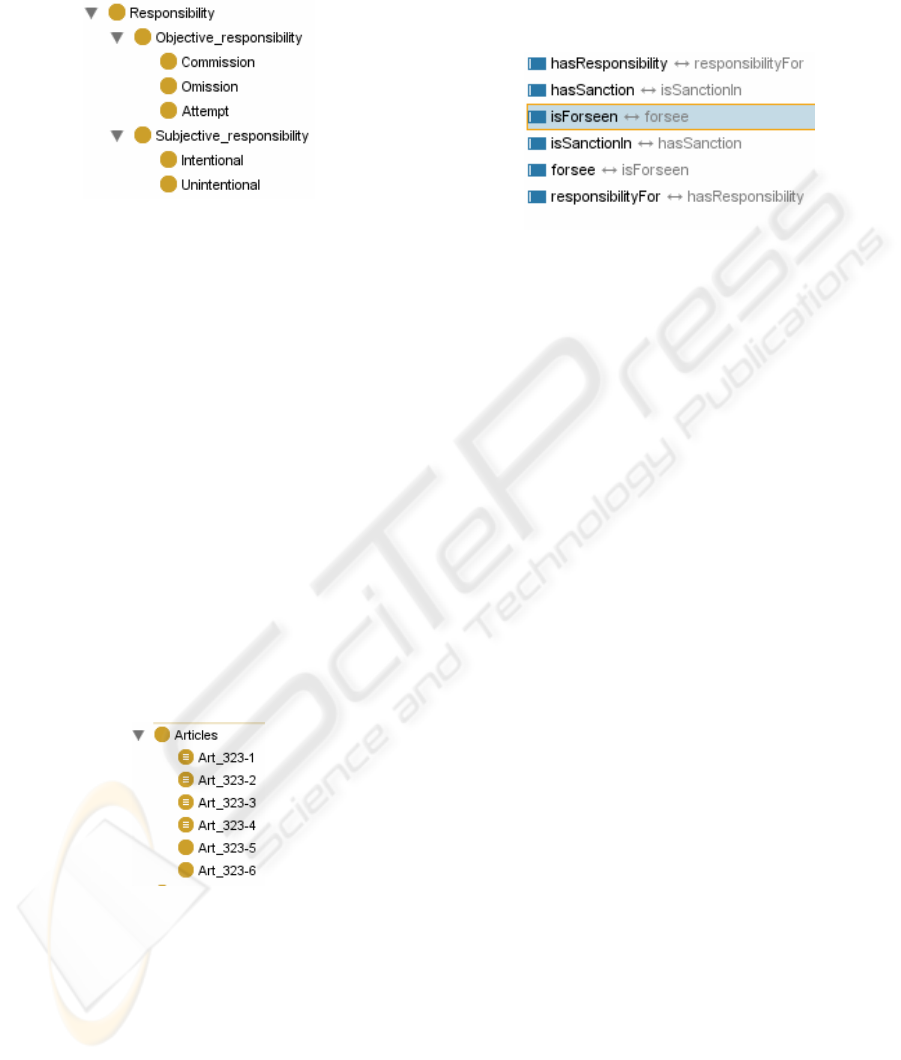

Figure 2: The class of responsibilities.

Criminal law articles which are of interest to us are

grouped in the class Articles. As a matter of fact

there are only six articles that deal directly with

cybercrime. Among these, for our purpose, we shall

consider in particular four articles.

It should be outlined that the conception that we

make of a law article makes of it a class which

groups all the cases it allows to characterize, that is

to say the cases which fall under this article!. In this

respect our model (see appendix) is different from

(Asaro et al., 2003). The rationale behind this

conceptualization is that the concept of case inherits

of the same characteristics and properties as in the

concept of article. Henceforth, the application of our

ontology consists in classifying, if possible, each

case of interest as a subclass of one or more

subclasses of Articles.

Figure 3: The class of articles.

Listed below are some of the relations of interest we

shall use here. In particular, between the two classes

Articles and Responsibility the relation

hasResponsibility specifies the nature of the

responsibility handled in the article which may be

commission, omission or attempt in the case of

objective responsibility or which may be intentional

or unintentional in the case of subjective

responsibility. For each relation its inverse relation

is given. Inverse relations are very useful and and

enhance the way of expressing axioms as we shall

see below.

Figure 4: Properties with their inverses.

4 USING A REASONER

The possibility of using a reasoner to build

automatically the hierarchy of classes is one of the

major advantages in using OWL-DL. Indeed in the

case of important ontologies containing hundreds of

classes the use of a reasoner is crucial, in particular

when dealing with multiple inheritance. Thus the

designer will focus on logical description which is

hierarchical, flexible and consequently easy to

maintain.

4.1 Articles Conceptualization

Ontologies which are described in OWL-DL may be

processed by a reasoner. One of the main tasks

handled by a reasoner is to check if a given class is a

subclass of another class. Another task is to check

consistency, the reasoner can check on the basis of

the class conditions if the class may have instances

or not. A class which has no instances is

inconsistent. Thus a class which is defined to be a

subclass of both classes A and B which are disjoint

will be detected as inconsistent by the reasoner.

Necessary conditions are used to express « if an

object is in this class it necessarily must satisfy these

conditions ». A class which uses only necessary

conditions is called partial.

Necessary and sufficient conditions are used to

express « if an object is in this class it necessarily

must satisfy these conditions and if an individual

satisfy these conditions then it necessary belongs to

this class». Such a class is said to be complete and

allow a CAW reasoning. All the classes we shall

deal with in this paper are complete.

ICSOFT 2007 - International Conference on Software and Data Technologies

182

The classes art_323-1, art_323-2, art_323-3 et

art_323-4 are complete:

Figure 5: Axioms for Art_323-1, Art_323-2 and Art_323-

3 definition.

The reasoner can classify only complete classes.

Several acts may be qualified as being malicious in

computer security and put in classes like privacy or

hacking which in its turn covers classes like

intrusion, denial of service….etc.

The class Malicious_Act depicts a classification for

a sample of malicious actions:

Criminal law makes a distinction between two types

of responsibilities, objective responsibility which

may be commission, omission or attempt and the

subjective responsibility which describes the

intentional nature of the act.

4.2 Reasoning with Counterfactuals

We are going to depict the expressive power of

description logic through an example where it is

made an assumption that contradicts the reality. This

kind of reasoning is called counterfactual reasoning

(Ginsberg, 1986). It allows reasoning on abstract

facts which are inconsistent with actual facts. For

example, solving a case which falls under article

Art_323-4 needs, as stated by this even article, to

compare the case to articles Art_323-1, Art_323-2

and Art_323-3. Solving the case is made possible by

making an assumption in the definition of Art_323-4

which is contrary to what is stated in it. Indeed,

think of a case defined by Attempt and Intrusion. To

realise that this case falls under article_323-4, one

should first assume that in case the responsibility

was Commission then the case would have fallen

under Article_323-1. This is a counterfactual

reasoning as long as the assumption Commission is

contrary to the Attempt responsibility which

characterizes the case at hand. The fact that in

propositional logics the formula A⇒B is equivalent

to ¬A∨B makes it possible to express this

assumption within Description logics.

∃foresee. ∃isForseen.(¬(hasResponsibility.Commiss

ion)∪(Art_323-1∪Art_323-2∪Art_323-3)))

According to the interpretation rules given above,

this is to be understood as the class of articles that

foresee malicious actions that are foreseen in articles

Art_323-1, Art_323-2 or Art_323-3, by assuming

Commission responsibility. Rewritten as:

∃f.∃i.(¬C∪(A1∪A2∪A3))

A1, A2 and A3 are the axioms defining the three

first articles. C stands for articles stating

Commission responsibility. To isolate within the

articles the stated malicious actions from the

responsibility, axioms A1, A2, A3 are rewritten as:

A1≡C∩A1_3

A2≡C∩A2_3

A3≡C∩A3_3

By substitution, we have:

∃f.∃i.(¬C∪(C∩A1_3∪C∩A2_3∪C∩A3_3))

Thus :

∃f.∃i.(¬C∪(C∩(A1_3∪ A2_3∪A3_3)))

It is easy to prove in propositional logic :

¬C∪(C∩X)=¬C∪(¬C∩X)∪(C∩X)=

¬C∪((¬C∪C)∩X)= ¬C∪X

Therefore, we have:

∃f.∃i.(¬C∪A1_3∪A2_3∪A3_3).

The point here is that we have succeeded this way to

evacuate from Art_323-1, Art_323-2 and Art_323-3

the Commission responsibility to make things

BUILDING AN ONTOLOGY THAT HELPS IDENTIFY CRIMINAL LAW ARTICLES THAT APPLY TO A

CYBERCRIME CASE

183

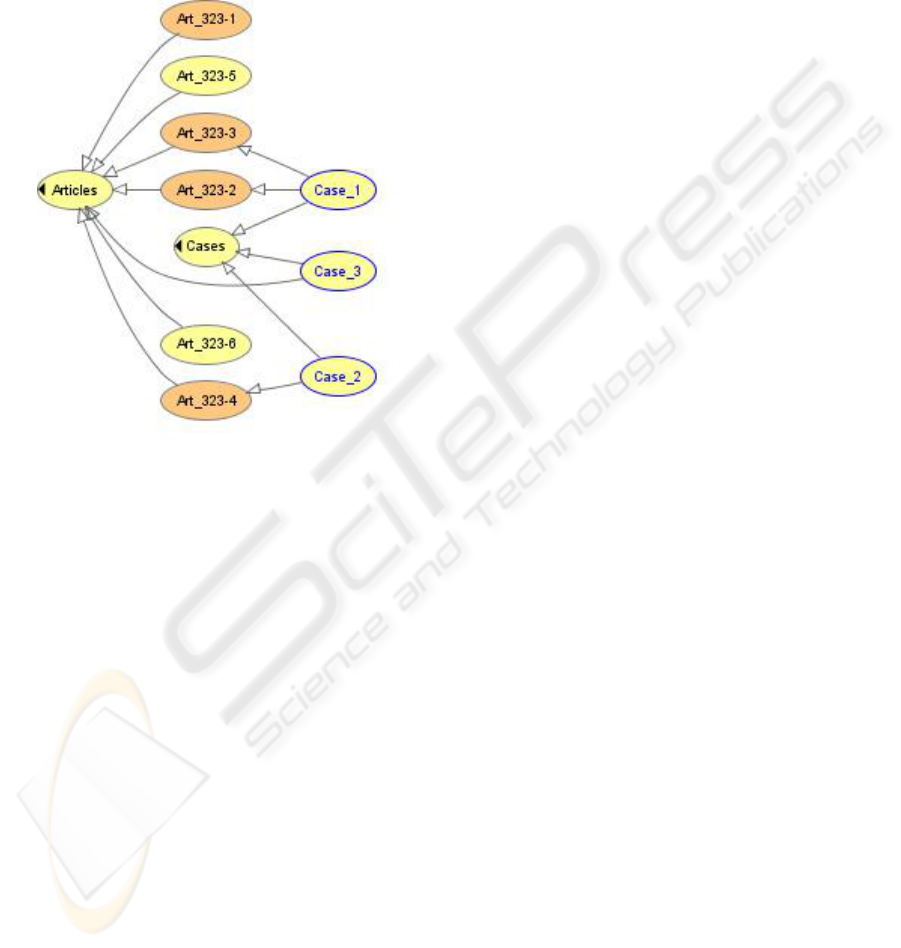

consistent. Figure 6 shows the resolution of three

cases. Case_1 consists in both system blocking and

data modification which have been committed,

thanks to multiple inheritance, and case_2 is a case

where an intrusion attempt has been stated. Case_3

is an example of cases that might not be resolved,

for example a case referring to data theft which does

not appear explicitly in the corpus.

Figure 6: Inferred Ontology.

5 FITTING TECHNICAL

CONCEPTS AND LEGAL

CONCEPTS

Mismatching between legal concepts and technical

concepts constitutes a serious issue (De Lamberterie

and videau, 2006). For example, Computer data

suppression happens to be mentioned in some of

criminal law articles. With no explicit legal

definition, this naturally leads the judge to adopt the

natural language definition for suppression. The

common understanding of the term suppression is

physical suppression where a thing which is

suppressed merely stops existing. However, in the

computer world, suppressing data means very often

logical suppression where data could be restored

with adequate tools. In addition to that, even in the

case of a physical suppression, computer data could

be restored when a backup or archiving politic is

observed by the data processor.

This semantic difference should be definitely

specified because of the penal consequences for a

fraudulent computer data suppression may vary

according to the possibility of recovering the data.

This means that although the act is condemnable in

both cases, the sanction might be worsened or

attenuated depending on the type of suppression.

To make the common understanding of the term

suppression fit the effective definition of the term

computer data suppression, one solution consists in

« connecting » its concept in a legal ontology to its

concept in a computer ontology. This connection

may need some new concepts and new relations with

the two already existing ontologies. New concepts

may also be needed to summarize or to extract from

the second ontology that information which is

readily of interest for a legal reasoning. For

example, in the case we are dealing with, such new

concepts are « restorable data» and «unrestorable

data». These ontological adjustments may prove to

be disproportionate in case where the relevant

information is well defined. It is indeed sufficient to

compute this information by using a rule based

inference engine. As a matter of fact, the second

ontology is principally used to deduce facts rather

than for classification.

6 HYBRID REASONING

In a case where only the subsumption relation is

used to deduce relevant facts, it is sufficient to use

the second ontology in a rule based form within a

propositional logic framework. However the

inference engine to be used should allow non

monotonic reasoning if we wish preserve the

ontology structure in this translation and in the same

time manage conflicting facts. We have chosen to

use an inference engine based on stratified forward

chaining which through an adequate backward

chaining (Bezzazi, 2006) sends questions to the user

to compute which of the facts « existing data » or «

non-existing data » holds for the suppressed data. It

should be noticed that the concept of legal

suppression as well as the concept of computer data

suppression, both inherits somehow of the French

language concept of the term suppression which

normally entails the no more existence of the

suppressed object. Indeed, according to the French

definition, to suppress something is to be understood

as putting an end to the existence of something.

French_suppression > !existence

Legal_suppression > French_suppression

data_suppression > French_suppression.

ICSOFT 2007 - International Conference on Software and Data Technologies

184

Figure 7: The taxonomy of data suppression.

The translation of this ontology fragment as a

rule base yields:

Logical_suppression > data_suppression

Physical_suppression > data_suppression

Formatting > Logical_suppression

Destruction > Physical_suppression

Demagnetization > Physical_suppression

Rewriting > Physical_suppression

Partial_ rewriting > Rewriting

Total_rewriting > Rewriting

We add a rule which expresses that data which

has been logically suppressed may still exist.

Logical_suppression > existence

If logical suppression is established, the stratified

forward chaining will, like an inheritance system

with exceptions, give priority to the application of

this last rule with respect to the more general rule :

French_suppression > !existence

Therefore, this rule base should help the lawyer

or the judge make their decisions or instruct a case

by shedding light on a technical concept lacking a

legal definition. The explanation process is done

through a question-response procedure.

7 CONCLUSIONS

The framework we have presented in this paper is

based on the idea of considering cases as being, by

their structure, subclasses of articles. Therefore, the

problem of solving a case is the same as that of

classifying it. With such a system at work, all one

has to do is implement articles as classes which

should not be a difficult task at least manually.

Doing this in a semi automatic or automatic way

constitutes an interesting topic for investigation. We

have also shown, in a rather practical way, how

counterfactual reasoning and non monotonic

reasoning are naturally used in legal reasoning.

However further work need to be done on this topic

independently of any domain of interest to analyze

the mechanisms that implement counterfactual

reasoning and to what extent this may be done. We

have also introduced some conceptual and technical

ideas related to fitting technical concepts and legal

concepts. Computer data suppression is one example

among other technical concepts which need

clarification such as integrity and anonymity. We

think that such concepts must be identified in the

law texts for their natural ontology be connected to a

well built legal ontology through easily understood

production rules.

REFERENCES

Asaro, C., Biasiotti, M.A., Guidotti, P., Papini, M., Sagri,

M.T., Tiscornia, D. A, 2003. Domain Ontology:

Italian Crime Ontology, ICAIL 2003 Workshop on

Legal Ontologies

Bench-Capon, T.J.M., and Visser, P.R.S., 1997.

Ontologies in Legal Information Systems: The Need

for Explicit Specifications of Domain

Conceptualisations. In Proceedings of the Sixth

International Conference on AI and Law. ACM Press.

Bezzazi, H., 2006. On some inferences based on stratified

forward chaining: an application to e-Government.

Proceedings of the 15th International Conference on

Information System Development.

Breuker, J., Elhag, L., Petkov, E., and Winkels,R., 2002.

Ontologies for legal information serving and

knowledge management. In Legal Knowledge and

Information Systems. Jurix 2002: The Fifteenth

Annual Conference. Amsterdam. IOS Press.

De Lamberterie, I., and Videau, M.,. 2006. Regards

croisés de juristes et d'informaticiens sur la sécurité

informatique. In Symposium sur la Sécurité des

Technologies de l'Information et des Communications.

Rennes, mai-juin 2006.

Eiter, T., Lukasiewicz, T., Schindlauer, R., and Tompits.

H., Combining answer set programming with

description logics for the semantic web. In

Proceedings of the 9th Int. Conf. on the Principles of

Knowledge Representation and Reasoning.

Ginsberg, M. L., 1986. Counterfactuals. Artificial

Intelligence 30:35-79.

Légifrance, Retrieved February 4, 2007, from Web site

http://www.legifrance.gouv.fr/html/codes_traduits/cod

e_penal_textan.htm

Protégé, Retrieved February 4, 2007, from Web site

http://protege.stanford.edu

Racer, Retrieved February 4, 2007, from Web site

http://www.racer-systems.com

Sowa, J. F. Ontology. Retrieved February 4, 2007, from

Web site: http://www.jfsowa.com/ontology

Valente, A., 1995. Legal Knowledge Engineering; A

Modelling Approach, IOS Press, Amsterdam, The

Netherlands.

BUILDING AN ONTOLOGY THAT HELPS IDENTIFY CRIMINAL LAW ARTICLES THAT APPLY TO A

CYBERCRIME CASE

185