ROTATION-INVARIANT IRIS RECOGNITION

Boosting 1D Spatial-Domain Signatures to 2D

Stefan Matschitsch, Herbert Stögner, Martin Tschinder

School of Telematics & Network Engineering, Carinthia Tech Institute, Austria

Andreas Uhl

Department of Computer Sciences, University of Salzburg, Austria

Keywords:

Biometric authentication, iris recognition, rotation invariance.

Abstract:

An iris recognition algorithm based on 1D spatial-domain signatures is improved by extending the template data from mean vectors to 2D histogram information. The EER and the shape of the FAR curve are clearly improved compared to the original algorithm, while rotation invariance and the low computational demand are maintained. Use of the proposed scheme remains limited to the similarity ranking scenario due to its overall FAR/FRR behaviour.

1 INTRODUCTION

With the increasing use of biometric systems in general, interest in non-mainstream modalities rises naturally. Iris recognition systems are claimed to be among the most secure modalities, exhibiting practically 0% FAR and low FRR, which makes them interesting candidates for high-security application scenarios. Interestingly, the iris recognition market is strongly dominated by technology from Iridian Inc., which is based on algorithms by J. Daugman (Daugman, 2004). The corresponding feature extraction algorithm employs 2D Gabor functions. However, apart from this approach, a wide variety of other iris recognition algorithms has been proposed in the literature, most of which are based on a feature extraction stage involving some sort of transform (see e.g. (Ma et al., 2004; Zhu et al., 2000) for two examples using a wavelet transform).

Controlling the computational demand in biometric systems is important, especially in distributed scenarios with weak and low-power sensor devices. Integral transforms (like those already mentioned or others like DFT, DCT, etc.) cause substantial complexity in the feature extraction stage. Therefore, feature extraction techniques operating in the spatial domain have been designed (e.g. (Ko et al., 2007)), thus avoiding the additional transform complexity.

An additional issue causing an undesired increase in complexity is the requirement to compensate for the possible effects of eye tilt. For example, the matching stage of the Daugman scheme involves multiple matching stages using several shifted versions of the template data, which is a typical approach. As a consequence, rotation-invariant iris features are highly desirable since they avoid these additional computations.

Global iris histograms (Ives et al., 2004) combine both advantages, i.e. rotation-invariant features extracted in the spatial domain, thus providing low overall computational complexity. However, FAR and FRR are worse compared to state-of-the-art techniques. A recent approach (Du et al., 2006) uses rotation-invariant 1D signatures with radial locality extracted from the spatial domain. Still, the latter technique also suffers from unsatisfactory FAR and FRR and is thus only recommended for use in a similarity ranking scheme (i.e. determining the n closest matches). In this work we aim at improving this algorithm.

In Section 2, we review the original version of the algorithm and then describe our improvements. Section 3 provides experimental results: we first describe the experimental settings (the data and software used) and subsequently present and discuss our results, which show EER improvements over the original version of the algorithm. Section 4 concludes the paper and gives an outlook on future work.

Matschitsch S., Stögner H., Tschinder M. and Uhl A. (2008).

ROTATION-INVARIANT IRIS RECOGNITION - Boosting 1D Spatial-Domain Signatures to 2D.

In Proceedings of the Fifth International Conference on Informatics in Control, Automation and Robotics - SPSMC, pages 232-235

DOI: 10.5220/0001480102320235

Copyright © SciTePress

2 ROTATION INVARIANT IRIS SIGNATURES

The iris texture is first converted into a polar iris image, which is a rectangular image containing the iris texture represented in a polar coordinate system. Note that the ISO/IEC 19794-6 standard defines two types of iris imagery: rectilinear images (i.e. images of the entire eye like those contained in the CASIA database) and polar images (which are basically the result of iris detection and segmentation). As a further preprocessing stage, we compute local texture patterns (LTP) from the iris texture as described in (Du et al., 2006). We define two windows T(X, Y) and B(x, y) with X > x and Y > y (we use 15 × 7 pixels for T and 9 × 3 pixels for B). Let $m_T$ be the average gray value of the pixels in window T. The LTP value of a pixel in window B at position (i, j) is then defined as

$$\mathrm{LTP}_{i,j} = |I_{i,j} - m_T|$$

where $I_{i,j}$ is the intensity of the pixel at position (i, j) in B. Note that due to the polar nature of the iris texture, there is no need to define a border handling strategy. LTP thus represents the local deviation from the mean in a larger neighbourhood.

In order to cope with non-iris data contained in the iris texture, LTP values are set to non-iris if 40% of the pixels in B or 60% of the pixels in T are known to be non-iris pixels.
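As a rough illustration of the LTP definition above, the following Python sketch computes an LTP patch from a small polar image given as a list of rows. Several details are assumptions, not taken from the text: the B blocks tile the image without overlap, T is centred on B, columns wrap around in the angular direction, T is simply truncated at the radial borders, and the non-iris masking is omitted. The function name `ltp_image` is hypothetical.

```python
def ltp_image(img, t_w=15, t_h=7, b_w=9, b_h=3):
    """Sketch of LTP_{i,j} = |I_{i,j} - m_T| on a polar image.

    img is a list of rows (radial direction) of gray values;
    columns correspond to the angular direction.
    """
    rows, cols = len(img), len(img[0])
    pad_r = (t_h - b_h) // 2  # extra T rows above and below B
    pad_c = (t_w - b_w) // 2  # extra T columns left and right of B
    out = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, b_h):
        for c0 in range(0, cols, b_w):
            # Assumption: truncate T at the radial borders ...
            t_rows = [r for r in range(r0 - pad_r, r0 + b_h + pad_r)
                      if 0 <= r < rows]
            # ... and wrap around in the angular direction.
            t_cols = [c % cols for c in range(c0 - pad_c, c0 + b_w + pad_c)]
            vals = [img[r][c] for r in t_rows for c in t_cols]
            m_t = sum(vals) / len(vals)  # mean gray value of window T
            for r in range(r0, min(r0 + b_h, rows)):
                for c in range(c0, min(c0 + b_w, cols)):
                    out[r][c] = abs(img[r][c] - m_t)
    return out
```

On a constant image every LTP value is zero, since no pixel deviates from the mean of its neighbourhood.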

2.1 The Original 1D Case

The original algorithm (Du et al., 2006) computes the mean of the LTP values of each row (line) of the polar iris image and concatenates those mean values into a 1D signature which serves as the iris template. Clearly, this vector is rotation invariant since the mean over a row (line) is not affected by eye tilt, which merely shifts the polar image circularly along the rows. If more than 65% of the LTP values in a row are non-iris, the corresponding signature element is ignored in the distance computation. In order to assess the distance between two signatures, the Du measure is suggested (Du et al., 2006), which we apply in all variants.
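The 1D signature and its rotation invariance can be sketched in a few lines of Python. This is only an illustration under assumptions: the names `row_mean_signature` and `rotate_polar` are hypothetical, the mask uses `True` for non-iris values, ignored rows are marked with `None`, and the Du measure itself is not reproduced since it is not defined in this paper.

```python
def row_mean_signature(ltp, mask=None, max_noise=0.65):
    """One mean LTP value per row; None marks rows to be ignored."""
    signature = []
    for r, row in enumerate(ltp):
        valid = [v for c, v in enumerate(row)
                 if mask is None or not mask[r][c]]
        noisy = len(row) - len(valid)
        if noisy > max_noise * len(row):
            signature.append(None)  # more than 65% non-iris values
        else:
            signature.append(sum(valid) / len(valid))
    return signature


def rotate_polar(img, k):
    """Simulate eye tilt: circular shift of every row by k columns."""
    return [row[k % len(row):] + row[:k % len(row)] for row in img]


patch = [[1, 2, 3, 4], [5, 5, 6, 8]]
print(row_mean_signature(patch))                   # [2.5, 6.0]
print(row_mean_signature(rotate_polar(patch, 3)))  # [2.5, 6.0]
```

Integer values are used in the example so that the row sums are exact regardless of summation order; with floating-point LTP values the two signatures would agree only up to rounding.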

2.2 The 2D Extension

LTP row mean and variance capture first-order statistics of the LTP histogram. In order to capture more properties of the iris texture without losing rotation invariance, we propose to employ the row-based LTP histograms themselves as features (histograms are known to be rotation invariant as well and have been used in iris recognition before (Ives et al., 2004)). This of course adds a second dimension to the signatures (where the first dimension is the number of rows in the polar iris image and the second dimension is the number of bins used to represent the LTP histograms).
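A minimal Python sketch of such a 2D signature is given below. It assumes LTP values in the range 0 to 255 and equally wide bins; the binning rule and the name `row_histograms` are illustrative assumptions, not details taken from the paper.

```python
def row_histograms(ltp, bins, max_value=255.0):
    """2D signature: one histogram (list of bin counts) per row."""
    signature = []
    for row in ltp:
        hist = [0] * bins
        for v in row:
            # Equally wide bins; the maximum value falls into the last bin.
            idx = min(int(v / max_value * bins), bins - 1)
            hist[idx] += 1
        signature.append(hist)
    return signature


patch = [[0, 10, 200, 255], [100, 100, 100, 100]]
print(row_histograms(patch, bins=4))  # [[2, 0, 0, 2], [0, 4, 0, 0]]
```

The result has one row per image row and one column per bin. Circularly shifting the columns of `patch` leaves it unchanged, because bin counts do not depend on the order of the values within a row.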

In fact, these 2D signatures result in a sort of multi-biometrics situation, since each histogram could be used as a feature vector on its own. We suggest two fusion strategies for our 2D signatures:

1. Concatenated histograms: the histograms are simply concatenated into a large feature vector. The Du measure is applied as in the original version of the algorithm.

2. Accumulated errors: we compute the Du measure for each row (i.e. each single histogram) and accumulate the distances over all rows.
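The difference between the two fusion strategies can be sketched as follows. Since the Du measure is not defined in this paper, the sketch takes the distance as a parameter and uses the Euclidean distance purely as a stand-in; all function names are hypothetical.

```python
import math


def euclidean(a, b):
    """Placeholder distance; NOT the Du measure used in the paper."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def concatenated_distance(sig1, sig2, dist=euclidean):
    """Strategy 1: flatten all row histograms, compare once."""
    flat1 = [v for hist in sig1 for v in hist]
    flat2 = [v for hist in sig2 for v in hist]
    return dist(flat1, flat2)


def accumulated_distance(sig1, sig2, dist=euclidean):
    """Strategy 2: compare row by row and sum the distances."""
    return sum(dist(h1, h2) for h1, h2 in zip(sig1, sig2))


a = [[3, 0], [0, 0]]
b = [[0, 0], [0, 4]]
print(concatenated_distance(a, b))  # 5.0
print(accumulated_distance(a, b))   # 7.0
```

The two strategies give different values whenever the distance is not additive over rows, as with the Euclidean stand-in here; for an additive distance such as the L1 norm they would coincide.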

The iris data close to the pupil are often said to be more distinctive than the “outer” data. Therefore we propose to apply a weighting factor > 1 to the innermost row, a factor of 1 to the outermost row, and to derive the weights of the remaining rows by linear interpolation. These weights are applied to the “accumulated errors” fusion strategy by simply multiplying the distance obtained for each row by the corresponding weight.
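The weighting scheme can be illustrated with a short Python sketch. It assumes that row 0 is the innermost row (closest to the pupil) and that the per-row distances have already been computed; the names `linear_weights` and `weighted_accumulation` are hypothetical.

```python
def linear_weights(n_rows, inner_weight):
    """Weights falling linearly from inner_weight (row 0) to 1 (last row)."""
    if n_rows == 1:
        return [float(inner_weight)]
    step = (1.0 - inner_weight) / (n_rows - 1)
    return [inner_weight + step * i for i in range(n_rows)]


def weighted_accumulation(row_distances, weights):
    """Accumulated errors fusion with one weight per row."""
    return sum(w * d for w, d in zip(weights, row_distances))


weights = linear_weights(5, 4)
print(weights)                                      # [4.0, 3.25, 2.5, 1.75, 1.0]
print(weighted_accumulation([1, 1, 1, 1, 1], weights))  # 12.5
```

With an inner weight of 1 all weights equal 1, which reduces the scheme to the unweighted accumulated errors strategy.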

3 EXPERIMENTAL STUDY

3.1 Setting and Methods

For all our experiments we considered images with 8-bit grayscale information per pixel from the CASIA¹ v1.0 iris image database. We performed the experiments on the images of 108 persons in the CASIA database, using 7 iris images of each person, all of which have been cropped to a size of 280 × 280 pixels.

The employed iris recognition system builds upon Libor Masek’s MATLAB implementation² of a 1D version of the Daugman iris recognition algorithm. First, this algorithm segments the eye image into the iris and the remainder of the image (“iris detection”). Subsequently, the iris texture is converted into a polar iris image. Additionally, a noise mask is generated indicating areas in the polar iris image which originate from eyelids or other non-iris texture noise.

Our MATLAB implementation uses the extracted polar iris image (360 × 65 pixels) for further processing and applies the LTP algorithm to it. Following the suggestion in (Du et al., 2006), we discard the upper and lower three lines of the LTP polar image due to noise often present in these parts of the data (resulting in a 360 × 59 pixels LTP patch). The 1D and 2D signatures described in the last section are then extracted from these patches.

¹ http://www.sinobiometrics.com

² http://www.csse.uwa.edu.au/~pk/studentprojects/libor/sourcecode.html

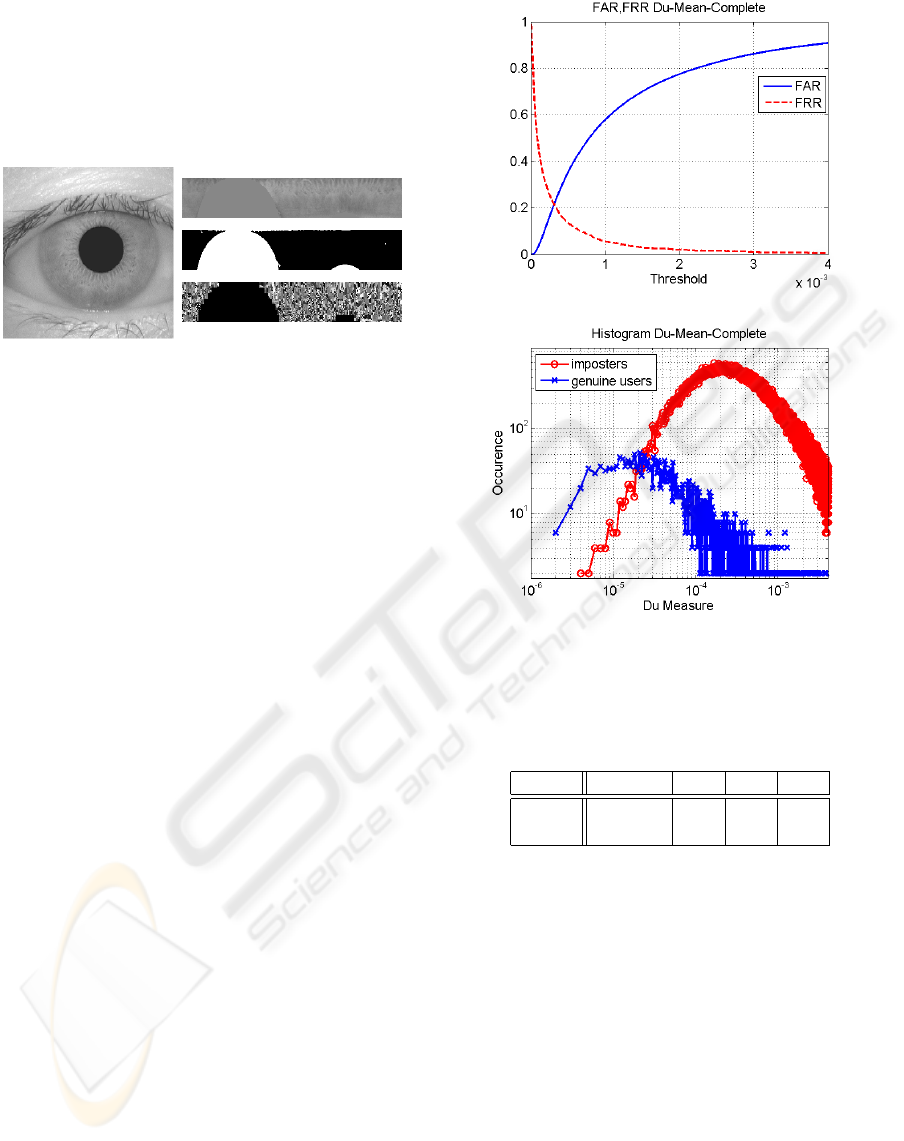

Figure 1: CASIA iris image and the corresponding iris template, noise mask, and LTP patch.

Figure 1 shows an example of an iris image of one person (CASIA database), together with the extracted polar iris image, the noise mask, and the LTP patch (template, noise mask, and LTP patch have been scaled in y-direction by a factor of 4 for proper display).

3.2 Experimental Results

In Figure 2.a, we show the ROC curve of the original version of the Du approach employing 1D signatures based on LTP row mean vectors. The EER is rather high at 0.22, and in particular the concave FAR curve of the Du algorithm exhibits a steep slope close to zero, which means that low FAR values cause unrealistically high FRR. This result illustrates why the algorithm is restricted to the similarity ranking scenario in the original work (Du et al., 2006).

The reasons for this behaviour can be seen in Figure 2.b. The overlap between the genuine user and imposter distributions is very large for the Du approach, obviously causing the high EER.
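To make the link between distribution overlap and EER concrete, the following Python sketch estimates the EER from two lists of distances. It is an illustration only: the distance values are invented, the function name `equal_error_rate` is hypothetical, and a comparison is assumed to be accepted when its distance does not exceed the threshold.

```python
def equal_error_rate(genuine, impostor):
    """Return (EER estimate, threshold) from two lists of distances."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        # FAR: impostor comparisons wrongly accepted at threshold t.
        far = sum(d <= t for d in impostor) / len(impostor)
        # FRR: genuine comparisons wrongly rejected at threshold t.
        frr = sum(d > t for d in genuine) / len(genuine)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


# Invented distances with overlapping distributions.
genuine = [0.1, 0.2, 0.3, 0.6]
impostor = [0.4, 0.5, 0.7, 0.8]
print(equal_error_rate(genuine, impostor))  # (0.25, 0.4)
```

If the two distributions do not overlap at all, a threshold exists at which both error rates are zero, so the estimate drops to 0.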

When turning to 2D signatures, we compare different fusion strategies and histogram resolutions in Table 1 with respect to their EER. While it is obvious that too many histogram bins lead to poor results (important histogram properties are concealed by noise), a reduction to 20 bins also results in a higher EER as compared to 100 bins. When comparing the two fusion strategies, accumulating distances (AD) on a per-row basis is clearly superior to simple histogram concatenation (HC) at a reasonable histogram resolution. In this scenario, we are clearly able to improve the EER as compared to the original Du algorithm (from 0.22 down to 0.16).

(a) ROC-plot: EER 0.22

(b) Genuine users and imposters distributions

Figure 2: Behaviour of the original Du algorithm.

Table 1: EER for two assessment variants and different histogram resolutions (2D signatures).

# bins   1500 450   255    100    20
HC       0.3        0.2    0.18   0.19
AD       0.32       0.16   0.16   0.18

Note also that a histogram resolution of up to 255 bins is beneficial for accumulated errors fusion, while it is not for histogram concatenation. This is an intuitive result, since in the case of histogram concatenation the vectors to be compared by the Du measure are already fairly long overall, while this is not the case for accumulated errors fusion.

Table 2 compares three weighting strategies for the accumulated errors fusion strategy. The best results are obtained when using weight 4 for the LTP row closest to the pupil. This result confirms the assumption that “inner” iris information is most important for recognition purposes.

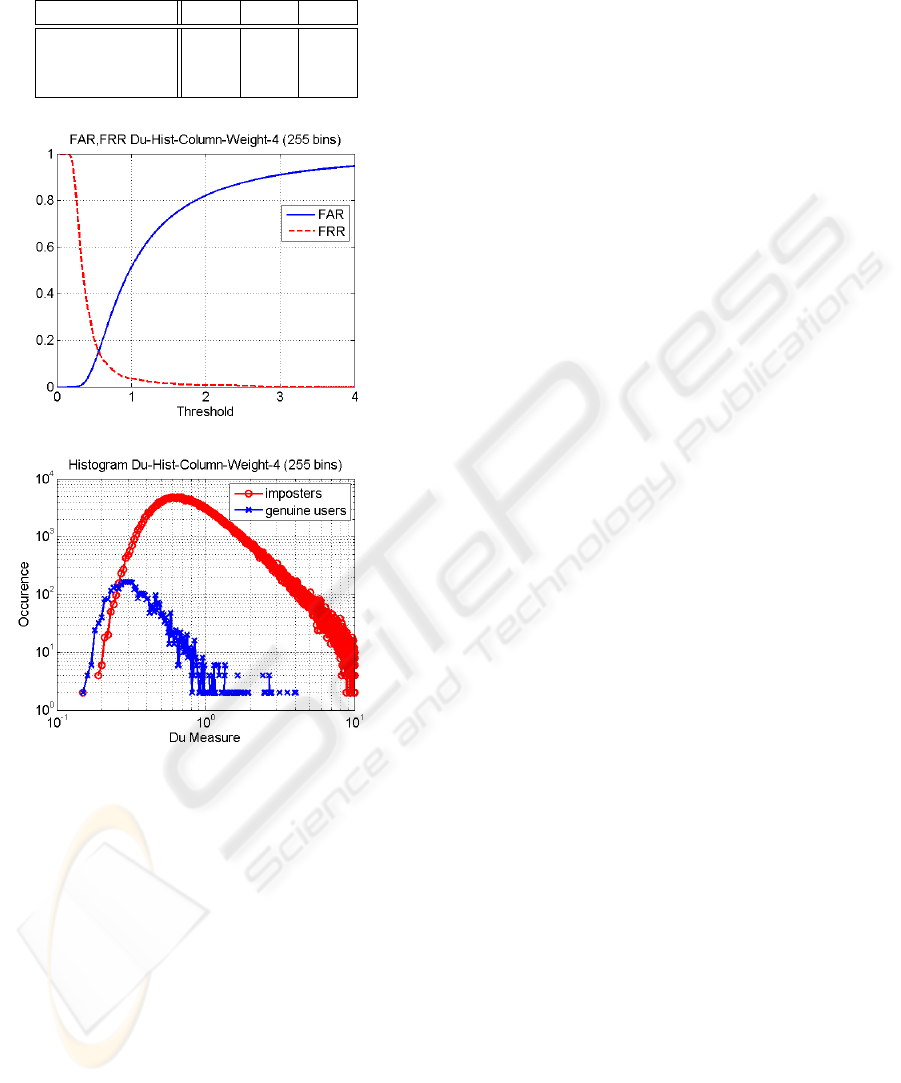

We display the ROC curve for the best setting of the accumulated errors fusion strategy in Figure 3.a. The graph exhibits a much better behaviour of the FAR curve in the proximity of zero as compared to the original one, which also documents the improved behaviour.

ICINCO 2008 - International Conference on Informatics in Control, Automation and Robotics

Table 2: EER for three weighting variants and different histogram resolutions (2D signatures).

histogram bins   255    100    20
no weight        0.16   0.16   0.18
weight 2         0.15   0.15   0.19
weight 4         0.15   0.15   0.16

(a) ROC-plot: EER 0.15

(b) Genuine users and imposters distributions

Figure 3: Behaviour of the best 2D Du variant (accumulated errors, weight 4, 255 bins).

Finally, we visualize the genuine user and imposter distributions of the same 2D variant of the Du algorithm in Figure 3.b, which confirms the improvements with respect to the original algorithm.

4 CONCLUSIONS

In this work we have improved an iris recognition algorithm based on 1D signatures extracted from the spatial domain by including histogram-based information instead of mean values. While we succeeded in maintaining rotation invariance in our improved version, FAR and FRR are still significantly worse compared to state-of-the-art identification techniques, which limits the improved version to use in a similarity ranking scheme, as is the case for the original version.

One reason for the still disappointing behaviour is as follows: when the different rows of the polar iris image are shifted against each other by different amounts, the 2D signatures (as well as the 1D signatures, of course) are preserved. This operation corresponds to the rotation of concentric circles of iris pixels by arbitrary amounts; still, the signatures of all those artificially generated images are identical. Our results indicate that information about the spatial position of frequency fluctuations in iris imagery is indeed crucial for effective recognition.
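This limitation is easy to reproduce in a small Python sketch. As a simplifying assumption, the row shifts are applied directly to the values from which the signatures are computed, and a full value count per row stands in for a histogram with one bin per value; the helper names are hypothetical.

```python
from collections import Counter


def shift_rows(img, shifts):
    """Circularly shift each row by its own amount."""
    return [row[s % len(row):] + row[:s % len(row)]
            for row, s in zip(img, shifts)]


def row_value_counts(img):
    """Per-row value counts (a histogram with one bin per value)."""
    return [Counter(row) for row in img]


original = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 9, 1, 0]]
scrambled = shift_rows(original, [0, 1, 3])

print(scrambled != original)                                      # True
print(row_value_counts(scrambled) == row_value_counts(original))  # True
```

The two patches differ in their spatial layout, yet their row-wise statistics, and hence any signature derived from them, are identical.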

ACKNOWLEDGEMENTS

Most of the work described in this paper has been done in the scope of a semester project in the master program on “Communication Engineering for IT” at Carinthia Tech Institute.

REFERENCES

Daugman, J. (2004). How iris recognition works. IEEE Transactions on Circuits and Systems for Video Technology, 14(1):21–30.

Du, Y., Ives, R., Etter, D., and Welch, T. (2006). Use of one-dimensional iris signatures to rank iris pattern similarities. Optical Engineering, 45(3):037201-1–037201-10.

Ives, R., Guidry, A., and Etter, D. (2004). Iris recognition using histogram analysis. In Conference Record of the 38th Asilomar Conference on Signals, Systems, and Computers, volume 1, pages 562–566. IEEE Signal Processing Society.

Ko, J.-G., Gil, Y.-H., Yoo, J.-H., and Chung, K.-I. (2007). A novel and efficient feature extraction method for iris recognition. ETRI Journal, 29(3):399–401.

Ma, L., Tan, T., Wang, Y., and Zhang, D. (2004). Efficient iris recognition by characterizing key local variations. IEEE Transactions on Image Processing, 13:739–750.

Zhu, Y., Tan, T., and Wang, Y. (2000). Biometric personal identification based on iris patterns. In Proceedings of the 15th International Conference on Pattern Recognition (ICPR’00), volume 2, pages 2801–2804. IEEE Computer Society.
