Image Feature Significance for Self-position Estimation with Variable Processing Time

Kae Doki, Manabu Tanabe, Akihiro Torii and Akiteru Ueda

Dept. of Mechanical Engineering, Aichi Institute of Technology

1247 Yachigusa, Yakusa-cho, Toyota, Aichi, Japan

Abstract. We have been studying an action planning method for an autonomous mobile robot based on real-time search. In action planning based on real-time search, the time for sensing and the time for action planning must be balanced in order to use the limited computational resources efficiently. We have therefore studied sensing methods whose processing time is variable, and have constructed a self-position estimation system with variable processing time as an example of such sensing. In this paper, we propose a self-position estimation method for an autonomous mobile robot based on image feature significance. In this method, the processing time for self-position estimation is varied by changing the number of image features used, according to their significance. To realize this, we formulate concepts of the significance of image features and examine three equations, each of which expresses the significance of an image feature.

1 Introduction

An autonomous mobile robot is one of the most interesting targets in the field of robotics. Such robots are expected to be used not only in industry but also in everyday settings such as offices, hospitals and homes. Among the various problems concerning autonomous mobile robots, we have focused on action planning methods based on real-time search [1][2]. In action planning with real-time search, a robot action is acquired on the spot through recognition of the current situation and a search for an action. The computational resources available for action planning are therefore limited, and the situation around the robot changes from moment to moment in the dynamic environment where it moves. Under these circumstances, the time for recognition and the time for action search must be balanced according to the situation around the robot in order to use the limited computational resources efficiently. This idea is based on Anytime Sensing, proposed by S. Zilberstein et al. [3], and is one of the crucial features of action planning with real-time search. As an example of recognition, we deal with the self-position estimation problem for a robot with vision, and construct a self-position estimation system with variable processing time based on the above idea.

In this system, it is assumed that the robot estimates its current position by matching an acquired input image with stored images, each of which indicates a certain position in the environment. The normalized correlation coefficient [7] is applied as the criterion for the template matching. In order to use the limited computational resources efficiently, only the image features in a stored image are used in the self-position estimation process. Moreover, the processing time for the self-position estimation is varied by changing the number of image features used for the template matching. In this case, it is important that the number of image features is varied according to their priority so that the performance of the self-position estimation does not deteriorate [5][6]. To realize this idea, it is essential to decide how important each image feature is for the self-position estimation. By changing the number of image features based on their importance, stable self-position estimation can be realized even if the time for the self-position estimation is shortened. For these reasons, a new self-position estimation method with variable processing time, based on image feature significance, is proposed in this research. In this paper, concepts of image feature importance are formulated, and three types of equations are defined as indicators of the significance of image features. These equations are compared through experimental results.

Doki K., Tanabe M., Torii A. and Ueda A. (2009).
Image Feature Significance for Self-position Estimation with Variable Processing Time.
In Proceedings of the 5th International Workshop on Artificial Neural Networks and Intelligent Information Processing, pages 134-142
DOI: 10.5220/0002261001340142
Copyright © SciTePress

Fig.1. Self-position Estimation System.

2 Self-Position Estimation with Variable Processing Time

2.1 Self-position Estimation System

As the framework of the self-position estimation problem, the robot moves in an indoor environment and estimates which key location it is near. In preparation for the self-position estimation, the robot obtains images at the key locations, and image templates are generated from them. At estimation time, an input image is obtained and preprocessed with noise reduction and gray-scale conversion. The input image is then matched against the stored image templates, and the estimation result is output. In the case of Fig.1(a), the similarity is highest for position B, so position B is output as the estimation result. In this paper, the normalized correlation coefficient (NCC) is applied as the criterion for the similarity.


Fig.2. Generation Method of Image Template.

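$$
R = \frac{\sum_{x}^{M} \sum_{y}^{N} \{f(x, y) - \bar{f}\} \cdot \{g(x, y) - \bar{g}\}}{\sqrt{\sum_{x}^{M} \sum_{y}^{N} \{f(x, y) - \bar{f}\}^{2}} \, \sqrt{\sum_{x}^{M} \sum_{y}^{N} \{g(x, y) - \bar{g}\}^{2}}} \qquad (1)
$$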

Eq.(1) gives the normalized correlation coefficient between two images F and G. Both are gray-scale images of size M × N. The values f(x, y) and g(x, y) are the brightness of the pixel at the coordinate (x, y) in images F and G, and \bar{f} and \bar{g} are the mean values of f(x, y) and g(x, y), respectively. When f(x, y) is entirely identical to g(x, y), the value of R is 1.0. On the other hand, when the brightness pattern of f(x, y) is completely inverted relative to g(x, y), R is -1.0. Here, it is assumed that f(x, y) is an input image obtained at the current position of the robot, and that g(x, y) is an image template stored in the robot. Through image template matching with this equation, the robot is estimated to be near the position indicated by the image template with the highest value of R among the stored image templates.
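As a minimal sketch of Eq.(1) and of the "highest R wins" rule described above, the following pure-Python code may help. The function names `ncc` and `estimate_position`, the nested-list image representation, and the choice to return 0.0 for a flat (zero-variance) image are assumptions made for illustration; they are not taken from the authors' system.

```python
import math

def ncc(f, g):
    """Normalized correlation coefficient (Eq. 1) of two equal-sized gray images.

    f and g are lists of rows of brightness values (hypothetical representation).
    """
    fv = [p for row in f for p in row]
    gv = [p for row in g for p in row]
    if len(fv) != len(gv):
        raise ValueError("images must have the same size")
    f_mean = sum(fv) / len(fv)
    g_mean = sum(gv) / len(gv)
    num = sum((a - f_mean) * (b - g_mean) for a, b in zip(fv, gv))
    den = (math.sqrt(sum((a - f_mean) ** 2 for a in fv))
           * math.sqrt(sum((b - g_mean) ** 2 for b in gv)))
    # R is undefined for a flat image (zero variance); returning 0.0 is an assumption.
    return num / den if den > 0 else 0.0

def estimate_position(input_image, templates):
    """Return the label of the template with the highest R, plus all scores."""
    scores = {label: ncc(input_image, tmpl) for label, tmpl in templates.items()}
    return max(scores, key=scores.get), scores

# Toy 2x2 images; the values are illustrative only.
img = [[10, 20], [30, 40]]
templates = {"A": [[40, 30], [20, 10]], "B": [[11, 19], [31, 42]]}
best, scores = estimate_position(img, templates)
print(best, scores)
```

The cost of `ncc` grows linearly with the number of pixels, which is the property the following section relies on when it varies the processing time.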

2.2 Changing Method of Processing Time

Image template matching occupies most of the processing time for the self-position estimation in this system. This is because NCC is a pixel-based calculation that requires considerable computational resources. According to Eq.(1), the amount of computation for calculating R is proportional to the number of pixels. Therefore, only image features are used for the self-position estimation, and the number of image features is changed in order to change the processing time.

The image features are extracted as rectangular areas from a stored image through image processing. A set of extracted rectangles is called an image template in this paper, and the template generation method is described in the next section. The similarity between an input image and an image template is the average of the NCC values over all rectangular areas used for the self-position estimation.
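The averaging rule above can be sketched as follows. This is an illustration under stated assumptions: each rectangle is an `(x, y, width, height)` tuple, each rectangle of the stored image is compared with the same region of the input image (the text does not say whether a search over positions is performed), and the names `crop`, `ncc` and `template_similarity` are hypothetical.

```python
import math

def crop(image, rect):
    """Cut the rectangle (x, y, width, height) out of a list-of-rows image."""
    x, y, w, h = rect
    return [row[x:x + w] for row in image[y:y + h]]

def ncc(f, g):
    """Normalized correlation coefficient of two equal-sized patches (Eq. 1)."""
    fv = [p for row in f for p in row]
    gv = [p for row in g for p in row]
    fm, gm = sum(fv) / len(fv), sum(gv) / len(gv)
    num = sum((a - fm) * (b - gm) for a, b in zip(fv, gv))
    den = (math.sqrt(sum((a - fm) ** 2 for a in fv))
           * math.sqrt(sum((b - gm) ** 2 for b in gv)))
    return num / den if den > 0 else 0.0  # flat patch: 0.0 by assumption

def template_similarity(input_image, stored_image, rects, n_features):
    """Average NCC over the first n_features rectangles of an image template.

    Also returns the number of pixels processed, which is proportional to
    the matching cost.
    """
    used = rects[:n_features]
    if not used:
        raise ValueError("at least one image feature is required")
    scores = [ncc(crop(input_image, r), crop(stored_image, r)) for r in used]
    pixels = sum(w * h for _, _, w, h in used)
    return sum(scores) / len(scores), pixels

# Toy 4x4 image and two 2x2 rectangles; values are illustrative only.
stored = [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
rects = [(0, 0, 2, 2), (2, 2, 2, 2)]
for n in (1, 2):
    print(n, template_similarity(stored, stored, rects, n))
```

Using fewer rectangles reduces the pixel count, and hence the matching time, at the price of basing the similarity on less of the image.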

2.3 Generation Method of Image Templates

Fig.2 shows the generation method of the image template for the self-position estimation. First, feature points in each stored image are extracted with the Harris operator [8], which is widely used for image feature extraction. As Fig.2(a) shows, many feature points are extracted around the distinctive parts of a stored image. These points are therefore grouped with hierarchical clustering, as shown in Fig.2(b). Then, a rectangle that covers each group of feature points is created, as Fig.2(c) shows. These rectangular areas are used as the image features for the self-position estimation.

Fig.3. Image Feature Importance from Viewpoint of Self-position Estimation.

Fig.4. Image Feature Importance from Computational Resources.
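The grouping and rectangle steps of Section 2.3 might be sketched as below, taking the feature points as already extracted (for example by a Harris corner detector, which is not reimplemented here). The text only says "hierarchical clustering"; the single-linkage criterion, the distance threshold `max_gap`, and the function names are assumptions chosen to keep the example short.

```python
import math

def cluster_points(points, max_gap):
    """Single-linkage agglomerative clustering cut at distance max_gap.

    Two points end up in the same group when they are connected by a chain
    of points whose consecutive distances are all <= max_gap.
    """
    parent = list(range(len(points)))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= max_gap:
                parent[find(i)] = find(j)

    groups = {}
    for i, p in enumerate(points):
        groups.setdefault(find(i), []).append(p)
    return list(groups.values())

def bounding_rect(group):
    """Smallest axis-aligned rectangle (x, y, width, height) covering a group."""
    xs = [x for x, _ in group]
    ys = [y for _, y in group]
    return (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)

# Hypothetical feature points forming two well-separated clumps.
points = [(1, 1), (2, 2), (3, 1), (20, 20), (21, 22)]
rects = sorted(bounding_rect(g) for g in cluster_points(points, max_gap=3.0))
print(rects)
```

The threshold controls how many rectangles are produced: a larger `max_gap` merges nearby clumps into fewer, larger image features.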

3 Addition of Significance to Image Features

3.1 Concept of Image Feature Significance

In this system, the processing time for the self-position estimation is varied by changing the number of image features used for the template matching. In this case, image features that are more effective for the self-position estimation should be given preference over the others. For example, when the available processing time is short, only the image features that are crucial to the self-position estimation are used, in order to guarantee the quality of the estimation. On the other hand, when the available processing time is long, more image features are used to make the estimation robust. To realize this idea, it is essential to decide how important each image feature is for the self-position estimation. Therefore, the following three concepts are introduced to define the importance of each image feature for the self-position estimation.

Fig.3 shows the relationship between the NCC values for one image feature and its significance. The horizontal axis is the NCC value between an image feature and an obtained input image, and the vertical axis is its probability. Input images are influenced by the robot motion, so the NCC value changes even for the same image feature. In the self-position estimation system, the current position is estimated as the one indicated by the image template with the highest similarity to the input image. Therefore, for a given image feature, higher NCC values for images obtained at the correct position are better for the self-position estimation. Conversely, if the NCC values for images obtained at wrong positions are also high, the image feature is poor for the self-position estimation even when the NCC value at the correct position is fairly high, as Fig.3(a) shows. Therefore, the difference between the NCC values at the correct and wrong positions is important for the self-position estimation, in addition to the absolute value of NCC at the correct position. Moreover, when the NCC values vary widely over different input images, the distribution of the NCC value at the correct position overlaps the one at the wrong positions, as in Fig.3(b). This overlap causes errors in the self-position estimation. Therefore, the variance of the NCC value should also be considered for stable self-position estimation.

Fig.4 shows the relationship between the size of an image feature and its significance. This concept comes from the viewpoint of computational resources. As mentioned in Section 1, the computational resources are limited in action planning with real-time search, so it is important to use them efficiently. Here, let the two rectangles drawn in Fig.4 stand for image features for the estimation of a certain position. As mentioned in Section 2.2, the amount of computation for the self-position estimation is proportional to the number of pixels of an image feature. Therefore, if the NCC values of these two image features are very similar, as this figure shows, the small image feature is clearly better than the large one. For this reason, the size of an image feature should be considered in the image feature significance.

3.2 Equations of Image Feature Significance

Based on the above concepts, three kinds of equations are formulated as indicators of the significance of image features.

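$$
E_{1} = k_{1} \mu_{c} + \left( \mu_{c} - \max_{j} \mu_{w(j)} \right) \qquad (2)
$$

$$
E_{2} = k_{1} \mu_{c} + \left\{ \left( \mu_{c} - k_{2} \sigma_{c} \right) - \max_{j} \left( \mu_{w(j)} + k_{2} \sigma_{w(j)} \right) \right\} \qquad (3)
$$

$$
E_{3} = E_{2} - k_{3} \frac{S_{p}}{\sum_{q=1}^{h} S_{q}} \qquad (4)
$$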

$\mu_{c}$, $\mu_{w}$ : mean value of NCC of correct or wrong images
$\sigma_{c}$, $\sigma_{w}$ : variance of NCC of correct or wrong images
$j$ : number of positions (index of a wrong position)
$k_{1} \sim k_{3}$ : weight
$S_{p}$ : number of pixels of image features
$h$ : number of image features

Eq.(2) gives the significance of an image feature considering only the mean value of NCC at the correct position and the difference of the NCC values between the correct position and the wrong ones. In this equation, the first term is the absolute value of NCC for input images obtained at the correct position. The second term is the difference of the NCC values between the correct and wrong positions. k_1 is a weight that balances these two terms. The larger the value of E_1, the more important the image feature is for the self-position estimation. Eq.(3) gives the significance of an image feature with the variance of the NCC values at the correct and wrong positions also taken into account. The larger the variance of the NCC values becomes, the smaller the second and third terms become. Eq.(4) gives the image feature significance considering the amount of computation for the self-position estimation in addition to the quality of the estimation. Its second term is the ratio of the number of pixels of the image feature to the total number of pixels of all image features in the template. Therefore, the second term becomes large when the image feature is large, which lowers E_3.
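A minimal sketch of how Eq.(2) to Eq.(4) could be evaluated from sampled NCC values is given below. Several points are assumptions: σ is computed as the population variance because the symbol list calls it a variance (a standard deviation could be substituted), the sample NCC values are invented for illustration and are not experimental data, and the mapping of the weights 0.14, 1.20 and 1.20 to k_1, k_2 and k_3 is one reading of Section 4.1.

```python
from statistics import mean, pvariance

def significance(correct_ncc, wrong_ncc_by_pos, size, total_size, k1, k2, k3):
    """Return (E1, E2, E3) for one image feature.

    correct_ncc      : NCC samples for images taken at the correct position
    wrong_ncc_by_pos : dict mapping each wrong position j to its NCC samples
    size, total_size : pixels of this feature (S_p) and of all features (sum of S_q)
    """
    mu_c = mean(correct_ncc)
    sigma_c = pvariance(correct_ncc)  # "variance" as in the symbol list (assumption)
    # Eq. (2): mean at the correct position plus margin over the best wrong position.
    e1 = k1 * mu_c + (mu_c - max(mean(v) for v in wrong_ncc_by_pos.values()))
    # Eq. (3): the margin is shrunk by the spread on both sides.
    worst_wrong = max(mean(v) + k2 * pvariance(v) for v in wrong_ncc_by_pos.values())
    e2 = k1 * mu_c + ((mu_c - k2 * sigma_c) - worst_wrong)
    # Eq. (4): penalty proportional to the feature's share of all pixels.
    e3 = e2 - k3 * size / total_size
    return e1, e2, e3

# Invented NCC samples for one feature of a hypothetical template.
correct = [0.9, 0.8, 1.0]
wrong = {"B": [0.5, 0.5], "C": [0.2, 0.4]}
e1, e2, e3 = significance(correct, wrong, size=100, total_size=400,
                          k1=0.14, k2=1.20, k3=1.20)
print(round(e1, 3), round(e2, 3), round(e3, 3))
```

Because E_3 subtracts a non-negative size term from E_2, a feature can never rank higher under Eq.(4) through size alone; large features only lose ground relative to small ones.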

4 Experimental Results and Verification

4.1 Experimental Setup

We performed experiments in order to examine the proposed method. First, six image templates were generated, one for each of the positions A to F. The significance value was calculated for each image feature in an image template according to the above equations. The weights in Eq.(2), Eq.(3) and Eq.(4) were 0.14, 1.20 and 1.20, respectively. These parameters were decided experimentally. Then, a real robot performed the self-position estimation with the acquired image templates in a real environment. In this experiment, the number of image features was changed from one to five according to the priority given by each equation of the image feature significance. The self-position estimation was performed a hundred times at each key location.
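The procedure of varying the number of image features by priority can be sketched as follows. The feature names, rectangles and significance values are invented placeholders, not values from the experiment, and `select_features` and `pixel_cost` are hypothetical helper names.

```python
def select_features(features, n):
    """Return the n image features with the highest significance."""
    ranked = sorted(features, key=lambda f: f["significance"], reverse=True)
    return ranked[:n]

def pixel_cost(selected):
    """Total number of pixels to match, proportional to the NCC cost."""
    return sum(f["rect"][2] * f["rect"][3] for f in selected)

# Placeholder features: rect is (x, y, width, height).
features = [
    {"name": "door", "rect": (10, 20, 40, 60), "significance": 0.31},
    {"name": "poster", "rect": (80, 15, 20, 20), "significance": 0.52},
    {"name": "window", "rect": (5, 90, 50, 30), "significance": 0.18},
]

# Increase the number of features step by step, as in the experiment.
for n in range(1, len(features) + 1):
    chosen = select_features(features, n)
    print(n, [f["name"] for f in chosen], pixel_cost(chosen))
```

Ranking once and taking a prefix means that adding processing time only ever adds features, so the result for n features is always a subset of the result for n + 1.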

4.2 Generated Image Templates

Fig.5 shows the image templates generated with the method in Section 2.3. As this figure shows, rectangles that cover the distinctive parts of each stored image were generated as an image template. Fig.6 shows the top five rectangles ranked by each equation in Section 3.2 for the template of position E. Position E is shown because the ranking of the image features in this image template changed characteristically. As this figure shows, unique image features have higher priority in each image template. Moreover, comparing the image features ranked by Eq.(2) with those ranked by Eq.(3), the ranking of some image features changes. This is because the image features ranked lower by Eq.(3) are strongly influenced by the robot motion, so the variance of their NCC values becomes large. In addition, image features with a larger size are ranked lower by Eq.(4) than by Eq.(3). This is because Eq.(4) penalizes larger image features from the viewpoint of computational resources.

4.3 Performance of Self-position Estimation

In order to examine the image feature significance ranked by Eq.(2), Eq.(3) and Eq.(4), a self-position estimation experiment with a real robot was performed. In this experiment, the number of image features was changed according to the priority ranked by each equation, and the self-position estimation was performed with only the selected image features. The robot succeeded in estimating its current position at all positions in all trials. In order to compare the quality of each equation, the relationship between the performance of the self-position estimation and each equation of image feature significance was examined. In addition, the relationship between the processing time and each equation of image feature significance was also examined.

Fig.5. Generated Templates.

Fig.6. Comparison of Image Feature Ranking (Position E).

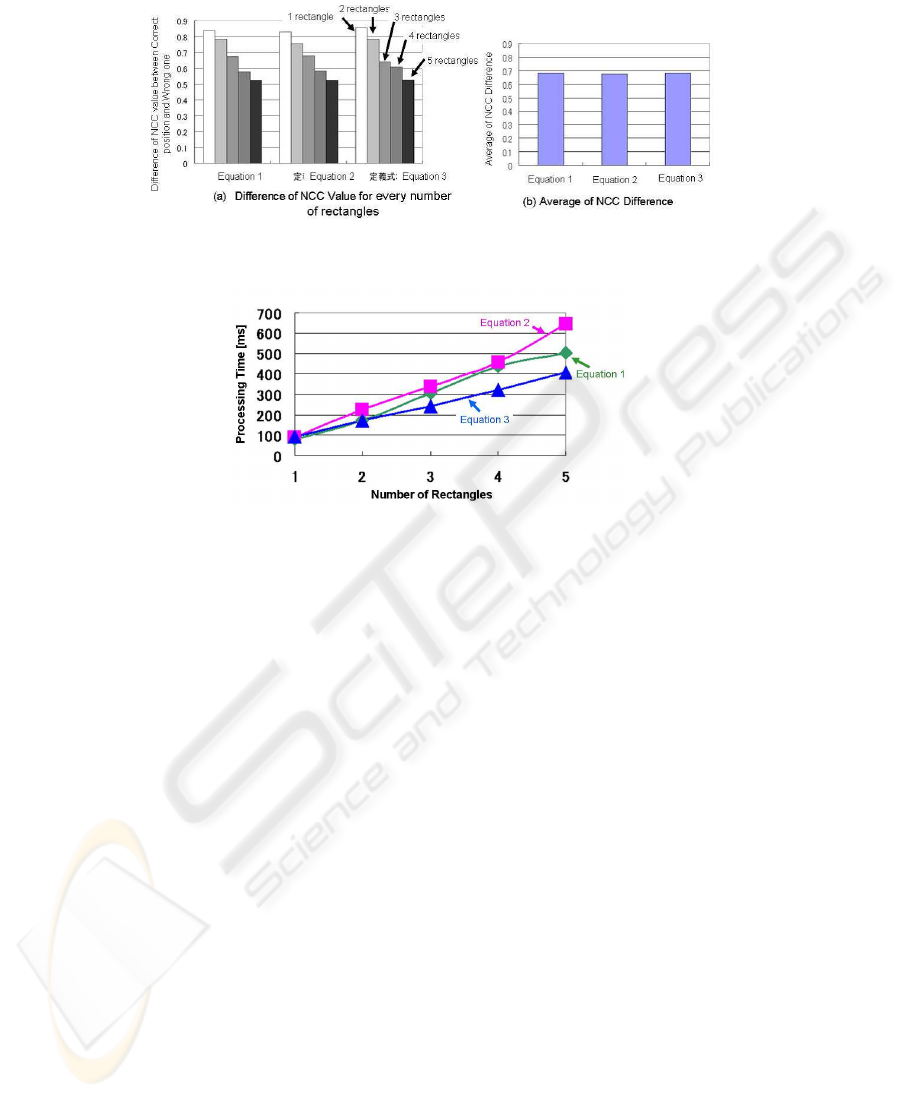

Fig.7 shows the difference of the NCC values between the correct and wrong positions for the image template of position E. The larger this value becomes, the more clearly the image template can distinguish the correct position from the wrong ones. According to this result, the image templates ranked by the three equations have almost the same quality. Fig.8 shows the processing time for the self-position estimation when the number of image features in an image template changes. As Fig.8 shows, the processing time with the image template ranked by Eq.(4) is the shortest among all equations. This is because Eq.(4) considers the computational resources for the self-position estimation. According to these results, Eq.(4) is the best indicator of image feature significance.


Fig.7. Difference of NCC values between the correct and wrong positions for the template.

Fig.8. Processing Time for Self-position Estimation.

5 Conclusions

In this research, a new self-position estimation method with variable processing time was proposed based on image feature significance. It was demonstrated that stable and efficient self-position estimation can be realized with an image template based on image feature significance even if the number of image features is changed. As future work, self-position estimation with omni-directional images will be tackled based on the proposed method. Moreover, how environmental changes influence the performance of the self-position estimation will be examined.

References

1. K. Fujisawa et al.: A real-time search for autonomous mobile robots, J. of the Robotics Society of Japan, Vol.17, No.4, pp.503-512, 1999

2. K. Doki et al.: Environment Representation Method Suitable for Action Acquisition of an Autonomous Mobile Robot, Proc. of International Conference on Computer, Communication and Control Technologies, Vol.5, pp.283-288, 2003

3. S. Zilberstein et al.: Anytime Sensing, Planning and Action: A Practical Model for Robot Control, the ACM magazine, 1996

4. K. Doki et al.: Self-Position Estimation of Autonomous Mobile Robot Considering Variable Processing Time, Proc. of The 11th IASTED International Conference on Artificial Intelligence and Soft Computing, 584-053 (CD-ROM), 2007

5. T. Taketomi, T. Sato, N. Yokoya: Fast and robust camera parameter estimation using a feature landmark database with priorities of landmarks, Technical report of IEICE. PRMU, Vol.107, No.427, pp.281-286, 2008

6. N. Mitsunaga, M. Asada: Visual Attention Control based on Information Criterion for a Legged Mobile Robot that Observes the Environment while Walking, J. of the Robotics Society of Japan, Vol.21, No.7, pp.819-827, 2003

7. Y. Satoh et al.: Robust Image Registration Using Selective Correlation Coefficient, Trans. of IEE Japan, Vol.121-C, No.4, pp.1-8, 2001

8. M. S. Nixon, A. S. Aguado: Feature Extraction and Image Processing (2nd ed.), Academic Press, 2008

9. M. Asada et al.: Purposive Behavior Acquisition for a Robot by Vision-Based Reinforcement Learning, J. of the Robotics Society of Japan, Vol.13, No.1, pp.68-74, 1995

10. H. Koyasu et al.: Recognizing Moving Obstacles for Robot Navigation Using Real-Time Omni-directional Stereo Vision, J. of Robotics and Mechatronics, Vol.14, No.2, pp.147-156, 2002