CHANGING TOPICS OF DIALOGUE FOR NATURAL MIXED-INITIATIVE INTERACTION OF CONVERSATIONAL AGENT BASED ON HUMAN COGNITION AND MEMORY

Sungsoo Lim, Keunhyun Oh and Sung-Bae Cho

Department of Computer Science, Yonsei University, Seoul, Korea

Keywords: Mixed-initiative interaction, Global workspace, Semantic network, Spreading activation theory.

Abstract: Mixed-initiative interaction (MII) plays an important role in conversational agents. In previous MII research, the agent can process only static conversations and cannot change the topic of conversation dynamically, because it depends only on working memory and a predefined methodology. In this paper, we propose a mixed-initiative interaction method based on human cognitive architecture and memory structure. Based on global workspace theory, one of the cognitive architecture models, the proposed method can change the topic of conversation dynamically according to long-term memory, which contains past conversations. We represent long-term memory as a semantic network, a popular representation for storing knowledge in cognitive science, and retrieve information from the semantic network according to spreading activation theory, which has been shown to be efficient for inference in semantic networks. Through several dialogue examples, we show the usability of the proposed method.

1 INTRODUCTION

Conversational agents can be classified into user-initiative, system-initiative, and mixed-initiative agents according to which party plays the leading role in solving problems. In a user-initiative conversational agent, such as a web search engine or a question-answering system, the user takes the leading role in continuing the conversation, requesting the necessary information and services from the agent. In a system-initiative conversational agent, on the other hand, the agent asks users to provide information by answering predefined questions. Although various user-initiative and system-initiative conversational agents have been suggested, these techniques still have significant limitations for efficient problem solving. To overcome these limitations, mixed-initiative interaction has been discussed extensively.

A mixed-initiative conversational agent is defined by a process in which both the user and the system have the initiative and solve problems effectively by continually identifying each other's intentions through mutual interaction when needed (Hong et al., 2007; Tecuci et al., 2007). Macintosh, Ellis, and Allen (2005) showed that mixed-initiative interaction can provide better user satisfaction by comparing a system-based interface with a mixed-initiative interface in ATTAIN (Advanced Traffic and Travel Information System).

The implementation of mixed-initiative interaction has been studied widely. Hong et al. used a hierarchical Bayesian network to embody mixed-initiative interaction (Hong et al., 2007), and Bohus and Rudnicky utilized a dialogue stack (Bohus and Rudnicky, 2009). However, these methods rely on a predefined methodology that depends solely on working memory, so they can process only static conversations and cannot change topics naturally.

In this paper, we focus on the question: how can a conversational agent naturally change the topic of dialogue? We assume that topic changes in human-human dialogue are related to the speakers' own experiences and to the semantics present in the current dialogue.

We apply global workspace theory (GWT) to the process of changing topics. GWT is a simple cognitive architecture that was developed to account qualitatively for a large set of matched pairs of conscious and unconscious processes. From the viewpoint of memory, we define the conscious part as working memory, where the attended topics in working memory are candidates for the next topic, and the unconscious part as long-term memory. Through the broadcasting process of GWT, the most related unconscious process is called and becomes a conscious process, which in this paper means one of the candidates for the next topic. We model the unconscious part (long-term memory) using a semantic network and the broadcasting process using spreading activation theory.

Lim S., Oh K. and Cho S. (2010). CHANGING TOPICS OF DIALOGUE FOR NATURAL MIXED-INITIATIVE INTERACTION OF CONVERSATIONAL AGENT BASED ON HUMAN COGNITION AND MEMORY. In Proceedings of the 2nd International Conference on Agents and Artificial Intelligence - Artificial Intelligence, pages 107-112. DOI: 10.5220/0002733501070112. Copyright © SciTePress

2 RELATED WORKS

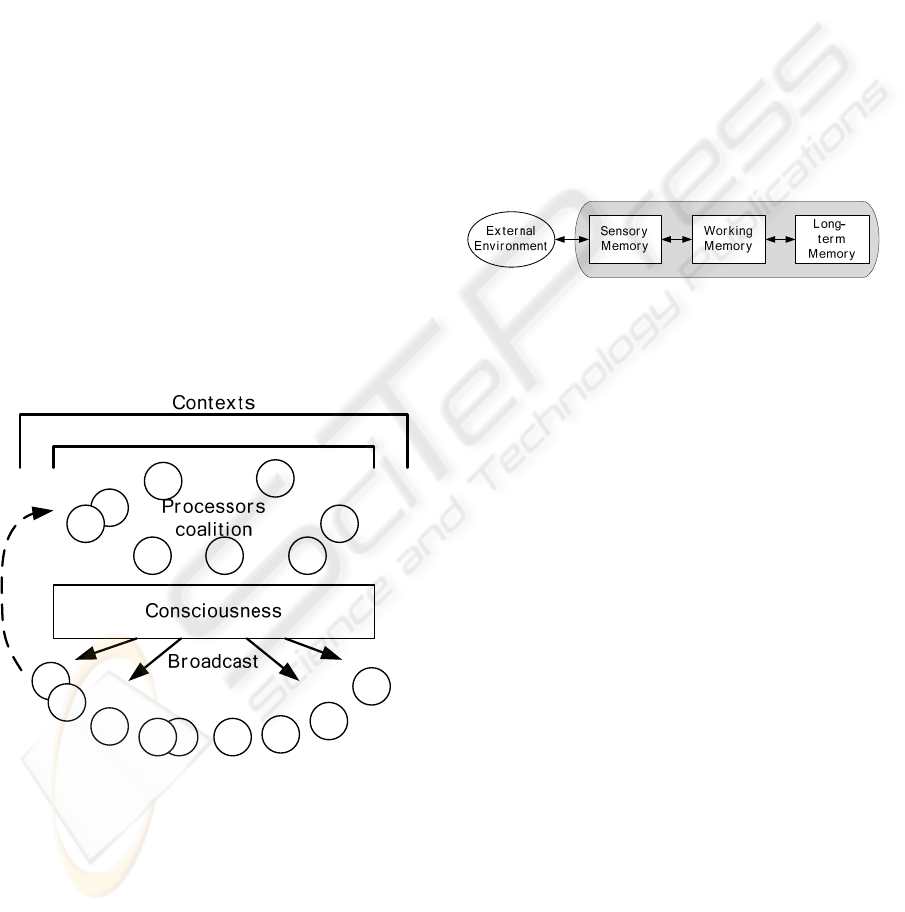

2.1 Global Workspace Theory

Global workspace theory models the human cognitive process of problem solving. As Figure 1 shows, the unconscious area contains independent and different pieces of knowledge, which the theory defines as processors. Simple work such as listening to the radio is possible in the unconscious alone, but complex work is not. Hence, a person calls the necessary processors into consciousness and solves the problem at hand by combining them (Moura, 2006).

Figure 1: Global workspace theory.

An easy way to understand GWT is through a “theatre metaphor”. In the “theatre of consciousness”, a “spotlight of selective attention” shines a bright spot on the stage. The bright spot reveals the contents of consciousness: actors moving in and out, making speeches, or interacting with each other. In this paper, the bright spot can be interpreted as the current topic of dialogue, the dark part of the stage as the candidate topics for the next dialogue, and the area outside the stage as the unconscious part (long-term memory).

2.2 Structure of Human Memory and Semantic Network

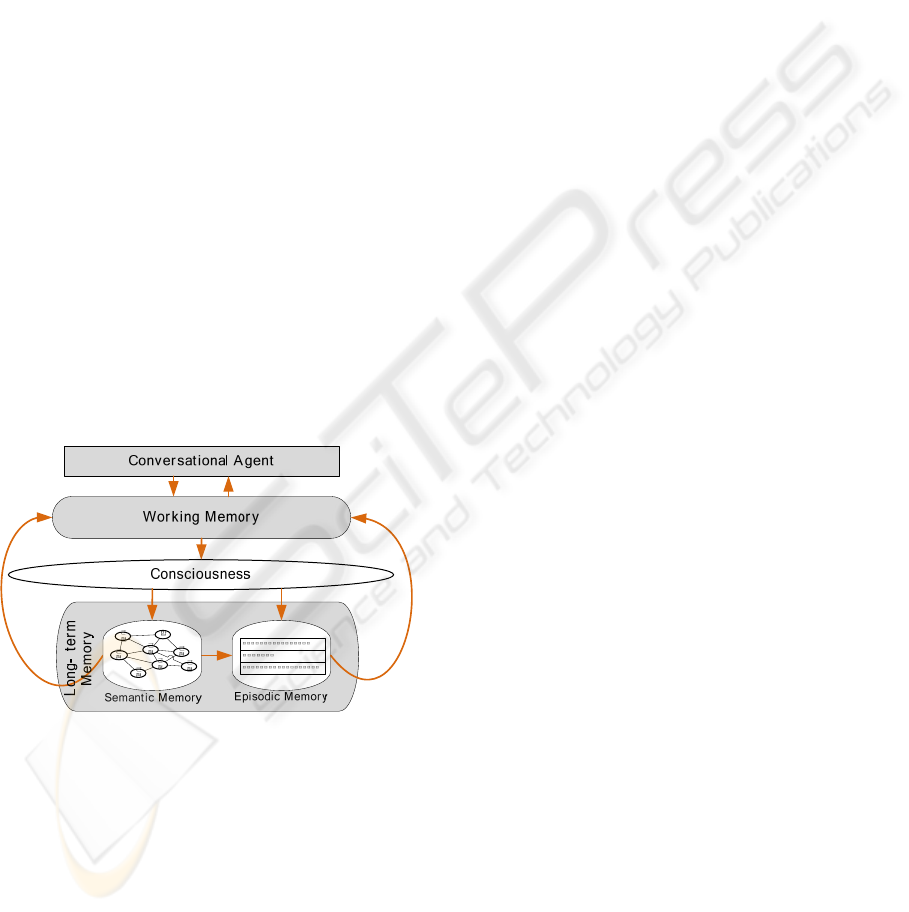

Figure 2 shows the structure of human memory. Sensory memory receives information or stimuli from the outside environment, and working memory solves problems with the received information. Working memory cannot hold the received information for a long time, since it stores information only while the present information is being processed. Hence, working memory retrieves the necessary information from long-term memory when additional information is needed (Atkinson and Shiffrin, 1968).

Figure 2: Human memory structure.

Human long-term memory can be classified into non-declarative memory, which cannot be described in language, and declarative memory, which can. In the conversational agent, we deal with declarative memory and construct it in long-term memory. Declarative memory is divided into semantic memory and episodic memory. Semantic memory holds independent data that contain only relationships, and episodic memory stores data related to a particular event (Squire and Zola-Morgan, 1991).

In this research, for conversation in the target domain, we store the needed keywords in semantic memory and the records of past conversations in episodic memory, and express this declarative memory as a semantic network. In cognitive science, the semantic network is a popular representation for storing knowledge in long-term memory (Anderson, 1976). A semantic network is a directed graph consisting of nodes connected by edges: each node represents a concept, and each edge represents the relationship between the concepts its nodes denote. Semantic networks are mainly used as a form of knowledge representation, and they are simple, natural, clear, and significant (Sowa, 1992). We use the semantic network to measure the relationship between the keywords produced during the present conversation and past conversations, and to generate system-initiative interaction when a past conversation with a significant level of relationship is discovered.

ICAART 2010 - 2nd International Conference on Agents and Artificial Intelligence

2.3 Spreading Activation Theory

Spreading activation serves as a fundamental retrieval mechanism across a wide variety of cognitive tasks (Anderson, 1983), and it can be applied as a method for searching semantic networks. In spreading activation theory, the activation value of each node spreads to its neighbouring nodes. The search process first labels a set of source nodes (e.g. concepts in a semantic network) with weights or activation values, and then iteratively propagates, or spreads, that activation out to other nodes linked to the source nodes.
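To make the propagation step concrete, the following is a minimal Python sketch of generic, pulse-based spreading activation. It is not the authors' search procedure; the decay factor, firing threshold, number of pulses, and the rule that a node keeps the larger of its previous and newly received activation (instead of accumulating it across pulses) are all assumptions chosen for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # node -> [(neighbour, edge weight)]


def spread(graph: Graph, sources: Dict[str, float], decay: float = 0.8,
           firing_threshold: float = 0.1, pulses: int = 3) -> Dict[str, float]:
    """Generic pulse-based spreading activation (illustrative sketch).

    graph   -- node -> list of (neighbour, edge weight)
    sources -- source node -> initial activation value
    decay, firing_threshold and pulses are assumed tuning parameters.
    """
    activation = defaultdict(float, sources)
    for _ in range(pulses):
        incoming = defaultdict(float)
        for node, value in activation.items():
            if value < firing_threshold:
                continue  # weakly activated nodes do not fire
            for neighbour, weight in graph.get(node, []):
                # each firing node passes on a decayed, weighted share
                incoming[neighbour] += value * weight * decay
        for node, value in incoming.items():
            # keep the larger of the old and new value rather than summing
            activation[node] = max(activation[node], value)
    return dict(activation)
```

For example, with `graph = {"dinner": [("Gina", 0.9)], "Gina": [("episode1", 0.8)]}` and `sources = {"dinner": 1.0}`, activation reaches `episode1` only from the second pulse on, because it is two links away from the source.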

3 CHANGING TOPICS IN CONVERSATIONAL AGENT

3.1 System Overview

In this paper, we propose a method for changing the topic of dialogue in a conversational agent. Figure 3 presents an overview of the proposed method, which adapts the human memory structure and global workspace theory.

Figure 3: Overview of proposed method.

Working memory stores the information needed to process the present conversation and the candidates for the next topic of dialogue. Long-term memory is composed of semantic memory and episodic memory. Semantic memory expresses the relationships between important keywords in the conversation, and episodic memory stores past conversations that were not completed. The proposed method represents these two types of memory as a semantic network.

The conversational agent processes the current dialogue using the information in working memory. When the current dialogue ends, it selects candidates for the next topic from long-term memory by broadcasting, which is implemented with spreading activation. Topics whose activation value exceeds a threshold are called and become candidates for the next topic. Finally, the conversational agent selects the most appropriate topic from the candidates.
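The overview above can be sketched as a small working-memory object. This is an illustration under stated assumptions, not the authors' code: `Workspace`, `broadcast`, and `next_topic` are hypothetical names, the activation values are assumed to come from a spreading-activation search such as the one in Figure 5, and the "most appropriate" topic is approximated by the most activated one, since the selection criterion is not specified.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Workspace:
    """Hypothetical working memory: the current topic plus candidate next topics."""
    current_topic: Optional[str] = None
    candidates: Dict[str, float] = field(default_factory=dict)

    def broadcast(self, activations: Dict[str, float], threshold: float) -> List[str]:
        """Call long-term-memory topics whose activation exceeds the threshold."""
        called = [topic for topic, a in activations.items() if a > threshold]
        for topic in called:
            self.candidates[topic] = activations[topic]
        return called

    def next_topic(self) -> Optional[str]:
        """Promote the most activated candidate to the current topic (assumed rule)."""
        if not self.candidates:
            return None
        best = max(self.candidates, key=self.candidates.get)
        del self.candidates[best]
        self.current_topic = best
        return best
```

Keeping candidates in a dictionary of activations lets later broadcasts refresh a topic's score without duplicating it.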

3.2 Conversational Agent

In this paper, we adapt a conversational agent built with CAML (Conversational Agent Markup Language) to our experiment (Lim and Cho, 2007). CAML was designed to reduce the effort of system construction when applying a conversational interface to a particular domain. It makes the conversational interface easy to set up: developers build the necessary domain-service functions and design conversation scripts without modifying the source code of the conversational agent. The agent using CAML works as follows.

1) Analyze the user's input and choose the scripts to handle it.

2) Confirm the factors necessary to offer the service provided by the chosen scripts. (If information about a factor is missing, the system asks the user for it.)

3) Provide the service.
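The three steps can be sketched for a single script as follows. The sketch assumes that a script is chosen by counting trigger keywords and that it declares the factors it needs; `Script`, `choose_script`, and `handle_turn` are hypothetical and do not reproduce CAML's actual markup or interface.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Script:
    """Hypothetical script: trigger keywords, required factors and a service."""
    name: str
    keywords: List[str]
    factors: List[str]
    service: Callable[[Dict[str, str]], str]


def choose_script(utterance: str, scripts: List[Script]) -> Optional[Script]:
    """Step 1: pick the script whose keywords occur most often (assumed rule)."""
    words = [w.strip(".,!?") for w in utterance.lower().split()]
    best, best_score = None, 0
    for script in scripts:
        score = sum(k.lower() in words for k in script.keywords)
        if score > best_score:
            best, best_score = script, score
    return best


def handle_turn(script: Script, known: Dict[str, str]) -> str:
    """Steps 2 and 3: ask for a missing factor, otherwise provide the service."""
    for factor in script.factors:
        if factor not in known:
            return f"Please tell me the {factor}."
    return script.service(known)
```

Asking for one missing factor per turn mirrors the question-by-question pattern of the dialogue examples in Section 4.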

This agent uses both a stack and a queue of conversation topics to manage the flow of conversation, and can respond differently to identical input depending on the current situation. Because system-initiative conversation with working memory is already implemented, we focus on system-initiative conversation using long-term memory.
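How the stack and the queue divide the work is not spelled out here, so the following sketch encodes one plausible reading as an explicit assumption: a topic that interrupts the conversation is pushed onto a stack so that the interrupted topic resumes once it is finished, while topics that can wait are appended to a first-in, first-out queue. `TopicManager` and its methods are hypothetical names.

```python
from collections import deque
from typing import Deque, List, Optional


class TopicManager:
    """Hypothetical topic manager combining a stack and a FIFO queue."""

    def __init__(self) -> None:
        self.stack: List[str] = []        # open topics; the top is the current one
        self.queue: Deque[str] = deque()  # topics deferred until the stack empties

    def interrupt(self, topic: str) -> None:
        """Switch to a topic now; the interrupted topic resumes afterwards."""
        self.stack.append(topic)

    def defer(self, topic: str) -> None:
        """Schedule a topic for after all currently open topics."""
        self.queue.append(topic)

    def current(self) -> Optional[str]:
        return self.stack[-1] if self.stack else None

    def finish_current(self) -> Optional[str]:
        """Close the current topic and return the topic to continue with."""
        if self.stack:
            self.stack.pop()
        if not self.stack and self.queue:
            self.stack.append(self.queue.popleft())
        return self.current()
```

Under this reading, in the fourth dialogue example of Section 4 the question about the dinner location would be pushed on top of the study-scheduling topic, which resumes once the location is settled.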

3.3 Semantic Networks and Spreading Activation

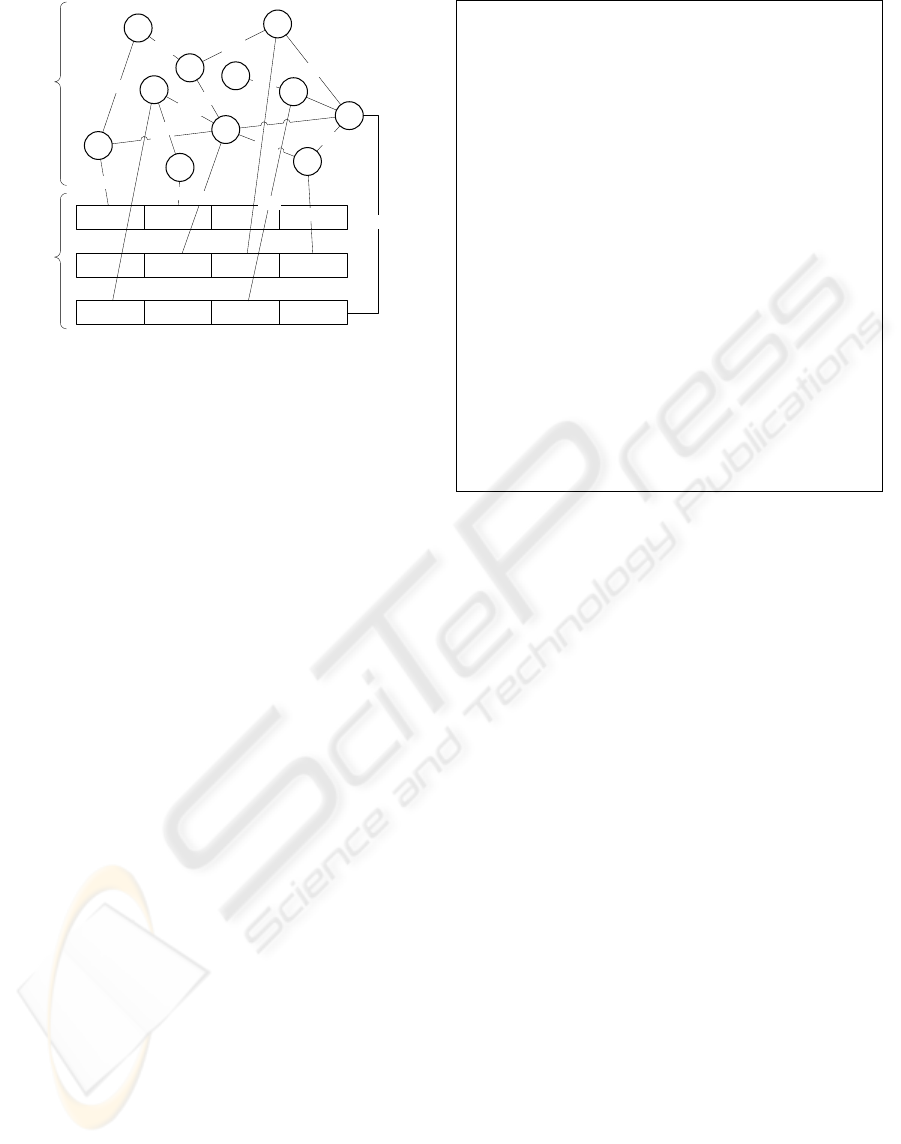

Figure 4 shows the structure of the semantic network used in this research. The internal nodes of the network are semantic memories, the leaf nodes are episodic memories, and the edges represent the strength of the connections.

[Figure 4: Semantic networks. Internal nodes form the semantic memory and leaf nodes the episodic memory; each edge is labelled with a connection weight.]

Formally, a semantic network model can be defined as a structure $N = (C, R, \delta, W)$ consisting of

• a set of concepts $C = \{C_s, C_e\}$, which represent the semantics of keywords and episodic memories, respectively;

• a set of semantic relations $R = \{R_{s \to s}, R_{s \to e}\}$, which represent the semantic relations between keyword and keyword and between keyword and episodic memory, respectively;

• a function $\delta : C \times C \to R$, which associates a pair of concepts with a particular semantic relation;

• a set of weight functions $W = \{W_c(c_x), W_r(c_x, c_y)\}$, which assign weights to concepts and relations, respectively.
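The structure $N = (C, R, \delta, W)$ maps naturally onto a small data type. In this sketch (hypothetical names, not the authors' implementation), $\delta$ is derived from the kinds of the two concepts, edges are directed from keyword to keyword or from keyword to episode, and an episode-to-episode link is rejected because $R$ contains no relation for it. Setting $W_c$ to 1 for keywords and to the episode's priority for episodes follows the paper's definition of $W_c$.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

SEMANTIC, EPISODIC = "C_s", "C_e"     # the two concept sets in C
R_SS, R_SE = "R_s->s", "R_s->e"       # the two relation types in R


@dataclass
class SemanticNetwork:
    """Sketch of N = (C, R, delta, W); all names are hypothetical."""
    kinds: Dict[str, str] = field(default_factory=dict)              # concept -> C_s / C_e
    w_c: Dict[str, float] = field(default_factory=dict)              # W_c(c_x)
    w_r: Dict[Tuple[str, str], float] = field(default_factory=dict)  # W_r(c_x, c_y)

    def add_keyword(self, name: str) -> None:
        self.kinds[name] = SEMANTIC
        self.w_c[name] = 1.0              # W_c is 1 for keywords

    def add_episode(self, name: str, priority: float) -> None:
        self.kinds[name] = EPISODIC
        self.w_c[name] = priority         # W_c is the priority for episodes

    def delta(self, x: str, y: str) -> str:
        """delta: C x C -> R, decided by the kinds of the two concepts."""
        pair = (self.kinds[x], self.kinds[y])
        if pair == (SEMANTIC, SEMANTIC):
            return R_SS
        if pair == (SEMANTIC, EPISODIC):
            return R_SE
        raise ValueError(f"R has no relation for {pair}")

    def link(self, x: str, y: str, weight: float) -> str:
        """Add a directed, weighted edge x -> y and return its relation type."""
        relation = self.delta(x, y)       # reject pairs that R cannot relate
        self.w_r[(x, y)] = weight
        return relation

    def neighbours(self, x: str) -> List[Tuple[str, float]]:
        return [(y, w) for (a, y), w in self.w_r.items() if a == x]
```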

The past conversations stored in episodic memory $C_e$ are brought up to working memory by broadcasting, which we customize by applying spreading activation theory. The network is searched with a breadth-first search (BFS) algorithm using a priority queue, which calculates the level of relationship between each node and the working memory as the search moves from one node to the next. Finally, the level of relationship of each episodic memory (a leaf node) is calculated, and if it exceeds a certain level, the corresponding information is brought up to working memory.

Figure 5 shows the pseudocode of the semantic network search process. In the first stage of spreading activation, the initial semantics are the keywords that appear in the current dialogue, and their activation values are set to 1. The activation values are then spread through the semantic relations, with each spread value calculated as follows:

$$c_y.\mathrm{value} = c_x.\mathrm{value} \cdot W_r(c_x, c_y) \cdot W_c(c_y)$$
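As a worked example with illustrative weights (not read off Figure 4), take a keyword $c_1$ from the current dialogue, a related keyword $c_2$ with $W_r(c_1, c_2) = 0.9$, and an episode $e$ with $W_r(c_2, e) = 0.85$ and priority 0.8. Since $W_c$ is 1 for keywords and equals the priority for episodes:

$$
\begin{aligned}
c_1.\mathrm{value} &= 1 \\
c_2.\mathrm{value} &= c_1.\mathrm{value} \cdot W_r(c_1, c_2) \cdot W_c(c_2) = 1 \cdot 0.9 \cdot 1 = 0.9 \\
e.\mathrm{value} &= c_2.\mathrm{value} \cdot W_r(c_2, e) \cdot W_c(e) = 0.9 \cdot 0.85 \cdot 0.8 = 0.612
\end{aligned}
$$

Because every weight here is below 1, activation can only shrink along a path, so an episode reached through a long chain of weak relations ends up with less activation than one linked closely to the current keywords.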

procedure SpreadingActivation
input:  C_s   // a set of semantics in working memory
output: E     // a set of episodic memories
begin
    Q.clear()                      // Q = priority queue
    for each c_x in C_s
        c_x.value = 1              // keywords start with activation 1
        Q.push(c_x)
    end for
    while Q is not empty
        c_x = Q.pop()
        for each concept c_y linked with c_x
            c_y.value = c_x.value * W_r(c_x, c_y) * W_c(c_y)
            if c_y.value < threshold1 then continue
            if isVisitedConcept(c_y) is false then Q.push(c_y)
            if c_y is episodic memory then E.insert(c_y)
        end for
    end while
end proc

Figure 5: Pseudo code for Spreading Activation.

The weight function $W_c(c_x)$ returns 1 when $c_x \in C_s$, and returns the priority of the episodic memory when $c_x \in C_e$.
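A runnable Python rendering of Figure 5 is sketched below. Several details are not fixed by the pseudocode and are filled in here as assumptions: the graph is a dictionary of weighted out-edges, the priority queue pops the most activated concept first, a concept reached along several paths keeps its highest activation rather than the last value computed, and each episode is reported once. `spreading_activation`, `priority`, and the toy data in the usage are illustrative names and values.

```python
import heapq
from typing import Dict, Iterable, List, Tuple


def spreading_activation(
    sources: Iterable[str],
    edges: Dict[str, List[Tuple[str, float]]],
    priority: Dict[str, float],
    threshold1: float,
) -> Dict[str, float]:
    """Return {episode: activation} for episodes reached with activation >= threshold1.

    sources  -- keywords of the current dialogue (C_s in working memory)
    edges    -- c_x -> [(c_y, W_r(c_x, c_y)), ...]
    priority -- episode -> priority; W_c(c) is priority[c] for episodes, else 1
    """
    def w_c(c: str) -> float:
        return priority.get(c, 1.0)

    best: Dict[str, float] = {c: 1.0 for c in sources}  # initial activation is 1
    heap = [(-v, c) for c, v in best.items()]           # negated: heapq is a min-heap
    heapq.heapify(heap)
    expanded = set()
    found: Dict[str, float] = {}

    while heap:
        _, c_x = heapq.heappop(heap)
        if c_x in expanded:
            continue                 # stale entry; c_x was already expanded
        expanded.add(c_x)
        for c_y, w_r in edges.get(c_x, []):
            value = best[c_x] * w_r * w_c(c_y)
            if value < threshold1 or value <= best.get(c_y, 0.0):
                continue             # too weak to spread, or no improvement
            best[c_y] = value
            heapq.heappush(heap, (-value, c_y))
            if c_y in priority:      # c_y is an episodic memory
                found[c_y] = value
    return found
```

The max-activation choice makes the search behave like Dijkstra's algorithm: as long as all weights are at most 1, activation never increases along a path, so the first time a concept is expanded it already holds its highest activation.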

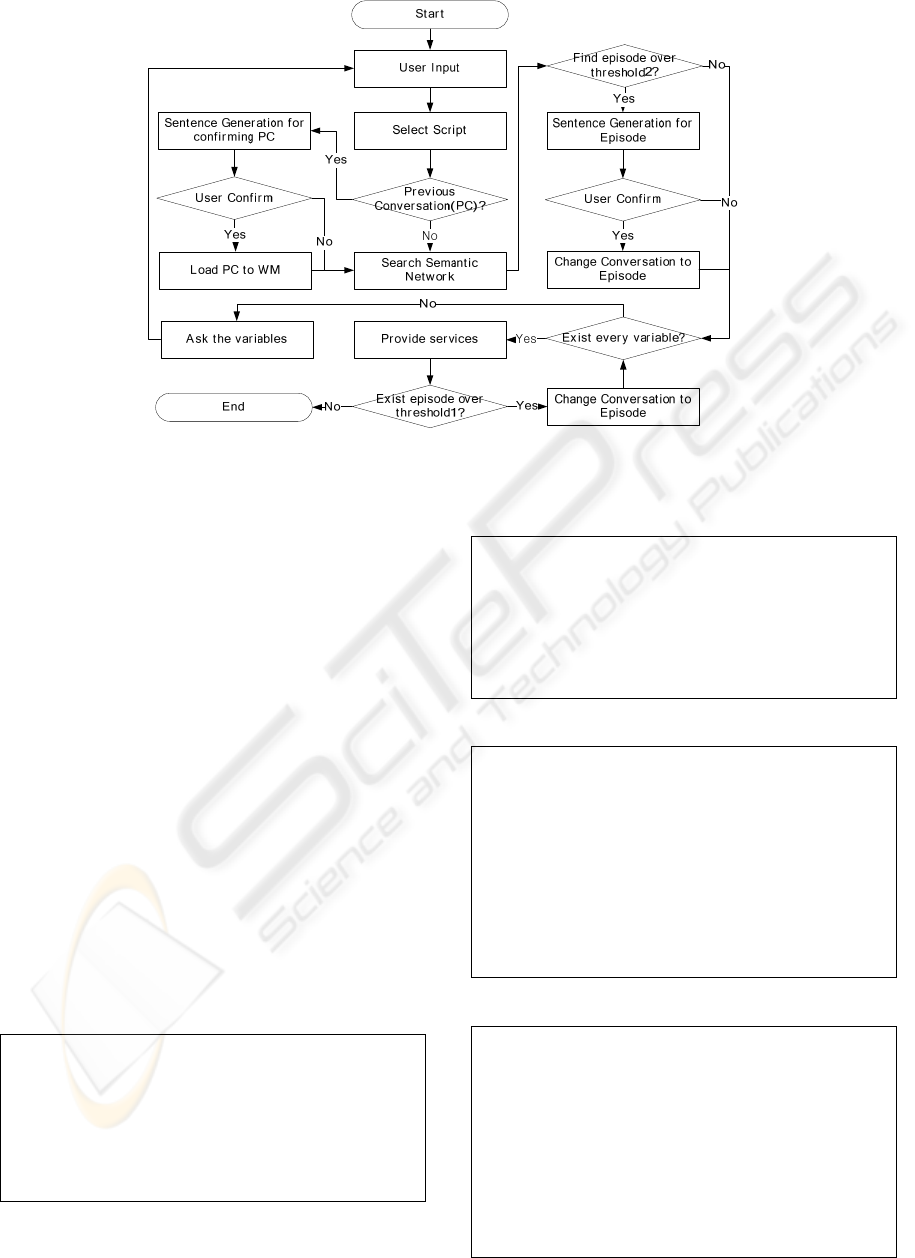

Figure 6 shows a flow chart of the conversation process in the conversational agent. In this paper, we use two threshold values. If the level of relationship is over threshold 1, the information has a significant link; if it is over threshold 2, it has both a significant link and urgency. If there is an episodic memory over threshold 2, the agent stops the current conversation to deal with the past conversation. If there is an episodic memory over threshold 1 only, the agent waits for the current conversation to finish and then proceeds with the past conversation.
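The two-threshold policy can be summarised as a small decision function. This is a sketch: the function name, the split into two ranked lists, and counting a value equal to a threshold as reaching it (consistent with the `< threshold1` test in Figure 5) are assumptions.

```python
from typing import Dict, List, Tuple


def plan_topic_change(activations: Dict[str, float],
                      threshold1: float,
                      threshold2: float) -> Tuple[List[str], List[str]]:
    """Split related episodes into (interrupt_now, after_current_dialogue).

    Episodes at or above threshold2 are significant and urgent: the current
    conversation is suspended to handle them. Episodes between threshold1 and
    threshold2 are significant only: they wait until the current dialogue ends.
    Each list is ordered from most to least activated.
    """
    if threshold1 > threshold2:
        raise ValueError("threshold1 must not exceed threshold2")
    ranked = sorted(activations.items(), key=lambda kv: kv[1], reverse=True)
    interrupt = [e for e, a in ranked if a >= threshold2]
    defer = [e for e, a in ranked if threshold1 <= a < threshold2]
    return interrupt, defer
```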

4 DIALOGUE EXAMPLES

We use a scheduling program domain to test the utility of the proposed method. The agent needs four factors: the type of schedule, the subject, the time, and the location. If some of these factors are unknown because of the user's situation, the agent leaves the conversation unhandled. Hence, the semantic network for this scheduling agent has four types of internal nodes: the type of schedule, subject, time, and location. The leaf nodes are episodes with these four values.
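A possible encoding of these episodes is sketched below: each unfinished schedule keeps the four factors as fields, with `None` for a value the user has not given yet, and every known value becomes a keyword node linked to the episode's leaf node. The class and helper names, and the use of raw values as keyword nodes, are assumptions for illustration.

```python
from dataclasses import dataclass, fields
from typing import List, Optional, Tuple


@dataclass
class Episode:
    """An unfinished scheduling conversation kept in episodic memory (sketch).

    The four fields are the four factors the scheduling agent needs;
    None marks a factor the user has not provided yet.
    """
    schedule_type: Optional[str] = None
    subject: Optional[str] = None
    time: Optional[str] = None
    location: Optional[str] = None

    def missing(self) -> List[str]:
        return [f.name for f in fields(self) if getattr(self, f.name) is None]

    def is_complete(self) -> bool:
        return not self.missing()


def keyword_links(episode_id: str, episode: Episode) -> List[Tuple[str, str]]:
    """Edges (keyword node, episode node) for every factor already known."""
    values = (getattr(episode, f.name) for f in fields(episode))
    return [(v, episode_id) for v in values if v is not None]
```

An episode whose `missing()` list is non-empty is exactly the kind of unhandled conversation the agent can return to later.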


Figure 6: Flow chart of the conversation process.

In this paper, we show how the scheduling program works in four situations once the episodic memory has been constructed.

The first scenario shows the general schedule registration process: the agent asks for the necessary information and registers the corresponding schedule when all of it has been obtained. The second scenario shows the reprocessing of a past schedule: whenever schedule information is gathered, the agent checks whether there is any matching episode and, if the same data exist, continues the past conversation, as shown in the scenario. In the third scenario, there is a related conversation whose activation value lies between threshold 1 and threshold 2, so the agent continues the related conversation after the present conversation has finished. In the fourth scenario, the activation value of the related conversation is larger than threshold 2, which means that the related conversation has high priority, so the agent stops the present conversation and processes the past conversation first.

1) Register the schedule

U: I got a dinner appointment.

A: Who will you meet?

U: I'm going to meet Gina.

A: What time will you meet her?

U: At 7 P.M.

A: Where will you meet her?

U: We decided to meet at Yeouido.

A: Okay. The new dinner schedule has been registered.

2) Register unprocessed schedule

U: I got a meeting appointment with Jaenam.

A: Is that the dinner appointment at Shinchon you told me about on June 13?

U: Yes, that's right.

A: Did you arrange the time to meet?

U: Yes. I decided to meet him at 6 P.M.

A: Okay. The dinner schedule has been registered.

3) Process the related conversation

U: Kangseok and I will go out for a drink.

A: What time will you meet him?

U: Tomorrow. At 8 P.M.

A: Where will you meet him?

U: At Apgujeong.

A: Okay. The new schedule has been registered. Did you arrange the place of tomorrow's dinner with Gina?

U: Not yet. I'll tell you when I decide.

A: Okay.

4) Process the related conversation 2

U: I will study with Gina this weekend.

A: Did you decide where you and Gina will have dinner tonight?

U: Oh! Let it be Shinchon.

A: Okay. The location has been registered. When will you study?

U: At 2 P.M.

A: Where will you study?

U: At Gongdeok.

A: Okay. The study schedule has been registered.


5 CONCLUSIONS

Previous conversational agents provide mixed-initiative conversation according to a predefined methodology and depend only on working memory, so they can process only static conversations. In this research, we have therefore studied how to give conversational agents the active ability to change the topic of dialogue. The proposed method models the declarative part of long-term memory with a semantic network and implements the broadcasting process of global workspace theory using spreading activation. By searching for episodic memories relevant to the current dialogue, the conversational agent can change the topic of dialogue naturally.

As shown in the dialogue examples, the proposed method works according to the relationship between the present conversation and long-term memory, so that various forms of mixed-initiative conversation can occur.

In future work, the semantic network should be formed automatically using the frequency of keywords that appear during the conversation and the coherence between keywords. A memory reduction function is also required to calculate the relationship smoothly.

ACKNOWLEDGEMENTS

This research was supported by the Converging Research Center Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education, Science and Technology (2009-0093676).

REFERENCES

Anderson, J. R., 1976. Language, memory and thought. Erlbaum, Hillsdale, NJ.

Anderson, J. R., 1983. A spreading activation theory of memory. Journal of Verbal Learning and Verbal Behavior, New York, Academic Press.

Atkinson, R. C. and Shiffrin, R. M., 1968. Human memory: A proposed system and its control processes. The Psychology of Learning and Motivation, Elsevier.

Bohus, D. and Rudnicky, A. I., 2009. The RavenClaw dialog management framework: Architecture and systems. Computer Speech & Language, Elsevier.

Hong, J.-H. et al., 2007. Mixed-initiative human-robot interaction using hierarchical Bayesian networks. IEEE Trans. Systems, Man and Cybernetics, IEEE.

Lim, S. and Cho, S.-B., 2007. CAML: Conversational agent markup language for application-independent dialogue system. 9th China-India-Japan-Korean Joint Workshop on Neurobiology and Neuroinformatics.

Macintosh, A. et al., 2005. Evaluation of a mixed-initiative dialogue multimodal interface. The 24th SGAI International Conf. on Innovative Techniques and Applications of Artificial Intelligence, Springer-Verlag.

Moura, I., 2006. A model of agent consciousness and its implementation. Neurocomputing, Elsevier.

Sowa, J. F., 1992. Semantic networks revised and extended for the second edition. Encyclopedia of Artificial Intelligence, John Wiley & Sons.

Squire, L. R. and Zola-Morgan, S., 1991. The medial temporal lobe memory system. Science, AAAS.

Tecuci, G. et al., 2007. Seven aspects of mixed-initiative reasoning: An introduction to this special issue on mixed-initiative assistants. AI Magazine, AAAI.
