FORMALIZING DIALECTICAL REASONING FOR COMPROMISE-BASED JUSTIFICATION

Hiroyuki Kido, Katsumi Nitta

Interdisciplinary Graduate School of Science and Engineering, Tokyo Institute of Technology, Tokyo, Japan

Masahito Kurihara

Graduate School of Information and Science, Hokkaido University, Sapporo, Japan

Daisuke Katagami

Faculty of Engineering, Tokyo Polytechnic University, Tokyo, Japan

Keywords: Argument-based reasoning, Compromise, Dialectical thought, Deliberation.

Abstract: Chinese traditional philosophy regards dialectics as a style of reasoning that focuses on contradictions and

how to resolve them, transcend them or find the truth in both. Compromise is considered to be one possible

way to resolve conflicts dialectically. In this paper, we formalize dialectical reasoning as a way for deriving

compromise. Both the definition of the notion of compromise and the algorithm for dialectical reasoning

are proposed on an abstract complete lattice. We prove that the dialectical reasoning is sound and complete

with respect to the compromise. We propose the concrete algorithm for dialectical reasoning characterized

by definite clausal language and generalized subsumption. The algorithm is proved to be sound with respect

to the compromise. Furthermore, we expand an argumentation system to handle compromise arguments, and

illustrate that an agent bringing up a compromise argument realizes a compromise based justification towards

argument-based deliberation.

1 INTRODUCTION

Argumentation in artificial intelligence, often called

computational dialectics, is rooted in Aristotle’s idea

of evaluating argumentation in a dialogue model

(Hamblin, 1970). In contrast, there exist various def-

initions of dialectics in history (Rescher, 2007) and

it leads to various interpretations in various areas to-

day. For instance, psychologist Nisbett interprets di-

alectics as a style of reasoning intended to find a

middle way (Nisbett, 2003), some logicians as for-

mal logic disrespecting the law of noncontradiction

(Carnielli et al., 2007), and some computer scientists

as the study of systems mediating discussions and ar-

guments between agents, artificial and human (Gor-

don, 1995). In particular, Nisbett pointed out that

there is a style of reasoning in Eastern thought, trace-

able to the ancient Chinese, which has been called

dialectical, meaning that it focuses on contradictions

and how to resolve them or transcend them or find the

truth in both. His experiments showed that, compared

with Westerners, Easterners have a greater preference

for compromise solutions and for holistic arguments,

and they are more willing to endorse both of two ap-

parently contradictory arguments. Moreover, he con-

trasted a logical approach and a dialectical approach

for conflicting propositions, and pointed out that the

former one would seem to require rejecting one of the

propositions in favor of the other in order to avoid

possible contradiction, and the latter one would favor

finding some truth in both, in a search for the Middle

Way. We think that the perspective opens up a new

horizon for argumentation in artificial intelligence, especially in argument-based deliberation and negotiation, because argumentation is a prominent way for

conflict detection, social decision making and con-

sensus building, and the latter two cannot be achieved

without such kinds of thought. However, there is lit-

tle work on computational argumentation directed to

dialectical conflict resolution we mentioned above.

In this paper, we formalize dialectical reasoning

as a way for deriving a compromise. This is based on

the knowledge that a compromise is a possible way

for realizing dialectical conflict resolution, and our


Kido H., Nitta K., Kurihara M. and Katagami D..

FORMALIZING DIALECTICAL REASONING FOR COMPROMISE-BASED JUSTIFICATION.

DOI: 10.5220/0003181903550363

In Proceedings of the 3rd International Conference on Agents and Artificial Intelligence (ICAART-2011), pages 355-363

ISBN: 978-989-8425-40-9

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

idea that compromise should be computed through a reasoning mechanism. We give a formal

definition of compromise and an algorithm for dialec-

tical reasoning both on an abstract complete lattice.

We show that the algorithm is sound and complete

with respect to the compromise. We propose a con-

crete and sound algorithm for dialectical reasoning

characterized by definite clausal language and gener-

alized subsumption on the assumption that arguments

are constructed from knowledge bases. We expand

the argumentation system (Prakken, 1997) to han-

dle compromise arguments and illustrate that compro-

mise arguments realize compromise-based justifica-

tion towards argumentation for deliberation.

This paper is organized as follows. Section 2

gives a motivational example of dialectical thought

and Section 3 shows preliminaries. Section 4 defines

a notion of compromise and Section 5 proposes both

abstract and concrete algorithms for dialectical rea-

soning. Section 6 expands an argumentation system

and illustrates the effectiveness of compromise arguments. Section 7 discusses related work, and Section 8 presents the conclusion and future work.

2 MOTIVATIONAL EXAMPLE

Let us consider the simple deliberative dialogue by

which agents A and B try to decide which camera to

buy. They are assumed to have their individual knowledge bases from which they make arguments. The worst outcome for them is assumed to be that they cannot buy any camera.

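A : I want to buy ‘a’ because it is a compact and light camera. ($A_1 = [\mathit{compact}(a), \mathit{light}(a), \mathit{camera}(a), \forall x.\,\mathit{compact}(x) \wedge \mathit{light}(x) \wedge \mathit{camera}(x) \rightarrow \mathit{buy}(x), \mathit{buy}(a)]$.)

B : We cannot buy ‘a’ because it is out of stock. ($B_1 = [\neg\mathit{inStock}(a), \forall x.\,\neg\mathit{inStock}(x) \rightarrow \neg\mathit{buy}(x), \neg\mathit{buy}(a)]$.)

B : I want to buy ‘b’ because it is a high-resolution camera with a long battery life. ($B_2 = [\mathit{resolution}(b,\mathit{high}), \mathit{battery}(b,\mathit{long}), \mathit{camera}(b), \forall x.\,\mathit{resolution}(x,\mathit{high}) \wedge \mathit{battery}(x,\mathit{long}) \wedge \mathit{camera}(x) \rightarrow \mathit{buy}(x), \mathit{buy}(b)]$.)

A : We cannot buy ‘b’ because it is beyond our budget. ($A_2 = [\mathit{overBudget}(b), \forall x.\,\mathit{overBudget}(x) \rightarrow \neg\mathit{buy}(x), \neg\mathit{buy}(b)]$.)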

Each of $A_1$, $A_2$, $B_1$, and $B_2$ is an argument that formally describes the informal statement preceding it. $B_1$ and $A_1$, and likewise $A_2$ and $B_2$, defeat each other due to their logical inconsistency. Further, $A_1$ and $B_2$ defeat each other due to the existence of alternatives. In this situation, neither $A_1$ nor $B_2$ is justified by argumentation semantics; in other words, neither $A_1$ nor $B_2$ is a nonmonotonic consequence. This

leads to the evaluation that both the options buy(a)

and buy(b) are unacceptable as a choice of A and B

and it is hard to prioritize these options within the

scope of logic. Of course, outside the scope of logic,

decision theory and game theory allow agents to pri-

oritize these options in different ways. However, it

is the case that they have to choose from the given

options under the situation that neither A nor B has

any other alternative options. On the other hand, in

real life, we often work out new options by giving up

some of our concerns and try to break such a stalemate

peacefully. Let us consider the following argument.

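A : How about ‘c’, because it is a user-friendly camera with a long battery life? ($A_3 = [\mathit{userFriendly}(c), \mathit{battery}(c,\mathit{long}), \mathit{camera}(c), \forall x.\,\mathit{userFriendly}(x) \wedge \mathit{battery}(x,\mathit{long}) \wedge \mathit{camera}(x) \rightarrow \mathit{buy}(x), \mathit{buy}(c)]$.)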

The option $\mathit{buy}(c)$ concluded by $A_3$ reflects each agent’s concerns partly, not completely, under the background knowledge $\mathit{compact}(x) \wedge \mathit{light}(x) \rightarrow \mathit{userFriendly}(x)$. For A, $A_3$ reflects the user-friendliness derived from this knowledge and the attributes of A’s initial option $\mathit{buy}(a)$. For B, $A_3$ reflects the long battery life of B’s initial option $\mathit{buy}(b)$. Arguments are constructed from knowledge bases using

various kinds of reasoning. From the viewpoint of

logic, the question we are interested in here is: What

type of reasoning is needed to make the argument

with the compromise? Obviously, induction and ab-

duction do not address the compromise because they

aim to make up for lacks of knowledge so as to ex-

plain all of given examples. In the above dialogue,

one possible inductive hypothesis is a general rule

∀x.camera(x) → buy(x) when, for example, buy(a)

and $\mathit{buy}(b)$ are assumed to be examples. The rule $r$: $\forall x.\,\mathit{userFriendly}(x) \wedge \mathit{battery}(x,\mathit{long}) \wedge \mathit{camera}(x) \rightarrow \mathit{buy}(x)$ that allows A to make $A_3$ is not a hypothesis of these kinds of reasoning, and therefore the derivation of the rule is outside their scope. Deduction does not address the compromise either, because it aims to derive all necessary conclusions of a given theory. In

the above dialogue, deduction only derives the cam-

eras satisfying each agent’s concerns completely, not

partly. Therefore, deriving buy(c) is outside the scope

of deductive reasoning. We think agents need another

type of reasoning that finds a middle ground among

agents’ concerns such as r. We think that the studies

of such reasoning open up a new horizon for argumen-

tation in artificial intelligence especially in argument-

based deliberation or negotiation.


3 PRELIMINARIES

A complete lattice is a 2-tuple of a set and a binary

relation of the set. Both of them are abstract in the

sense that the internal structures are unspecified.

Definition 1 (Complete Lattice). Let $\langle L, \succeq \rangle$ be a quasi-ordered set. If for every $S \subseteq L$, a least upper bound of $S$ and a greatest lower bound of $S$ exist, then $\langle L, \succeq \rangle$ is called a complete lattice.

The abstract argumentation framework (Dung,

1995), denoted by AF, gives a general framework for

nonmonotonic logics. The framework allows us to define various semantical notions of argumentation extensions that are intended to capture various types of nonmonotonic consequences. The basic formal notions,

with some terminological changes, are as follows.

Definition 2. (Dung, 1995) The abstract argumentation framework is defined as a pair $AF = \langle AR, \mathit{defeat} \rangle$ where $AR$ is a set of arguments, and $\mathit{defeat}$ is a binary relation on $AR$, i.e. $\mathit{defeat} \subseteq AR \times AR$.

• A set S of arguments is said to be conflict-free if

there are no arguments A,B in S such that A de-

feats B.

• An argument A ∈ AR is acceptable with respect to

a set S of arguments iff for each argument B ∈ AR:

if B defeats A then B is defeated by an argument

in S.

• A conflict-free set of arguments S is admissible iff

each argument in S is acceptable with respect to

S.

• A preferred extension of an argumentation frame-

work AF is a maximal (with respect to set inclu-

sion) admissible set of AF.

An argument is justified with respect to AF if it is

in every preferred extension of AF, and is defensible

with respect to AF if it is in some but not all preferred

extensions of AF (Prakken and Sartor, 1997).
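The notions of Definition 2 can be checked by brute force on small frameworks. The following is a minimal sketch, not the authors' implementation: the function names and the string encoding of the Section 2 arguments (`ARGS`, `DEFEAT`) are assumptions made for illustration, and the enumeration of all subsets is only practical for a handful of arguments.

```python
from itertools import combinations

def conflict_free(s, defeat):
    """No member of s defeats another member of s."""
    return not any((a, b) in defeat for a in s for b in s)

def acceptable(a, s, args, defeat):
    """Every defeater of a is itself defeated by some member of s."""
    return all(
        any((c, b) in defeat for c in s)
        for b in args if (b, a) in defeat
    )

def admissible(s, args, defeat):
    return conflict_free(s, defeat) and all(
        acceptable(a, s, args, defeat) for a in s
    )

def preferred_extensions(args, defeat):
    """Maximal (w.r.t. set inclusion) admissible sets, by enumeration."""
    ordered = sorted(args)
    adm = [
        frozenset(c)
        for r in range(len(ordered) + 1)
        for c in combinations(ordered, r)
        if admissible(frozenset(c), args, defeat)
    ]
    return [s for s in adm if not any(s < t for t in adm)]

def justified(args, defeat):
    """Arguments contained in every preferred extension."""
    exts = preferred_extensions(args, defeat)
    return {a for a in args if all(a in e for e in exts)}

# Hypothetical encoding of the camera dialogue of Section 2:
# each listed pair defeats each other.
ARGS = {"A1", "A2", "B1", "B2"}
MUTUAL = [("A1", "B1"), ("A2", "B2"), ("A1", "B2")]
DEFEAT = {(a, b) for a, b in MUTUAL} | {(b, a) for a, b in MUTUAL}

print(preferred_extensions(ARGS, DEFEAT))
print(justified(ARGS, DEFEAT))
```

Under this encoding no argument belongs to every preferred extension, which matches the observation in Section 2 that neither $A_1$ nor $B_2$ is justified.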

The argumentation system (Prakken, 1997) uses Reiter’s default logic (Reiter, 1980) for defining internal structures of arguments and defeat relations in AF. The language consists of a first-order language $L_0$ and a set of defeasible rules $\Delta$ defined below. Informally, $L_0$ is assumed to be divided into two subsets: one is the set $F_c$ of contingent facts and the other is the set $F_n$ of necessary facts. A default theory is a set $F_c \cup F_n \cup \Delta$ where $F_n \cup F_c$ is consistent. We extract some necessary definitions of the argumentation system (Prakken, 1997).

Definition 3. (Prakken, 1997)

• Let $\varphi_1,\ldots,\varphi_n,\psi \in L_0$. A defeasible rule is an expression of the form

$$\varphi_1 \wedge \cdots \wedge \varphi_j \wedge {\sim}\varphi_k \wedge \cdots \wedge {\sim}\varphi_n \Rightarrow \psi$$

$\varphi_1 \wedge \cdots \wedge \varphi_j$ is called the antecedent, ${\sim}\varphi_k \wedge \cdots \wedge {\sim}\varphi_n$ is called the justification and $\psi$ is called the consequent of the rule. For any expression ${\sim}\varphi_i$ in the justification of a defeasible rule, $\neg\varphi_i$, the classical negation of $\varphi_i$, is an assumption of the rule. And an assumption of an argument is an assumption of any rule in the argument.

• Let $\varphi_1,\ldots,\varphi_n,\psi \in L_0$. Defeasible modus ponens, denoted by DMP, is an inference rule of the form

$$\frac{\varphi_1 \wedge \cdots \wedge \varphi_j \wedge {\sim}\varphi_k \wedge \cdots \wedge {\sim}\varphi_n \Rightarrow \psi \qquad \varphi_1 \wedge \cdots \wedge \varphi_j}{\psi}$$

• Let $\Gamma$ be a default theory. An argument based on $\Gamma$ is a sequence of distinct first-order formulae and/or ground instances of defaults $[\varphi_1,\ldots,\varphi_n]$ such that for all $\varphi_i$:

– $\varphi_i \in \Gamma$; or

– There exists an inference rule $\psi_1,\ldots,\psi_m / \varphi_i \in R$ such that $\psi_1,\ldots,\psi_m \in \{\varphi_1,\ldots,\varphi_{i-1}\}$.

For argument $A$, $\varphi$ is a conclusion of $A$, denoted by $\varphi \in \mathrm{CONC}(A)$, if $\varphi$ is a first-order formula in $A$. $\varphi$ is an assumption of $A$, denoted by $\varphi \in \mathrm{ASS}(A)$, if $\varphi$ is an assumption of a default in $A$.

• Let $A_1$ and $A_2$ be two arguments.

– $A_1$ rebuts $A_2$ iff $\mathrm{CONC}(A_1) \cup \mathrm{CONC}(A_2) \cup F_n \vdash \bot$ and $A_2$ is defeasible and $A_1$ is strict.

– $A_1$ undercuts $A_2$ iff $\mathrm{CONC}(A_1) \cup F_n \vdash \neg\phi$ and $\phi \in \mathrm{ASS}(A_2)$.

In the definition of a defeasible rule, the special symbol $\sim$, called weak negation, is introduced in order to represent unprovable propositions. It gives the language of the system the full expressiveness of default logic. In DMP, assumptions in defeasible rules are ignored, and ignoring them can be invalidated by undercutting. An argument is a deduction incorporating default reasoning using defeasible rules and DMP $\in R$. $R$ is assumed to consist of all valid first-order inference rules plus DMP. We say that an argument is strict if there exist only valid first-order inference rules in the argument. Otherwise, the argument is defeasible. Symbols $\bot$ and $\vdash$ represent a logical contradiction and a logical consequence relation, respectively. Rebutting caused by priorities of defeasible rules is excluded from the original definition.

Definition 2 takes no account of the aspect of

proof theory that gives a way to determine whether

an individual argument is justified or defensible. We

extract some necessary definitions of the proof theory

for argumentation system, called the dialectical proof

theory (Prakken, 1999).

Definition 4. (Prakken, 1999)

• A dialogue is a finite nonempty sequence of moves $\mathit{move}_i = (\mathit{Player}_i, \mathit{Arg}_i)$ $(i > 0)$, such that

1. $\mathit{Player}_i = P$ iff $i$ is odd; and $\mathit{Player}_i = O$ iff $i$ is even;

2. If $\mathit{Player}_i = \mathit{Player}_j = P$ and $i \neq j$, then $\mathit{Arg}_i \neq \mathit{Arg}_j$;

3. If $\mathit{Player}_i = P$ $(i > 1)$, then $\mathit{Arg}_i$ strictly defeats $\mathit{Arg}_{i-1}$;

4. If $\mathit{Player}_i = O$, then $\mathit{Arg}_i$ defeats $\mathit{Arg}_{i-1}$.

• A dialogue tree is a tree of dialogues such that if $\mathit{Player}_i = P$ then the children of $\mathit{move}_i$ are all defeaters of $\mathit{Arg}_i$.

• A player wins a dialogue if the other player cannot move. And a player wins a dialogue tree if it wins all branches of the tree.

• An argument $A$ is a provably justified argument if there is a dialogue tree with $A$ as its root, and won by the proponent.

Condition 1 in the definition of a dialogue requires that the proponent begins and then the players take turns. Condition 2 prevents the proponent from repeating moves, and conditions 3 and 4 are burdens of proof for P and O. In the definition of a dialogue tree, all defeaters for every argument of P are considered. The idea of the definition of win is that if P’s last argument is undefeated, it reinstates all previous arguments of P that occur in the same branch of a tree, in particular the root of the tree. It is proved that arguments are justified iff the arguments are provably justified (Prakken, 1999).
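The dialogue game of Definition 4 can be read as a recursive search: the proponent wins from an argument if every defeater the opponent may play can be answered by a strict defeater that the proponent has not yet used in the same branch, and the proponent wins from that reply in turn. The sketch below illustrates this reading under the assumption that the defeat relation is given as a finite set of pairs; the names `proponent_wins` and `strictly_defeats` are hypothetical and do not come from (Prakken, 1999).

```python
def strictly_defeats(a, b, defeat):
    """a defeats b and b does not defeat a."""
    return (a, b) in defeat and (b, a) not in defeat

def proponent_wins(arg, args, defeat, used=frozenset()):
    """True if P can win every branch of a dialogue tree rooted at arg.

    `used` holds the arguments P has already moved in the current branch,
    so condition 2 (no repetition by P) is respected.
    """
    used = used | {arg}
    for o in args:
        if (o, arg) not in defeat:
            continue  # o is not a legal reply by O
        # P must answer o with an unused strict defeater and win from there.
        replies = (
            p for p in args
            if p not in used and strictly_defeats(p, o, defeat)
        )
        if not any(proponent_wins(p, args, defeat, used) for p in replies):
            return False
    return True

# Hypothetical chain: c strictly defeats b, and b strictly defeats a.
CHAIN_ARGS = {"a", "b", "c"}
CHAIN_DEFEAT = {("b", "a"), ("c", "b")}
print(proponent_wins("a", CHAIN_ARGS, CHAIN_DEFEAT))  # a is reinstated by c
print(proponent_wins("b", CHAIN_ARGS, CHAIN_DEFEAT))  # b cannot answer c
```

The search terminates on finite inputs because the proponent never repeats an argument within a branch.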

4 SEMANTICS FOR REASONING

In this section, we give a declarative definition of rea-

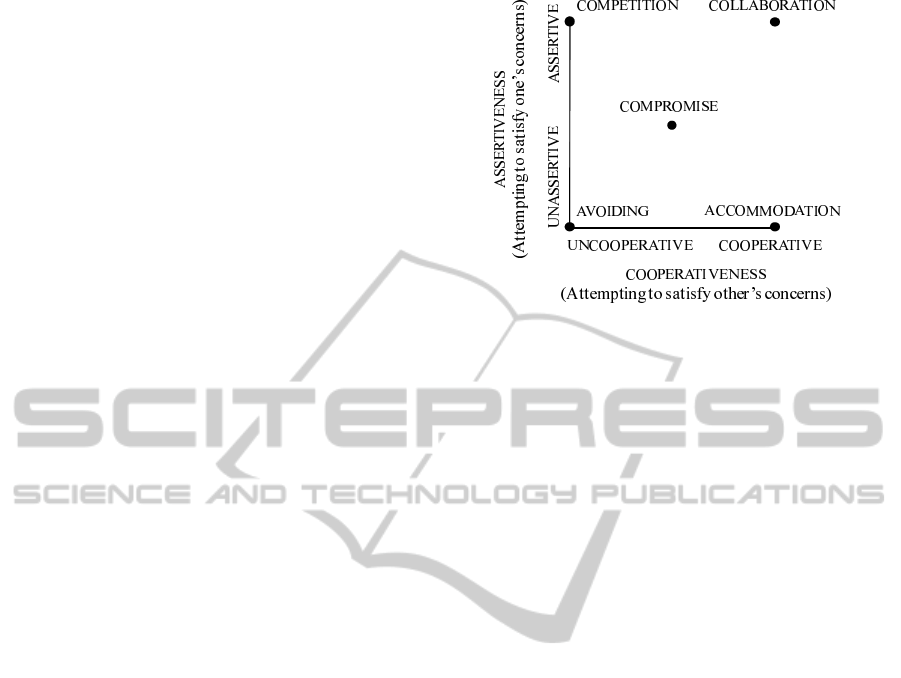

soning. Figure 1 shows the classification of our con-

flict handling modes into 5 groups (Thomas, 1992).

The vertical and the horizontal axes represent the

strength of assertiveness and that of cooperativeness,

respectively. Competition, collaboration, avoiding,

and accommodation are explained as assertive and

uncooperative mode, assertive and cooperative mode,

unassertive and uncooperative mode, and unassertive

and cooperative mode, respectively. Compromise is a

mode taking the middle attitude among the four. In

terms of importance in computational argumentation,

we focus on the notion of compromise and give more

concrete interpretation that compromise is a statement

satisfying each agent’s statement at least partly, not

completely. Our idea here is that we formally define

the notion of compromise using a complete lattice.

We impose two conditions, incompleteness and rel-

evance, as fundamental requirements to be compro-

Figure 1: Two-dimensional taxonomy of conflict handling

modes (Thomas, 1992).

mise. The incompleteness requires that compromise

must not satisfy each agent’s statement completely

and the relevance requires that compromise must sat-

isfy each agent’s statement at least partly. Further,

we impose additional two conditions, collaborative-

ness and simplicity, in order to capture our intuitions

about compromise. The collaborativeness requires

that compromise must retain a common ground that

all agents commonly have in advance. The simplicity

requires that compromise does not include any redun-

dant statements.

Definition 5 (Compromise). Let $\langle L, \succeq \rangle$ be a complete lattice and $X_1,\ldots,X_n,Y$ be elements of $L$ satisfying $\inf\{X_i \mid 1 \le i \le n\} \nsim \bot$. $Y$ is a compromise among $X_1,\ldots,X_{n-1}$, and $X_n$ iff

1. incompleteness: $\forall X_i\,(Y \not\succeq X_i)$; and

2. relevance: $\forall X_i\,(\inf\{X_i,Y\} \nsim \bot)$; and

3. collaborativeness: $Y \succeq \inf\{X_j \mid 1 \le j \le n\}$; and

4. simplicity: $Y \sim \sup\{\inf\{X_i,Y\} \mid 1 \le i \le n\}$.

Definition 5 says that there is no compromise among $X_i$ $(1 \le i \le n)$ if their only common lower element is bottom. Intuitively, the condition states that there is no common ground among $X_i$ $(1 \le i \le n)$. The incompleteness states that $Y$ is not above $X_i$, for all $X_i$. The relevance states that there exists a common nonbottom greatest lower bound of $\{X_i,Y\}$, for all $X_i$. The collaborativeness states that the common lower element of all $X_i$ is also lower than $Y$, and the simplicity states that any lower element of $Y$ is a common lower element of $Y$ and $X_i$.

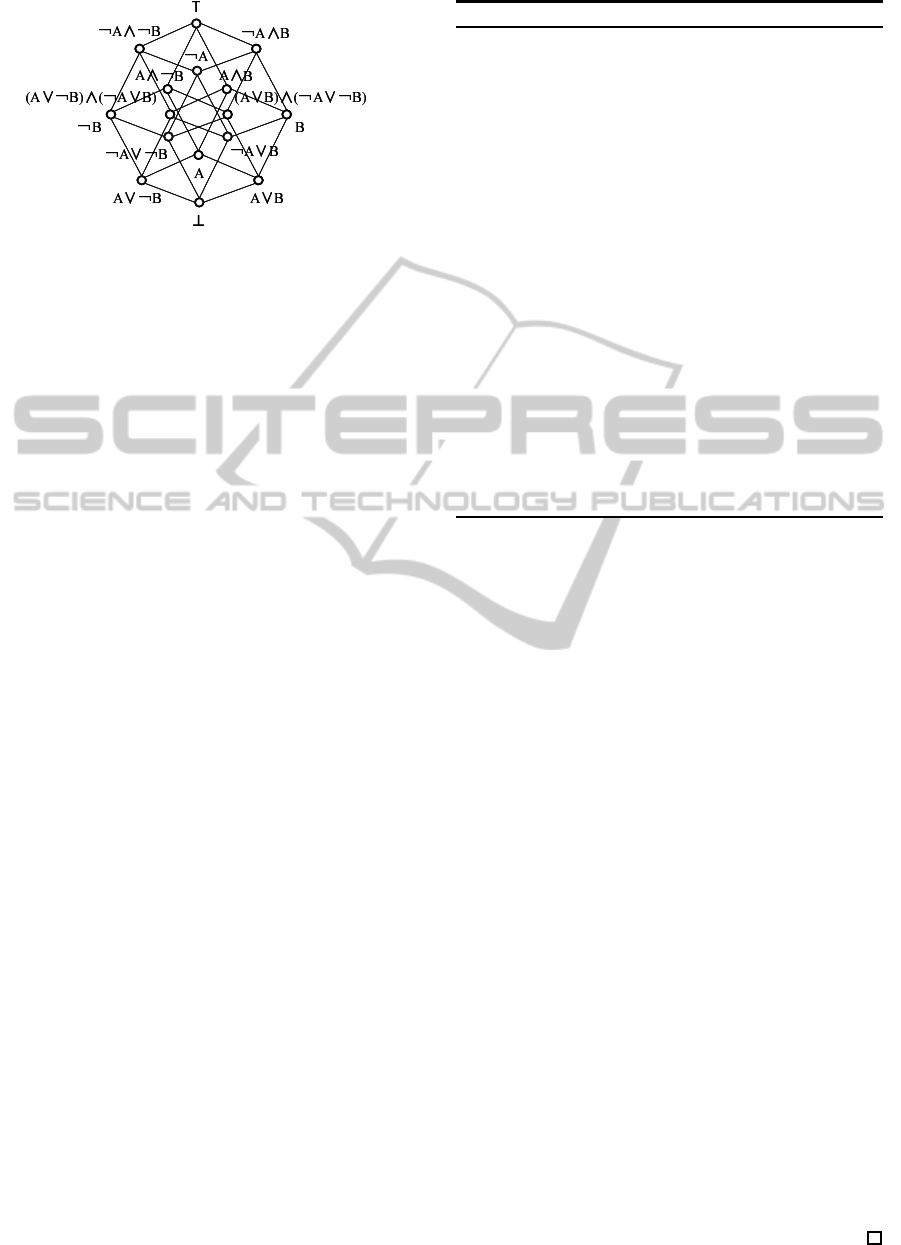

Example 1 (compromise based on entailment). Let

L be propositional language that has all well-formed

formulae composed of alphabets A and B, and be

a satisfiability relation on L. < L,> is a com-

plete lattice shown in Figure 2. ⊤ and ⊥ denote

ICAART 2011 - 3rd International Conference on Agents and Artificial Intelligence

358

Figure 2: Complete lattice < L,>.

false and true, respectively, in accordance with com-

mon usage in inductive logic programming. Defini-

tion 5 is characterized as follows: 1. ∀X

i

(Y 2 X

i

),

2. ∀X

i

(2 X

i

∨ Y), 3. Y X

1

∨ · ·· ∨ X

n

, and 4. Y ≡

(X

1

∨Y) ∧ ·· · ∧ (X

n

∨Y). X ≡ Y denotes X Y and

Y X. Each of A∨ B,(A∨B) ∧(¬A∨ ¬B), and B is a

compromise between ¬A∧ B and A.
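The four conditions of Definition 5, as instantiated in Example 1, can be checked mechanically with truth tables. The sketch below is an illustration under stated assumptions: each formula over $A$ and $B$ is identified with its set of satisfying assignments, so that entailment is set inclusion, the greatest lower bound is disjunction (union of models), the least upper bound is conjunction (intersection of models), and the bottom element "true" has every assignment as a model. The names `models`, `entails`, and `is_compromise` are hypothetical.

```python
from itertools import combinations, product

# All truth assignments (A, B); a formula is identified with its model set.
ASSIGNMENTS = list(product([False, True], repeat=2))
TRUE = frozenset(ASSIGNMENTS)  # bottom element: "true" has every model

def models(formula):
    """Set of assignments (a, b) that satisfy the given Boolean function."""
    return frozenset(v for v in ASSIGNMENTS if formula(*v))

def entails(x, y):
    """x |= y iff every model of x is a model of y."""
    return x <= y

def is_compromise(y, xs):
    """Check the four conditions of Definition 5 in the entailment lattice."""
    common = frozenset().union(*xs)          # inf = disjunction of all X_i
    if common == TRUE:
        raise ValueError("precondition violated: inf of the X_i is bottom")
    incompleteness = all(not entails(y, x) for x in xs)
    relevance = all((x | y) != TRUE for x in xs)
    collaborativeness = entails(y, common)
    sup_of_infs = TRUE
    for x in xs:
        sup_of_infs &= (x | y)               # sup = conjunction
    simplicity = (y == sup_of_infs)
    return incompleteness and relevance and collaborativeness and simplicity

X1 = models(lambda a, b: (not a) and b)      # not A and B
X2 = models(lambda a, b: a)                  # A

# The three compromises named in Example 1.
A_OR_B = models(lambda a, b: a or b)
A_XOR_B = models(lambda a, b: (a or b) and (not a or not b))
JUST_B = models(lambda a, b: b)

# Enumerate all 16 formulae (up to equivalence) and keep the compromises.
ALL_CLASSES = [
    frozenset(c)
    for r in range(len(ASSIGNMENTS) + 1)
    for c in combinations(ASSIGNMENTS, r)
]
COMPROMISES = {y for y in ALL_CLASSES if is_compromise(y, [X1, X2])}
print(COMPROMISES == {A_OR_B, A_XOR_B, JUST_B})
```

In this two-letter language the enumeration finds the three formulae listed in Example 1 and no others, up to logical equivalence.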

5 DIALECTICAL REASONING

5.1 Abstract Dialectical Reasoning

In this section, we give a procedural definition of dialectical reasoning that derives compromise. As with the definition of compromise, the algorithm is formalized on an abstract complete lattice $\langle L, \succeq \rangle$. The algorithm describes common procedures with which each concrete dialectical reasoning complies.

The inputs of Algorithm 1 are $X_1,\ldots,X_n \in L$. Algorithm 1 calculates a set $\mathcal{Y}_i$ of lower elements of each $X_i$ $(1 \le i \le n)$ at line 4. For every $Y \in \mathcal{Y}_i$, if $Y$ satisfies the conditions given at line 6 then $Y$ is collected in $\mathcal{W}_i$ at line 7. At line 12, the least upper bound of $\{W_i \mid 1 \le i \le n\}$ is calculated and collected in $\mathcal{Z}$. If the least upper bound satisfies the condition at line 16 then it is eliminated from $\mathcal{Z}$. In summary, the overall reasoning process has two primal phases: deriving lower elements $Y_i$ of given $X_i$ $(1 \le i \le n)$ and the common upper element $Z$ of the lower elements $Y_i$ $(1 \le i \le n)$. Note that we cannot detail the procedures at lines 4, 6, 12 and 16 in Algorithm 1 any further because the complete lattice $\langle L, \succeq \rangle$ in Algorithm 1 is abstract. One of the algorithms with the concrete procedures for dialectical reasoning will be given in Algorithm 2. In Algorithm 1, we assume that these derivations and the comparison are computable, i.e., there exist algorithms that can return the right answers to the procedures at lines 4, 6, 12 and 16.

Algorithm 1: Dialectical Reasoning on $\langle L, \succeq \rangle$.

Require: $\inf\{X_i \mid 1 \le i \le n\} \nsim \bot$

1: $\mathcal{Z} := \emptyset$

2: for $i := 1$ to $n$ do

3: $\mathcal{W}_i := \emptyset$

4: compute $\mathcal{Y}_i = \{Y \mid Y \preceq X_i\}$

5: for all $Y \in \mathcal{Y}_i$ do

6: if $Y \nsim \bot$ and $Y \succeq \inf\{X_j \mid 1 \le j \le n\}$ then

7: $\mathcal{W}_i := \mathcal{W}_i \cup \{Y\}$

8: end if

9: end for

10: end for

11: for all $(W_1,\ldots,W_n) \in \mathcal{W}_1 \times \cdots \times \mathcal{W}_n$ do

12: compute $Z \sim \sup\{W_i \mid 1 \le i \le n\}$

13: $\mathcal{Z} := \mathcal{Z} \cup \{Z\}$

14: end for

15: for $i := 1$ to $n$ do

16: if $Z \succeq X_i$ then

17: $\mathcal{Z} := \mathcal{Z} \setminus \{Z\}$

18: end if

19: end for

20: return $\mathcal{Z}$

Theorem 1 (Soundness and Completeness). Let $\langle L, \succeq \rangle$ be a complete lattice and $X_1,\ldots,X_n,Z \in L$ that satisfy $\inf\{X_i \mid 1 \le i \le n\} \nsim \bot$. $Z$ is a compromise among $X_1,\ldots,X_{n-1}$, and $X_n$ iff $Z$ is an element of the output of Algorithm 1 where the inputs are $X_1,\ldots,X_{n-1}$, and $X_n$.

Proof. (Soundness) If $Z$ is an output of Algorithm 1, $Z$ obviously satisfies the condition of incompleteness due to the condition at line 16 in Algorithm 1. Further, if $Z$ is an output of Algorithm 1, there exists at least one $Y_i \preceq X_i$ for all $X_i$ such that $Y_i \nsim \bot$, $Y_i \succeq \inf\{X_j \mid 1 \le j \le n\}$, and $Z \sim \sup\{Y_i \mid 1 \le i \le n\}$. Now we let $W_i \sim Y_i$. Since $Z \sim \sup\{W_i \mid 1 \le i \le n\}$ and $X_i \succeq W_i$, $\inf\{X_i,Z\} \succeq W_i$. Since $\inf\{X_i,Z\} \succeq W_i$ and $W_i \nsim \bot$, $\inf\{X_i,Z\} \nsim \bot$ for all $X_i$. Therefore, relevance holds. Since $\inf\{X_i,Z\} \succeq W_i$ and $W_i \succeq \inf\{X_j \mid 1 \le j \le n\}$, $\inf\{X_i,Z\} \succeq \inf\{X_j \mid 1 \le j \le n\}$ for all $X_i$. Therefore, collaborativeness holds. Since $Z \succeq \inf\{X_i,Z\}$ for all $X_i$, $Z \succeq \sup\{\inf\{X_i,Z\} \mid 1 \le i \le n\}$. On the other hand, since $Z \sim \sup\{W_i \mid 1 \le i \le n\}$ and $\inf\{X_i,Z\} \succeq W_i$ for all $X_i$, $Z \preceq \sup\{\inf\{X_i,Z\} \mid 1 \le i \le n\}$. Hence, $Z \sim \sup\{\inf\{X_i,Z\} \mid 1 \le i \le n\}$, and therefore, simplicity holds. (Completeness) It is sufficient to show that, for all compromises $Z$ among $X_1,\ldots,X_{n-1}$, and $X_n$, (1) $Z \not\succeq X_i$ for all $X_i$, and (2) there exists at least one $Y_i \preceq X_i$ for all $X_i$ such that $Y_i \nsim \bot$, $Y_i \succeq \inf\{X_j \mid 1 \le j \le n\}$, and $Z \sim \sup\{Y_i \mid 1 \le i \le n\}$. The condition of incompleteness directly satisfies condition (1). Now we let $Y_i \sim \inf\{X_i,Z\}$. Then, $Y_i \nsim \bot$, $Y_i \succeq \inf\{X_j \mid 1 \le j \le n\}$, $X_i \succeq Y_i$, and $Z \sim \sup\{Y_i \mid 1 \le i \le n\}$ are satisfied, and therefore, condition (2) is satisfied.
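The steps of Algorithm 1 can be traced on the small entailment lattice of Example 1. The following sketch is only an illustration under assumptions: formulae over $A$ and $B$ are represented by their sets of models, so "x is above y" means that x entails y, `inf` is union, `sup` is intersection, and bottom is the full set of assignments. The elimination at lines 15 to 19 is read here as applying to every collected candidate. All names are hypothetical.

```python
from itertools import combinations, product

ASSIGNMENTS = list(product([False, True], repeat=2))   # values of (A, B)
BOTTOM = frozenset(ASSIGNMENTS)                        # "true": every model
LATTICE = [
    frozenset(c)
    for r in range(len(ASSIGNMENTS) + 1)
    for c in combinations(ASSIGNMENTS, r)
]

def geq(x, y):
    """x is above y in the lattice, i.e. x entails y."""
    return x <= y

def inf(elems):
    """Greatest lower bound: disjunction, i.e. union of model sets."""
    return frozenset().union(*elems)

def sup(elems):
    """Least upper bound: conjunction, i.e. intersection of model sets."""
    return BOTTOM.intersection(*elems)

def dialectical_reasoning(xs):
    common = inf(xs)
    if common == BOTTOM:
        raise ValueError("precondition violated: inf of the inputs is bottom")
    ws = []
    for x in xs:                                          # lines 2-10
        lower = [y for y in LATTICE if geq(x, y)]         # line 4
        ws.append([y for y in lower
                   if y != BOTTOM and geq(y, common)])    # line 6
    zs = {sup(choice) for choice in product(*ws)}         # lines 11-14
    # lines 15-19: drop every candidate that is above some input
    return {z for z in zs if not any(geq(z, x) for x in xs)}

X1 = frozenset({(False, True)})                 # not A and B
X2 = frozenset({(True, False), (True, True)})   # A
RESULT = dialectical_reasoning([X1, X2])
print(len(RESULT))
```

On these inputs the procedure returns the model sets of $A \vee B$, $(A \vee B) \wedge (\neg A \vee \neg B)$, and $B$, which agrees with Example 1 and with the statement of Theorem 1.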


5.2 Concrete Dialectical Reasoning

We give a concrete and sound algorithm for dialectical reasoning characterized by definite clausal language and generalized subsumption. Generalized subsumption is a quasi-order on definite clauses with background knowledge expressed in a definite program, i.e., a finite set of definite clauses. It is an approximation of relative entailment, i.e., logical entailment with background knowledge, and it reduces to ordinary subsumption when the background knowledge is empty (Nienhuys-Cheng and de Wolf, 1997). The readers are referred to (Buntine, 1988) for the definition. Let $L_1$ be a definite clausal language such that $L_1$ has finitely many constants, finitely many predicate symbols and no function symbols, $D \subseteq L_1$ be a set of definite clauses that have the same literal in their head, and $\geq_B$ be generalized subsumption with respect to $B$ where $B \subseteq L_1$ is a definite program. Algorithm 2 is the algorithm for dialectical reasoning on complete lattice $\langle D, \geq_B \rangle$.

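Algorithm 2: Dialectical Reasoning on $\langle D, \geq_B \rangle$.

Require: $B \cup \{X_i\}$ are satisfiable for all $X_i$ and $\inf\{X_i \mid 1 \le i \le n\} \nsim \bot$.

1: $\mathcal{Z} := \emptyset$

2: for $i := 1$ to $n$ do

3: $\mathcal{W}_i := \emptyset$

4: compute $\mathcal{Y}_i \subseteq \{Y \mid Y$ is a tautology, or there exists an SLD-derivation of $Y'$ with $X_i$ as a top clause and members of $B$ as input clauses and $Y \in \rho_L^m(Y')\}$

5: for all $Y \in \mathcal{Y}_i$ do

6: if $Y$ is a definite clause, $Y^+\alpha \notin L$ and $Y \sqsupseteq \{(X_1 \vee \cdots \vee X_n)^+\theta\} \cup \overline{M}$ then

7: $\mathcal{W}_i := \mathcal{W}_i \cup \{Y\}$

8: end if

9: end for

10: end for

11: for all $(W_1,\ldots,W_n) \in \mathcal{W}_1 \times \cdots \times \mathcal{W}_n$ do

12: compute LGS $Z$ of $\{\{W_i^+\sigma_i\} \cup \overline{N_i} \mid 1 \le i \le n\}$

13: $\mathcal{Z} := \mathcal{Z} \cup \{Z\}$

14: end for

15: for $i := 1$ to $n$ do

16: if $Z \sqsupseteq \{X_i^+\phi_i\} \cup \overline{O_i}$ then

17: $\mathcal{Z} := \mathcal{Z} \setminus \{Z\}$

18: end if

19: end for

20: return $\mathcal{Z}$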

The inputs of Algorithm 2 are $B$, $D$, $X_1,\ldots,X_n \in D$, and iteration number $m$ for refinement operator $\rho_L$ for subsumption. $\rho_L$ denotes a downward refinement operator for $\langle \mathcal{C}, \sqsupseteq \rangle$ where $\mathcal{C}$ is a set of clauses. The readers are referred to (Nienhuys-Cheng and de Wolf, 1997) for the definition of $\rho_L$. In summary, $\rho_L$ is a function whose input is a clause and output is a set of clauses which the input clause subsumes. The derivation is achieved by substituting functions, constants or variables, or adding new literals for the input clause. $\rho_L^m$ in Algorithm 2 means that $\rho_L$ is iterated $m$ times where an element of the output of $\rho_L^i$ is returned to the input of $\rho_L^{i+1}$. Clause $X$ subsumes clause $Y$, denoted by $X \sqsupseteq Y$ in Algorithm 2, if there exists a substitution $\theta$ such that $X\theta \subseteq Y$. For definite clause $X$, $X^+$ denotes a head of $X$ and $X^-$ denotes a set of the literals in the body of $X$. $\overline{S}$ denotes a set of negations of formulae in set $S$. $\alpha$, $\theta$, $\sigma_i$, and $\phi_i$ denote Skolem substitution for $Y$ with respect to $B$, Skolem substitution for $X_1 \vee \cdots \vee X_n$ with respect to $B \cup \{Y\}$, Skolem substitution for $W_i$ with respect to $B \cup \{W_j \mid 1 \le j \le n\}$, and Skolem substitution for $X_i$ with respect to $B \cup \{X_j \mid 1 \le j \le n\}$, respectively. $L$, $M$, $N_i$, and $O_i$ are the least Herbrand models of $B \cup Y^-\alpha$, $B \cup (X_1 \vee \cdots \vee X_n)^-\theta$, $B \cup W_i^-\sigma_i$, and $B \cup X_i^-\phi_i$, respectively. For simplicity, we do not explicitly describe the procedures for calculating the Skolem substitutions and the least Herbrand models. Under the restriction we impose on $L_1$, there exists a finite least Herbrand model on $L_1$ and it is computable by the fixpoint operator $T_P$ (Emden and Kowalski, 1976). We assume the results of the operator.
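For a function-free definite program over finitely many constants, the least Herbrand model can be obtained by applying the immediate consequence operator repeatedly until nothing new is derived. The sketch below shows this naive iteration under assumptions made for illustration: atoms are pairs of a predicate name and a tuple of terms, terms beginning with `?` are variables, and the names `least_herbrand_model`, `B_RULES`, and `Y1_BODY_AT_A` are hypothetical. The sample data are the background clause and the skolemized body atoms used in the camera example of this paper.

```python
from itertools import product

def is_var(term):
    """Terms starting with '?' are variables (a convention of this sketch)."""
    return term.startswith("?")

def substitute(atom, theta):
    pred, args = atom
    return (pred, tuple(theta.get(t, t) for t in args))

def least_herbrand_model(facts, rules, constants):
    """Iterate the immediate consequence operator to its least fixpoint.

    facts: set of ground atoms; rules: list of (head, body) pairs where
    body is a list of atoms; constants: finite set of constant symbols.
    """
    model = set(facts)
    while True:
        derived = set()
        for head, body in rules:
            variables = sorted(
                {t for _, args in [head, *body] for t in args if is_var(t)}
            )
            # Try every grounding of the rule over the finite constant set.
            for values in product(sorted(constants), repeat=len(variables)):
                theta = dict(zip(variables, values))
                if all(substitute(b, theta) in model for b in body):
                    derived.add(substitute(head, theta))
        if derived <= model:
            return model
        model |= derived

# Background knowledge: compact(x) and light(x) imply userFriendly(x).
B_RULES = [
    (("userFriendly", ("?x",)), [("compact", ("?x",)), ("light", ("?x",))]),
]
# Body atoms of a clause after substituting the constant a for x.
Y1_BODY_AT_A = {
    ("battery", ("a", "long")),
    ("compact", ("a",)),
    ("light", ("a",)),
    ("camera", ("a",)),
}
MODEL = least_herbrand_model(Y1_BODY_AT_A, B_RULES, {"a", "long"})
print(sorted(MODEL))
```

Termination follows from the restriction to finitely many constants and no function symbols, since only finitely many ground atoms exist.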

The computation at line 4 in Algorithm 2 is equivalent to the computation of $Y$ from $X_i$ and $B$, such that $X_i \geq_B Y$. This is based on the proposition that, for all $X,Y \in D$, $X \geq_B Y$ iff there exists an SLD-deduction of $Y$, with $X$ as top clause and members of $B$ as input clauses (Nienhuys-Cheng and de Wolf, 1997). In the algorithm, SLD-deduction is split into SLD-derivation and subsumption, and refinement operator $\rho_L$ for $\langle L_1, \sqsupseteq \rangle$ calculates subsumption. $\rho_L$ is computable because of its local finiteness (Nienhuys-Cheng and de Wolf, 1997). At line 6, the algorithm evaluates whether $Y$ is a definite clause, $Y \nsim_B \bot$ and $Y \geq_B \inf\{X_i \mid 1 \le i \le n\}$. For decidability, generalized subsumption is translated to decidable ordinary subsumption based on the proposition that if the least Herbrand model $M$ of $B \cup Y^-\sigma$ is finite, then $X \geq_B Y$ iff $X \sqsupseteq \{Y^+\sigma\} \cup \overline{M}$, for all $X,Y \in D$ (Nienhuys-Cheng and de Wolf, 1997). At line 12, the algorithm computes the least generalization, under generalized subsumption, of $\{W_i \mid 1 \le i \le n\}$ by alternatively computing the least generalization, under subsumption, denoted by LGS, of $\{\{W_i^+\sigma_i\} \cup \overline{N_i} \mid 1 \le i \le n\}$. At line 16, the algorithm evaluates $Z \not\geq_B X_i$ for all $X_i$ by translating generalized subsumption to ordinary subsumption. Therefore, the following proposition holds.

Proposition 1. Dialectical reasoning on $\langle D, \geq_B \rangle$ is sound with respect to compromise on $\langle D, \geq_B \rangle$.


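Example 2 (dialectical reasoning on $\langle D, \geq_B \rangle$). Consider the following two clauses and the background knowledge.

• $X_1 = \mathit{compact}(x) \wedge \mathit{light}(x) \wedge \mathit{camera}(x) \rightarrow \mathit{buy}(x)$

• $X_2 = \mathit{resolution}(x,\mathit{high}) \wedge \mathit{battery}(x,\mathit{long}) \wedge \mathit{camera}(x) \rightarrow \mathit{buy}(x)$

• $B = \{\mathit{compact}(x) \wedge \mathit{light}(x) \rightarrow \mathit{userFriendly}(x)\}$

Each of the following $Y_i$ $(i = 1, 2)$ is SLD-deducible with $X_i$ as top clause because $X_i$ subsumes $Y_i$.

• $Y_1 = \mathit{battery}(x,\mathit{long}) \wedge \mathit{compact}(x) \wedge \mathit{light}(x) \wedge \mathit{camera}(x) \rightarrow \mathit{buy}(x)$

• $Y_2 = \mathit{userFriendly}(x) \wedge \mathit{resolution}(x,\mathit{high}) \wedge \mathit{battery}(x,\mathit{long}) \wedge \mathit{camera}(x) \rightarrow \mathit{buy}(x)$

$\rho_L$ derives $Y_1$ from $X_1$ by adding literal $\neg\mathit{battery}(y,z)$ and substituting $\{y/x, z/\mathit{long}\}$ into $X_1$. $\rho_L$ derives $Y_2$ from $X_2$ by adding literal $\neg\mathit{userFriendly}(y)$ and substituting $\{y/x\}$ into $X_2$. For simplicity, these literals are arbitrarily chosen to satisfy the condition at line 6 in Algorithm 2. The following $L_1$, $L_2$, and $M$ are the least Herbrand models of $B \cup Y_1^-\{x/a\}$, $B \cup Y_2^-\{x/b\}$, and $B \cup (X_1 \vee \cdots \vee X_n)^-\{x/c\}$, respectively.

• $L_1 = \{\mathit{battery}(a,\mathit{long}), \mathit{compact}(a), \mathit{light}(a), \mathit{camera}(a), \mathit{userFriendly}(a)\}$

• $L_2 = \{\mathit{userFriendly}(b), \mathit{resolution}(b,\mathit{high}), \mathit{battery}(b,\mathit{long}), \mathit{camera}(b)\}$

• $M = \{\mathit{compact}(c), \mathit{light}(c), \mathit{userFriendly}(c), \mathit{camera}(c), \mathit{resolution}(c,\mathit{high}), \mathit{battery}(c,\mathit{long})\}$

We let $\sigma_1 = \{x/d\}$ and $\sigma_2 = \{x/e\}$. Then, the least Herbrand models $N_i$ of $B \cup Y_i^-\sigma_i$ are as follows.

• $N_1 = \{\mathit{battery}(d,\mathit{long}), \mathit{compact}(d), \mathit{light}(d), \mathit{camera}(d), \mathit{userFriendly}(d)\}$

• $N_2 = \{\mathit{userFriendly}(e), \mathit{resolution}(e,\mathit{high}), \mathit{battery}(e,\mathit{long}), \mathit{camera}(e)\}$

Then, the following $Z$ is the least upper bound, under subsumption, of $\{\{Y_i^+\sigma_i\} \cup \overline{N_i} \mid 1 \le i \le n\}$, and therefore, the least upper bound, under generalized subsumption, of $\{Y_1,Y_2\}$.

• $Z = \mathit{userFriendly}(x) \wedge \mathit{battery}(x,\mathit{long}) \wedge \mathit{camera}(x) \rightarrow \mathit{buy}(x)$

By similar evaluation, $Z$ turns out to be a consequence of the dialectical reasoning.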
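The subsumption tests used in Example 2 can be reproduced with a small backtracking search for a substitution. The sketch below is a simplified illustration, not the procedure of the cited literature: a clause is a set of literals `(sign, predicate, args)`, terms beginning with `?` are variables of the subsuming clause, and every term of the subsumed clause is treated as a constant. The helper names and the ground clauses `BOTTOM_1` and `BOTTOM_2`, built from the heads $Y_i^+\sigma_i$ and the models $N_1$ and $N_2$ listed in the example, are assumptions of this sketch.

```python
def is_var(term):
    return term.startswith("?")

def bind(theta, s, t):
    """Extend theta so that term s of the subsumer maps onto term t."""
    if not is_var(s):
        return s == t
    return theta.setdefault(s, t) == t

def subsumes(c, d):
    """True if some substitution theta satisfies c(theta) subset-of d."""
    c = sorted(c)

    def extend(i, theta):
        if i == len(c):
            return True
        sign, pred, args = c[i]
        for sign2, pred2, args2 in d:
            if (sign, pred, len(args)) != (sign2, pred2, len(args2)):
                continue
            new = dict(theta)   # copy so a failed match leaves theta intact
            if all(bind(new, s, t) for s, t in zip(args, args2)) \
                    and extend(i + 1, new):
                return True
        return False

    return extend(0, {})

def definite(head, *body):
    """Definite clause: one positive head literal, negative body literals."""
    return frozenset({(True,) + head} | {(False,) + b for b in body})

X = ("?x",)
X1 = definite(("buy", X), ("compact", X), ("light", X), ("camera", X))
Y1 = definite(("buy", X), ("battery", ("?x", "long")),
              ("compact", X), ("light", X), ("camera", X))
Z = definite(("buy", X), ("userFriendly", X),
             ("battery", ("?x", "long")), ("camera", X))

# Ground clauses built from the skolemized heads and the models N_1, N_2.
BOTTOM_1 = definite(("buy", ("d",)), ("battery", ("d", "long")),
                    ("compact", ("d",)), ("light", ("d",)),
                    ("camera", ("d",)), ("userFriendly", ("d",)))
BOTTOM_2 = definite(("buy", ("e",)), ("userFriendly", ("e",)),
                    ("resolution", ("e", "high")),
                    ("battery", ("e", "long")), ("camera", ("e",)))

print(subsumes(X1, Y1), subsumes(Z, BOTTOM_1), subsumes(Z, BOTTOM_2))
print(subsumes(Z, Y1))  # ordinary subsumption alone does not relate Z and Y1
```

The last check suggests why the background knowledge matters: $Z$ does not subsume $Y_1$ directly, but it does subsume the ground clause obtained from $Y_1$ once the least Herbrand model adds $\mathit{userFriendly}(d)$.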

In this paper, we focus on defining a concrete and

sound algorithm for dialectical reasoning, and we do

not address the problem with the search space reduc-

tion using various biases. We assume the results of

Example 2 in the next section.

6 COMPROMISE-BASED JUSTIFICATION

6.1 Handling Compromise Arguments

In contrast to reasoning about what to believe, i.e.,

theoretical reasoning, reasoning about what to do, i.e.,

practical reasoning, is closely-linked to agents’ goals

or desires because it depends not only on their be-

liefs, but also on their goals. We assume that agents

have their common goal G described by a first-order

formula with zero or more free variables. Obviously,

compromise should be effective only in practical reasoning. In order to handle compromise arguments in the argumentation system, we distinguish practical arguments from theoretical arguments based on whether the arguments satisfy the agents’ common goal or not. An argument is called practical if it satisfies the agents’ common goal, and otherwise it is called theoretical.

Definition 6 (Practical Argument). Let $G$ be a goal and $A$ be an argument. $A$ is a practical argument for $G$ if there exists a ground substitution $\alpha$ such that $\mathrm{CONC}(A) \cup F_n \vdash G\alpha$.

A ground substitution is a mapping from a finite

set of variables to terms without variables. We expand

the notion of compromise into practical arguments.

A compromise argument is a practical argument that

uses dialectical reasoning.

Definition 7 (Compromise Argument). Let $G$ be a goal and $A, B, \ldots, M$ and $N$ be distinct practical arguments for $G$. $A$ is a compromise argument among $B, \ldots, M$ and $N$ if $a \in \mathrm{CONC}(A)$ is a compromise among $b \in \mathrm{CONC}(B), \ldots, m \in \mathrm{CONC}(M)$ and $n \in \mathrm{CONC}(N)$.

The rebutting and the undercutting shown in Definition 3 can be viewed as theoretical in the sense that the grounds of the conflicts are logical contradictions. On the other hand, practical arguments conflict with each other due to the existence of alternatives. Such a practical conflict occurs when the arguments satisfy the same goal in different ways. We define the notion of defeat from three perspectives: rebutting, undercutting and the existence of alternatives.

Definition 8 (Defeat). Let G be a goal and A and B

be distinct arguments. A defeats B iff

• A rebuts B and B does not rebut A; or

• A undercuts B; or

• Both A and B are practical arguments for G such

that they have distinct substitutions α and β for

G, respectively, and B is not a compromise among

arguments, one of which is A.

We say that A strictly defeats B iff A defeats B and B

does not defeat A.
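The three cases of Definition 8 can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation: the rebut and undercut relations of Definition 3 are supplied as given sets of name pairs, a practical argument is represented only by the label of its ground substitution for G, and the class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Argument:
    name: str
    # Label of the ground substitution for G; None if the argument is not practical.
    goal_substitution: Optional[str] = None
    # Names of the arguments this one is a compromise among (empty if none).
    compromise_among: FrozenSet[str] = frozenset()


def defeats(a, b, rebuts, undercuts):
    """Definition 8: a defeats b by rebutting, undercutting, or as an alternative."""
    if a.name == b.name:
        return False  # the definition only concerns distinct arguments
    if (a.name, b.name) in rebuts and (b.name, a.name) not in rebuts:
        return True
    if (a.name, b.name) in undercuts:
        return True
    both_practical = (a.goal_substitution is not None
                      and b.goal_substitution is not None)
    return (both_practical
            and a.goal_substitution != b.goal_substitution
            and a.name not in b.compromise_among)


def strictly_defeats(a, b, rebuts, undercuts):
    """a strictly defeats b iff a defeats b and b does not defeat a."""
    return defeats(a, b, rebuts, undercuts) and not defeats(b, a, rebuts, undercuts)
```

For two practical arguments with substitutions x/a and x/b, neither of which is a compromise, the third case makes them defeat each other, so neither defeat is strict. A practical argument with substitution x/c that is a compromise among those two is not defeated by either of them through the third case.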

6.2 An Illustrative Example

We now detail the motivating example about choosing a camera from Section 2. Agents A and B are assumed to share the common goal G ≡ buy(x) ∧ camera(x) and to have the following individual knowledge bases, denoted by Γ^A = F_n^A ∪ F_c^A ∪ ∆^A and Γ^B = F_n^B ∪ F_c^B ∪ ∆^B, respectively.

• F_n^A = {camera(a), camera(c), overBudget(b), ∀x.compact(x) ∧ light(x) ∧ camera(x) → buy(x) (= X_1), ∀x.overBudget(x) → ¬buy(x), takeShoot(b,200)}

• F_c^A = {compact(a), userFriendly(c) (= f_1), battery(c,long), ∀x.compact(x) ∧ light(x) → userFriendly(x) (= r_1), ∀x∀y.takeShoot(x,y) ∧ >(300,y) → ¬battery(x,long) (= r_2)}

• ∆^A = {∼¬light(x) ⇒ light(x) (= d_1)}

• F_n^B = {camera(b), ¬inStock(a), ∀x.resolution(x,high) ∧ battery(x,long) ∧ camera(x) → buy(x) (= X_2), ∀x.¬inStock(x) → ¬buy(x), price(b,$200), ∀x∀y.price(x,y) ∧ ≥($300,y) → withinBudget(x) (= r_3)}

• F_c^B = {resolution(b,high) (= f_2)}

• ∆^B = {∼¬battery(x,long) ⇒ battery(x,long) (= d_2)}

Further, only deductive reasoning, DMP, and dialectical reasoning on <D, ≥_B> can be used for constructing arguments. Agents construct arguments only from their own individual knowledge bases together with the opponent's previously stated arguments. They advance the argumentation by constructing dialogue trees whose roots are practical arguments, which means that they try to justify their own practical arguments for G directly. For readability, we express arguments as proof trees to visualize the reasoning. Deductive reasoning, DMP, and dialectical reasoning are expressed by ‘—,’ ‘···,’ and ‘= =,’ respectively.

The following arguments A_1, A_2 and A_3, where A_2 strictly defeats A_1 and A_3 defeats A_1, form a dialogue tree.

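A_1:
    d_1{x/a}
    · · · · · · · ·
    light(a)    compact(a)    cam(a)    X_1
    ————————————————————————————————————————
    buy(a)

A_2:
    ¬inStock(a)    ¬inStock(x) → ¬buy(x)
    ————————————————————————————————————
    ¬buy(a)

A_3:
    d_2{x/b}
    · · · · · · · · · ·
    battery(b,long)    f_2    cam(b)    X_2
    ————————————————————————————————————————
    buy(b)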

buy(a), concluded by A_1, is rated as unacceptable because A cannot win the dialogue tree. Then, in turn, B tries to construct a practical argument for G. The following arguments A_4, A_5 and A_6, together with A_1 and A_3, form a dialogue tree in which A_4 defeats A_3, A_5 strictly defeats A_4, A_6 strictly defeats A_3, and A_1 defeats A_3.

A_4:
    ∼withinBudget(b) ⇒ ¬buy(b)
    · · · · · · · · · · · · · ·
    ¬buy(b)

A_5:
    price(b,$200)    ≥($300,$200)    r_3
    —————————————————————————————————————
    withinBudget(b)

A_6:
    takeShoot(b,200)    >(300,200)    r_2
    ——————————————————————————————————————
    ¬battery(b,long)
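The outcome of the two dialogue trees, one rooted at A_1 and one rooted at A_3, can be checked with a short Python sketch. It assumes a simplified winning condition that this section does not spell out: a node wins exactly when every argument moved against it loses. The dictionary encoding and the function name are hypothetical.

```python
def wins(node, children):
    """A node wins iff every argument moved against it (its children) loses.

    A leaf has no children and therefore wins.
    """
    return all(not wins(child, children) for child in children.get(node, []))


# Tree rooted at A_1: A_2 and A_3 are moved against A_1 and are not answered.
tree_rooted_at_a1 = {"A1": ["A2", "A3"]}

# Tree rooted at A_3: A_4, A_6 and A_1 are moved against A_3; A_5 answers A_4.
tree_rooted_at_a3 = {"A3": ["A4", "A6", "A1"], "A4": ["A5"]}

a1_wins = wins("A1", tree_rooted_at_a1)
a3_wins = wins("A3", tree_rooted_at_a3)
```

Under this reading both roots lose: A_1 is left with unanswered defeaters, and although A_5 disposes of A_4, the defeaters A_6 and A_1 of A_3 remain unanswered.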

B cannot win the dialogue tree either. Thus, buy(b) is rated as unacceptable. Neither agent can construct another practical argument for G using only deductive reasoning and DMP. However, A can construct the following compromise argument A_7 between A_1 and A_3 by using dialectical reasoning in combination with these forms of reasoning. X_1, X_2, and Z in A_7 are the same as in Example 2.

A_7:
    X_1    X_2    r_1
    = = = = = = = = = =
    Z    f_1    battery(c,long)    cam(c)
    ——————————————————————————————————————
    buy(c)

Neither A nor B can construct any argument that defeats A_7. Thus, A_7 forms a dialogue tree by itself, and buy(c) is rated as acceptable. Note that the argument concluding buy(c) cannot be constructed from Γ^A ∪ Γ^B without dialectical reasoning.
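The last remark can be illustrated with a small forward-chaining sketch in Python over the positive part of the two knowledge bases. Several simplifications are assumptions of this sketch rather than part of the paper: binary atoms such as battery(x,long) are flattened into unary predicates such as battery_long, negative literals and numeric facts are omitted, and the defaults d_1 and d_2 are read optimistically, so that light(x) and battery(x,long) are assumed for every constant.

```python
CONSTANTS = ["a", "b", "c"]

# Positive facts from both knowledge bases (flattened encoding, see lead-in).
FACTS = {
    ("camera", "a"), ("camera", "b"), ("camera", "c"),
    ("compact", "a"), ("userFriendly", "c"), ("battery_long", "c"),
    ("resolution_high", "b"),
}

# Rules over one variable x, written as (body predicates, head predicate).
X1 = (["compact", "light", "camera"], "buy")
X2 = (["resolution_high", "battery_long", "camera"], "buy")
R1 = (["compact", "light"], "userFriendly")
Z = (["userFriendly", "battery_long", "camera"], "buy")


def closure(facts, rules, assume_defaults=True):
    """Return all ground atoms derivable from the facts with the given rules."""
    derived = set(facts)
    if assume_defaults:
        # Optimistic reading of d_1 and d_2: the defaults are never blocked.
        for c in CONSTANTS:
            derived.add(("light", c))
            derived.add(("battery_long", c))
    changed = True
    while changed:  # repeat until no rule adds a new atom
        changed = False
        for body, head in rules:
            for c in CONSTANTS:
                if (head, c) not in derived and all((p, c) in derived for p in body):
                    derived.add((head, c))
                    changed = True
    return derived


without_z = closure(FACTS, [X1, X2, R1])
with_z = closure(FACTS, [X1, X2, R1, Z])
```

Even with both defaults assumed, buy(c) is absent from `without_z`, because neither compact(c) nor resolution(c,high) is available; it appears in `with_z` once the compromise Z is added as a rule.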

7 RELATED WORKS

The prime difference between dialectical reasoning and inductive or abductive reasoning is that the consequence of dialectical reasoning satisfies incompleteness, given in Definition 5, whereas inductive and abductive hypotheses satisfy completeness. Moreover, dialectical reasoning differs from deductive reasoning in that the consequences of dialectical reasoning are not necessarily deducible. For instance, in Example 1, Z is not a semantic consequence of B ∪ {X_1, X_2}, although Z is a compromise between X_1 and X_2. However, if the complete lattice is characterized by the satisfiability relation, then any compromise is a semantic consequence of the premises. Nonetheless, dialectical reasoning is significant because none of the other reasoning mechanisms addresses what compromise is or how agents infer it.

In (Amgoud et al., 2008), the authors introduce

the notion of concession as an essential element of

argument-based negotiation. They define a concession as a given offer supported by an argument that has a suboptimal state in argumentation. Thus, in contrast to our approach, concession is not realized by a reasoning mechanism. Similarly, game theory does not address the rational generation of a new option, although it provides a way to make rational choices. In (Sawamura et al., 2003), the authors introduce seven dialectical inference rules into dialectical logic DL and weaker dialectical logic DM (Routley and Meyer, 1976) in order to derive concession and compromise from an inconsistent theory. The authors, however, do not show an underlying principle for these rules. Further, contrary to the philosophical opinion (Sabre, 1991), the premises of each inference rule are restricted to logical contradictions. In contrast, we give the underlying principle of dialectical reasoning by defining abstract reasoning on a complete lattice. Further, as shown in Example 2, we do not restrict the premises to contradictions.

8 CONCLUSIONS AND FUTURE

WORKS

We defined compromise on an abstract complete lattice and proposed an algorithm for dialectical reasoning that is sound and complete with respect to compromise. We then proposed a concrete algorithm for dialectical reasoning characterized by a definite clausal language and generalized subsumption, and proved it to be sound with respect to compromise. We extended the argumentation system proposed by Prakken (Prakken, 1997) to handle compromise arguments, and illustrated that a compromise argument realizes a compromise-based justification in argument-based deliberation.

We plan to develop more practical algorithms by incorporating language and search biases into our algorithms. Furthermore, several kinds of practical reasoning have recently been proposed for argument-based reasoning (Bench-Capon and Prakken, 2006). However, little work focuses on the relation between the phases of argumentation and the kinds of reasoning used in them. In particular, compromise should be sought at the final phase of deliberation or negotiation. We will enable agents to use the appropriate reasoning depending on the phase of argumentation.

REFERENCES

Amgoud, L., Dimopoulos, Y., and Moraitis, P. (2008). A

general framework for argumentation-based negoti-

ation. In Proc. of The 4th International Workshop

on Argumentation in Multi-Agent Systems (ArgMAS

2007), pages 1–17.

Bench-Capon, T. J. M. and Prakken, H. (2006). Justifying

actions by accruing arguments. In Proc. of The First

International Conference on Computational Models

of Argument (COMMA 2006), pages 247–258.

Buntine, W. (1988). Generalized subsumption and its appli-

cations to induction and redundancy. Artificial Intelli-

gence, 36:146–176.

Carnielli, W., Coniglio, M. E., and Marcos, J. (2007). Logics of formal inconsistency. In Handbook of Philosophical Logic, 2nd edition, volume 14, pages 1–93. Springer.

Dung, P. M. (1995). On the acceptability of arguments and its fundamental role in nonmonotonic reasoning, logic programming, and n-person games. Artificial Intelligence, 77:321–357.

Emden, M. H. V. and Kowalski, R. A. (1976). The seman-

tics of predicate logic as a programming language.

Journal of the Association for Computing Machinery,

23:733–742.

Gordon, T. F. (1995). The Pleadings Game – An Artifi-

cial Intelligence Model of Procedural Justice. Kluwer

Academic Publishers.

Hamblin, C. L. (1970). Fallacies. Methuen.

Nienhuys-Cheng, S.-H. and de Wolf, R. (1997). Foundations of Inductive Logic Programming. Springer.

Nisbett, R. E. (2003). The Geography of Thought: How Asians and Westerners Think Differently ... and Why. Free Press.

Prakken, H. (1997). Logical Tools for Modelling Legal

Argument: A Study of Defeasible Reasoning in Law.

Kluwer Academic Publishers.

Prakken, H. (1999). Dialectical proof theory for defeasible

argumentation with defeasible priorities (preliminary

report). In Proc. of The 4th ModelAge Workshop ‘For-

mal Models of Agents’, pages 202–215.

Prakken, H. and Sartor, G. (1997). Argument-based ex-

tended logic programming with defeasible priorities.

Journal of Applied Non-classical Logics, 7:25–75.

Reiter, R. (1980). A logic for default reasoning. Artificial

Intelligence, 13:81–132.

Rescher, N. (2007). Dialectics – A Classical Approach to

Inquiry. ontos verlag.

Routley, R. and Meyer, R. K. (1976). Dialectical logic, clas-

sical logic, and the consistency of the world. Studies

in East European Thought, 16(1-2):1–25.

Sabre, R. M. (1991). An alternative logical framework for

dialectical reasoning in the social and policy sciences.

Theory and Decision, 30(3):187–211.

Sawamura, H., Yamashita, M., and Umeda, Y. (2003).

Applying dialectic agents to argumentation in e-

commerce. Electronic Commerce Research, 3(3-

4):297–313.

Thomas, K. W. (1992). Conflict and conflict management:

Reflections and update. Journal of Organizational Be-

havior, 13:265–274.
