PALMPRINT RECOGNITION BASED ON REGIONS SELECTION

Salma Ben Jemaa

Higher Institute of Computer and Multimedia, Sfax University, Tunis Street, Sfax, Tunisia

Mohamed Hammami

Faculty of Sciences, Sfax University, Sokra Street, Sfax, Tunisia

Keywords:

Palmprint recognition, Biometric, Contactless, Local Binary Pattern (LBP), Sequential Forward Floating Selection (SFFS).

Abstract:

Palmprint recognition, as a reliable personal identification method, has received increasing attention and has become an area of intense research in recent years. In this paper, we propose a generic biometric system that can be used with or without contact, depending on the capture system, to ensure public security through palmprint-based identification. The system is based on a new global approach that focuses only on the areas of the image that have the most discriminating features for recognition. Experiments conducted on two large databases, namely “CASIA-Palmprint” and “PolyU-Palmprint”, show promising results and demonstrate the effectiveness of the proposed approach.

1 INTRODUCTION

Progress in information technology, the growth of everyday transactions and recent security threats have naturally raised the need for reliable identification of persons. Recently, biometrics has emerged as a new and effective identification technology. In the biometrics family, palmprint is a new but promising member. Palmprint characteristics are relatively stable and unique, and the hand has high user acceptability. Usually, palmprint biometrics requires contact with the capture system, so all users are obliged to touch the same glass plate, which some users refuse to do for hygienic reasons. Recently, a few studies (Doublet et al., 2007) (Goh et al., 2008) have sought to make acquisition more comfortable and more hygienic by removing the contact requirement. Although palmprint is a relatively new biometric technology, a number of interesting approaches have been proposed in the literature over the last ten years. There are mainly two categories of approaches to palmprint recognition. The first category is the structural approaches, such as those based on the principal lines (Wu et al., 2004), wrinkles (Chen et al., 2001), ridges and feature points (Duta et al., 2002). Unfortunately, it is difficult to obtain a good recognition rate using only the principal lines because of their resemblance among different individuals. Besides, wrinkles and ridges of the palm always cross and overlap each other, which complicates the features extraction task. The second category is the global approaches, such as Gabor filters (Zhang et al., 2003), Eigenpalm (Lu et al., 2003), Fisherpalms (Wu et al., 2003), Fourier transform (Li et al., 2002), various invariant moments (Kan and Srinath, 2002), morphology operations (Wu et al., 2004) (Han et al., 2003) and Local Binary Pattern (Wang et al., 2006). Global approaches have proved to be the most efficient in the literature and can therefore be used efficiently for palmprint recognition. Previous researchers mostly use the entire area of the palmprint as input to the recognition algorithm. The main contribution of this work is to focus only on the areas of the image that have the most discriminating features for recognition, in order to propose a biometric system for contactless applications.

The remainder of this paper is organized as follows: Section 2 describes the proposed palmprint recognition system. Section 3 presents some experiments and results to show the effectiveness of the proposed approach. Finally, Section 4 summarizes the main results and offers concluding remarks.


Ben Jemaa S. and Hammami M..

PALMPRINT RECOGNITION BASED ON REGIONS SELECTION.

DOI: 10.5220/0003317803200325

In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2011), pages 320-325

ISBN: 978-989-8425-47-8

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

2 THE PROPOSED SYSTEM

The proposed biometric recognition system is composed of four steps: (1) preprocessing, (2) features extraction, (3) features selection and (4) matching and decision making.

2.1 Preprocessing

The goal of preprocessing is to robustly locate the Region Of Interest (ROI) of the palm. In our case, the preprocessing step consists of three phases: a hand detection phase, followed by a ROI extraction phase and finally a ROI preprocessing phase.

2.1.1 Hand Detection

First of all, the palmprint image is rotated 90° in the clockwise direction. Next, the image is segmented into foreground and background using Otsu's method (Otsu, 1979). Some fingers may appear disconnected from the hand when the user wears rings. We therefore use morphology operations to resolve this problem and to fill any holes that might be present in the foreground and background of the segmented image. Finally, the contour of the hand shape is obtained from the binary image using the border tracing algorithm (Shapiro and Stockman, 2001).
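As an illustration of the segmentation step, the following is a minimal pure-Python sketch of Otsu's threshold selection. The function names `otsu_threshold` and `segment` are hypothetical, the image is assumed to be a list of rows of 8-bit gray levels, and the hand is assumed to be brighter than the background; the morphology and border tracing steps are not covered.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Pixels with value <= t form the low class, the others the high class.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in the low class
    sum0 = 0  # sum of gray levels in the low class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue          # low class still empty
        w1 = total - w0
        if w1 == 0:
            break             # high class empty: no further split possible
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance up to a constant factor 1 / total**2.
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def segment(image):
    """Binarize an image (list of rows): 1 = foreground (assumed brighter)."""
    flat = [p for row in image for p in row]
    t = otsu_threshold(flat)
    return [[1 if p > t else 0 for p in row] for row in image]
```

In practice an image-processing library would normally provide this step; the sketch only makes the selection criterion explicit.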

2.1.2 Region of Interest (ROI) Extraction

To extract the ROI of the palmprint image, our system relies on the detection of the four local minima (finger-webs) located on the hand contour. Once these points are detected, the hand can be classified as a left hand or a right hand. This classification helps us to locate the ROI.

A. Finger-webs Determination

In order to detect the four local minima, we apply the radial distance to a reference point technique (Konukoglu et al., 2006). First, the middle point Wm of the segment where the arm or wrist region crosses the image edge is chosen as the reference point (Konukoglu et al., 2006), as shown in Figure 1.(a), and the Euclidean distance from Wm to every border pixel is calculated. Then, a distance distribution diagram is plotted (Figure 1.(b)). Finally, the local minima of the distance distribution diagram, which represent the finger-web locations, are found (Figure 1.(c)).

However, this method has a disadvantage: it is sensitive to contour irregularities, which lead to false detections. We therefore apply a smoothing step based on a low-pass filter.

Figure 1: Finger-webs determination. (a) Reference point Wm. (b) Distance distribution diagram of the hand contour points to the reference point. (c) Finger-webs locations.
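The finger-web search described above can be sketched as follows. This is only an illustration under stated assumptions: the contour is an ordered list of (x, y) points, the low-pass filter is taken to be a centred moving average applied to the distance profile (the text does not specify the filter or what it is applied to), and the function names are hypothetical.

```python
import math


def radial_distances(contour, ref):
    """Euclidean distance from the reference point to each contour point."""
    return [math.hypot(x - ref[0], y - ref[1]) for x, y in contour]


def moving_average(values, window=3):
    """Low-pass filter: centred moving average, with a shorter window at the ends."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def local_minima(values):
    """Indices strictly below the left neighbour and not above the right one."""
    return [i for i in range(1, len(values) - 1)
            if values[i] < values[i - 1] and values[i] <= values[i + 1]]


# A small irregularity at index 2 creates a spurious minimum in the raw profile;
# it disappears once the profile is smoothed.
noisy_profile = [10, 8, 6, 7, 5, 3, 5, 7, 9]
raw_minima = local_minima(noisy_profile)
smooth_minima = local_minima(moving_average(noisy_profile))
```

The window size trades noise rejection against localization accuracy and would need tuning on real contours.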

B. Classification of Hands into Right and Left Hand

The proposed system gives the user the flexibility to use either hand for recognition. We therefore apply a classification step to the database. This step reduces the number of comparisons, and hence the computation and recognition time, since only half of the database needs to be searched. It is therefore very interesting for real-time applications. The following rules are applied to distinguish right and left hands:

• If Y1 > Y4 then left hand

• If Y1 < Y4 then right hand

where Y1 and Y4 are the ordinates of the first and fourth local minima previously detected.

These classification rules fail when the thumb is absent, in which case only three local minima are detected. To overcome this limitation, we count the number of skin pixels that intersect the left edge of the image: if it exceeds the number of skin pixels that intersect the right edge, the hand is a left hand; otherwise it is a right hand.
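The two rules and the fallback can be combined as in the following sketch. The function name `classify_hand` and the data layout (finger-webs as (x, y) tuples in detection order, binary mask as a list of rows with 1 for skin) are assumptions made for the example; the case Y1 = Y4, which the rules do not cover, is arbitrarily sent to the fallback.

```python
def classify_hand(finger_webs, mask):
    """Return "left" or "right" for a segmented hand image.

    finger_webs: list of (x, y) local minima, in detection order.
    mask: binary image as a list of rows, 1 for skin pixels.
    """
    if len(finger_webs) == 4:
        y1, y4 = finger_webs[0][1], finger_webs[3][1]
        if y1 > y4:
            return "left"
        if y1 < y4:
            return "right"
    # Fallback (e.g. thumb missing, only three minima): compare the number
    # of skin pixels touching the left and right image edges.
    left_edge = sum(row[0] for row in mask)
    right_edge = sum(row[-1] for row in mask)
    return "left" if left_edge > right_edge else "right"
```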

C. Region of Interest (ROI) Location

After hand detection, it is necessary to extract the ROI independently of the distance between the hand and the capture system. Our extraction is based on hand dimensions and on the palm extraction method described by (Doublet et al., 2007). In the work of (Doublet et al., 2007), the width of the palm is calculated as the Euclidean distance between two points corresponding to two indexes of the hand's shape model, fixed experimentally at 30 and 125. In our work, these two points are defined differently, depending on the size of the hand. To determine the width of the palm, a line is drawn between points A and B (Figure 2.(a)). Then, we draw the segment [OE] along the perpendicular bisector of the segment [AB], with [OE] = 1/2 [AB]. Finally, we draw the line that passes through the point E and is perpendicular to the segment [OE]; its intersections with the edge of the hand are the two points F1 and F2. The Euclidean distance between F1 and F2 gives the width of the palm, denoted L (Figure 2.(a)). Once the palm's width L is determined, we can create the ROI based on the palm dimensions. We first draw the segment [OO1], which is perpendicular to the segment [AB], with [OO1] = 1/10 L (Doublet et al., 2007). Then we draw the segment [E1E2], which passes through the point O1 and is perpendicular to the segment [OO1], with [E1E2] = 2/3 L (Doublet et al., 2007). Finally, we draw the other three sides, each of length 2/3 L. Figure 2.(b) shows the creation of the ROI.

Figure 2: Region of interest location. (a) Palm width L determination. (b) ROI creation with [OO1] = 1/10 L and [E1E2] = [E1E3] = [E3E4] = [E4E2] = 2/3 L.

After locating the ROI, we apply a mask of the same size and shape to the original image in order to separate the ROI from the rest of the hand.
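The ROI construction can be illustrated with a short geometric sketch. It assumes that the points A and B and the palm width L are already known, that O is the midpoint of [AB], and that [E1E2] is centred on O1; the last assumption and the `toward_palm` sign, which selects the side of [AB] on which the palm lies, are not stated in the text. The function name `roi_square` is hypothetical.

```python
import math


def roi_square(a, b, palm_width, toward_palm=1):
    """Return the corners (E1, E2, E3, E4) of the square ROI.

    a, b: (x, y) end points of the segment [AB].
    palm_width: the palm width L.
    toward_palm: +1 or -1, side of [AB] on which the palm lies (assumption).
    """
    ox, oy = (a[0] + b[0]) / 2, (a[1] + b[1]) / 2   # O, midpoint of [AB]
    length_ab = math.hypot(b[0] - a[0], b[1] - a[1])
    ux, uy = (b[0] - a[0]) / length_ab, (b[1] - a[1]) / length_ab  # along [AB]
    nx, ny = -uy * toward_palm, ux * toward_palm    # unit normal to [AB]

    offset = palm_width / 10        # [OO1] = 1/10 L
    side = 2 * palm_width / 3       # each side of the ROI = 2/3 L
    o1 = (ox + nx * offset, oy + ny * offset)

    half = side / 2
    e1 = (o1[0] - ux * half, o1[1] - uy * half)
    e2 = (o1[0] + ux * half, o1[1] + uy * half)
    e3 = (e1[0] + nx * side, e1[1] + ny * side)     # [E1E3] perpendicular to [E1E2]
    e4 = (e2[0] + nx * side, e2[1] + ny * side)
    return e1, e2, e3, e4
```

Because every length is expressed as a fraction of L, the resulting square scales with the hand, which is what makes the extraction independent of the distance to the capture system.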

2.1.3 ROI Preprocessing

As the ROI may have different sizes and orientations, a normalization step is necessary. First, the images are rotated to the upright position, using the vertical axis as the rotation-reference axis. Then, as the size of the ROI varies from hand to hand, the images are resized to a standard size of T × T pixels, with T = 180 in our work. Finally, to improve the quality of the image, we attenuate the noise by applying a low-pass filter.

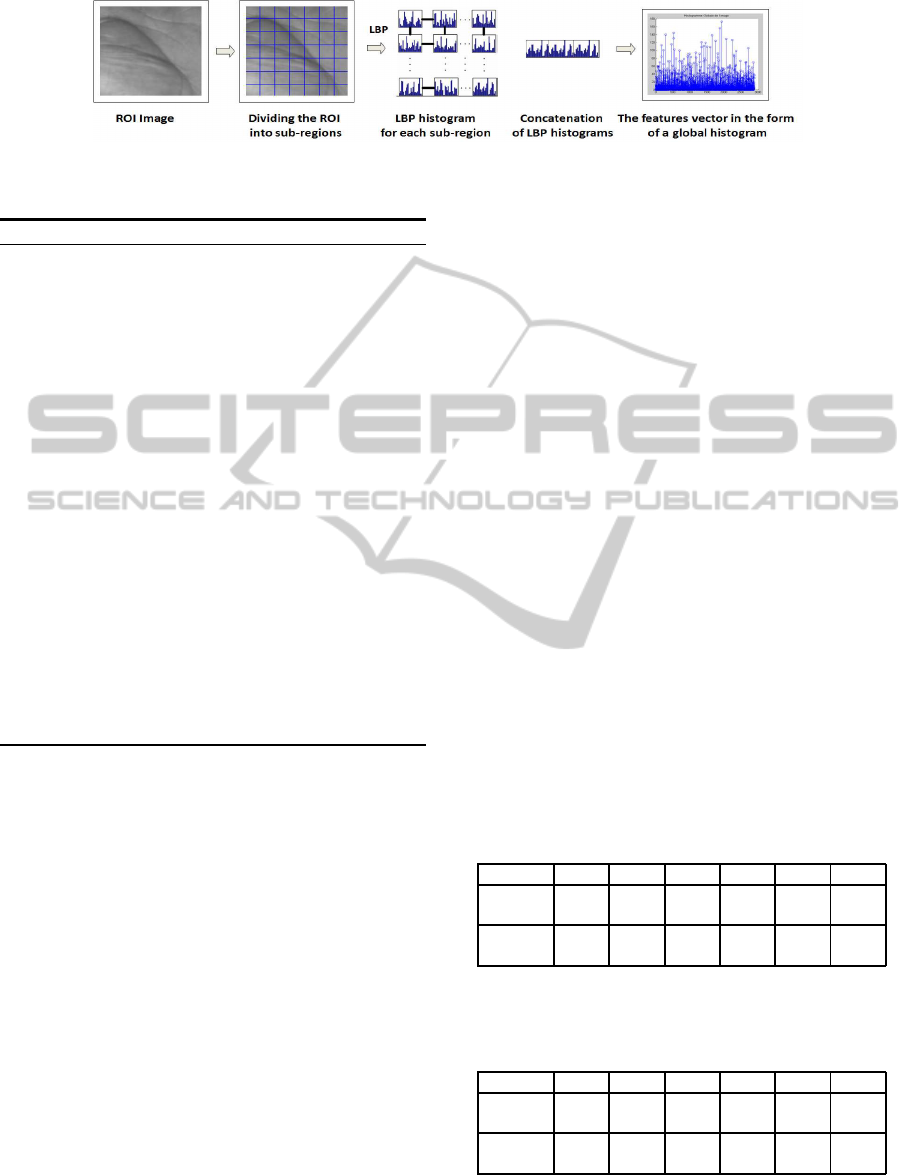

2.2 Features Extraction

Features extraction aims to describe the ROI by the features that best discriminate the palmprint. In our work, we propose a new way to apply the local binary patterns (LBP) texture descriptor.

The LBP operator, introduced by (Ojala et al., 1996), is a simple yet powerful texture descriptor which has been used in various applications. The LBP operator labels every pixel in an image by thresholding its neighboring pixels with the center value. After the labels have been determined, a histogram H of the labels, of dimension 256, is constructed as:

$$H_l = \sum_{i,j} I\{L(i,j) = l\}, \quad l = 0, \ldots, n-1 \qquad (1)$$

where $n$ is the number of different labels produced by the LBP operator, $i$ and $j$ refer to the pixel location, $L(i,j)$ is the label of pixel $(i,j)$, and $I\{A\}$ equals 1 if $A$ is true and 0 otherwise. To improve the robustness and generalization ability of the original LBP operator, it has been extended by (Ojala et al., 2002) to take into account neighborhoods of different sizes and shapes. Another extension to the original LBP operator introduced by (Ojala et al., 2002) is the use of so-called uniform patterns, i.e. patterns that contain at most two bitwise transitions between 0 and 1 when the binary code is read circularly. Ojala et al. also found that only 58 of the 256 LBP patterns are uniform. (Ahonen et al., 2004) found in their experiments with texture images that 90% of patterns are uniform. Consequently, the amount of data can be reduced by constructing a histogram of dimension 59 (one bin per uniform pattern and a single bin for all non-uniform patterns). The whole procedure of our palmprint features extraction is illustrated in Figure 3.
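To make the 59-bin uniform histogram concrete, here is a minimal pure-Python sketch. It simplifies the (8, 1) neighborhood to the eight pixels of the 3×3 square around the center (no interpolation on a circle) and skips border pixels; both choices, as well as all names, are assumptions of the example.

```python
def transitions(code):
    """Number of 0/1 transitions in an 8-bit pattern read circularly."""
    rotated = ((code << 1) | (code >> 7)) & 0xFF
    return bin(code ^ rotated).count("1")


# The uniform patterns (at most two transitions) each get their own bin;
# every other pattern falls into one shared extra bin.
UNIFORM = [c for c in range(256) if transitions(c) <= 2]
BIN_OF = {code: index for index, code in enumerate(UNIFORM)}
NON_UNIFORM_BIN = len(UNIFORM)

# Neighbours visited clockwise, starting from the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, i, j):
    """Threshold the 8 neighbours of pixel (i, j) against the center value."""
    center = img[i][j]
    code = 0
    for bit, (di, dj) in enumerate(OFFSETS):
        if img[i + di][j + dj] >= center:
            code |= 1 << bit
    return code


def uniform_lbp_histogram(img):
    """Histogram of uniform LBP labels over the interior pixels of img."""
    hist = [0] * (len(UNIFORM) + 1)
    for i in range(1, len(img) - 1):
        for j in range(1, len(img[0]) - 1):
            hist[BIN_OF.get(lbp_code(img, i, j), NON_UNIFORM_BIN)] += 1
    return hist
```

The list `UNIFORM` has 58 entries, so the histogram has 59 bins, in agreement with the dimensions quoted above.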

First, we divide the ROI of the palmprint into $r \times r$ non-overlapping square sub-regions $R_0, \ldots, R_{r \times r - 1}$, each with side length $T/r$. This division allows us to take into account the spatial relations of the palmprint regions. Then, we apply uniform LBP in the (8, 1) neighborhood within each sub-region to describe the texture features of the palmprint. Finally, the histograms produced by the regions are concatenated into a global histogram that represents our features vector.
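The division and concatenation steps can be sketched as follows. The per-region descriptor is passed in as a function so that the block stays self-contained; `coarse_histogram` is a hypothetical 59-bin stand-in for the uniform LBP histogram, and dropping the leftover rows and columns when T is not a multiple of r is an assumption (the text only gives the side length T/r).

```python
def split_into_blocks(img, r):
    """Split a square image into r * r non-overlapping square sub-regions.

    Rows and columns left over when the size is not a multiple of r are
    dropped (assumption).
    """
    side = len(img) // r
    blocks = []
    for bi in range(r):
        for bj in range(r):
            rows = img[bi * side:(bi + 1) * side]
            blocks.append([row[bj * side:(bj + 1) * side] for row in rows])
    return blocks


def concatenated_features(img, r, describe):
    """Concatenate the histograms returned by describe() for each block."""
    features = []
    for block in split_into_blocks(img, r):
        features.extend(describe(block))
    return features


def coarse_histogram(block, bins=59):
    """Stand-in descriptor: gray-level histogram with 59 bins (not LBP)."""
    hist = [0] * bins
    for row in block:
        for p in row:
            hist[p * bins // 256] += 1
    return hist


# With T = 180 and r = 7 there are 49 regions of 59 bins each.
roi = [[(i + j) % 256 for j in range(180)] for i in range(180)]
features = concatenated_features(roi, 7, coarse_histogram)
```

With a 59-bin descriptor the concatenated vector has 7 × 7 × 59 = 2891 entries, which matches the features vector size reported without selection in Table 4.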

2.3 Features Selection

When the image has been divided into regions, it can be expected that some of the regions contain more useful information than others for distinguishing between people. Therefore, we use Sequential Forward Floating Selection (SFFS), developed by (Pudil et al., 1994), as a features selection method. The principle of the SFFS algorithm is as follows: at each step it adds one feature (i.e. one sub-region) and then removes previously selected features for as long as the resulting subset improves the objective function, namely the minimization of the false classification rate. The pseudo-code of our features selection algorithm is given in Algorithm 1.

In Algorithm 1, $E_0$ is the initial error rate (false classification rate), $C_j$ refers to the sub-regions, $n$ to the number of selected sub-regions, $k$ to the total number of sub-regions and $S_n$ to the pool of selected sub-regions.
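The floating search principle can be sketched in Python as below. This is not the authors' implementation: it follows the usual formulation of Pudil et al., in which a backward step is accepted only if the reduced subset beats the best subset of that size found so far, a choice made here so that the loop always terminates. The `error` callable, which would be the false classification rate measured on training data, and all other names are hypothetical.

```python
def sffs(candidates, error):
    """Sequential Forward Floating Selection minimizing error(subset).

    candidates: list of feature identifiers (e.g. sub-region indices).
    error: callable mapping a list of features to an error rate in [0, 1].
    Returns (best_subset, best_error) over all subset sizes visited.
    """
    selected = []
    best = {0: ([], 1.0)}  # best (subset, error) found for each subset size
    while len(selected) < len(candidates):
        # Forward step: add the feature that gives the lowest error.
        remaining = [c for c in candidates if c not in selected]
        added = min(remaining, key=lambda c: error(selected + [c]))
        selected = selected + [added]
        err = error(selected)
        size = len(selected)
        if size not in best or err < best[size][1]:
            best[size] = (selected, err)
        # Backward (floating) steps: drop features while that improves on
        # the best subset of the smaller size.
        while len(selected) > 2:
            dropped = min(
                selected,
                key=lambda c: error([s for s in selected if s != c]))
            reduced = [s for s in selected if s != dropped]
            reduced_err = error(reduced)
            if reduced_err < best[len(reduced)][1]:
                selected = reduced
                best[len(reduced)] = (reduced, reduced_err)
            else:
                break
    return min(best.values(), key=lambda item: item[1])


def toy_error(subset):
    """Toy objective: regions 0 and 2 help, every other region hurts."""
    useful = sum(1 for s in subset if s in (0, 2))
    useless = len(subset) - useful
    return 0.5 - 0.2 * useful + 0.05 * useless


best_subset, best_error = sffs([0, 1, 2, 3, 4], toy_error)
```

On the toy objective the search keeps only the two useful regions, which mirrors the idea of discarding sub-regions that carry little discriminating information.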

2.4 Features Matching

Several possible dissimilarity measures have been proposed for histograms. In this work, we use the following $\chi^2$ statistic:

$$\chi^2(H^P, H^G) = \sum_{i=0}^{l} \frac{(H_i^P - H_i^G)^2}{H_i^P + H_i^G} \qquad (2)$$

VISAPP 2011 - International Conference on Computer Vision Theory and Applications


Figure 3: Diagram of palmprint features extraction.

Algorithm 1: Features selection algorithm.

Initialize an empty subset S_0; E_0 = 100%; n = 0
while n < k do
    /* Forward: find the best feature, i.e. the one that minimizes the objective function, and update S_n */
    n = n + 1
    C_j = argmin_{C_j ∉ S_{n-1}} E(S_{n-1} ∪ C_j)
    S_n = S_{n-1} ∪ C_j
    E_n = E(S_{n-1} ∪ C_j)
    /* Backward: find the worst feature, i.e. the one whose removal minimizes the objective function, and update S_n */
    while n > 2 do
        C_j = argmin_{C_j ∈ S_n} E(S_n \ C_j)
        B_e = S_n \ C_j
        E_{B_e} = E(B_e)
        if E_{B_e} < E_n then
            S_{n-1} = B_e
            E_{n-1} = E_{B_e}
            n = n - 1
        else
            break
        end if
    end while
end while

In Equation (2), $l$ is the length of the features vector of the palmprint image, $H^P$ refers to a target palmprint histogram and $H^G$ to a model palmprint histogram.
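A minimal sketch of the matching step based on Equation (2) is given below. Skipping bins where both histograms are empty (to avoid a division by zero) and deciding by nearest neighbor over the gallery are assumptions of the example, since the decision rule is not detailed in the text; the function names are hypothetical.

```python
def chi_square(h_p, h_g):
    """Chi-square dissimilarity between a probe and a gallery histogram."""
    total = 0.0
    for p, g in zip(h_p, h_g):
        if p + g > 0:  # assumption: bins empty in both histograms add nothing
            total += (p - g) ** 2 / (p + g)
    return total


def identify(probe, gallery):
    """Return the label of the gallery histogram closest to the probe.

    gallery: list of (label, histogram) pairs.
    """
    return min(gallery, key=lambda item: chi_square(probe, item[1]))[0]
```

For example, `chi_square([4, 0], [0, 4])` evaluates to 16/4 + 16/4 = 8.0, while identical histograms give 0.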

3 EXPERIMENTAL RESULTS AND COMPARISON

In this section, we present the experiments performed

during the on-line stage. Before presenting them, we

will briefly describe the databases used.

3.1 Palmprint Databases

In order to test our recognition process, two palmprint databases are used: the “CASIA-Palmprint” database (CASIA-Palmprint-Database, 2003) and the “PolyU-Palmprint” database (PolyU-Palmprint-Database, 2005). The “CASIA-Palmprint” database contains 4512 palmprint images captured from 282 subjects. For each subject, 8 palmprint images were collected from each of the left and right palms. All palmprint images were collected in the same session. The “PolyU-Palmprint” database contains 7752 palmprint images collected from 193 subjects. In this dataset, the palmprint images were collected in two separate sessions. In each session, the subject was asked to provide about 10 images of each of the left and right palms. We used the “CASIA-Palmprint” database as a training base for the SFFS algorithm, as well as a basis for evaluating the performance of the whole process of our approach. To validate this performance, we used the “PolyU-Palmprint” database.

3.2 On-line Experiments

In our on-line phase, three experiments were conducted for six different divisions, using the 8 images of each hand of the 282 users of the “CASIA-Palmprint” database. We randomly selected first 5, then 4, and finally 3 images of each hand for the gallery, and used the rest for the probe. The Recognition Rates (RR) achieved in these experiments are shown in Table 1, Table 2 and Table 3 respectively.
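The random gallery/probe protocol can be sketched as follows. The data layout (a dictionary from a hand identifier to its list of images), the function names and the definition of RR as the percentage of correctly identified probe images are assumptions made for illustration.

```python
import random


def split_gallery_probe(images_per_hand, n_gallery, rng):
    """Randomly pick n_gallery images of each hand for the gallery.

    images_per_hand: dict mapping a hand identifier to its list of images.
    rng: a random.Random instance, so that the split is reproducible.
    Returns (gallery, probe) dictionaries with the same keys.
    """
    gallery, probe = {}, {}
    for hand_id, images in images_per_hand.items():
        chosen = set(rng.sample(range(len(images)), n_gallery))
        gallery[hand_id] = [img for i, img in enumerate(images) if i in chosen]
        probe[hand_id] = [img for i, img in enumerate(images) if i not in chosen]
    return gallery, probe


def recognition_rate(predictions, truths):
    """Percentage of probe images assigned to the correct identity."""
    correct = sum(1 for p, t in zip(predictions, truths) if p == t)
    return 100.0 * correct / len(truths)


# 282 users, two hands each, 8 images per hand (image contents are dummies).
dataset = {(user, hand): list(range(8))
           for user in range(282) for hand in ("left", "right")}
gallery, probe = split_gallery_probe(dataset, 5, random.Random(0))
```

With 5 gallery images per hand, this split gives 564 × 5 = 2820 gallery images and 564 × 3 = 1692 probe images out of the 4512 images of the database.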

Table 1: Comparison of recognition rates with and without selection using 5 images of each hand for the gallery and 3 images of each hand for the probe.

| RR (%) | 2*2 | 3*3 | 4*4 | 5*5 | 6*6 | 7*7 |
|---|---|---|---|---|---|---|
| Without selection | 94.38 | 95.62 | 96.67 | 96.85 | 96.73 | 96.98 |
| With selection | 94.38 | 94.81 | 96 | 96.98 | 96.79 | 97.53 |

Table 2: Comparison of recognition rates with and without selection using 4 images of each hand for the gallery and 4 images of each hand for the probe.

| RR (%) | 2*2 | 3*3 | 4*4 | 5*5 | 6*6 | 7*7 |
|---|---|---|---|---|---|---|
| Without selection | 92.53 | 94.46 | 95.42 | 95.79 | 96.20 | 96.29 |
| With selection | 92.53 | 94.23 | 95 | 96.34 | 95.46 | 96.66 |

Table 3: Comparison of recognition rates with and without selection using 3 images of each hand for the gallery and 5 images of each hand for the probe.

| RR (%) | 2*2 | 3*3 | 4*4 | 5*5 | 6*6 | 7*7 |
|---|---|---|---|---|---|---|
| Without selection | 88.93 | 92.79 | 93.85 | 93.92 | 94.54 | 94.14 |
| With selection | 88.93 | 91.52 | 94 | 94.14 | 94.04 | 94.72 |

These three experiments show that selection yields a gain in RR for the finer divisions into sub-regions, consistently so for divisions 5*5 and 7*7, whatever the number of images in the gallery and the probe, which underlines the importance of the selection phase. This gain results from ignoring the regions that carry useless information, e.g. regions that increase the intra-class variability among the palmprint images. The three experiments also show that division 7*7 gives the best identification rate. Therefore, we have opted for division 7*7 in our work. These experiments also show the effect of the number of images in the gallery. According to (Tana and Songcan, 2006), the more images per user in the gallery, the better the performance of the recognition system. Nevertheless, even with a reduced number of images stored in the gallery, as in the third experiment, the performance of our system is not greatly affected (with selection and division 7*7, the RR goes from 97.53% with 5 gallery images to 94.72% with 3), which indicates its robustness when fewer gallery images and more probe images are available.

To further validate our contribution regarding the selection of the most discriminating sub-regions, we conduct another comparison. This comparison concerns not only the RR obtained but also the size of the features vector, the identification time and the total time of our recognition process. Table 4 shows this comparative study, which is carried out using division 7*7 and the same conditions as the first experiment.

Table 4: Comparison of the RR, the size of the features vector, the identification time and the total execution time without and with selection of discriminating regions.

| | Without selection | With selection |
|---|---|---|
| RR | 96.98% | 97.53% |
| Size of the features vector | 2891 | 1416 for left hand, 1121 for right hand |
| Identification time | 1.35 s | 0.75 s |
| Total execution time | 2.4 s | 1.8 s |

Three observations can be drawn from these results. The first is in terms of performance: a gain of 0.55 percentage points in RR. The second is in terms of storage space: the size of the features vector is reduced by more than half. The third is in terms of speed: the identification time decreases from 1.35 s to 0.75 s (about 44%) and the total running time from 2.4 s to 1.8 s (25%), which is very interesting for real-time applications.
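The figures of Table 4 can be checked with a few lines of arithmetic. The sketch below also divides each features vector size by 59, the dimension of one uniform LBP histogram; reading the quotient as the number of retained sub-regions is an interpretation that is consistent with the numbers, not a figure stated in the text. All variable names are hypothetical.

```python
BINS_PER_REGION = 59  # dimension of one uniform LBP histogram


def percent_reduction(before, after):
    """Relative decrease from before to after, in percent."""
    return 100.0 * (before - after) / before


# Values copied from Table 4.
rr_gain = round(97.53 - 96.98, 2)                               # percentage points
regions_without = 2891 // BINS_PER_REGION                       # 7 * 7 sub-regions
regions_left = 1416 // BINS_PER_REGION
regions_right = 1121 // BINS_PER_REGION
size_reduction_left = round(percent_reduction(2891, 1416), 1)   # percent
size_reduction_right = round(percent_reduction(2891, 1121), 1)  # percent
id_time_reduction = round(percent_reduction(1.35, 0.75), 1)     # percent
total_time_reduction = round(percent_reduction(2.4, 1.8), 1)    # percent
```

All three vector sizes are exact multiples of 59 (49, 24 and 19 histograms respectively), so under this reading roughly half of the 49 sub-regions are kept for the left hand and fewer still for the right hand.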

The promising results of our approach have encouraged us to further test its performance on the “PolyU-Palmprint” database. The following experimental result is obtained using 6438 palmprint images from 160 users (Table 5). We used the images from the 1st session as the gallery (3236 images) and the images from the 2nd session as the probe (3202 images), with the chosen division 7*7. When palmprint images are collected in two different sessions, factors such as orientation, translation, texture deformation and lighting conditions vary from one session to the other, which is always the case in a real application. This result is therefore among the new experimental results in the literature, since most works use palmprint images collected in a single session.

Table 5: Comparison of recognition rates with and without selection on the “PolyU-Palmprint” database.

| | Without selection | With selection |
|---|---|---|
| Recognition rate | 94.41% | 95.35% |

Although our approach is tested here on a database of significant size, using two different sessions which present more variability, the previous experiment recorded an identification rate of 95.35%, and it shows once again the importance of the selection step, with a gain of 0.94 percentage points.

3.3 Comparison with other Works

In this section, we compare our work with two of the

most famous works in the literature, namely, the work

of (Lu et al., 2003) and the work of (Wu et al., 2003).

Table 6 presents the result of this comparison.

Table 6: Comparison of the performance obtained by the proposed approach and by the works of Lu et al. and Wu et al.

| Compared work | Our approach | Compared work |
|---|---|---|
| (Lu et al., 2003) | 98.56% | 99.14% |
| (Wu et al., 2003) | 98.83% | 99.18% |

It can be observed from these two comparisons that the performance of our approach is slightly lower than, but comparable to, that of Lu et al. and Wu et al. Apart from the promising results, LBP has another big advantage over other methods, namely its computational simplicity (Goh et al., 2008).

4 CONCLUSIONS

We have presented in this article a new approach for personal identification by palmprint. First, the preprocessing step, which is very important for contactless palmprint images, is used to locate the ROI of each individual hand. Then, the palmprint image is partitioned into sub-regions and the LBP operator is applied to describe the texture features within each sub-region. Finally, the SFFS algorithm is used to keep only the most discriminating regions for recognition.

To validate our work and our contributions more precisely, we conducted several on-line experiments on two real databases of significant size, “CASIA-Palmprint” and “PolyU-Palmprint”. These experiments achieved an RR of 97.53% and 95.35% respectively on the two databases. The results obtained are satisfactory and show an increase in RR with the selection of discriminating regions, which supports the interest of our approach and validates the choices made.

Our future orientation concerns the use of another

solution for automatic segmentation of the hand to

process images taken in a more complex environment.

ACKNOWLEDGEMENTS

Portions of the research in this paper use the “CASIA-Palmprint” Image Database collected by the Chinese Academy of Sciences Institute of Automation.

Portions of the work were tested on the “PolyU-Palmprint” Database (2nd version) collected by the Biometric Research Center at the Hong Kong Polytechnic University.

REFERENCES

Ahonen, T., Hadid, A., and Pietikainen, M. (2004). Face recognition with local binary patterns. In Proceedings of the European Conference on Computer Vision (ECCV), pages 469–481.

CASIA-Palmprint-Database (2003). CASIA palmprint database. http://www.cbsr.ia.ac.cn/english/Palmprint%20Databases.asp.

Chen, J., Zhang, C., and Rong, G. (2001). Palmprint recognition using crease. In Proceeding International Conference on Image Process, volume 3, pages 234–237, Thessaloniki, Greece.

Doublet, J., Lepetit, O., and Revenu, M. (2007). Contact less palmprint authentication using circular Gabor filter and approximated string matching. In Signal and Image Processing (SIP), volume 3, pages 495–500, Honolulu, United States.

Duta, D., Jain, A. K., and Mardia, K. V. (2002). Matching of palmprints. Pattern Recognition Letters (PRL), 23(4):477–485.

Goh, M. K., Connie, T., and Teoh, A. B. (2008). Touchless palm print biometrics: Novel design and implementation. Image and Vision Computing (IVC), 26(12):1551–1560.

Han, C. C., Cheng, H. L., Lin, C. L., and Fan, K. C. (2003). Personal authentication using palm-print features. Pattern Recognition (PR), 36(2):371–381.

Kan, C. and Srinath, D. M. (2002). Invariant character recognition with Zernike and orthogonal Fourier-Mellin moments. Pattern Recognition (PR), 35(1):143–154.

Konukoglu, E., Yoruk, E., Darbon, J., and Sankur, B. (2006). Shape-based hand recognition. IEEE Transactions on Image Processing, 15(7):1803–1815.

Li, W., Zhang, D., and Xu, Z. (2002). Palmprint identification by Fourier transform. International Journal of Pattern Recognition and Artificial Intelligence (IJPRAI), 16(4):417–432.

Lu, G., Zhang, D., and Wang, K. (2003). Palmprint recognition using eigenpalm features. Pattern Recognition Letters (PRL), 24(9-10):1463–1467.

Ojala, T., Pietikainen, M., and Maenpaa, T. (2002). Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 24(7):971–987.

Ojala, T., Pietikainen, M., and Harwood, D. (1996). A comparative study of texture measures with classification based on feature distribution. Pattern Recognition (PR), 29(1):51–59.

Otsu, N. (1979). A threshold selection method from gray-level histograms. In IEEE Transactions on SMC (Systems, Man, and Cybernetics), volume 9, pages 62–66.

PolyU-Palmprint-Database (2005). PolyU palmprint database. http://www4.comp.polyu.edu.hk/~biometrics/.

Pudil, P., Novovicova, J., and Kittler, J. (1994). Floating search methods in feature selection. Pattern Recognition Letters (PRL), 15(11):1119–1125.

Shapiro, L. and Stockman, G. (2001). Computer Vision. Prentice Hall.

Tana, X. and Songcan, C. (2006). Face recognition from a single image per person: A survey. Pattern Recognition (PR), 39(9):1725–1745.

Wang, X., Gong, H., Zhang, H., Li, B., and Zhuang, Z. (2006). Palmprint identification using boosting local binary pattern. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR), volume 3, pages 503–506.

Wu, X., Zhang, D., and Wang, K. (2003). Fisherpalms based palmprint recognition. Pattern Recognition Letters (PRL), 24(15):2829–2838.

Wu, X., Zhang, D., Wang, K., and Huang, B. (2004). Palmprint classification using principal lines. Pattern Recognition (PR), 37(10):1987–1998.

Zhang, D., Kong, W., You, J., and Wong, M. (2003). Online palmprint identification. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 25(9):1041–1050.
