USER-CENTRIC USABILITY EVALUATION FOR ENTERPRISE 2.0 PLATFORMS

A Complementary Multi-method Approach

Andreas Auinger, Dietmar Nedbal, Alexander Hochmeier

Research Unit Digital Economy, Department for Marketing and Electronic Business

Upper Austria University of Applied Sciences, Steyr, Austria

Andreas Holzinger

Research Unit Human–Computer Interaction, Institute for Medical Informatics, Medical University Graz, Graz, Austria

Keywords: Enterprise 2.0, Eye Tracking, Usability Evaluation, Heuristic Evaluation.

Abstract: Enterprise 2.0 projects offer a great potential for enabling the effective identification, generation and

utilization of information and knowledge within complex organizational processes; and have a deep impact

on organizational changes. This paper focuses on a usability methodology, whereby the critical success factors include (i) efficient organization, (ii) guidance by organizational needs, (iii) handling of organizational complexity, and (iv) fulfilment of end user requirements. We present a complementary multi-method approach of user-centric usability evaluation methods including eye tracking, heuristic evaluation

and a feedback blog. This evaluation approach in combination with continuous user training allows

comprehensive enhancement of ability and motivation of the users, which leads to excellent project

performance.

1 INTRODUCTION

Enterprise 2.0 (McAfee, 2006), the use of interactive

and collaborative Web 2.0 concepts and

technologies within and between enterprises, offers

great potential for flexible, loosely-coupled

integration and ad-hoc information exchange. This

requires organizations to move away from their

traditional concepts of competition towards a

networked, service-oriented economic thinking and

the dissolution of hierarchical structures in favour of

decentralized, networked forms of organization. But

Enterprise 2.0 projects are different from common

IT projects by their nature (Chui et al., 2009; Koch

and Richter, 2009). They always have a deep impact on organizational and cultural change by enabling employees to proactively enlarge their own role, necessarily depend on a critical mass of user involvement (Chui et al., 2009), have to cope with a lack of best practices and reputation, confront the users with unfamiliar ways of working with IT systems (e.g. the use of tagging, the syntax of enterprise wikis, etc.) and are not yet an established part of a company’s state-of-the-art IT portfolio. In addition, the value of an Enterprise 2.0 platform for organizations and their employees is, in contrast to e.g. an ERP system, still neither clear nor proven. However, such a platform seems to increase the enterprise’s productivity by enabling the users to do their job more effectively and efficiently through better availability of resources, including organizational knowledge (Koch and Richter, 2009).

If Enterprise 2.0 projects are carried out without considering the aspects mentioned above, they often fail, e.g. because of long-lasting implementation processes that do not deliver results accepted by the users, or because additional projects of higher priority consume resources needed for the Enterprise 2.0 project. Consequently, to increase the success of Enterprise 2.0 projects, all project phases and tasks must be organization-driven and take the increasing complexity of organizations into account. The critical success factors identified range from (i) technical barriers, such as usability issues that lead to the rejection of the new system (Venkatesh, 2000), (ii)


Auinger A., Nedbal D., Hochmeier A. and Holzinger A..

USER-CENTRIC USABILITY EVALUATION FOR ENTERPRISE 2.0 PLATFORMS - A Complementary Multi-method Approach.

DOI: 10.5220/0003521501190124

In Proceedings of the International Conference on e-Business (ICE-B-2011), pages 119-124

ISBN: 978-989-8425-70-6

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

managerial barriers, such as a lack of commitment from the executives, misalignment of project goals and enterprise goals, insufficient resources, time or money resulting from concurrent projects, or volatile customer requirements (Mohan et al., 2008; Franken et al., 2009; Sirkin et al., 2005), to (iii) cultural barriers, such as the “Not Invented Here” syndrome, the fear of the unknown, or apathy (Chesbrough, 2006).

Given the variety of these success factors, this paper concentrates on the technical barriers mentioned above, in particular usability issues. The objective of this paper is to present a complementary multi-method approach of usability evaluation methods using eye tracking, heuristic evaluation and a feedback blog, and its contribution to the successful implementation of Enterprise 2.0 platforms. The authors show how these methods are used and how they complement each other in order to deliver practical insights into employee-driven usability issues. The remainder of the paper is arranged as follows: In

section 2 the projects’ implementation methodology

is explained. Section 3 provides insight into the

usability testing methodology and presents key

results of the usability evaluation. Section 4

discusses the contribution of the multi-method

approach and section 5 closes with findings,

limitations and possible future research.

2 THE IMPLEMENTATION

METHODOLOGY

In the course of the 3-year R&D project “SCIM 2.0” (funded under the program “COIN – Cooperation & Innovation”, a joint initiative by the Austrian

Federal Ministry for Transport, Innovation and

Technology and the Austrian Federal Ministry of

Economy, Family and Youth) the authors have

created a participative, evolutionary design (Koch

and Richter, 2009) for the implementation of

Enterprise 2.0 projects and practically evaluated it in

two separate projects within Austrian mid-sized

companies. The overall methodology included the

phases: (i) Assessment (“Whether to start the

Enterprise 2.0 project”), (ii) Analysis (“What are the

requirements”), (iii) Design (“How can the

requirements be realized”), (iv) Realization (“Do the

implementation and roll it out”), and (v) Operation

(“Support and evaluate the productive information

system”). Within these phases, which are common and well established in IT projects, the authors used specific methods to explicitly address the success factors of Enterprise 2.0 projects and the corresponding change management issues.

The implementation of the Enterprise 2.0

platform was done with Microsoft Sharepoint 2010

in an evolutionary manner – within Web 2.0 projects

usually referenced as perpetual beta (Koch and

Haarland, 2004). Perpetual beta is a rapid and agile software development method which recommends rolling out the software (in our case the Enterprise 2.0 platform) at a “beta release” stage and training and involving the end users at a very early phase.

Feedback from the users is collected by using a

feedback blog and conducting usability tests

including eye tracking analysis and heuristic

evaluation. This multi-method approach provides

multi-dimensional evaluation insights, which are

discussed in the next two sections.

3 MULTI-METHOD USABILITY

EVALUATION

This section highlights the methods and tools used to

improve the usability of the Enterprise 2.0 platform.

This includes the use of (i) a feedback blog, (ii) eye

tracking, and (iii) heuristic evaluation. The usability

methods (see e.g. Holzinger, 2005) were applied

during the beta phase of the project, which included about 50 selected beta users from the departments the tools were targeted at.

3.1 Feedback Blog

A feedback blog went online in parallel with the first user training for the first prototype, and was used during the rest of the implementation phase. In this period (about 5 months), 53 blog posts and 64 comments in total were submitted. The blog posts were categorized and clustered into general usability and functional suggestions, nice-to-have improvements, and implementation bugs. Examples of important usability issues and missing features that were identified by the users are: (i) Placement of the edit button: as most user-generated content will be provided in blogs and wikis, the users suggested adjusting the placement of the edit button in the Enterprise wiki layout to meet the users’ expectations. (ii) Comments and notes within wiki pages: Microsoft Sharepoint provides this functionality within all pages, but it is hidden in a tab within “Tags & Notes”, and therefore not usable for most users. The solution was to embed this function directly on the wiki page. (iii) Offline availability: making the contents of blogs, wikis and lists available offline was requested as a nice-to-have feature.


3.2 Eye Tracking

The eye tracking analysis (cf. Auinger et al., 2011)

was conducted with 12 test subjects using the first

closed beta version of the prototype. The probands

were divided into two research groups of 6 subjects

each. All probands were given a two-hour training

on the prototype, with one group being tested before

the training and the other group after the training.

The goal of the test was to complete tasks by locating pre-defined design elements or hyperlinks positioned somewhere on the web site and to confirm the find by fixating on the element. For each of the 16 tasks, the probands had up to 5 seconds to complete the task.

The eye tracking data was recorded with a 120 Hz eyegaze eye tracking system from the vendor Interactive Minds. It enabled us to evaluate the intuitiveness of the system for inexperienced users, and to demonstrate how the training affects the use of the system. As a side effect, it was intended to raise awareness by involving the users more deeply in the implementation process through tailoring a system that fits their needs.

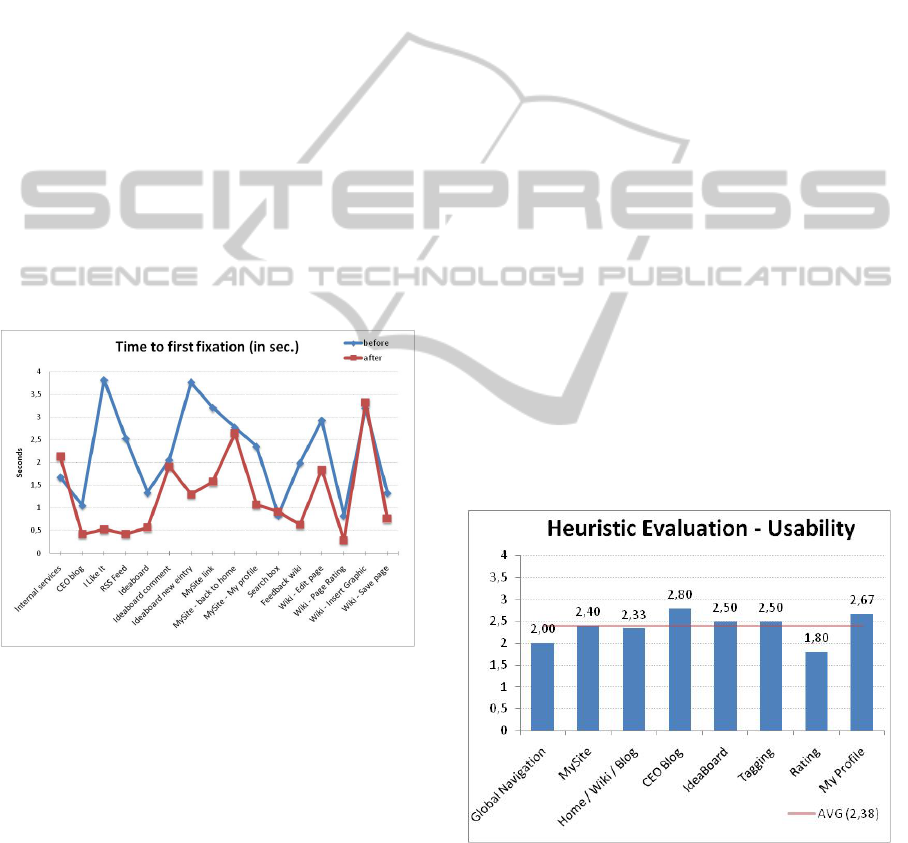

Figure 1: Time to first fixation before and after training.

In order to be able to calculate the relevant

indicators, areas of interest were defined with the

eye tracking analysis software Nyan 2.3.5 for each

task. Additionally, it was possible to calculate the

average time to finish each task. Based on the results

it can be stated that the training had a positive

impact on the handling of the system. As expected,

the results of the analysis after training were

significantly better than those carried out before the

training. The average time to first fixation, i.e. the time required to find the desired element or hyperlink on the web site, was lower after the training in 14 of the 16 tasks (cf. Figure 1). The largest difference in time to first fixation was observed in the task to find the “I Like It” tag: probands needed on average 3.82 seconds before and 0.525 seconds after the training. Before the training, the search was an unstructured trial-and-error attempt to find the element. After the training, the first gaze went in most cases straight to the “I Like It” tag and stayed there.
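The before/after comparison described above can be illustrated with a few lines of analysis code. The following Python sketch is a minimal illustration and not the analysis performed in the study (which relied on Nyan 2.3.5): the function names, the sample values, and the rule of counting a missed target as the full time limit are assumptions. Only the 5-second limit and the two reported means for the “I Like It” task (3.82 s and 0.525 s) are taken from the text.

```python
from statistics import mean

TIMEOUT_S = 5.0  # per-task time limit stated in the text


def mean_ttff(samples):
    """Mean time to first fixation in seconds.

    Assumption: a miss (None) is counted as the full time limit.
    """
    return mean(TIMEOUT_S if s is None else s for s in samples)


def compare(before, after):
    """Map each task to (mean before, mean after, reduction in seconds)."""
    result = {}
    for task, samples in before.items():
        b, a = mean_ttff(samples), mean_ttff(after[task])
        result[task] = (b, a, b - a)
    return result


def improved_tasks(comparison):
    """Names of tasks whose mean time to first fixation dropped."""
    return [task for task, (_, _, gain) in comparison.items() if gain > 0]


if __name__ == "__main__":
    # Hypothetical raw samples (seconds), not data from the study.
    before = {"task A": [4.0, None, 3.0], "task B": [1.0, 1.5, 2.0]}
    after = {"task A": [0.5, 1.0, 0.6], "task B": [1.5, 1.5, 2.0]}
    comparison = compare(before, after)
    print(comparison)
    print("improved:", improved_tasks(comparison))

    # Reported means for the "I Like It" task, taken from the text.
    reported_before, reported_after = 3.82, 0.525
    print("reduction:", round(reported_before - reported_after, 3), "s")
    print("ratio:", round(reported_before / reported_after, 2))
```

Counting misses as the time limit keeps every participant in the average; excluding them instead would be an equally defensible choice and would lower the pre-training means.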

3.3 Heuristic Evaluation

Supplemental to eye tracking and the feedback blog

the Enterprise 2.0 platform was evaluated using an

adapted form of heuristic evaluation method with 6

probands. The method was deployed right after a

two hours training session for the Enterprise 2.0

platform prototype. So, the users could give

immediate feedback of what they had just

experienced during the training. The probands were

interviewed by an experienced usability expert,

using a basic heuristic evaluation questionnaire as

described in the following. To handle possibly bias,

the evaluation was carried out individually in a

separate room and the answers were made

anonymous. The probands were chosen by their

experience and knowledge concerning user

interfaces and web applications. The questionnaire

was based on (Petrie and Buykx, 2010). Probands

were asked to rate the overall satisfaction and their

impression of the usability of each functionality on

level of top menus with a five-point Likert-type

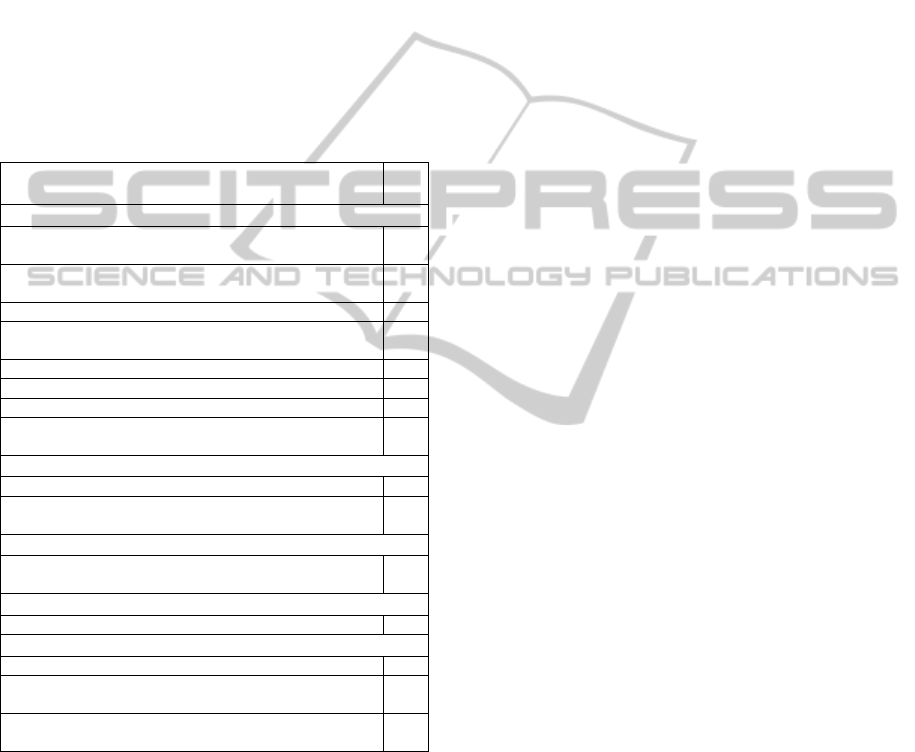

scale. With an average of 2.38 (cf. Figure 2) the

overall usability was rated between “good” and

“moderate”.

Figure 2: Heuristic evaluation of usability.
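How a mean rating such as 2.38 maps onto the verbal anchors of the scale can be sketched as follows. This is a hedged illustration only: the text implies that 2 corresponds to “good” and 3 to “moderate”, while the labels for 1, 4 and 5, the function name `bracket`, and the sample ratings are assumptions.

```python
from statistics import mean

# Only "good" (2) and "moderate" (3) are implied by the text; the labels for
# 1, 4 and 5 are hypothetical placeholders.
LABELS = {1: "very good", 2: "good", 3: "moderate", 4: "poor", 5: "very poor"}


def bracket(score):
    """Return the labels of the two scale points enclosing a mean score."""
    if not 1 <= score <= 5:
        raise ValueError("score must lie on the 1-5 scale")
    lower = min(int(score), 4)  # keep the pair inside the scale for score == 5
    return LABELS[lower], LABELS[lower + 1]


if __name__ == "__main__":
    # Hypothetical ratings of one top menu by six participants.
    ratings = [2, 3, 2, 2, 3, 2]
    print(round(mean(ratings), 2), bracket(mean(ratings)))
    # Overall mean reported in the text.
    print(bracket(2.38))
```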

Additionally, the probands were asked for the exact

reason for usability problems, in line with the

following criteria, based on the dialog principles of

the ISO 9241-110 (ISO, 2006) standard (the

“heuristics”, cf. Table 1). Each criterion was rated


on a 5-point Likert-type scale for heuristic evaluation (Petrie and Buykx, 2010). Whereas the overall satisfaction and usability was rated as “good” on average, a closer look revealed some more severe usability issues. Table 1 contains a summary of the critical and catastrophic usability problems identified, grouped by the heuristic the problem mainly addresses. The table shows that the heuristic “self descriptiveness and findability” was primarily affected. In terms of Enterprise 2.0 tools, the main areas with usability issues were blogging (6 issues), personal profile & social networking (i.e. Sharepoint’s My Site, 4 issues), tagging (3 issues), and wikis (2 issues).

Table 1: Critical (Rating = 3) and catastrophic (Rating = 4) usability problems identified by the heuristic evaluation.

| ISO 9241-110 dialog principle | Short description of the problem | Rating |
|---|---|---|
| Self descriptiveness and findability | Where and how can I add a colleague to “My Team” within the My Site? | 4 |
| Self descriptiveness and findability | The link back to the homepage (from the My Site) is not present/findable | 4 |
| Self descriptiveness and findability | The link to the edit page of a blog is hard to find | 4 |
| Self descriptiveness and findability | It is not clear how to find certain ideas in the IdeaBoard when the entries increase | 4 |
| Self descriptiveness and findability | The tag cloud is hard to find | 4 |
| Self descriptiveness and findability | How can I find new blogs (e.g. knowledge blogs) | 3 |
| Self descriptiveness and findability | The term “Manage posts” is not self descriptive | 3 |
| Self descriptiveness and findability | It is unclear how I can tag external sources and make tags “private” | 3 |
| Suitability for the task and functionality | It is unclear how the structure of a wiki is generated | 3 |
| Suitability for the task and functionality | The IdeaBoard surely gets a mess if no clear guidelines are applied | 3 |
| Controllability and completeness of functionality | The My Site is rather complex, therefore it should be “clearer” and with less functionality | 3 |
| Consistency & conformity with user expectations | The edit functions of the blog are missing | 3 |
| Understandability & suitability for learning | For what can I use tagging in the context of my work? | 4 |
| Understandability & suitability for learning | A user documentation (wiki) should be made available for all users | 3 |
| Understandability & suitability for learning | The My Site is hard to understand because of the complex functionality | 3 |
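The distribution of the problems in Table 1 across dialog principles and severity levels can be tallied with a short script. The following Python sketch is an illustration only: the (principle, rating) pairs are transcribed from Table 1, whereas the variable names and the tallying approach are assumptions and not part of the original evaluation.

```python
from collections import Counter

SEVERITY = {3: "critical", 4: "catastrophic"}  # rating meanings from Table 1

# (dialog principle, rating) pairs transcribed from Table 1.
PROBLEMS = (
    [("Self descriptiveness and findability", 4)] * 5
    + [("Self descriptiveness and findability", 3)] * 3
    + [("Suitability for the task and functionality", 3)] * 2
    + [("Controllability and completeness of functionality", 3)]
    + [("Consistency & conformity with user expectations", 3)]
    + [("Understandability & suitability for learning", 4)]
    + [("Understandability & suitability for learning", 3)] * 2
)

# Number of problems per dialog principle and per severity level.
by_principle = Counter(principle for principle, _ in PROBLEMS)
by_severity = Counter(SEVERITY[rating] for _, rating in PROBLEMS)

if __name__ == "__main__":
    for principle, count in by_principle.most_common():
        print(f"{count:2d}  {principle}")
    print(dict(by_severity))
```

Such a tally makes it easy to see which heuristic accumulates the most problems and can help to prioritize fixes during the perpetual beta.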

4 DISCUSSION OF THE

MULTI-METHOD APPROACH

As usability is multifaceted it must be assessed by

using a variety of different measures (Agarwal and

Venkatesh, 2002). The mix of the three usability

methods described in the previous section provides

insights that would not have been possible with only

one source of data (Cyr et al., 2009) and therefore

increases the overall usability of the system.

In particular, involving the users, including the possibility to articulate, publish and rate continuous feedback, is a key factor for successful Enterprise 2.0 projects because it addresses reputation, fosters participation and reflects the intrinsic motivation of the users (Chui et al., 2009). Factors which are most

often ignored are control (internal and external self-

efficacy and facilitating conditions), intrinsic

motivation, and emotion (also conceptualized as

computer anxiety) (Venkatesh, 2000). Indeed the

motivation of employees is the most crucial success

factor in any enterprise and must be considered of

top importance (Holzinger, 2011). Actually, ability

and motivation are the two top factors for

performance (Lawler, 2003).

It is known that blog-based knowledge management solutions can contribute to solving the problem of knowledge sharing and knowledge creation in organizations where the emphasis is on both push and pull styles of knowledge capturing (Li, 2007). A previous study showed that blogs can be used to increase learning performance (Holzinger et al., 2009). In the described case, the blog used as a feedback channel proved very useful: not only could issues that occurred during usage of the system be reported directly, but the practice of blogging was also trained. It was observed that users who posted in the feedback blog subsequently also made intensive use of the various blogs to inform others about new issues and to comment on others’ posts. Even sceptical users, identified by the stakeholder analysis during the analysis phase, behaved that way. Thus, the feedback blog helped to reduce barriers against the new system, motivated the users to participate in the new system and positively influenced the overall acceptance of the system.

Eye tracking was chosen as an additional evaluation method in this multi-method approach because it has proven to be a reliable method in many studies (Duchowski, 2007). Eye tracking has also been established as an appropriate methodology for usability studies (Nielsen and Pernice, 2010), especially in Enterprise 2.0 environments in order to test user experience (Djamasbi et al., 2010; Herendy, 2009; Jacob and Karn, 2003). User experience

encompasses emotion, which is an important mental

and physiological state, influencing the cognition,

perception and communication of the users and can

be measured by application of the valence arousal

space for emotion modelling, e.g. to test the

correlation between performance and emotional

states (Stickel et al., 2009). The essence is to avoid

negative emotional influences within the application.


At first glance, the eye tracking result was as expected, as training clearly reduces the time needed to reach the first fixation. But the eye tracking method was intended to provide more insight than just measuring the effectiveness of training. By comparing the before and after results, the study demonstrated the need for training and familiarization with the prototype to avoid negative emotional influences. In particular, users who are not familiar with the concepts of Enterprise 2.0 (like blogs, wikis, tagging, and rating) and their implementation within Microsoft Sharepoint 2010 are more likely to get frustrated. The scan paths and heat maps revealed that the probands sometimes had problems finding the desired functionality, which can lead to frustration and rejection of the new system. Furthermore, during the beta test, additional usability flaws were discovered by eye tracking. For example, the “My Site – Home” task showed that it is hard to find the way back to the home page from the My Site even after training, due to inconsistencies in navigation in Microsoft Sharepoint 2010. To summarize the outcome of the eye tracking evaluation, it can be stated that it improved the motivation of the users to participate, as they were more involved. It also showed the need for continuous improvement of the platform in rather short cycles via perpetual beta.

Heuristic evaluation is a systematic usability inspection method for evaluating a user interface design (Nielsen and Molich, 1990) that still appears to be one of the most actively used methods (Hollingsed and Novick, 2007). The goal of this informal method is to find usability problems in the user interface at an early phase and address them as part of an iterative process. These characteristics made heuristic evaluation the ideal supplemental method for receiving user feedback from the experienced beta users. In addition, it served as a kind of fallback for the feedback blog, in case the blog had not been accepted as a feedback channel by the users. Nielsen and Molich recommended using three to five probands for heuristic evaluation, as five probands detect between 55% and 90% of usability problems. Adding more probands will not significantly increase the detection rate (Nielsen and Molich, 1990). We conducted six evaluations because our probands were not usability experts, but had been identified as the most advanced system users. To compensate for the lack of usability know-how, the evaluation was conducted in an interview setting by a usability expert. The heuristic evaluation served well as a quick and easy evaluation method to get immediate feedback from the users after the training. The main outcome was that several urgent usability problems could be identified and consequently addressed during the perpetual beta implementation. The heuristic evaluation was also a motivating factor for the users, as they saw that their individual recommendations became part of the system during the perpetual beta.

5 CONCLUSIONS

Enterprise 2.0 offers great potential for enabling the effective identification, generation and utilization of information and knowledge within complex organizational processes. Hence, Enterprise 2.0 projects always have a deep impact on organizational and cultural change and consequently on the individual users. To increase the success of such projects, the critical success factors of Enterprise 2.0 projects have to be considered in the individual project phases and tasks, which need to be (i) well and tightly organized, framed by the organizational experiences and parameters, (ii) driven by organizational needs, (iii) mindful of the organizational complexity, and (iv) attentive to the user-individual functional and usability requirements.

This paper focused on the demonstrated usability

methodology in order to address the critical success

factor “technical barriers”. The main objective was

to present a complementary multi-method approach

of a user-centric usability evaluation using eye

tracking, heuristic evaluation and a feedback blog,

and its contribution to the successful implementation

of Enterprise 2.0 in companies. Hence, the main

findings of the paper are: (i) The strength of

Enterprise 2.0 lies in the possibility of linking well-

defined processes and standardized information

flows with unstructured communication and

collaboration processes that have high priority but

are insufficiently supported by existing enterprise

solutions. (ii) The social dimension in general is one of the biggest challenges within an Enterprise 2.0 project and needs to be addressed from the beginning of the project. This starts with the identification and motivation of key users and promoters who support and push the project. (iii) The user-centric multi-method usability evaluation approach, in combination with continuous user training, allows comprehensive enhancement of the ability and motivation of the users, which leads to excellent project performance.

As the current research is limited to pilot project

implementations, future research is needed to

consolidate the user-centric multi-method usability

evaluation methodology and to embed it into an


overall Enterprise 2.0 project framework.

REFERENCES

Agarwal, R., Venkatesh, V., 2002. Assessing a Firm's

Web Presence: A Heuristic Evaluation Procedure for

the Measurement of Usability. Information Systems

Research, 13(2), 168–186.

Auinger, A., Holzinger, A., Kindermann, H., Aistleithner,

A., 2011. Conformity with User Expectations on the

Web. In Proceedings of HCI International (to be

published). Lecture Notes in Computer Science (pp.

1–10). Orlando, USA: Springer.

Chesbrough, H., 2006. Open business models: How to

thrive in the new innovation landscape. Boston, Mass.:

Harvard Business School Press.

Chui, M., Miller, A., Roberts, R. P., 2009. Six ways to make Web 2.0 work. McKinsey Quarterly, (2), 64–73.

Cyr, D., Head, M., Larios, H., Pan, B., 2009. Exploring Human Images in Website Design: A Multi-Method Approach. MIS Quarterly, 33(3), 539-A9.

Djamasbi, S., Siegel, M., Tullis, T., Dai, R., 2010.

Efficiency, Trust, and Visual Appeal: Usability

Testing through Eye Tracking. In Proceedings of the

43rd Hawaii International Conference on System

Sciences (HICSS) (pp. 438–447). Los Alamitos.

Duchowski, A., 2007. Eye Tracking Methodology: Theory

and Practice. London: Springer-Verlag.

Franken, A., Edwards, C., Lambert, R., 2009. Executing

Strategic Change: Understanding the critical

management elements that lead to success. California

Management Review, 51(3), 49–73.

Herendy, C., 2009. How to Research People’s First

Impressions of Websites? Eye-Tracking as a Usability

Inspection Method and Online Focus Group Research.

In C. Godart, N. Gronau, S. Sharma, & G. Canals

(Eds.), Software Services for e-Business and e-Society,

vol. 305. IFIP Advances in Information and

Communication Technology (pp. 287–300): Springer

Boston.

Hollingsed, T., Novick, D. G., 2007. Usability inspection

methods after 15 years of research and practice. In

Proceedings of the 25th annual ACM international

conference on Design of communication. SIGDOC ‘07

(pp. 249–255). New York, NY, USA: ACM.

Holzinger, A., 2005. Usability engineering methods for

software developers. Communications of the ACM, 48,

71–74.

Holzinger, A., 2011. Successful Management of Research

& Development, 1st edn. Norderstedt: Books on

Demand.

Holzinger, A., Kickmeier-Rust, M. D., Ebner, M., 2009.

Interactive technology for enhancing distributed

learning: a study on weblogs. In Proceedings of the

23rd British HCI Group Annual Conference on People

and Computers: Celebrating People and Technology.

BCS-HCI ‘09 (pp. 309–312). Swinton, UK: British

Computer Society.

ISO, 2006. Ergonomics of human-system interaction -- Part 110: Dialogue principles (ISO 9241-110:2006). http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=38009.

Jacob, R. J. K., Karn, K. S., 2003. Eye Tracking in

Human-Computer Interaction and Usability Research:

Ready to Deliver the Promises. In J. Hyönä, R.

Radach, & H. Deubel (Eds.), The Mind's Eye (First

Edition) (pp. 573–605). Amsterdam: North-Holland.

Koch, M. C., Haarland, A., 2004. Generation Blogger,

1st edn. Bonn: mitp-Verl.

Koch, M., Richter, A., 2009. Enterprise 2.0: Planung,

Einführung und erfolgreicher Einsatz von Social

Software in Unternehmen, 2nd edn. München:

Oldenbourg.

Lawler, E. E., 2003. Treat people right!: How

organizations and individuals can propel each other

into a virtuous spiral of success, 1st edn. San

Francisco: Jossey-Bass.

Li, J., 2007. Sharing Knowledge and Creating Knowledge

in Organizations: the Modeling, Implementation,

Discussion and Recommendations of Weblog-based

Knowledge Management. International Conference on

Service Systems and Service Management, 1–6.

McAfee, A. P., 2006. Enterprise 2.0: The Dawn Of

Emergent Collaboration. MIT Sloan Management

Review, 47(3), 21–28.

Mohan, K., Xu, P., Ramesh, B., 2008. Improving the

Change-Management Process. Communications of the

ACM, 51(5), 59–64.

Nielsen, J., Molich, R., 1990. Heuristic evaluation of user

interfaces. In Proceedings of the SIGCHI conference

on Human factors in computing systems: Empowering

people. CHI ‘90 (pp. 249–256). New York, NY,

USA: ACM.

Nielsen, J., Pernice, K., 2010. Eyetracking web

usability. Berkeley, Calif.: New Riders.

Petrie, H., Buykx, L., 2010. Collaborative Heuristic

Evaluation: Improving the effectiveness of heuristic

evaluation. Proceedings of UPA 2010 Conference.

Sirkin, H. L., Keenan, P., Jackson, A., 2005. The Hard

Side of Change Management. Harvard Business

Review, 83(10), 108–118.

Stickel, C., Ebner, M., Steinbach-Nordmann, S., Searle,

G., Holzinger, A., 2009. Emotion Detection:

Application of the Valence Arousal Space for Rapid

Biological Usability Testing to Enhance Universal

Access. In Proceedings of the 5th International

Conference on Universal Access in Human-Computer

Interaction. Addressing Diversity. Part I: Held as Part

of HCI International 2009. UAHCI ‘09 (pp. 615–624).

Berlin, Heidelberg: Springer-Verlag.

Venkatesh, V., 2000. Determinants of Perceived Ease of

Use: Integrating Control, Intrinsic Motivation, and

Emotion into the Technology Acceptance Model.

Information Systems Research, 11(4), 342–365.
