Multi-device Power-saving

An Investigation in Energy Consumption Optimisation

Jean-Marc Andreoli and Guillaume Bouchard

Xerox Research Centre Europe, Grenoble, France

Keywords:

Energy Consumption Modelling, Sequential Decision Processes, Exact Multi-dimensional Optimisation.

Abstract:

We are interested here in the problem of optimising the energy consumption of a set of service-offering devices. Our target is a printing device infrastructure as typically found in a medium or large office, where all the devices are connected to the network, and clients can submit jobs to individual devices through that network. We formulate a cost optimisation problem to find a trade-off between the energy consumption of the infrastructure and the cost of allocating jobs to devices. The latter cost results from the potential gap between the expectation of the clients and the quality of the service delivered by the devices. We present a model of some of the typical constraints occurring in such a system. We then present a method to solve the optimisation problem under these constraints, and conclude the paper with some experimental results.

1 INTRODUCTION

1.1 Description of the Problem

We are interested here in the problem of optimising the operation of a set of service-offering devices. Our target is a printing device infrastructure as typically found in a medium or large office, where all the devices are connected to the network, and clients can submit jobs to individual devices through that network. In the sequel, we use that target application to illustrate our approach, although it may apply to other contexts as well. Our goal is to design a controller which processes jobs (service requests) and adjusts devices (service providers) in some optimal way which minimises the total cost of operation of the infrastructure. There are two main sources of controllable cost:

• Each device has a specific energy consumption profile which defines several operation modes, each with its own consumption rate and transition cost to other modes. Typically, a device can be either in “working” mode, when it is performing some work for a client (printing), or in one of several “waiting” modes, where it is simply waiting for a new job to arrive. The waiting modes have different energy consumption patterns. We look at the simple case where there are only two waiting modes: ready and sleep. In ready mode, the energy consumption rate is higher than in sleep mode, but when a new job is assigned to the device, the wake up energy consumption is lower. To control the energy consumption cost, we assume that the controller has the ability to switch at any time any device in ready mode to sleep mode.

• Each client is characterised by an assignment utility function which indicates, for each device and job, the adequacy of assigning that job to that device, from the point of view of the client. For example, if the job is in colour and device B is black and white only or does not support the full palette, while device A does, the client estimates the cost incurred by assigning the job to B instead of A. This amounts to quantifying the loss of quality of the printed document, the cost of which depends on the intended use of the document. The same applies if the job has a high resolution beyond the reach of device B but within that of A (loss of quality, again), or if B is physically much further from the client than A for collection (loss of time). Full elicitation of the utility function of each client is not realistic. Instead, we assume that each job comes together with a cost menu, i.e. a mapping which specifies for each device the client estimate of the cost of assigning that job to that device. To control the assignment cost, we assume that the assignment of each job is performed by the controller when presented with the cost menu of the job.

Andreoli J. and Bouchard G.
Multi-device Power-saving - An Investigation in Energy Consumption Optimisation.
DOI: 10.5220/0004042002320237
In Proceedings of the 9th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2012), pages 232-237
ISBN: 978-989-8565-21-1
Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

• The total cost of operation of the device infrastructure depends on the energy consumption characteristics of the infrastructure on the one hand, on the client assignment utility functions on the other hand, and, of course, on the actual demand, i.e. the sequence of jobs. Demand is intrinsically non-deterministic, and may evolve over time, but we assume here that it is governed by some stationary stochastic process which can be learnt from observation over a sufficiently long period of time.

We seek to develop a controller which is in charge of both assigning devices to jobs and dynamically setting timeouts, in such a way as to minimise the total cost of operation, i.e. the sum of the energy consumption cost and the job assignment cost.

1.2 Related Work

Dynamic power management (DPM) has been the topic of numerous investigations (Benini et al., 2000) and occurs in many contexts: electronic design (Wang et al., 2011), data centre management (Raghavendra et al., 2008; Urgaonkar et al., 2010), wireless sensor networks (Sinha and Chandrakasan, 2001). The problem we tackle in this paper shares many concepts with DPM. As in DPM, we seek the optimal control of the energy consumption of a set of hardware devices with controllable energy consumption regimes, under a stochastic flow of service requests, each of which is satisfied by a controllable subset of devices (actually, exactly one device per request in our case). However, unlike many DPM studies, our assumptions derive from a rarely studied, although quite frequent, configuration, where requests come together with soft allocation constraints in the form of cost menus, and the devices can at any time be dynamically reprogrammed for energy optimisation. Our solution relies on a very generic technique for sequential decision problems, known as Markov Decision Processes (MDP), widely investigated in the literature (Bertsekas, 2005; Bäuerle and Rieder, 2011), and which is also used by many other DPM solutions. We assume the simplest flavour of MDPs, where the stochastic demand is assumed known in advance, typically learnt from the observation of past sequences. We focus instead on the problem of simultaneously optimising the set of devices at each step of the decision process, allowing the revision of the energy consumption regime of all the devices each time a request is submitted.

2 PROBLEM MODELLING

2.1 Parametrisation

At any time, a device has a mode which is either ready or sleep. Each device $k = 1{:}K$ is characterised by:

• $\hat{a}_k$: cost rate in ready mode; $\check{a}_k$: cost rate in sleep mode; we have $\check{a}_k < \hat{a}_k$ and we let $a_k = \hat{a}_k - \check{a}_k$;

• $\check{b}_k$: cost of jump from ready to sleep; $\hat{b}_k$: cost of jump from sleep to ready.

Let $g_k(x, \tau)$ be the overhead cost of maintaining device $k$ in ready mode during up to $\tau$ time units over a period of $x$ time units. We have:

$$g_k(x, \tau) \overset{\text{def}}{=} \mathbb{I}[x < \tau]\, a_k x + \mathbb{I}[x \ge \tau]\,(a_k \tau + \check{b}_k)$$
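The definition of $g_k$ can be read as a two-branch function. The following is a minimal sketch in Python; the function and parameter names (`ready_overhead`, `a_k`, `b_check_k`) are illustrative choices, not names from the paper.

```python
def ready_overhead(x, tau, a_k, b_check_k):
    """Overhead g_k(x, tau) of keeping device k in ready mode for up to
    tau time units when the next job arrives after x time units.

    a_k       -- extra cost rate of ready mode over sleep mode
    b_check_k -- cost of the jump from ready to sleep
    """
    if x < tau:
        # The job arrives before the timeout: the device was ready for x time units.
        return a_k * x
    # The timeout fires first: ready for tau time units, then one ready-to-sleep jump.
    return a_k * tau + b_check_k
```

For instance, with `a_k = 3.0` and `b_check_k = 5.0`, a timeout of 2 time units costs 3.0 if the next job arrives after 1 time unit, and 11.0 if it arrives after 3 time units.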

The device infrastructure receives job requests in sequence. For the $n$-th request in the sequence, let $X_n \in \mathbb{R}_+$ be the time elapsed since the previous request; let $J_n$ be the total information available about the associated job (both about its content and its client); and let $C_n$ be the client cost menu, which is the $K$-dimensional vector whose $k$-th component for $k = 1{:}K$ holds the client estimate of the cost of assigning job $J_n$ to device $k$.

• We assume that the random variable $O_n = (XJC)_{1:n}$, which captures all that has been observed just after the $n$-th request, can be deterministically summarised by a single state variable $Z_n$, called the demand state, which may live in an arbitrary space $\mathcal{Z}$, and which satisfies the following Markov condition:

$$p(O_n \mid O_{n-1}) = p(X_n Z_n \mid Z_{n-1})\, p(J_n \mid Z_n)\, p(C_n \mid Z_n)$$

Thus, state $Z_n$ summarises all the information of the past demand which has an impact on the future demand. The choice of a good demand state space $\mathcal{Z}$ and model satisfying the Markov assumptions depends on the characteristics of the actual demand.

• We further assume that the distributions $p(Z_n X_n \mid Z_{n-1})$ and $p(C_n \mid Z_n)$ are known and stationary (independent of $n$). We introduce distributions $P, Q$, which are the drivers of the demand process, as follows¹:

$$p(X_n Z_n \mid Z_{n-1}) = P(X_n Z_n \mid Z_{n-1}) \qquad p(C_n \mid Z_n) = Q(C_n \mid Z_n)$$

¹ $P$ is a joint distribution and we use the same symbol $P$ to denote the marginals and conditionals.


• Finally, we assume that job execution time is negligible. So we do not try to model it, nor the queueing effect which may result from it on devices. This is a natural assumption for the kind of device infrastructures we are targeting, where devices are far from full utilisation and spend most of their time waiting for jobs.

2.2 Formulation as a Sequential Decision Process

The state of the system at any time is given by a pair $\langle\sigma, z\rangle$ where the control state $\sigma$ is the subset of indices of the devices in ready mode and $z \in \mathcal{Z}$ denotes the demand state of the infrastructure. We seek to build a controller which takes as input the stream of job requests, maintains the state of the system and uses it to make decisions at each new job:

• First, the controller must choose the index $k$ of the device to which the job is assigned. We assume here that jobs are immediately assigned upon reception.

• Then, just after the assignment, the controller must choose a family $(\tau_k)_{k \in \sigma}$ of non-negative timeouts, where $\sigma$ is the control state at that time, and each $\tau_k$ denotes the sleep schedule for device $k \in \sigma$, i.e. the time after which device $k$ is to be switched to sleep mode if no job has been received in between.

Thus, the problem is formulated as the optimisation of a sequential decision process. We consider the optimisation at infinite horizon with discount factor $\gamma$. Let $V^{!}\langle\sigma, z\rangle$ and $V\langle\sigma, z\rangle$ be the optimal cost to go associated with state $\langle\sigma, z\rangle$, respectively before and after an assignment. The optimality equation is given in Figure 1.

• Equation (1) concerns the total cost of assigning, in state $\langle\sigma, z\rangle$, a job with cost menu $c$ to a device $k$: it consists of the client assignment cost $c_k$ specified in the cost menu, plus, if device $k$ is in sleep mode (i.e. $k \notin \sigma$), the wake up cost to lift it to ready mode, plus the cost to go after assignment from the new state $\langle\sigma \cup \{k\}, z\rangle$ in which $k$ is now in ready mode. The demand state is unchanged because we ignore job execution time, so the job is assumed to be completed immediately after assignment.

• Equation (2) concerns the cost of setting timeouts $(\tau_k)_{k \in \sigma}$ for the devices in ready mode in state $\langle\sigma, z\rangle$, when the next job arrives after time $x$ in a demand state $z'$: it consists of the cost $g_k(x, \tau_k)$ of the energy consumption until time $x$ of each device $k$ in ready mode with timeout $\tau_k$, plus the discounted cost to go from the new state $\langle\{k \in \sigma \mid x < \tau_k\}, z'\rangle$ where the control state consists exactly of those devices in $\sigma$ for which the timeout was not reached at time $x$ (i.e. $\tau_k > x$).

If functions $V$ and $V^{!}$ satisfy Equations (1) and (2), then the optimal policy for the controller can be formulated as follows:

• When receiving a job with cost menu $c$ in state $\langle\sigma, z\rangle$, assign it to the device $k$ which minimises the minimisation objective of Equation (1).

• Just after assignment in state $\langle\sigma, z\rangle$, set timeouts $(\tau_k)_{k \in \sigma}$ which minimise the minimisation objective of Equation (2).

3 SOLVING FOR OPTIMALITY

We are looking for a solution in $V^{!}, V$ to the system of Equations (1) and (2). We follow the general procedure of value iteration, which alternates updates to $V^{!}$ from $V$ using Equation (1) and updates to $V$ from $V^{!}$ using Equation (2). We assume that the demand state space $\mathcal{Z}$ is finite, so the overall state space is finite and both $V^{!}, V$ can be represented as finite-dimensional vectors. The update using Equation (1) is quite straightforward: minimisation can be done by enumeration (of the devices), and the integral is turned into a sum, assuming distribution $Q$ is discrete. The update using Equation (2) is more involved, as it requires solving a $K$-dimensional optimisation. Unfortunately, the optimisation objective has no good properties, such as convexity or smoothness, which would make it amenable to standard optimisation techniques. Furthermore, it is important to reach a global optimum and not just a local one. The rest of this section is devoted to solving the optimisation in Equation (2).
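The straightforward half of the iteration, the update of $V^{!}$ from $V$ by Equation (1), can be sketched as follows. This is an illustrative Python sketch under the discrete-$Q$ assumption stated above; the data layout (dictionaries keyed by `(frozenset, z)` pairs) and the names `update_v_bang`, `b_hat` are assumptions made for the example.

```python
def update_v_bang(sigma, z, V, b_hat, Q):
    """One application of Equation (1) at state (sigma, z).

    sigma -- frozenset of the indices of the devices in ready mode
    V     -- dict {(frozenset, z): cost to go after assignment}
    b_hat -- sequence of wake-up costs (sleep to ready), one per device
    Q     -- dict {z: [(probability, cost_menu), ...]} (discrete Q)
    """
    total = 0.0
    for prob, menu in Q[z]:
        # Enumerate the devices: client cost, plus wake-up cost if the device
        # sleeps, plus cost to go from the state where device k is ready.
        total += prob * min(
            menu[k] + (0.0 if k in sigma else b_hat[k]) + V[(sigma | {k}, z)]
            for k in range(len(menu))
        )
    return total
```

The device attaining the inner minimum for a given cost menu is also the one the optimal policy assigns the job to.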

3.1 Transformation of the Objective

Although the optimisation in Equation (2) occurs in the (up to) $K$-dimensional space of possible timeouts, it can in fact be turned into a sequence of (up to) $K$ uni-dimensional optimisations. To show this, we introduce two side functions $V^{\circ}\langle t, \sigma, z\rangle$ and $v\langle t, \sigma, z\rangle$ where $t$ is a positive scalar, and $\langle\sigma, z\rangle$ is a state. It can then be shown that the solution in $V$ to Equation (2) can be obtained by solving the system of equations in $V^{\circ}, v, V$ shown in Figure 2. In that system, all the optimisations in the space of timeouts are captured by a single operator $\downarrow$, defined for any function $f$ on positive scalars by

$${\downarrow} f(\tau) = \min_{t \ge \tau} f(t)$$
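On a function sampled at increasing time points, the operator $\downarrow$ reduces to a running minimum taken from the right. The sketch below is a discretised analogue only, given for intuition; the exact method of the paper works on parametric representations rather than samples, and the name `down` is an illustrative choice.

```python
def down(values):
    """Discrete analogue of the operator: out[i] = min(values[i:]).

    `values` holds samples f(t_0), f(t_1), ... at increasing times t_i.
    """
    out = list(values)
    # Sweep from right to left, carrying the minimum seen so far.
    for i in range(len(out) - 2, -1, -1):
        out[i] = min(out[i], out[i + 1])
    return out
```

For example, `down([3, 1, 2, 5, 4])` gives `[1, 1, 2, 4, 4]`, which is non-decreasing and nowhere above the input.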


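$$V^{!}\langle\sigma, z\rangle = \int_c \min_k \left[ c_k + \mathbb{I}[k \notin \sigma]\,\hat{b}_k + V\langle\sigma \cup \{k\}, z\rangle \right] dQ(c \mid z) \qquad (1)$$

$$V\langle\sigma, z\rangle = \min_{(\tau_k)_{k \in \sigma}} \int_{xz'} \left[ \sum_{k \in \sigma} g_k(x, \tau_k) + \gamma V^{!}\langle\{k \in \sigma \mid x < \tau_k\}, z'\rangle \right] dP(xz' \mid z) \qquad (2)$$

Figure 1: The optimality equations for the system.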

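$$V^{\circ}\langle t, \sigma, z\rangle = \int_{xz'} \left( -g_\sigma(x, t) + \gamma\,\mathbb{I}[t \le x]\, V^{!}\langle\sigma, z'\rangle \right) dP(xz' \mid z) \qquad (3)$$

$$v\langle \cdot, \emptyset, z\rangle = V^{\circ}\langle \cdot, \emptyset, z\rangle \qquad (4)$$

$$v\langle \cdot, \sigma, z\rangle = {\downarrow}\left( \left(\min_{k \in \sigma} v\langle \cdot, \sigma \setminus \{k\}, z\rangle\right) - V^{\circ}\langle \cdot, \sigma, z\rangle \right) + V^{\circ}\langle \cdot, \sigma, z\rangle \quad \text{for } \sigma \neq \emptyset \qquad (5)$$

$$V\langle\sigma, z\rangle = v\langle 0, \sigma, z\rangle + \int_x g_\sigma(x, 0)\, dP(x \mid z) \qquad (6)$$

Figure 2: Solving Equation (2) by a sequence of unidimensional optimisations.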

In words, this operator returns the greatest monotonic (non-decreasing) lower bound of $f$. It is the only operator in Figure 2 which involves a non-discrete optimisation. In more detail, we have:

• Equation (3) computes a function $V^{\circ}$ from $V^{!}$. We use the notation $g_\sigma$ as a shorthand for $\sum_{k \in \sigma} g_k$.

• Then Equations (4) and (5) inductively compute a function $v$ from $V^{\circ}$. Eq. (4) yields the values of $v$ at $\sigma = \emptyset$, while Eq. (5) yields the values of $v$ at $\sigma \neq \emptyset$ based on its values at $\sigma \setminus \{k\}$ for each $k \in \sigma$. Hence, function $v$ can be entirely computed in $K$ iterations of updates using initially Eq. (4) and then repeatedly Eq. (5).

• Finally, Equation (6) yields $V$ by taking the values of $v$ at $t = 0$.
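The induction over control states in Equations (4) to (6) can be sketched on sampled functions as follows. This is a hypothetical, discretised illustration in Python (the paper's method is exact and does not sample); it assumes the functions $V^{\circ}\langle\cdot, \sigma, z\rangle$ for a fixed $z$ are given as lists of samples on a common increasing time grid starting at $t = 0$, and the names `solve_v`, `value_after_assignment`, `V_circ` and `b_check` are illustrative.

```python
from itertools import combinations


def solve_v(V_circ, devices):
    """Compute v<., sigma, z> for every subset sigma of `devices` (fixed z).

    V_circ -- dict {frozenset: list of samples of V° on a common time grid}
    """
    empty = frozenset()
    v = {empty: list(V_circ[empty])}  # Eq. (4)
    # Process subsets by increasing size so that v at sigma minus one device is known.
    for size in range(1, len(devices) + 1):
        for subset in combinations(devices, size):
            sigma = frozenset(subset)
            base = V_circ[sigma]
            # Pointwise minimum over k in sigma of v<., sigma \ {k}, z>.
            best = [min(col) for col in zip(*(v[sigma - {k}] for k in sigma))]
            diff = [m - b for m, b in zip(best, base)]
            # Operator "down": running minimum from the right.
            for i in range(len(diff) - 2, -1, -1):
                diff[i] = min(diff[i], diff[i + 1])
            v[sigma] = [d + b for d, b in zip(diff, base)]  # Eq. (5)
    return v


def value_after_assignment(v, sigma, b_check):
    """Eq. (6), using g_k(x, 0) = b_check[k] for every x >= 0."""
    return v[sigma][0] + sum(b_check[k] for k in sigma)
```

The loop visits every subset of devices once, so the sketch is exponential in the number of devices, in line with the size of the control state space itself.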

The main problem with this transformation is that, while $V, V^{!}$ can be represented as the finite-dimensional vectors of their values, since they have a finite domain, that is not the case of $V^{\circ}$ and $v$, as these functions have an argument which lives in a continuous space. The traditional solution to this problem is to approximate such functions by linear combinations of suitably chosen basis functions. We take a different approach here, which does not rely on approximation.
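As a sanity check of the transformation, the single-device case $\sigma = \{k\}$ can be worked out by hand from Equations (3) to (6). The derivation below is a sketch that only uses the definitions given so far, together with $g_\emptyset = 0$ and $g_k(x, 0) = \check{b}_k$ for $x \ge 0$; it recovers Equation (2) restricted to one device.

```latex
\begin{align*}
v\langle t,\emptyset,z\rangle
  &= \gamma \int_{xz'} \mathbb{I}[t \le x]\, V^{!}\langle\emptyset,z'\rangle\, dP(xz'|z)
  && \text{by (3) and (4)} \\
V^{\circ}\langle t,\{k\},z\rangle
  &= \int_{xz'} \bigl(-g_k(x,t) + \gamma\,\mathbb{I}[t \le x]\,
     V^{!}\langle\{k\},z'\rangle\bigr)\, dP(xz'|z)
  && \text{by (3)} \\
V^{\circ}\langle 0,\{k\},z\rangle
  &= -\check{b}_k + \gamma \int_{xz'} V^{!}\langle\{k\},z'\rangle\, dP(xz'|z) \\
v\langle 0,\{k\},z\rangle
  &= \min_{\tau \ge 0}\bigl(v\langle \tau,\emptyset,z\rangle
     - V^{\circ}\langle \tau,\{k\},z\rangle\bigr)
     + V^{\circ}\langle 0,\{k\},z\rangle
  && \text{by (5)} \\
V\langle\{k\},z\rangle
  &= v\langle 0,\{k\},z\rangle + \check{b}_k
  && \text{by (6)} \\
  &= \min_{\tau \ge 0} \int_{xz'} \bigl( g_k(x,\tau)
     + \gamma\,\mathbb{I}[\tau \le x]\, V^{!}\langle\emptyset,z'\rangle
     + \gamma\,\mathbb{I}[x < \tau]\, V^{!}\langle\{k\},z'\rangle \bigr)\, dP(xz'|z)
\end{align*}
```

The last line uses $1 - \mathbb{I}[\tau \le x] = \mathbb{I}[x < \tau]$, and matches Equation (2) since $\{k \in \sigma \mid x < \tau_k\}$ is $\{k\}$ when $x < \tau$ and $\emptyset$ otherwise.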

3.2 Solution Space

Given a demand state $z$, let $\mathcal{F}_o$ be the finite set consisting of the initial functions $V^{\circ}\langle \cdot, \sigma, z\rangle$ for all possible control states $\sigma$. What we are looking for is a function space $\mathcal{F}$ with the following properties:

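• Finite Representability: functions in $\mathcal{F}$ can be represented by some finite parametric structures;

• Pointwise Computability: for any scalar $x$ and function $f$ in $\mathcal{F}$ given by its parametric representation, the scalar $f(x)$ can be computed;

• Stability by the operators of Figure 2:

$$(\mathcal{A}) \quad \begin{cases} \forall f \in \mathcal{F}_o & f \in \mathcal{F} \\ \forall f, g \in \mathcal{F} & \min(f, g) \in \mathcal{F} \\ \forall f \in \mathcal{F},\ g \in \mathcal{F}_o & {\downarrow}(f - g) + g \in \mathcal{F} \end{cases}$$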

A function space satisfying all these requirements is called a solution space, since it can be used to update exactly $V$ from $V^{!}$ using the updates given in Figure 2. Now, the main formal result of our study is the following proposition.

Proposition 1. If $\mathcal{F}_o$ satisfies some minimal conditions, then a solution space can be constructed.

The conditions on $\mathcal{F}_o$ for Proposition 1 to hold are the following, where $\Delta\mathcal{F}_o$ denotes the set of functions of the form $f - g$ with $f, g \in \mathcal{F}_o$.

• There is a procedure EVAL which, given a scalar $t$ and a function $f$ in $\mathcal{F}_o$, returns the scalar $f(t)$. This includes $t = +\infty$, in which case the returned value is the limit (assumed finite) of $f(t)$ when $t$ tends to infinity. In other words, the initial functions must at least be pointwise computable.

• There is a procedure BEHAVIOUR which, given a function $h$ in $\Delta\mathcal{F}_o$, returns the table of variations of $h$, i.e. a finite interval partition $(A_i)_{i \in I}$ of $\mathbb{R}_+$, and for each $i \in I$, an indicator $s_i \in \{-1, 1\}$ with $s_i$ positive (resp. negative) meaning that $h$ is non-decreasing (resp. non-increasing) on interval $A_i$.

When these conditions hold, the solution space

promised by Proposition 1 is the function space F

∗

defined as follows: F

∗

is the space of functions

Multi-devicePower-saving-AnInvestigationinEnergyConsumptionOptimisation

235

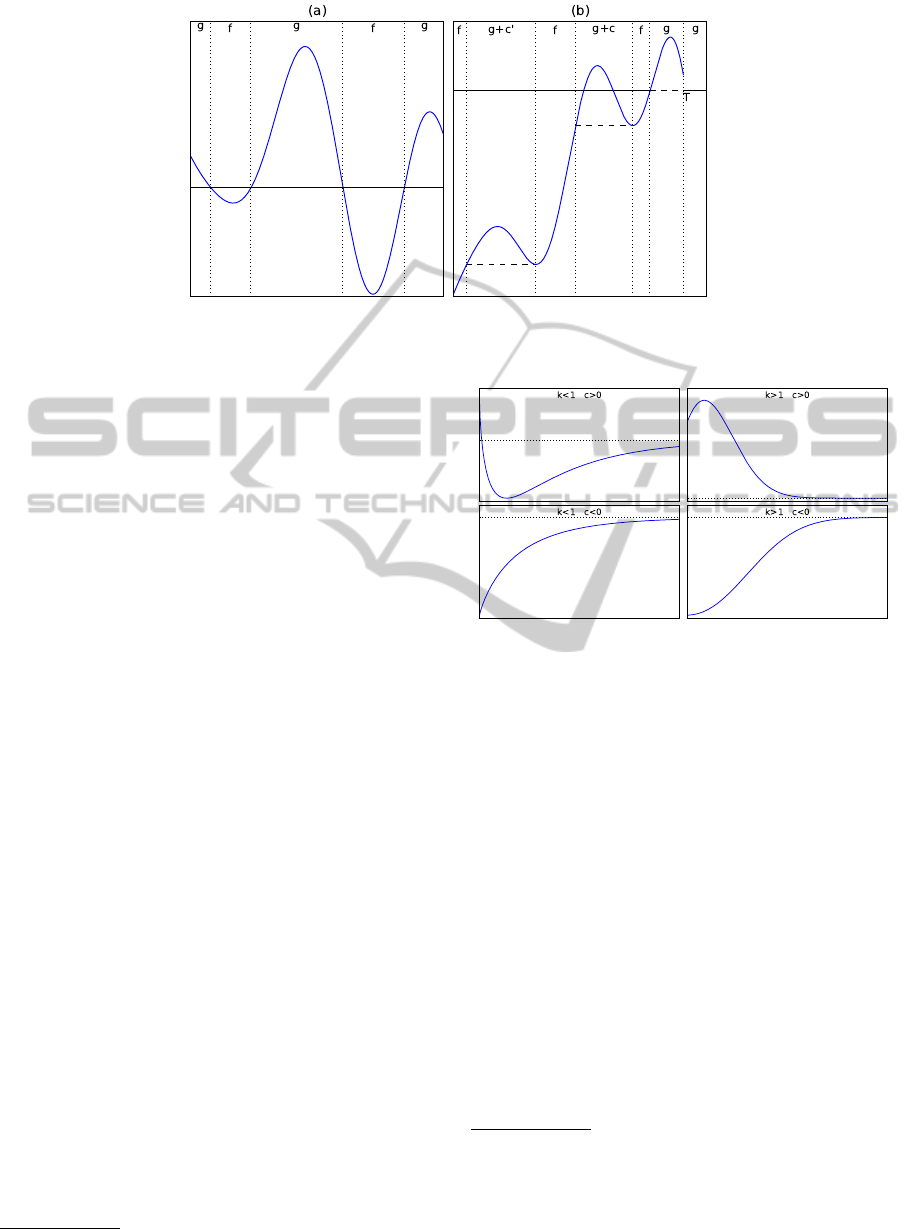

Figure 3: Computing (a): min( f, g) and (b): ↓( f −g)+g given f − g (blue curve); the result (indicated at the top) is piecewise

identical to either f or g plus a scalar.

which are piecewise F

o

-plus-constant, i.e. of the

form

∑

i∈I

1

A

i

( f

i

+ r

i

1) where (A

i

)

i∈I

is a finite inter-

val partition of R

+

, ( f

i

)

i∈I

is a family of functions in

F

o

, and (r

i

)

i∈I

is a family of scalars, called the offsets.

1

A

where A is any interval of R

+

is the characteristic

function of A, which returns 1 for the scalars in A and

0 elsewhere. 1 is the constant function which always

return 1. Let’s sketch the proof that F

∗

is a solution

space:

• $\mathcal{F}^*$ is finitely representable. Indeed, a function in $\mathcal{F}^*$ is entirely described by the bounds of its underlying (finite) interval partition, and for each interval, the corresponding element in $\mathcal{F}_o$ (which is finite) and corresponding offset.

• $\mathcal{F}^*$ is pointwise computable. Given a function $f$ in $\mathcal{F}^*$ and a scalar $x$, the value $f(x)$ is computed by first determining the interval of the underlying partition of $f$ to which $x$ belongs (this consists in a sequence of comparisons with the bounds of the intervals) and then using procedure EVAL with the function in $\mathcal{F}_o$ corresponding to that interval, and adding its offset.

• Finally, $\mathcal{F}^*$ satisfies the stability conditions $(\mathcal{A})$. The first condition is obvious. The intuition for the proof of the other two conditions can be read on Figure 3. Essentially, if $f, g$ are any functions, and $f - g$, represented by the blue curve on the figure, is reasonably well-behaved, then $\min(f, g)$ and ${\downarrow}(f - g) + g$ are piecewise equal to either $f$ or $g + r\mathbf{1}$ where $r$ is a scalar. If the table of variations of $f - g$ is known, as provided by procedure BEHAVIOUR, then the exact bounds at which $\min(f, g)$ and ${\downarrow}(f - g) + g$ alternate between the two cases can be computed precisely (as well as the values of $r$), by simple bisection² on each interval of monotonicity of $f - g$ using procedure EVAL. Technically, what is needed in the proof is the property above for ${\downarrow}(\mathbf{1}_{[0,T)}(f - g)) + g$ for $T$ scalar or infinite, as shown in the figure.

² We mention here bisection, because it is the simplest way to obtain a root of a uni-dimensional function using only a zero-order oracle like procedure EVAL. If higher order oracles are available, then of course more refined methods can be used instead.

3.3 An Example

Consider the case where the demand state space is finite, and, for a given demand state $z$, distribution $P$ given $z$ is the independent product of a Weibull distribution³ and a multinomial:

$$dP(xz' \mid z) = \pi_{z'}\, \kappa x^{\kappa - 1} \exp(-x^{\kappa})\, dx$$

where $\sum_{z'} \pi_{z'} = 1$. It is easy to show that, up to some scaling factors, the functions in $\mathcal{F}_o$ (and hence those in $\Delta\mathcal{F}_o$) are of the form $\psi(\cdot; z) + c\,\psi'(\cdot; z)$, where

$$\psi(t; z) = \frac{1}{\kappa}\, \gamma\!\left(\frac{1}{\kappa}, t^{\kappa}\right) \qquad \psi'(t; z) = \exp(-t^{\kappa})$$

and $\gamma(\cdot, \cdot)$ here denotes the lower incomplete Gamma function (not the discount factor).

³ Observe that the scale parameter of the Weibull is taken to be 1, thus fixing the time unit.

Figure 4: Different shapes of the functions $\psi + c\psi'$ in the case of Weibull demand with shape parameter $\kappa$.

For a given $c$, function $\psi(\cdot; z) + c\,\psi'(\cdot; z)$ can assume different shapes, depending on $\kappa$ and $c$, shown in Figure 4, and has the following characteristics (when $\kappa \neq 1$):

• It is continuous, has value $c$ at 0, and has a limit at infinity given by $\frac{1}{\kappa}\Gamma\!\left(\frac{1}{\kappa}\right)$ where $\Gamma$ is the complete Gamma function.

• It is monotonically increasing if $c \le 0$, otherwise it has a single local optimum, at $t^* = \left(\frac{1}{c\kappa}\right)^{\frac{1}{\kappa - 1}}$, which is a maximum if $\kappa > 1$ and a minimum if $\kappa < 1$.

• It is concave if $c \le 0$ and $\kappa < 1$, otherwise it has a single inflection point, solution of the simple polynomial equation $1 - \frac{1}{\kappa} + \frac{1}{c\kappa}\, t - t^{\kappa} = 0$.

All this information is sufficient to implement procedures BEHAVIOUR, EVAL, and even higher order oracles than EVAL.
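As an illustration, the table of variations of a single function $h = \psi + c\,\psi'$ follows directly from the second characteristic above. The Python sketch below is a hedged example, not the authors' implementation: it covers one function of that form rather than a general element of $\Delta\mathcal{F}_o$ with its scaling factors, and the names `behaviour` and `h_prime` are illustrative. The derivative used is $h'(t) = \exp(-t^{\kappa})\,(1 - c\kappa\, t^{\kappa - 1})$, obtained by differentiating the definitions of $\psi$ and $\psi'$.

```python
import math


def h_prime(t, c, kappa):
    """Derivative of h = psi + c * psi' at t > 0 (unit-scale Weibull case)."""
    return math.exp(-t ** kappa) * (1.0 - c * kappa * t ** (kappa - 1.0))


def behaviour(c, kappa):
    """Table of variations of h on [0, inf) as (lower, upper, sign) triples,
    with sign +1 for non-decreasing and -1 for non-increasing."""
    if kappa == 1.0:
        raise ValueError("the characterisation used here assumes kappa != 1")
    if c <= 0:
        return [(0.0, math.inf, 1)]
    # Single stationary point t* = (1 / (c * kappa)) ** (1 / (kappa - 1)).
    t_star = (1.0 / (c * kappa)) ** (1.0 / (kappa - 1.0))
    # A maximum when kappa > 1 (increasing first), a minimum when kappa < 1.
    first = 1 if kappa > 1 else -1
    return [(0.0, t_star, first), (t_star, math.inf, -first)]
```

The sign of `h_prime` on either side of the returned breakpoint gives a quick numerical check of the table.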

3.4 Experimental Validation

The conditions required for Proposition 1 to hold essentially depend on distribution $P$ in the demand model. They actually hold for a wide range of distributions, and we have derived solutions for various standard distributions (Exponential, Weibull, etc.). We have also conducted experiments both on simulated and real data, which cannot be reported here for lack of space. They are available on demand from the authors. Essentially, in our experiments, we compare the TRANSFER policy obtained by the algorithm proposed here, which minimises the overall cost of the infrastructure, to the SELFISH policy, where each job is assigned to the device which minimises only the cost for the client (as given in the cost menu). Unsurprisingly, the TRANSFER policy always performs better, and the gain can be arbitrarily high depending on a characteristic of the demand called the transfer loss, defined as

$$Q^{+} \overset{\text{def}}{=} \min_z \int_c c^{+}\, dQ(c \mid z)$$

where, for any cost menu $c$, we let $c^{+} = \min_{k,\, c_k > 0} c_k$ (it is assumed, without loss of generality, that the smallest value in a cost menu is always 0, so $c^{+}$ is the second smallest).
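For a discrete distribution $Q$, the transfer loss is a plain weighted sum followed by a minimum over demand states. The following Python sketch assumes a hypothetical representation of $Q$ as a dictionary mapping each demand state to a list of (probability, cost menu) pairs; the function names are illustrative.

```python
def second_smallest_cost(menu):
    """c^+: smallest strictly positive entry of a cost menu.

    Raises ValueError if the menu has no positive entry (all devices equally good).
    """
    return min(c for c in menu if c > 0)


def transfer_loss(Q):
    """Q^+: minimum over demand states z of the expectation of c^+ under Q(.|z).

    Q -- dict {z: [(probability, cost_menu), ...]} (discrete Q, an assumption)
    """
    return min(
        sum(prob * second_smallest_cost(menu) for prob, menu in menus)
        for menus in Q.values()
    )
```

With `Q = {"a": [(0.5, (0, 2, 3)), (0.5, (1, 0, 4))], "b": [(1.0, (0, 5))]}`, the expected values of $c^{+}$ are 1.5 in state `"a"` and 5 in state `"b"`, so the transfer loss is 1.5.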

4 CONCLUSIONS

In this paper, we have studied the tradeoff between the client cost of job assignment and the energy consumption of the devices in a framework in which a controller mediates the interaction between client and devices. The kind of system we target is different from the typical jobshop, for which optimisation is a well studied topic. Instead, we target infrastructures in which

• devices spend most of their time waiting for jobs, and the controller can set the energy level at which they do that;

• clients set device assignment constraints, and the controller can override some of them at a price.

The role of the controller is to find a tradeoff between the price of overriding client constraints and the idle energy consumption of the devices. We propose a sequential decision process model of the system as well as a method to achieve the optimal solution.

REFERENCES

Bäuerle, N. and Rieder, U. (2011). Markov Decision Processes with Applications to Finance. Springer Verlag.

Benini, L., Bogliolo, A., and De Micheli, G. (2000). A survey of design techniques for system-level dynamic power management. IEEE Transactions on very large scale integration systems, 8(3):299–316.

Bertsekas, D. (2005). Dynamic Programming and Optimal Control. Athena Scientific.

Raghavendra, R., Ranganathan, P., Talwar, V., Wang, Z., and Zhu, X. (2008). No “power” struggles: Coordinated multi-level power management for the data center. Operating systems review, 42(2):48–59.

Sinha, A. and Chandrakasan, A. (2001). Dynamic power management in wireless sensor networks. IEEE Design & Test of Computers, 18(2):62–74.

Urgaonkar, R., Kozat, U., Igarashi, K., and Neely, M. (2010). Dynamic resource allocation and power management in virtualized data centers. In Proc. of IEEE/IFIP Network Operations and Management Symposium, pages 479–486, Osaka, Japan.

Wang, Y., Xie, Q., Ammari, A., and Pedram, M. (2011). Deriving a near-optimal power management policy using model-free reinforcement learning and Bayesian classification. In Proc. of 48th Design Automation Conference, pages 41–46, San Diego, CA, U.S.A.
