Testing Discovered Web Services Automatically

Pinar Karagoz¹ and Selma Utku²

¹ Department of Computer Eng, Middle East Technical University, Ankara, Turkey

² Aselsan Inc, Ankara, Turkey

Keywords: Web Service, Web Service Testing, Semantic Dependency Analysis, Mutation Analysis.

Abstract: The reliability of web services is important for both users and software developers. To guarantee the reliability of web services that are invoked and integrated at runtime, mechanisms for automatic testing of web services are needed. A basic issue in web service testing is generating appropriate input values for web services and estimating whether the output obtained is proper for the functionality. In this work, we propose a method for automatic web service testing that uses semantic dependency-based and data mutation-based techniques to generate different test cases and to analyze web services. We check whether the services function properly under input values generated and enriched from various data sources, and we check the robustness of web services by generating random and erroneous data inputs. Experimental evaluation with real web services shows that the proposed mechanisms provide promising results for automatic testing of web services.

1 INTRODUCTION

Web services provide interaction between different distributed applications, and they have become one of the technologies most preferred by software developers and web users. They are published in high numbers, they become outdated very rapidly, and there is no standard control mechanism for their reliability. Traditional offline and manual testing processes are not always applicable to the testing of web services. Therefore, a mechanism is needed for online and automated testing of dynamically discovered and selected web services that are to be included in a software application (Wang et al., 2007), (Dranidis et al., 2007).

In this work, we present a method for automated testing of web services. An important issue that we target is the automatic generation of appropriate input values for web services. To this aim, we adopt two existing techniques from different areas: mutation analysis and semantic analysis. In mutation analysis, a web service is tested by using random and specified values in different ranges that are set according to the parameter type. In semantic analysis, dependencies among web services that are published by the same service provider are semantically analysed. Different test cases are generated, and the test score of a web service is obtained by examining the outputs in two dimensions. Since we do not know the exact behaviour of the services, we aim to employ simple yet effective methods here. In the first dimension, if an exception occurs during the execution of the web service request, the web service is considered unsuccessful. The second dimension is based on checking whether the web service returns different results for different test cases.

In this study, we work on web services specified in Web Services Description Language (WSDL)¹, which is the prominent specification method for web services. The methods proposed in this work do not need a semantic description of services. This choice is based on the observation that almost all published web services lack semantic definitions. One basic assumption is that web services have providers that each publish a set of services, and that these services are generally related, such that they belong to the same domain and the output of one service may be the input of another. We use such dependencies in the semantic analysis of web services. The proposed method is implemented in a domain-specific web service discovery system. The web service testing module in this system is in charge of checking whether the discovered services function correctly. The performance of the proposed method is evaluated on synthetic and real-world web services. The experimental results indicate the usability of the approach.

¹ http://www.w3.org/TR/wsdl

Karagoz P. and Utku S. Testing Discovered Web Services Automatically. DOI: 10.5220/0004833601600167. In Proceedings of the 10th International Conference on Web Information Systems and Technologies (WEBIST-2014), pages 160-167. ISBN: 978-989-758-023-9. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

The main contributions of this work in comparison to previous work can be summarized as follows. The proposed method does not rely on an external mechanism or framework, so it can be easily applied. It does not assume the availability of a semantic service description or a description of the internal mechanism of the web service. For input generation, it combines a data mutation-based technique with semantic dependency analysis among web services. The test score generation process is performed automatically.

The rest of this paper is organized as follows. In Section 2, related work is summarized. In Section 3, the general architecture of the proposed web service testing method is described. In Section 4, mutation-based analysis is presented. In Section 5, semantic-based analysis is given, followed by the score generation described in Section 6. Evaluation results are presented in Section 7. The paper is concluded in Section 8 with an overview and future work.

2 RELATED WORK

Before service consumers use a web service, testing is needed to guarantee its correctness and robustness. The study of Martin et al. (Martin et al., 2006) is one of the studies that emphasize the robustness testing of web services by using WSDL. It presents a framework to generate and execute robustness test cases automatically.

In (Bai et al., 2005), Bai et al. propose an approach to WSDL-based test generation and test case documentation that supports the reuse of generated test cases. Test cases are generated at four levels: test data generation, individual test operation generation, operation flow generation, and test specification generation. Test data is generated by analyzing WSDL message definitions. At the individual test operation generation level, input parameters of web services are analyzed and test operations are generated. At the operation flow generation level, the sequence of the web services is determined by analyzing the dependencies between the web services. In this approach, three dependencies are used: input dependency, input/output dependency and output dependency.

Siblini et al. (Siblini and Mansour, 2005) propose a mutation testing method for web services. The aim of this approach is to find errors relevant to both the WSDL interface and the logic of web service programming. In their work, mutant operators on the WSDL document of web services are defined and mutated web service interfaces are generated. With each modification, a new version of the test case is created, and it is called a mutant. Mutant operators are applied to the input and output parameters of web services and to the data types that are defined in the WSDL document.

Obtaining valid inputs and outputs is tedious work, and often such information is not readily available. AbuJarour et al. (AbuJarour and Oergel, 2011) propose an approach to generate annotations for web services, i.e., valid input parameters, examples of expected outputs and tags, by sampling invocations of web services automatically. The generated annotations are integrated into web forms to help service consumers with actual service invocations. In this approach, in order to generate valid parameters, various resources are used, such as random values, outputs of other web services that are provided by the same web service provider and by different providers, and external data sources such as WordNet, DBpedia and Freebase.

Although the proposed method has similarities with (Bai et al., 2005), (Siblini and Mansour, 2005) and (AbuJarour and Oergel, 2011), the basic difference is that this study emphasizes generating appropriate inputs for testing and generating an overall test score automatically.

3 GENERAL ARCHITECTURE

The proposed work aims to automatically test web services that are specified in WSDL. Each service provider has a WSDL document that specifies information about the provided web services. This document contains the names of the web services, the attributes of their input and output parameters, and user-defined types. It provides valuable information for service invocation. However, this information is not sufficient to test the web services. Since the behavioral information of a web service is not available, only black-box testing can be performed for its validation and testing.

Within the scope of this work, web service valida-

tion is defined as the process of checking whether the

web service is still alive and accessible or not by in-

voking the web service by simple appropriate param-

eter values. On the other hand, web service testing is

the task of checking whether a web service functions

as it should be. Both processes require generation of

input values for test cases. To this aim, firstly, the

types of the input and output parameters of a given

web service should be identified. As the atomic pa-

rameter types, boolean, character, integer and floating

point number are the most common ones. By using

TestingDiscoveredWebServicesAutomatically

161

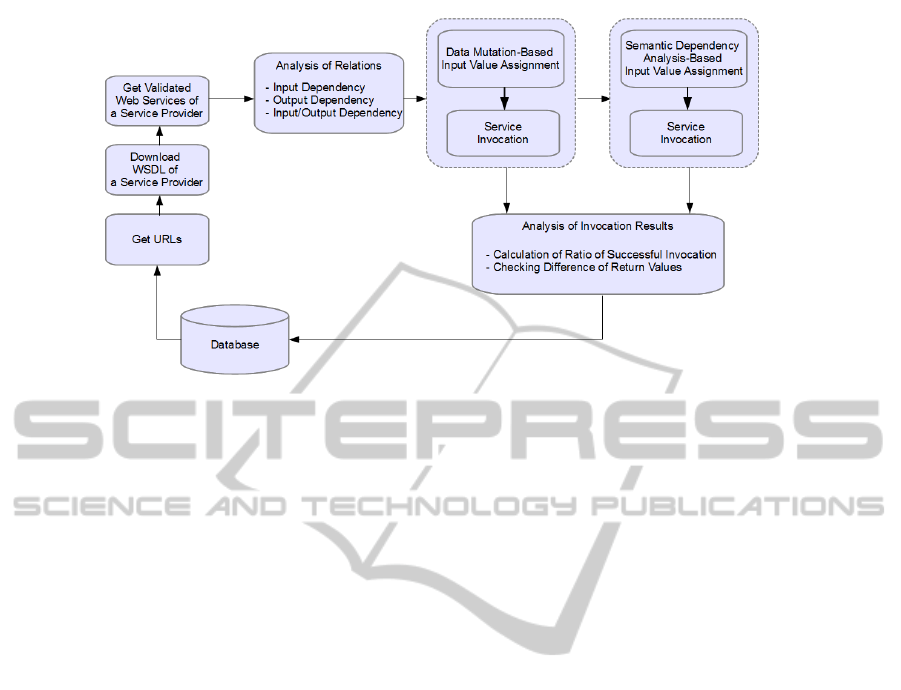

Figure 1: General Architecture of Web Testing Approach.

the atomic types, user-defined complex types can be

constructed. To analyze and generate a value for ba-

sic types is more straightforward than that of complex

types. In order to generate test data of complex data

types, the structure is recursively analyzed until it en-

counters with basic data types. Since the aim of the

validation process is to check whether the web service

is still alive and accessible or not by invoking the web

service, setting simple default values to input param-

eters is sufficient for validation process.
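The recursive analysis of complex types can be illustrated with a minimal Python sketch. The type model (strings for atomic types, dictionaries for complex types, one-element lists for arrays), the `string` type, and the default values themselves are assumptions made for this illustration; the paper does not specify them.

```python
from typing import Any, Dict, List, Union

# Hypothetical type model (not from the paper): an atomic type is a string
# name, a complex type is a dict of field name -> type, and a one-element
# list denotes an array of that element type.
TypeSpec = Union[str, Dict[str, Any], List[Any]]

# Assumed simple defaults for the atomic types, used for validation calls.
ATOMIC_DEFAULTS = {
    "boolean": True,
    "character": "a",
    "integer": 1,
    "float": 1.0,
    "string": "test",
}


def default_value(spec: TypeSpec) -> Any:
    """Recursively build a default value until atomic types are reached."""
    if isinstance(spec, str):
        return ATOMIC_DEFAULTS[spec]
    if isinstance(spec, list):
        return [default_value(spec[0])]
    return {name: default_value(sub) for name, sub in spec.items()}


# Usage: a hypothetical complex parameter type with a nested structure.
booking_type = {
    "id": "integer",
    "customer": {"name": "string", "vip": "boolean"},
    "prices": ["float"],
}
booking_value = default_value(booking_type)
```

The recursion bottoms out at the atomic types, which mirrors the description above; a real generator would derive the type structure from the schema section of the WSDL document.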

In order to test a web service, one criterion is that no exception is thrown when the web service is invoked with the generated input values. Another important point is to check whether the service generates different outputs for different input values. If a service always returns the same value in spite of different input parameters, it is considered to be a test service or a service that has no implementation. Such services fail the test.

The general architecture of the proposed approach is shown in Figure 1. The web service testing process starts with getting the URLs of service providers. At this point, we assume that the service has already been discovered, and hence the service provider's address is available. By downloading and analyzing the content of the WSDL documents, we obtain information about which web services are provided and how to invoke them. For each provided web service, the validation process is performed in order to check whether it is alive. On the set of validated services, input dependency, output dependency and input/output dependency relations are analyzed.
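As an illustration of the WSDL analysis step, the following sketch lists the operations of a WSDL 1.1 document with Python's standard `xml.etree.ElementTree` module. The embedded sample document, its `http://example.org/flights` namespace and the message names are invented for the example; only the WSDL 1.1 namespace is standard. A real document would be downloaded from the provider's URL.

```python
import xml.etree.ElementTree as ET

# Namespace of WSDL 1.1 elements.
WSDL_NS = {"wsdl": "http://schemas.xmlsoap.org/wsdl/"}

# Hypothetical, heavily trimmed WSDL document used only for illustration.
SAMPLE_WSDL = """
<definitions xmlns="http://schemas.xmlsoap.org/wsdl/"
             xmlns:tns="http://example.org/flights"
             targetNamespace="http://example.org/flights">
  <message name="getAirportsInCityRequest"/>
  <message name="getAirportsInCityResponse"/>
  <message name="sayHelloResponse"/>
  <portType name="FlightPort">
    <operation name="getAirportsInCity">
      <input message="tns:getAirportsInCityRequest"/>
      <output message="tns:getAirportsInCityResponse"/>
    </operation>
    <operation name="sayHello">
      <output message="tns:sayHelloResponse"/>
    </operation>
  </portType>
</definitions>
"""


def list_operations(wsdl_text):
    """Return the name and input/output message of each portType operation."""
    root = ET.fromstring(wsdl_text)
    operations = []
    for op in root.findall("wsdl:portType/wsdl:operation", WSDL_NS):
        inp = op.find("wsdl:input", WSDL_NS)
        out = op.find("wsdl:output", WSDL_NS)
        operations.append({
            "name": op.get("name"),
            "input": inp.get("message") if inp is not None else None,
            "output": out.get("message") if out is not None else None,
        })
    return operations


operations = list_operations(SAMPLE_WSDL)
```

An operation without an input message, such as the hypothetical `sayHello` above, corresponds to a service with no input parameter, which the dependency analysis has to handle separately.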

The next step is test case generation with the data mutation-based method. In this method, the values of input parameters are generated according to the parameter type declarations in the service description. The web service is invoked with the generated input parameters, and the invocation result is saved for score calculation. Following this, test case generation with semantic dependency analysis is performed. For each web service, the values of its input parameters are determined according to the semantic dependency analysis, and the web service is invoked with the generated input parameters. As in the previous step, the results of each invocation are saved. Finally, for each web service, the final test result is calculated on the basis of the results of all invocations.

4 MUTATION ANALYSIS

In software engineering, the purpose of mutation testing is to help the tester develop effective tests or locate weaknesses in the test data used for the program or in sections of the code that are seldom or never accessed during execution. The proposed method is inspired by mutation testing methods; however, its aim is quite different. The aim of mutation testing is to measure test adequacy, whereas the aim of data mutation in the proposed method is to generate test cases. In traditional mutation testing, mutation operators are used to transform the program under test. In contrast, this method is applied to generate random and erroneous data inputs.

In mutation-based test case generation, a parameter can take different values in the range of its type domain. In this method, various random and error-prone data input values are generated and grouped. When we use the generated values in each range for an input parameter, we expect the invoked web service to exhibit different behaviours.

As mentioned, the type of an input parameter can be fundamental or complex. In the case of a complex type, the generator recursively analyzes the structure of the input parameter type until it reaches the fundamental types. Various values in the range of the type are generated, and the generated values are grouped. For each group, the range of the parameter value is also constrained. We apply several versions of data mutation. In Mutant Version 0, positive values are generated for input parameters. When the web service is invoked with these values, it is expected to return a proper value. In Mutant Versions 1, 2, 3, 4, 5 and 6, erroneous input values are generated.

For numeric types, in Mutant Version 1, the input parameter is set to 0. In this way, it can be checked whether the web service guards against division-by-zero or pointer address errors. In Mutant Version 2, the numeric input parameter is set to -1, and thus it is checked whether the web service guards against unsigned-to-signed conversion errors, out-of-bounds memory access errors and signed/unsigned mismatches in comparisons. Incorrect sign conversions can lead to undefined behaviour and can crash the web service. In Mutant Versions 3 and 4, boundary values are generated. In Mutant Versions 5 and 6, very high and very low values are generated randomly. Thus, the fault resistance of the web service is checked. The values that can be generated differ for each type. Therefore, the mutant groups are constructed differently with respect to the parameter type.
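The mutant versions for a numeric parameter can be sketched as follows for a 32-bit signed integer. The concrete ranges are assumptions: the text does not state which of Versions 3 and 4 is the upper or lower boundary, nor the ranges used for the "very high" and "very low" values of Versions 5 and 6, so the choices below are illustrative only.

```python
import random

# Assumed domain of the parameter type: 32-bit signed integer.
INT_MIN, INT_MAX = -2**31, 2**31 - 1


def integer_mutants(rng, positive_range=(1, 1000)):
    """Return the generated input values per mutant version (0 to 6)."""
    low, high = positive_range
    return {
        # Version 0: five different valid positive values.
        0: rng.sample(range(low, high + 1), 5),
        # Version 1: zero (e.g. division-by-zero handling).
        1: [0],
        # Version 2: -1 (e.g. signed/unsigned conversion handling).
        2: [-1],
        # Versions 3 and 4: boundary values (assignment to max/min assumed).
        3: [INT_MAX],
        4: [INT_MIN],
        # Versions 5 and 6: random very high and very low values
        # (upper and lower half of the domain assumed).
        5: [rng.randint(INT_MAX // 2, INT_MAX - 1)],
        6: [rng.randint(INT_MIN + 1, INT_MIN // 2)],
    }


# Usage: a seeded generator keeps a test run reproducible.
mutants = integer_mutants(random.Random(42))
```

Passing the random generator explicitly is a design choice of this sketch; it makes it possible to replay exactly the same test cases when a failure has to be investigated.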

For String typed parameters, it is very hard to generate valid values. Although the web services are not annotated, if we know the domain of the web services, instances can be taken directly from the domain ontology, or the ontology can be populated with instances by using public resources. As the first step, the names of the input parameters are semantically compared with the ontology terms in order to find the similarity value and the ontological position of the input parameters. We obtain the ontology terms whose matching degree is above the threshold value. We check whether there is any instance for the ontology term corresponding to the name of the input parameter. If it has at least one instance, a random one is used as the input parameter value in test case generation. Otherwise, the hyponym terms of the name of the input parameter are searched, and if any term is obtained, the instances of the obtained hyponym terms are used, if available.
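The lookup order described above (matching term, then its instances, then instances of hyponym terms) can be sketched as follows. The toy ontology, the threshold of 0.8 and the use of `difflib.SequenceMatcher` as a similarity measure are all stand-ins chosen for this illustration; the paper relies on a WordNet-based matching library instead, and looks up hyponyms of the parameter name rather than of the matched term.

```python
import random
from difflib import SequenceMatcher

# Hypothetical toy ontology: term -> instances, and term -> hyponym terms.
INSTANCES = {"city": ["Ankara", "Istanbul", "Izmir"], "sedan": ["Corolla"], "car": []}
HYPONYMS = {"car": ["sedan", "hatchback"]}


def similarity(a, b):
    """Stand-in matching degree in [0, 1] based on plain string similarity."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def pick_string_value(param_name, rng, threshold=0.8):
    """Pick an instance for a string parameter, or None if nothing matches."""
    # Ontology terms whose matching degree is above the threshold, best first.
    matches = sorted(
        (term for term in INSTANCES if similarity(param_name, term) > threshold),
        key=lambda term: similarity(param_name, term),
        reverse=True,
    )
    for term in matches:
        if INSTANCES[term]:
            return rng.choice(INSTANCES[term])
        # No direct instance: fall back to instances of hyponym terms.
        for hyponym in HYPONYMS.get(term, []):
            if INSTANCES.get(hyponym):
                return rng.choice(INSTANCES[hyponym])
    return None


rng = random.Random(0)
city_value = pick_string_value("City", rng)
car_value = pick_string_value("car", rng)
```

In this toy setup the term `car` has no instance of its own, so the value comes from its hyponym `sedan`; a parameter name that matches no ontology term yields `None`, in which case another generation strategy would be needed.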

5 SEMANTIC DEPENDENCY ANALYSIS

A service provider generally publishes multiple services, and some of them interact with each other. In such a situation, test cases for atomic web services can be generated by using these interactions. Semantic dependency analysis considers the following three types of dependencies:

• Input dependency: A web service WS1 is input dependent on WS2 if and only if WS1 and WS2 share at least one input parameter that has the same type and the same name.

• Output dependency: A web service WS1 is output dependent on WS2 if and only if the output parameters of WS1 and WS2 have the same type and the same name.

• Input/output dependency: A web service WS1 is input/output dependent on WS2 if and only if at least one input parameter of WS1 has the same type as, and a similar name to, at least one field of the output parameter of WS2.
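The three dependency definitions can be expressed as simple predicates. In the sketch below, the `Service` structure, the reading of output dependency as "identical output fields", and the string-based `similar` check with a 0.8 threshold are assumptions for illustration; the paper measures name similarity with a WordNet-based matching library.

```python
from dataclasses import dataclass, field
from difflib import SequenceMatcher
from typing import Dict


@dataclass
class Service:
    """Hypothetical service model: parameter or field name -> type name."""
    name: str
    inputs: Dict[str, str] = field(default_factory=dict)
    outputs: Dict[str, str] = field(default_factory=dict)


def similar(a, b, threshold=0.8):
    """Stand-in for semantic name matching (plain string similarity)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold


def input_dependent(ws1, ws2):
    """WS1 and WS2 share an input parameter with the same name and type."""
    return any(ws2.inputs.get(n) == t for n, t in ws1.inputs.items())


def output_dependent(ws1, ws2):
    """Outputs of WS1 and WS2 have the same names and types (assumed reading)."""
    return bool(ws1.outputs) and ws1.outputs == ws2.outputs


def input_output_dependent(ws1, ws2):
    """An input of WS1 matches an output field of WS2 in type and name."""
    return any(
        t_in == t_out and similar(n_in, n_out)
        for n_in, t_in in ws1.inputs.items()
        for n_out, t_out in ws2.outputs.items()
    )


# Usage with hypothetical signatures for services named as in Table 1.
get_cities = Service("getAllCities", {}, {"cityName": "string"})
get_airports = Service("getAirportsInCity", {"cityName": "string"}, {"airportName": "string"})
get_lines = Service("getFlightLinesFrom", {"airportName": "string"}, {"flightLine": "string"})
```

With these signatures, `getAirportsInCity` is input/output dependent on `getAllCities`, so city names returned by the latter are candidate inputs for the former.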

In this work, semantic input/output dependency in particular is used. Generating the values of the input parameters of every web service provided by the same service provider is a very time-consuming process. To deal with this problem, input dependency and output dependency are also used. However, the input and output dependency analysis is performed only syntactically, by comparing the types of the input or output parameters of the web services.

Initially, the input dependencies, output dependencies and input/output dependencies between all validated services of each service provider are analyzed. On the basis of this analysis, test cases are generated. A web service may have no input parameter and may also return no value. For each validated web service with at least one input parameter, the dependencies with the other validated web services that are provided by the same service provider are analyzed.

For semantic input/output dependency analysis, the similarity between the names of the parameters is found through matching degree calculation. For this calculation, the functions of the word matching library presented in (Canturk and Senkul, 2011) are used. This matching method is built as an extension of WordNet, and it performs both syntactic and semantic matching. It is possible to use any other word matching tool that returns a similarity score. However, we preferred this matching technique since it has been shown to provide a promising improvement over similar techniques, especially for semantic matching.

While finding matches, each term in the first parameter is compared with all of the terms in the second parameter. As the result of this similarity calculation, we obtain a similarity degree array of length n. However, we want a single value as the similarity degree of the whole output term to the whole input term. Therefore, the average of these similarity degrees is calculated as the final similarity value (S1) by using Equation 1.

$$\mathrm{sim}(\mathit{term}_1, \mathit{term}_2) = \frac{1}{n} \sum_{j=1}^{n} \mathrm{sim}(\mathit{term}_1, \mathit{term}_{2}\mathit{word}_{j}) \tag{1}$$
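A minimal sketch of Equation 1 follows. It assumes that the words of the second term are obtained by splitting a camelCase or snake_case identifier, and it uses `difflib.SequenceMatcher` as a stand-in for the word-level similarity of the matching library; both are assumptions of this illustration.

```python
import re
from difflib import SequenceMatcher


def split_words(term):
    """Split a camelCase or snake_case identifier into lowercase words."""
    parts = re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", term)
    return [p.lower() for p in parts]


def word_sim(a, b):
    """Stand-in word similarity in [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def sim(term1, term2):
    """Equation 1: average similarity of term1 to each of the n words of term2."""
    words = split_words(term2)
    if not words:
        return 0.0
    return sum(word_sim(term1, w) for w in words) / len(words)


# Usage: compare an input parameter name with an output field name.
score = sim("city", "cityName")
```

Averaging keeps the result in the same [0, 1] range as the word-level scores, so it can be compared directly against a matching threshold.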

We also devise another method to calculate the similarity degree between input and output terms when a domain ontology is available. Note that this ontology is not for service description but for describing the domain of the services, such as the Car or Movie domain. In this method, the distance between the input term and each ontology term and the distance between each output term and each ontology term are calculated. These distances are used to determine the ontological positions of the terms. By calculating the average of the differences of these distance values over the ontology terms, the similarity value (S2) between the input term and the output term is calculated by using Equation 2.

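$$\mathrm{ontsim}(\mathit{term}_1, \mathit{term}_2) = 1 - \frac{1}{n} \sum_{k=1}^{n} \left| \mathrm{sim}(\mathit{term}_1, \mathit{ontTerm}_k) - \mathrm{sim}(\mathit{term}_2, \mathit{ontTerm}_k) \right| \tag{2}$$

where n is the number of ontology terms.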
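Equation 2 can be sketched in the same way. The word-level `sim` function below is again a `difflib`-based stand-in, and the list of ontology terms is a made-up example for a Car domain.

```python
from difflib import SequenceMatcher


def sim(a, b):
    """Stand-in similarity in [0, 1] between a term and an ontology term."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def ontsim(term1, term2, ontology_terms):
    """Equation 2: one minus the mean absolute difference of the two terms'
    similarities to each of the n ontology terms."""
    n = len(ontology_terms)
    if n == 0:
        raise ValueError("the ontology must contain at least one term")
    total = sum(abs(sim(term1, t) - sim(term2, t)) for t in ontology_terms)
    return 1.0 - total / n


# Usage with a hypothetical set of ontology terms.
CAR_TERMS = ["car", "model", "town", "booking", "customer"]
same = ontsim("town", "town", CAR_TERMS)
different = ontsim("town", "bookingDate", CAR_TERMS)
```

Two terms that sit at the same position relative to every ontology term obtain a similarity of 1, and the value decreases as their similarity profiles diverge.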

The final similarity value with the name of the out-

put term and the web service whose output parameter

is analyzed are recorded to input/output dependency

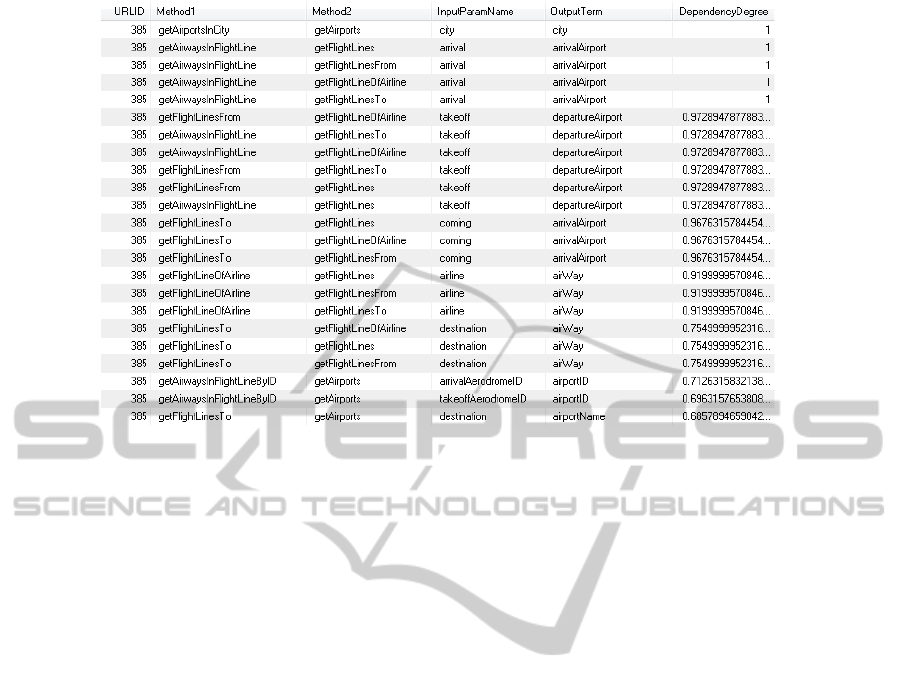

list of analyzed input parameter. A sample list of

input/output dependency relations of web services is

shown in Figure 2.

6 OVERALL TEST SCORE GENERATION

The overall test score of a web service is calculated from its performance in the mutation-based and semantic dependency analysis-based test generation phases. For all validated web services, different test cases based on both semantic analysis and data mutation are generated.

First, test case generation based on data mutation analysis is performed. By using each version of mutant value generation mentioned in the previous section, different values are generated for each input parameter. The web service is invoked with these input parameters and the return value is obtained. In Mutant Version 0, five different values in the given range are generated for each input parameter. By setting the input parameters to these generated values, the test case is prepared for the web service. The next step is test case execution. In this phase, web services are invoked and the results are checked. If an exception occurs during the execution of the web service request, then the web service is accepted as unsuccessful. This result is recorded in the testing statistics of the web service. On the other hand, web services that cause no exception are expected to have different return values. Currently, a web service passes the test if it produces different outputs for different inputs. However, the obtained result value will be further analyzed in detail. Similar steps are followed in the other mutant versions. In Mutant Versions 1, 2, 3, 4, 5 and 6, just one test case is generated. For the web service to pass the test, it is expected that no exception occurs. In Mutant Versions 5 and 6, the probability of failure is higher than in the other versions because the web services might not handle the values in these ranges. As mentioned for Mutant Version 0, the return values are analyzed in detail and the test results are recorded.

For the mutant version in which input parameter values are obtained from ontology instances, one test case is generated, with the input parameter values taken from WordNet instances. As the last mutation version, input parameter switching is performed. The test cases that were generated in Mutant Version 0 and that produced a successful test result are used again in the switching version, if the parameters are suitable.

As the second phase, test case generation based on dependency analysis is performed. If the web service has no input parameter, it is invoked without generating any input value and the invocation result is analyzed. If it has at least one input parameter, the value set is generated for each input parameter by performing the following steps.

First, the dependency list is analyzed. If the input parameter has no dependency with the return parameters of the other web services, a random value is generated according to its type by using Version 0 of the mutation-based method. Otherwise, starting with the first web service in the dependency list, the test cases of the web services are analyzed to get an appropriate value from their return values. The dependency relation list is sorted by dependency degree; therefore, the analysis starts with the first one. If the first web service in the list has no successful test case, it is not used for generation and the analysis continues with the next web service in the dependency list.

Figure 2: Input/Output Dependency Relations of Web Services.
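The selection of an input value from the dependency list can be sketched as below. The data structures (a list of `(service name, dependency degree)` pairs and a mapping from service name to the return values of its successful test cases) and the `fallback` callable standing in for Mutant Version 0 are hypothetical names introduced for this illustration.

```python
def value_from_dependencies(dependency_list, successful_outputs, fallback):
    """Pick an input value from the outputs of the most similar dependency.

    dependency_list: (service_name, dependency_degree) pairs.
    successful_outputs: service_name -> return values of successful test cases.
    fallback: callable producing a random value (Mutant Version 0 stand-in).
    """
    # Visit dependencies in decreasing order of dependency degree.
    ordered = sorted(dependency_list, key=lambda dep: dep[1], reverse=True)
    for service_name, _degree in ordered:
        outputs = successful_outputs.get(service_name, [])
        if outputs:
            return outputs[0]
        # No successful test case for this service: try the next one.
    # No dependency, or no usable output: generate a value by mutation.
    return fallback()


# Usage with hypothetical dependency degrees and recorded outputs.
deps = [("getAllCities", 0.90), ("getAirports", 0.95)]
recorded = {"getAirports": [], "getAllCities": ["Ankara"]}
chosen = value_from_dependencies(deps, recorded, fallback=lambda: "random-value")
```

In this example `getAirports` has the highest degree but no successful test case, so the value is taken from `getAllCities` instead.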

7 EXPERIMENTAL EVALUATION

7.1 Analysis Method for Test Results

As described in the previous sections, web service invocation results are obtained by calling a web service with the input parameters that are generated by the data mutation-based and semantic dependency analysis-based methods. The test results are generated by examining the invocation results in two dimensions. The first one is based on checking the execution results. An exception can occur during the execution of the web service request. In this case, the web service is accepted as unsuccessful. On the other hand, if it works properly and causes no exception, it is accepted as successful.

The second dimension is based on checking whether the service gives different outputs for different input values. If a service always returns the same value in spite of different input parameters, it is considered to be a test service or a service that has no implementation. If the same output value is obtained after all successful executions of test cases, the web service fails on this dimension.

A further check concerns the input parameter switching method. It is expected that a test case that uses the switching method returns a different value from its source test case, that is, the test case whose input parameters were switched to generate it. For each test case using the input parameter switching method, if its return value differs from that of its source test case, it is accepted as successful in this check. Otherwise it fails the test.
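The switching check can be illustrated with the following sketch. The paper does not define when parameters are "suitable" for switching; the sketch assumes that two parameters are suitable if they have the same type and different values. The function names and the callable standing in for a remote service are hypothetical.

```python
def switch_inputs(args, types):
    """Swap the first pair of same-typed parameters; None if there is none."""
    names = list(args)
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            a, b = names[i], names[j]
            if types[a] == types[b] and args[a] != args[b]:
                switched = dict(args)
                switched[a], switched[b] = args[b], args[a]
                return switched
    return None


def passes_switching_check(service, args, types):
    """True if the switched call returns a different value than the source
    test case, False if not, None if switching is not applicable."""
    switched = switch_inputs(args, types)
    if switched is None:
        return None
    return service(**args) != service(**switched)


# Usage with local functions standing in for remote web services.
def flight_line(source, destination):
    return f"{source}->{destination}"


def constant_service(source, destination):
    return "OK"


source_case = {"source": "Ankara", "destination": "Izmir"}
param_types = {"source": "string", "destination": "string"}
```

A service such as `constant_service`, which ignores its inputs, returns the same value for the source and the switched test case and therefore fails this check.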

By using the results obtained for a web service in the two dimensions mentioned above, the final test result is calculated. In testing a web service, its successful invocation is more important than its returning different values. Web services that throw exceptions when they are invoked are never preferred by service consumers. Therefore, the overall success of the test cases is weighted more heavily than having different outputs for different input values: a weighted average is applied, where the weight of the first dimension is set to 0.7 on the basis of empirical evaluation.
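One possible reading of this weighted average is sketched below. Only the weight 0.7 comes from the text; the weight 0.3 for the second dimension (so that the weights sum to 1), the use of the fraction of exception-free invocations as the first-dimension score, and the binary second-dimension score are assumptions of this sketch.

```python
def overall_score(invocations, w_exec=0.7):
    """Weighted average of the two test dimensions.

    invocations: list of (raised_exception, output) pairs, one per test case.
    """
    if not invocations:
        return 0.0
    ok_outputs = [out for raised, out in invocations if not raised]
    # Dimension 1: share of invocations that completed without an exception.
    exec_score = len(ok_outputs) / len(invocations)
    # Dimension 2: 1 if at least two successful invocations differ in output.
    distinct_score = 1.0 if len({repr(o) for o in ok_outputs}) > 1 else 0.0
    return w_exec * exec_score + (1 - w_exec) * distinct_score


# Usage: three hypothetical services with different behaviour.
constant = overall_score([(False, "hello"), (False, "hello"), (False, "hello")])
healthy = overall_score([(False, "IST"), (False, "ESB"), (False, "ADB")])
broken = overall_score([(True, None), (True, None)])
```

Under this reading, a service that never throws but always returns the same value scores 0.7, and a service that throws on every invocation scores 0.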

7.2 Experiments with Synthesized Web Services

In this set of experiments, we created web service providers that include various services whose behaviours are already known. These web services belong to the Aviation and Car domains. For the Aviation domain, the provided web services are used to get information about flights in Turkey.

For each web service, the proposed approach is applied and the test results are obtained after the testing process. As mentioned in previous sections, different input values are generated for each parameter, and the web services are invoked with these generated input parameters. After the invocation, the return values of the web services are analyzed.


Table 1: RMSE on Synthesized Data Set.

| Web Service Name | Expected (T) | Estimated (E) | (E − T)² |
|---|---|---|---|
| sayHello | 0.5 | 0.7 | 0.04 |
| getAirways | 1 | 0.7 | 0.09 |
| getAirports | 1 | 0.7 | 0.09 |
| getFlightLines | 1 | 0.7 | 0.09 |
| getAirportsInCity | 1 | 1 | 0 |
| getFlightLinesTo | 1 | 1 | 0 |
| getFlightLinesFrom | 1 | 1 | 0 |
| getAirwaysInFlightLine | 1 | 0.85 | 0.0225 |
| getAirwaysInFlightLineByID | 1 | 0.94 | 0.0036 |
| getFlightLineOfAirline | 1 | 1 | 0 |
| isRouteBidirectional | 0 | 0 | 0 |
| getValue | 0.5 | 0.7 | 0.04 |
| getAllCities | 1 | 0.7 | 0.09 |
| getAllCarModels | 1 | 0.7 | 0.09 |
| getCostumers | 1 | 0.7 | 0.09 |
| getAvailableCarsInTown | 1 | 0.85 | 0.0225 |
| getCarBookingsByTownId | 1 | 1 | 0 |
| getCarBookingsByTownName | 0 | 0.63 | 0.3969 |
| getCarBookingsByDate | 1 | 0.7 | 0.09 |
| getCarBookingsOfPerson | 1 | 1 | 0 |
| insertNewCarBookingItem | 1 | 0.97 | 0.0009 |
| RMSE | | | 0.2346 |

Table 2: Statistical Information on Web services in Car Domain.

| Statistic | Value |
|---|---|
| Total number of web service providers | 904 |
| Total number of validated web services | 5713 |
| Total number of input parameters | 15284 |
| Total number of string input parameters | 9966 |
| Total number of string input parameters whose value can be assigned from WordNet instances | 1426 |
| Average number of services per service provider | 6.3 |
| Average number of input parameter per web service | 2.7 |

In order to measure the accuracy of the proposed algorithm, we calculate the root mean square error (RMSE). The accuracy results for the synthesized web services and the overall error are presented in Table 1. The proposed testing method predicts the reliability of this set of web services with an error of 0.2346. Since similar studies in the literature do not produce a comparable overall test score, it is not possible to make a direct comparison with the literature. However, this error value is promising for the usability and effectiveness of the proposed method.
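The reported error can be reproduced from the expected and estimated scores listed in Table 1. The sketch below transcribes the 21 rows of the table and applies the standard RMSE definition; the variable names are chosen for this illustration.

```python
import math

# (expected T, estimated E) pairs transcribed from Table 1, in row order.
TABLE_1 = [
    (0.5, 0.7), (1, 0.7), (1, 0.7), (1, 0.7), (1, 1), (1, 1), (1, 1),
    (1, 0.85), (1, 0.94), (1, 1), (0, 0), (0.5, 0.7), (1, 0.7), (1, 0.7),
    (1, 0.7), (1, 0.85), (1, 1), (0, 0.63), (1, 0.7), (1, 1), (1, 0.97),
]


def rmse(pairs):
    """Root mean square error between expected and estimated scores."""
    squared_errors = [(estimated - expected) ** 2 for expected, estimated in pairs]
    return math.sqrt(sum(squared_errors) / len(pairs))


error = rmse(TABLE_1)
```

The squared errors sum to 1.1564 over 21 services, and the square root of their mean agrees with the reported value of 0.2346 to within rounding in the fourth decimal place.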

7.3 Experiments with Real Web Services

In addition to the experiments with the synthetic data set, the proposed method is tested on a set of real web services collected in the Car, Aviation, Film and Sports domains. In this set of experiments, it is not possible to obtain a success rate, as the services are not annotated. The results instead provide an approximate picture of the current reliability of the published services. The web services are collected through web search, with the domain name given as the keyword. The number of web services provided by the same provider, and the number and types of the parameters, vary widely in this collection. Due to space limitations, we present the results for web services in the Car domain.

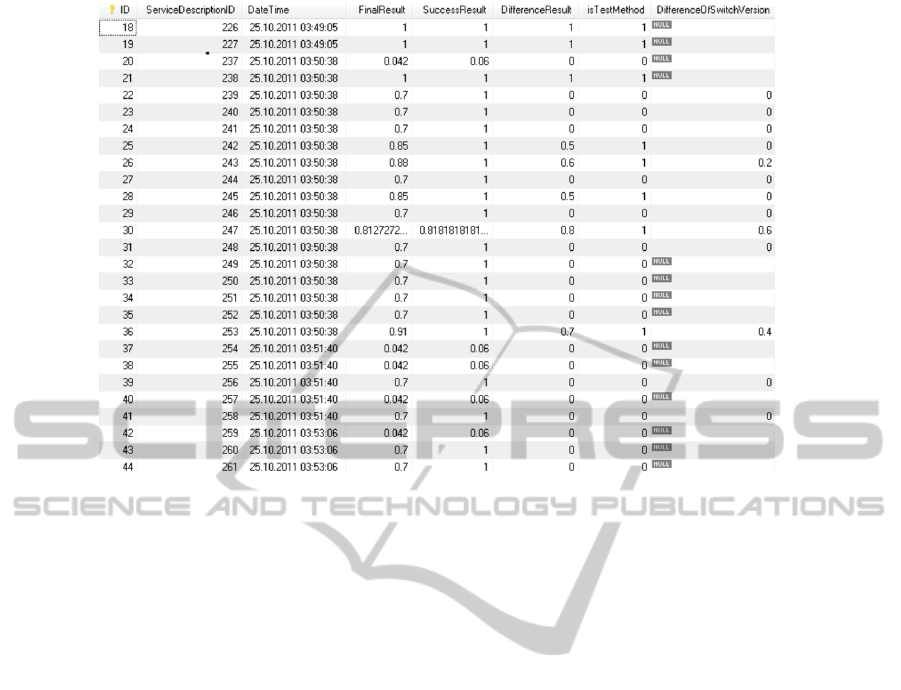

Some statistical information about the web services collected in the Car domain is given in Table 2. In this collection, out of all web services, 349 services have an overall test score of 1; hence they successfully passed the test cases. The overall test score of 2545 web services is 0, which means that they failed the test process completely. 2501 web services are successfully invoked in all test cases of the data mutation-based method. 2649 web services are successful in all test cases that are generated by the semantic dependency-based method. 2474 web services are successful in both methods. The number of web services that return the same value under switching of input values is 2453. Sample results for this set of web services are given in Figure 3.

8 CONCLUSION

In this work, we proposed a method to test discovered web services and to generate test scores automatically. To realize the proposed method, an application is designed, and a graphical user interface is also presented to test the web services automatically by running the method step by step for a given web service.

Figure 3: Sample test results for Web Services in Car Domain.

In our approach, data mutation-based and semantic dependency-based input data generation methods are used. In data mutation, web services are tested by using random and specific values in different ranges. Values in the specified ranges are generated according to the input type. Different data mutation groups are constructed to generate values for input parameters. Since the internal working mechanism of web services is not available in practice and, most of the time, the exact response is not known either, we prefer to use simple yet effective techniques for testing.

To evaluate the success of the proposed method, we generated a synthetic data set whose behaviour is known. On this data set, the error value is 0.23 under RMSE. It is not possible to make a direct comparison with similar studies since they do not generate such an overall test score. However, this error value is promising for the applicability of the approach. In addition to the synthetic data set, we tested real web services. In this evaluation, it is not possible to provide a success rate, as these services are not annotated. This evaluation shows that the number of web services that can pass all test cases is about 20%.

REFERENCES

AbuJarour, M. and Oergel, S. (2011). Automatic sampling of web services. In Proc. IEEE Int. Web Services Conf. (ICWS), pages 291–298.

Bai, X., Dong, W., Tsai, W. T., and Chen, Y. (2005). WSDL-based automatic test case generation for web services testing. In Proc. of SOSE, pages 207–212.

Canturk, D. and Senkul, P. (2011). Semantic annotation of web services with lexicon-based alignment. In Proc. of IEEE 7th World Congress on Services (SERVICES), pages 355–362.

Dranidis, D., Kourtesis, D., and Ramollari, E. (2007). Formal verification of web service behavioral conformance through testing. Annals of Mathematics, Computing and Teleinformatics, 1:36–43.

Martin, E., Basu, S., and Xie, T. (2006). Automated robustness testing of web services. In Proc. of the 4th SOAWS.

Siblini, R. and Mansour, N. (2005). Testing web services. In Proc. 3rd ACS/IEEE Int. Computer Systems and Applications Conf.

Wang, Y., Bai, X., Li, J., and Huang, R. (2007). Ontology-based test case generation for testing web services. In Proc. of ISADS, pages 43–50.
