Facial Signs and Psycho-physical Status Estimation for Well-being Assessment

F. Chiarugi, G. Iatraki, E. Christinaki, D. Manousos,

G. Giannakakis, M. Pediaditis,

A. Pampouchidou, K. Marias and M. Tsiknakis

Computational Medicine Laboratory, Institute of Computer Science, Foundation for Research and Technology - Hellas,

70013 Vasilika Vouton, Heraklion, Crete, Greece

Keywords: Facial Expression, Stress, Anxiety, Feature Selection, Well-being Evaluation, FACS, FAPS, Classification.

Abstract: Stress and anxiety act as psycho-physical factors that increase the risk of developing several chronic diseases. Since they appear as early indicators, it is very important to be able to evaluate them in a contactless and non-intrusive manner in order to avoid inducing artificial stress or anxiety in the individual in question. For these reasons, this paper analyses the methodologies for the extraction of the respective facial signs from images or videos, their classification, and techniques for coding these signs into appropriate psycho-physical statuses. A review of existing datasets for the assessment of the various methodologies for facial expression analysis is reported. Finally, a short summary of the most interesting findings in the various stages of the procedure is given, with the aim of achieving new contactless methods for the promotion of an individual’s well-being.

1 INTRODUCTION

Although well-being is a subjective term, which

refers to how individuals perceive their quality of

life, there are objective conditions such as health that

influence this judgement. A healthy status combined with low risk factors for developing chronic diseases is essential to well-being. However, the way of living in modern societies induces psycho-physical states such as stress and anxiety, which may lead to the onset of various illnesses.

Stress is a state that arises from the pressures of accelerated life rhythms. The human body perceives stressors as threats and mobilizes all of its resources to face them. Recurrent incidents of stress can also cause heart attack, due to the increase of the heart rate (Stress.org).

Anxiety is, in general terms, the unpleasant

feeling of worrying, fear and uneasiness when a

perceived threat is present (Lox 1992). It is

correlated with many other disorders such as attention-deficit hyperactivity disorder, oppositional defiant disorder, and obsessive-compulsive disorder. Under certain circumstances, anxiety can be regarded as a mental disorder called generalized anxiety disorder, characterized by disproportionate, uncontrollable and irrational worry in everyday activities (Rowa et al. 2008).

The detection of stress and anxiety in their early stages turns out to be of great significance, especially if achieved without the excessive use of sensors or other monitoring equipment, which may by themselves cause extra stress or anxiety in the individual. Anxiety and stress are reflected in the

human face, similarly to emotional states, where

global categories of facial expressions of emotions

exist, overcoming cultural differences (Ekman et al.

2003). Hence, facial expression recognition can be a

useful non-invasive technique for studying anxiety

and stress. A preliminary study towards this

direction has already been presented in (Chiarugi et

al. 2013).

Facial expressions are among the most significant parts of non-verbal communication of human emotions. Several studies have been published that use facial expressions for the recognition of the six basic emotions (Ekman et al. 2002), but only a few studies report approaches to stress and anxiety recognition.

In this paper it is assumed that facial expressions

for different psycho-physical statuses can be

characterised through compositions of different

facial signs, such as eye blinking, trembling of lips, etc. The scope is to review the state of the art in facial expression analysis methods and to give insights towards a non-invasive assessment of well-being, through the analysis of facial features or expressions for stress and anxiety.

Chiarugi F., Iatraki G., Christinaki E., Manousos D., Giannakakis G., Pediaditis M., Pampouchidou A., Marias K. and Tsiknakis M.

Facial Signs and Psycho-physical Status Estimation for Well-being Assessment.

DOI: 10.5220/0004934405550562

In Proceedings of the International Conference on Health Informatics (SUPERHEAL-2014), pages 555-562

ISBN: 978-989-758-010-9

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

2 FACIAL SIGNS OF STRESS AND ANXIETY

Symptoms of stress can be linked with fluctuations

either in physiological (e.g. heart rate, blood

pressure, galvanic skin response) or in physical

measures, as well as with facial features. In fact,

gaze spatial distribution, saccadic eye movement,

pupil diameter increase and high blink rates carry

information that indicates increased stress levels

(Sharma et al. 2012). Additionally, jaw clenching,

grinding teeth, trembling of lips, and frequent

blushing are also signs of stress (Stress.org).

Lerner et al. (Lerner et al. 2007), in their experiment, induced stress in their subjects by assigning them difficult mental arithmetic tasks. With the help of the EMotional Facial Action Coding System (Friesen et al. 1983) they coded facial expressions of fear, anger and disgust. Their results showed that anger and fear correlate well with action units (AUs, see Section 3) linked to upper-face areas. They found anger and disgust to be negatively correlated with cardiovascular and cortisol stress responses. Fear, on the contrary, can be directly associated with these physiological signals.

Anxiety affects the total psycho-emotional

human state triggering both psychological and

physical symptoms. It is considered a composite

feeling that is affected mainly by fear (Harrigan et

al. 1996). Therefore, when individuals are

experiencing anxiety, facial signs of fear would be

expected. In addition, it is argued that anxiety and fear cannot be separated by psychometric means alone (Perkins et al. 2012).

Although facial signs of anxiety can be ambiguous and the literature is not yet consistent, the main effects of anxiety on the human face are considered to be reddening, lip deformations, and eye blinking.

Further facial symptoms include strained face, facial

pallor, dilated pupils, and eyelid twitching

(Hamilton 1959). Eye blink rate has also been correlated with an anxious personality (Zhang et al. 2006). Accordingly, eyelid response was found to differentiate anxious and non-anxious groups (Huang et al. 1997). Finally, Simpson et al. (Simpson et al. 1971) found a significant effect on pupil size as a result of audience anxiety.

3 FACIAL PARAMETER REPRESENTATION SYSTEMS

Over the years, various measurement systems for

facial parameters have been proposed, the most

remarkable of which are the facial action coding

system (FACS) (Ekman et al. 2002), the facial

animation parameters (FAPs) (Pandzic et al. 2003),

the facial expression spatial charts (Madokoro et al.

2010) and the maximally discriminative facial

movement coding system (MAX) (Izard 1979).

FACS is a comprehensive system, standardizing

sets of muscle movements known as action units

(AUs) that produce facial expressions. It consists of

44 unique AUs and their combinations, each of

which reflects distinct momentary changes in facial

appearance. However, due to its subjective nature, FACS may be biased and image annotation is time-consuming (Hamm et al. 2011).

The next coding system is defined by the Moving Picture Experts Group in the formal standard ISO/IEC 14496 (MPEG-4). The MPEG-4 standard

supports facial animation by providing 66 low-level

“FAPs”. The FAPs represent a complete set of facial

actions, along with head motion, tongue, eye and

mouth control, which deform a face model from its

neutral state. The FAP value indicates the magnitude

of the deformation caused on the model.
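As a minimal sketch of how a FAP value can express the magnitude of a deformation, the snippet below measures the displacement of a single feature point from its neutral position and divides it by a facial animation parameter unit (FAPU). It assumes the MPEG-4 convention that a FAPU is 1/1024 of a reference distance measured on the neutral face (here the mouth-nose separation). The function names and the pixel coordinates are hypothetical and serve only as illustration.

```python
import numpy as np

def fapu(reference_distance: float) -> float:
    """One FAPU, assumed to be 1/1024 of a neutral-face reference distance."""
    return reference_distance / 1024.0

def fap_value(neutral_pt, current_pt, axis, unit):
    """Signed displacement of a feature point along `axis`, expressed in FAPUs."""
    displacement = np.asarray(current_pt, dtype=float) - np.asarray(neutral_pt, dtype=float)
    return float(np.dot(displacement, axis) / unit)

# Hypothetical pixel coordinates (x, y), with y pointing downwards.
mouth_nose_separation = 40.0          # measured on the neutral frame, in pixels
mns = fapu(mouth_nose_separation)     # one unit = 0.0390625 px
lip_neutral = (100.0, 200.0)
lip_current = (100.0, 205.0)          # the lip point moved 5 px downwards

fap = fap_value(lip_neutral, lip_current, axis=np.array([0.0, 1.0]), unit=mns)
print(fap)  # 128.0
```

Normalising by a distance taken from the same face makes the value comparable across subjects and image scales, which is the reason for expressing displacements in units rather than in pixels.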

Facial expression spatial charts is a system able

to incorporate intensity levels of facial expression

organized as happiness, anger and sadness

quadrants. It was applied to represent stress levels

and profiles (Madokoro et al. 2010).

MAX is a system that describes selected changes

in facial expressions in infants. It assumes continuity

of emotion expressions from infancy through

adulthood and identifies facial expressions

hypothesized to be indexes of discrete emotion in

infants (Izard 1979).

4 FACIAL FEATURE EXTRACTION METHODS

In order to evaluate facial expressions for emotion or

psycho-physical status recognition, specific facial

features need to be extracted. Stable facial features

are characteristics of the face like lips, mouth, eyes

and furrows that have become permanent with age

and may be deformed due to facial expressions.

Transient facial features are features not present at

rest, which appear with facial expressions as a result

of feelings or emotional states mainly in regions

surrounding the mouth, the eyes and the cheeks.

There are various methods in the literature,

extracting facial features towards facial expression

recognition. Most of them can be applied either to

the whole face (holistic approach) or to specific

regions of interest like mouth, nose or eyes (local

approach). Although the holistic approach provides a

complete picture of facial information, in cases

where a facial expression affects only specific

regions, a local approach gives more detailed and

distinguishable information. Facial feature extraction methods can be categorised as muscle/geometric based, model/appearance based, motion based, and hybrid methods that combine them (Figure 1).

Figure 1: Categorization of facial features extraction

methods.

4.1 Muscle based Methods

Muscle/geometric based techniques of feature

extraction estimate facial muscle deformation from

videos and images. A facial expression results from changes in one or more facial features caused by the contraction of the facial muscles. Facial features (eyes, eyebrows, nose and mouth) change either their motion or position, or their geometric characteristics and shape.

In this context, dependencies between AUs, like

“inner brow raiser” and “outer brow raiser”, can be

exploited (Tong et al. 2010). Hence, the presence or

absence of either AU can help to infer the state of

the other AU, under ambiguous situations.

Furthermore, expert systems of emotion recognition

from facial expression using distance and geometric

features can be developed (Pantic et al. 2000).

In another study (Aleksic et al. 2006) FAPs have

been utilized as facial features, extracted by the

active contour method. In the FAP extraction

process the outer-lip and eyebrow contours are

tracked for each frame and compared to the

corresponding contours of the neutral frame of the

sequence in order to calculate FAPs in terms of

facial animation parameter units. In another

approach a landmark template is created in order to

extract geometric features of a test face (Hamm et al.

2011). The face is aligned to the template by

similarity transformations to suppress intra-subject

head pose variations and inter-subject geometric

differences.
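The alignment of a face to a landmark template by a similarity transformation can be sketched as a least-squares fit of scale, rotation and translation. The snippet below is an illustrative implementation of that general idea (an SVD-based Procrustes fit), not the procedure of the cited study; the landmark coordinates and function names are hypothetical.

```python
import numpy as np

def similarity_align(src, dst):
    """Least-squares scale, rotation and translation mapping src landmarks onto dst."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    cov = src_c.T @ dst_c                         # 2x2 cross-covariance (unnormalised)
    U, S, Vt = np.linalg.svd(cov)
    sign = np.sign(np.linalg.det(Vt.T @ U.T))     # guard against reflections
    D = np.diag([1.0, sign])
    R = Vt.T @ D @ U.T
    scale = (S * np.diag(D)).sum() / (src_c ** 2).sum()
    t = mu_dst - scale * (R @ mu_src)
    return scale, R, t

def apply_similarity(points, scale, R, t):
    """Apply the fitted transform to an (N, 2) array of points."""
    return scale * np.asarray(points, dtype=float) @ R.T + t

# Hypothetical template landmarks and a rotated, scaled, shifted copy of them.
template = np.array([[30.0, 40.0], [70.0, 40.0], [50.0, 60.0], [50.0, 80.0]])
angle = np.deg2rad(30.0)
rot = np.array([[np.cos(angle), -np.sin(angle)],
                [np.sin(angle),  np.cos(angle)]])
face = 1.5 * template @ rot.T + np.array([12.0, -7.0])

scale, R, t = similarity_align(face, template)
aligned = apply_similarity(face, scale, R, t)
print(np.allclose(aligned, template))  # True
```

After such an alignment, the residual differences between the aligned landmarks and the template reflect shape changes rather than head pose or face size.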

4.2 Model based Methods

Model (appearance) based methods depend on the

general appearance of the face or/and specific

regions (skin texture, wrinkles, furrows etc.) for

fitting 2D or 3D face models. The best known are

CANDIDE (Rydfalk 1978), the anatomical and

physical model of the face (Terzopoulos et al. 1993),

the canonical wire-mesh face model (Pighin et al.

2006) and the wireframe face model (Wiemann

1976).

Active appearance models (AAMs) (Cootes et al.

2001) provide a consistent representation of the

shape and appearance of the face and have been used

for facial expression classification (Hamilton 1959).

Active shape models (ASMs) were used to track 2D

and 3D facial information from a model in order to

quantify facial actions and subsequently expressions

(Tsalakanidou et al. 2010).

Additionally, facial texture information and

shape analysis using a 3D model was performed for

feature extraction (Boashash et al. 2003). The fusion

of texture and shape information was applied in

(Feng et al. 2005) providing good results. Many

studies have employed local binary patterns (LBP)

(Kämäräinen et al. 2011; Shan et al. 2009) in

extracting facial texture information. LBP followed by linear programming (Eisert et al. 1997), a support vector machine (SVM) (Ojala et al. 1996), or kernel discriminant isomap (Zhao et al. 2011) has been used in facial expression recognition with remarkable results.
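To make the LBP texture descriptor concrete, the following sketch computes the basic 8-neighbour LBP code of every interior pixel and the normalised 256-bin histogram that would typically be passed to a classifier. It is a simplified illustration assuming a 3x3 neighbourhood and a greater-or-equal comparison; the cited studies may use other variants (for example uniform patterns or region-wise histograms), and the function names are hypothetical.

```python
import numpy as np

def lbp_codes(gray):
    """Basic 3x3 LBP code for each interior pixel of a 2D grey-level image."""
    gray = np.asarray(gray, dtype=float)
    h, w = gray.shape
    center = gray[1:-1, 1:-1]
    # Neighbour offsets (dy, dx), clockwise starting from the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.uint8)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = gray[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.uint8) * np.uint8(1 << bit)
    return codes

def lbp_histogram(gray):
    """Normalised 256-bin histogram of LBP codes, usable as a texture feature vector."""
    codes = lbp_codes(gray)
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()

# A horizontal intensity ramp: every interior pixel receives the same code.
ramp = np.tile(np.arange(5.0), (5, 1))
print(np.unique(lbp_codes(ramp)))  # [62]
```

In a local approach, one such histogram would be computed per facial region (for example around the eyes and the mouth) and the histograms concatenated into a single feature vector.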

4.3 Motion based Methods

Motion based methods utilize features derived from

movements, extracted from image sequences of

either facial components or the whole face.

Optical flow (Mase et al. 1991) is the method

adopted by the majority of facial motion analysis

systems. An extension of FACS, the FACS+, which

enables the coding of expressions also by means of

motion, was established using this method along

with geometric dynamic models (Essa et al. 1997).

A local optical flow approach for facial features or

expression recognition was used in another study

(Rosenblum et al. 1996).
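The principle behind a local optical flow estimate can be sketched with a least-squares (Lucas-Kanade style) solution of the brightness constancy equation over one image patch. This is a generic illustration under the assumption of small motion and a single displacement per patch, not the method of any of the cited systems; the synthetic blob and the function names are hypothetical.

```python
import numpy as np

def lucas_kanade_patch(frame0, frame1):
    """Estimate one displacement (dx, dy) in pixels for a whole patch."""
    f0 = np.asarray(frame0, dtype=float)
    f1 = np.asarray(frame1, dtype=float)
    # Spatial derivatives of the averaged frames (axis 0 is y, axis 1 is x).
    Iy, Ix = np.gradient((f0 + f1) / 2.0)
    It = f1 - f0                                   # temporal derivative
    # Brightness constancy: Ix*dx + Iy*dy + It = 0, solved in the least-squares sense.
    A = np.stack([Ix.ravel(), Iy.ravel()], axis=1)
    b = -It.ravel()
    flow, *_ = np.linalg.lstsq(A, b, rcond=None)
    return flow

def gaussian_blob(size, cx, cy, sigma=6.0):
    """Synthetic smooth patch used as a stand-in for a facial region."""
    y, x = np.mgrid[0:size, 0:size]
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))

frame0 = gaussian_blob(41, 20.0, 20.0)
frame1 = gaussian_blob(41, 20.5, 20.2)             # blob moved by (0.5, 0.2) px
dx, dy = lucas_kanade_patch(frame0, frame1)
print(round(dx, 1), round(dy, 1))                  # close to the true shift (0.5, 0.2)
```

Applied to patches around the mouth or the eyes in consecutive frames, the resulting displacement vectors form the kind of motion feature that motion based methods feed to a classifier.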

As an alternative, Gabor wavelets can be

employed in facial motion analysis. A fully

automatic system based on motion analysis was

proposed in (Littlewort et al. 2006) with automatic

face detection, Gabor representation and SVM

classification.

4.4 Hybrid Methods

Hybrid methods use features from local and holistic

approaches in order to improve the results. These

approaches reflect the human perception that utilizes

both local face signs and the whole face to recognize

a facial expression.

A combination of muscle based and appearance

based methods was presented by Zhang et al. (Zhang

et al. 1998) and a combination of appearance based

methods using model and shape perspectives by

Kotsia et al. (Kotsia et al. 2008). Another promising

perspective is to combine motion information with

spatial texture information (Donato et al. 1999).

5 FACIAL EXPRESSION CODING

5.1 Dimensionality Reduction of Facial Features

Automatic facial expression recognition systems aim

to classify transient and permanent facial features

into the desired classes. The classification

performance mainly depends on the technique of

feature selection and whether appropriate

discriminant features were extracted reliably and

accurately. An important factor affecting classifier performance is the amount of data to be analysed. In most cases, the feature extraction methods produce a large set of features, which increases the computational cost of classification. Thus, dimensionality reduction methods must be used to project those data into a lower dimensional feature subspace while still obtaining robust and accurate classification.

The most common techniques for data reduction are principal component analysis (PCA), independent component analysis (ICA) and linear discriminant analysis (LDA). PCA is a well-established technique for dimensionality reduction

that performs data mapping from a higher

dimensional space to a lower dimensional space

(Jolliffe 2005). A generalization of PCA is ICA, in

which the goal is to find a linear representation of

data so that the reduced components are statistically

independent, or as independent as possible

(Hyvarinen 1999). LDA searches for those vectors

in the underlying space that best discriminate among

classes in order to provide a small set of features that

carry the most relevant information for classification

purposes (Etemad et al. 1997).
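Of the three techniques, PCA is the simplest to illustrate. The sketch below projects feature vectors onto their leading principal components using a singular value decomposition of the centred data. The synthetic data and the function names are assumptions made for the example; ICA and LDA are not shown.

```python
import numpy as np

def pca_fit(X, n_components):
    """Return the mean, the leading principal axes and their explained variance ratios."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    _, S, Vt = np.linalg.svd(X - mean, full_matrices=False)
    explained = S ** 2 / (S ** 2).sum()
    return mean, Vt[:n_components], explained[:n_components]

def pca_transform(X, mean, components):
    """Project feature vectors onto the retained principal axes."""
    return (np.asarray(X, dtype=float) - mean) @ components.T

# Synthetic 3D features that vary almost entirely along one direction.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
direction = np.array([3.0, 4.0, 0.0]) / 5.0
X = np.outer(t, direction) + 0.01 * rng.normal(size=(200, 3))

mean, components, ratio = pca_fit(X, n_components=1)
Z = pca_transform(X, mean, components)
print(Z.shape)          # (200, 1)
print(ratio[0] > 0.99)  # True
```

PCA keeps the directions of largest variance without using class labels, whereas LDA, as described above, uses the labels to find directions that separate the classes.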

5.2 Classification of Facial Features

In a recent overview (De la Torre et al. 2011) of

emotion detection algorithms, the classification

techniques were divided into supervised and

unsupervised approaches. Some of the proposed

supervised learning methods are hidden Markov

models, dynamic Bayesian networks and naive

Bayes classifiers. The main proposed unsupervised

learning methods are geometric-invariant clustering,

aligned cluster analysis and AAMs to learn the

dynamics of person-specific facial expression

models.

According to Whitehill et al. (Whitehill et al.

2013), the main kinds of approaches for converting

the extracted features into a facial expression class

are machine learning classifiers and rule-based

systems such as the one proposed by Pantic et al.

(Pantic et al. 2006). The main machine learning

classifiers can be linear or multiclass and include

SVMs, AdaBoost or GentleBoost, k-nearest

neighbours, multivariate logistic regression and

multilayer neural networks.
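As an illustration of one of the listed classifiers, the following sketch implements a plain k-nearest-neighbours vote on feature vectors. The two-dimensional features and the labels "neutral" and "stressed" are hypothetical toy data chosen only to show the mechanism; they are not taken from any dataset discussed in this paper.

```python
from collections import Counter

import numpy as np

def knn_predict(train_X, train_y, query, k=3):
    """Label a query vector by majority vote among its k nearest training vectors."""
    train_X = np.asarray(train_X, dtype=float)
    dists = np.linalg.norm(train_X - np.asarray(query, dtype=float), axis=1)
    nearest = np.argsort(dists)[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical, already reduced 2D feature vectors with toy labels.
train_X = np.array([[0.10, 0.20], [0.20, 0.10], [0.15, 0.25],
                    [0.90, 0.80], [0.80, 0.90], [0.85, 0.75]])
train_y = ["neutral", "neutral", "neutral", "stressed", "stressed", "stressed"]

print(knn_predict(train_X, train_y, [0.82, 0.85]))  # stressed
print(knn_predict(train_X, train_y, [0.12, 0.18]))  # neutral
```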

In general, as mentioned before, the choice of the

classifier is strictly correlated to the selection of

features since the classification performance

depends both on the extraction of features with high

discriminative capability and on the choice of an

appropriate highly discriminant classifier.

5.3 Coding Classes into Psycho-physical Statuses

Considerable research has endeavoured to classify human emotions into specific categories. The early philosophy of mind posited that all emotions can be categorized into basic classes (e.g. pleasure and pain), but since then more in-depth theories have been put forth (Handel 2011).

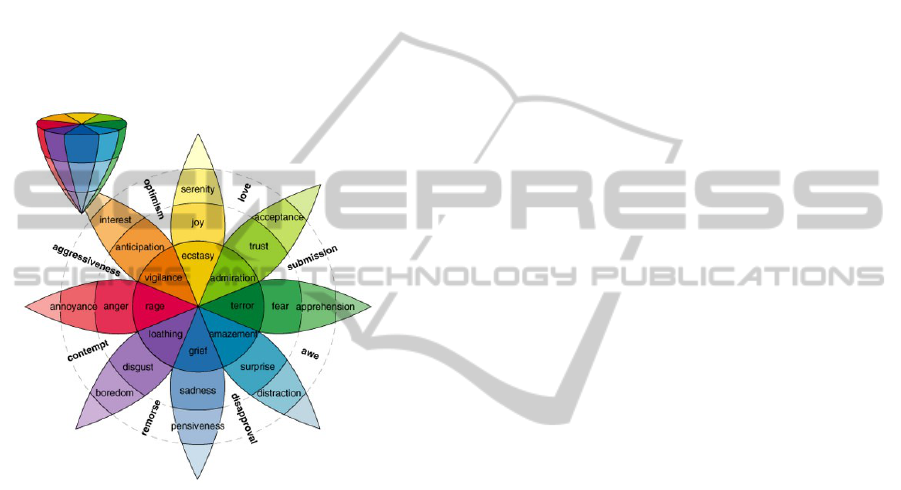

A conception of emotions, called the “wheel of

emotions”, demonstrates how different emotions

can blend into one another and create new emotions

(Plutchik 2001). In this study, eight basic and eight

advanced emotions, classified as opposite emotions,

define more complex emotions based on their

differences in intensities (Figure 2). A theory

consisting of a tree type structured list (including

over 100 emotions) leading to the classification of

deeper emotions as primary, secondary and tertiary

is described in (Parrott 2001).

Facial expressions can show aspects of internal psychology, reflecting emotions such as delight, anger, sorrow and pleasure (Kazuhito 2013). Stress

and anxiety might be measured by using a

combination of the emotions described in (Plutchik

2001; Parrott 2001). Based on their categorization of

emotions it is possible to assess secondary or tertiary

emotions (such as anxiety) derived from the

primary.

Figure 2: Plutchik’s wheel of emotion.

Action units can be divided into primary (AUs or

AU combinations that can be clearly classified to

one of the six basic emotions without ambiguities)

and auxiliary (AUs that can be only additively

combined with primary AUs to provide

supplementary support to the facial expression

classification). Their combination formulates a facial

expression. Interpretation of AUs related to the

psycho-physical statuses of anxiety and stress does

not appear explicitly in the literature, but as aspects

of fear. However, fear seems to vary among studies

as it can be considered as the combination of various

non-identical AU subsets (Ekman et al. 2003; Lewis

et al. 2008; Zhang et al. 2005).
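The distinction between primary and auxiliary AUs can be sketched as a small rule-based coder: an emotion becomes a candidate only when all of its primary AUs are observed, and auxiliary AUs merely add supporting evidence. The AU sets below are illustrative assumptions loosely based on commonly quoted prototypes, not the sets used in the cited studies (which, as noted above, differ from one another for fear), and the scoring weights are arbitrary.

```python
# Hypothetical AU sets, for illustration only.
PRIMARY = {
    "happiness": {6, 12},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 20, 26},
}
AUXILIARY = {
    "happiness": {25},
    "fear": {7, 25},
}

def code_expression(observed_aus):
    """Return the best supported emotion for a set of observed AUs, or None."""
    observed = set(observed_aus)
    scores = {}
    for emotion, primary in PRIMARY.items():
        if primary <= observed:                      # every primary AU must be present
            support = AUXILIARY.get(emotion, set()) & observed
            scores[emotion] = len(primary) + 0.5 * len(support)
    return max(scores, key=scores.get) if scores else None

print(code_expression({1, 2, 4, 5, 20, 25, 26}))  # fear
print(code_expression({6, 12}))                   # happiness
print(code_expression({7}))                       # None
```

In the first call both surprise and fear have all their primary AUs present; fear wins because it matches more primary AUs and gains auxiliary support, while an auxiliary AU on its own (third call) yields no classification.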

5.4 Assessment through Available Datasets

Annotated datasets for assessing the psycho-physical

statuses are necessary to reach the objective of any

related study, which in the current research is the

identification of stress and anxiety. The majority of

existing datasets focus on basic emotions (McIntyre

2010; van der Schalk et al. 2011). “MMI” is an exhaustive database of 79 series of expressions based on combinations of AUs (Valstar et al. 2010).

Studies examining depression severity (AVEC2013)

are also of great interest, since depression is closely

related to anxiety. Few approaches have been made

for stress assessment (Sharma et al. 2014). To the

best of the authors’ knowledge, none of the existing

datasets covers entirely the needs of the current

research.

The different expressions required for the dataset creation can be obtained either by asking the subjects to fake the emotion or by inducing it in the subjects. Emotion can be elicited by showing videos

as affective stimuli (Koelstra et al. 2012; McIntyre

2010; Soleymani et al. 2012). Protocols like the

“Trier Social Stress Test” (Kirschbaum et al. 1993)

can be used for the induction of stress.

For the image acquisition, the use of a colour

camera is common (Koelstra et al. 2012; McIntyre

2010; Valstar et al. 2010), while extending to

multiple views is becoming more and more popular

(Soleymani et al. 2012; van der Schalk et al. 2011)

in an effort to achieve pose invariance. Infrared

imaging has also been employed in stress assessment

(Sharma et al. 2014). Image resolutions used for the

acquisitions include 640x480 and 720x576, while

frame rates range from 25 to 60 fps (Koelstra et al.

2012; Sharma et al. 2014; Soleymani et al. 2012;

Valstar et al. 2010; van der Schalk et al. 2011).

Illumination in most cases is controlled with only

one case relying on natural lighting (Valstar et al.

2010).

Facial signs indicating stress and anxiety, as explained in detail in Section 2, include subtle details in eye and lip movements. Thus, it is important to have a sufficiently high frame rate to allow the accurate evaluation of the eye blinking rate and lip trembling (25 fps is considered satisfactory), and a resolution higher than 800x600, with the face in frontal position taking up about one fourth of the screen, in order to capture pupil dilation. Good illumination conditions are also of high importance, since skin colour information is valuable.
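The dependence of blink rate estimation on the frame rate can be illustrated with a short sketch that counts blinks in a per-frame eye-openness signal. It assumes that such a signal (for example a normalised eyelid distance between 0 and 1) has already been extracted from the video; the threshold value, the synthetic signal and the function name are hypothetical.

```python
import numpy as np

def blink_rate_per_minute(eye_openness, fps, closed_threshold=0.2):
    """Count open-to-closed transitions and convert them to blinks per minute."""
    signal = np.asarray(eye_openness, dtype=float)
    closed = signal < closed_threshold
    # A blink onset is a frame where the eye is closed and the previous frame was open.
    onsets = np.count_nonzero(closed[1:] & ~closed[:-1])
    return onsets * 60.0 * fps / len(signal)

# Ten seconds of synthetic video at 25 fps containing three 4-frame blinks.
fps = 25
openness = np.ones(10 * fps)
for start in (50, 120, 200):
    openness[start:start + 4] = 0.05

print(blink_rate_per_minute(openness, fps))  # 18.0
```

At 25 fps a blink lasting a fraction of a second covers only a handful of frames, as in the 4-frame blinks above, so a markedly lower frame rate would risk missing short blinks altogether.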

6 DISCUSSION AND CONCLUSIONS

This paper gives an account of the facial signs of

stress and anxiety, as well as of methodologies for

the extraction of these signs. Various ways of

characterizing facial expressions as compositions of

these facial signs are described. Furthermore, the

coding of facial expressions into psycho-physical

statuses is presented. Finally, existing datasets and the requirements for creating specifically annotated datasets are examined with respect to their suitability for the assessment of stress and anxiety.

It is worth noting that, while the relations

between basic emotions and facial expressions are

strongly consolidated in the literature, a definite

understanding of the relations existing among

individuals' psycho-physical statuses and facial

expressions is still lacking and is an open field for

further scientific investigation. However, there are

promising research directions that can be exploited

in order to non-invasively estimate the psycho-

physical states under investigation with respect to

well-being assessment.

Considering the facial expression of stress and

anxiety it has been identified that gaze spatial

distribution, saccadic eye movement, pupil dilation,

as well as high blink rates carry information that

indicates increased stress levels (Sharma et al.

2012). Jaw clenching, grinding teeth, trembling of

lips, and frequent blushing are also useful signs of

stress (Stress.org). For anxiety, pupil dilation/size,

eye blinking, strained face, facial colour

(reddening/pallor), lip deformations, eyelid

twitching and response have been reported as facial

signs of significant interest. These signs can be

represented through existing well-assessed

representation systems such as FACS and FAPs with

the addition of colour information.

As reflected in the wide research area of facial

expression analysis, the reported feature extraction

methods produce satisfactory results (De la Torre et

al. 2011) that, in some cases, can be enhanced using

hybrid methods. The selection criteria are mainly

defined by the application environment. Moreover, the choice of facial features dictates the technique required for their extraction, but is also a function of image/video data quality and resource constraints of the domain. In a similar manner, the choice of the

classifier is strictly correlated to the selection of

features since the classification performance

depends both on the extraction of features with high

discrimination capability and on the choice of an

appropriate highly discriminant classifier.

Stress and anxiety might be measured by using a

combination of the basic emotions, such as those

defined by Plutchik (Plutchik 2001) and Parrott

(Parrott 2001). A possible approach is to assess

anxiety and stress as secondary or tertiary emotions

derived from the primary.

Finally, to the best of the authors’ knowledge,

none of the existing datasets covers completely the

needs of the current research related to stress and

anxiety facial expression analysis. For this reason,

some requirements for the acquisition of a

specifically targeted dataset have also been provided

in this study.

ACKNOWLEDGEMENTS

This work was performed in the framework of the

FP7 Specific Targeted REsearch Project

SEMEOTICONS (SEMEiotic Oriented Technology

for Individual’s CardiOmetabolic risk self-

assessmeNt and Self-monitoring) partially funded by

the European Commission under Grant Agreement

611516.

REFERENCES

Aleksic, P.S. and Katsaggelos, A.K., 2006. Automatic

facial expression recognition using facial animation

parameters and multistream HMMs. IEEE Trans on

Information Forensics and Security, 1(1): 3-11.

AVEC2013. 3rd International Audio/Visual Emotion Challenge and Workshop. [Online] Available at: http://sspnet.eu/avec2013/.

Boashash, B., Mesbah, M. and Colditz, P.G., 2003. Time-

frequency detection of EEG abnormalities. In Time-

frequency signal analysis and processing: A

comprehensive reference. Elsevier Science, pp. 663-

670.

Chiarugi, F. et al., 2013. A virtual individual’s model

based on facial expression analysis: a non-intrusive

approach for wellbeing monitoring and self-

management. 13th IEEE International Conference on

BioInformatics and BioEngineering.

Cootes, T.F., Edwards, G.J. and Taylor, C.J., 2001. Active

appearance models. IEEE Trans Pattern Anal Mach

Intell, 23(6): 681-685.

De la Torre, F. and Cohn, J.F., 2011. Facial expression

analysis. In Visual Analysis of Humans. Springer

London, pp. 377-409.

Donato, G., Bartlett, M.S., Hager, J.C., Ekman, P. and

Sejnowski, T.J., 1999. Classifying facial actions. IEEE

Trans Pattern Anal Mach Intell, 21(10): 974-989.

Eisert, P. and Girod, B., 1997. Facial expression analysis

for model-based coding of video sequences. ITG

FACHBERICHT: 33-38.

Ekman, P. and Friesen, W.V., 2003. Unmasking the face:

A guide to recognizing emotions from facial clues.

ISHK.

Ekman, P., Friesen, W.V. and Hager, J.C., 2002. Facial

action coding system. The manual on CD-ROM.

Essa, I.A. and Pentland, A.P., 1997. Coding, analysis,

interpretation, and recognition of facial expressions.

IEEE Trans Pattern Anal Mach Intell, 19(7): 757-763.

Etemad, K. and Chellappa, R., 1997. Discriminant

analysis for recognition of human face images. JOSA

A, 14(8): 1724-1733.

Feng, X., Pietikainen, M. and Hadid, A., 2005. Facial

expression recognition with local binary patterns and

linear programming. Pattern Recognition And Image

Analysis C/C of Raspoznavaniye Obrazov I Analiz

Izobrazhenii, 15(2): 546.

Friesen, W.V. and Ekman, P., 1983. Emfacs-7: emotional

facial action coding system. Unpublished manuscript,

University of California at San Francisco, 2.

Hamilton, M., 1959. The assessment of anxiety-states by

rating. British Journal of Medical Psychology, 32(1):

50-55.

Hamm, J., Kohler, C.G., Gur, R.C. and Verma, R., 2011.

Automated Facial Action Coding System for dynamic

analysis of facial expressions in neuropsychiatric

disorders. J Neurosci Methods, 200(2): 237-256.

Handel, 2011. The Emotion Machine. (Online) Available

at: http://www.theemotionmachine.com/classification-

of-emotions.

Harrigan, J.A. and O'Connell, D.M., 1996. How do you

look when feeling anxious? Facial displays of anxiety.

Personality and Individual Differences, 21(2): 205-

212.

Huang, C.L. and Huang, Y.M., 1997. Facial expression

recognition using model-based feature extraction and

action parameters classification. Journal of Visual

Communication and Image Representation, 8(3): 278-

290.

Hyvarinen, A., 1999. Survey on independent component

analysis. Neural computing surveys, 2(4): 94-128.

Izard, C.E., 1979. The maximally discriminative facial

movement coding system (MAX). Newark: University

of Delaware Instructional Resources Center.

Jolliffe, I., 2005. Principal component analysis. Wiley

Online Library.

Kämäräinen, J.-K., Hadid, A. and Pietikäinen, M., 2011.

Local representation of facial features, Handbook of

Face Recognition. Springer, pp. 79-108.

Kazuhito, M.O.K., 2013. Analysis of psychological stress

factors and facial parts effect on international facial

expressions. 3rd International Conference on Ambient

Computing, Applications, Services and Technologies,

pp. 10.

Kirschbaum, C., Pirke, K.M. and Hellhammer, D.H.,

1993. The ‘Trier Social Stress Test’–a tool for

investigating psychobiological stress responses in a

laboratory setting. Neuropsychobiology, 28(1-2): 76-

81.

Koelstra, S. et al., 2012. DEAP: A database for emotion analysis; using physiological signals. IEEE Trans on Affective Computing, 3(1): 18-31.

Kotsia, I., Buciu, I. and Pitas, I., 2008. An analysis of

facial expression recognition under partial facial image

occlusion. Image and Vision Computing, 26(7): 1052-

1067.

Lerner, J.S., Dahl, R.E., Hariri, A.R. and Taylor, S.E.,

2007. Facial expressions of emotion reveal

neuroendocrine and cardiovascular stress responses.

Biol Psychiatry, 61(2): 253-260.

Lewis, M.D., Haviland-Jones, J.M. and Barrett, L.F.,

2008. Handbook of emotions. Guilford Press.

Littlewort, G., Bartlett, M.S., Fasel, I., Susskind, J. and

Movellan, J., 2006. Dynamics of facial expression

extracted automatically from video. Image and Vision

Computing, 24(6): 615-625.

Lox, C.L., 1992. Perceived threat as a cognitive

component of state anxiety and confidence. Perceptual

and Motor Skills, 75(3): 1092-1094.

Madokoro, H. and Sato, K., 2010. Estimation of

psychological stress levels using facial expression

spatial charts. 2010 IEEE International Conference on

Systems Man and Cybernetics (SMC): 2814-2819.

Mase, K. and Pentland, A., 1991. Automatic lipreading by

optical-flow analysis. Systems and Computers in

Japan, 22(6): 67-76.

McIntyre, G.J., 2010. The computer analysis of facial

expressions: on the example of depression and

anxiety. PhD Thesis. College of Engineering and

Computer Science, The Australian National

University, Canberra.

Ojala, T., Pietikainen, M. and Harwood, D., 1996. A

comparative study of texture measures with

classification based on feature distributions. Pattern

Recognition, 29(1): 51-59.

Pandzic, I.S. and Forchheimer, R., 2003. MPEG-4 facial

animation: the standard, implementation and

applications. Wiley.

Pantic, M. and Patras, I., 2006. Dynamics of facial

expression: Recognition of facial actions and their

temporal segments from face profile image sequences.

IEEE Trans on Systems Man and Cybernetics Part B-

Cybernetics, 36(2): 433-449.

Pantic, M. and Rothkrantz, L.J.M., 2000. Expert system

for automatic analysis of facial expressions. Image and

Vision Computing, 18(11): 881-905.

Parrott, W., 2001. Emotions in social psychology:

Essential readings. Psychology Press.

Perkins, A.M., Inchley-Mort, S.L., Pickering, A.D., Corr,

P.J. and Burgess, A.P., 2012. A facial expression for

anxiety. J Pers Soc Psychol, 102(5): 910-924.

Pighin, F., Hecker, J., Lischinski, D., Szeliski, R. and

Salesin, D.H., 2006. Synthesizing realistic facial

expressions from photographs. ACM SIGGRAPH 2006

Courses. ACM, 19.

Plutchik, R., 2001. The nature of emotions - Human

emotions have deep evolutionary roots, a fact that may

explain their complexity and provide tools for clinical

practice. American Scientist, 89(4): 344-350.

Rosenblum, M., Yacoob, Y. and Davis, L.S., 1996.

Human expression recognition from motion using a

radial basis function network architecture. IEEE Trans

on Neural Networks, 7(5): 1121-1138.

Rowa, K. and Antony, M.M., 2008. Generalized anxiety

disorder. Psychopathology: History, Diagnosis, and

Empirical Foundations: 78.

Rydfalk, M., 1978. CANDIDE: A Parameterized Face.

Linkoping Image Coding Group.

Shan, C.F., Gong, S.G. and McOwan, P.W., 2009. Facial

expression recognition based on local binary patterns:

A comprehensive study. Image and Vision Computing,

27(6): 803-816.

Sharma, N. and Gedeon, T., 2012. Objective measures,

sensors and computational techniques for stress

recognition and classification: A survey. Computer

Methods and Programs in Biomedicine, 108(3): 1287-

1301.

Sharma, N. and Gedeon, T., 2014. Modeling a stress

signal. Applied Soft Computing, 14: 53-61.

Simpson, H.M. and Molloy, F.M., 1971. Effects of

audience anxiety on pupil size. Psychophysiology,

8(4): 491-496.

Soleymani, M., Lichtenauer, J., Pun, T. and Pantic, M.,

2012. A multimodal database for affect recognition

and implicit tagging. IEEE Trans on Affective

Computing, 3(1): 42-55.

Stress.org 50 common signs and symptoms of stress.

(Online) Available at: http://www.stress.org/stress-

effects.

Terzopoulos, D. and Waters, K., 1993. Analysis and

synthesis of facial image sequences using physical and

anatomical models. IEEE Trans Pattern Anal Mach

Intell, 15(6): 569-579.

Tong, Y., Chen, J.X. and Ji, Q., 2010. A unified

probabilistic framework for spontaneous facial action

modeling and understanding. IEEE Trans Pattern Anal

Mach Intell, 32(2): 258-273.

Tsalakanidou, F. and Malassiotis, S., 2010. Real-time

2D+3D facial action and expression recognition.

Pattern Recognition, 43(5): 1763-1775.

Valstar, M. and Pantic, M., 2010. Induced disgust,

happiness and surprise: an addition to the mmi facial

expression database. International Conference in

Language Resources and Evaluation, Workshop on

EMOTION, pp. 65-70.

van der Schalk, S.T., Fischer, A.H. and Doosje, B., 2011.

Moving faces, looking places: validation of the

Amsterdam dynamic facial expression set (ADFES).

Emotion, 11(4): 907-920.

Whitehill, J., Bartlett, M.S. and Movellan, J.R., 2013.

Automatic facial expression recognition. Social

Emotions in Nature and Artifact: 88.

Wiemann, J.M., 1976. Unmasking face. In Ekman, P. and

Friesen, W.V. Journal of Communication, 26(3): 226-

227.

Zhang, Y. and Ji, Q., 2005. Active and dynamic

information fusion for facial expression understanding

from image sequences. IEEE Trans on Pattern

Analysis and Machine Intelligence, 27(5): 699-714.

Zhang, Y.M. and Ji, Q., 2006. Active and dynamic information fusion for multisensor systems with dynamic Bayesian networks. IEEE Trans on Systems Man and Cybernetics Part B-Cybernetics, 36(2): 467-472.

Zhang, Z., Lyons, M., Schuster, M. and Akamatsu, S.,

1998. Comparison between geometry-based and

Gabor-wavelets-based facial expression recognition

using multi-layer perceptron. 3rd IEEE International

Conference on Automatic Face and Gesture

Recognition: 454-459.

Zhao, X.M. and Zhang, S.Q., 2011. Facial expression

recognition based on local binary patterns and kernel

discriminant isomap. Sensors, 11(10): 9573-9588.
