Complex Motion Planning for NAO Humanoid Robot

Walaa Gouda

1

and Walid Gomaa

1,2

1

Computer Science and Engineering, Egypt-Japan University for Science and Technology (E-JUST), Alexandria, Egypt

2

Faculty of Engineering, Alexandria University, Alexandria, Egypt

Keywords:

Whole Body Motion, Humanoid Robot, Complex Dynamic Motions , Robot Kinematics , Recognized Object.

Abstract:

In this paper, we introduce an integrated approach that enables a humanoid robot to plan and robustly execute

whole body motions including stepping over, climbing up or down obstacles as well as climbing up straight

staircase using only onboard sensing. Reliable and accurate sequence of motions for humanoid robots op-

erating in complex indoor environments is a prerequisite for robots to fulfill high level tasks. The design of

complex dynamic motions is achievable only through the use of robot kinematics. Based on the recognized

object from the robot database, using the robot camera, a sequence of actions for avoiding that object is ex-

ecuted. As demonstrated in simulation as well as real world experiments with NAO humanoid, NAO can

reliably execute robustly whole body movements in cluttered, multi-level environments containing objects of

various shapes and sizes.

1 INTRODUCTION

Robots have always been a subject of curiosity for

both generalists and technologists alike. Humanoids,

robots with multiple degrees of freedom, have be-

come popular research platforms as they are con-

sidered to be the future of robotics. The human

like design and locomotion allow humanoid robots to

perform complex motions. This includes balancing,

walking, access different types of terrain, standing

up, step over or onto obstacles, reaching destinations

only accessible by stairs or narrow passages, and to

navigate through cluttered environments without col-

liding with objects. These abilities would make hu-

manoid robots ideal assistants to humans, for instance

in housekeeping or disaster management (Graf et al.,

2009; Maier et al., 2013).

Autonomous obstacle avoidance by stepping over,

onto/down the obstacle, climbing stairs with hu-

manoid robots is a challenging task, since humanoids

typically execute motion commands only inaccurately

(Graf et al., 2009; Maier et al., 2013; Shamsuddin

et al., 2011). This is due to the fact that humanoids

possess only a rough odometry estimate; they might

slip on the ground depending on the ground friction,

and backlash in the joints might occur. Additionally,

the observations of their small and light weighted sen-

sors are inherently affected by noise. This all can lead

to uncertain pose estimates or inaccurate motion exe-

cution (Oßwald et al., 2011).

However, there are reasons that explain why hu-

manoid robots aren’t used frequently in practical ap-

plications. One of these reasons is that humanoids are

expensive in cost, as they consist of complex pieces

of hardware and are manufactured in small numbers

(Maier et al., 2013). Also, many researchers apply

navigation algorithms that represent a humanoid us-

ing wheels instead of legs, but the limitation of this

model is that it does not respect all the navigation ca-

pabilities of humanoid robots and therefore more ap-

propriate approaches are necessary for navigation in

cluttered and multi-level scenarios (Maier et al., 2013;

Hornung et al., 2010; Gouda et al., 2013).

In the beginning, humanoid robotics research fo-

cused on specific aspects like walking, but now cur-

rent systems are more complex. Many humanoid

robots are already equipped with full body control

concepts and advanced sensors like stereo vision,

laser, auditory and tactile sensor systems which is the

essential condition to deal with complex problems,

such as walking and grasping. Motion planning is

a promising way to deal with complex problems, as

planning methods allow the flexibility of different cri-

teria satisfaction. The design of complex dynamic

motions is achievable only through the use of robot

kinematics, which is an analytical study of the motion

of the robot manipulator (Maier et al., 2013; Kucuk

and Bingul, 2006; Gienger et al., 2010).

402

Gouda W. and Gomaa W..

Complex Motion Planning for NAO Humanoid Robot.

DOI: 10.5220/0005051904020409

In Proceedings of the 11th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2014), pages 402-409

ISBN: 978-989-758-040-6

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

More specifically, robot kinematics provide the

transformation from the joint space, where the kine-

matic chains are defined, to the Cartesian space,

where the robot manipulator moves, and vice versa

(Kofinas, 2012). Robot kinematics are quite useful,

because it can be used for planning and executing

movements, as well as calculating actuator forces and

torques. Robot kinematics can be divided into for-

ward and inverse kinematics. The forward kinematics

refers to the use of the kinematics equations of the

robot to compute the position of the end effector from

specified values of the joint parameters (Kucuk and

Bingul, 2006). On the other hand the inverse kine-

matics refers to the use of the kinematics equations

of a robot to determine the joint parameters that pro-

vide a desired position of the end effector. It is easy

to see why kinematics is required in any kind of com-

plex motion design (Kucuk and Bingul, 2006; Kofi-

nas, 2012).

The relationship between forward and inverse

kinematics is illustrated in figure 1. Balancing meth-

ods rely on the ability to calculate the center of mass

of the robot, which is constantly changing as the robot

moves. Finding the center of mass is made possible

only if the exact position and orientation of each part

of the robot in the three dimensional space is known

(Graf et al., 2009).

Figure 1: The schematic representation of forward and in-

verse kinematics.

Humanoid robots performing complex motions

tasks need to plan whole body motions that satisfy

a variety of constraints. As the robot must maintain

its balance, self-collisions and collisions with obsta-

cles in the environment must be avoided and, if pos-

sible, the capability of humanoid robots to step over

or onto objects, navigate in multi-level environment

needs to be taken into account. These constraints and

the high number of degrees of freedom of the hu-

manoid robot make whole body motion planning a

challenging problem (Graf et al., 2009). The main

goal of whole body balancing motion is to generate

and stabilize consistent motions and adapt robot be-

havior to the current situation (AldebaranRobotics,

2014).

In this paper, an integrated whole body motion

planning framework has been developed. The frame-

work enables the robot to robustly execute whole

body balancing sequences of actions, including step-

ping over and climbing up/down obstacles as well as

climbing up straight staircase in a 3D environment,

shown in figure 2. Relying only on the robot on-

board sensors, joint encoders, an efficient whole body

motions planning perform safe motions to robustly

navigate in challenging scenes containing obstacles

on the ground as shown in figure 2. Our approach

determines the appropriate motion that consists of a

sequence of actions according to the detected obsta-

cle using monocular camera and bumper sensors. As

demonstrated in practical experiments with a NAO

humanoid and in a series of simulations experiments

using Webots for NAO humanoid robot, which is a

simulation software for modeling, programming and

simulating robots (Cyberbotics, 2014), our system

leads to robust whole body movements in cluttered,

multi-level environments containing objects of vari-

ous shapes and sizes.

Figure 2: The simulated environment.

The remainder of this paper is structured as fol-

lows. Related work is discussed in the Section II.

Section III describes the humanoid robot, also mo-

tion design and object learning phase used for exper-

imentation are described in this section . Section IV

illustrates the robustness and accuracy of our motion

planning approach in experiments. Finally, Section V

concludes the paper.

2 RELATED WORK

Humanoid motion planning has been studied inten-

sively in the last few years. For instance the ap-

proach presented by (Oßwald et al., 2011) enabled an

equipped NAO humanoid robot with a 2D laser range

finder and a monocular camera, to autonomously

climb up spiral staircases. While (Hornung et al.,

2010) presented a localization method for NAO hu-

manoid robots navigating in arbitrary complex indoor

environments using only onboard sensing. Also the

approach developed by (Nishiwaki et al., 2002) al-

lowed NAO to climb single steps after manually posi-

tioning the robot in front of them without integrating

any sensory information to detect the stairs. Footstep

actions plan to climb staircases consisting of three

steps with HRP-2 is introduced by (Chestnutt et al.,

ComplexMotionPlanningforNAOHumanoidRobot

403

2007). While (Samakming and Srinonchat, 2008)

presented a technique for climbing stair robot using

image processing technique besides reducing the pro-

cessing time.

While (Maier et al., 2013) designed motion, called

T-step, that allows the robot to make step over actions,

as well as parameterized step onto and step down ac-

tions. The authors in (Yoshida et al., 2005) investi-

gated a dynamic pattern generator that provides dy-

namically feasible humanoid motion including both

locomotion and task execution such as object trans-

portation or manipulation. While (Shahbazi et al.,

2012) introduced a learning approach for curvilinear

bipedal walking of NAO humanoid robot using pol-

icy gradient method. Their proposed model allows

for smooth walking patterns and modulation during

walking in order to increase or decrease robot speed.

A suitable curvilinear walk, very similar to human or-

dinary walking, was achieved.

Furthermore an approach to whole body motion

planning with a manipulation of articulated objects

such as doors and drawers is introduced in (Burget

et al., 2013). Their experiments with a NAO hu-

manoid opening a drawer, a door, and picking up

an object, showed their framework ability to gener-

ate solutions to complex planning problems. A new

walking algorithm implemented on NAO robot is de-

scribed in (Gouaillier et al., 2010). The authors in

(Shamsuddin et al., 2011) discussed the current trends

in control methods of biped walks and behavior inter-

face tools for motion control for NAO and imminent

findings in both research areas.

In (Hugel and Jouandeau, 2012) a detailed de-

scription of a walking algorithm is presented. Their

algorithm was designed for 3D simulation of locomo-

tion and path planning of humanoid robots and was

implemented on the NAO humanoid. The authors in

(Pierris and Lagoudakis, 2009) introduced Kouretes

Motion Editor (KME), which is an interactive soft-

ware tool for designing complex motion patterns on

robots with many degrees of freedom using intuitive

means.

3 PROPOSED APPROACH

In this section, the proposed algorithm (see Algorithm

1) and action set for the NAO humanoid (see figure

3) that is used during the experimental evaluation are

described.

Figure 3: Aldebaran NAO H25.

Algorithm 1: Navigate through the environment.

1 move three steps forward

2 stop moving

3 pitch down NAO head by 30

◦

4 switch to NAO lower camera

5 look for obstacle

6 if obstacle found then

7 fire object recognition module

8 execute stable whole body motions

depending on the recognized object

9 else

10 go to 1

11 end

3.1 NAO Robot Platform

NAO is a small sized humanoid with five kinematic

chains (head, two arms, two legs) developed by Alde-

baran Robotics (AldebaranRobotics, 2014), it is 58cm

in height; 5.2kg weighs. In general, the robot is sup-

posed to be fully symmetric, but interestingly, accord-

ing to the manufacturer, some joints on the left side

have a different range from the corresponding joints

on the right side (Kofinas, 2012). Also, some joints

appear to be able to move within a large range, the

hardware controller of the robot prohibits access to

the extremes of these ranges, because of possible col-

lisions with NAO shell (Kofinas, 2012). NAO robot

has 25 degrees of freedom (DOF), therefore it can per-

form several complex moves like walking, kicking a

ball, standing up, etc. Kinematics are quite useful for

NAO software developers, because they can be used

for planning and executing such complex movements

(Kofinas, 2012).

The geometric model of NAO gives the effector

positions (X = [P

x

,P

y

,P

z

,P

wx

,P

xwy

,P

wz

]) relative to an

absolute space in function of all the joint positions

ICINCO2014-11thInternationalConferenceonInformaticsinControl,AutomationandRobotics

404

(q = [q

1

,...,q

n

]).

X = f (q) (1)

The direct kinematic model is presented in equation

(2) which is the derivative of equation (1) with respect

to time.

˙

X =

δ

δt

f (q) ˙q = J(q) ˙q (2)

where J(q) is called the Jacobian matrix. A control

on the end effector and deduction of the joint position

is needed, so that the inverse kinematic model, shown

in equation (3), is needed

˙q = J

−1

˙

X (3)

In many cases, J isn’t inversible directly (ma-

trix not square), this problem is solved mathemat-

ically using Moore-Penrose pseudoinverse (Alde-

baranRobotics, 2014).

Aldebaran Robotics provides values of the joints

in the robot documentation. The center of mass for

each link/joint is represented by a point in the three

dimensional space of that joint assuming a zero pos-

ture of that joint. The swing foot can be placed at most

8cm to the front and 16cm to the side and the peak el-

evation is 4cm using the provided walking controller.

The size of the robots feet is approximately 16cm x

9cm. From these numbers, it is clear that NAO is

not able to step over, onto, or down obstacles using

the standard motion controller as shown in figure 4

(AldebaranRobotics, 2014; Maier et al., 2013; Kofi-

nas, 2012).

Figure 4: Clip with maximum outreach (Aldebaran-

Robotics, 2014).

3.2 Motion Design

A kinesthetic teaching is applied to enable the robot

to overcome these limitations. Here, Choregraphe

(Pot et al., 2009), a graphical tool developed by Alde-

baran Robotics, and python programming language

are used to program the NAO H25 humanoid. A

special motion design (described in algorithm 2),

inspired from (Maier et al., 2013) and (Gouda and

Gomaa, 2014), is presented which allows the robot

to step over, onto/down an obstacle according to the

shape of the obstacle as well as climbing up stairs. In

the designed motion, the foot L

1

are placed at an angle

of 30

◦

, which is the basis for the other actions. Then

the robot will move its balance to that leg L

1

(the leg

with the angle 30

◦

) and move the other leg L

2

freely;

after that the balance is moved to L

2

, then L

1

moves

freely beside L

2

and then the balancing is made on

both legs as shown in figure 5.

The motivation for using this motion action is to

exploit the larger lateral foot displacement while mov-

ing forward. From this pose, the robot can perform a

step over action to overcome obstacles with a height

up to 4cm and width of 2cm. The motions of climbing

up stairs and stepping on/down actions are similar to

stepping over motion except for the swing foot place-

ment, as it is placed closer to the stance foot and at a

different height. The height is adjusted using inverse

kinematics based on the recognized object.

Algorithm 2: Actuate motion.

go to initial position

place right foot at angle 30

◦

move balance to right foot

let left foot move freely

if obstacle recognized is the small bar then

move left foot forward

set the foot height to zero, i.e., on the

ground

else if obstacle recognized is the large bar then

move left foot forward

if robot on the ground then

set the foot height to 2cm, i.e., onto the

bar;

else

robot on the bar set the foot height to

-2cm, i.e., down the bar;

end

else

the recognized object is stairs

move left foot forward

set the foot height to 4cm, i.e., on the stair

step;

end

move balance to left foot

let right foot move freely

move right foot forward beside the left foot

The main differences between the designed mo-

tion and the motions described by (Maier et al., 2013)

ComplexMotionPlanningforNAOHumanoidRobot

405

(a) Placing right leg at 30

◦

(b) Balancing on right leg

(c) Balancing on left leg (d) Moving right leg

(e) Balancing on both legs

Figure 5: Designed motion step.

and (Gouda and Gomaa, 2014) is in the robot foot an-

gle placement. As in the designed motion the robot

place its foot at an angle of 30

◦

, this allows the robot

to reach the balance state in short time and more safe.

But when the angle of the robot foot placement in-

crease, the balance state requires more time to be

reached and the probability for the robot to fall in-

creases.

3.3 Learning Objects

The robot uses its onboard sensor, the monocular

camera, to recognize objects in the environment.

NAO needs to learn how to recognize objects, so they

can be used during navigation, by utilizing the vision

monitor in Choregraphe (Pot et al., 2009). After the

images are learned and stored in NAO database, the

Figure 6: Object learning phase.

object recognition module should be tested to assure

that the robot is able to identify the correct object

when recognized in the environment.

During the learning phase, once the image is cap-

tured using NAO cameras, the perimeter of the object

of the captured image is manually determined, after

that a name is assigned to the determined object, then

a message appear to show the success of the process

of learning, as shown in figure 6, then the image is

stored in NAO database. Once all images are stored

into NAO database, NAO will be able to perform ob-

ject recognition. If the object marking is marked in

a wrong way, the learning process will fail and the

object won’t be learned as illustrated in figure 7.

Figure 7: Example of wrong learning.

NAO recognition process is based on the recog-

nition of visual key points and not on the external

shape of the object, so it is able only to recognize

objects that have been learned previously. The pro-

cess is partially robust to distance, ranging from half

and up to twice the distance used for learning, and an-

gles up to 50

◦

inclination for something learned fac-

ing the camera, light conditions, and rotation (Alde-

baranRobotics, 2014). Every detected key point in

ICINCO2014-11thInternationalConferenceonInformaticsinControl,AutomationandRobotics

406

the current image is matched with only one learned

key point in the database. If the score for choosing

between two objects are too close, the key point will

not be associated to any of them. Currently, the algo-

rithm does not poll for several objects, learning twice

the same area of an object will reduce its detection

rate (AldebaranRobotics, 2014).

4 EXPERIMENTAL EVALUATION

Our approach is to make the robot perform whole

body motions that enable the robot to execute com-

plex motions such as step on/down or over the ob-

stacle as well as climbing up stairs using monocular

camera. The robot will use its camera to recognize

the obstacle using the object recognition module. Ac-

cording to the recognized obstacle the robot will exe-

cute a sequence of actions. The design of such com-

plex dynamic motions is achievable only through the

use of robot kinematics (Graf et al., 2009; Kucuk and

Bingul, 2006).

The designed whole body balancing motion uses

NAO own kinematics to control directly its effectors

in the Cartesian space using an inverse kinematics

solver. The Generalized Inverse Kinematics is used,

it deals with Cartesian and joint control, balance, re-

dundancy and task priority. This formulation takes

into account all joints of the robot in a single prob-

lem. The motion obtained guaranties several speci-

fied conditions like balancing, keeping a feet fixed,

etc. Afterwards, the capabilities of the designed mo-

tion system are demonstrated in a series of simula-

tions experiments using Webots for NAO humanoid

robot, as well as real world experiments.

All experiments were carried out with NAO H25

humanoid robot. In the experiments presented, the

robot moves three steps forward, then it stops mov-

ing and pitches down its head by 30

◦

and switches to

lower camera in its head in order to scan for obstacles

on the ground in front of its feet. Once an obstacle

is detected, the object recognition module is fired for

recognition; otherwise the robot will move another

three steps forward. In the case there is an obstacle

recognized the robot will execute stable whole body

motions in order to deal with it.

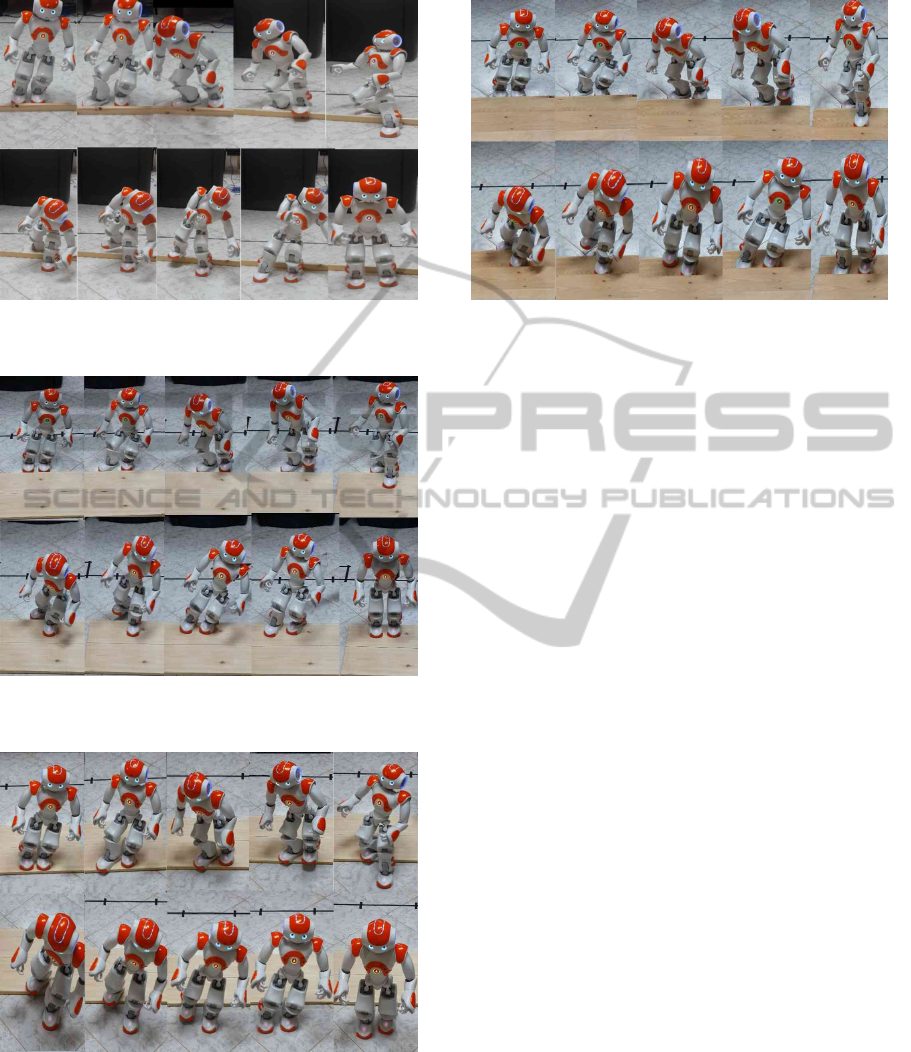

The experiments carried out for the robot step-

ping over a wooden bar of width of 40cm, height of

3.5cm, and depth of 2cm is shown in figure 8; step-

ping onto a wooden bar of width of 40cm, height of

2cm and depth of 40cm is shown in figure 9; stepping

down from that bar to the ground is shown in figure 10

and climbing up straight staircase of width of 40cm,

height of 4cm and depth of 20cm is shown in figure

11. All figures show still frames of a video sequence

where our robot successfully steps over, onto/down

the wooden bar and climbs up straight staircase.

The algorithm implemented is the same for all the

motions; the only exception is the height of its leg and

the place of the swing foot, as the swing foot is placed

closer to the stance foot in the case of climbing stairs

and step onto/down obstacles. In the case of small bar

is recognized the robot will step over it and move its

leg to the ground after the object. While in the case of

the large bar or stairs, the robot will step on/down that

bar or stairs and will move its leg on/down it. Also,

the execution time of all motions is quite similar, as it

takes 30 seconds form the robot to perform step over

motion, 29 seconds to perform step onto/down motion

and 28 seconds from the robot to climb up one stair

step.

We perform a quantitative evaluation of our ap-

proach for accurate step over, onto/down an obstacle

& and climbing up stairs. The success rate of execut-

ing these actions is evaluated using only the onboard

sensors; In ten real world runs on our straight stair-

case consisting of four steps, the robot is able to suc-

cessfully climbed 97% of the stairs. Only two out of

40 stair climbing actions lead to a fall. The robot also

successfully step over, onto/down the wooden bar ten

subsequent times on average.

Afterwards, the joints are heated by putting a force

on them for an extended period of time. Joints over-

heating changes the joints parameters, mainly stiff-

ness, and this affects the balance of the robot; so mo-

tions cannot be successfully executed anymore and

the robot may fail to override the obstacle or may

fall. The robot may also fail to override the obstacle

or climb the stair if the distance between its feet and

the object isn’t appropriate. As the robots camera has

a limitation in providing depth information, i.e. the

distance between the robot feet and the obstacle isn’t

known.

If the obstacle is located at a distance smaller than

a suitable distance to the robot, the robot will hit the

obstacle while moving its leg which leads to a change

in its feet angle, and so its balance will be disturbed

and will fall. Another situation if the obstacle is lo-

cated at a distance greater than a suitable distance the

robot may put its swing foot on the obstacle which

also will make a disturbance in its balance and will

fall.

Another problem is in the execution time of the

motion, as the robot has to have enough time to reach

balance state after performing each action in the mo-

tion or it will fall. In the case of the time is too short

the robot won’t be able to finish the action it is per-

forming, so balance won’t be reached and the robot

ComplexMotionPlanningforNAOHumanoidRobot

407

Figure 8: NAO stepping over a wooden obstacle of height

3.5cm and depth 2cm using planned whole body motion.

Figure 9: NAO stepping on a wooden obstacle of height

2cm and depth 40cm using planned whole body motion.

Figure 10: NAO stepping down from a wooden obstacle

of height 2cm and depth 40cm using planned whole body

motion.

will fall. Otherwise, if the time of motion execution

is too long, to allow the robot to finish the action it is

preforming, its joints will get hot quickly and may not

be able to keep its balance in each position for long

time so it may also fall. To overcome this problem

Figure 11: NAO climbing up a stair with height 4cm and

depth 20cm using planned whole body motion.

the foot is placed at an angle of 30

◦

, which is the ba-

sis for the other actions, that allows the robot to reach

its balance state easily and in short time, so the joints

are not heated rapidly.

These results show that our approach enables a

humanoid to reliably climb up the steps of straight

staircases, which are not marked to be easily visu-

ally detectable. Furthermore, avoiding colliding with

ground obstacles by stepping over or onto/down it is

also showed.

5 CONCLUSION

In this paper, an integrated approach that enables a

humanoid robot to plan and robustly execute whole

body balancing sequences of actions including step-

ping over and climbing up or down obstacles is in-

troduced. Our system includes recognizing objects

stored into the NAO database using NAO camera.

Based on the recognized object the robot executes

specific motions to deal with the recognized obstacle.

The robot can execute these motions ten times con-

sequently. It is possible to reduce the heating in the

joints by reducing the time spent in critical positions

or by setting stiffness to 0 after each action. In our

case the heating problem is avoided by making the

foot of the robot placed at an angle of 30

◦

, which re-

duces the time taken by the robot to go to the balance

state. The robot camera has a limitation that it can’t

provide the distance between the robot and the obsta-

cle; to overcome this limitation the robot feet bumper

is used. As demonstrated in both simulation exper-

iments, using Webots for the NAO humanoid robot,

and practical experiments with a NAO humanoid, our

approach leads to robust whole body movements in

cluttered, multi-level environments containing objects

of various shapes and sizes.

ICINCO2014-11thInternationalConferenceonInformaticsinControl,AutomationandRobotics

408

In future work, we will evaluate the capabilities

of the robot to perform more complex motions like

climbing down the stairs, climbing up or down a ramp

of 20

◦

inclination using the designed sequence of mo-

tions.

ACKNOWLEDGEMENTS

This research has been supported by the Ministry

of Higher Education (MoHE) of Egypt through a

PhD fellowship. Our sincere thanks to Egypt-Japan

University for Science and Technology (E-JUST) for

guidance and support.

REFERENCES

AldebaranRobotics (2014). Nao software 1.14.5 documen-

tation @ONLINE.

Burget, F., Hornung, A., and Bennewitz, M. (2013). Whole-

body motion planning for manipulation of articulated

objects. In Robotics and Automation (ICRA), 2013

IEEE International Conference on, pages 1656–1662.

IEEE.

Chestnutt, J. E., Nishiwaki, K., Kuffner, J., and Kagami, S.

(2007). An adaptive action model for legged naviga-

tion planning. In Humanoids, pages 196–202.

Cyberbotics (2014). Webots: the mobile robotics simula-

tion software @ONLINE.

Gienger, M., Toussaint, M., and Goerick, C. (2010). Whole-

body motion planning–building blocks for intelligent

systems. In Motion Planning for Humanoid Robots,

pages 67–98. Springer.

Gouaillier, D., Collette, C., and Kilner, C. (2010). Omni-

directional closed-loop walk for nao. In Humanoid

Robots (Humanoids), 2010 10th IEEE-RAS Interna-

tional Conference on, pages 448–454. IEEE.

Gouda, W. and Gomaa, W. (2014). Nao humanoid robot

motion planning based on its own kinematics. In

Press.

Gouda, W., Gomaa, W., and Ogawa, T. (2013). Vision

based slam for humanoid robots: A survey. In Elec-

tronics, Communications and Computers (JEC-ECC),

2013 Japan-Egypt International Conference on, pages

170–175. IEEE.

Graf, C., H

¨

artl, A., R

¨

ofer, T., and Laue, T. (2009). A ro-

bust closed-loop gait for the standard platform league

humanoid. In Proceedings of the Fourth Workshop

on Humanoid Soccer Robots in conjunction with the,

pages 30–37.

Hornung, A., Wurm, K. M., and Bennewitz, M. (2010). Hu-

manoid robot localization in complex indoor environ-

ments. In Intelligent Robots and Systems (IROS), 2010

IEEE/RSJ International Conference on, pages 1690–

1695. IEEE.

Hugel, V. and Jouandeau, N. (2012). Walking patterns for

real time path planning simulation of humanoids. In

RO-MAN, 2012 IEEE, pages 424–430. IEEE.

Kofinas, N. (2012). Forward and inverse kinematics for the

NAO humanoid robot. PhD thesis, Diploma thesis,

Technical University of Crete, Greece.

Kucuk, S. and Bingul, Z. (2006). Robot kinematics: for-

ward and inverse kinematics. Industrial Robotics:

Theory, Modeling and Control, pages 117–148.

Maier, D., Lutz, C., and Bennewitz, M. (2013). Integrated

perception, mapping, and footstep planning for hu-

manoid navigation among 3d obstacles. In Intelligent

Robots and Systems (IROS), 2013 IEEE/RSJ Interna-

tional Conference on, pages 2658–2664. IEEE.

Nishiwaki, K., Kagami, S., Kuniyoshi, Y., Inaba, M., and

Inoue, H. (2002). Toe joints that enhance bipedal

and fullbody motion of humanoid robots. In Robotics

and Automation, 2002. Proceedings. ICRA’02. IEEE

International Conference on, volume 3, pages 3105–

3110. IEEE.

Oßwald, S., Gorog, A., Hornung, A., and Bennewitz, M.

(2011). Autonomous climbing of spiral staircases with

humanoids. In Intelligent Robots and Systems (IROS),

2011 IEEE/RSJ International Conference on, pages

4844–4849. IEEE.

Pierris, G. and Lagoudakis, M. G. (2009). An interactive

tool for designing complex robot motion patterns. In

Robotics and Automation, 2009. ICRA’09. IEEE In-

ternational Conference on, pages 4013–4018. IEEE.

Pot, E., Monceaux, J., Gelin, R., and Maisonnier, B. (2009).

Choregraphe: a graphical tool for humanoid robot

programming. In Robot and Human Interactive Com-

munication, 2009. RO-MAN 2009. The 18th IEEE In-

ternational Symposium on, pages 46–51. IEEE.

Samakming, W. and Srinonchat, J. (2008). Development

image processing technique for climbing stair of small

humaniod robot. In Computer Science and Informa-

tion Technology, 2008. ICCSIT’08. International Con-

ference on, pages 616–619. IEEE.

Shahbazi, H., Jamshidi, K., and Monadjemi, A. H. (2012).

Curvilinear bipedal walk learning in nao humanoid

robot using a cpg based policy gradient method. Ap-

plied Mechanics and Materials, 110:5161–5166.

Shamsuddin, S., Ismail, L. I., Yussof, H., Ismarrubie Za-

hari, N., Bahari, S., Hashim, H., and Jaffar, A. (2011).

Humanoid robot nao: Review of control and motion

exploration. In Control System, Computing and Engi-

neering (ICCSCE), 2011 IEEE International Confer-

ence on, pages 511–516. IEEE.

Yoshida, E., Belousov, I., Esteves, C., and Laumond, J.-

P. (2005). Humanoid motion planning for dynamic

tasks. In Humanoid Robots, 2005 5th IEEE-RAS In-

ternational Conference on, pages 1–6. IEEE.

ComplexMotionPlanningforNAOHumanoidRobot

409