Integrating the Usability into the Software Development Process

A Systematic Mapping Study

Williamson Silva, Natasha M. Costa Valentim and Tayana Conte

USES Research Group, Instituto de Computação, Universidade Federal do Amazonas, Manaus, Brazil

Keywords: Usability, Human Computer Interaction, Software Engineering, HCI, SE, Systematic Mapping.

Abstract: With the increasing use of interactive applications in daily life, there is a need for higher-quality development and for good interaction that facilitates use by end users. Therefore, it is necessary to include usability, one of the important quality attributes, in the development process in order to obtain good acceptance rates and, consequently, improve the quality of these applications. In this paper we present a Systematic Mapping Study (SM) that assists in categorizing and summarizing technologies that have been used to improve usability. The results of our SM show technologies that can help improve usability in various applications and identify gaps that still need to be researched. We found that most technologies have been proposed for the Testing phase (67.28%) and that Web applications are the most evaluated type of application (52.65%). We also identified that few technologies assist designers in improving usability in the early stages of the development process (13.50% Analysis phase and 15.95% Design phase). The results of this SM allow observing the state of the art regarding technologies that can be integrated into the development process with the aim of improving the usability of interactive applications.

1 INTRODUCTION

The development of interactive applications has

increased considerably. The success of these

applications is related to the quality they provide to

their end users. Therefore, there is great concern on

the part of software companies to produce high

quality applications and to ensure a good user

experience (Sangiorgi and Barbosa, 2010).

Developing interactive applications meeting

quality criteria as well as the users’ needs is a

complex activity. To minimize this problem, the

areas of Human Computer Interaction (HCI) and

Software Engineering (SE) have proposed methods

and techniques that reflect the different perspectives

in the development process (Barbosa and Silva,

2010) and that aim at improving the quality of these

interactive applications.

HCI focuses, generally, on understanding the

characteristics and needs of the system’s users, in

order to design a better user–system interaction

(Preece et al., 1994). On the other hand, SE has

developed systematic approaches to improve quality

during the development process of interactive

applications (Nebe and Paelke, 2009). Therefore, in

order to improve the quality of applications, it is

necessary to integrate the approaches proposed by

the HCI and SE areas. With this integration, there

will be a mutual understanding between the two

areas, ensuring that problems encountered in the

development of the application are handled properly

throughout the development process (Juristo et al.,

2007). Several researchers have been investigating

how to integrate the areas of HCI and SE. One of the

existing proposals is to incorporate the methods and

techniques proposed in HCI, which focus on

improving the usability of applications, in the

development processes proposed by SE (Fischer,

2012; Nebe and Paelke, 2009; Juristo et al., 2007).

Usability plays a critical role in interactive applications, and it is a key quality factor that should be considered during the development process (Fischer, 2012). According to the ISO/IEC 9241-11 (1998) standard, usability is defined as “the extent to

which a product can be used by specified users to

achieve specific goals with effectiveness, efficiency

and satisfaction in a specified context of use”.

Integrating usability in the development process has

several benefits such as reduction of documentation

and training costs, as well as improving the


Silva W., Costa Valentim N. and Conte T.
Integrating the Usability into the Software Development Process - A Systematic Mapping Study.
DOI: 10.5220/0005377701050113
In Proceedings of the 17th International Conference on Enterprise Information Systems (ICEIS-2015), pages 105-113
ISBN: 978-989-758-098-7
Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

productivity of the practitioners (Carvajal, 2009).

Usability is one of the critical success factors of

applications (Juristo et al., 2007) and an important

criterion for the acceptance of applications by end

users (Conte et al., 2010).

We performed a Systematic Mapping Study

(SM) in order to identify technologies that integrate

usability into the software development process. In

the context of this paper, the term technology is used

as a generalization of methods, techniques, models,

tools, approaches, and other proposals made by the

areas of HCI and SE. This SM provided a body of

knowledge of technologies that assist in improving

usability through various artifacts that are generated

during the development process of the applications.

This SM also aims at assisting practitioners of the

software companies in choosing technologies that

will help them design/evaluate usability within the development process, through the classification provided for each technology.

This paper is organized as follows: Section 2 describes the research method used; Section 3 shows the quantitative results; Section 4 presents the qualitative results; Section 5 discusses the findings; and Section 6 presents our conclusions.

2 RESEARCH METHOD

A Systematic Mapping Study (SM) is a method of categorizing and summarizing the existing information about a research question in an unbiased manner (Kitchenham and Charters, 2007). The activities concerning the planning and conducting stages of our SM are described in the next sub-sections, and the results are presented in Section 3 and Section 4.

2.1 Research Question

The goal of our study is to examine technologies that

aim at improving the usability of applications, from

the point of view of the following research question:

“What technologies can improve the usability in the

software development process?”. Since the research question is fairly broad, we defined sub-questions that address specific aspects of each technology (see Sub-section 2.4).

2.2 Search Strategy

Two main digital libraries were used to search for studies: IEEE Xplore and Scopus. These libraries were chosen because (1) their search engines operate well and have good scope; and (2) Scopus is the largest database indexing abstracts and citations (Kitchenham and Charters, 2007). To improve the automatic search of the selected digital libraries, we used the PICOC criteria (Kitchenham and Charters, 2007):

(P) Population: Software development process;

(I) Intervention: HCI or SE technologies that

are used in the software development process;

(C) Comparison: Not applicable, since the goal

is not to make a comparison between technologies,

but to characterize them;

(O) Outcome: The improvement of the

application in terms of usability through the

developed artifacts by using the technologies that

design/evaluate usability attributes;

(C) Context: Not applicable; since there is no comparison, it is not possible to determine a context.

After that, we searched for terms that represent (P), (I) and (O) and designed a search string. Table 1 shows the search string, in which the Boolean OR joins alternate terms and the Boolean AND joins the three parts.

Table 1: Applied search string.

| Part | Terms |
|---|---|
| Population | (software development OR software project OR software engineering OR software process) |
| | AND |
| Intervention | (technique OR method OR methodology OR tool) |
| | AND |
| Outcome | (usability inspection OR usability evaluation OR usability design OR usability testing) |
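The way the search string in Table 1 is assembled can be sketched in a few lines of Python. This is only an illustration: the term lists are copied from Table 1, while the helper names and the quoting of multi-word phrases are assumptions, since the paper does not state the exact syntax submitted to each digital library.

```python
# Term groups copied from Table 1. The helper names and the quoting of
# multi-word phrases are illustrative assumptions, not the authors' tooling.
PICOC_TERMS = {
    "Population": ["software development", "software project",
                   "software engineering", "software process"],
    "Intervention": ["technique", "method", "methodology", "tool"],
    "Outcome": ["usability inspection", "usability evaluation",
                "usability design", "usability testing"],
}


def or_group(terms):
    """Join alternate terms with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_search_string(groups):
    """Join the OR-groups with AND, in the order the groups are listed."""
    return " AND ".join(or_group(terms) for terms in groups.values())


search_string = build_search_string(PICOC_TERMS)
print(search_string)
```

Keeping the terms in a dictionary makes it easy to adapt the same three groups to the query syntax of a particular search engine.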

2.3 Selection of Papers

In the first step, called the 1st filter, two researchers evaluated only the title and the abstract of each paper according to the inclusion and exclusion criteria (see Table 2), selecting papers within the scope of the research question.

In the second step (or 2nd filter), the researchers thoroughly read the papers selected in the 1st filter and included or excluded them according to the same inclusion and exclusion criteria.

Table 2: Inclusion/Exclusion Criteria.

| # | Inclusion Criterion |
|---|---|
| IC1 | Papers describing HCI and SE technologies that are applied to promote the usability in the software development process can be selected; |
| IC2 | Papers presenting tool support that can be employed by designers to improve the usability of the software process can be selected; |
| IC3 | Papers that discuss aspects regarding the inclusion of usability in the software process can be selected; |
| IC4 | Papers that have improved usability in one of the phases of the software process in any organization can be selected. |

| # | Exclusion Criterion |
|---|---|
| EC1 | Papers in which the language is different from English and Portuguese cannot be selected; |
| EC2 | Papers that are not available for reading and data collection (papers that are only accessible through paying or are not provided by the search engine) cannot be selected; |
| EC3 | Duplicated papers cannot be selected; |
| EC4 | Publications that do not meet any of the inclusion criteria cannot be selected. |
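The mechanical part of the criteria in Table 2 can be sketched as a small screening function. This is a minimal sketch under stated assumptions: the `Candidate` fields, the title-based duplicate check and the function name are hypothetical, and the judgment of which inclusion criteria a paper meets remains a manual decision by the reviewers, recorded here as input data.

```python
from dataclasses import dataclass, field

ACCEPTED_LANGUAGES = {"English", "Portuguese"}  # from EC1


@dataclass
class Candidate:
    """Hypothetical record for one returned paper."""
    title: str
    language: str
    available: bool                                   # full text obtainable (EC2)
    met_inclusion: set = field(default_factory=set)   # e.g. {"IC1"}, judged by reviewers


def screen(candidates):
    """Apply EC1-EC4 in order and return the papers that remain."""
    selected, seen_titles = [], set()
    for paper in candidates:
        key = paper.title.strip().lower()
        if paper.language not in ACCEPTED_LANGUAGES:   # EC1
            continue
        if not paper.available:                        # EC2
            continue
        if key in seen_titles:                         # EC3 (assumes title identifies a paper)
            continue
        if not paper.met_inclusion:                    # EC4
            continue
        seen_titles.add(key)
        selected.append(paper)
    return selected


papers = [
    Candidate("A usability inspection tool", "English", True, {"IC2"}),
    Candidate("A Usability Inspection Tool ", "English", True, {"IC2"}),  # duplicate
    Candidate("Unrelated compiler study", "English", True),               # no IC met
    Candidate("Paywalled usability method", "English", False, {"IC1"}),
]
print([p.title for p in screen(papers)])
```

The same function could serve both filters, with `met_inclusion` filled in from the title and abstract in the first pass and from the full text in the second.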

2.4 Strategy for Data Extraction

We extracted the following information from each of

the selected papers.

Regarding SQ1 (Type of Technology), its goal

was to identify the type of technology described in

the paper, such as method, technique, template, tool

or another procedure adopted in HCI or SE.

Regarding SQ2 (Origin of the Technology), its

goal was to identify if the identified technologies are

new or have been created based on other

technologies proposed in the HCI or SE areas. The

technologies can be rated according to the following

answers:

New: the paper presents a technology, but it is

not based on other technologies;

Existent: the paper presents a technology, but

this proposal was based on other technologies.

Regarding SQ3 (Context of Use), its goal was to

identify where the technologies are being proposed

and currently used. The technologies can be rated

according to the following answers:

Industrial: the paper presents a technology

used or evaluated in an industrial context;

Academic: the paper presents a technology

used or evaluated in an academic context;

Both: the paper presents a technology used or

evaluated in industrial and academic contexts.

Regarding SQ4 (Phase of the Development

Process), its goal was to identify in what stage the

new technologies can be used. The technologies can be classified in one or more of the SWEBOK (Software Engineering Body of Knowledge) high-level processes (SWEBOK, 2004):

Requirements: technologies employed to

design/evaluate the artifacts aimed at

identifying the users’ needs;

Design: technologies that help to

design/evaluate the artifacts that are created

before coding;

Construction: technologies that help

designers as they carry out the coding of

the application;

Verification, Validation & Testing (V,

V&T): technologies that help: (a) to verify

that the product meets the user requirements

(Verification), (b) to ensure consistency,

completeness and correctness of the

application (Validation); and (c) to examine

the behavior of the application through its

execution (Testing);

Maintenance: technologies that verify the

usability while maintaining the application.

Regarding SQ5 (Specific life cycle), its goal was

to identify which technologies can be applied (or

not) in specific development processes. We verified

whether the identified technology is applied in a

specific life cycle:

Yes: the technology is used in a specific life

cycle (Spiral, Star, among others);

No: the technology is not employed in a

specific life cycle.

Regarding SQ6 (Designed/Evaluated Object), its

goal was to identify the object in which the

technology was employed. For example, prototypes,

web applications, among others.

Regarding SQ7 (Empirical Evaluation), its goal

was to assist in the identification of which

technologies were empirically evaluated. The

technologies can be classified according to the

following answers:

No: if it does not provide any type of

empirical evaluation of the technology

presented in the paper;

Yes: if the paper presented any kind of

empirical evaluation of the proposed

technology.

Regarding SQ8 (Research Type), its goal was to

identify the type of research that was adopted in the

paper. A paper can be classified according to

classification proposed by Wieringa et al. (2006):

Evaluation Research: the paper shows how

the technologies are implemented in practice

and what are the benefits and drawbacks;

Proposal of Solution: the paper proposes solution technologies and argues for their relevance, without a full-blown validation.

The technologies must be novel, or at least a

significant improvement of an existing

technique;

Validation Research: the paper shows


technologies that are novel and have not yet

been implemented in practice;

Philosophical Papers: these papers sketch a

new way of looking at existing things by

structuring the field in form of a taxonomy or

conceptual framework;

Opinion Papers: these papers contain the

author’s opinion about what is wrong or good

about something, how we should do

something, etc.;

Personal Experience Papers: these papers

explain what has been done in practice.

Regarding SQ9 (Tools support), its goal was to

identify which technologies need (or not) tool

support in order to be applied. The technology can

be classified according to the following answers:

Yes: the technology presented in the paper

requires some specific tool support;

No: the technology presented in the paper

does not require specific tool support.

The package containing information about this

SM is available in Silva et al. (2014).
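The nine sub-questions above amount to a fixed extraction form per paper. One possible way to encode that form is sketched below; the class and field names are assumptions introduced for illustration, the answer values are taken from the descriptions of SQ2, SQ3, SQ4 and SQ8, and the example record is hypothetical rather than a classification reported in this SM.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):            # SQ2
    NEW = "New"
    EXISTENT = "Existent"


class Context(Enum):           # SQ3
    INDUSTRIAL = "Industrial"
    ACADEMIC = "Academic"
    BOTH = "Both"


class Phase(Enum):             # SQ4, SWEBOK high-level processes
    REQUIREMENTS = "Requirements"
    DESIGN = "Design"
    CONSTRUCTION = "Construction"
    VVT = "V, V&T"
    MAINTENANCE = "Maintenance"


class ResearchType(Enum):      # SQ8, after Wieringa et al. (2006)
    EVALUATION = "Evaluation Research"
    PROPOSAL = "Proposal of Solution"
    VALIDATION = "Validation Research"
    PHILOSOPHICAL = "Philosophical Paper"
    OPINION = "Opinion Paper"
    EXPERIENCE = "Personal Experience Paper"


@dataclass
class ExtractionRecord:
    paper_id: str
    technology_type: str           # SQ1, e.g. "method", "tool"
    origin: Origin                 # SQ2
    context: Context               # SQ3
    phases: frozenset              # SQ4, one or more Phase values
    specific_life_cycle: bool      # SQ5
    objects: tuple                 # SQ6, e.g. ("web application",)
    empirically_evaluated: bool    # SQ7
    research_type: ResearchType    # SQ8
    needs_tool_support: bool       # SQ9

    def __post_init__(self):
        # SQ4 allows several phases but requires at least one.
        if not self.phases:
            raise ValueError("SQ4 requires at least one phase")
        if not all(isinstance(p, Phase) for p in self.phases):
            raise TypeError("phases must contain Phase values")


# Hypothetical record, for illustration only.
example = ExtractionRecord(
    paper_id="example-paper",
    technology_type="technique",
    origin=Origin.EXISTENT,
    context=Context.ACADEMIC,
    phases=frozenset({Phase.VVT}),
    specific_life_cycle=False,
    objects=("web application",),
    empirically_evaluated=True,
    research_type=ResearchType.VALIDATION,
    needs_tool_support=False,
)
print(example.research_type.value)
```

Modeling SQ4 and SQ6 as collections reflects that these two sub-questions accept more than one answer per paper.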

2.5 Selection of Papers

The execution of this SM presented the following

preliminary result. A total of 124 papers (see Table

3) were selected. These papers were selected based

on the inclusion criteria (see Table 2).

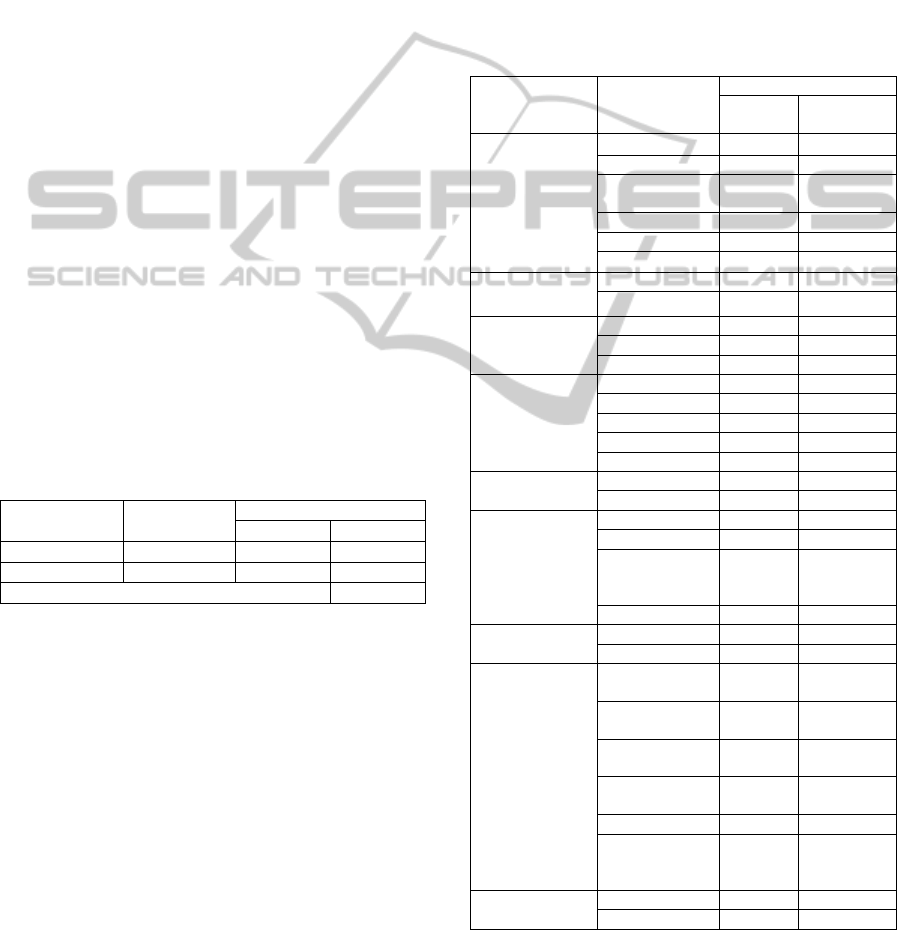

Table 3: Results of the conducting stage.

| Selected Libraries | Returned Papers | Selected Papers (1st Filter) | Selected Papers (2nd Filter) |
|---|---|---|---|
| IEEE Xplore | 59 | 57 | 36 |
| Scopus | 170 | 135 | 88 |
| Total of Extracted Papers | | | 124 |

In the selection process, a few papers appeared in more than one digital library. These papers were counted only once, in the order in which the searches were performed (IEEE Xplore and then Scopus).
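The totals implied by Table 3 can be checked with a short calculation. The counts below are copied from the table; the dictionary layout, function name and the retention figure are illustrative additions and are not reported in the paper.

```python
# Counts copied from Table 3; the structure and names are illustrative.
TABLE_3 = {
    "IEEE Xplore": {"returned": 59, "first_filter": 57, "second_filter": 36},
    "Scopus": {"returned": 170, "first_filter": 135, "second_filter": 88},
}


def stage_totals(table):
    """Sum each stage of the selection process over all libraries."""
    stages = ("returned", "first_filter", "second_filter")
    return {s: sum(row[s] for row in table.values()) for s in stages}


totals = stage_totals(TABLE_3)
# Share of returned papers that survived both filters, in percent.
retention = round(100 * totals["second_filter"] / totals["returned"], 2)
print(totals, retention)
```

The sum of returned papers (229) matches the initial set mentioned in the conclusions, and the second-filter sum matches the 124 extracted papers.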

3 QUANTITATIVE RESULTS

3.1 Overview of the Studies

The overall results, which are based on counting the

studies that are classified in each of the answers to

our research sub-questions, are presented in Table 4.

Although 124 papers were selected, in Table 4

we only counted 123. This is because one of the

selected papers from our review is a Systematic

Literature Review. For that type of paper, we have

prepared another type of data extraction strategy.

Note that sub-questions SQ4 and SQ6 are not exclusive. Therefore, a paper can be classified in one or more of the possible answers. The counts for these sub-questions therefore sum to more than the 123 papers (162 for SQ4 and 150 for SQ6), and the percentages in Table 4 are computed over these totals. For example, regarding SQ4, some technologies may be used in more than one stage of the development process. Similarly, in sub-question SQ6, a technology can be applied to design/evaluate more than one artifact.

Table 4: Results from the SM for each of the Sub-Questions.

| Research sub-question | Possible answers | Number | Percentage (%) |
|---|---|---|---|
| Q1. Type of technology | Methods | 50 | 40.65 |
| | Tools | 25 | 20.33 |
| | Frameworks / Approach | 23 | 18.70 |
| | Techniques | 16 | 13.01 |
| | Models | 6 | 4.88 |
| | Methodology | 3 | 2.44 |
| Q2. Origin of the technology | New | 12 | 9.76 |
| | Existing | 111 | 90.24 |
| Q3. Context of use | Industrial | 27 | 21.95 |
| | Academic | 87 | 70.73 |
| | Both | 9 | 7.32 |
| Q4. Phase of the development process | Requirements | 22 | 13.58 |
| | Design | 27 | 16.67 |
| | Construction | 4 | 2.47 |
| | V, V & T | 109 | 67.28 |
| | Maintenance | 0 | 0.00 |
| Q5. Specific life cycle | Yes | 17 | 13.82 |
| | No | 106 | 86.18 |
| Q6. Designed / Evaluated Object | Applications | 76 | 50.67 |
| | Models | 24 | 16.00 |
| | Interfaces, Mockups or Prototype | 43 | 28.67 |
| | Other objects | 7 | 4.66 |
| Q7. Empirical Evaluation | Yes | 90 | 73.17 |
| | No | 33 | 26.83 |
| Q8. Research Type | Evaluation Research | 5 | 4.07 |
| | Proposal of Solution | 37 | 30.08 |
| | Validation Research | 77 | 62.60 |
| | Philosophical Papers | 0 | 0.00 |
| | Opinion Papers | 1 | 0.81 |
| | Personal Experience Papers | 3 | 2.44 |
| Q9. Tools support | Yes | 28 | 22.76 |
| | No | 95 | 77.24 |
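The percentages in Table 4 can be reproduced from the counts. The sketch below uses the Q1 and Q4 counts from the table and assumes, based on the arithmetic, that each percentage is taken over the sum of the counts for that sub-question; for a non-exclusive sub-question such as Q4 this sum (162) exceeds the 123 classified papers. The function name is illustrative.

```python
# Counts copied from Table 4. Taking the denominator as the sum of counts
# per sub-question is an assumption that reproduces the reported figures.
Q1_COUNTS = {"Methods": 50, "Tools": 25, "Frameworks / Approach": 23,
             "Techniques": 16, "Models": 6, "Methodology": 3}
Q4_COUNTS = {"Requirements": 22, "Design": 27, "Construction": 4,
             "V, V & T": 109, "Maintenance": 0}


def percentages(counts):
    """Return each answer's share of all classifications, in percent."""
    total = sum(counts.values())
    return {answer: round(100 * n / total, 2) for answer, n in counts.items()}


print(sum(Q1_COUNTS.values()), percentages(Q1_COUNTS)["Methods"])
print(sum(Q4_COUNTS.values()), percentages(Q4_COUNTS)["V, V & T"])
```

For an exclusive sub-question such as Q1 the denominator equals the number of classified papers, so the two readings of the percentage coincide.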

3.2 Publication Year

The reviewed papers were published between 1988 and 2013. From a temporal point of view (Figure 1), the number of publications increased between 2005 and 2007. According to the papers collected in this SM, the number of published papers decreased in 2008 and in 2011. The year 2012 has the most published papers (15.32%), followed by 2010 (11.29%), 2009 (10.48%) and 2007 (10.48%). As this SM was conducted in January 2014, not all conferences held in 2013 had their publications indexed in searchable digital libraries. This may be the reason for the low number of papers in that year.

Figure 1: Temporal view of papers.

4 QUALITATIVE RESULTS

4.1 Type of Technology (SQ1)

The results for this sub-question showed that about

40.65% of the papers presented methods. For

example, Fernandez et al. (2012a) proposed a

usability inspection method called WUEP (Web

Usability Evaluation Process). Around 20.33% of

the papers present a tool. For instance, Vaz et al.

(2012) presented the WDT tool, which assists in the

identification of usability problems in web

applications. Around 18.70% of the papers presented

some approach/framework. For example, Liang and

Deng (2009) presented a framework that describes

the Computer-Supported Cooperative Work from the

perspective of the Cognitive Walkthrough technique.

Around 13.01% of the papers presented a technique.

For example, Bonifácio et al. (2012) proposed a

usability inspection technique for mobile web

applications. Around 4.88% of the papers showed

models. Ibrahim et al. (2007) presented a model that

helps to assess usability in sonification applications.

Furthermore, 2.44% of the papers presented

methodologies, as presented in Sivaji et al. (2013).

4.2 Origin of the Technology (SQ2)

The results for this sub-question showed that around

90.24% of the technologies found in the papers were

based on other existing technologies in the literature.

For instance, Conte et al. (2007) proposed a

technique that combines perspectives of Web design

with the heuristics proposed by Nielsen (1994).

Around 9.76% of the papers describe a technology that is not based on other technologies. For example, Pankratius (2011) presents a tool that aims to collect subjective information from programmers while they code the application.

The results of this sub-question (SQ2) and the

previous sub-question (SQ1) indicate that several

technologies are being proposed in the literature.

These technologies primarily aim at assisting both HCI designers and software engineers in improving usability in the development process of applications, by designing or evaluating usability.

4.3 Context of Use (SQ3)

The results for this sub-question showed that about

70.73% of the technologies presented were used in

an academic context. For example, Fernandes et al.

(2012) conducted two empirical studies in an

academic context with undergraduate students.

Around 21.95% of the technologies were applied in

an industrial context. Sivaji et al. (2013), for

example, conducted two case studies in two industry

software projects with experts in usability.

Furthermore, 7.32% of the technologies presented

were applied in both Academic and Industrial

context, as presented in Vaz et al. (2012).

The results of this sub-question show that most

of the technologies found in this SM are being

proposed and/or evaluated in the academic context.

This is because it is more costly for industry to free part of its practitioners' time to perform evaluations. One of the solutions found by researchers is to conduct these evaluations in the academic context, with undergraduate or graduate students. Some of these students work in industry and already have the professional profile expected of evaluation participants.

4.4 Phase of the Development Process (SQ4)

The results for this sub-question revealed that 67.28% of the technologies are used during the V, V&T phase. In this phase, we divided the selected technologies into two categories, as suggested by Fernandez et al. (2011): (1) Usability Inspection and (2) Usability Testing. Of the technologies applied during V, V&T, 44.95% are for Usability Inspection. For example, Fernandes et al. (2012) present a usability inspection technique called WE-QT (Web-Question Evaluation Technique), which helps novice inspectors to identify usability problems in Web applications. The remaining 55.05% of the technologies used in the V, V&T phase are for Usability Testing. One example is the tool proposed by Fabo et al. (2012), which identifies usability problems through automatic data capture. Around 16.67% of the technologies can be used in the Design phase, such as the technique proposed by Rivero and Conte (2012). Around 13.58% of the technologies can be used in the Requirements phase. For example, Ormeño et al. (2013) presented a new method to capture usability requirements. Around 2.47% of the technologies can be used while developers code the application, such as the tool proposed by Pankratius (2011). We did not find any technology that is used in the Maintenance phase.

The results of this sub-question indicate that there is a need for technologies that can be used in the initial stages of development (Requirements and Design). This matters because usability problems found in the final stages are more costly to correct, and correcting them also increases the time spent on the development and maintenance of the application.

4.5 Specific Life Cycle (SQ5)

The results for this sub-question revealed that 86.18% of the technologies are not tied to a specific life cycle, so they can be used regardless of the life cycle adopted by the development team and may suit the development life cycles adopted in industry. The remaining 13.82% of the technologies are used in a specific life cycle, e.g. Model Driven Development (MDD) or Architecture Driven Development (ADD). For example, Sivaji et al. (2013) presented a hybrid approach that integrates Heuristic Evaluation and Usability Testing into a specific development life cycle.

4.6 Designed / Evaluated Object (SQ6)

The results for this sub-question revealed that 50.67% of the technologies took already developed applications as the object being designed or evaluated. Of the technologies that design/evaluate applications, 52.65% have been used in Web applications, 26.30% in Desktop applications and 3.94% in Mobile applications, while 17.12% did not specify which type of application the technology was evaluating. Approximately 28.67% of the technologies employed interfaces, mockups or prototypes as objects. An example is the method proposed by Ormeño et al. (2013). Around 16% of the technologies employed models as objects, such as the method proposed by Rivero and Conte (2012). Finally, 4.66% of the technologies employed other objects, such as lines of code (2%), log files (2%) and user tasks (0.66%).

The results for this sub-question indicate that

many technologies are being developed to improve

the usability in applications, especially focused on

the Web. However, a point to be considered is the

low number of technologies that help to improve the

usability in Mobile applications. With the growth in

use of mobile devices, mobile applications are

becoming increasingly present among users.

4.7 Empirical Evaluation (SQ7)

The results for this sub-question revealed that 26.83% of the selected technologies did not undergo any type of empirical evaluation. For instance, Díscola and Silva (2003) describe an approach, but the authors did not perform an empirical study. Around 73.17% of the technologies were evaluated empirically. For instance, Santos et al. (2011) describe the evolution of an assistant through empirical studies.

The results for this sub-question show that about 73% of the papers carried out an empirical evaluation of the technologies they propose.

Conducting empirical studies is a common practice

in the areas of Human Computer Interaction and

Software Engineering. These two areas are

interested in evaluating and improving the proposed

technologies so that they can assist practitioners

during the usability design/evaluation.

4.8 Research Type (SQ8)

The results for this sub-question revealed that 62.60% of the papers presented Validation Research, as in Conte et al. (2009). Approximately 30.08% of the papers presented a Proposal of Solution. Ormeño et al. (2013), for example, presented a proposed solution but did not show how this proposal would be used in practice. Around 4.07% of the papers presented Evaluation Research. For example, Winter et al. (2011) presented a technology and also commented on the advantages and disadvantages of using the proposed technology in industry. Approximately 2.44% of the papers are Personal Experience Papers. For example, Nayebi et al. (2012) reported how three usability evaluation methods can be used in mobile applications. Finally, 0.81% of the papers are Opinion Papers: Mueller et al. (2009) present several important points on how to conduct usability testing. We did not find any Philosophical Paper in this SM.

The results for this sub-question indicate that

many papers are presented to validate the

technologies that are being proposed. This may be

an indication that researchers are trying to improve

the proposed technologies for them to be used in the

academic or industrial context. Moreover, another

important result is the number of papers that present

new Proposals of Solution (30.08%), describing

technologies that aim to solve usability problems

during the development process.

4.9 Tools Support (SQ9)

The results for this sub-question indicated that

22.76% of the technologies require a tool or

framework to assist practitioners. As mentioned

earlier, there are the tools proposed by Vaz et al.

(2012) and Santos et al. (2011). However, about

77.24% of the technologies do not require tool

support. As mentioned earlier, one example is the method by Ormeño et al. (2013). Most of the tools found (which account for 22.76% of the technologies) are available only under a paid license or are unavailable for use by practitioners (academic tools). This limited availability helps explain the wider use of technologies that do not require tool support. Tools can increase performance

by reducing overhead and facilitating the work of

practitioners in the development process. Therefore,

tool support technologies that are available for use

can reduce the effort of practitioners during the

development of interactive applications and,

consequently, provide many benefits to the industry.

5 DISCUSSION

As mentioned before, in this SM we found a secondary study, the systematic review by Fernandez et al. (2012b). This systematic review cannot be classified according to our research sub-questions because the data collected by our sub-questions is specific to technologies rather than to systematic mappings or systematic reviews. We related the technologies found in our systematic mapping to the technologies found in the systematic review by Fernandez et al. (2012b). Through this relationship, one can see that the technologies found by Fernandez et al. (2012b) are more specific (in context) than the technologies found in this SM. This is because Fernandez et al. (2012b) searched for technologies that evaluate usability in the context of Web applications. Our SM, on the other hand, aimed at identifying technologies in a broader context, that is, technologies that assist in the design and/or evaluation of usability in the development process. Our SM takes into account any type of application, not just Web applications. Therefore, our mapping is broader. It identified technologies evaluating the usability of web applications, mobile applications, and desktop applications, among others.

Additionally, our SM not only identified

technologies that assess usability, but also

technologies that assist in the design process, aiming

at improving usability.

6 CONCLUSIONS

This paper presented a Systematic Mapping (SM)

that discussed the existing evidence on the

technologies proposed for the areas of HCI and SE

that can be used within the software development

process. From an initial set of 229 papers, a total of

124 research papers were selected for this SM.

The results obtained in this SM identified several

technologies that focus on supporting HCI designers

and software engineers in improving the usability of

interactive applications. The results also show that

there is a need for the creation of new technologies

to support the usability of the applications from the

early stages of the development process. This is

because correcting usability problems in the early

stages is less expensive and avoids rework effort

from practitioners. This SM found evidence of several research gaps for researchers from both areas, such as: (1) creating new technologies for the early stages, which would reduce costs and the number of usability problems found in usability assessments in the final stages of the development process; (2) assisting practitioners in designing applications with usability in mind; and (3) supporting the integration of technologies that focus on improving usability within the development process of mobile applications. From the results of this SM, both software engineers and HCI designers can identify technologies that can be applied in the industrial context. As future work, we intend to expand and update this SM in order to increase the body of knowledge with new technologies and studies that can help identify new research topics.

ACKNOWLEDGEMENTS

We would like to acknowledge the financial support

granted by CAPES through process AEX 10932/14-3 and FAPEAM through processes numbers: 062.00600/2014; 062.00578/2014; and 01135/2011.

REFERENCES

Barbosa, S. D. J., Silva, B. S., 2010. Human Computer

Interaction. Elsevier, Rio de Janeiro (in portuguese).

Bonifácio, B., Fernandes, P., Santos, F., Oliveira, H. A. B.

F., Conte, T., 2012. Usabilidade de Aplicações Web

Móvel: Avaliando uma Nova Abordagem de Inspeção

através de Estudos Experimentais. In Ibero-American

Conference on Software Engineering, pp. 236-249

(in Portuguese).

Carvajal, L., 2009. Usability-enabling guidelines: a design

pattern and software plug-in solution. In Doctoral

symposium for ESEC/FSE, pp. 09-12.

Conte, T., Massollar, J., Mendes, E., Travassos, G. H.,

2007. Usability Evaluation Based on Web Design

Perspectives. In Symposium on Empirical Software

Engineering and Measurement (ESEM’07), pp. 146-

155.

Conte, T., Vaz, V., Zanetti, D., Santos, G., Rocha, A. R.,

Travassos, G. H., 2010. Aplicação do Modelo de

Aceitação de Tecnologia para uma Técnica de

Inspeção de Usabilidade. In Simpósio Brasileiro de

Qualidade de Software (SBQS 2010), pp. 367-374 (in

Portuguese).

Díscola Junior, S. L., Silva, J. C., 2003. Processes of

software reengineering planning supported by

usability principles. In Latin American Conference on

Human-Computer interaction (CLIHC'03), pp. 223-

226.

Fabo, P., Ďurikovič, R., 2012. Automated Usability

Measurement of Arbitrary Desktop Application with

Eyetracking. In 16th International Conference on

Information Visualisation (IV), pp. 625-629.

Fernandes, P., Conte, T., Bonifácio, B., 2012. WE-QT: A

Web Usability Inspection Technique to Support

Novice Inspectors. In XXVI Brazilian Symposium on

Software Engineering (SBES), pp. 11-20.

Fernandez, A., Abrahão, S., Insfran, E., Matera, M.,

2012a. Further Analysis on the Validation of a

Usability Inspection Method for Model-Driven Web

Development. In 6th Symposium on Empirical

Software Engineering and Measurement (ESEM

2012), pp. 153–156.

Fernandez, A., Abrahão, S., Insfran, E., 2012b. A

Systematic Review on the Effectiveness of Web

Usability Evaluation Methods. In 16th International

Conference on Evaluation & Assessment in Software

Engineering, pp. 108-122.

Fernandez, A., Insfran, E., Abrahão, S., 2011. Usability

evaluation methods for the web: A systematic

mapping study. In Journal of Information and

Software Technology, v. 53, issue 8, pp. 789-817.

Fischer, H., 2012. Integrating usability engineering in the

software development lifecycle based on international

standards. In 4th Symposium on Engineering

Interactive Computing Systems, pp. 321-324.

Ibrahim, A. A. A., Hunt, A., 2007. An HCI Model for

Usability of Sonification Applications. In Task models

and diagrams for users interface design (TAMODIA

2006), v. 4385, pp. 245-258.

Institute of Electrical and Electronics Engineers, 2004.

Guide to the Software Engineering Body of

Knowledge (SWEBOK). 2004 Version.

International Organization for Standardization, 1998.

ISO/IEC 9241-11: Ergonomic Requirements for Office

Work with Visual Display Terminals (VDTs) - Part 11:

Guidance on Usability.

Juristo, N., Moreno, A., Sánchez, M., Baranauskas, M. C.

C., 2007. A Glass Box Design: Making the Impact of

Usability on Software Development Visible. In

International Conference on Human-Computer

Interaction (INTERACT 2007), pp. 541-554.

Kitchenham, B., Charters, S., 2007. Guidelines for

performing systematic literature reviews in software

engineering. In EBSE Technical Report EBSE-2007-

01, Software Engineering Group Department of

Computer Science Keele University.

Liang, L., Deng, X., 2009. A Collaborative Task Modeling

Approach Based on Extended GOMS. In Conference

on Electronic Computer Technology, pp. 375-378.

Mueller, C., Tamir, D., Komogortsev, O., Feldman, L.,

2009. An Economical Approach to Usability Testing.

In 33rd Annual IEEE International Computer Software

and Applications Conference, pp. 124–129.

Nayebi, F., Desharnais, J.-M., Abran, A., 2012. The State of

the Art of Mobile Application Usability Evaluation. In

25th IEEE Canadian Conference on Electrical &

Computer Engineering (CCECE), pp. 1-4.

Nebe, K., Paelke, V., 2009. Usability-Engineering-

Requirements as a Basis for the Integration with

Software Engineering. In 13th Int. Conf. Human-

Computer Interaction, Part I: New Trends, pp. 652-

659.

Nielsen, J., 1994. Heuristic evaluation. In Nielsen, J.,

Mack, R. L. (eds), Usability Inspection Methods,

John Wiley & Sons, Inc.

Ormeno, Y. I., Panach, J. I., Condori-Fernandez, N., Pastor,

O., 2013. Towards a proposal to capture usability

requirements through guidelines. In 7th International

Conference on Research Challenges in Information

Science (RCIS), pp. 1–12.

ICEIS 2015 - 17th International Conference on Enterprise Information Systems

Pankratius, V., 2011. Automated usability evaluation of

parallel programming constructs. In 33rd International

Conference on Software Engineering, pp. 936–939.

Preece, J., Rogers, Y., Sharp, H., Benyon, D., Holland, S.,

Carey, T., 1994. Human-Computer Interaction.

Addison-Wesley, Reading.

Rivero, L., Conte, T., 2012. Using an Empirical Study to

Evaluate the Feasibility of a New Usability Inspection

Technique for Paper Based Prototypes of Web

Applications. In XXVI Brazilian Symposium on

Software Engineering (SBES), pp. 81-90.

Sangiorgi, U. B., Barbosa, S. D. J., 2010. Estendendo a

linguagem MoLIC para o projeto conjunto de

interação e interface (in Portuguese). In IX

Symposium on Human Factors in Computing Systems,

pp. 61-70.

Santos, F., Conte, T. U., 2011. Evoluindo um Assistente

de Apoio à Inspeção de Usabilidade através de

Estudos Experimentais (in Portuguese). In XIV

Ibero-American Conference on Software Engineering

(CIbSE 2011), v. 1, pp. 197-210, Rio de Janeiro.

Silva, W., Valentim, N. M. C., Conte, T., 2014. Systematic

mapping to integrate technologies of the SE and HCI

areas through of the usability (in Portuguese). In USES

Technical Report USES TR-USES-2014-0001.

Available at:

http://uses.icomp.ufam.edu.br/attachments/article/42/RT-USES-2014-0001.pdf.

Sivaji, A., Abdullah, M.R., Downe, A.G., Ahmad, W.,

2013. Hybrid Usability Methodology: Integrating

Heuristic Evaluation with Laboratory Testing across

the Software Development Lifecycle. In 10th

International Conference on Information Technology:

New Generations (ITNG 2013), pp. 375–383.

Vaz, V. T., Travassos, G. H., Conte, T., 2012. Empirical

assessment of WDP tool: A tool to support web

usability inspections. In Conferencia Latinoamericana

en Informática (CLEI 2012), pp. 1-9.

Wieringa, R., Maiden, N. A. M., Mead, N. R., Rolland, C.,

2006. Requirements engineering paper classification

and evaluation criteria: a proposal and a discussion. In

Requirements Engineering, 11 (1), pp. 102-107.
