SignSupport: A Mobile Aid for Deaf People Learning Computer Literacy Skills

George G. Ng’ethe¹, Edwin H. Blake¹ and Meryl Glaser²

¹Department of Computer Science, University of Cape Town, Private Bag X3, Rondebosch, 7701, South Africa

²Deaf Community of Cape Town, 12 Gordon Road, Heathfield, Cape Town, South Africa

Keywords:

Assistive Technology, Authoring Tools, Computer-assisted Instruction, End User Applications.

Abstract:

This paper discusses a prototype of a learning aid on a mobile phone to support Deaf people learning computer literacy skills. The aim is to allow Deaf people to learn at their own pace, which in turn reduces their dependence on a teacher and frees the teacher to assist weaker learners. We studied the classroom dynamics and teaching methods to extract how lesson content is delivered. This helped us develop an authoring tool to structure lesson content for the prototype. The prototype uses South African Sign Language videos arranged according to the structure of pre-existing lessons. The technical goal was to implement the prototype on a mobile device and tie the lesson content exported from the authoring tool to a series of signed language videos and images, so that a Deaf person can teach him/herself computer literacy skills. Results from user testing found the prototype successful in allowing Deaf users to learn at their own pace, thereby reducing their dependence on the teacher.

1 INTRODUCTION

This paper describes our initial experience with a mobile prototype that supports teaching computer literacy skills to Deaf people, using South African Sign Language (SASL) as the medium of instruction. Deaf with a capital ‘D’ is distinguished from deaf or hard-of-hearing in that Deaf people use a signed language to communicate, which defines their culture much as a shared language such as English defines other groups. Deaf people have limited literacy in spoken and written languages (Glaser and Lorenzo, 2007). Acquiring computer skills presupposes knowledge of a written language, so for Deaf people learning involves simultaneously developing the written language while learning computer skills and technical terminology.

Bridging communication between Deaf and hearing people is an ongoing research area; in this work we extend previous SignSupport projects to a different communication context. All of these projects focus on constrained contexts in which a limited collection of interactions was captured in pre-recorded SASL videos. The interactions previously investigated were between a doctor and a Deaf patient (Mutemwa and Tucker, 2010) and between a pharmacist and Deaf patients (Chininthorn et al., 2012), implemented on a mobile phone (Motlhabi et al., 2013).

In this paper, we examine the context of adult computer literacy training. We investigate how to support Deaf people learning computer literacy skills using the International Computer Driving License (ICDL, www.icdl.org.za) approved curriculum and the e-Learner (e-Learner, 2013), developed by Computers 4 Kids (www.computers4kids.co.za). Currently, teaching Deaf learners involves the teacher reading instructions from the e-Learner manual and signing the content to the learners. In the process, all Deaf learners must look at the teacher because of the visual nature of signed language. This approach inhibits the progress of faster learners: whenever something is unclear to one learner, the whole class has to be interrupted, so the pace of the class is dictated by the weaker learners.

Text literacy among Deaf people is adequate only for social purposes, among Deaf people who accept grammatical problems, rather than for specific and/or technical discussion (Glaser and Tucker, 2004). This inadequacy creates a communication barrier that hinders Deaf people from learning new skills. In developing regions, some Deaf people use services such as Short Message Service (SMS) and instant messaging applications such as WhatsApp to communicate with each other and with hearing people. Deaf people seeking higher education and employment opportunities are restricted by their limited text literacy. Many are unemployed or employed in menial jobs, which affects the socio-economic level of the community as a whole (Blake et al., 2014). SignSupport has evolved from assisting Deaf people to communicate with pharmacists to supporting computer literacy learning in SASL. We partnered with a grassroots NGO, DCCT (Deaf Community of Cape Town), which is staffed by Deaf people and serves the needs of the larger Deaf community, who primarily use SASL as their first language.

G. Ng’ethe G., H. Blake E. and Glaser M.
SignSupport: A Mobile Aid for Deaf People Learning Computer Literacy Skills.
DOI: 10.5220/0005442305010511
In Proceedings of the 7th International Conference on Computer Supported Education (CSEDU-2015), pages 501-511
ISBN: 978-989-758-108-3
Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

We conducted a field study and a user study, in two research cycles, with DCCT staff members to investigate how mobile phones could be used to support Deaf learners training in computer literacy skills. The purpose of the field study was to understand the challenges Deaf learners encounter when learning computer skills and to establish the existing technology capacity of the Deaf community. Based on the results we obtained, we designed and implemented an intervention addressing some of the issues uncovered in the field study, and evaluated it with DCCT staff members.

In the following sections of this paper, we highlight methods used to improve Deaf literacy, together with related work in mobile signed language communication and Deaf computer literacy projects, in Section 2. We discuss our methodology in Section 3 and introduce our first cycle, the computer literacy classes, in Section 4, where we analyze the results. We introduce our intervention in Section 5, detail the content creation in Section 6 and discuss the results obtained in Section 7. We conclude and outline future work.

2 RELATED WORK

This section describes work related to SignSupport. We look at how literacy in Deaf adults has been developed and at the work others have done in mobile communication technologies to address Deaf-related challenges.

2.1 Deaf Adult Literacy

Literacy development in adult Deaf populations has faced challenges both internationally and in South Africa. Internationally, the average reading age of Deaf adults is said to be at fourth grade level (Watson, 1999), and in South Africa, the average reading age of Deaf adults who have attended schools for the Deaf is lower than the international average (Aarons and Reynolds, 2003). In addition, apartheid caused racial inequalities in educational development and provision, resulting in varying literacy levels among Deaf people across different racial groups (Penn, 1990).

In a Bilingual-Bicultural approach, Deaf learners are taught through a signed language to read and write the written form of a spoken language (Grosjean, 1992). Earlier approaches to Deaf literacy, such as Oralism (Lane, 1993) and total communication (Denton et al., 1976), neglected the need for Deaf people to learn in their own language and promoted little literacy development. Research has shown that Deaf learners taught in sign language perform better than learners who are not taught this way (Prinz and Strong, 1998). One approach that aims to redress low literacy levels among Deaf adults in South Africa uses Deaf learners’ existing knowledge of SASL and written English, highlighting the differences between these two languages in order to facilitate the development of their second-language skills in written English (Glaser and Lorenzo, 2007). For Deaf learners, literacy involves moving from a primary to a secondary communication form as well as moving from one language to another. We adopt the bilingual-bicultural approach to teaching computer literacy skills. By teaching the lessons in sign language, we use the Deaf learners’ existing knowledge (the known) to introduce computer literacy skills (the unknown).

2.2 Mobile Sign Language Communication

MobileASL is a video compression project that uses American Sign Language (ASL) as its medium of communication on a mobile device. The project was developed to enable Deaf users of low- to mid-range commercially available mobile devices to send sign language video over a mobile network (Cavender et al., 2006). The aim of MobileASL is to make video communication possible on a mobile device without specialised equipment such as a high-end video camera, using instead the standard equipment on the mobile device.

Cavender et al. conducted user studies to determine the effects on intelligibility of video compression techniques that exploit the visual nature of sign language. Preliminary studies suggested that the best video encoders available at the time could not produce the video quality needed for intelligible ASL in real time, given the bandwidth and computational constraints of even the best mobile phones. MobileASL concentrated on manipulating three video properties: bitrate, frame rate and region of interest (ROI) (Cavender et al., 2006). These properties were chosen as important for intelligible ASL on mobile phones. MobileASL provides the basis for the intelligibility of sign language video. For our study we chose to focus on the frame rate. Cavender et al. (2006) established that at frame rates below 10 frames per second (fps) signs were difficult to watch. As a trade-off, we chose a frame rate of 25 fps, well above this threshold.

MotionSavvy is a project that developed the UNI, a device that translates American Sign Language (ASL) into audio and spoken words into text (MotionSavvy, 2014). The UNI uses a gesture recognition technology called Leap Motion (Motion, 2014), which allows users to see how their signs appear on camera; this helps ensure that signs are input correctly and that important information is not missed (MotionSavvy, 2014).

2.3 Computer Literacy Projects

A number of projects have sought to raise the educational level of Deaf and hard-of-hearing people. To truly meet the needs of users, in addition to providing guidelines based on technology, it is necessary to understand the users and how they work with their tools (Theofanos and Redish, 2003). One solution is to provide additional educational input using multimedia-supported materials on the World Wide Web (Debevc et al., 2007). This kind of user interface can be found in other projects such as BITEMA (Debevc et al., 2003) and DELFE (Drigas et al., 2005). Results from these projects have shown that multimedia systems increased learning success.

Project DISNET in Slovenia focused on providing an alternative way of learning computer literacy using accessible and adapted e-learning materials. It used multimedia materials in a web-based virtual learning environment. The aim of the project was to increase computer literacy among Deaf and hard-of-hearing unemployed people using the ICDL e-learning material (Debevc et al., 2007). The system was designed for people who have access to computers and high-speed broadband Internet but lack basic computer or web browser experience.

The projects above are concerned with e-learning materials and environments and rely on the World Wide Web to deliver their multimedia materials. What our work has in common with these projects is the use of multimedia learning materials.

2.4 Discussion

SignSupport emphasizes video quality and resolution much like MobileASL but differs in avoiding over-the-air data charges: the videos are stored locally on the phone. Data costs in South Africa are higher than in neighbouring African countries (Calandro et al., 2014), making streaming uneconomical for Deaf people and further marginalising them. Similar to project DISNET, SignSupport uses multimedia ICDL learning materials to improve computer literacy levels amongst Deaf people. It differs in not being web-based and not relying on broadband Internet connections; SignSupport is mobile-based and uses commercially available devices.

3 METHODOLOGY

SignSupport is based on over a decade of research and collaboration by an interdisciplinary team with a diverse range of expertise. All members were involved continuously throughout the project.

Deaf users play a steering role in the research. They dictate how they would use the system, and most of the user requirements are gathered from them; integrating their perspectives increases the chances of an accepted solution.

A Deaf education specialist acts as the link between the technical team and the Deaf community members and is also the facilitator of the computer literacy course. The specialist assists in the design and explanation of Deaf learning practices to make SignSupport fit Deaf users’ expectations, and helps translate the course material into SASL.

Computer scientists are tasked with implementing the design of SignSupport and verifying that the SASL videos are shown in the correct and logical order. They examine how end users engage with SignSupport to uncover design flaws and any other interesting outcomes.

We undertook a community-based co-design process (Blake et al., 2011) following an action research methodology. This approach required the participation of the target groups, engaged them throughout the design, implementation and evaluation phases, and referred back to them to show how their feedback was incorporated into SignSupport. During all interactions with Deaf participants, the facilitator, who is sufficiently fluent in SASL, facilitated the communication process, which helped us understand the usage context and build positive relationships with the Deaf community.

We undertook two research cycles. In the first cycle we observed and participated in the computer literacy classes at DCCT, which some of its staff members were taking, and conducted unstructured interviews with the facilitator in the form of informal conversations, together with anecdotal comments made by the facilitator during the class sessions. Data were gathered using handwritten notes and photographs, which were used to build a cognitive system (Hutchins, 2000) of the computer literacy classes using a distributed cognition approach. The ideas generated were then used to synthesize our intervention.

We collaborated with two other researchers to co-design an XML specification, generated by a content authoring tool, that is used to structure lesson content. The XML specification was an abstraction of the hierarchical structure of the e-Learner manual. A mobile prototype was developed that used the XML specification to map the content of the e-Learner and display it serially as SASL videos and images. The mobile interface design was inspired by the work of Motlhabi (Motlhabi, 2013): the video frame covered at least 70% of the display, and the navigation buttons and image filled the rest of the space.

We recorded SASL videos of two lessons chosen from the e-Learner curriculum using scripts that we created, and stored the videos on the mobile phone’s internal memory. The mobile prototype was then evaluated in a live class setting, and the results were taken into account for the next design.

4 COMPUTER LITERACY CLASSES

The computer literacy classes are taught using the International Computer Driving License (ICDL) approved curriculum, e-Learner (e-Learner, 2013), which has two versions: School and Adult. The Adult version is taught at DCCT. The aim of the classes is to equip Deaf learners with computer skills so that they can take the assessments for the e-Learner certificate. The Deaf learners then progress to the full ICDL programme. The computer literacy classes (e-Learner classes henceforth) are taught by a facilitator who has had a long involvement with DCCT, in addition to collaborating with researchers from the University of Cape Town (UCT) and the University of the Western Cape (UWC) in the SignSupport project.

The Deaf learners were all DCCT staff members. Three were female and two were male, with an average age of 38.4 years. Three of the learners had received the EqualSkills certificate (EqualSkills, 2014) before beginning the e-Learner classes. EqualSkills is a flexible learning programme that introduces basic computer skills to people with no prior exposure.

4.1 Course and Lesson Structure

E-Learner is a modular and progressive curriculum spread over seven units. The units are similar to the modules in the ICDL programme but contain less detail. The e-Learner curriculum is in two parts: a manual containing lesson instructions, used by the facilitator, and software loaded onto the computers, which the Deaf learners use to retrieve templates and lesson resources. The seven units of the e-Learner are: IT Basics, Files and folders, Drawing, Word processing, Presentations, Spreadsheets, and Web and Email essentials. The Deaf learners use computer applications to complete the templates, following signed instructions from the facilitator. The facilitator first teaches literacy skills in the written language to develop the learners’ technical vocabulary.

These units are composed of lessons grouped into the same categories: Orientation, Essential and Supplementary. Lessons in different units overlap, i.e. the same lesson appears in different units. This allows the learner to revise a lesson, or to skip it having done it before. The lesson structure is as follows:

1. Integrated activity – A class discussion on the lesson content.
2. Task description – A brief overview of the work the learners will perform.
3. Task steps – The individual tasks that the learners perform to complete the lesson.
4. Final output – A diagram showing what the learners are expected to produce after performing the task steps.
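The four-part lesson structure can be captured in a small data model. The following Python sketch is a hypothetical illustration rather than code from the e-Learner or SignSupport: the Lesson class, its field names and the sample lesson are invented for this example. It shows how a lesson's parts could be flattened into the sequential order in which they are presented to a learner.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Lesson:
    """Hypothetical model of the four-part e-Learner lesson structure."""
    title: str
    integrated_activity: str  # 1. class discussion on the lesson content
    task_description: str     # 2. brief overview of the work to be done
    task_steps: List[str] = field(default_factory=list)  # 3. individual tasks
    final_output: str = ""    # 4. diagram of the expected result (e.g. an image file)

    def sections_in_order(self) -> List[str]:
        """Return the lesson's parts in the order in which they are presented."""
        parts = [
            f"Integrated activity: {self.integrated_activity}",
            f"Task description: {self.task_description}",
        ]
        for number, step in enumerate(self.task_steps, start=1):
            parts.append(f"Task step {number}: {step}")
        parts.append(f"Final output: {self.final_output}")
        return parts


# Invented sample lesson, for illustration only.
lesson = Lesson(
    title="Creating a folder",
    integrated_activity="Why do we organise files into folders?",
    task_description="Create a new folder on the desktop and name it.",
    task_steps=["Right-click the desktop.", "Choose New, then Folder.", "Type a name and press Enter."],
    final_output="folder_on_desktop.png",
)

for part in lesson.sections_in_order():
    print(part)
```

Keeping the task steps as an ordered list preserves the step numbering that learners follow in the manual.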

4.2 Classroom Setup

The computer lab has six computers in a U-shaped arrangement. There is a server at the front left of the classroom, along with a flip-board on a stand and two whiteboards. The arrangement gives the learners a clear line of sight to the front of the classroom, where the facilitator stands and signs. The seating arrangement also allows the Deaf learners to see each other, which is crucial for class discussions and for seeing other learners’ contributions and questions.

Each computer, except for the server, runs Microsoft Windows 7. All computers have a copy of Microsoft Office 2007 and e-Learner Adult version 1.3.

4.3 Results

Through observation of and participation in the e-Learner classes we uncovered various themes, discussed below.

The teaching of lessons varied. Although the lessons in the e-Learner manual had the same structure, the facilitator adapted the teaching method and lesson content to make them relevant for the Deaf learners. Teaching generally takes up a whole lesson, and the Deaf learners only get to perform the tasks in the next class session, which is the following week.

Images played an important role in teaching. The facilitator used a data projector to display open documents in either Microsoft Word or PowerPoint. Numerous times the facilitator pointed at the projected image of the computer application being used in the lesson, pointing out buttons, icons and lists to scroll through.

Teaching the Deaf learners is demanding and tiring for the facilitator. Only one copy of the e-Learner manual is used for the lessons, because the Deaf learners are text illiterate and unable to read the English text in the manual. The facilitator has to read and understand the instructions before signing them to the Deaf learners in SASL. In other instances, the facilitator has an assistant who voices the instructions to the facilitator, who then signs them to the Deaf learners.

To gain the attention of all the Deaf learners, the facilitator waves her hands in front of them. Because sign language is visual, this is necessary before she can explain a concept or give instructions. This distinguishes Deaf from hearing learners and is called divided attention. Hearing learners can simultaneously listen to instructions and look at their computer monitors without looking up. Deaf learners cannot watch the SASL signing and look at their computer screens at the same time; eye contact has to be gained before signing can begin.

Deaf learners use SASL as their principal language of communication, and it has its own structure and vocabulary. English users bring all the necessary vocabulary to the task of learning computer literacy skills. Deaf learners do not have this vocabulary to rely on, so they are learning English vocabulary and ICT skills at the same time. English vocabulary in computer literacy classes has to be broken down using synonyms, definitions or descriptions. For example, in one lesson we observed, the facilitator broke down the word “duplicate” into the phrase “make a copy”, after which the Deaf learners associated “copy” with its respective sign in SASL.

We observed different work rates among the Deaf learners during our class participation, as one would among hearing learners. The difference is that Deaf learners have the additional burden of having to stop and look at the facilitator for instruction. Everyone has to be interrupted to see a signed instruction, which disrupts the whole class and the learners’ work rate. The faster learners usually finished their tasks earlier and often spent time waiting for the slower learners to catch up. As a result, the pace of learning was dictated by the slower learners, since the facilitator was forced to teach more slowly to accommodate them. This puts pressure on the slower learners and makes the class boring and at times frustrating for the faster learners. The faster learners were the same three Deaf learners, identified earlier, who had acquired EqualSkills certificates.

We also observed the Deaf learners using various mobile phones, ranging from feature phones to smartphones. One learner had two smartphones: an HTC running Android OS for work and a BlackBerry for personal use. Two other participants had Nokia feature phones with QWERTY keyboards. These devices are capable of playing video and running instant messaging applications such as WhatsApp. In addition, the Deaf learners do not have computers or laptops at home, and at work they use old computers, hence their limited experience.

4.4 Analysis and Design Implications

We use a distributed cognition approach (Rogers et al., 2011, p.91) to understand the e-Learner class environment. Distributed cognition studies cognitive phenomena across individuals, artefacts, and internal and external representations in a cognitive system (Hutchins, 2000), which entails:

• Interactions among people (communication pathways).

• The artefacts they use.

• The environment they work in.

We define our cognitive system as the e-Learner class, where the top-level goal is to teach computer skills to Deaf learners. In this cognitive system we describe the interactions in terms of how information is propagated through different media. Information is represented and re-represented as it moves across individuals and through an array of artefacts (e.g. books, spoken word, sign language) during activities (Rogers et al., 2011, p.92).


Propagation of representational states describes how information is transformed across different media. Media here refers to external artefacts (paper notes, maps, drawings) or internal representations (human memory). Transformations can be socially mediated (passing a message verbally or in sign language), technologically mediated (pressing a key on a computer) or mentally mediated (reading the time on a clock) (Rogers et al., 2011, p.303). Using these terms, we represent the computer literacy class as a cognitive system, showing the propagation of representational states for the teaching methods.

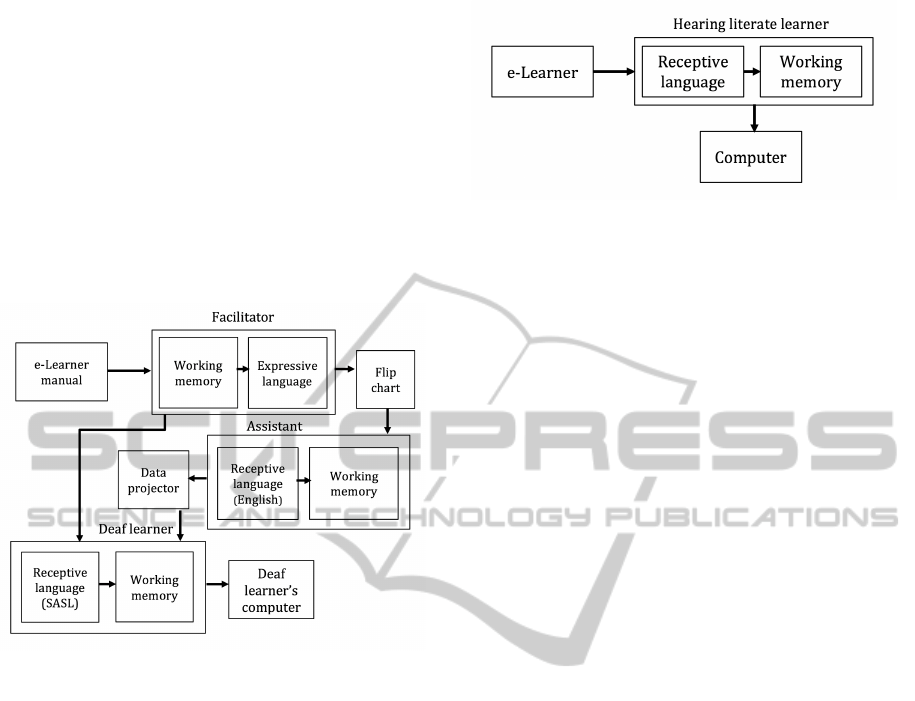

Figure 1: A diagram showing the propagation of representational states for the teaching method used to deliver a single instruction to the Deaf learners. The boxes show the different representational states for different media and the arrows show the transformations.

Representing the teaching method in a diagram (see Figure 1) reveals that teaching Deaf learners is far from simple and involves a set of complex steps. Instructions are propagated through multiple representational states: verbally when the facilitator interacts with the assistant, visually when she interacts with the Deaf learners, and mentally in both cases.

Compared with Figure 2, where the representational states are fewer, our proposed system attempts to bring the Deaf learners closer to how hearing literate people learn. The design implication is to reduce the number of steps involved in delivering instructions to the Deaf learners. One solution is to deliver the lesson instructions as SASL videos and images, removing approximately four representational states. These SASL videos are pre-recorded and contain the lesson instructions from the e-Learner manual, eliminating the need for the assistant and the facilitator to deliver the lesson instructions. In addition, because of limited text literacy amongst the Deaf learners, they need SASL instructions, which allow them to learn in their preferred language.

Figure 2: The diagram shows the propagation of representational states for a hearing literate person. The boxes show the different representational states for different media and the arrows show the transformations.

Mobile phones provide an ideal way to deliver the lesson content, and most Deaf people use a mobile phone to communicate with other Deaf and hearing people (Chininthorn et al., 2012). This solution should work on off-the-shelf mobile phones, like the previous SignSupport solution. Deaf learners could therefore carry SignSupport home on their cellphones and, wherever they can get access to a computer, teach themselves. In addition, the socio-economic situation of the Deaf learners means they cannot afford high data costs. This rules out using data networks to transfer the lesson content, or hosting the videos externally and streaming them to the mobile phones.

Another design consideration is to organise and structure the SASL videos to represent the logical flow of the lessons in the e-Learner. This involves designing a data structure that effectively structures the course and lessons to reflect the e-Learner manual. The design is discussed in the following section.

5 DESIGN AND IMPLEMENTATION

In this section we discuss the technical details of the design of the data structure, the design of the content authoring tool and the user interface of the SignSupport mobile prototype. This paper presents the first version of SignSupport, which has been through its first iteration.

5.1 Structuring Lesson Content

For SASL videos and images to be meaningful, they need to be organized logically to reflect the e-Learner lesson structure (see Section 4.1). The analysis of the e-Learner classes revealed the large number of steps involved in delivering lesson content to Deaf learners. To model the structure of the e-Learner curriculum we chose Extensible Markup Language (XML) (W3C, 2014) as our data format. XML provided the necessary flexibility to represent the curriculum in its hierarchical structure. To manage the lesson resources (SASL videos and images) we chose to use Uniform Resource Locators (URLs) that point to the location where each resource is stored. XML represents data as human-readable text between opening and closing tags. To represent the e-Learner structure we followed the procedure below:

1. Identify the course, unit and lesson structure.
2. Represent the course, unit and lesson using the tags course, unit and lesson.
3. Provide the course, unit and lesson with unique identifiers.
4. Identify the sections of the lesson and provide them with tags.
5. Identify which lesson sections are to be represented using video tags.
6. Identify which lesson sections need images to accompany the videos and represent them using image tags.
7. Identify how to manage lesson assets (images and videos).

Using the above procedure we abstracted the e-Learner hierarchical structure, representing the course, unit and lesson with the unique identifiers course_id, unit_id and lesson_id respectively. Titles for the course, unit and lesson were represented using course_title, unit_title and lesson_title respectively. Lessons had an extra XML tag, lesson_type, that identified the category (Orientation, Essential or Supplementary) in which the lesson was housed. The resulting XML structure is shown in Figure 3.

Figure 3: The XML structure of the course. A Course element contains Unit elements, each of which contains one or more Lesson elements.
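To make the hierarchy concrete, the following Python sketch parses a small course document with the standard library's xml.etree.ElementTree, using the tag names given in Section 5.1. The exact nesting, the video and image element names, the file paths and the sample lesson titles are assumptions made for illustration; the published SignSupport specification may differ, and the prototype itself relies on the built-in XML parsers of its mobile platform rather than Python.

```python
import xml.etree.ElementTree as ET

# Hypothetical course file following the course > unit > lesson hierarchy.
# The <video>/<image> elements and their paths are illustrative assumptions.
COURSE_XML = """
<course>
  <course_id>c1</course_id>
  <course_title>e-Learner Adult</course_title>
  <unit>
    <unit_id>u1</unit_id>
    <unit_title>IT Basics</unit_title>
    <lesson>
      <lesson_id>l1</lesson_id>
      <lesson_title>Using the mouse</lesson_title>
      <lesson_type>Essential</lesson_type>
      <video>video/l1_task_description.mp4</video>
      <image>shared_images/mouse.png</image>
    </lesson>
  </unit>
  <unit>
    <unit_id>u2</unit_id>
    <unit_title>Files and folders</unit_title>
    <lesson>
      <lesson_id>l2</lesson_id>
      <lesson_title>Creating a folder</lesson_title>
      <lesson_type>Orientation</lesson_type>
      <video>video/l2_step1.mp4</video>
    </lesson>
  </unit>
</course>
"""


def list_lessons(xml_text):
    """Return (unit_title, lesson_id, lesson_type, video paths) for every lesson."""
    root = ET.fromstring(xml_text.strip())
    lessons = []
    for unit in root.findall("unit"):
        unit_title = unit.findtext("unit_title")
        for lesson in unit.findall("lesson"):
            # Keep videos in document order, which is the presentation order.
            videos = [v.text for v in lesson.findall("video")]
            lessons.append((unit_title,
                            lesson.findtext("lesson_id"),
                            lesson.findtext("lesson_type"),
                            videos))
    return lessons


for entry in list_lessons(COURSE_XML):
    print(entry)
```

Storing only resource locations in the XML keeps the lesson files small and human readable, while the videos themselves live elsewhere on the device.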

The e-Learner curriculum changes infrequently, making it beneficial to store the resources locally on the device. This effectively makes the system independent of data networks for updating the lesson content. To manage the lesson assets and the XML lesson files effectively, we decided to store them all in a folder structure. The root folder was named SignSupport, and it contained three subfolders:

• xml – This folder stored the XML data files of the lessons.
• video – This folder stored all the SASL video files.
• shared_images – This folder stored images that are used in the lessons.
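This folder layout lends itself to resolving the resource locations referenced in the lesson XML against the SignSupport root. The Python sketch below uses pathlib; the root location /sdcard/SignSupport, the asset-kind keys and the resolve_asset helper are hypothetical, since the paper only states that the root folder is named SignSupport and lists its three subfolders.

```python
from pathlib import PurePosixPath

# Hypothetical root location; only the folder name SignSupport comes from the text.
ROOT = PurePosixPath("/sdcard/SignSupport")

# Hypothetical mapping from asset kind to the three subfolders described above.
SUBFOLDERS = {
    "xml": "xml",              # lesson XML data files
    "video": "video",          # SASL video files
    "image": "shared_images",  # images used in the lessons
}


def resolve_asset(kind, filename):
    """Map an asset kind and file name to its location under the SignSupport root."""
    if kind not in SUBFOLDERS:
        raise ValueError(f"unknown asset kind: {kind!r}")
    # Keep only the final file name so a reference cannot point outside its subfolder.
    name = PurePosixPath(filename).name
    return ROOT / SUBFOLDERS[kind] / name


print(resolve_asset("video", "l1_step1.mp4"))
print(resolve_asset("image", "mouse.png"))
```

Because every asset kind has a fixed subfolder, the lesson files only need to store file names, and the whole SignSupport folder can be copied to a phone as a unit.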

This XML data structure is parsed using the built-in XML parsers available to the mobile prototype (see Section 5.3).

5.2 Content Authoring Tool

We needed to design a content authoring tool that would structure the lesson content. It would allow domain specialists such as the facilitator to create content for their usage context without the need for a programmer. Mutemwa and Tucker identified this dependence on a programmer as a bottleneck in their SignSupport design (Mutemwa and Tucker, 2010), limiting it to one scenario within the communication context.

The design was modelled on the structure of the e-learner manual (see Section 4.1). Lesson resources (videos and images) are uploaded to the authoring tool, displayed in panels on the right, and added by drag-and-drop to the placeholder squares that represent the lesson description, task description and task steps, as shown in Figure 4. Once a lesson has been created and its resources added, it can be previewed in sequential order from the beginning. The lesson is then added to a unit and a course before the course is saved and exported. Exporting the course generates the XML data structure that is consumed by the mobile prototype (see Section 5.3).
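The placeholder squares and the sequential preview can be pictured with a small in-memory model. This is a hypothetical sketch (Slot, AuthoredTask and AuthoredLesson are illustrative names, not classes from the authoring tool), assuming that a lesson is a lesson description followed by tasks, each with a task description and ordered task steps, and that previewing walks them in that order.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of one placeholder square in the authoring tool.
final class Slot {
    enum Kind { LESSON_DESCRIPTION, TASK_DESCRIPTION, TASK_STEP }

    final Kind kind;
    String video; // SASL video dropped onto the square; null until assigned
    String image; // optional image shown beneath the video

    Slot(Kind kind) {
        this.kind = kind;
    }
}

// A task: one description square followed by its step squares.
final class AuthoredTask {
    final Slot description = new Slot(Slot.Kind.TASK_DESCRIPTION);
    final List<Slot> steps = new ArrayList<>();

    Slot addStep() {
        Slot s = new Slot(Slot.Kind.TASK_STEP);
        steps.add(s);
        return s;
    }
}

final class AuthoredLesson {
    final Slot description = new Slot(Slot.Kind.LESSON_DESCRIPTION);
    final List<AuthoredTask> tasks = new ArrayList<>();

    AuthoredTask addTask() {
        AuthoredTask t = new AuthoredTask();
        tasks.add(t);
        return t;
    }

    // Preview order: lesson description, then each task description followed by its steps.
    List<Slot> preview() {
        List<Slot> order = new ArrayList<>();
        order.add(description);
        for (AuthoredTask t : tasks) {
            order.add(t.description);
            order.addAll(t.steps);
        }
        return order;
    }

    // Hypothetical pre-export check: squares that still lack a video.
    List<Slot> missingVideos() {
        List<Slot> missing = new ArrayList<>();
        for (Slot s : preview()) {
            if (s.video == null) {
                missing.add(s);
            }
        }
        return missing;
    }
}
```

The missingVideos check is an added assumption: a tool like this might use it to warn the facilitator before export, but the paper does not say whether the authoring tool validates lessons.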

Figure 4: The content authoring tool interface that allows

the facilitator to create lessons for Deaf learners.

The authoring tool was implemented with JavaFX (Oracle, 2014), a user interface framework, using the NetBeans 7.4 integrated development environment (IDE). It was tested on both Microsoft Windows 7 and Apple Mac OS X 10.9.5 to check compatibility.

5.3 Mobile Prototype

We chose to investigate whether mobile devices were

a viable means to support Deaf learners because of

their ubiquitous nature.

The mobile phones we used had 25 gigabytes (GB) of

internal storage space, with a touch sensitive display

of size 4.8 inches and a resolution of 1280 by 720 pix-

els. The phones run Android OS 4.3 (Jelly bean). The

higher resolution screen was considerably larger than

the display used in the previous version of SignSup-

port (Motlhabi et al., 2013). Our version of SignSup-

port was only similar in video playback interfaces but

differed in content structure and context of use. The

extra space allowed for an image to be inserted below

the video frame in addition to the navigation buttons

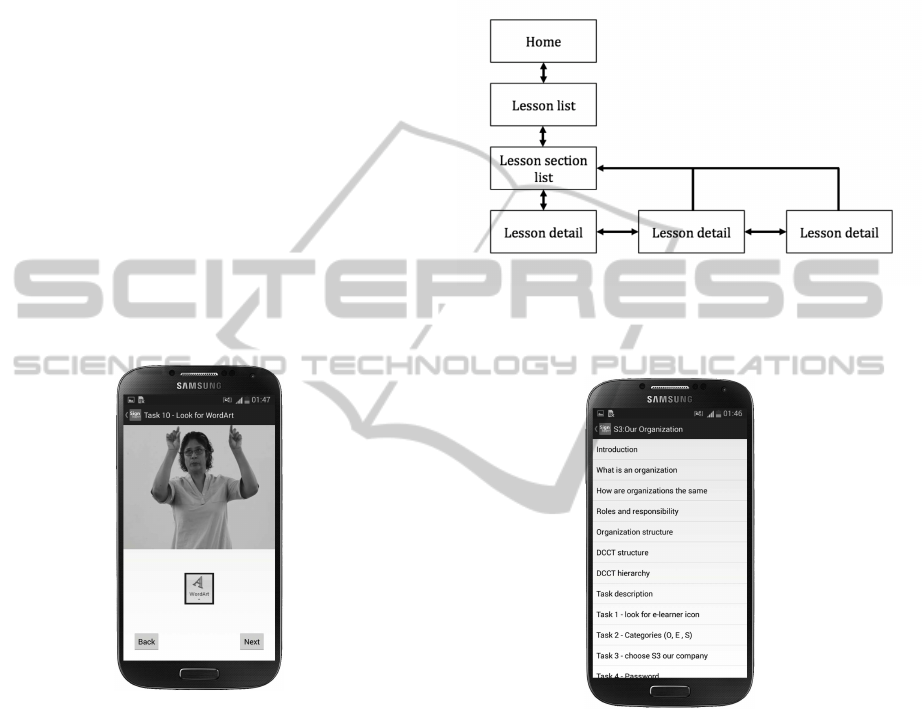

(see Figure 5).

Figure 5: The SignSupport interface, with an image of an icon beneath the video and a video caption in the action bar indicating the instruction the learner is currently working on.
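To put the display figures in perspective, here is a worked calculation. It assumes portrait orientation, that the 640 x 480 videos (the frame size reported in Section 6) are scaled to the full 720-pixel screen width, and it ignores the system status bar; these are assumptions, not measurements from the prototype.

$$
\text{pixel density} = \frac{\sqrt{1280^2 + 720^2}}{4.8\ \text{in}} = \frac{\sqrt{2\,156\,800}}{4.8} \approx \frac{1468.6}{4.8} \approx 306\ \text{pixels per inch}
$$

$$
h_{\text{video}} = 720 \times \frac{480}{640} = 540\ \text{px}, \qquad 1280 - 540 = 740\ \text{px}
$$

Under these assumptions, roughly 740 pixels of height remain for the action bar, the image and the navigation buttons, which is why an image can fit beneath the video frame.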

Navigating the mobile prototype interfaces is done in two ways: linear and hierarchical navigation. To navigate linearly, a Deaf learner uses the next and back buttons on the lesson detail screen shown in Figure 5 to move from one video instruction to the next. Linear navigation steps through the XML structure (see Section 5.1) generated by the content authoring tool (see Section 5.2). Hierarchical navigation moves from the home screen down to the lesson detail screen and back, as shown in Figure 6. To move down to the lesson detail screen, the Deaf learner starts on the home screen and selects a lesson from the list of lessons (see Figure 7) by tapping the list item with the lesson name. Once a lesson is selected, the learner is presented with a list of lesson sections and taps a list item to reveal the screen shown in Figure 5, which contains the SASL video instructions. For a better user experience, the hierarchy was kept to at most two levels below the home screen.
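The two navigation modes can be modelled as a back stack of screens (hierarchical) plus an index into the current section's videos (linear). The sketch below is hypothetical: LessonNavigator and its methods are illustrative names, and on Android the hierarchy would normally be handled by activities or fragments rather than an explicit stack.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

// Hypothetical sketch of SignSupport's two navigation modes; not the prototype's code.
final class LessonNavigator {
    // Screens by depth: HOME (0), SECTION_LIST (1), LESSON_DETAIL (2).
    enum Screen { HOME, SECTION_LIST, LESSON_DETAIL }

    private final Deque<Screen> backStack = new ArrayDeque<>();
    private Screen current = Screen.HOME;
    private List<String> videos = List.of(); // SASL videos of the open lesson section
    private int index;                       // position for linear navigation

    Screen screen() { return current; }

    String currentVideo() { return videos.get(index); }

    // Hierarchical navigation, one level down: HOME -> SECTION_LIST.
    void selectLesson() {
        if (current == Screen.HOME) push(Screen.SECTION_LIST);
    }

    // Hierarchical navigation, one level down: SECTION_LIST -> LESSON_DETAIL.
    void selectSection(List<String> sectionVideos) {
        if (current != Screen.SECTION_LIST || sectionVideos.isEmpty()) return;
        videos = List.copyOf(sectionVideos);
        index = 0;
        push(Screen.LESSON_DETAIL);
    }

    // Hierarchical navigation, one level up (e.g. back to the section list).
    void up() {
        if (!backStack.isEmpty()) current = backStack.pop();
    }

    // Linear navigation on the detail screen; returns false at either end.
    boolean next() {
        if (current != Screen.LESSON_DETAIL || index + 1 >= videos.size()) return false;
        index++;
        return true;
    }

    boolean back() {
        if (current != Screen.LESSON_DETAIL || index == 0) return false;
        index--;
        return true;
    }

    private void push(Screen s) {
        backStack.push(current);
        current = s;
    }
}
```

Separating up() from back() reflects the distinction the interface draws between returning to the list of lesson sections and stepping back one video instruction.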

Figure 6: User interface navigation on the mobile prototype

of SignSupport. The boxes represent the different screens

the user interacts with and the arrows indicate the direction

of navigation between the screens.

Figure 7: User interface navigation on the mobile prototype

of SignSupport. The interface shows the lesson sections in

a scrollable list. The Deaf learner taps on the desired list

item to reveal the screen with the SASL video instructions.

In the backend of the mobile prototype, the XML data format designed in Section 5.1 was parsed using the Android XmlPullParser interface. The XML files stored in the SignSupport folder in the phone's internal memory were modelled using an ArrayList data structure. Navigation used clickable list widgets and buttons on the interface, and learners scrolled through the lists of lessons and lesson sections (see Figure 7) using swipe gestures.
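The prototype used Android's XmlPullParser, which is not part of the desktop JDK. To keep this sketch runnable, it uses the JDK's StAX pull parser (javax.xml.stream.XMLStreamReader), whose event loop is close to XmlPullParser's: next(), START_TAG and nextText() on Android correspond to next(), START_ELEMENT and getElementText() here. The element names lesson, lesson_id, lesson_title and lesson_type are assumptions based on the tags described in Section 5.1, and LessonEntry and LessonXmlReader are hypothetical names.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

// One entry per <lesson> element.
final class LessonEntry {
    String id, title, type;
}

final class LessonXmlReader {
    // Pull-parses the XML and collects lessons into an ArrayList in document order.
    static List<LessonEntry> read(String xml) {
        List<LessonEntry> lessons = new ArrayList<>();
        try {
            XMLStreamReader r = XMLInputFactory.newInstance().createXMLStreamReader(new StringReader(xml));
            LessonEntry current = null;
            while (r.hasNext()) {
                int event = r.next();
                if (event == XMLStreamConstants.START_ELEMENT) {
                    String name = r.getLocalName();
                    if (name.equals("lesson")) {
                        current = new LessonEntry();
                    } else if (current != null) {
                        // getElementText() reads a text-only element and stops at its end tag.
                        switch (name) {
                            case "lesson_id": current.id = r.getElementText(); break;
                            case "lesson_title": current.title = r.getElementText(); break;
                            case "lesson_type": current.type = r.getElementText(); break;
                            default: break; // video and image references would be handled here
                        }
                    }
                } else if (event == XMLStreamConstants.END_ELEMENT && r.getLocalName().equals("lesson")) {
                    lessons.add(current);
                    current = null;
                }
            }
            r.close();
        } catch (XMLStreamException e) {
            throw new IllegalArgumentException("Malformed lesson XML", e);
        }
        return lessons;
    }
}
```

A pull parser suits a phone because it reads the file as a stream of events and never builds a full document tree in memory; only the resulting ArrayList of lessons is kept.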

The mobile prototype is designed to be used alongside a computer as a tutoring system. If further clarification is needed, the Deaf learner can query the facilitator if and when one is present. It

CSEDU 2015 - 7th International Conference on Computer Supported Education

6 CONTENT CREATION

We recorded the SASL videos with the help of a SASL interpreter. Recording was done in a room with sufficient lighting, with the interpreter standing in front of a camera mounted on a tripod. The interpreter wore neutral-coloured clothing that contrasted with the background colour. The interpreter we chose met the following criteria:

1. A registered SASL interpreter.

2. A background in education.

Before recording the videos, we created a conversation script detailing, in bullet-point form, the instructions for the e-learner lessons. We chose two lessons from the e-learner manual and created conversation scripts for them. To generate each script, the original instructions of the lesson were first written down. The facilitator guided our abstraction of the lesson content by insisting on one task or a single explanation per bullet point. Compound instructions were broken down into single tasks and single explanations, and computer terminology was explained in further detail. In some cases, synonyms for complex terms were used instead; for example, the word “duplicate” was replaced with the phrase “copy and paste”, for which signs exist in SASL. This process was repeated until all the instructions had been simplified.

During recording, the instructions on the conversation script were voiced to the interpreter, who then signed them on camera until all the instructions on the script had been translated into SASL. Signed instructions were separated by writing the instruction's number, according to its position on the script, on a whiteboard or paper and displaying it in front of the camera during continuous recording. The interpreter putting her hands down was the visual cue that the signing for that instruction had ended, which helped when editing the SASL videos.
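The numbered cards and the hands-down cue effectively give the editor a start and an end time for every instruction within one continuous recording. A hypothetical helper that turns such hand-noted cue times into per-instruction clips, and rejects cues that overlap, might look like this. ClipCutter and its parameters are illustrative; the paper's editing was done manually in Adobe Premiere Pro, not with code.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical edit-decision helper; cue times are noted by hand while
// scrubbing the continuous recording, not detected automatically.
final class ClipCutter {
    static final class Clip {
        final int instruction; // number shown on the whiteboard or paper
        final double in, out;  // seconds from the start of the recording

        Clip(int instruction, double in, double out) {
            this.instruction = instruction;
            this.in = in;
            this.out = out;
        }

        double length() { return out - in; }
    }

    // signStart[i]: when signing of instruction i+1 begins (after its number is shown).
    // handsDown[i]: when the interpreter lowers her hands at the end of that instruction.
    static List<Clip> cut(double[] signStart, double[] handsDown) {
        if (signStart.length != handsDown.length) {
            throw new IllegalArgumentException("Each instruction needs a start and an end cue");
        }
        List<Clip> clips = new ArrayList<>();
        for (int i = 0; i < signStart.length; i++) {
            if (handsDown[i] <= signStart[i]) {
                throw new IllegalArgumentException("Instruction " + (i + 1) + " ends before it starts");
            }
            if (i + 1 < signStart.length && handsDown[i] > signStart[i + 1]) {
                throw new IllegalArgumentException("Instruction " + (i + 1) + " overlaps the next one");
            }
            clips.add(new Clip(i + 1, signStart[i], handsDown[i]));
        }
        return clips;
    }
}
```

Numbering each clip by its position on the script keeps the exported videos aligned with the bullet points that the authoring tool later attaches them to.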

Before editing, we converted the original video files from MPEG-2 (.MPG extension) to MOV using QuickTime Pro, because MPEG-2 files were cumbersome to work with. The recorded SASL videos were then edited in Adobe Premiere Pro CS6, where the audio channel was removed to reduce video file size. The resulting videos were encoded using the H.264 video codec with a frame size of 640 x 480 pixels and a frame rate of 25 frames per second (fps), as per the ITU requirements (Hellstrom, 1998). The final videos used MPEG-4 video compression, which is compatible with Android OS and is the official video format for the platform. The resulting video clips are short: the longest is 48 seconds, which does not impose a high cognitive workload on the learner.
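The same export settings could also be reproduced with a scripted tool. The sketch below swaps in ffmpeg with the libx264 encoder in place of Premiere Pro and QuickTime Pro; this is an assumption, not the authors' workflow. It only builds the argument list (dropping audio, H.264 video, a 640 x 480 frame and 25 fps in an .mp4 container), and running it requires an ffmpeg build with libx264 on the PATH. Because ffmpeg reads MPEG-2 input directly, the MOV conversion step would not be needed in such a pipeline. SaslEncode is a hypothetical name.

```java
import java.io.IOException;
import java.util.List;

// Hypothetical scripted equivalent of the export settings described above.
final class SaslEncode {
    // Builds (but does not run) the ffmpeg command line.
    static List<String> command(String input, String output) {
        return List.of(
            "ffmpeg", "-i", input,
            "-an",                  // drop the audio track to reduce file size
            "-c:v", "libx264",      // H.264 video codec
            "-vf", "scale=640:480", // 640 x 480 frame size
            "-r", "25",             // 25 frames per second
            "-pix_fmt", "yuv420p",  // chroma format widely supported by mobile decoders
            output);                // e.g. instruction01.mp4
    }

    // Runs the command and returns ffmpeg's exit code; requires ffmpeg on the PATH.
    static int run(String input, String output) throws IOException, InterruptedException {
        Process p = new ProcessBuilder(command(input, output)).inheritIO().start();
        return p.waitFor();
    }
}
```

Scripting the encode would make it easy to re-export every clip identically if the SASL videos are later re-recorded or corrected.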

7 EVALUATION

This section analyses the results of our user evaluation of the mobile prototype. We observed the Deaf learners to uncover design flaws and any other notable uses of the prototype.

7.1 Procedure

Five DCCT staff members participated in the evaluation; they were the same Deaf learners described in Section 4. The facilitator was present to interpret on our behalf. Each Deaf participant was given a smartphone containing the prototype. After a short briefing about the project, the participants were first trained to use the system, then given a 20-minute practice lesson to get a feel for the prototype, followed by a second, 30-minute lesson. In the first lesson, the learners were required to pair graphics of special keyboard keys (e.g. the Space bar and Shift key) with images representing their functions. The second lesson required the learners to identify and name different storage media, and then to identify which files, represented by icons, could fit onto the storage media without exceeding their capacities. Both lessons were provided as Microsoft Excel templates. Afterwards, the Deaf participants were invited to a focus group discussion to give their opinions and feedback on the prototype. The session was video recorded, and photographs were taken with the help of an assistant.

Questionnaires were not used to elicit feedback on the system. Motlhabi found that answering questionnaires was a problem for text semi-literate Deaf participants during an evaluation (Motlhabi, 2013): the two SASL interpreters available to interpret the questionnaire questions for the eight Deaf participants caused a bottleneck, as some participants had to wait for the interpreters to finish helping others. In addition, employing more SASL interpreters was not feasible because they are rare and expensive in South Africa.

7.2 Results and Analysis

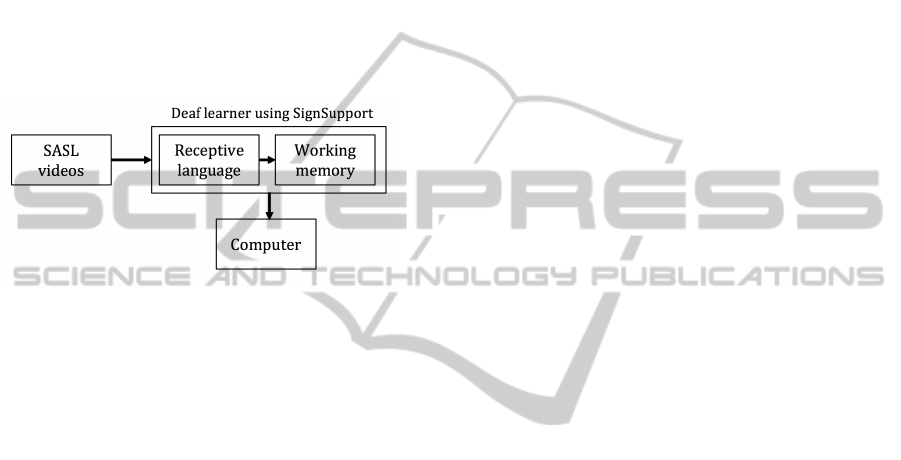

SignSupport reduced the number of representational states involved in delivering a single instruction by four, eliminating the facilitator, flip chart, data projector and assistant states; the resulting process is shown in Figure 8. With fewer representational states, the Deaf participants' experience moved closer to that of hearing literate users.

The participants had little difficulty navigating the user interface, although two participants had difficulty locating the back button that returned to the list of lesson sections, even after training. All the participants found it easy to re-watch the SASL videos: a tap on the video frame brought up the video controls to replay the video. They also found it easy to move through the lesson content using the back and next buttons, and to navigate between the list of lesson sections and the lesson detail screen containing the SASL videos.

Figure 8: The representational states of a single instruction being delivered to a Deaf learner using SignSupport. The reduced states make the process simpler for Deaf learners and promote individual work.

All the participants noted that some signs used in the videos were different from theirs, indicating dialectal differences in the signs used in the SASL videos. Despite these differences, the stronger participants were able to understand the context of the instructions and continue with the tasks. We observed 18 instances in which stronger participants helped weaker participants understand the instructions.

During testing, we also observed that the Deaf participants worked individually at their own pace, and that the facilitator helped participants individually in 21 instances. Two of these 21 instances of assistance were initiated by the Deaf participants and the other 19 by the facilitator. In 9 of the 21 instances, the facilitator prompted a Deaf participant to continue with the task, click a button or replay a video; in the other 12, the facilitator explained unclear instructions in SASL. The assistance did not disrupt the other participants working individually, and the facilitator's role changed from delivering the lesson content to providing support. Consequently, the facilitator's workload was reduced.
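The 21 instances of facilitator assistance are split in two independent ways: by who initiated them and by what the facilitator did. Tallying both makes it explicit that most help was facilitator-initiated and just over half involved re-explaining an instruction (the percentages are derived here from the reported counts, not reported in the study):

$$
\underbrace{2}_{\text{learner-initiated}} + \underbrace{19}_{\text{facilitator-initiated}} = 21 = \underbrace{9}_{\text{prompts}} + \underbrace{12}_{\text{explanations in SASL}}
$$

$$
\frac{19}{21} \approx 90.5\%, \qquad \frac{12}{21} \approx 57.1\%
$$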

Some Deaf participants noted a mismatch between the instructions and what they expected to see on the computer. The mismatch arose from unforeseen steps, such as entering the monthly password the software requires to access the lesson content. The facilitator reported that additional SASL videos with contextual information and discourse markers (Sharpling, 2014) were needed to cue the Deaf participants to progress to the next instruction or to perform a task.

8 CONCLUSION AND FUTURE WORK

Deaf people with low text literacy stand to benefit from SignSupport, which helps them learn computer literacy skills in their preferred language, South African Sign Language (SASL). This paper has discussed the challenges that text-illiterate Deaf people face when learning computer skills while depending on a facilitator using a single e-learner manual. The distributed cognition approach revealed the cognitive load and the number of representational steps involved in delivering a single instruction to Deaf learners. The prototype, implemented on commercially available mobile devices, has shown promise for supporting Deaf users learning computer literacy skills by presenting content as SASL videos. We observed that the prototype allows Deaf users to work individually at their own pace, with or without the facilitator's assistance, thereby reducing the facilitator's workload. We also described the design of an XML data format that organises the SASL videos and images logically to represent each lesson. The findings from this work are being used to improve the design of the prototype and to verify the SASL videos in order to address instructional inconsistencies.

Future work could investigate whether SignSupport effectively increases computer literacy skills among Deaf people. This would involve a pedagogical study with Deaf learners who have pre-existing basic computer knowledge.

ACKNOWLEDGEMENTS

We thank the Deaf Community of Cape Town for their collaboration. Thanks to Computer 4 Kids for allowing us to use the e-learner manual and resources in the project. We also thank Sifiso Duma and Marshalan Reddy for participating in the project.

REFERENCES

Aarons, D. and Reynolds, L. (2003). South African Sign Language: Changing policies and practice. Many ways to be deaf: International variation in deaf communities, page 194.


Blake, E., Glaser, M., and Freudenthal, A. (2014). Teaching design for development in computer science. Interactions, 21(2):54–59.

Blake, E., Tucker, W., Glaser, M., and Freudenthal, A. (2011). Case study 11.1: Deaf telephony: Community-based co-design. In Rogers, Y., Sharp, H., and Preece, J., editors, Interaction Design: Beyond Human-Computer Interaction, pages 412–413. Wiley, 3rd edition.

Calandro, E., Gillwald, A., and Rademan, B. (2014). SA broadband quality drops but prices remain high. RIA Policy Brief, (6):1–5.

Cavender, A., Ladner, R., and Riskin, E. (2006). MobileASL: Intelligibility of sign language video as constrained by mobile phone technology. In Proceedings of the 8th International ACM SIGACCESS Conference on Computers and Accessibility, pages 71–78.

Chininthorn, P., Glaser, M., Freudenthal, A., and Tucker, W. D. (2012). Mobile communication tools for a South African deaf patient in a pharmacy context. Information Society Technologies-Africa (IST-Africa). Dar es Salaam, Tanzania: IIMC International Information Management Corporation, pages 1–8.

Debevc, M., Povalej, P., Verlic, M., and Stjepanovic, Z. (2007). Exploring usability and accessibility of an e-learning system for improving computer literacy. In International Conference in Information Technology and Accessibility, Hammamet, Tunisia.

Debevc, M., Zorič-Venuti, M., and Peljhan, Ž. (2003). E-learning material planning and preparation. Technical Report May, Maribor, Slovenia.

Denton, D. M., Association, B. D., and Others (1976). The philosophy of total communication. British Deaf Association.

Drigas, A. S., Kouremenos, D., and Paraskevi, A. (2005). An e-learning management system for the deaf people department of applied technologies. WSEAS Transactions on Advances in Engineering Education, 2(1):20–24.

e-Learner (2013). e-Learner - a modular course of progressive ICT skills. http://www.e-learner.mobi/. Accessed: 2013-11-25.

EqualSkills (2014). What is EqualSkills. http://www.equalskills.com/. Accessed: 2014-09-12.

Glaser, M. and Lorenzo, T. (2007). Developing literacy with deaf adults. In Disability and Social Change: A South African Context, pages 192–205.

Glaser, M. and Tucker, W. D. (2004). Telecommunications bridging between deaf and hearing users in South Africa. Proc. CVHI 2004.

Grosjean, F. (1992). The bilingual and the bicultural person in the hearing and in the deaf world. Sign Language Studies, 1077(1):307–320.

Hellstrom, G. (1998). Draft application profile: Sign language and lip-reading real time conversation application of low bitrate video communication.

Hutchins, E. (2000). Distributed cognition. International Encyclopedia of the Social and Behavioral Sciences, pages 1–10.

Lane, H. L. (1993). The mask of benevolence: Disabling the deaf community. Vintage Books.

Motion, L. (2014). Leap Motion - 3D motion and gesture control for PC & Mac. https://www.leapmotion.com/product. Accessed: 2014-12-9.

MotionSavvy (2014). FAQ - MotionSavvy. http://www.motionsavvy.com/faq/. Accessed: 2014-12-9.

Motlhabi, M., Glaser, M., Parker, M., and Tucker, W. (2013). SignSupport: A limited communication domain mobile aid for a Deaf patient at the pharmacy. In Southern African Telecommunication Networks and Applications Conference (SATNAC), Stellenbosch, South Africa.

Motlhabi, M. B. (2013). Usability and Content Verification of a Mobile Tool to help a Deaf person with Pharmaceutical Instruction. Masters dissertation, University of the Western Cape.

Mutemwa, M. and Tucker, W. D. (2010). A mobile deaf-to-hearing communication aid for medical diagnosis. In Southern African Telecommunication Networks and Applications Conference (SATNAC), pages 379–384, Stellenbosch, South Africa.

Oracle (2014). Client technologies: Java Platform, Standard Edition (Java SE) 8 release 8. http://docs.oracle.com/javase/8/javase-clienttechnologies.htm. Accessed: 2014-12-11.

Penn, C. (1990). How do you sign 'apartheid'? The politics of South African Sign Language. Language Problems and Language Planning, 14(2):91–103.

Prinz, P. M. and Strong, M. (1998). ASL proficiency and English literacy within a bilingual deaf education model of instruction. Topics in Language Disorders, 18(4):47.

Rogers, Y., Sharp, H., and Preece, J. (2011). Interaction Design: Beyond Human-Computer Interaction. John Wiley and Sons, 3rd edition.

Sharpling, G. (2014). Discourse markers. http://www2.warwick.ac.uk/fac/soc/al/globalpad/openhouse/academicenglishskills/grammar/discourse. Accessed: 2014-08-13.

Theofanos, M. F. and Redish, J. G. (2003). Bridging the gap: Between accessibility and usability. Interactions, 10(6):36–51.

W3C (2014). Extensible Markup Language (XML) 1.0 (Fifth Edition). http://www.w3.org/TR/REC-xml/. Accessed: 2014-12-07.

Watson, L. M. (1999). Literacy and deafness: The challenge continues. Deafness and Education International, 1(2):96–107.
