Comparing Real and Virtual Object Manipulation by Physiological Signals Analysis: A First Study

Mohammad Ali Mirzaei¹, Jean-Rémy Chardonnet² and Frédéric Merienne²

¹ Instrument Technology, Atlas project, Embedded and FPGA team, Highview House, Station Road, Edgware, U.K.

² LISPEN EA 7515, Arts et Métiers, UBFC, HESAM, Institut Image, 2 rue Thomas Dumorey, Chalon-sur-Saône, France

Keywords: Virtual Reality, Object Manipulation, EMG, CAVE.

Abstract: Virtual reality aims at reproducing reality and simulating actions such as object manipulation tasks. Despite abundant past research on designing 3D interaction devices and methods to achieve close-to-real manipulation in virtual environments, strong differences remain between real and virtual object manipulation. Past work comparing real and virtual manipulation focused mainly on user performance. In this paper, we propose to also use physiological signals, namely electromyography (EMG), to better characterize these differences. A first experiment featuring a simple pick-and-place task on a real setup and in a CAVE system showed that the participants' muscular activity has a clearly different spectrum in the virtual environment than in reality.

1 INTRODUCTION

Among interaction tasks, object manipulation has attracted much attention, especially in virtual reality (VR). Object manipulation depends on environmental conditions such as the lighting, the presence of other objects and their location in the scene. With the rapid development of VR over the last few years, reproducing high-fidelity simulations in VR has become a crucial issue. For instance, with the emergence of Industry 4.0, simulations of manual tasks such as pick-and-place, manufacturing and handling operations are more and more frequent. These manual tasks need a precise evaluation to be validated (Fumihito and Suguru, 2012).

When it comes to simulation, VR requires technologies and methods that allow users to interact with the virtual environment. Strong differences usually exist between object manipulation in virtual and real environments because of missing sensory feedback such as gravity, roughness, pressure or temperature. A large body of work has addressed this issue (Argelaguet and Andujar, 2013). Although natural interfaces and interactions have made great progress over the past few years (Bowman et al., 2012), these techniques are still not fully mature (Mirzaei et al., 2013; Alibay et al., 2017). Newly commercialized VR headsets show that the most popular interaction interface is still based on ray casting. These devices allow easy interaction and avoid occlusion issues.

In this paper, we propose to compare real and virtual object manipulation. We compare not only task performance but also the physiological signals derived from this activity, in order to better characterize the differences between reality and VR. As a first study, we consider a very simple yet common manual task, namely pick-and-place. We deliberately leave aside more complex manipulation tasks, as they require much more dexterity, especially from users who are not familiar with VR, and therefore many more parameters to monitor.

2 RELATED WORK

One strong issue in VR is making users feel present in the virtual environment (Witmer and Singer, 1998). Early work has shown that high-quality 3D graphics do not suffice to achieve a high sense of presence. Other sensory feedback, such as tactile or haptic feedback, was shown to enhance the level of interaction (Sturman et al., 1989; Talati et al., 2005). Haptic interfaces were quickly developed to provide the sense of weight, friction and touch (see for example early devices like (Burdea, 1996; Kim et al., 2002; Koyama et al., 2002) or more recent devices like (Ma and Ben-Tzvi, 2015; Choi and Follmer, 2016)), all competing in ingenuity to provide users with a realistic experience when manipulating virtual objects. The main drawbacks of such devices are that they must be worn, which makes them mostly intrusive, and that calibration is often needed before use. Léon et al. proposed a hands-on non-intrusive haptic manipulation device that allows a large variety of hand and finger configurations (Léon et al., 2016). This device is intended to simulate virtual manipulation tasks requiring high dexterity. However, its main issue is the small workspace imposed by the haptic arm, which may require repeated arm movements to move a virtual object over a large span.

Mirzaei, M., Chardonnet, J-R. and Merienne, F.

Comparing Real and Virtual Object Manipulation by Physiological Signals Analysis: A First Study.

DOI: 10.5220/0006924401080115

In Proceedings of the 2nd International Conference on Computer-Human Interaction Research and Applications (CHIRA 2018), pages 108-115

ISBN: 978-989-758-328-5

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Despite abundant proposals, most of these devices were not validated through comparison with object manipulation in real environments. Comparing real and virtual tasks is in fact not trivial, as many parameters come into play, such as the system latency, the perceived realism of the virtual scene, the available field of view, stereoscopy, and so on. Early work attempted to compare reality and virtual reality in training situations (Kenyon and Afenya, 1995). Though the context was slightly different from ours, results showed that users trained in the virtual world performed significantly better than untrained users, whereas users trained in the real world did not perform significantly better than untrained users. Graham and MacKenzie compared real and virtual pointing, showing that physical pointing leads to better results (Graham and MacKenzie, 1996). However, their study was conducted on a 2D interaction basis, whereas we address 3D interaction here. Later work in a different context showed better performance in virtual environments, and even better performance in mixed reality (also called dual reality) environments, than in real environments (Raber et al., 2015). However, the interaction task in virtual reality consisted of a 2D interaction on a touch screen, whereas interaction took place in 3D space in the real condition. Nevertheless, mixing reality and virtual reality seems an interesting alternative.

Several past studies attempted to reduce differences between reality and VR, as in (Kitamura et al., 2002), where a manipulation method was designed by adapting reality to virtual environment constraints. However, the proposed method requires constraining reality, which restricts practical usage. Chapoulie et al. proposed a framework to analyze finger-based 3D object manipulation considering several devices that were designed to be identical in both reality and VR (Chapoulie et al., 2015). Results on performance showed greater errors in virtual environments than in reality, with differences becoming more apparent as the complexity of the devices increased. They pointed out that reproducing real setups identically in virtual reality is very complex. They also compared wand-like and natural interfaces, showing a tendency toward better results with wand-like devices. Here we do not consider finger-based interaction. We rather stick to wand-like interfaces, since even newly commercialized VR headsets come with such devices.

In all past work reported above, comparisons between real and virtual environments were conducted by measuring user performance (such as the completion time, task errors, or cognitive load through subjective questionnaires, e.g., NASA-TLX (Hart and Staveland, 1988)). To the best of our knowledge, physiological parameters have hardly been considered. Electromyography (EMG) or electroencephalography (EEG) has mostly been used in human-computer interaction research as a means to interact with virtual content (for example (Tisančín et al., 2014; Lotte et al., 2013)), rather than as a tool to analyze interaction tasks. Through this first study, we contribute to exploring EMG as a tool to characterize differences between real and virtual object manipulation. We consider here a simple pick-and-place task.

3 EXPERIMENT DESIGN

An experiment was designed in both a real and a virtual environment to compare user performance, user perception and muscular activity during a simple object manipulation task.

3.1 Participants

Ten participants were recruited on a voluntary basis among university students to perform the experiment. No requirements regarding the level of knowledge in VR were specified when recruiting the participants. A briefing provided sufficient information about the test procedure, and we collected the participants' consent to perform the experiment. No participant received compensation for the experiment. None of them were familiar with VR, although all had already experienced it at least once. All were right-handed and none of them reported any health issues.

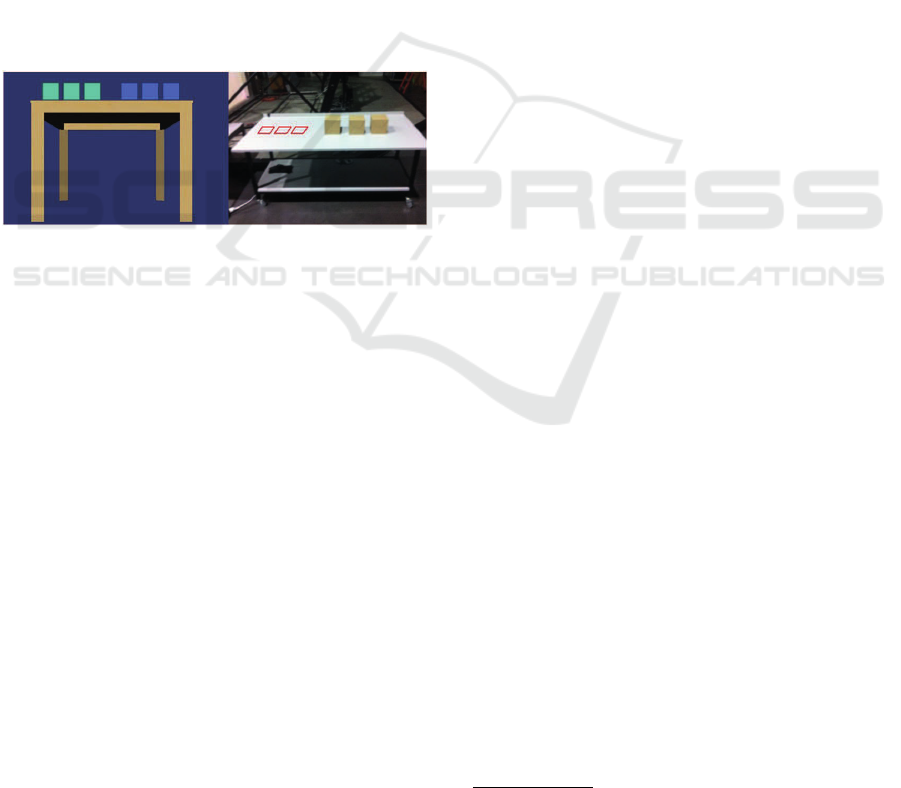

3.2 Setup

The real setup consisted of a 1.5 m-long table on which three wooden cubes (15 × 15 × 15 cm³, with an approximate weight of 1 kg) were arranged. Three square marks were drawn on the table to indicate where to place these cubes (Figure 1.b).

The setup was reproduced in a virtual environment (VE) at the same scale (Figure 1.a). We ensured scale consistency in the VE by superimposing a virtual cube on a real one in the virtual setup, then manually adjusting the size of the virtual cube. The virtual setup consisted of an immersive room allowing scale-one immersion, driven by in-house software based on OpenSceneGraph and VRPN (Taylor et al., 2001). The immersive room was a 3×3×3 four-sided CAVE system with a resolution of 1400×1050 px per side and stereoscopic vision, integrating an infrared-based tracking system to track the user's location in the CAVE and in the VE. The virtual cubes were manipulated using an ART Flystick 2 interaction device, which has several buttons and a trigger. The position of the Flystick was also tracked. We did not consider tactile or haptic feedback here, to avoid biases due to the intrusiveness of devices providing such feedback.

Figure 1: Object manipulation in (a, left) a virtual and (b, right) a real environment. The cubes are numbered 1 to 3 in both panels.

3.3 Tasks

As shown in Figure 1.a, the object manipulation task in the virtual environment consists of a simple pick-and-place task, that is, moving the three blue cubes into the three green ones using the Flystick device. A cube is selected by pointing the Flystick at its center and pressing the trigger once the Flystick is close enough to that center (less than 20 cm). The selected cube is then attached to the Flystick until the trigger is released. Using the trigger button thus simulates grasping. During displacement, the participant keeps the trigger pressed while positioning the selected cube. The blue cubes have to be carefully placed inside the marker cubes (the green cubes). All three blue cubes have to be placed inside the green marker cubes in order (the first cube inside the first marker, and so on).
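The selection and grasping rule described above can be sketched as a small per-frame state update. This is only an illustrative sketch under stated assumptions, not the software used in the study: the names `Cube`, `update_grasp` and `GRAB_RADIUS_M` are hypothetical, positions are assumed to be 3D coordinates in metres, the cube is assumed to follow the Flystick position exactly while the trigger is held, and a trigger that is still held is treated like a fresh press.

```python
import math
from dataclasses import dataclass

GRAB_RADIUS_M = 0.20  # selection threshold from the text: less than 20 cm


@dataclass
class Cube:
    position: tuple  # (x, y, z) of the cube center, in metres (assumed unit)


def update_grasp(cubes, held, flystick_pos, trigger_pressed):
    """Return the index of the cube held after this frame, or None.

    `held` is the index of the cube currently attached to the Flystick,
    or None when no cube is attached.
    """
    if not trigger_pressed:
        return None  # releasing the trigger leaves the cube where it is
    if held is None:
        # Trigger pressed with no cube attached: pick the nearest cube in reach.
        best, best_dist = None, GRAB_RADIUS_M
        for i, cube in enumerate(cubes):
            d = math.dist(cube.position, flystick_pos)
            if d < best_dist:
                best, best_dist = i, d
        held = best
    if held is not None:
        # The selected cube stays attached while the trigger is held.
        cubes[held].position = tuple(flystick_pos)
    return held
```

Calling `update_grasp` once per tracking frame with the latest Flystick position and trigger state is enough to reproduce the select, carry and release phases of the task.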

The same task is performed by hand in the real environment (Figure 1.b). The participant grabs the first cube, lifts it and places it inside the first red square marked on the left side of the table (Figure 1.b). The same task is repeated for each cube.

3.4 Muscular Activity

As the object manipulation task considered here is a simple pick-and-place task, the active limbs are mostly the arms, with a flexion/extension movement of the elbow. The hands are also involved in grasping the objects. We do not consider hand and finger movements here, as the aim is to study neither grasping configurations nor grasping stability. The biceps muscle was therefore considered an appropriate place to measure the muscular activity for this task, as the biceps is an important flexor of the forearm, which is needed to pick and place objects.

To measure the muscular activity, we use three-lead wireless BIOPAC surface electromyography (sEMG) sensors¹. The sensors are connected wirelessly to an amplifier and an acquisition stand, allowing wireless data logging by a PC. The EMG signal data are transmitted at a rate of 2 kHz, providing a high-resolution wireless EMG waveform at the receiver's output.

The positive and negative leads were placed at the origin and the end of the biceps muscle respectively, and the ground lead directly on the elbow. As the arm can be open, semi-open or closed, we ensured that the sensors were positioned stably and firmly on the arm, despite body temperature variation and movement tension, to obtain robust and meaningful signals.

3.5 Procedure

Each participant followed the procedure below:

1. Three BIOPAC sensors were placed on the participant's right arm as described in the previous section.

2. The participants were asked to lift the three cubes one by one from the right side of the real table, to move them along a semi-circular path to trigger flexion of the elbow, and to place them precisely on the three red square marks on the left side of the table.

3. An EMG signal was recorded during this task. This measurement was considered as a reference measurement.

4. The participants were then introduced to the CAVE system. They were briefly shown how to use the Flystick and were able to train for two minutes.

5. The participants had to pick the virtual blue cubes, to move them along a semi-circular path and to place them in the green cubes, trying to be as precise as possible.

6. An EMG signal was recorded during this task.

¹ https://www.biopac.com/application/emg-electromyography/

Throughout the experiment, the task completion time was recorded in both environments.

At the end of the experiment, the participants filled out a presence questionnaire (Witmer and Singer, 1998). We also asked the participants which they preferred between manipulation in the real and the virtual environments. The presence questionnaire and EMG are used here as psychological (subjective) and physiological (objective) measurements respectively.

The participants took less than 10 minutes to complete the whole experiment.

3.6 Object Manipulation Evaluation Criteria

We propose three criteria to evaluate object manipulation. The length of the movement, $\phi_1$, the amount of rotation, $\phi_2$, and the completion time, $t$, are considered as time-space features for the comparison between the real and the virtual environments (1).

$$\text{criteria} = \{\phi_1, \phi_2, t\} \tag{1}$$

3.6.1 Criterion 1: The Length of the Object Movement Trajectory ($\phi_1$)

The first feature is the length of the movement, which is a criterion to assess a given task (selection, movement and placement of a cube) in different environments. It can be used to compare manipulation mechanisms or to compare the same task in two different environments: the longer the movement, the harder the task. The movement in the real environment is considered as a semi-circular path with an average radius $\bar{r}$ and an average length $\pi\bar{r}$. Theoretically, the length of a given continuous curve in 3D space is calculated by (2).

$$\phi_1 = \int_a^b \sqrt{\left(\frac{dx}{dt}\right)^2 + \left(\frac{dy}{dt}\right)^2 + \left(\frac{dz}{dt}\right)^2}\, dt \tag{2}$$

For a curve given by sampled data with $\Delta t = 1$ s, (2) can be replaced by (3).

$$\phi_1 = \sum_{i=1}^{n-1} \|\Delta P_i\| \tag{3}$$

$$\|\Delta P_i\| = \sqrt{(x_{i+1} - x_i)^2 + (y_{i+1} - y_i)^2 + (z_{i+1} - z_i)^2}$$

where $n$ indicates the number of points along the path.
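As an illustration of (3), the discrete path length can be computed directly from the tracked positions. The sketch below is a minimal example assuming the trajectory is available as a list of (x, y, z) tuples; the function name `path_length` and the sampled semi-circle are hypothetical and are not taken from the study data.

```python
import math


def path_length(points):
    """Sum of Euclidean distances between consecutive 3D samples, as in (3)."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


# Hypothetical check: sample a semi-circle of radius r in the XZ plane.
r, n = 0.5, 1000
semi_circle = [
    (r * math.cos(math.pi * i / (n - 1)), 0.0, r * math.sin(math.pi * i / (n - 1)))
    for i in range(n)
]
length = path_length(semi_circle)  # approaches math.pi * r as n grows
```

For an ideal semi-circular movement the result converges to the reference length $\pi\bar{r}$, so any excess measured on a real trajectory reflects detours or corrective movements.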

3.6.2 Criterion 2: The Amount of Rotation ($\phi_2$)

The amount of rotation along the circular path in a plane is defined by (4), as specified in (Tai, 1986).

$$\nabla \times F = \oint_{0}^{2\pi} \vec{F}(x,y,z)\, d\vec{r} \tag{4}$$

where $F$ is the movement trajectory. Since the movement is semi-circular, (5) and (6) are used to calculate the amount of rotation for a continuous and a discrete function respectively.

$$\nabla \times F = \int_{0}^{\pi} \vec{F}(x,y,z)\, d\vec{r} \tag{5}$$

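$$\nabla \times F = -\sum_{i=1}^{n-1} \frac{(x_{i+1} - x_i)\cos(\theta)\cos(\varphi)}{\|\Delta P_i\|} - \sum_{i=1}^{n-1} \frac{(y_{i+1} - y_i)\cos(\theta)\sin(\varphi)}{\|\Delta P_i\|} + \sum_{i=1}^{n-1} \frac{(z_{i+1} - z_i)\sin(\theta)}{\|\Delta P_i\|} \tag{6}$$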

where $\theta$ and $\varphi$ represent the angles of the spherical coordinates, $x_i$, $y_i$, $z_i$ are the coordinates of the $i$-th point $P$ on curve $F$, and $n$ represents the number of points on $F$. We assume that the reference semi-circular path lies on the XZ plane (the vertical plane on which the cubes lie), meaning $y = 0$ and $\varphi = 0$. Moreover, the resolution of $\theta$ is chosen as $\frac{\pi}{n}$ for simplicity. As a result, (6) can be rewritten as (7), which gives the total rotation along the path.

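$$\nabla \times F = \phi_2 = -\sum_{i=1}^{n-1} \left( \frac{(x_{i+1} - x_i)\cos\left(i\frac{\pi}{n}\right)}{\|\Delta P_i\|} \right) + \sum_{i=1}^{n-1} \left( \frac{(z_{i+1} - z_i)\sin\left(i\frac{\pi}{n}\right)}{\|\Delta P_i\|} \right) \tag{7}$$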
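The discrete sum in (7) can be evaluated in a few lines once the trajectory samples are available. The following sketch is one possible reading of (7) under stated assumptions: the function name `rotation_amount` is hypothetical, the points are (x, y, z) tuples indexed from $i = 1$ to $n$, the step norm follows the definition given with (3), and zero-length steps are skipped to avoid a division by zero (a choice made here, not described in the text).

```python
import math


def rotation_amount(points):
    """Evaluate (7) for a list of (x, y, z) samples.

    The y coordinate enters only through the step length, consistent with
    the assumption that the path lies in the XZ plane.
    """
    n = len(points)
    total = 0.0
    for i in range(1, n):  # i = 1 .. n-1, matching the sums in (7)
        (x0, _, z0), (x1, _, z1) = points[i - 1], points[i]
        step = math.dist(points[i - 1], points[i])  # norm of the i-th step
        if step == 0.0:
            continue  # assumption: repeated samples contribute nothing
        theta = i * math.pi / n  # resolution of theta chosen as pi / n
        total += (-(x1 - x0) * math.cos(theta) + (z1 - z0) * math.sin(theta)) / step
    return total
```

Each term is the projection of a unit step direction onto the direction given by the angle $i\pi/n$, so the sum grows with the number of steps taken along the path.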

4 RESULTS

4.1 Data Analysis in the Time Domain

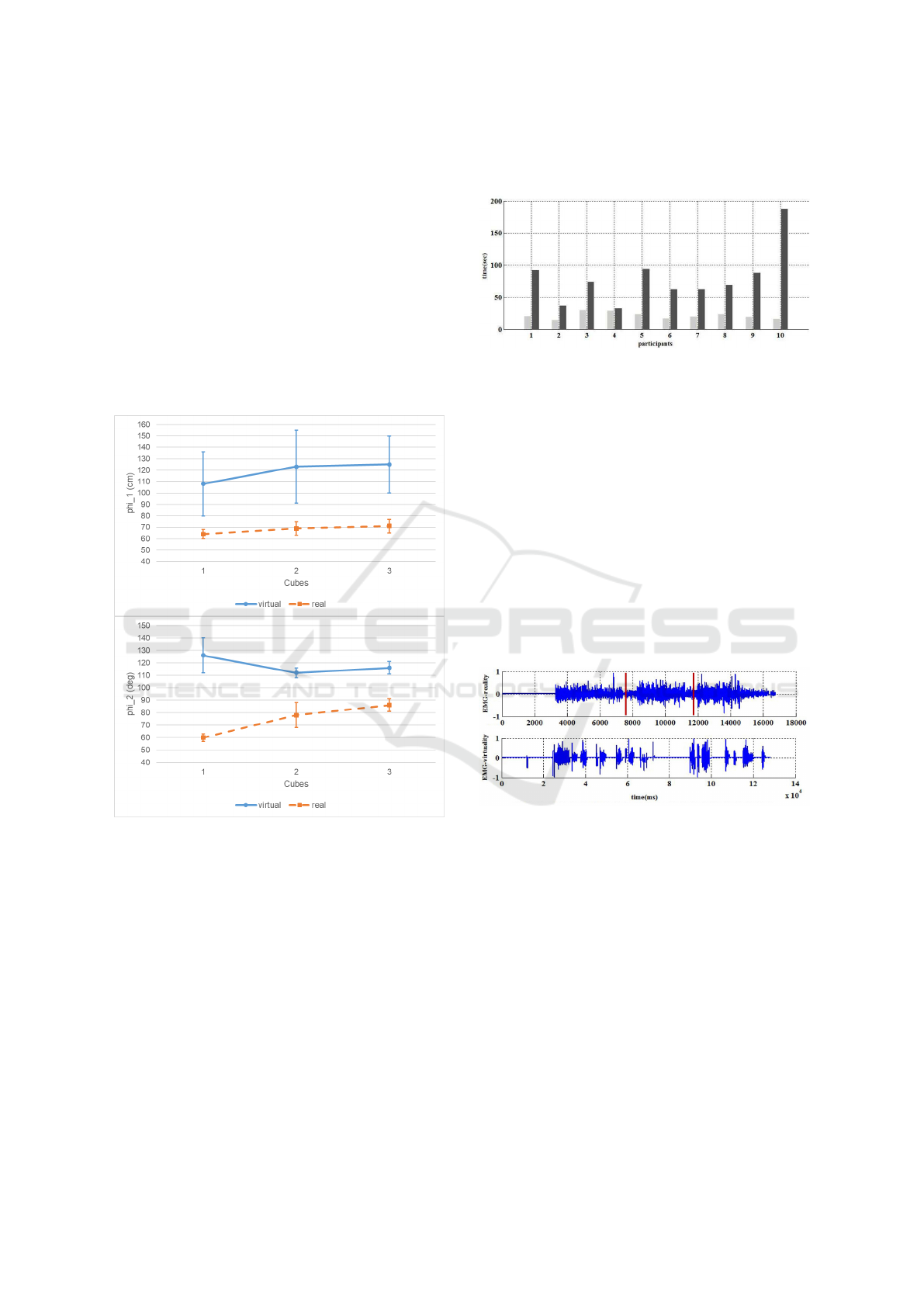

4.1.1 Length of the Movement Trajectory

Applying (3) gives the length of the performed movement for each cube. The results are shown in Figure 2 (top).

A paired Student's t-test showed a significant difference between the average movement length in the virtual (M = 1.19 m, SD = 0.28) and the real (M = 0.68 m, SD = 0.05) environments (p = .039, t(9) = 5.41). In addition, a one-way ANOVA confirmed that the differences between the displacements of the individual cubes were not significant in either environment (p = .141).
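For readers less familiar with the paired design used here, the t statistic is computed on the per-participant differences between the two conditions. The sketch below uses only the Python standard library; the function name `paired_t` is hypothetical and the numbers are made-up illustrative values, not the measurements of this study.

```python
import math
import statistics


def paired_t(a, b):
    """Return (t, degrees of freedom) for a paired Student's t-test."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    t = mean_d / (sd_d / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Made-up path lengths in metres for 4 hypothetical participants (NOT study data).
virtual = [1.0, 1.4, 1.1, 1.3]
real = [0.7, 0.6, 0.7, 0.8]
t, dof = paired_t(virtual, real)
```

With ten participants the degrees of freedom are 9, which matches the t(9) values reported in this section. The p-value is then read from the Student t distribution with that many degrees of freedom, for instance with `scipy.stats.ttest_rel` if SciPy is available.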

4.1.2 Amount of Rotation

The total amount of rotation is calculated by (7). The results are shown in Figure 2 (bottom). A comparison between both environments showed that this value is significantly higher in the virtual environment than in reality (p = .0035).

Figure 2: Length of the movement trajectory (top) and amount of rotation (bottom) in the virtual (blue) and the real (orange) environments for the three cubes, in the order shown in Figure 1.

4.1.3 Completion Time

Figure 3 shows the time taken to complete the manipulation task in both the virtual (black) and the real (gray) environments. A paired Student's t-test showed a significant difference between the completion time in the virtual (M = 79.78 s, SD = 43.20) and the real (M = 24.16 s, SD = 5.095) environments (p = .0026, t(9) = 3.62). The participants therefore spent more time completing the manipulation task in the virtual environment than in the real one ($M_v = 79.78 > M_r = 24.16$). Moreover, the variation is much larger in the virtual environment than in the real one ($SD_v = 43.20 > SD_r = 5.095$).

Figure 3: Time of task completion in the real and the virtual environments.

4.1.4 EMG Signal

An example of a logged EMG signal in the time domain is shown in Figure 4. We can observe a clear difference between the real and the virtual environments. In the real environment (top signal), three activities corresponding to the displacement of the three cubes can be distinguished (separated by the red bars), whereas in the virtual environment (bottom signal) the activities are not easily distinguishable, although the tasks in both environments are similar. This is the only information we can get in the time domain: the signal contains high and low components that can hardly be told apart.

Figure 4: EMG signals recorded in the real (top) and the virtual (bottom) environments for one participant.

4.2 Data Analysis in the Frequency Domain

Because the time representation of the EMG signals does not provide clear clues about the muscular activity, especially in the virtual environment, the recorded EMG signals are converted to the time-frequency space (Boashash, 2003).
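The text does not state which time-frequency transform was applied. As a hedged illustration, the sketch below assumes a short-time Fourier transform (STFT) with a Hann window, which is one common way to obtain such a representation; the function name `stft_power`, the window length and the hop size are assumptions, and the input is a synthetic tone rather than a recorded EMG signal. Only the 2 kHz sampling rate is taken from the setup description.

```python
import cmath
import math


def stft_power(signal, fs, win_len, hop):
    """Short-time Fourier transform power of a real signal.

    Returns (freqs, frames): freqs[m] is the frequency of bin m in Hz and
    frames[k][m] is the power of bin m in the k-th window.
    """
    # A Hann window limits spectral leakage at the window edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * j / (win_len - 1))
              for j in range(win_len)]
    n_bins = win_len // 2 + 1  # non-negative frequencies only
    freqs = [m * fs / win_len for m in range(n_bins)]
    frames = []
    for start in range(0, len(signal) - win_len + 1, hop):
        seg = [signal[start + j] * window[j] for j in range(win_len)]
        frames.append([
            abs(sum(seg[j] * cmath.exp(-2j * math.pi * m * j / win_len)
                    for j in range(win_len))) ** 2
            for m in range(n_bins)
        ])
    return freqs, frames


# Synthetic stand-in for an EMG recording: a 50 Hz tone sampled at 2 kHz.
fs = 2000
tone = [math.sin(2 * math.pi * 50 * i / fs) for i in range(400)]
freqs, frames = stft_power(tone, fs, win_len=200, hop=100)
```

Plotting `frames` as an image with time on one axis and `freqs` on the other, colored by power, gives a map of the kind described for Figure 5. The direct DFT used here is slow and only meant for clarity; an FFT-based routine would be preferable for full-length recordings.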

Figure 5 shows the time-frequency representation of the EMG signal for three participants. Red colors indicate frequency components with high power, while blue colors indicate frequency components with very low power. In this representation, activities can be clearly distinguished in the real environment, with three sets of components corresponding to the displacement of the three cubes. Most high-power components are below 100 Hz, which corresponds to normal muscle activity (Sadoyama and Miyano, 1981). In the virtual environment, by contrast, we observe many more components with lower power, which suggests more biceps movements of smaller amplitude.

Figure 5: Time-frequency representation of the EMG signal in the (a) real and the (b) virtual environments for three participants.

4.3 Questionnaire Data Analysis

First, we asked the participants to rate their level of satisfaction in both environments on a 7-point Likert scale (Figure 6). The real environment received the highest rating, 5.9 on average. A paired Student's t-test showed a significant difference between the real (M = 5.9, SD = 1.17) and the virtual (M = 4.5, SD = 1.19) environments (p = .0013, t(9) = 3.45).

Figure 6: Level of satisfaction in both environments.

Then, from the presence questionnaire, the control (CF), sensory (SF) and distraction (DF) factors were calculated as three main factors with the correction factor proposed by Witmer and Singer (Witmer and Singer, 1998). The results are shown in Figure 7. On average, the scores associated with CF and SF are higher than the score associated with DF. According to paired Student's t-tests, CF (M = 7.049, SD = 1.32) and SF (M = 5.93, SD = 1.37) are significantly higher ($p = 2.71 \times 10^{-6}$, t(9) = 4.32 and p = .0009, t(9) = 3.75, respectively) than DF (M = 3.9, SD = 0.79).

Figure 7: Sub-scores provided by the presence questionnaire in the virtual environment.

5 DISCUSSION AND LIMITATIONS

According to the results, the participants spent more time and made larger movements in the virtual environment than in the real one (≅ 3 times), which shows that object manipulation is harder in the virtual environment than in the real environment. One reason is that we did not provide any tactile or haptic feedback to the participants in the virtual environment, which confirms past work on the importance of adding sensory cues other than visual feedback. Another reason may lie in the choice of participants, who were not familiar with VR. Further experiments should be conducted with experienced VR users.

We also observed a much larger variation of the length of the movement trajectory in the virtual environment than in reality. This large difference, 9 times, originates from different training experiences. None of the participants had experienced virtual object manipulation in the recent past, whereas they had certainly experienced similar object manipulation tasks in real environments. Since the participants did not have equivalent experience in the virtual environment, they relied on their experience from the real environment. Because the actual sensory input to the cortex differs strongly from the expected input, the motor command computed by the brain is either overestimated or underestimated, which in turn creates a large deviation from the mean value in the virtual environment. Again, further experiments should be conducted with experienced VR users to see whether these differences in variation tend to decrease.

Regarding EMG, we see a clear difference in muscular activity between the real and the virtual environments. The time-frequency representation of the EMG signals showed many more components with lower power in the virtual environment. Indeed, in the real environment, the sensory organs provide complete feedback (visual, tactile, sound) to the brain; consequently, the brain can recall the appropriate pattern and generate accurate motor commands to the muscles. In the virtual environment, some sensory information is missing; as a result, the brain cannot extract the appropriate pattern and therefore cannot generate very accurate motor commands to the muscles. Typically, the participants performed several small flexion/extension movements of their forearm to position the cubes precisely in the virtual environment.

Here, we considered real cubes of approximately 1 kg, whereas the Flystick weighs around 300 g, which may have affected the measurements. Unrealistic texture rendering in the virtual condition may also have influenced movements, as past work showed that texture influences weight perception (Flanagan et al., 1995). Further experiments should be carried out under exactly the same conditions in both environments.

Another limitation of our study is that, in the virtual condition, the participants had to hold the Flystick all the time, even when they were not grasping any virtual cube, which could bias the comparison with the real condition. Although the EMG signals were recorded from the biceps and are thus not directly linked to hand movements, further investigation should be carried out to verify this aspect, using for example optical hand trackers.

Looking at subjective data, the presence questionnaire revealed that the control and the sensory factors were much higher than the distraction factor. This suggests that the user interface was well designed for an object manipulation task in virtual environments, was not distracting and was able to involve several sensory inputs. To verify this claim, we compared DF with the participants' involvement (INV). A paired Student's t-test showed INV (M = 5.75, SD = 1.64) to be significantly higher than DF (M = 3.9, SD = 0.785) (p = .0038, t(9) = 3.95), as shown in Figure 8.

Finally, as other sensory cues were missing in the virtual environment, it is not surprising that the participants preferred object manipulation in the real environment to manipulation in the virtual one, with a level of satisfaction significantly higher in the real condition than in the virtual one. Further investigation is needed to determine the role of the interaction technique, apart from the effect of multi-sensory cues, since we imposed an interaction strategy in the virtual condition, whereas in the real condition the participants were free to choose their own grasping strategy.

Figure 8: Level of distraction and involvement in the object manipulation task in the virtual environment.

6 CONCLUSION

Through this first study, we evaluated a simple manipulation task using EMG and objective criteria, and compared a real and a virtual environment. EMG signals revealed clear differences between the real and the virtual environments, and time-space features showed that the virtual condition requires more arm movements than the real condition. These differences originate from a gap between the actual and the expected sensory inputs to the brain. As virtual reality aims at reproducing real situations, characterizing differences between the real and the virtual environments could help design better interaction devices and methods, so that user experience in virtual environments can be enhanced and brought closer to real manipulation tasks.

Future work will include in-depth investigation with more participants accustomed to VR and with different object manipulation techniques closer to real manipulation, e.g., using finger trackers that do not require holding a device all the time. We will also consider tactile/haptic feedback and see how physiological signals behave accordingly, knowing that past research showed tactile feedback to enhance interaction in virtual environments.

REFERENCES

Alibay, F., Kavakli, M., Chardonnet, J.-R., and Baig, M. Z. (2017). The Usability of Speech and/or Gestures in Multi-Modal Interface Systems. In International Conference on Computer and Automation Engineering, pages 73–77.

Argelaguet, F. and Andujar, C. (2013). A survey of 3D object selection techniques for virtual environments. Computers & Graphics, 37(3):121–136.

Boashash, B. (2003). Time Frequency Analysis, A Comprehensive Reference. Elsevier Science.

Bowman, D. A., McMahan, R. P., and Ragan, E. D. (2012). Questioning naturalism in 3D user interfaces. Commun. ACM, 55(9):78–88.

Burdea, G. (1996). Force and Touch Feedback for Virtual Reality. Wiley.

Chapoulie, E., Tsandilas, T., Oehlberg, L., Mackay, W., and Drettakis, G. (2015). Finger-based manipulation in immersive spaces and the real world. In 2015 IEEE Symposium on 3D User Interfaces (3DUI), pages 109–116.

Choi, I. and Follmer, S. (2016). Wolverine: A wearable haptic interface for grasping in VR. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, UIST ’16 Adjunct, pages 117–119.

Flanagan, J. R., Wing, A. M., Allison, S., and Spenceley, A. (1995). Effects of surface texture on weight perception when lifting objects with a precision grip. Perception & Psychophysics, 57(3):282–290.

Fumihito, K. and Suguru, S. (2012). Fast grasp synthesis for various shaped objects. Computer Graphics Forum, 31(2pt4):765–774.

Graham, E. D. and MacKenzie, C. L. (1996). Physical versus virtual pointing. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’96, pages 292–299, New York, NY, USA. ACM.

Hart, S. G. and Staveland, L. E. (1988). Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Hancock, P. A. and Meshkati, N., editors, Human Mental Workload, chapter 7, pages 139–183. Elsevier.

Kenyon, R. and Afenya, M. (1995). Training in virtual and real environments. Annals of Biomedical Engineering, 23(4):445–455.

Kim, S., Hasegawa, S., Koike, Y., and Sato, M. (2002). Tension based 7-DOF force feedback device: SPIDAR-G. In Proceedings IEEE Virtual Reality 2002, pages 283–284.

Kitamura, Y., Ogata, S., and Kishino, F. (2002). A manipulation environment of virtual and real objects using a magnetic metaphor. In ACM Symposium on Virtual Reality Software and Technology, VRST ’02, pages 201–207, New York, NY, USA. ACM.

Koyama, T., Yamano, I., Takemura, K., and Maeno, T. (2002). Multi-fingered exoskeleton haptic device using passive force feedback for dexterous teleoperation. In IEEE/RSJ International Conference on Intelligent Robots and Systems, volume 3, pages 2905–2910.

Léon, J.-C., Dupeux, T., Chardonnet, J.-R., and Perret, J. (2016). Dexterous grasping tasks generated with an add-on end-effector of a haptic feedback system. Journal of Computing and Information Science in Engineering, 16(3):030903:1–030903:10.

Lotte, F., Faller, J., Guger, C., Renard, Y., Pfurtscheller, G., Lécuyer, A., and Leeb, R. (2013). Combining BCI with Virtual Reality: Towards New Applications and Improved BCI, pages 197–220. Springer Berlin Heidelberg, Berlin, Heidelberg.

Ma, Z. and Ben-Tzvi, P. (2015). Design and optimization of a five-finger haptic glove mechanism. J. Mechanisms Robotics, 7(4):041008:1–041008:8.

Mirzaei, M. A., Chardonnet, J.-R., Père, C., and Merienne, F. (2013). Improvement of the real-time gesture analysis by a new mother wavelet and the application for the navigation inside a scale-one 3D system. In IEEE International Conference on Advanced Video and Signal-Based Surveillance, pages 270–275.

Raber, F., Krüger, A., and Kahl, G. (2015). The comparison of performance, efficiency, and task solution strategies in real, virtual and dual reality environments. In Abascal, J., Barbosa, S., Fetter, M., Gross, T., Palanque, P., and Winckler, M., editors, Human-Computer Interaction – INTERACT 2015, pages 390–408, Cham. Springer International Publishing.

Sadoyama, T. and Miyano, H. (1981). Frequency analysis of surface EMG to evaluation of muscle fatigue. European Journal of Applied Physiology and Occupational Physiology, 47(3):239–246.

Sturman, D. J., Zeltzer, D., and Pieper, S. (1989). Hands-on interaction with virtual environments. In Proceedings of the 2nd Annual ACM SIGGRAPH Symposium on User Interface Software and Technology, UIST ’89, pages 19–24, New York, NY, USA. ACM.

Tai, C.-T. (1986). Unified definition of divergence, curl, and gradient. Applied Mathematics and Mechanics, 7(1):1–6.

Talati, A., Valero-Cuevas, F. J., and Hirsch, J. (2005). Visual and tactile guidance of dexterous manipulation tasks: An fMRI study. Perceptual and Motor Skills, 101:317–334.

Taylor, II, R. M., Hudson, T. C., Seeger, A., Weber, H., Juliano, J., and Helser, A. T. (2001). VRPN: A Device-independent, Network-transparent VR Peripheral System. In Proceedings of the ACM Symposium on Virtual Reality Software and Technology, VRST ’01, pages 55–61.

Tisančín, T., Sporka, A. J., and Poláček, O. (2014). EMG sensors as virtual input devices. In Proceedings of the 2014 Mulitmedia, Interaction, Design and Innovation International Conference on Multimedia, Interaction, Design and Innovation, MIDI ’14, pages 18:1–18:5, New York, NY, USA. ACM.

Witmer, B. G. and Singer, M. J. (1998). Measuring presence in virtual environments: A presence questionnaire. Presence: Teleoperators and Virtual Environments, 7(3):225–240.
