Using LSTM for Automatic Classification of

Human Motion Capture Data

Rog

´

erio E. da Silva

1,2

, Jan Ond

ˇ

rej

1,3

and Aljosa Smolic

1

1

V-SENSE, School of Computer Science and Statistics, Trinity College Dublin, Ireland

2

Department of Computer Science, Santa Catarina State University, Brazil

3

Volograms, Dublin, Ireland

Keywords:

Human Motion Classification, Motion Capture, Content Analysis, Deep Learning, Artificial Intelligence.

Abstract:

Creative studios tend to produce an overwhelming amount of content everyday and being able to manage these

data and reuse it in new productions represent a way for reducing costs and increasing productivity and profit.

This work is part of a project aiming to develop reusable assets in creative productions. This paper describes

our first attempt using deep learning to classify human motion from motion capture files. It relies on a long

short-term memory network (LSTM) trained to recognize action on a simplified ontology of basic actions like

walking, running or jumping. Our solution was able of recognizing several actions with an accuracy over 95%

in the best cases.

1 INTRODUCTION

Creative industry is a broad term generally used to

refer to any company devoted to create content for

games, animations, AR/VR, VFX, etc. Over time,

the amount of creative content that can be produced

by these companies can easily become overwhelming;

how big? it will depend on the size of the company

itself. Studios often rely on their own production pi-

peline to reduce time and effort spent producing new

content, therefore reducing their production costs in

order to increase profit. One effective way of redu-

cing costs is by reusing content from older producti-

ons, adapting them into new contexts, thus speeding

up the production. Despite that this notion sounds re-

asonable, achieving it in a production setting is not

easy, because, as mentioned before, being able to re-

trieve the one specific desired content among the mil-

lions of “assets” being produced every year (e.g. 3D

models, textures, sound effects, soundtracks, animati-

ons, scripts, etc.) has proven to be a high demanding

task.

One particular asset that has great interest for the

creative industry is motion captured data (mocap)

(Menache, 2011; Delbridge, 2015). Consider the fol-

lowing scenario: an animator needs to animate a se-

quence where a character walks limping from its left

leg due to an injury; instead of having the motion cap-

ture team recording a new sequence in the mocap lab,

the animator remembers that a few years back he al-

ready animated a similar sequence of a limping walk

which means he could adapt this sequence from that

old one (if he manages to remember which file con-

tains that desired animation). So, he makes a quick

search in the backup databases only to learn that the

studio has, in fact, thousands of motion captured fi-

les in storage! Now, how to find the one he’s look-

ing for? Re-recording the sequence in the mocap lab.

might be, in this case, a faster alternative to pursue

(although not cheaper).

This hypothetical situation is frequently observed

in a production pipeline, and finding ways to au-

tomatize the process for documenting creative con-

tents being produced (so to facilitate content retrie-

val) would have a big impact in reducing time and ef-

fort a production team would have to spend searching

through a database of older projects.

This work focuses on studying ways for automati-

zing the motion capture tagging process. To tag a con-

tent means to label it according to a given ontology, so

that later it could more easily be found by tag-based

searches, thus facilitating its retrieval and use. Our

approach relies on deep learning and long short-term

memory neural networks (LSTM) to analyze a se-

ries of mocap data files, classifying them accordingly.

This work is being developed under the context of the

SAUCE project (Smart Assets for re-Use in Creative

Environments) that is a three-year EU Research and

236

E. da Silva, R., Ond

ˇ

rej, J. and Smolic, A.

Using LSTM for Automatic Classification of Human Motion Capture Data.

DOI: 10.5220/0007349902360243

In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2019), pages 236-243

ISBN: 978-989-758-354-4

Copyright

c

2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Innovation project between several companies and re-

search institutions to create a step-change in allowing

creative industry companies to re-use existing digital

assets for future productions.

The goal of SAUCE project is to produce, pilot

and demonstrate a set of professional tools and techni-

ques that reduce the costs for the production of en-

hanced digital content for the creative industries by

increasing the potential for re-purposing and re-use

of content as well as providing significantly improved

technologies for digital content production and mana-

gement (SAUCE Project, 2018).

In this work, we studied ways for using long short-

term memory networks to automatically classify con-

tent from motion captured data according to a given

ontology. We have designed a LSTM architecture

that accepts motion captured data and determines the

actions being portrayed in each file. This work re-

presents a first step for SAUCE project in developing

a general-purpose media classifier system that could

help speeding up a creative pipeline process.

In the next section we discuss the problem of hu-

man motion classification then, on Section 3 a brief

literature review on motion capture, long short-term

memory neural networks (LSTM) and a few rela-

ted works are presented and discussed. Following,

Section 4 explains our experiments and results we

obtained from applying our method. Finally, we pre-

sent our conclusions and final remarks in Section 5.

2 HUMAN MOTION

CLASSIFICATION PROBLEM

Classifying motion means determining what kind of

action (e.g. walking, running, jumping, fighting, dan-

cing, etc.) is being portrayed by any given human

motion in space. Tracking motion in space is usually

achieved by motion capture that involves decompo-

sing each motion as a series of three-dimensional po-

ses (called a skeleton) in time (see Section 3.1).

Therefore, being able to understand motion means

determining temporal relations among changes occur-

ring to specific body parts over time while performing

each given movement. In this work, we are interested

in studying ways for automatic labelling a series of

motion captured data to facilitate its reuse in future

productions by a creative studio.

According to (Martinez et al., 2017), “learning

statistical models of human motion is a difficult task

due to the high-dimensionality, non-linear dynamics

and stochastic nature of human movement”. Clas-

sifying data involves analyzing each candidate ex-

tracting a series of properties from it trying to match

them to a specific class in a set of known classes (an

ontology). Several issues need to be tackled while

working on this kind of problem:

1. finding a suitable ontology description that is an

enumeration of possible classes and their attribu-

tes (the criteria used to identify an element of that

class);

2. an adequate knowledge representation on how

to represent ontological attributes in a way that ar-

tificial intelligence can work with;

3. a prediction criteria to describe how to deter-

mine if a given candidate belongs to a certain

class.

In the following sections we describe how to

track and represent spatial motion of humans, how

long short-term memory networks work and why we

choose to use them in this research and finally, a

few related works aiming human motion classifica-

tion problem are presented.

3 STATE OF THE ART

3.1 Motion Capture

Motion capture (or mocap) is the process of recor-

ding the movements of objects or people via special

hardware setups . There are several possible technolo-

gies that can be applied to capture movement in space:

Optical Systems: utilize data captured from image

sensors to triangulate the 3D position of a subject

between two or more cameras calibrated to pro-

vide overlapping projections. This can be achie-

ved using special markers that can be passive (re-

flective) or active (synchronized flashing LEDs);

they can also use markerless tracking systems

that relies on computer vision to recognize human

parts from the set of cameras;

Non-optical Systems: any other technology that al-

lows motion tracking. The most common ones

are: inertial systems use inertial measurement

units (IMUs) containing a combination of gy-

roscopes, magnetometers, and accelerometers, to

measure rotational rates; mechanical motion are

often referred to as exoskeleton motion capture

systems, due to the way the sensors are (directly)

attached to the body to perform the tracking; and

magnetic systems calculate position and orienta-

tion by the relative magnetic flux of three orthogo-

nal coils on both the transmitter and each receiver.

Using LSTM for Automatic Classification of Human Motion Capture Data

237

One approach that is very popular these days and

is traditionally employed by animation studios invol-

ves an actor wearing a suit covered with optical mar-

kers that can then be tracked by an optical system of

infrared cameras.

From these recordings results a series of 3D coor-

dinates for each marker (tracked several times per se-

cond) that can later be mapped to a character as an

animation (this technique is called retargeting). Fi-

gure 1 shows a few frames of a skeletal animation

obtained via a motion capture session of a walk (CMU

Graphics Lab, 2018).

Figure 1: Motion captured sequence of a walk.

Regarding file formats for storing motion capture

data one popular choice is the BVH format. The Bi-

ovision Hierarchical data (BVH) file format was ori-

ginally developed by Biovision (a motion capture ser-

vice company) to distribute mocap data to their cu-

stomers. Later, it became a very popular format for

storing mocap.

The reason why we decided to adopt this format

is because while other motion capture formats, like

the C3D format (https://www.c3d.org/), store only the

coordinates for each tracked markers (Figure 2 on

the left), the BVH format also represents hierarchical

relations between joints, i.e., their physical relations

called a skeleton (Figure 3), making it simpler to cor-

relate movements between adjoined joints (Figure 2

on the right).

Figure 2: C3D markers vs BVH Skeleton.

Motion capture data is recorded as a series of mo-

tion channels, each representing one spatial location

and/or orientation of a joint. Since the amount of

channels is dependent on the number of joints and the

number of degrees of freedom (DOF) of each joint,

the size of the frame can vary from file to file.

3.2 Long Short-Term Memory Neural

Networks

The Long Short-Term Memory (LSTM) network is a

type of Recurrent Neural Network (RNN), which is a

Figure 3: Example of a skeleton and motion channels defi-

nition in a BVH file.

special type of neural network designed for sequence

problems like, for instance, texts, speech, and anima-

tions. Traditional RNNs contain cycles that feed the

network activation from a previous time step as inputs

to influence predictions at the current one (Brownlee,

2018; Hochreiter and Schmidhuber, 1997).

Despite the fact that RNNs can learn temporal re-

lations, their main limitation is regarding training in

a problem known as the “vanishing gradient”. This

problem happens when, during training of a recurrent

process, the weights change become so small that they

have no effect in learning the data (or so large in the

other way around: “exploding gradient”).

LSTMs solve these problems by design. All infor-

mation being propagated through the network should

pass first by three gates. These gates are activation

functions especially designed to work on the data so

to only allow relevant information to continue being

propagated during training. The three gates are:

Forget Gate: decides what information to discard

from each layer;

Input Gate: decides which values from the input to

update the memory;

Output Gate: decides what to output based on the

input and the memory.

In the literature, several variants of this architec-

ture can be found where the number of gates can vary

to suit specific contexts and needs .

3.3 Related Works

Two distinct classes of works involving automatic re-

cognition of the human skeleton, can be found in the

literature: human pose estimation and human action

classification. We argue that, despite the clear simila-

rities between the two in terms of their goals (i.e. the

recognition of human motion), they significantly dif-

fer in most of the technical aspects involved in how to

GRAPP 2019 - 14th International Conference on Computer Graphics Theory and Applications

238

tackle with the problem, namely, data representation

and processing:

Human Pose Estimation: aims at detect and esti-

mate human poses in video format (resulting from

actual recordings of people or graphical renditions

of 3D motion capture data), then extracting skele-

tal information that can include depth estimation

(3D) or not (2D). The most common approach

used in this context involves analyzing the pixels

of each frame of a video using classical computer

vision techniques.

Just to mention a few examples of works that

adopt this approach: (Du et al., 2016; Tekin et al.,

2016; Toshev and Szegedy, 2014).

Human Action Classification: aims at interpreting

3D motion from skeletal data analysis of actual

spatial recordings (as described in Section 3.1).

In this particular sense, not many works have been

found in the literature. Some examples that have,

somehow, influenced our work are:

• In (B

¨

utepage et al., 2017) the authors trained

a LSTM to predict future 3D poses from the

most recent past. The system is described as an

encoder-decoder network for generative 3D mo-

dels of human motion based on skeletal anima-

tion;

• In (Gupta et al., 2014) the authors propose an

approach to directly interpret mocap data via v-

trajectories that are sequences of joints connected

over a time frame to allow finding similar mocap

sequences based on pose and viewpoint;

• Another example of human motion prediction

using deep learning LSTMs is presented in (Mar-

tinez et al., 2017);

• A slightly different application but still related

to human motion prediction is the multi-people

tracking system presented by (Fabbri et al., 2018),

where the authors developed a system capable of

extracting information of people body parts and

temporal relations regarding the subjects’ moti-

ons. In this work, the system automatically de-

tects each human figure and respective skeletal in-

formation from frames of video recordings, even

if partially occluded, by matching those with a da-

tabase of body poses.

The common point between all the related works

presented here is that, despite the fact they studied

automatic approaches to identify human motion from

motion capture data, none of them focused on label-

ling the data in order to facilitate future queries and

content retrieval, which seems to remain an unsolved

problem.

4 EXPERIMENTATION

In this section its described the experiments made

with LSTM networks implemented using Python

and Keras (https://keras.io/) with Tensorflow (https:

//www.tensorflow.org/) using the aforementioned data

set built upon the CMU Motion Capture Library.

4.1 Ontology of Human Actions

Since the scope of this work focuses only on tagging

motion capture actions, instead of designing a com-

plete ontology for every possible creative media an

animation studio would be interested in cataloging,

we opted to simplify the representation to consider

only a selected set of human actions.

We are using the freely available CMU Motion

Capture library (CMU Graphics Lab, 2018) for our

experiments. Thus, the list of actions our system is

able to classify reflects the actions available in this

database.

In our experiments we considered the follo-

wing actions: bending down, climbing, dancing,

fighting, jumping, running, sitting down,

standing up, and walking.

The definition of this ontology is important be-

cause training the neural network to recognize its clas-

ses means that it needs a carefully designed data set

of mocap files for each class. Our training data set is

composed of 1136 files divided into those 9 catego-

ries, representing more than 820,000 frame samples.

4.2 Data Representation

There are two main concerns in terms of representing

data for a neural network: how to represent the input

(or training) data and how to represent the output (in

our case the labels of each class in the ontology).

For the input, we followed the data representation

presented at (B

¨

utepage et al., 2017), where each

frame of a mocap recording is represented in the

Cartesian space of each joint’s rotational data plus

the positional data for the ‘Hips’, thus resulting in

an 1D-array of size 3 × N

joints

+ 3 where N

joints

is the number of joints. Still agreeing with the

authors, we normalized all joint’s rotational angles,

centering the skeleton at the origin. So, each joint

data is represented according to the following for-

mat: <Joint> ZRotation, <Joint> YRotation,

<Joint> XRotation, e.g. LeftUpLeg Zrotation,

LeftUpLeg Yrotation, LeftUpLeg Xrotation.

Except for the Hips that also include the XYZ

positional values.

Using LSTM for Automatic Classification of Human Motion Capture Data

239

Several tests have been performed with the size of

the sample, i.e. the number of frames (N

f rames

), and a

comparative of the results is presented in Section 4.5

below.

Regarding the output, each possible outcome is re-

presented as a classical ‘one-hot’ binary string, where

the number of bits relates to the size of the ontology

(number of classes) and each label having a different

bit highlighted. For instance, since we have 9 diffe-

rent labels in the ontology, the first label “bending

down” is represented by the sequence 100000000.

These representations also influence the size of

the first and last layers of the network as described

in Section 4.4.

4.3 Class Prediction

Since we opted to split the training samples into sub-

sets of N

f rames

frames, we had to do the same with our

working data set for consistency. Thus, each motion

capture file being analyzed is also split into samples

of the same size, and each sample is then submit to the

network for prediction. As a sample can be matched

with different classes, in the end the resulting class is

obtained by taking the mode of the class prediction

array, i.e., considering the most frequent label as the

answer .

This approach has the advantage of classifying

each file in terms of how likely that file belongs to

a given class of the ontology, thus allowing for mul-

tiple interpretations of its content, much like how it

would happen in a real scenario. Consider a recor-

ding where the actor starts running in preparation for

a jump. Imagine now an animator searching for either

‘run cycles’ or ‘types of jump’, the system should be

able to understand that the particular file would be a

suitable response in either case.

4.4 Implementing a LSTM

As mentioned before, we opted to implement the pro-

totype for our tool in Python using Keras with Ten-

sorflow. The main reason for this choice was due to

the simplicity that these tools offer, making it more

adequate for quick prototyping.

The solution that was used for the experiments

discussed in this paper rely on three layers : the first

one (the input layer) is a LSTM layer of 15 neurons.

This value was chosen arbitrarily based on several

tests and can be modified to fit different needs like for

instance, different skeletal structures or computatio-

nal performance, the second layer is a similar LSTM

layer (stacked LSTM layer) with the sole purpose of

increasing depth of the network (our experiments sho-

wed that deeper networks can perform better while

predicting lengthier animations), and finally, the third

layer (the output) is the one responsible for encoding

the predicted outcome to one of the classes in the on-

tology as described in previous sections.

It is important to notice that although the size of

the input layer does not necessarily need to match the

size of the input data, the output layer does need to

match the size of the output, i.e., the number of pos-

sible labels that can be outputted.

Listing 1: LSTM implementation in Keras.

1 nNe u r ons = 15

2 numLabe l s = 9

3 s a m p l e S i z e = 5

4 d s S i z e = l e n ( t r a i n i n g D a t a S e t )

5 n J o i n t s = 38

6 d s S h a p e = ( sa m p l e S i z e , n J o i n t s ∗ 3 )

7

8 model = S e q u e n t i a l ( )

9 model . a d d ( LSTM( nNeurons , r e t u r n s e q u e n c e s = True ,

10 i n p u t s h a p e = d sS h a p e ) )

11 model . a d d ( LSTM( n N e uro n s ) )

12 model . a d d ( Dense ( numLabels , a c t i v a t i o n = ’ s o f t m a x ’ ) )

13 model . c o m p i l e ( l o s s = ’ mse ’ , o p t i m i z e r = Adam( l r = 0 . 0 0 1 ) ,

14 m e t r i c s = [ ’ a c c u r a c y ’ ] )

15 model . summary ( )

4.5 Preliminary Results

Several experimental tests have been performed with

our tool covering different network architectures and

several subsets of the ontology. In our experiments,

as a way to better assess the accuracy of the model.

We prepared a series of motion capture files carefully

editing their content to portray only a single action per

file.

In this section, we describe three of such experi-

ments:

1. We performed a series of 5 predictions with the

model considering all 9 categories. Our prediction

data set in this case was composed by 54 files (6

for each category);

2. Later, a subset containing only the four larger

classes data sets have been considered for a se-

cond round of predictions, and the results are pre-

sented next in Section 4.5.2;

3. Finally, the previous experiments’ results showed

that the despite the differences in size of the trai-

ning data sets, four specific classes appeared to

have been better modeled by the network, so we

decided to performed a third round of predictions

using only these four ones. The results for these

are presented in Section 4.5.3.

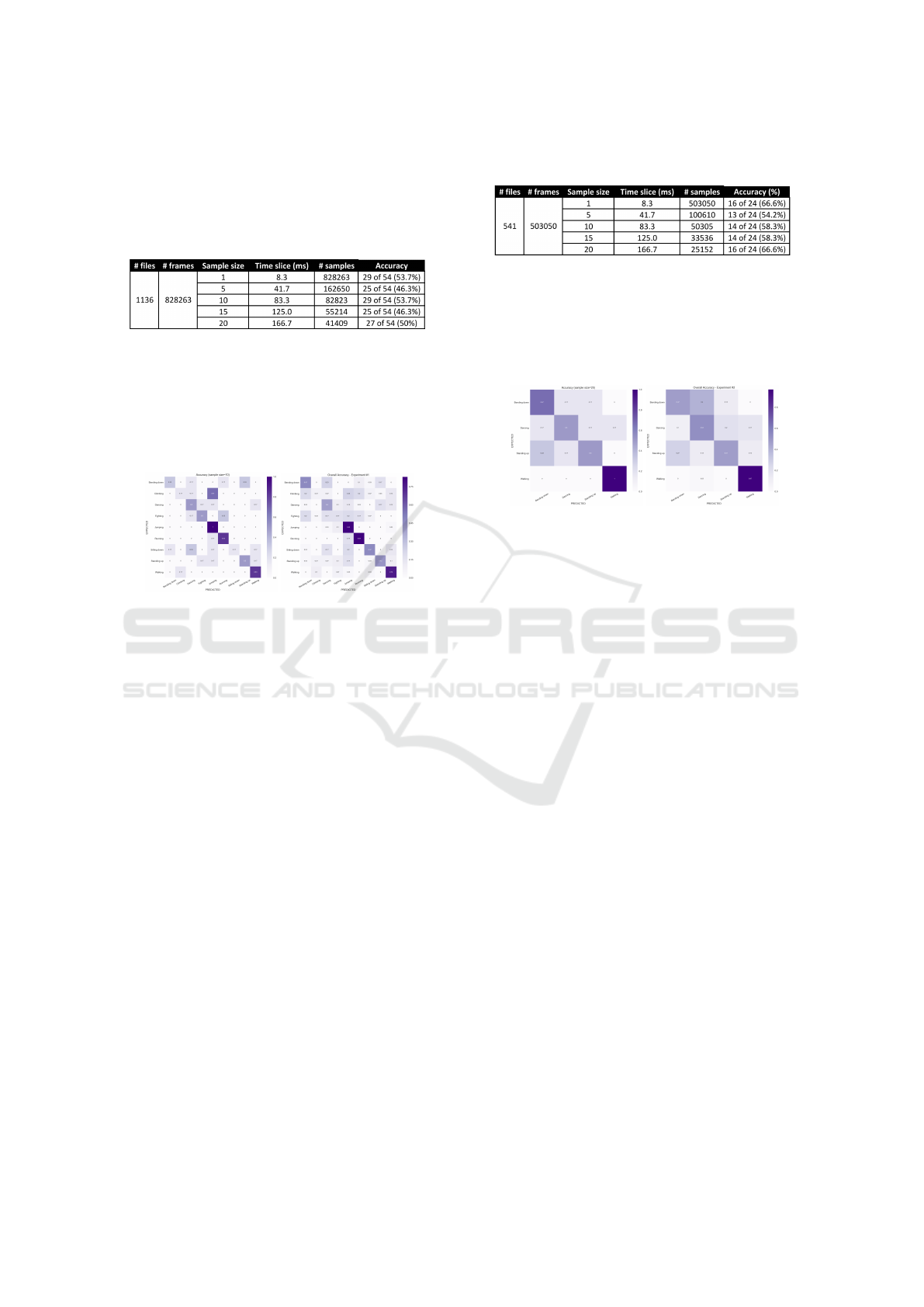

4.5.1 Experiment # 1

Table 1 summarizes the results obtained from these

predictions for the first trial. It’s important to notice

GRAPP 2019 - 14th International Conference on Computer Graphics Theory and Applications

240

that the larger the size of the sample, the lower the

amount of samples, although temporal relations are

better represented.

Table 1: First experiment accuracy results considering dif-

ferent page size and all 9 labels of the ontology.

Figure 4 shows the confusion matrix we obtained

by taking, as an example, the best result in our ex-

periment (the one where sample size = 10). In this

experiment we can clearly see as the darker regions

of the matrix, that some of classes like ‘jumping’

or ‘walking’ has been better learned by the network

than other classes.

Figure 4: Confusion matrices for the predictions conside-

ring sample size = 10 (on the left) and the overall result

after combining all 5 predictions (on the right).

These results were indicating that the network was

underperforming while predicting some of the clas-

ses, most likely due to the problem of underfitting

(Brownlee, 2018) since the size of the training data

set for each category varies significantly (the smal-

ler data set corresponds to 31% the size of the largest

one).

4.5.2 Experiment # 2

In order to try to minimize these effects, a se-

cond round of predictions have been performed

considering only the four larger data sets avai-

lable: bending down, dancing, standing up,

walking where each of them have more than 100,000

frames, changing the ratio between the smaller and

larger data sets to 76%.

The results obtained on this second round of pre-

dictions are presented in Table 2 below. They are

evidence that the low accuracy detected in the first

experiment was due to underfitting the model, which

means that with a larger training data set the network

should perform better even when considering larger

ontologies.

Once more, taking the best result as an example,

calculating the confusion matrix for the experiment

Table 2: Accuracy results after second experiment that con-

sidered only the four larger training data sets.

resulted in Figure 5. Here the results were signi-

ficantly better relatively to the previous experiment.

This can be observed considering that the values in

the main diagonal of the matrix are higher than the

rest of the matrix (ideally a confusion matrix would

appear as an identity matrix).

Figure 5: Confusion matrices for the predictions conside-

ring sample size = 20 and the four larger training data sets

(on the left) and the overall result after combining all 5 pre-

dictions (on the right).

Although the results after this experiment repre-

sented an improvement in regard to the last experi-

ment, they still were not as good as one could ex-

pect. After a careful analysis of the confusion ma-

trices obtained after the combined results for each

experiment, it was noticeable that a specific set of

classes were performing better despite the fact those

were not the larger data set at disposal. Figure 4

on the right show this combined matrix where it is

possible to infer this alternative four classes of prefe-

rence: “bending down”, “jumping”, “running” and

“walking” as the four ones showing the most promi-

sing results.

Next section present the results for the third expe-

riment considering exactly these four classes. Worth

noticing that these data sets have significantly diffe-

rent training data set sizes and still the network were

able to train satisfactorily in them. We hypothesize

that this is due the nature of those specific actions that

significantly vary from each, making it simpler for the

network to differentiate them from each other.

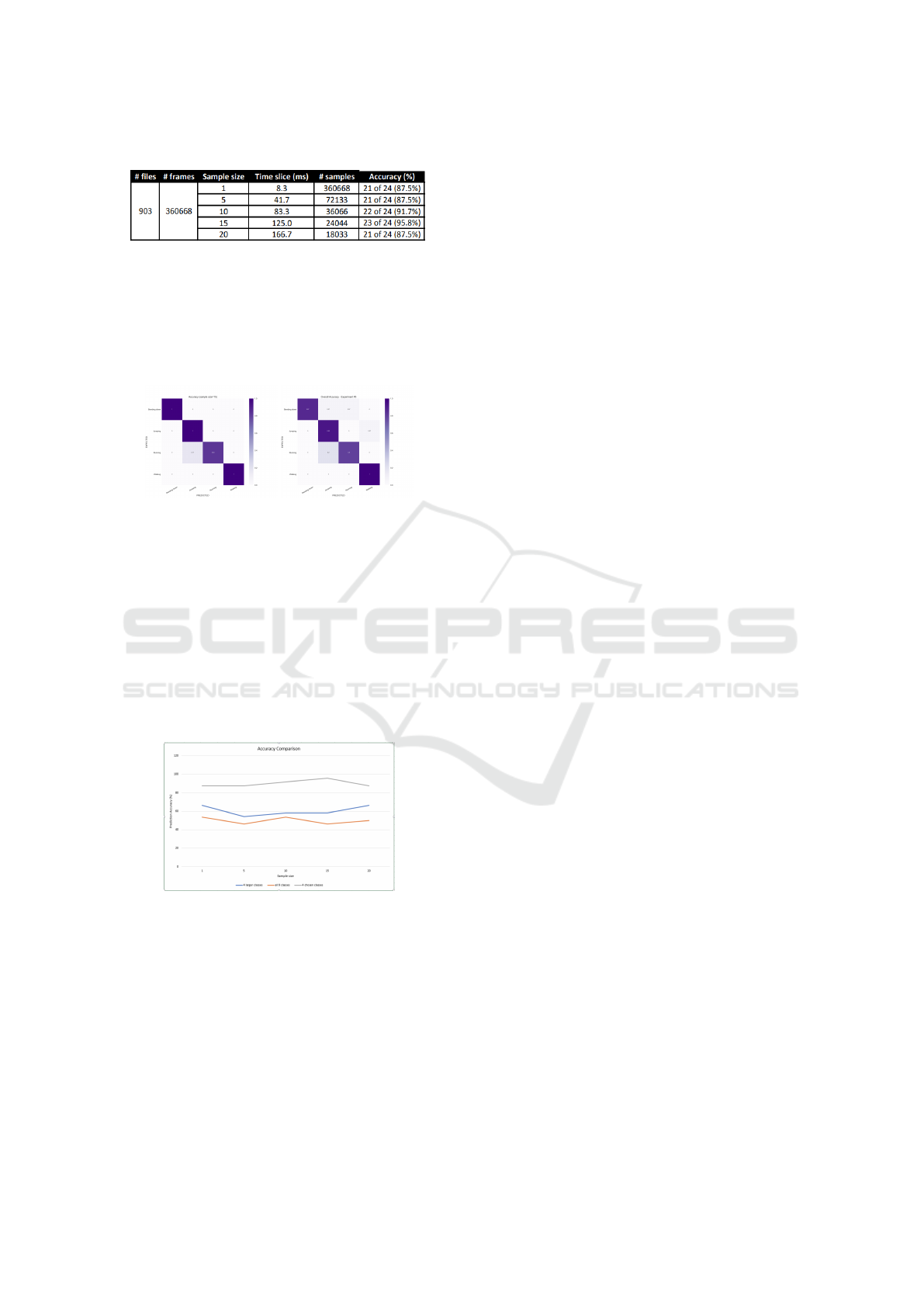

4.5.3 Experiment # 3

In this experiment all training data set was composed

of 903 files, separated into four categories: “bending

down”, “jumping”, “running” and “walking” and the

prediction data set containing 24 files.

This was the most successful experiment of them

all and the results clearly demonstrate the feasibility

Using LSTM for Automatic Classification of Human Motion Capture Data

241

Table 3: Accuracy results after third experiment.

of the model and allow us to conclude that the other

experiments would also perform better if more trai-

ning would be available.

Figure 6 below depicts the results for the best ex-

periment performed under the described conditions

(size = 15) and also the resulting obtained by com-

bining all five experiments with this ontology.

Figure 6: Confusion matrices considering sample size = 15

and four selected labels (on the left) and the overall result

of the experiment on the right.

4.5.4 Summary

Figure 7 compares the accuracy (vertical axis) obtai-

ned with the experiments using different sample sizes

(horizontal axis). In orange it is shown the predicti-

ons considering all 9 categories, in blue the predicti-

ons using a subset containing only the four larger data

sets and in gray the experiment selecting the four most

successfully recognized in the first experiment.

Figure 7: Comparison between the results of the three ex-

periments.

In summary, the results obtained with these expe-

riments can be considered promising, indicating the

viability of the model, showing that a stacked LSTM

neural network can successfully learn how to classify

human actions from motion capture data (proof-of-

concept).

4.6 Limitations

• Right now our system has the limitation of only

using CMU library skeleton structure (Figure 3),

which means that all training files and prediction

data have to have the same length (N

joints

). A

more generic approach that shall consider retar-

geting different skeleton structures into a baseline

model, thus allowing using multiple representati-

ons together is under development;

• Also, another limitation regarding data is the fact

that we are constrained to the size of the trai-

ning data set available for experimentation. So,

the results that have been obtained reflect that.

Although, the significant improvement the second

experiment showed relatively to the first, make us

confident that this, at least, indicates the feasibi-

lity of the model and that, with larger databases,

the system should perform much better in terms

of accuracy classifying the data thus solving the

underfitting problem;

• Allowing recognition of other features from the

motion captured data, such as affective body pos-

tures (Kleinsmith et al., 2011), and gestures, e.g.

a ‘happy walk’ or a ‘sad handshake’. This feature

would be of the most importance when trying to

retrieve creative content that involves digital ac-

tors (pantomime) and crowd simulations;

• Extending the ontology in a way that would al-

low developing automatic recognizers for any ot-

her type of media related to the creative process in

a studio.

5 CONCLUSIONS

Finding new (more efficient) ways of authoring crea-

tive content is a feature that interest the most to com-

panies in this sector. This work aims at studying ways

of improving productivity by reducing time and ef-

fort authoring new animations by reusing older me-

dias into new projects. Since the volume of material

produced by such companies can be extremely large,

cataloging old ‘assets’ to facilitate tag-based searches

for future reference is a key aspect when dealing with

problems of this nature.

In this project, we are interested in developing

a tool for automatic classification of motion capture

content in terms of the actions the actor is performing,

like walking, running, jumping, etc.

We designed a system that relies on deep lear-

ning, more specifically on long short-term memory

(LSTM) neural networks, to analyze the content of

GRAPP 2019 - 14th International Conference on Computer Graphics Theory and Applications

242

motion capture files in BVH format and classify it

according to labels defined by a simplified ontology

composed of 9 action tags.

Our approach considered a separate data set of ca-

refully edited mocap files for training the network on

how to recognize each action. These data sets were

adapted from the freely available CMU Motion Cap-

ture Library. After training, the network was tested

using a different set of files that did not have been

used during training. For the sake of assessment, each

of these files were manually annotated with the ex-

pected label.

Comparing the results obtained by the classifica-

tion software against the expected manually annota-

ted tags, the system showed, in several of the tests, an

accuracy in some cases better than 95%, what can in-

dicate that the original hypothesis have been satisfied.

For the future, it’s expected to improve training

by adding other actions to the ontology like, for in-

stance, considering affective body postures and/or ot-

her kinds of medias that might be of interest in a pro-

duction pipeline like textures, sounds, etc.

The ultimate goal would be to design an extenda-

ble modular content annotator capable of annotating

with different types of medias, based on a general-

purpose ontology.

Another possible application that might gain from

this automatic motion capture action recognition

technology is authoring character animations for the

purpose of retargeting crowd behaviors to different

scenarios. In theory, such an AI system could help

understanding each character’s movements in a given

situation and then help adapting the animations to new

target scenarios, and facilitate authoring crowd simu-

lation.

ACKNOWLEDGMENT

This publication has emanated from research sup-

ported in part by a research grant from Science

Foundation Ireland (SFI) under the Grant Number

15/RP/2776 and in part by the European Unions Hori-

zon 2020 Research and Innovation Programme under

Grant Agreement No 780470.

REFERENCES

Brownlee, J. (2018). Long Short-Term Memory Networks

with Python - Develop Sequence Prediction Models

With Deep Learning. Machine Learning Mastery.

[eBook].

B

¨

utepage, J., Black, M. J., Kragic, D., and Kjellstr

¨

om,

H. (2017). Deep representation learning for hu-

man motion prediction and classification. CoRR,

abs/1702.07486. http://arxiv.org/abs/1702.07486.

CMU Graphics Lab (2018). CMU graphics lab motion cap-

ture database. http://mocap.cs.cmu.edu/.

Delbridge, M. (2015). Motion Capture in Performance -

An Introduction. Palgrave Macmillan UK, first edition

edition.

Du, Y., Wong, Y., Liu, Y., Han, F., Gui, Y., Wang, Z.,

Kankanhalli, M., and Geng, W. (2016). Marker-less

3D human motion capture with monocular image se-

quence and height-maps. In European Conference on

Computer Vision, pages 20–36. Springer.

Fabbri, M., Lanzi, F., Calderara, S., Palazzi, A., Vezzani,

R., and Cucchiara, R. (2018). Learning to detect and

track visible and occluded body joints in a virtual

world. CoRR, abs/1803.08319. http://arxiv.org/abs/

1803.08319.

Gupta, A., Martinez, J., Little, J. J., and Woodham, R. J.

(2014). 3d pose from motion for cross-view action re-

cognition via non-linear circulant temporal encoding.

In 2014 IEEE Conference on Computer Vision and

Pattern Recognition, pages 2601–2608.

Hochreiter, S. and Schmidhuber, J. (1997). Long short-

term memory. Neural Computation, 9(8):1735–1780.

D.O.I.: 10.1162/neco.1997.9.8.1735, https://doi.org/

10.1162/neco.1997.9.8.1735.

Kleinsmith, A., Bianchi-Berthouze, N., and Steed, A.

(2011). Automatic recognition of non-acted affective

postures. Trans. Sys. Man Cyber. Part B, 41(4):1027–

1038. D.O.I.: 10.1109/TSMCB.2010.2103557.

Martinez, J., Black, M. J., and Romero, J. (2017). On

human motion prediction using recurrent neural net-

works. CoRR, abs/1705.02445. http://arxiv.org/abs/

1705.02445.

Menache, A. (2011). Understanding Motion Capture for

Computer Animation. Morgan Kaufmann, second edi-

tion edition.

SAUCE Project (2018). Smart asset re-use in creative envi-

ronments - SAUCE. http://www.sauceproject.eu.

Tekin, B., Rozantsev, A., Lepetit, V., and Fua, P. (2016). Di-

rect prediction of 3d body poses from motion compen-

sated sequences. In 2016 IEEE Conference on Com-

puter Vision and Pattern Recognition (CVPR), pages

991–1000.

Toshev, A. and Szegedy, C. (2014). Deeppose: Human pose

estimation via deep neural networks. In The IEEE

Conference on Computer Vision and Pattern Recog-

nition (CVPR).

Using LSTM for Automatic Classification of Human Motion Capture Data

243