ID-Softmax: A Softmax-like Loss for ID Face Recognition

Yan Kong¹, Fuzhang Wu¹, Feiyue Huang² and Yanjun Wu³

¹Institute of Software, Chinese Academy of Sciences, Beijing, China

²YouTu Lab, Tencent, China

³State Key Laboratory of Computer Science, Beijing, China

Keywords:

Face Recognition, Loss Function, Softmax, Convolutional Neural Network.

Abstract:

Face recognition between photos from identification documents (ID, Citizen Card or Passport Card) and daily photos, named FRBID (Zhang et al., 2017), is widely used in real-world scenarios. However, the traditional Softmax loss of deep CNNs usually lacks discriminative power for FRBID. To address this problem, we first revisit recent progress in face recognition losses and give a theoretical and experimental analysis of why Softmax-like losses perform poorly on ID-daily face recognition. We then propose a novel approach named ID-Softmax, which uses ID face features as class 'agents' to guide deep CNNs to learn highly discriminative features between ID photos and daily photos. To promote ID-daily face recognition, we collect a large dataset, ID74K, which includes 74,187 identities with corresponding ID photos and daily photos. To test our approach, we evaluate the feature distribution and face verification performance on ID74K. In our experiments, ID-Softmax achieves the best performance among the compared state-of-the-art methods, which verifies the effectiveness of the proposed loss.

1 INTRODUCTION

Face recognition is a biometric identification technology based on human facial feature information, and it has been studied extensively for over 50 years. In recent years, with the rapid development of big data and deep learning, deep face recognition (verification in particular) has achieved remarkable improvements (Sun et al., 2014; Lu and Tang, 2014). In real-world applications, face recognition between photos from identification documents (ID, Citizen Card or Passport Card) and daily photos (FRBID) is gaining more attention because it uses the face from an ID photo as the gallery and thus does not require the probe to be registered in advance (Zhang et al., 2017). We show examples of an ID photo and daily photos in Figure 1. In this paper, we denote face verification between an ID face photo and a daily face photo as ID-daily, and face verification between two daily face photos as daily-daily.

Though previous deep face models achieve impressive results (Schroff et al., 2015; Wen et al., 2016; Liu et al., 2016; Liu et al., 2017; Wang et al., 2018), researchers find that recognition performance drops dramatically when these models are applied to real-world security certificate applications (Zhou et al., 2015). Several challenges are associated with FRBID.

The first challenge is the imbalance between ID photos and daily photos in the training phase. Benefiting from the dramatically increasing volume of web data, we can easily collect millions of daily photos. Because of the capture environment and privacy issues, however, ID photos are rarely available on the Internet. Hence, collecting large-scale paired ID-daily photos is still expensive. How to apply deep learning to a dataset with so few ID photos remains an open problem.

The second challenge is the heterogeneity of shooting environments between the gallery set and the probe set (Xie et al., 2015). In real-world scenarios, even though ID photos or e-passport photos are captured in a very stable environment, most ID photos are compressed with low quality parameters because of ROM (Read-Only Memory) limitations. Furthermore, probe photos are captured in highly unstable environments using equipment such as surveillance and mobile-phone cameras. Noise, blur, arbitrary pose and aging increase the difficulty of recognition between ID photos and daily photos (Hong et al., 2017).

Under the FRBID scenario, an obvious difference between the training phase and the test phase is the frequency of ID-daily comparisons. In the training phase, ID-daily pairs are sampled infrequently because of the imbalance between ID photos and daily photos. In real-world applications (the test phase), all comparisons are between ID photos and daily photos. Most recent face recognition algorithms (Wen et al., 2016; Liu et al., 2016; Liu et al., 2017; Wang et al., 2018) are not designed to be optimized for this imbalanced FRBID scenario. To overcome the difficulty of FRBID, we propose a novel ID-Softmax loss, which aims to optimize ID-daily face recognition directly.

Kong, Y., Wu, F., Huang, F. and Wu, Y.

ID-Softmax: A Softmax-like Loss for ID Face Recognition.

DOI: 10.5220/0007370904120419

In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2019), pages 412-419

ISBN: 978-989-758-354-4

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Our major contributions can be summarized as follows:

(1) To train a CNN model usable in real-world ID-daily face recognition applications, we collect a face dataset named ID74K, which contains an ID photo and the corresponding daily photos for each person.

(2) We propose a new Softmax-like loss (ID-Softmax) as training supervision to address the imbalance of ID and daily face photos in training datasets. By simulating the real ID-daily face recognition scenario, we use ID face features as class 'agents' to guide deep CNNs to learn highly discriminative features between ID photos and daily photos. Our ID-Softmax loss significantly improves FRBID performance.

(3) Our experiments show that, with the supervision of ID-Softmax, the trained CNN model achieves better recognition performance than other existing methods.

2 RELATED WORK

Deep Convolutional Neural Network. Convolutional neural networks (CNNs) have been widely used in the computer vision community and have significantly improved state-of-the-art performance on a wide variety of computer vision tasks. Face recognition, as an important computer vision application, has made significant progress thanks to the great success of deep CNN models such as VGG (Simonyan and Zisserman, 2014), GoogLeNet (Szegedy et al., 2015) and ResNet (He et al., 2016).

Face Recognition Loss Function. The loss function plays an important role in deep face recognition. In early work on deep face recognition (Sun et al., 2014; Taigman et al., 2014), the model is trained on a labeled facial dataset under Softmax loss supervision, and a feature vector is then taken from an intermediate layer of the network for face recognition. Since Softmax loss does not directly optimize the comparison of face features, researchers proposed new loss functions operating in Euclidean space and angular space to further improve the discriminative power of face features.

In Euclidean space, the Contrastive loss (Chen et al., 2014) and the Triplet loss (Schroff et al., 2015) use pair-based training strategies to reduce intra-class variations and increase inter-class variations. However, a good sampling method is essential for the Contrastive loss and the Triplet loss to guarantee good model convergence. To reduce the optimization difficulty, Center loss (Wen et al., 2016) learns a feature center for each identity, which relaxes the constraint from pairwise distances to instance-to-center distances, but it still needs to be combined with Softmax loss to train the recognition model.
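The instance-to-center idea can be illustrated with a minimal sketch, assuming the formulation of Wen et al. (2016): the center loss is half the summed squared distance between each feature and its class center, and it is added to the Softmax loss with a weight λ (`lam` below). The function names are illustrative, and the rule for updating the centers is omitted.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def center_loss(features, labels, centers):
    """0.5 * sum_i ||x_i - c_{y_i}||^2 over a mini-batch.
    `centers` maps a class label to its learned center c_j."""
    return 0.5 * sum(squared_distance(x, centers[y]) for x, y in zip(features, labels))

def joint_loss(softmax_value, features, labels, centers, lam):
    """Center loss is not used alone: it is added to the Softmax loss with weight lam."""
    return softmax_value + lam * center_loss(features, labels, centers)

# Two samples of class 0, each at distance 1 from the center (2, 0).
print(center_loss([[1.0, 0.0], [3.0, 0.0]], [0, 0], {0: [2.0, 0.0]}))  # 1.0
```

Because each sample is compared with one center instead of with other samples, no pair or triplet mining is needed, which is the relaxation described above.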

Benefiting from a clearer geometric interpretation, angular-space losses are attracting more attention from researchers. Both Large Margin Softmax (Liu et al., 2016) and SphereFace (Liu et al., 2017) add an angular constraint for each identity by multiplying the feature angle by a parameter m. To keep the resulting cosine-based loss monotonic in the angle, and hence optimizable, a piecewise function is introduced. Furthermore, Large Margin Softmax and SphereFace also need the original Softmax loss to ensure convergence. To overcome this optimization difficulty, CosFace (Wang et al., 2018) introduces the margin in cosine space instead of angular space. CosFace is easy to implement and achieves state-of-the-art performance on MegaFace (Kemelmacher-Shlizerman et al., 2016).

Normalization. Feature and weight normalization have been proven very effective for face recognition. NormFace (Wang et al., 2017) normalizes the learned deep features and the weight matrix of the fully connected (FC) layer before the Softmax loss layer, which forces the CNN to concentrate on optimizing the angle while ignoring radial variation. SphereFace (Liu et al., 2017) and CosFace (Wang et al., 2018) also use normalization to improve face recognition performance.

3 THE PROPOSED APPROACH

In this section, we first introduce the ID74K dataset used for training and testing. Then we revisit recent progress on Softmax loss and analyze its drawbacks for FRBID. Finally, we introduce our ID-Softmax loss.

3.1 Data Collection

Most famous public face recognition datasets, such

as LFW(Huang et al., 2008), CASIA-WebFace (Yi

ID-Softmax: A Softmax-like Loss for ID Face Recognition

413

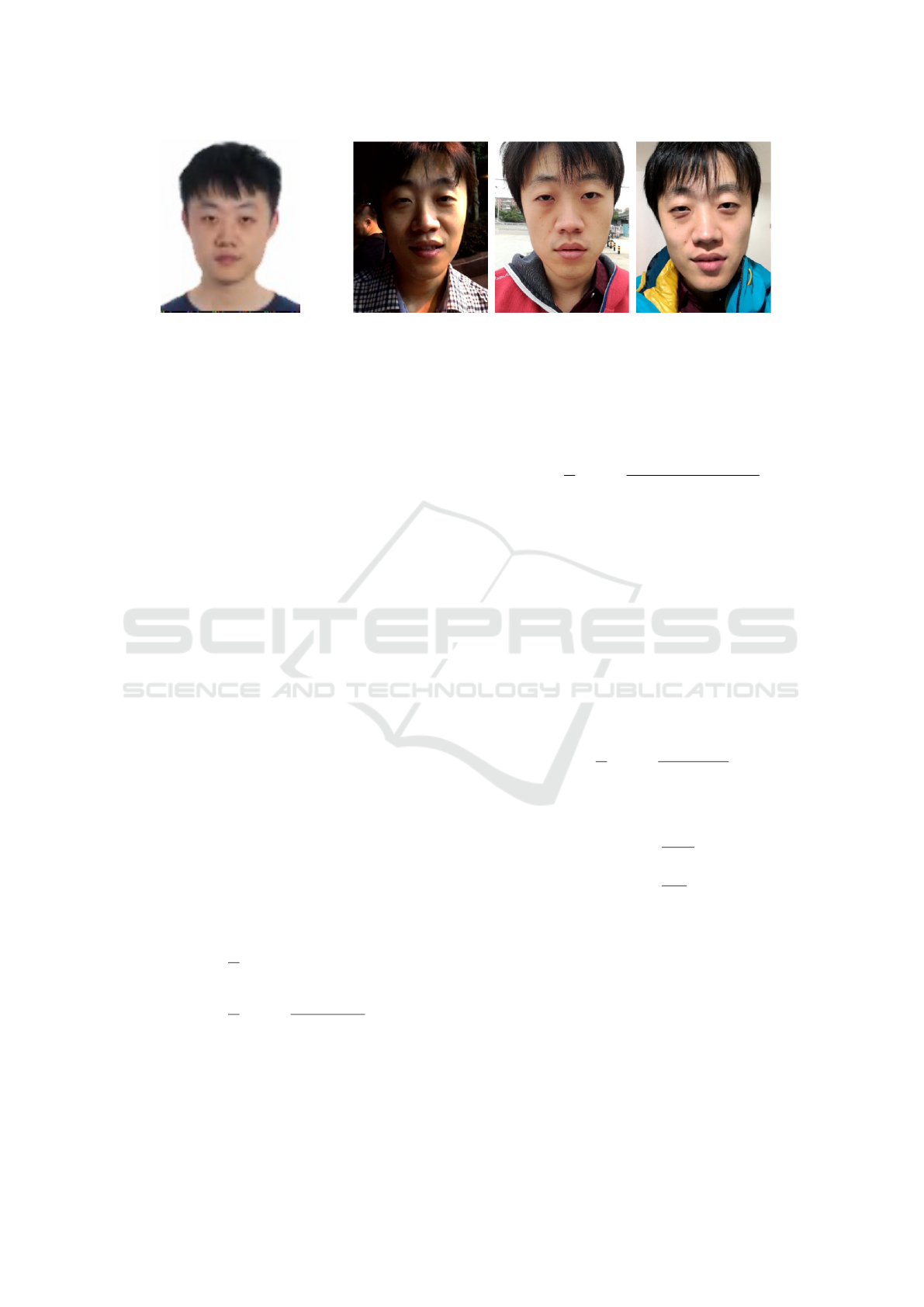

(a) ID photo (b) Daily photos

Figure 1: An example of ID photo and daily photos in our ID74K dataset. (a) is an ID photo collected from IC chip embedded

in Chinese Identity Card by a Card Reader, and its image quality is low because of image compression, (b) is a series of daily

life photos captured by mobile-phone cameras.

et al., 2014), MegaFace (Kemelmacher-Shlizerman

et al., 2016), are crawled from Internet. Due to lack

of ID face photos, researchers find that the recognition

performance drops dramatically when models trained

on public datasets are applied on (Zhou et al., 2015).

In order to overcome the data limitation, we collect

an ID-daily face recognition dataset named ID74K.

There are 74,187 identities in ID74K, and each iden-

tity contains 1 ID face photo and 5 daily photos (some

examples showed in Figure 1) . The ID face photos

are collected from IC chip embedded in Chinese Iden-

tity Card by a Card Reader, while the daily photos are

captured by mobile-phone cameras from real life. All

these photos are provided by volunteers with reasona-

ble payment. In our experiments, we use 70,000 iden-

tities for training and use 4,187 identities for evalua-

tion. It need to note that our dataset is collected from

daily life, which is very different from public face re-

cognition dataset, and the distribution of ID photos

and daily photos are still heavy unbalanced.

3.2 Recent Progress on Softmax

Classical Softmax. As an important component of deep image classification, Softmax loss is widely used in deep models. The Softmax function is a generalization of the logistic function. Given an input feature vector $x_i$ and its corresponding label $y_i$, the classical Softmax loss can be written as

$$L_s = -\frac{1}{N}\sum_{i=1}^{N}\log(p_i) = -\frac{1}{N}\sum_{i=1}^{N}\log\left(\frac{e^{W_{y_i}^T x_i + b_{y_i}}}{\sum_{j=1}^{n} e^{W_j^T x_i + b_j}}\right). \tag{1}$$

In Equation (1), $d$ is the feature dimension of $x_i$, $W_j$ is the $j$-th column of the weight matrix $W \in \mathbb{R}^{d\times n}$ of the last fully connected layer, $n$ is the number of classes, $b \in \mathbb{R}^{n}$ is the bias term with $j$-th element $b_j$, and $N$ is the mini-batch size. We fix the bias $b = 0$ for simplicity. Since $W_j^T x_i = \|W_j\|\|x_i\|\cos(\theta_{j,i})$, the classical Softmax loss can be rewritten as

$$L_s = -\frac{1}{N}\sum_{i=1}^{N}\log\left(\frac{e^{\|W_{y_i}\|\|x_i\|\cos(\theta_{y_i,i})}}{\sum_{j=1}^{n} e^{\|W_j\|\|x_i\|\cos(\theta_{j,i})}}\right), \tag{2}$$

where $\theta_{j,i}$ is the angle between $W_j$ and $x_i$.
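To make Equations (1) and (2) concrete, here is a minimal pure-Python sketch (an illustration, not the authors' MXNet code). It assumes `weights` holds the columns $W_j$ as plain lists and that the bias defaults to zero as in Equation (2); all helper names are hypothetical. It checks that the inner-product form and the norm-times-cosine form give the same loss.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def cross_entropy(logits, target):
    """-log softmax(logits)[target], using log-sum-exp for numerical stability."""
    mx = max(logits)
    log_sum = mx + math.log(sum(math.exp(z - mx) for z in logits))
    return log_sum - logits[target]

def softmax_loss(features, labels, weights, biases=None):
    """Eq. (1): logits W_j^T x_i + b_j, averaged over the mini-batch."""
    if biases is None:
        biases = [0.0] * len(weights)  # b = 0, as assumed for Eq. (2)
    losses = [cross_entropy([dot(w, x) + b for w, b in zip(weights, biases)], y)
              for x, y in zip(features, labels)]
    return sum(losses) / len(losses)

def softmax_loss_angular(features, labels, weights):
    """Eq. (2): logits ||W_j|| ||x_i|| cos(theta_{j,i}) with the bias fixed to 0."""
    losses = []
    for x, y in zip(features, labels):
        logits = []
        for w in weights:
            cos_theta = dot(w, x) / (norm(w) * norm(x))
            logits.append(norm(w) * norm(x) * cos_theta)
        losses.append(cross_entropy(logits, y))
    return sum(losses) / len(losses)
```

For example, with two classes $W_0 = (1, 0)$, $W_1 = (0, 1)$ and a single feature $x = (1, 0)$ labeled 0, both functions return $\log(1 + e^{-1}) \approx 0.3133$.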

Normalized Softmax. Feature and weight normalization have been proven effective for face recognition (Wang et al., 2017). With L2 normalization of $W_j$ and $x_i$, the neural network can directly optimize cosine similarity. However, after normalization the logits are confined to $[-1, 1]$ and the network fails to converge. To avoid this convergence difficulty, we follow the suggestion of NormFace (Wang et al., 2017) and introduce a scale factor $s$ into the normalized version of Softmax loss. Hence, the Softmax loss with cosine similarity can be rewritten as

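$$L_n = -\frac{1}{N}\sum_{i=1}^{N}\log\left(\frac{e^{s\cos(\theta_{y_i,i})}}{\sum_{j} e^{s\cos(\theta_{j,i})}}\right), \tag{3}$$

subject to

$$\tilde{W}_j = \frac{W_j}{\|W_j\|},\quad \tilde{x}_i = \frac{x_i}{\|x_i\|},\quad \cos(\theta_{j,i}) = \tilde{W}_j^T\tilde{x}_i.$$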
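A minimal sketch of Equation (3) in pure Python, assuming the same list-of-columns layout for the weights (helper names are hypothetical). The example at the end illustrates why the scale $s$ is needed: with $s = 1$ the loss of a perfectly classified two-class sample cannot drop below $\log(1 + e^{-2}) \approx 0.127$, while $s = 64$ (the value used later in Section 4.2) lets it approach zero.

```python
import math

def l2_normalize(v):
    n = math.sqrt(sum(a * a for a in v))
    return [a / n for a in v]

def cross_entropy(logits, target):
    mx = max(logits)
    return mx + math.log(sum(math.exp(z - mx) for z in logits)) - logits[target]

def normalized_softmax_loss(features, labels, weights, s=64.0):
    """Eq. (3): logits are s * cos(theta_{j,i}) = s * W~_j^T x~_i."""
    w_tilde = [l2_normalize(w) for w in weights]  # normalize each column W_j
    losses = []
    for x, y in zip(features, labels):
        x_tilde = l2_normalize(x)
        cosines = [sum(a * b for a, b in zip(w, x_tilde)) for w in w_tilde]
        losses.append(cross_entropy([s * c for c in cosines], y))
    return sum(losses) / len(losses)

# Best case: x points exactly at W_0 and exactly away from W_1.
W = [[1.0, 0.0], [-1.0, 0.0]]
print(normalized_softmax_loss([[3.0, 0.0]], [0], W, s=1.0))   # log(1 + e^-2), about 0.1269
print(normalized_softmax_loss([[3.0, 0.0]], [0], W, s=64.0))  # effectively 0
```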

VISAPP 2019 - 14th International Conference on Computer Vision Theory and Applications

Large Margin Cosine Softmax. Deep features learned by classical Softmax and normalized Softmax are still not sufficiently discriminative, because these losses only emphasize correct classification. To further reduce intra-class variations and increase inter-class variations of face features, CosFace (Wang et al., 2018) adds a cosine margin to the classification boundary by introducing a parameter $m$, which is naturally incorporated into the cosine formulation of Softmax. The large margin cosine Softmax is defined as

$$L_c = -\frac{1}{N}\sum_{i=1}^{N}\log\left(\frac{e^{s\cdot(\cos(\theta_{y_i,i})-m)}}{e^{s\cdot(\cos(\theta_{y_i,i})-m)}+\sum_{j\neq y_i}e^{s\cdot\cos(\theta_{j,i})}}\right). \tag{4}$$
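The only change from Equation (3) is that $m$ is subtracted from the target-class cosine before scaling. A minimal sketch, again assuming columns stored as Python lists and hypothetical helper names; the defaults $s = 64$ and $m = 0.35$ are the values used for Model CosFace in Section 4.2.

```python
import math

def l2_normalize(v):
    n = math.sqrt(sum(a * a for a in v))
    return [a / n for a in v]

def cross_entropy(logits, target):
    mx = max(logits)
    return mx + math.log(sum(math.exp(z - mx) for z in logits)) - logits[target]

def cosface_logits(x, label, weights, s=64.0, m=0.35):
    """s * (cos(theta_{y_i,i}) - m) for the target class, s * cos(theta_{j,i}) otherwise."""
    x_tilde = l2_normalize(x)
    logits = []
    for j, w in enumerate(weights):
        cos_theta = sum(a * b for a, b in zip(l2_normalize(w), x_tilde))
        logits.append(s * (cos_theta - m) if j == label else s * cos_theta)
    return logits

def cosface_loss(features, labels, weights, s=64.0, m=0.35):
    """Eq. (4), averaged over the mini-batch."""
    losses = [cross_entropy(cosface_logits(x, y, weights, s, m), y)
              for x, y in zip(features, labels)]
    return sum(losses) / len(losses)
```

Setting `m=0` recovers Equation (3). Any positive margin makes the target logit harder to win, so the loss on the same batch increases, which pushes the network to tighten the decision boundary.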

3.3 ID-Softmax

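We begin by analyzing Softmax-like losses theoretically. Following NormFace (Wang et al., 2017), since $\tilde{W}_j$ and $\tilde{x}_i$ both have unit length, $\|\tilde{W}_j - \tilde{x}_i\|_2^2 = 2 - 2\tilde{W}_j^T\tilde{x}_i$, so the normalized inner product can be rewritten as

$$\tilde{W}_j^T\tilde{x}_i = 1 - \frac{1}{2}\|\tilde{W}_j - \tilde{x}_i\|_2^2. \tag{5}$$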

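Hence, we can rewrite formula (3) as

$$\begin{aligned} L'_n &= -\frac{1}{N}\sum_{i=1}^{N}\log\left(\frac{e^{s\left(1-\frac{1}{2}\|\tilde{W}_{y_i}-\tilde{x}_i\|_2^2\right)}}{\sum_{j} e^{s\left(1-\frac{1}{2}\|\tilde{W}_j-\tilde{x}_i\|_2^2\right)}}\right)\\ &= -\frac{1}{N}\sum_{i=1}^{N}\log\left(\frac{e^{s}\cdot e^{-\frac{s}{2}\|\tilde{W}_{y_i}-\tilde{x}_i\|_2^2}}{\sum_{j} e^{s}\cdot e^{-\frac{s}{2}\|\tilde{W}_j-\tilde{x}_i\|_2^2}}\right). \end{aligned} \tag{6}$$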
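Equations (5) and (6) are exact rewrites, not approximations. The following sketch (hypothetical helper names, random toy vectors) checks both numerically: the unit-vector identity of Equation (5), and that the distance form of Equation (6), in which the common factor $e^{s}$ cancels, gives the same per-sample loss as the cosine form of Equation (3).

```python
import math
import random

def l2_normalize(v):
    n = math.sqrt(sum(a * a for a in v))
    return [a / n for a in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def loss_cosine_form(x, y, weights, s):
    """Per-sample term of Eq. (3)."""
    xt = l2_normalize(x)
    num = math.exp(s * dot(l2_normalize(weights[y]), xt))
    den = sum(math.exp(s * dot(l2_normalize(w), xt)) for w in weights)
    return -math.log(num / den)

def loss_distance_form(x, y, weights, s):
    """Per-sample term of Eq. (6): e^s * e^{-(s/2)||W~_j - x~_i||^2}."""
    xt = l2_normalize(x)
    terms = [math.exp(s) * math.exp(-0.5 * s * sq_dist(l2_normalize(w), xt)) for w in weights]
    return -math.log(terms[y] / sum(terms))

random.seed(0)
weights = [[random.gauss(0, 1) for _ in range(8)] for _ in range(5)]
x = [random.gauss(0, 1) for _ in range(8)]
a, b = l2_normalize(weights[0]), l2_normalize(x)
print(dot(a, b), 1 - 0.5 * sq_dist(a, b))         # Eq. (5): both sides agree
print(loss_cosine_form(x, 2, weights, s=10.0),
      loss_distance_form(x, 2, weights, s=10.0))  # Eq. (3) vs Eq. (6): agree
```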

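Since $\frac{s}{2}\|\tilde{W}_j - \tilde{x}_i\|_2^2 \geq 0$ and $f(x) = e^{x}$ is monotonically increasing, the loss in Equation (6) decreases as the distance to the target column $\tilde{W}_{y_i}$ shrinks and as the distances to the other columns grow. The learning process of Softmax for a CNN can therefore be formulated as

$$\begin{cases}\arg\min_{W,x}\|\tilde{W}_j-\tilde{x}_i\|_2^2, & \text{if } y_i = j,\\ \arg\max_{W,x}\|\tilde{W}_j-\tilde{x}_i\|_2^2, & \text{if } y_i \neq j,\end{cases} \tag{7}$$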

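which is similar to the triplet loss,

$$L = \max\left(0,\ m + \|x_i - x_p\|_2^2 - \|x_i - x_n\|_2^2\right),\quad y_i = y_p,\ y_i \neq y_n, \tag{8}$$

where the positive sample $x_p$ shares the label of the anchor $x_i$ and the negative sample $x_n$ does not.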
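A minimal sketch of Equation (8) for a single triplet (names are illustrative). In the Softmax view of Equation (7), the column $\tilde{W}_{y_i}$ plays the role of the positive $x_p$ and every other column plays the role of a negative $x_n$, which is why the columns can be read as class 'agents'.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def triplet_loss(anchor, positive, negative, margin):
    """Eq. (8): hinge on m + ||x_i - x_p||^2 - ||x_i - x_n||^2."""
    return max(0.0, margin + squared_distance(anchor, positive)
               - squared_distance(anchor, negative))

print(triplet_loss([0.0, 0.0], [1.0, 0.0], [3.0, 0.0], margin=0.5))  # 0.0: negative already far enough
print(triplet_loss([0.0, 0.0], [1.0, 0.0], [0.5, 0.0], margin=0.5))  # 1.25: negative too close
```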

Viewed this way, $W_i$ can be treated as the 'agent' of the $i$-th class. During training, Softmax loss minimizes the angle between $W_{y_i}$ and $x_i$ and maximizes the angle between $W_j$ ($j \neq y_i$) and $x_i$. After the network converges, $W_i$ roughly corresponds to the mean of the features of the $i$-th class, because Softmax treats individual samples of a class as equally important.

However, in the real-world FRBID scenario, all face comparisons are between ID photos and daily photos. As mentioned in Section 1, there are obvious differences between ID photos and daily photos, so the ID photo feature of the $i$-th class lies far away from $W_i$.

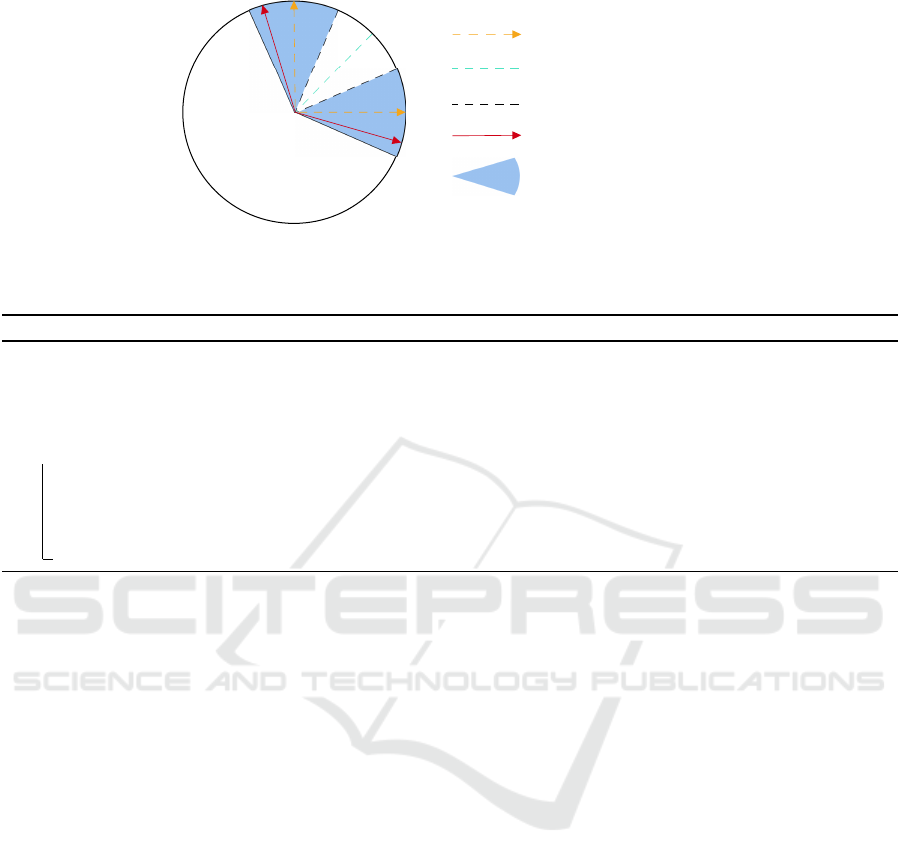

As illustrated in Figure 2, under classical Softmax loss, $W_0$ and $W_1$ converge to the means of the features of class 0 and class 1 respectively. Since there is a large margin between $W_0$ and $z_0$ (the ID face feature of class 0), the learned ID face feature $z_0$ is not a good representation of class 0. The mean θ between ID and daily features reported in Table 1 reflects this gap. The cosine margin introduced by CosFace can reduce the difference between $W_0$ and $z_0$, but it cannot solve the problem fundamentally.

According to the above theoretical and experimental analysis, the optimization goals of recently proposed Softmax losses do not match the FRBID scenario. How, then, can we develop an effective loss function that improves discriminative power in the FRBID scenario? An intuitive approach is to replace the 'agent' of the $i$-th class ($W_i$) with the feature of the $i$-th class's ID photo ($z_i$). Rewriting formula (3) accordingly gives

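$$\begin{aligned} L_{id} &= -\frac{1}{N}\sum_{i=1}^{N}\log\left(\frac{e^{s\|\tilde{z}_{y_i}\|\|\tilde{x}_i\|\cos(\theta'_{y_i,i})}}{\sum_{j} e^{s\|\tilde{z}_j\|\|\tilde{x}_i\|\cos(\theta'_{j,i})}}\right)\\ &= -\frac{1}{N}\sum_{i=1}^{N}\log\left(\frac{e^{s}\cdot e^{-\frac{s}{2}\|\tilde{z}_{y_i}-\tilde{x}_i\|_2^2}}{\sum_{j} e^{s}\cdot e^{-\frac{s}{2}\|\tilde{z}_j-\tilde{x}_i\|_2^2}}\right), \end{aligned} \tag{9}$$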

where $\theta'_{j,i}$ is the angle between $z_j$ and $x_i$. This formulation directly characterizes the variation between ID and daily face features. Ideally, both $z$ and $x$ should be updated as the deep features change; in other words, the ID photo features of every class would have to be re-extracted in every iteration, which is inefficient and even impractical.

One way to address this problem is a pair-based training strategy such as the contrastive loss or the triplet loss, but such strategies are not easy to train. In our solution, we make a necessary modification to Formula (9): we replace the ID face feature $z_i$ with a snapshot $z'_i$ of the ID face feature. In each iteration, $z'_i$ is replaced by the ID face feature of the corresponding class if that class's ID photo is in the mini-batch, which means the 'agents' of some classes may not be updated. In other words, if a mini-batch contains ID photos, only the $z'_i$ corresponding to those ID photos are updated. Formally, we adopt the Large Margin Cosine Softmax and define ID-Softmax as

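$$L_{id} = -\frac{1}{N}\sum_{i=1}^{N}\log\left(\frac{e^{s(\cos'(\theta_{y_i,i})-m)}}{e^{s(\cos'(\theta_{y_i,i})-m)}+\sum_{j\neq y_i}e^{s\cos'(\theta_{j,i})}}\right), \tag{10}$$

subject to

$$\tilde{z}'_j = \frac{z'_j}{\|z'_j\|},\quad \tilde{x}_i = \frac{x_i}{\|x_i\|},\quad \cos'(\theta_{j,i}) = \tilde{z}'^{T}_{j}\,\tilde{x}_i.$$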
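Equation (10) has the same shape as the CosFace loss of Equation (4); what changes is where the class vectors come from. The sketch below (pure Python, hypothetical names, not the authors' MXNet implementation) assumes the snapshots $z'_j$ are kept in a plain list `agents` indexed by class and are treated as constants, so no gradient would flow into them.

```python
import math

def l2_normalize(v):
    n = math.sqrt(sum(a * a for a in v))
    return [a / n for a in v]

def cross_entropy(logits, target):
    mx = max(logits)
    return mx + math.log(sum(math.exp(z - mx) for z in logits)) - logits[target]

def id_softmax_loss(features, labels, agents, s=64.0, m=0.35):
    """Eq. (10): agents[j] is the snapshot z'_j of class j's ID-photo feature.
    The margin m is subtracted from the target cosine before scaling by s."""
    z_tilde = [l2_normalize(z) for z in agents]
    losses = []
    for x, y in zip(features, labels):
        x_tilde = l2_normalize(x)
        cosines = [sum(a * b for a, b in zip(z, x_tilde)) for z in z_tilde]
        logits = [s * (c - m) if j == y else s * c for j, c in enumerate(cosines)]
        losses.append(cross_entropy(logits, y))
    return sum(losses) / len(losses)

# Toy check: a daily-photo feature close to its own ID-photo snapshot gives a
# lower loss than one that drifts toward another identity's ID photo.
agents = [[1.0, 0.0], [0.0, 1.0]]
near = id_softmax_loss([[0.9, 0.1]], [0], agents, s=16.0, m=0.35)
far = id_softmax_loss([[0.4, 0.6]], [0], agents, s=16.0, m=0.35)
print(near < far)  # True
```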

The learning procedure of ID-Softmax is summarized in Algorithm 1.


(Figure 2 diagram: last FC layer weights $W_0$, $W_1$; ID face feature vectors $z_0$, $z_1$; Softmax decision boundary; CosFace decision boundary; target feature region.)

Figure 2: Geometrical interpretation of Softmax loss from the feature perspective. In the FRBID scenario, $z_0$ is used as the representation of class 0. However, since there is a large margin between $W_0$ and $z_0$, the learned ID face feature $z_0$ is not a good representation of class 0.

Algorithm 1: The ID-Softmax feature learning algorithm.

Input: Training data $I_i$. Neural network feature function $f(\theta_C, I_i)$. Initialized face feature network parameters $\theta_C$. Feature parameters of the last fully connected layer $z'_{y_i}$ (the learning rate of $z'_{y_i}$ is set to 0). Learning rate $\mu_t$. Iteration counter $t = 0$.

Output: Face feature network parameters $\theta_C$.

while the network has not converged do

$t = t + 1$;

Forward the network and compute the ID-Softmax loss $L_{id}$;

For each $I_i$ in the current iteration, if $I_i$ is an ID photo, replace the parameter $z'_{y_i}$ by $f(\theta_C, I_i)$;

Backward the network and update the parameters $\theta_C$.

end while
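The agent bookkeeping in Algorithm 1 can be sketched as follows. This is a minimal illustration, assuming each training sample is a hypothetical `(image, label, is_id_photo)` tuple and that `feature_fn` stands in for $f(\theta_C, \cdot)$; the forward and backward passes are outside its scope. Agents are only ever overwritten, never moved by gradients, which is what a learning rate of 0 for $z'_{y_i}$ amounts to.

```python
def refresh_agents(agents, batch, feature_fn):
    """Snapshot step of Algorithm 1 (per-iteration update, as in Model ID-Softmax A).

    agents:     dict mapping class label -> current snapshot z'_label
    batch:      list of (image, label, is_id_photo) tuples
    feature_fn: callable standing in for f(theta_C, I_i)
    Returns the labels whose agents were overwritten in this iteration;
    classes whose ID photo is not in the batch keep their old snapshot.
    """
    refreshed = []
    for image, label, is_id_photo in batch:
        if is_id_photo:
            agents[label] = list(feature_fn(image))  # store a copy of the new feature
            refreshed.append(label)
    return refreshed

def fake_features(image):
    """Toy 2-D 'feature extractor' used only for this illustration."""
    return [float(len(image)), 1.0]

agents = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.7, 0.7]}
batch = [("id_photo_of_0", 0, True), ("daily_photo_of_1", 1, False), ("id_photo_of_2", 2, True)]
print(refresh_agents(agents, batch, fake_features))  # [0, 2]
print(agents[1])                                      # [0.0, 1.0] (unchanged)
```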

4 EXPERIMENTS

4.1 Implementation Details

Preprocessing. For all face photos, we use the publicly available open-source implementation of MTCNN (Zhang et al., 2016) to detect and align faces. The 5 facial points produced by MTCNN are used to perform a similarity transformation. All face photos are resized to 120×120. Each pixel of the RGB photos is normalized by subtracting 127.5 and then dividing by 128.
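The alignment and normalization steps can be sketched in pure Python. The least-squares fit below is the standard closed form for a 2-D similarity transform (rotation, uniform scale and translation) between two point sets; it is an illustrative stand-in, since the paper does not state which estimator or which 5-point reference template it uses, so the template is left as an input and warping the image to 120×120 is left to an image library. The pixel normalization follows the text exactly.

```python
def fit_similarity(src, dst):
    """Least-squares (a, b, tx, ty) such that
    dst ~ (a*x - b*y + tx, b*x + a*y + ty) for each (x, y) in src."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    num_a = num_b = den = 0.0
    for (x, y), (u, v) in zip(src, dst):
        x, y, u, v = x - sx, y - sy, u - dx, v - dy  # center both point sets
        num_a += x * u + y * v
        num_b += x * v - y * u
        den += x * x + y * y
    a, b = num_a / den, num_b / den
    tx = dx - (a * sx - b * sy)
    ty = dy - (b * sx + a * sy)
    return a, b, tx, ty

def apply_similarity(params, point):
    a, b, tx, ty = params
    x, y = point
    return (a * x - b * y + tx, b * x + a * y + ty)

def normalize_pixel(value):
    """Map an 8-bit channel value to roughly [-1, 1]: (p - 127.5) / 128."""
    return (value - 127.5) / 128.0

print(normalize_pixel(0), normalize_pixel(255))  # -0.99609375 0.99609375
```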

Training. Since CNNs have achieved outstanding performance in face recognition tasks, we use ResNet-18 (He et al., 2016) with a 512-dimensional fully connected output layer as the CNN architecture. Our model and training code are implemented in the MXNet framework (Chen et al., 2015). The CNN models are trained by SGD with momentum. We set the momentum to 0.9, the initial learning rate to 0.01, the weight decay to 0.0005, and the batch size to 128. The networks are trained on 4 Nvidia Tesla P40 GPUs. For all models, we train the CNN for 120 epochs, and the learning rate is divided by 10 at the 40th, 80th and 120th epochs. The training faces are horizontally flipped for data augmentation. As mentioned in Section 3.1, we use 70,000 identities for training and 4,187 identities for evaluation, with no overlap between the training and evaluation sets.
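The step schedule and the resulting epoch length can be written down directly. A small sketch, assuming 0-indexed epochs and that each of the 70,000 training identities contributes exactly 1 ID photo and 5 daily photos (420,000 images, with flipping applied on the fly); the function names are hypothetical. Under this 0-indexed convention, the third division at epoch 120 falls after the last of the 120 training epochs.

```python
import math

def learning_rate(epoch, base_lr=0.01, milestones=(40, 80, 120), factor=0.1):
    """Step schedule: divide the learning rate by 10 at each milestone epoch."""
    drops = sum(1 for milestone in milestones if epoch >= milestone)
    return base_lr * factor ** drops

def iterations_per_epoch(num_images, batch_size=128):
    return math.ceil(num_images / batch_size)

train_images = 70_000 * (1 + 5)              # assumed: 1 ID + 5 daily photos per identity
print(iterations_per_epoch(train_images))    # 3282
print([learning_rate(e) for e in (0, 40, 80, 119)])
```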

4.2 Evaluation

In this section, we compare the face recognition performance of different loss functions in the FRBID scenario and evaluate the influence of different update strategies. For a fair comparison, we train four models under the supervision of Normalized Softmax (Model NormFace), Large Margin Cosine Softmax loss (Model CosFace) and ID-Softmax loss (Model ID-Softmax A, Model ID-Softmax B). Because publicly available datasets such as LFW and CASIA-WebFace lack ID face photos, we only evaluate the different methods on our ID74K dataset. The experimental results in Table 2 show that our method is also competitive in the daily-daily face recognition scenario.

Model NormFace: We use NormFace as the baseline model. The training procedure is described in Section 4.1. Furthermore, we set the scaling parameter s to 64, the value used in the CosFace paper (Wang et al., 2018). It takes 45 hours to train this model.

Model CosFace: The CosFace has been proven ef-

fective to learn compact face feature for face recog-

VISAPP 2019 - 14th International Conference on Computer Vision Theory and Applications

416

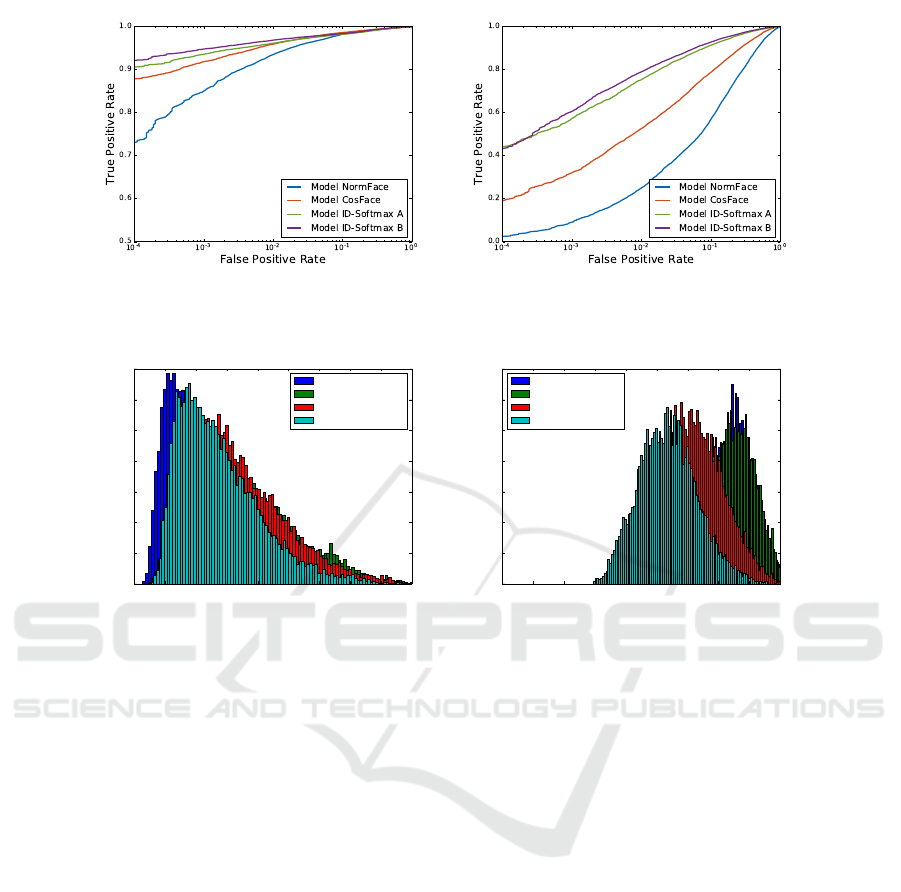

(a) ROC curves of daily-daily scenario. (b) ROC curves of ID-daily scenario.

Figure 3: We draw the ROC curves of Model NormFace, Model CosFace, Model ID-Softmax A, Model ID-Softmax B in

ID-daily/daily-daily scenario respectively.

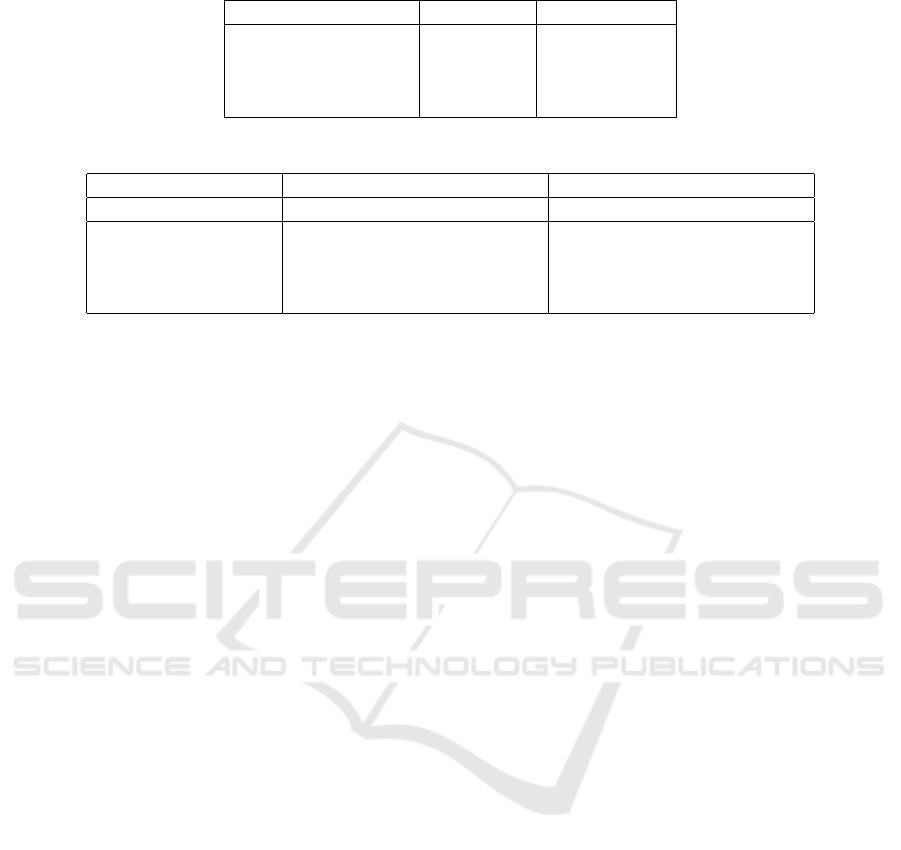

0 10 20 30 40 50 60 70 80 90

θ

0

100

200

300

400

500

600

700

Numbers

Model NormFace

Model CosFace

Model ID-Softmax A

Model ID-Softmax B

(a) θ distribution of daily-daily scenario.

0 10 20 30 40 50 60 70 80 90

θ

0

50

100

150

200

250

300

350

Numbers

Model NormFace

Model CosFace

Model ID-Softmax A

Model ID-Softmax B

(b) θ distribution of ID-daily scenario.

Figure 4: We draw the θ distribution of Model NormFace, Model CosFace, Model ID-Softmax A, Model ID-Softmax B in

ID-daily/daily-daily scenario respectively.

nition. The training procedure is described in section

4.1. Furthermore, we set scaling parameter s to 64,

and the margin parameter m is set to 0.35 which is

suggested by CosFace paper (Wang et al., 2018). It

takes 45 hours to train this model.

Model ID-Softmax A: This model is trained with the ID-Softmax loss. We update the parameter $z'$ in every mini-batch iteration, using only the ID photo features present in the current mini-batch. The learning rate of $z'$ in Model ID-Softmax A is set to 0. It takes 45 hours to train this model.

Model ID-Softmax B: This model is trained with the ID-Softmax loss. We update the parameter $z'$ every 60 mini-batch iterations. In contrast to Model ID-Softmax A, we extract the features of all ID photos in the training dataset and update all agents at once. We update $z'$ every 60 iterations instead of every iteration because updating the $z'$ of all identities is computationally expensive; we chose 60 as the update interval empirically to accelerate network convergence. The learning rate of $z'$ in Model ID-Softmax B is set to 0. It takes 120 hours to train this model.
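A back-of-the-envelope comparison of the two update strategies, under assumptions that are ours rather than the paper's: 70,000 training identities with 1 ID photo and 5 daily photos each, shuffled epochs in which every image is seen once, and a batch size of 128. The numbers are estimates that illustrate the trade-off, not measurements; the function name is hypothetical.

```python
import math

def update_strategy_costs(identities=70_000, photos_per_identity=6,
                          batch_size=128, refresh_every=60):
    images = identities * photos_per_identity
    iters_per_epoch = math.ceil(images / batch_size)
    return {
        # Model A: an agent changes only when its ID photo is sampled,
        # i.e. about once per shuffled epoch.
        "A_iterations_between_refreshes": iters_per_epoch,
        # Expected number of ID photos (agent refreshes) per mini-batch in Model A.
        "A_id_photos_per_batch": batch_size / photos_per_identity,
        # Model B: every agent is re-extracted every `refresh_every` iterations.
        "B_iterations_between_refreshes": refresh_every,
        # Extra forward passes per iteration in Model B, amortized.
        "B_extra_forwards_per_iteration": identities / refresh_every,
    }

for key, value in update_strategy_costs().items():
    print(f"{key}: {value:.1f}")
```

Under these assumptions, Model ID-Softmax A's agents can be thousands of iterations old, whereas Model ID-Softmax B keeps them fresh at the price of roughly 1,167 extra forward passes per iteration on top of the 128-image batch, which is consistent in direction with the reported 45 versus 120 training hours.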

4.2.1 Experiments on the Feature Distribution

In this section, we evaluate how effectively each compared model minimizes the intra-class distances between ID face photos and daily photos. To reflect the difference visually and quantitatively, we calculate the average angle between ID photos and daily photos for 4000 individuals of the ID74K dataset. Figure 4(b) visualizes the distribution of θ between ID features and daily features. The average angle of our ID-Softmax models is clearly the smallest, which indicates that the ID-Softmax loss narrows the angle between ID face photos and daily face photos in the feature space. The average angles are reported in Table 1: in the ID-daily scenario, the average feature angles of our models are smaller than those of the other models, with Model ID-Softmax B the smallest (53.64). The difference between the θ distributions in the daily-daily scenario is small (Figure 4(a)), and the mean θ of Model NormFace is the smallest in the daily-daily scenario.
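The per-pair angle behind Figure 4 and Table 1 can be computed as below. This sketch assumes features are grouped per identity in hypothetical dictionaries and that every same-person ID-daily pair is averaged with equal weight; the paper does not specify how pairs are weighted.

```python
import math

def angle_degrees(u, v):
    """Angle between two feature vectors, in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    cos = dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))  # clamp rounding error

def mean_id_daily_angle(id_features, daily_features):
    """id_features: person -> ID-photo feature.
    daily_features: person -> list of daily-photo features.
    Returns the mean angle over all same-person (ID, daily) pairs."""
    angles = [angle_degrees(id_features[p], d)
              for p in id_features for d in daily_features[p]]
    return sum(angles) / len(angles)

# One person: one daily photo orthogonal to the ID photo, one identical to it.
print(mean_id_daily_angle({"p0": [1.0, 0.0]}, {"p0": [[0.0, 1.0], [1.0, 0.0]]}))
```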


Table 1: Mean θ (in degrees) of different models.

| Model | ID vs Daily | Daily vs Daily |
|---|---|---|
| Model NormFace | 72.72 | 26.81 |
| Model CosFace | 74.30 | 32.40 |
| Model ID-Softmax A | 61.67 | 33.43 |
| Model ID-Softmax B | 53.64 | 27.57 |

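Table 2: Face verification performance (TAR at the given FAR) of different models.

| Model | ID vs Daily, FAR 1% | ID vs Daily, FAR 0.1% | ID vs Daily, FAR 0.01% | Daily vs Daily, FAR 1% | Daily vs Daily, FAR 0.1% | Daily vs Daily, FAR 0.01% |
|---|---|---|---|---|---|---|
| Model NormFace | 25.18% | 9.23% | 2.56% | 93.55% | 85.04% | 73.20% |
| Model CosFace | 52.73% | 32.16% | 19.34% | 95.95% | 91.87% | 87.92% |
| Model ID-Softmax A | 75.53% | 57.48% | 44.32% | 96.15% | 93.61% | 90.67% |
| Model ID-Softmax B | 79.17% | 60.88% | 43.54% | 96.86% | 94.80% | 92.18% |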

4.2.2 Experiments on the Feature Verification

Face verification is one of the most widely used applications of face recognition: the algorithm must decide whether a given pair of photos shows the same person. We use the True Accept Rate (TAR) and the False Accept Rate (FAR) to evaluate face verification performance, following the common face verification protocol. Specifically, in the ID-daily scenario, we randomly sample an ID photo and a daily photo of the same individual as a positive pair, and an ID photo and a daily photo of different individuals as a negative pair. In the daily-daily scenario, we randomly sample two daily photos of the same individual as a positive pair and two daily photos of different individuals as a negative pair. We use the cosine similarity of L2-normalized face features as the comparison score.
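A minimal sketch of how TAR at a fixed FAR can be read off such scores. It assumes (our choice, not stated in the paper) that a pair is accepted when its cosine score is strictly greater than the threshold, and that the threshold is the lowest one that keeps the fraction of accepted negative pairs at or below the target FAR; the function names are hypothetical.

```python
import math

def cosine_score(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def tar_at_far(pos_scores, neg_scores, far):
    """Return (TAR, threshold); a pair is accepted when score > threshold.
    The threshold is the lowest one whose false accept rate is <= far."""
    neg = sorted(neg_scores, reverse=True)
    # Maximum number of negative pairs we may accept (floor keeps FAR <= far).
    allowed = math.floor(far * len(neg))
    threshold = neg[allowed] if allowed < len(neg) else -math.inf
    tar = sum(1 for s in pos_scores if s > threshold) / len(pos_scores)
    return tar, threshold

neg = [i / 10 for i in range(10)]     # 10 impostor scores: 0.0 ... 0.9
pos = [0.85, 0.95, 0.5, 0.81]         # 4 genuine scores
print(tar_at_far(pos, neg, far=0.1))  # (0.75, 0.8)
```

Sweeping `far` over a grid of values traces out the ROC curves of Figure 3, and evaluating it at 1%, 0.1% and 0.01% gives entries of the kind reported in Table 2.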

In Figure 3, we report the Receiver Operating Characteristic (ROC) curves of the different models in both scenarios, and in Table 2 we report TARs at 1%, 0.1% and 0.01% FAR. From these results we make the following observations. First, not surprisingly, performance in the daily-daily scenario is clearly better than in the ID-daily scenario for all models: a model that works well in the daily-daily scenario may not be adequate for the ID-daily scenario, as shown by the theoretical and experimental analysis in Section 3.3. Second, the models trained with ID-Softmax (Model ID-Softmax A and Model ID-Softmax B) have a large advantage in the ID-daily scenario. There is a large performance gap between the baseline model (Model NormFace) and the ID-Softmax models; for example, at 1% FAR the ID-daily TAR rises from 25.18% to 75.53% and 79.17%. Model CosFace also outperforms Model NormFace, which we attribute to the cosine margin improving the discriminative power of the deep model. Surprisingly, models trained with ID-Softmax are also better than the others in the daily-daily scenario. Third, Model ID-Softmax B has a small advantage over Model ID-Softmax A at most operating points (the exception is the ID-daily TAR at 0.01% FAR, 43.54% versus 44.32%). The reason for the better performance may be that Model ID-Softmax B updates all agents synchronously during training. It is worth noting that the performance of Model ID-Softmax A and Model ID-Softmax B is still quite comparable, and Model ID-Softmax A trains much faster (45 hours, the same as Model CosFace, versus 120 hours for Model ID-Softmax B). From these experiments, we conclude that the ID-Softmax loss is more competitive, especially in the ID-daily scenario.

5 CONCLUSIONS

In this paper, we propose a novel approach named ID-Softmax that guides deep CNNs to learn highly discriminative features in the FRBID scenario, which boosts face recognition performance. We first revisit recent progress in face recognition losses, then give a theoretical and experimental analysis of why Softmax-like losses perform poorly on ID-daily face recognition. To promote ID-daily face recognition, we collect a large dataset, ID74K, which includes 74,187 identities with corresponding ID photos and daily photos. Our experiments verify the effectiveness of the proposed ID-Softmax loss. In the future, we intend to further analyze the impact of different training strategies and to study why our algorithm performs better than CosFace in the daily-daily scenario.

REFERENCES

Chen, T., Li, M., Li, Y., Lin, M., Wang, N., Wang, M., Xiao, T., Xu, B., Zhang, C., and Zhang, Z. (2015). MXNet: A flexible and efficient machine learning library for heterogeneous distributed systems. arXiv preprint arXiv:1512.01274.

Chen, Y., Chen, Y., Wang, X., and Tang, X. (2014). Deep learning face representation by joint identification-verification. In International Conference on Neural Information Processing Systems, pages 1988–1996.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 770–778.

Hong, S., Im, W., Ryu, J., and Yang, H. S. (2017). SSPP-DAN: Deep domain adaptation network for face recognition with single sample per person. In 2017 IEEE International Conference on Image Processing (ICIP), pages 825–829. IEEE.

Huang, G. B., Mattar, M., Berg, T., and Learned-Miller, E. (2008). Labeled faces in the wild: A database for studying face recognition in unconstrained environments. In Workshop on Faces in 'Real-Life' Images: Detection, Alignment, and Recognition.

Kemelmacher-Shlizerman, I., Seitz, S. M., Miller, D., and Brossard, E. (2016). The MegaFace benchmark: 1 million faces for recognition at scale. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4873–4882.

Liu, W., Wen, Y., Yu, Z., Li, M., Raj, B., and Song, L. (2017). SphereFace: Deep hypersphere embedding for face recognition. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), volume 1, page 1.

Liu, W., Wen, Y., Yu, Z., and Yang, M. (2016). Large-margin softmax loss for convolutional neural networks. In ICML, pages 507–516.

Lu, C. and Tang, X. (2014). Surpassing human-level face verification performance on LFW with GaussianFace. Computer Science.

Schroff, F., Kalenichenko, D., and Philbin, J. (2015). FaceNet: A unified embedding for face recognition and clustering. pages 815–823.

Simonyan, K. and Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

Sun, Y., Wang, X., and Tang, X. (2014). Deep learning face representation from predicting 10,000 classes. In IEEE Conference on Computer Vision and Pattern Recognition, pages 1891–1898.

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., and Rabinovich, A. (2015). Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1–9.

Taigman, Y., Yang, M., Ranzato, M., and Wolf, L. (2014). DeepFace: Closing the gap to human-level performance in face verification. In IEEE Conference on Computer Vision and Pattern Recognition, pages 1701–1708.

Wang, F., Xiang, X., Cheng, J., and Yuille, A. L. (2017). NormFace: L2 hypersphere embedding for face verification. In Proceedings of the 2017 ACM on Multimedia Conference, pages 1041–1049. ACM.

Wang, H., Wang, Y., Zhou, Z., Ji, X., Li, Z., Gong, D., Zhou, J., and Liu, W. (2018). CosFace: Large margin cosine loss for deep face recognition. arXiv preprint arXiv:1801.09414.

Wen, Y., Zhang, K., Li, Z., and Qiao, Y. (2016). A Discriminative Feature Learning Approach for Deep Face Recognition. Springer International Publishing.

Xie, X., Cao, Z., Xiao, Y., Zhu, M., and Lu, H. (2015). Blurred image recognition using domain adaptation. In IEEE International Conference on Image Processing.

Yi, D., Lei, Z., Liao, S., and Li, S. Z. (2014). Learning face representation from scratch. arXiv preprint arXiv:1411.7923.

Zhang, K., Zhang, Z., Li, Z., and Qiao, Y. (2016). Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Processing Letters, 23(10):1499–1503.

Zhang, S., He, R., Sun, Z., and Tan, T. (2017). DeMeshNet: Blind face inpainting for deep MeshFace verification. IEEE Transactions on Information Forensics & Security, 13(3):637–647.

Zhou, E., Cao, Z., and Yin, Q. (2015). Naive-deep face recognition: Touching the limit of LFW benchmark or not? Computer Science.
