Social Touch in Human-agent Interactions in an Immersive Virtual Environment

Fabien Boucaud¹, Quentin Tafiani¹, Catherine Pelachaud¹ and Indira Thouvenin²

¹ Institut des Systèmes Intelligents et de Robotique, CNRS UMR 7222, Sorbonne Universités, UPMC Campus Jussieu, 75005 Paris, France

² Laboratoire Heudiasyc, CNRS UMR 7253, Sorbonne Universités, Université de Technologie de Compiègne, 60200 Compiègne, France

Keywords: Virtual Reality, Immersive Environment, Human-agent Interaction, Immersive Room, Embodied Conversational Agents, Social Touch, Empathic Communication.

Abstract: Works on artificial social agents, and especially embodied conversational agents, have endowed them with social-emotional capabilities. These agents are being given the ability to use more and more modalities to express their thoughts, such as speech, gestures and facial expressions. However, the sense of touch, although particularly interesting for social and emotional communication, is still a modality widely missing from interactions between humans and agents. We believe that integrating touch into those modalities of interaction between humans and agents would help enhance their channels of empathic communication. In order to test this idea, we present in this paper a system allowing tactile communication through haptic feedback on the hand and the arm of a human user. We then present a preliminary evaluation of the credibility of social touch in human-agent interaction in an immersive environment. The first results are promising and bring new leads to improve the way humans can interact through touch with virtual social agents.

1 INTRODUCTION

Anthropology has shown how touch has always been

our main modality of interaction with tools (Leroi-

Gourhan, 1964). This is still true today in the digital

era, as we can see with the addition of more and

more touch-based properties to our smartphones or

computers (Cranny-Francis, 2011).

Artificial social agents such as the embodied

conversational agents can express thoughts and

emotions as well as interpret those of their

interlocutors through more and more interaction

modalities. Touch, however, is a sense still widely

missing from social interactions between human and

agents. For many cultural as well as technical reasons, research on the social functions of touch only started relatively recently (Cranny-Francis, 2011). Those recent studies show that touch has many communicative functions, in the same way as other types of non-verbal communication such as gestures or facial expressions (Hertenstein, Verkamp, Kerestes and Holmes, 2006). Touch is considered especially useful for empathic communication, i.e. the communication of emotions.

With this paper, we intend to show a way to

integrate social touch into human-agent interaction

modalities in the context of virtual reality. It is our

belief that this would enhance empathic

communication channels between human and

agents. We therefore present a system and a

preliminary study allowing us to explore the idea

that an exchange of social touches between human

and agent, with the support of appropriate facial

expressions and gestures, enables a credible

empathic communication.

2 DEFINING SOCIAL TOUCH AND SOCIAL TOUCH TECHNOLOGIES

2.1 What Is Social Touch?

Social touch designates all the uses of touch with social intentions. A salutation handshake, a tap of encouragement on the back, or any type of non-accidental interpersonal touch can be considered as an example of social touch.

Boucaud, F., Tafiani, Q., Pelachaud, C. and Thouvenin, I.
Social Touch in Human-agent Interactions in an Immersive Virtual Environment.
DOI: 10.5220/0007397001290136
In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2019), pages 129-136
ISBN: 978-989-758-354-4
Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Works of definition and classification of social touch (Hertenstein, Keltner, App, Bulleit and Jaskolka, 2006; Hertenstein, Holmes, McCullough and Keltner, 2009; Bianchi-Berthouze and Tajadura-Jiménez, 2014) are an essential source of

information to elaborate the needs of technological

systems able to produce credible social touch. These

studies show how the many different types of touch

can be defined through their physical properties and

how each type of touch can be more particularly apt

to express certain specific emotions. Those studies

also show how touch is in itself a very multi-modal

sense with characteristics as diverse as pressure,

impact velocity, speed of the touch movement on the

skin (in the event of a caress for example), total

duration of the gesture, etc. But even then, Hertenstein

et al. also show how these are not sufficient to

correctly interpret the communicative intention of a

touch. Touch is indeed based on the principles of

equipotentiality and equifinality. That is to say that

one unique type of touch, such as hitting someone,

can be used to express anger as well as to express

encouragement if it is used with a sport teammate

for example: this is the concept of equipotentiality.

On the other hand, two different types of touch, such

as pushing and grasping someone, can still be used

interchangeably to express the same emotion of anger:

this is the concept of equifinality. This means that

other factors than the sole physical properties of a

touch must be taken into account when socially

interpreting any touch event. Among those other

factors we can name: the situation in which the

touch takes place (competitive setting, salutations,

etc.), the relationship between the person touching

and the one being touched, their respective cultures,

the part of the body that is touched, etc.
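The two principles above can be illustrated with a small lookup table. This is only a sketch: the touch types and emotions come from the examples in the text, while the context labels ("conflict", "sport teammate") and the function names are hypothetical and chosen for illustration.

```python
# Hypothetical interpretation table: (touch type, context) -> emotion.
# The context labels are illustrative assumptions, not a validated taxonomy.
INTERPRETATION = {
    ("hit", "conflict"): "anger",
    ("hit", "sport teammate"): "encouragement",
    ("push", "conflict"): "anger",
    ("grasp", "conflict"): "anger",
}


def emotions_for(touch):
    """Equipotentiality: one touch type can express several emotions."""
    return {emotion for (t, _), emotion in INTERPRETATION.items() if t == touch}


def touches_for(emotion):
    """Equifinality: several touch types can express the same emotion."""
    return {t for (t, _), e in INTERPRETATION.items() if e == emotion}
```

Because the key includes the context, the same physical touch resolves to different emotions, which is why physical properties alone are not enough to interpret a touch.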

2.2 Related Works on Social Touch Technologies

From the technical point of view, haptic technologies (technologies producing kinesthetic or tactile sensations) are very diverse, covering vibration technologies, force-feedback devices, thermal technologies and many more (Teyssier, Bailly, Lecolinet and Pelachaud, 2017). Pseudo-haptics, as defined by Gómez Jáuregui, Argelaguet Sanz, Olivier, Marchal, Multon and Lécuyer (2014), make it possible to give the illusion of a credible force feedback by using appropriate visual cues to reinforce a simpler existing haptic feedback. However, there is still no technology able to completely reproduce real touch sensations on every level. When it comes to studies on social touch, devices such as the TaSST, a sleeve equipped with vibrators made by Huisman, Darriba Frederiks, van Dijk, Heylen and Krose (2013), are often used.

In his works, Gijs Huisman (2017) differentiates

social touch mediation technologies, which focus on

transmitting touch from one human to another

through a technological interface, from social touch

simulation technologies, which generate a tactile

behaviour on their own, without human input. While

social touch simulation often uses social touch

mediation technologies to produce its haptic

feedback, it also needs the “intelligence” to adapt its

behaviour and decide what kind of tactile behaviour

it should adopt, based on a decision model.

As to whether mediation and simulation of social

touch have the same properties as natural social

touch, Van Erp and Toet’s studies (2013) prove

three principles. Emotions can be transmitted

through touch only, without any other cues.

Interpersonal communication of emotion or social

intention can still be achieved through a

technologically mediated touch. Finally, systems are

also capable of using technologically mediated touch

to successfully transmit emotions, just like humans.

Although with nuanced results, it was shown

how simulated social touch enhanced empathic

communication when using augmented reality to

materialize agents in the social context of a

cooperative game (Huisman, Kolkmeier and Heylen,

2014).

Works by Yohanan (2012) on the “Haptic

Creature”, which has an animal-like appearance,

show that humans are expecting the agent to react in

a mimetic way when touched. However, there are

still very few works that have studied the agent’s

reaction to being touched when it comes to

humanoid agents.

Where most of the works we discussed here were

focused on either the agent touching the human or

the human touching the agent, our work focuses on

using a virtual humanoid embodied conversational

agent that will be able to both touch and be touched

by the user. We will measure the credibility of the

interaction throughout the whole interactive loop.

HUCAPP 2019 - 3rd International Conference on Human Computer Interaction Theory and Applications


Figure 1: A touch-based human-agent interaction inside

the immersive room TRANSLIFE.

3 TO TOUCH AND BE TOUCHED IN AN IMMERSIVE VIRTUAL ENVIRONMENT

3.1 How to Touch a Virtual Agent in an Immersive Environment

To achieve this a priori counter-intuitive idea of touching a virtual agent and having it be aware of the touch, we took inspiration from Nguyen, Wachsmuth and Kopp’s work (2007) on the tactile perception of a virtual agent inside an immersive room. The immersive room produces a virtual reality environment by projecting the 3D environment onto each of the three walls and the floor it is made up of. This, coupled with motion capture cameras and the use of stereoscopic 3D glasses, allows the user to experience the environment at 1:1 scale (Cruz-Neira, Sandin and DeFanti, 1993). This very specific setup allows the user to experience a virtual environment while still being able to see and perceive his own body (unlike with most head-mounted displays for virtual reality). The user is able to see himself touch the agent with his own hand and be touched on his own arm.

To make the agent able to perceive touch, Nguyen et al.’s idea is to cover the 3D model of the virtual agent with a virtual “skin” made up of “tactile cells”, which are virtual receptors placed on the surface of the agent’s body and taking the form of geometries varying in size and shape. When any element of the real world tracked in the immersive room is detected as colliding with any of the cells, through comparison of coordinates, we consider that there is touch and we can record its different properties, such as its location on the body. This makes the agent aware of when it is being touched.

Basing ourselves on these ideas we gave

colliders to the 3D model of our virtual agent to

reproduce the principle of Nguyen et al.’s skin

receptors. These colliders can be seen in Figure 2.

Upon collision with the virtual representation of the

hand, we record the cell that was touched but also

properties such as the duration of the touch, the

initial velocity of the hand when the touch occurred,

etc. This is done every time a tactile cell is activated

and we can build a sequence of touches that will

represent the whole touch gesture.
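The recording of a touch sequence described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the system: the cells are approximated as spheres, the hand is reduced to a single tracked point, and the names `TactileCell`, `TouchEvent` and `TouchRecorder` are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class TactileCell:
    """One collider on the agent's body (assumed spherical here)."""
    name: str
    center: Point
    radius: float

    def contains(self, point: Point) -> bool:
        # Collision test by comparison of coordinates.
        return math.dist(self.center, point) <= self.radius


@dataclass
class TouchEvent:
    cell: str
    start_time: float
    initial_speed: float  # hand speed when the cell was entered
    duration: float = 0.0


@dataclass
class TouchRecorder:
    cells: List[TactileCell]
    sequence: List[TouchEvent] = field(default_factory=list)
    _active: Dict[str, TouchEvent] = field(default_factory=dict)
    _last_sample: Optional[Tuple[float, Point]] = None

    def update(self, t: float, hand: Point) -> None:
        """Process one tracking sample (time in seconds, hand position)."""
        speed = 0.0
        if self._last_sample is not None:
            last_t, last_pos = self._last_sample
            if t > last_t:
                speed = math.dist(hand, last_pos) / (t - last_t)
        self._last_sample = (t, hand)
        for cell in self.cells:
            event = self._active.get(cell.name)
            if cell.contains(hand):
                if event is None:
                    # A cell is newly activated: start a new touch event.
                    event = TouchEvent(cell.name, t, speed)
                    self._active[cell.name] = event
                    self.sequence.append(event)
                event.duration = t - event.start_time
            elif event is not None:
                # The hand left the cell: close the event.
                event.duration = t - event.start_time
                del self._active[cell.name]
```

Calling `update` once per tracking frame fills `sequence` with one event per activated cell, in order of activation, which corresponds to the sequence of touches representing the whole gesture.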

Figure 2: The virtual environment with the agent and its

tactile cells (in green).

Without physical embodiment though, our hand

will still go through the visual representation of the

agent without resistance. It is thus very difficult to

measure physical properties such as pressure or to

perform types of touch such as holding the arm.

3.2 How to Be Touched by a Virtual Agent in an Immersive Environment

To make touching and being touched by our virtual agent a credible experience, we cannot be satisfied with only seeing our hand collide with and go through the body of the agent. Our interactions with reality are based on our habits of perceiving the world through our senses. When we see our hand coming into contact with something, we always expect to feel touch. If that sensation were missing when touching the agent, there would be perceptive dissonance, which would produce discomfort and a loss of credibility of the interaction. In order to give the user a substitute sensory feedback able to compensate perceptually for the immaterial nature of the virtual body, we turned to the design and creation of a sleeve and a glove able to produce haptic feedback. Those two devices are required to simulate the touch of the agent on the human (sleeve) and to offer a suitable perceptive substitution when the human touches the agent (glove).

4 DESIGNING HAPTIC INTERFACES

In order to implement haptic feedback for the user, we designed an interface composed of two devices: a glove equipped with four vibrators (similar in size and power to the ones found in a smartphone), one on each corner of the palm of the hand, and a sleeve using the same vibrators arranged in a matrix of two columns and four rows. The arm and the hand are privileged places for social touch, where it is generally well received even between strangers (Suvilehto, Glerean, Dunbar, Hari and Nummenmaa, 2015). We chose vibrators because they are lightweight, which makes the devices easy to wear on the body, and because of the rich scientific literature on how vibrations can be used to produce interesting haptic sensations (Huisman et al., 2013). Despite their inherent limitations when it comes to reproducing human touch sensations, we used the principles of the tactile brush algorithm (Israr and Poupyrev, 2011) on the sleeve and achieved the simulation of four different types of touch by manipulating the duration and intensity levels of the vibrations. Those four types of touch were based on the categorization of types of touch by Hertenstein et al. (2006, 2009) and were chosen for their ability to transmit different emotions. Those types of touch are hitting, tapping, stroking and what we will call a neutral touch. We defined the physical properties of those touches as follows:

- A hit is a short touch (400 ms) without any

movement and with a high intensity.

- A tap is a very short touch (200 ms) without any

movement and with a moderate intensity.

- A stroke is a longer touch (4*200 ms) with a

movement on the skin and with a lower intensity.

- A neutral touch is a longer touch (1000 ms) without

any movement and with a lower intensity.
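The four definitions above can be written down as a small pattern table for the two-column, four-row sleeve. The durations come from the list; the numeric intensity levels, the row used for static touches and the function names are assumptions made for this sketch. The stroke is rendered here as a plain row-by-row sweep, which is a simplification and not the tactile brush algorithm itself.

```python
from dataclasses import dataclass

ROWS, COLS = 4, 2  # sleeve matrix: four rows, two columns of vibrators


@dataclass(frozen=True)
class TouchPattern:
    name: str
    step_ms: int      # duration of one vibration step
    steps: int        # number of successive steps
    intensity: float  # assumed drive level between 0 and 1
    moving: bool      # True if the vibration travels along the arm


# Durations follow the text; intensity values are illustrative assumptions.
PATTERNS = {
    "hit": TouchPattern("hit", 400, 1, 1.0, False),
    "tap": TouchPattern("tap", 200, 1, 0.6, False),
    "stroke": TouchPattern("stroke", 200, 4, 0.3, True),
    "neutral": TouchPattern("neutral", 1000, 1, 0.3, False),
}


def total_duration_ms(pattern):
    return pattern.step_ms * pattern.steps


def schedule(pattern, static_row=1):
    """Return (start_ms, duration_ms, row, col, intensity) for each actuator."""
    frames = []
    for step in range(pattern.steps):
        # A moving pattern advances one row per step; a static one stays put.
        row = step % ROWS if pattern.moving else static_row
        for col in range(COLS):
            frames.append(
                (step * pattern.step_ms, pattern.step_ms, row, col, pattern.intensity)
            )
    return frames
```

With these values, `schedule(PATTERNS["stroke"])` activates rows 0 to 3 in turn for 200 ms each, for a total of 800 ms, while the other three patterns drive a single row once.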

As natural human touch has many more physical

properties than the few we can take into account

with vibrations, some types of touch can’t be

reproduced with those devices, such as any type of

touch using pressure. Nevertheless, we believe that

those four types of touch can be simulated in a

satisfactory way by vibrations and suffice to produce

an understandable haptic feedback for the user.

To prevent another perceptive dissonance, the

gesture visually performed by the agent also had to be correctly synchronized with the vibrations. The

prototype of the sleeve built for our system can be

seen in Figure 3 and makes use of the Arduino

technology.

We will now present the preliminary study

conducted to make a first evaluation of the system.

Figure 3: The sleeve prototype.

5 PRELIMINARY STUDY

With this first experiment, we aim to produce a

preliminary study on the credibility of simulated

social-touch based interactions between human and

agent in our immersive room TRANSLIFE. This

will also serve as an evaluation of the system we

built and presented in the previous sections.

5.1 Experimental Protocol

This preliminary experiment is split into two distinct phases in which the participant will have to touch and be touched by a virtual agent. A between-subjects design was chosen in order to prevent fatigue (the experiment being already quite long) as well as any bias based on the participant learning from the phases. Participants are thus divided into three groups depending on the emotion they have to transmit and the emotion being transmitted to them by the agent.

Before the beginning of the experiment, in order

to reduce the novelty effect, the participant is put in

a test environment which allows him to familiarize

himself with the virtual environment, the haptic

feedback and a different virtual agent than the one

used in the rest of the experiment.

The actual environment (see Figure 2) is then

launched and the participant is asked to get the

attention of the agent, who is first turned away from

the participant, by placing himself on the white

marking and touching the agent. The agent then


turns around and proceeds to introduce itself as

Camille and explains the experiment.

Phase 1. The participant will first express an

emotion by touching the agent with the vibratory

glove, and the agent will respond to the touch and the

emotion transmitted with an adequate facial

expression. Practically, the participant touches the

agent four times and is left free to use any touch type

he considers appropriate, while being warned that

only his hand is recognized by the system.

During this phase, emotional scenarios will first

be read to the participant in order to indicate the

emotion that must be transmitted and its intensity to

the participant. There are two sessions of four

touches in which the same emotion is being

transmitted but each session is preceded by a

different scenario indicating a different intensity of

the emotion. Our goal in using two distinct

emotional intensities is to observe and determine if

the participant uses different kinds of touch. Three

emotions were chosen to be transmitted:

sympathy (C1), anger (C2) and sadness (C3). Those

emotions benefit from being very different from

each other while being a priori easily understandable

for the participants. Emotional scenarios are based

on works by Bänziger, Pirker and Scherer (2006)

and by Scherer, Banse, Wallbott and Goldbeck

(1991). As an example, the following low emotional

intensity scenario was used to indicate sympathy:

“You meet a friend of yours, Camille, that you

hadn’t seen for some time. You express what you

are feeling to her.” High emotional intensity

scenarios involve more emphatic adjectives and

expressions.

Phase 2. Still inside the room, the virtual agent will then touch the participant where the vibratory sleeve is worn, while performing facial expressions and gestures appropriate to the emotion being expressed. The emotion being expressed is different from the one expressed in the previous phase to prevent any kind of learning bias (future work should, however, control for order bias).

There are also two sessions of four touches in this phase, and the same emotion is expressed in both sessions, but this time it is the type of touch that changes between the sessions. The

agent uses stroking and tapping to express sympathy

while tapping and hitting are used for anger, and

stroking and neutral touch are used for sadness.
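The protocol can be summarized as a small configuration. The phase 1 emotions and the touch types per emotion are taken from the text; the phase 2 emotion received by each group is inferred from the group labels used in the Results section, and the order of the two sessions within phase 2 is an assumption. All identifiers are hypothetical.

```python
TOUCHES_PER_SESSION = 4

# Emotion each group has to transmit to the agent in phase 1.
PHASE1_EMOTION = {"C1": "sympathy", "C2": "anger", "C3": "sadness"}

# Emotion the agent transmits to each group in phase 2
# (inferred from the Results section, not stated in the protocol itself).
PHASE2_EMOTION = {"C1": "anger", "C2": "sadness", "C3": "sympathy"}

# Touch types used by the agent, one per session (session order assumed).
AGENT_TOUCHES = {
    "sympathy": ("stroke", "tap"),
    "anger": ("tap", "hit"),
    "sadness": ("stroke", "neutral"),
}


def phase2_plan(group):
    """Return one (emotion, touch type, number of touches) tuple per session."""
    emotion = PHASE2_EMOTION[group]
    return [(emotion, touch, TOUCHES_PER_SESSION) for touch in AGENT_TOUCHES[emotion]]
```

Under this reading, no group receives in phase 2 the emotion it transmitted in phase 1, which matches the stated intention of avoiding a learning bias.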

As said before, physical properties of touch are

not sufficient for the correct interpretation of social

touch. We chose to add other non-verbal cues, facial

expressions and gestures corresponding to the

emotions being transmitted, so that we can evaluate

if this setting is already sufficient for the

interpretation of touch.

In-between each session of the experiment and at

the end, the participant is asked to answer some

questions from the questionnaire. At the very end,

after having answered the questionnaire, the

participant is debriefed about the experiment.

5.2 Setup and Questionnaire

In this setup, we are using a Wizard-of-Oz type of

procedure where the reactions of the agent are

prepared in advance and activated by the person

conducting the experiment.

The agent is monitored and animated through the

use of the GRETA software platform (De Sevin,

Niewiadomski, Bevacqua, Pez, Mancini, Pelachaud,

2010), which allows us to manage the social

behaviour of such agents both in terms of verbal and

non-verbal cues.

As for the questionnaire, it is inspired by works

by Demeure, Niewiadomski and Pelachaud (2011).

In the first phase, participants are asked to describe

the properties of the types of touch they chose to

use, so that we can compare the answers with the information recorded by the system as well as with the

results from the literature. The participants are also

asked to evaluate the degree to which they

considered the reaction of the agent to their touch as

credible and why. We understand credibility here as

the degree to which the participant feels the agent

behaved in an appropriately human-like way.

In the second phase, participants are asked to

describe the tactile sensation they felt when the

agent touched them and to name it. Finally,

participants are asked to determine to what degree

they felt like the agent was expressing sadness,

anger or sympathy, or any other kind of emotion

they believed they had felt, and to evaluate to which

degree they considered the behaviour of the agent as

credible and why.

5.3 Participants

The experiment, which lasted one hour on average,

was conducted with twelve participants, among

which there were eight women and four men. Nine

of those participants had no prior experience of

virtual reality. Ten considered themselves as having

a good touch receptivity (they thought they received

touch well) and two didn’t know. All the participants

were between 18 and 39 years old and were of

Western culture. The mean age was 23.25 and the standard deviation was approximately 5.79.


6 RESULTS

Subjective data was gathered with 5-point Likert scales. Since we had very few participants (twelve split into three groups of four), future experiments with more participants and improved procedures will be needed to confirm or refute the following observations.

6.1 Touching and Being Touched

All of the participants who had to transmit sadness through touch reported great difficulty deciding how to touch the agent for this emotion. Unexpectedly, and even though they had been clearly informed that only the glove was tracked and taken into account for their touch on the agent, all the participants used, at least once, a type of touch that we considered unsuited to virtual reality. In the case of sympathy and sadness, most of the participants tried to hug the virtual agent.

Seven out of the eight participants concerned

recognized correctly, by name, the vibration pattern

that corresponded to a stroking, and more than half

of the participants concerned could identify the

patterns that simulated both the hit and the tap.

However, no participant identified the “neutral

touch”, which could be explained by the fact that

“neutral touch” might not be a natural term.
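The phrase “participants concerned” follows from the protocol: each touch pattern was only felt by the groups whose phase 2 emotion uses it. The short sketch below makes the count explicit, assuming groups of four and one emotion per group as described earlier; the identifiers are hypothetical.

```python
GROUP_SIZE = 4  # twelve participants split into three groups of four

# Touch types used by the agent for each emotion (one emotion per group).
AGENT_TOUCHES = {
    "sympathy": ("stroke", "tap"),
    "anger": ("tap", "hit"),
    "sadness": ("stroke", "neutral"),
}


def exposed(touch):
    """Number of participants who felt a given vibration pattern."""
    groups = sum(touch in touches for touches in AGENT_TOUCHES.values())
    return GROUP_SIZE * groups


# Seven of the participants exposed to the stroke pattern named it correctly.
stroke_recognition_rate = 7 / exposed("stroke")
```

Stroking and tapping were each felt by two groups (eight participants), whereas hitting and the neutral touch were each felt by a single group of four, so the rates for the latter two rest on even fewer observations.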

6.2 Overall Credibility of the Touch Interaction and the Agent’s Behaviour

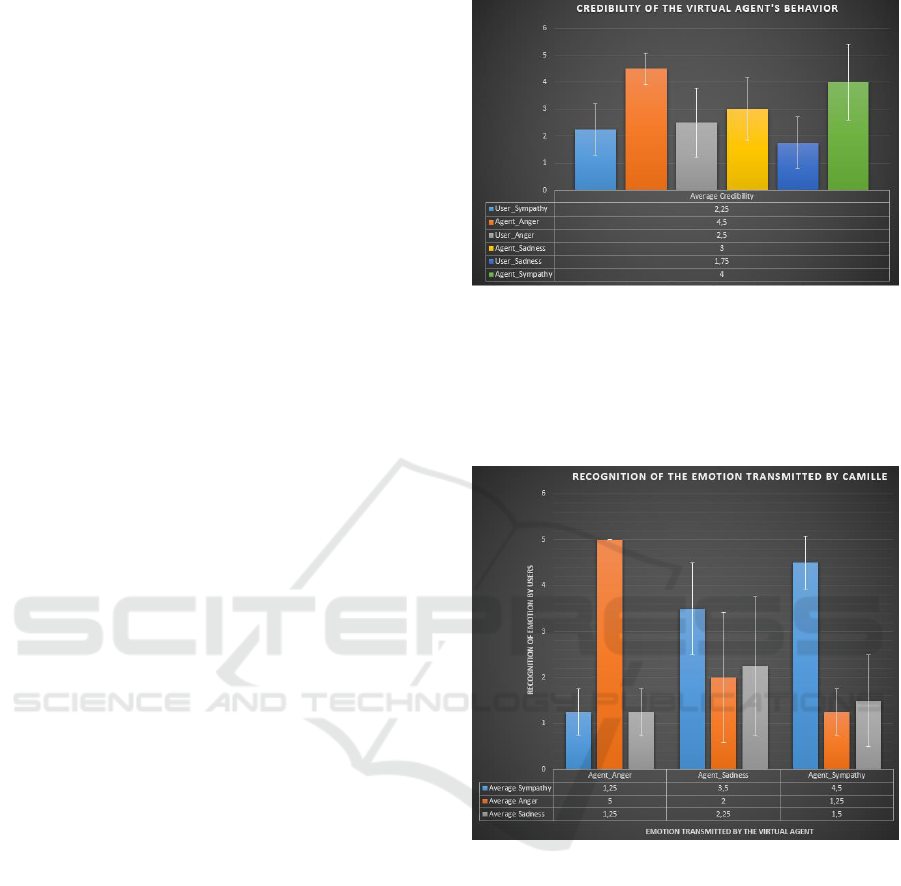

The results shown in Figure 4 (User stands for when

the participant touched the agent and Agent stands

for when the agent touched the participant) indicate

that the agent appeared as more credible when it

touched the participants to express anger (red

column) and sympathy (green column), with the

participants rating its credibility around or above 4

on average. The agent was however much less

credible when it reacted to being touched or when it

tried to express sadness. In their answers to the

questionnaire, participants have said that facial

reactions were hardly noticeable when they touched

the agent, which can partly explain the low

credibility of the agent when it was being touched.

The results shown in Figure 5 indicate that the emotion transmitted by the agent was correctly recognized as anger in group C1 and as sympathy in group C3 by almost all the participants, but that the group that was confronted with sadness had much more trouble identifying the emotion correctly. We can add that half of the participants from group C2 said that the agent was trying to comfort them or to be compassionate instead of expressing sadness.

Figure 4: Credibility of the virtual agent’s behaviour according to participants.

Figure 5: Recognition rate of the emotion transmitted by the agent.

6.3 Discussion

Although the small number of participants clearly limits their significance, we believe

the answers support the idea that social touch is a

viable modality to enhance empathic communication

channels between human and agent. It notably

shows how agents using touch to express emotions

can be considered as credible by humans. Results are

less encouraging when it comes to the credibility of

the reaction of the agent to touch. This means that

the agent was not perceived as having noticed the

touch performed on itself by the participants, or that

its reaction was not felt to be human-like. However, we


believe that this is something that can be improved

by enhancing the quality of the other reaction cues

of the agent (speech, gestures and especially facial

expressions) and by the implementation of a real

computational model of emotion that would allow

the agent to have a full and autonomous interaction.

Another interesting result is that sadness was

poorly recognized and felt hard to transmit through

touch. When asked about it, participants said that

when they feel sad they are more expecting to be

touched by someone else (in order to be comforted

or shown empathy) than they are prone to go touch

someone. It thus appears that an emotion such as

being sorry-for someone would be more appropriate

in a social touch context than sadness in itself.

It is also noteworthy that even though results

were very encouraging about the recognition rate of

the types of touch simulated with the sleeve, all the

participants have expressed that they didn’t feel like

vibrations were an appropriate feedback for

imitating the natural touch sensations.

Despite this, participants have unexpectedly not

hesitated to use types of touch that we had thought

inadequate in the context of virtual reality, such as

hugging or pushing, all of those being types of touch

requiring some kind of physical resistance from the

object being touched. While participants absolutely

realized that only their hand was detected and

received haptic feedback, they still tried to use the

types of touch that seemed the most natural to them

to express the emotion they had to express.

When asked what kind of perceptive substitution

they would have preferred, participants described

force-feedback devices. Such devices could indeed

give a more realistic sensation of touching

something with a physical presence.

Among the other possibilities that can be

explored, one of the participants remarked that the

vibratory sensation might have seemed less

surprising and more credible if there had been some

sort of mediation of the touch and the vibratory

feedback through some kind of physical tool, such as

an HTC Vive controller or any other control device

of this kind, instead of the glove. It seemed to the

participant that such a proxy would have made the

vibrations feel less dissonant, since it would have

used a tool that doesn’t look like it aims at perfectly

imitating the sensation of natural touch.

This idea seemed particularly interesting to us

considering that social touch is overall a rarely used

social interaction modality in our daily lives (at least

outside ritualistic usages and more intimate

relationships), but is, on the other hand, our main

modality of interaction with technical objects and

tools. André Leroi-Gourhan (1964) has shown how

by becoming bipeds and thus freeing their hands, our

main touching organs, the first humans have been

able to develop themselves technically and

cognitively through the handling of external tools.

Using some kind of proxy to mediate our touch in a

virtual environment could therefore be a relevant

and interesting way to produce a credible social

touch sensation even with a sensory feedback very

different from the actual sensation of touch. In the

context of virtual reality, such a mediation coupled

with pseudo-haptics could greatly enhance the

quality of the perceptive substitution.

The question remains as to what kind of

mediation tool could be relevant in the context of

virtual reality. How using such a proxy would

influence the behavior of the human towards the

agent also needs to be studied with more attention,

as it could potentially put distance between them.

7 CONCLUSIONS

To sum things up, our goal was to estimate to what extent credible social interactions based on touch

can be implemented between human and embodied

conversational agent in a virtual immersive

environment. With the system and the preliminary

experiment presented in this paper, we hope to have

shown that a credible empathic communication

between human and agent can indeed be performed

with the use of simulated social touch based on

vibrations. In particular, we have shown how

patterns of vibrations can be recognized as specific

types of touch and how emotions transmitted

through a combination of touch and facial

expressions can also be identified by humans in an

immersive virtual environment. Leads on how to

improve both the system proposed here and the

evaluation protocol have been identified and should make it possible to pursue new studies on touch-based human-agent social interactions in immersive virtual environments.

However, our agent does not yet meet all the requirements mentioned in the literature (Huisman, Bruijnes, Kolkmeier, Jung, Darriba Frederiks et al., 2014) that would make it qualify as an autonomous social agent. Although it has the ability to perceive and to perform touch, it still lacks the intelligence to interpret the touches and to adapt its behavior accordingly. With an adequate computational model of emotion, a sustained exchange of social touches between human and agent could happen.


ACKNOWLEDGEMENTS

This work was funded by the ANR in the context of the Social Touch project (ANR-17-CE33-0006). We also thank the FEDER and the Hauts-de-France region for their funding of the immersive room TRANSLIFE at UMR CNRS Heudiasyc Lab.

REFERENCES

T. Bänziger, H. Pirker, and K. Scherer. 2006. “GEMEP-

Geneva Multimodal Emotion Portrayals: A corpus for

the study of multimodal emotional expressions”. In

Proceedings of LREC.

N. Bianchi-Berthouze, and A. Tajadura-Jiménez. 2014.

“It’s not just what we touch but also how we touch it”.

In Paper presented at the TouchME workshop, CHI

Conference on Human Factors in Computing Systems.

Anne Cranny-Francis. 2011 Semefulness: a social

semiotics of touch, Social Semiotics, 21:4, 463-481,

DOI: 10.1080/10350330.2011.591993

Carolina Cruz-Neira, Daniel J. Sandin and Thomas A.

DeFanti. "Surround-Screen Projection-based Virtual

Reality: The Design and Implementation of the

CAVE", SIGGRAPH'93: Proceedings of the 20th

Annual Conference on Computer Graphics and

Interactive Techniques, pp. 135–142,

DOI:10.1145/166117.166134

V. Demeure, R. Niewiadomski and C. Pelachaud. 2011.

“How is Believability of Virtual Agent Related to

Warmth, Competence, Personification and

Embodiment?”. In Presence (pp. 431-448).

Jan B. F. van Erp, and Alexander Toet. 2013. “How to Touch Humans: Guidelines for Social Agents and Robots that can Touch”. In Humaine Association Conference on Affective Computing and Intelligent Interaction.

D.A. Gómez Jáuregui, F. Argelaguet Sanz, A.H. Olivier,

M. Marchal, F. Multon, A. Lécuyer, 2014. "Toward

“Pseudo-Haptic Avatars”: Modifying the Visual

Animation of Self-Avatar Can Simulate the Perception

of Weight Lifting", IEEE Transactions on

Visualization and Computer Graphics (Proceedings of

IEEE VR 2014), pp.654-661.

M. J. Hertenstein, J. M. Verkamp, A. M. Kerestes, and R.

M. Holmes. 2006. “The communicative functions of

touch in humans, nonhuman primates and rats: A

review and synthesis of the empirical research”. In

Genetic, Social, and General Psychology

Monographs.

M. J. Hertenstein, D. Keltner, B. App, B. a. Bulleit, and A.

R. Jaskolka. 2006. “Touch communicates distinct

emotions”. In Emotion.

G. Huisman. 2017. “Social Touch Technology: A Survey

of Haptic Technology for Social Touch”. In IEEE

Transactions on Haptics, 10(3), pp. 391-408.

DOI:10.1109/TOH.2017.2650221

Gijs Huisman, Merijn Bruijnes, Jan Kolkmeier, Merel

Jung, Aduén Darriba Frederiks, et al. 2014.

“Touching Virtual Agents: Embodiment and Mind.”

Yves Rybarczyk; Tiago Cardoso; João Rosas; Luis

M. Camarinha-Matos. 9th International Summer

Workshop on Multimodal Interfaces (eNTERFACE),

Jul 2013, Lisbon, Portugal. Springer, IFIP Advances in

Information and Communication Technology, AICT-

425, pp.114-138, Innovative and Creative

Developments in Multimodal Interaction Systems.

Huisman, Gijs and Darriba Frederiks, Aduen and van

Dijk, Betsy and Heylen, Dirk and Krose, Ben, 2013

“The TaSST: Tactile Sleeve for Social Touch”. IEEE

World Haptics Conference 2013, 14-18 April,

Daejeon, Korea.

G. Huisman, J. Kolkmeier, D. Heylen. 2014. “With Us or

Against Us: Simulated Social Touch by Virtual Agents

in a Cooperative or Competitive Setting”. In

International Conference on Intelligent Virtual

Agents.

Ali Israr and Ivan Poupyrev. 2011. “Tactile Brush:

Drawing on Skin with a Tactile Display”. Disney

Research Pittsburgh.

Leroi-Gourhan, André. 1964. Le geste et la parole. Paris,

A. Michel. Tome I: Technique et langage. 325 p.

N. Nguyen, I. Wachsmuth, and S. Kopp. 2007. “Touch

perception and emotional appraisal for a virtual

agent”. In Proceedings Workshop Emotion and

Computing.

K. R. Scherer, R. Banse, H. G. Wallbott, T. Goldbeck.

1991. “Vocal cues in emotion encoding and

decoding”. In Motivation and Emotion.

E. de Sevin, R. Niewiadomski, E. Bevacqua, AM. Pez, M.

Mancini, C. Pelachaud. 2010. « Greta, une plateforme

d'agent conversationnel expressif et interactif ». In

Technique et Science Informatique.

J. T. Suvilehto, E. Glerean, R. I. M. Dunbar, R. Hari, and L. Nummenmaa. 2015. “Topography of social touching depends on emotional bonds between humans”. In Proceedings of the National Academy of Sciences.

M. Teyssier, G. Bailly, É. Lecolinet, C. Pelachaud. 2017.

« Revue et Perspectives du Toucher Social en IHM ».

In AFIHM. 29ème conférence francophone sur

l’Interaction Homme-Machine.

Yohanan, S., 2012. "The Haptic Creature: Social Human-

Robot Interaction through Affective Touch," Ph.D.

Thesis, University of British Columbia.
