Towards Multi-UAV and Human Interaction Driving System

Exploiting Human Mental State Estimation

Gaganpreet Singh, Raphaëlle N. Roy and Caroline P. Carvalho Chanel

ISAE-SUPAERO, Université de Toulouse, France

Keywords:

Unmanned Aerial Vehicles (UAV), Multi-UAVs and Human Interaction, Manned-Unmanned Teaming

(MUM-T), Mental Workload, Engagement, Physiological Computing.

Abstract:

This paper addresses the growing human-multi-UAV interaction issue. Current active approaches towards

a reliable multi-UAV system are reviewed. This brings us to the conclusion that the multiple Unmanned

Aerial Vehicles (UAVs) control paradigm is segmented into two main scopes: i) autonomous control and

coordination within the group of UAVs, and ii) a human centered approach with helping agents and overt

behavior monitoring. Therefore, to move the human-multi-UAV interaction problem forward,

a new perspective is put forth. In the following sections, a brief understanding of the system is provided,

followed by the current state of multi-UAV research and how taking the human pilot’s physiology into account

could improve the interaction. This idea is developed first by detailing what physiological computing is,

including mental states of interest and their associated physiological markers. Second, the article concludes

with the proposed approach for Human-multi-UAV interaction control and future plans.

1 INTRODUCTION

Recent progress in automation for control, navigation, and decision making brings the deployment of autonomous decision-making multi-UAV systems closer to reality (Schulte et al., 2015). However, keeping the human in the (decisional) loop is still required (Valavanis and Vachtsevanos, 2015; Schurr et al., 2009). In particular, optimal control strategies for unmanned aircraft are still evolving, and the goal of better controlling a single unmanned aircraft is shifting towards the desire to control several UAVs at once.

The current ratio between UAV operators (O) and UAV units (N) is O≥N. For example, in the US army, a UAV is managed by several operators: one is in charge of following the flight parameters, another is in charge of the payload, and the last one is responsible for mission supervision. In the near future, this ratio will probably be inverted (O<N) (Gangl et al., 2013a).

Indeed, UAVs are becoming increasingly automated and take decisions by themselves, which reduces the need for so many operators. The idea is that UAVs could rely on safe automation to ensure completely autonomous navigation and even completely autonomous mission planning. However, the human operator is still vital and, unfortunately, is considered a providential agent (Schurr et al., 2009; Casper and Murphy, 2003) who takes over from the autonomous or automatic system when a hazardous event occurs. Yet it is known that, in UAV operations, Human Factors account for the largest share of accidents (Williams, 2004; Haddal and Gertler, 2010). Nevertheless, in some cases it is mandatory to handle a mission from close proximity by keeping human agents in the loop, who are in charge of taking the difficult decisions.

However, to combine the advantages of being smart and adaptable like humans with being consistent and precise like machines, the idea is to bring them into close contact beforehand, and so to design a system that provides authority and integrity to both (fallible) actors - human and machine - while helping a single human to work collaboratively with multiple UAVs. In particular, neither of them is leading; both help each other in accomplishing the mission goal(s). This positioning is also known as mixed-initiative Human-Machine Interaction (HMI) (Jiang and Arkin, 2015), which should consider that each agent could seize the initiative from, or relinquish it to, the other(s).

From a human point of view, a system that takes over from us is not always acceptable (or even desirable), but should at least be welcome when human capabilities (cognitive or physical) are not forthcoming.

Singh, G., Roy, R. and Chanel, C.

Towards Multi-UAV and Human Interaction Driving System Exploiting Human Mental State Estimation.

DOI: 10.5220/0007575002940301

In Proceedings of the 12th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2019), pages 294-301

ISBN: 978-989-758-353-7

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Therefore, this paper proposes a multi-UAV interaction driving concept in which multiple UAVs interact with a single human agent who operates from a plane's cockpit and takes part in the mission, and whose physiological measurements are exploited to enhance coordination between human and machine. In other words, the human and the UAVs can be seen as a Manned-Unmanned Teaming (MUM-T) system. In mission scenarios where MUM-T is suitable, hot events can occur and the human agent, who is in charge of the harder decisions, can experience degraded mental states. In this context, the main idea is to explore the use of real-time mental state estimation (Singh et al., 2018) to drive the Human and Multi-UAV Interaction.

The paper is organized as follows. Section 2 presents multi-UAV applications that take (or do not take) the human operator's inputs into account, either directly in the supervisory control loop or to drive human and multi-UAV interaction. Section 3 reviews promising physiological parameters that could be useful for estimating the cognitive state of the human operator. Section 4 presents the proposed approach, as well as a brief description of the experimental scenario currently being designed. Finally, Section 5 closes the paper with conclusions and future work.

2 MULTI-UAVs IN ACTION

With the emergence of unmanned aircraft and the evolution of automation, researchers are pushing the limits to attain the capability of controlling multiple UAVs. However, there is still a debate between having a fully autonomous group of UAVs performing different sub-tasks to achieve one common goal, and having one human control several UAVs together towards the success of a mission. Nevertheless, both approaches share the common aim of a fully capable multi-UAV system.

Several multi-UAV applications have already been

designed and demonstrated. The COMETS project

(Ollero et al., 2005) is one of them with several re-

search organizations involved in design and imple-

mentation of a new control architecture for multiple

heterogeneous UAVs working cooperatively in forest

fire missions. Maza and collaborators (Maza et al.,

2011) designed a multi-UAV distributed decisional ar-

chitecture to autonomously cooperate and coordinate

with UAVs while accomplishing high level tasks by

dividing and assigning low level tasks to each UAV

with respect to the capabilities of each one of them.

Perez and collaborators (Perez et al., 2013) developed a ground control station for dynamically assigning tasks to several UAVs, and Scherer and collaborators (Scherer et al., 2015) created a distributed control system to coordinate multiple UAVs and override autonomy when required. Brisset and collaborators (Brisset and Hattenberger, 2008) conducted two multi-UAV experiments using Paparazzi (a free autopilot) (Brisset and Drouin, 2004; Brisset et al., 2006). In these experiments, first a formation flight of 3 UAVs, and then 2 UAVs at different locations in Germany and France, were controlled by two operators from the same Ground Control Station in Germany. Franchi and collaborators (Franchi et al., 2012) also studied the involvement of a human in the control loop of multiple UAVs with self-arranged autonomy. Mueller and collaborators (Mueller et al., 2017), in turn, targeted two problems of multi-UAV operation: first, an effective Human System Interface for better understanding, control, and monitoring of overall activity; and second, authorizing UAVs to plan, verify, and act when the connection to the human operator is lost.

Such systems try to reduce the need for a human operator by making systems capable enough to take decisions and accomplish the mission without any human intervention, with only limited supervisory control, or with human takeover when required.

On the other hand, recent works are heading towards an integrated system with human involvement in critical situations (Donath et al., 2010; Gangl et al., 2013a; Gangl et al., 2013b; Schulte et al., 2015). The main idea behind these studies is the involvement of a human operator not just in the supervisory or control loop, but in the mission itself. The human operator does not merely control the UAVs remotely to perform tasks, but is also involved in the mission plan and performs the required supervision of the accompanying UAVs from the cockpit of a plane. This kind of system has several advantages over those that keep the human operator only for supervision from ground control stations. For instance, with this setup there are fewer physical limitations between the UAVs and the control station (Gangl et al., 2013a), and long-range missions become possible. Moreover, the human operator is more readily available for critical decision making thanks to better situation awareness, along with real-time task (re)planning.

Donath and collaborators (Donath et al., 2010) worked on assistant systems to help a human pilot manage multiple UAVs from a manned aircraft, and also evaluated the workload experienced by the pilot. They used human behavior models to represent the workload experienced and to provide possible solutions to balance it. Their work was further evaluated by Gangl and collaborators (Gangl et al., 2013b) with Unmanned Combat Aerial Vehicles (UCAVs). In this particular study, Artificial Cognitive Units (ACUs) were used to control each UCAV separately, while a human pilot in a manned aircraft's cockpit worked hand-in-hand with the UCAVs.

The critical issue of the mental workload experienced by human pilots in Manned-Unmanned Teaming (MUM-T) scenarios has been raised in these works (Donath et al., 2010; Gangl et al., 2013a; Gangl et al., 2013b; Schulte et al., 2015). However, a promising way forward, in our view, is to use physiological features rather than subjective or behavioral human measurements or models in order to better estimate mental workload. Indeed, estimating humans' mental state using subjective and behavioral measures can only tell what might have occurred, but cannot measure and reveal what actually went on. Hence, physiological measures can help extract pilots' mental states in real time and can provide better estimates of such states than overt measurements, which are relatively sparse (Mehta and Parasuraman, 2013).

In this sense, this work aims to explore the benefits of using physiological computing to estimate pilots' mental state in order to drive human-multi-UAV interaction.

3 PHYSIOLOGICAL COMPUTING

Physiological computing offers a new approach to Human-Machine Interaction (HMI) by directly monitoring, analyzing, and responding to covert physiological features of a user in real time (Fairclough, 2008). A system that exploits physiological computing works by reading psychophysiological signals and transforming them into a control signal without going through any direct communication channel with the human operator (Byrne and Parasuraman, 1996). It makes HMI more efficient, or rather opens up a communication channel that was previously left unused (Hettinger et al., 2003). Such physiological features or markers act as a kind of sixth sense for research and other applications that seek to peek into the core of human activity and gain insight into how a person is actually experiencing the world cognitively, without engaging the operator in any direct or indirect conversation.

Previous research supports the use of physiological features to better understand human mental state and enhance a task's outcome (Roy et al., 2016b; Senoussi et al., 2017; Drougard et al., 2017a; Drougard et al., 2017b). For aeronautical applications, several mental states are particularly relevant to estimate, such as Mental Workload, Engagement, Fatigue, and Drowsiness. The work presented here focuses on Mental Workload and Engagement, since these states are major contributors to the modulation of human performance in risky settings.

3.1 Mental Workload and Engagement

Workload has been defined in several different ways, but it can be understood as one's information processing capacity or the amount of resources required to meet system demands (Eggemeier et al., 1991), or as the difference between the capacity of an information processing system required to satisfy a task's performance and the capacity available at any given time (Gopher and Donchin, 1986). A closely related concept is therefore Engagement, or attentional/cognitive resource engagement. The Engagement level of an operator varies depending on several factors such as time-on-task (and therefore vigilance/alertness), task demands, and motivation (Berka et al., 2007; Chaouachi and Frasson, 2012; McMahan et al., 2015). In our understanding, estimating mental workload is akin to estimating engagement if one thinks in terms of mental resources. Hence, these two concepts will be considered as one in the remainder of this paper.

3.2 Associated Physiological Markers

Mental workload and mental resource engagement have been widely studied (Mehta and Parasuraman, 2013), which has revealed several physiological parameters that can be used to estimate the human engagement state effectively. Both cerebral and peripheral physiological measures can be used to infer the engagement state. In particular, electroencephalographic (EEG) features in the temporal and frequency domains can be used to perform engagement estimation (Pope et al., 1995).

In the temporal domain, an example is the use of

Event Related Potentials (ERP) which are the time-

locked cerebral responses to specific events (Fu and

Parasuraman, 2007). The amplitude of these voltage

variations can be extracted at various time points and

is known to fluctuate with engagement. For example,

after 300 ms post-event (e.g. after an alarm) there is

a lower positive deflection at posterior electrode sites

if the operator has not engaged enough resources to

correctly process this event.
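As a minimal sketch of the temporal-domain feature described above, the following Python code averages EEG epochs time-locked to events and extracts the mean amplitude in a post-event window. The function names, the baseline correction over the pre-event interval, and the synthetic single-channel signal are assumptions made for illustration; they are not taken from the cited works.

```python
def average_erp(signal, fs, event_samples, pre_s=0.1, post_s=0.6):
    """Average baseline-corrected epochs time-locked to each event.

    signal: single-channel EEG samples; fs: sampling rate in Hz;
    event_samples: sample indices at which events (e.g. alarms) occurred.
    """
    pre = int(round(pre_s * fs))
    post = int(round(post_s * fs))
    epochs = []
    for e in event_samples:
        if e - pre < 0 or e + post > len(signal):
            continue  # skip events too close to the recording edges
        epoch = signal[e - pre:e + post]
        baseline = sum(epoch[:pre]) / pre  # mean of the pre-event interval
        epochs.append([x - baseline for x in epoch])
    if not epochs:
        raise ValueError("no complete epoch available")
    n = len(epochs)
    # Point-by-point average across epochs
    return [sum(col) / n for col in zip(*epochs)]


def mean_amplitude(erp, fs, t_start_s, t_end_s, pre_s=0.1):
    """Mean ERP amplitude between t_start_s and t_end_s after the event."""
    pre = int(round(pre_s * fs))
    a = pre + int(round(t_start_s * fs))
    b = pre + int(round(t_end_s * fs))
    window = erp[a:b]
    return sum(window) / len(window)


# Synthetic example (hypothetical data): a positive deflection of 5 units
# between 300 and 400 ms after each of three events.
fs = 100
signal = [0.0] * 1000
events = [100, 400, 700]
for e in events:
    for i in range(e + 30, e + 40):
        signal[i] = 5.0

erp = average_erp(signal, fs, events)
late_positivity = mean_amplitude(erp, fs, 0.3, 0.4)
```

In a real setting, a lower value of `late_positivity` across recent events could be read as a sign of reduced resource engagement, under the assumption that the window is matched to the component of interest.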

BIOINFORMATICS 2019 - 10th International Conference on Bioinformatics Models, Methods and Algorithms


Next, in the frequency domain one can use mod-

ulations in the Power Spectral Density (PSD) of dif-

ferent EEG frequency bands (e.g. θ: 4-8 Hz, α: 8-12

Hz and β: 13-30 Hz) (Roy et al., 2016a; Roy et al.,

2016c; Heard et al., 2018a). For instance, a widely

accepted and evaluated Engagement Index (EI) devel-

oped by Pope and collaborators (Pope et al., 1995) can

be used to modulate task allocation in a closed-loop

system and is computed using band power as follows:

EI = β/(α + θ) (Chaouachi and Frasson, 2012; Berka

et al., 2007).
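The Engagement Index above can be illustrated with a short, self-contained Python sketch. It estimates band power with a naive discrete Fourier transform over the band limits quoted in the text; the function names, the half-open band edges, and the synthetic test signal are assumptions for illustration only, and a practical pipeline would more likely use a windowed PSD estimator on artifact-cleaned EEG.

```python
import cmath
import math

# Band limits in Hz as quoted in the text; treated here as half-open [lo, hi).
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 12.0), "beta": (13.0, 30.0)}


def band_power(samples, fs, lo, hi):
    """Sum of periodogram power over DFT bins with lo <= f < hi."""
    n = len(samples)
    mean = sum(samples) / n
    power = 0.0
    for k in range(1, n // 2 + 1):
        f = k * fs / n
        if lo <= f < hi:
            coeff = sum((x - mean) * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(samples))
            power += abs(coeff) ** 2 / n
    return power


def engagement_index(samples, fs):
    """EI = beta / (alpha + theta), computed from band powers."""
    p = {name: band_power(samples, fs, lo, hi)
         for name, (lo, hi) in BANDS.items()}
    denom = p["alpha"] + p["theta"]
    if denom == 0.0:
        raise ValueError("alpha + theta power is zero")
    return p["beta"] / denom


# Synthetic 2 s signal (hypothetical): 6 Hz and 10 Hz components of
# amplitude 1, and a 20 Hz component of amplitude 2.
fs = 128
n = 256
eeg = [math.sin(2 * math.pi * 6 * i / fs)
       + math.sin(2 * math.pi * 10 * i / fs)
       + 2 * math.sin(2 * math.pi * 20 * i / fs)
       for i in range(n)]
ei = engagement_index(eeg, fs)
```

Since power scales with the square of amplitude, the beta component here carries four times the power of each of the other two, so `ei` comes out close to 2.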

As for peripheral measures that can be useful for estimating the operator's mental state, markers can be extracted from the electrocardiogram (ECG). Well-known ones are the Heart Rate (HR; a time-domain metric) and the Heart Rate Variability (HRV; which can be computed in both the time and the frequency domains). These metrics are sensitive to workload and engagement but not specific to them: they are also modulated by physical activity (Heard et al., 2018a).
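The ECG markers above can be sketched as follows, assuming that R-peak times have already been detected. The text does not say which HRV metric is intended; SDNN and RMSSD are used here only as two common time-domain choices, and the function names and sample values are hypothetical.

```python
import math
import statistics


def rr_intervals(r_peak_times_s):
    """Successive differences between R-peak times, in seconds."""
    return [b - a for a, b in zip(r_peak_times_s, r_peak_times_s[1:])]


def heart_rate_bpm(rr_s):
    """Mean heart rate in beats per minute."""
    return 60.0 / statistics.mean(rr_s)


def sdnn_ms(rr_s):
    """Standard deviation of RR intervals, in milliseconds."""
    return statistics.stdev(rr_s) * 1000.0


def rmssd_ms(rr_s):
    """Root mean square of successive RR differences, in milliseconds."""
    diffs = [b - a for a, b in zip(rr_s, rr_s[1:])]
    return math.sqrt(statistics.mean(d * d for d in diffs)) * 1000.0


# Hypothetical R-peak times in seconds
peaks = [0.0, 0.8, 1.6, 2.5, 3.3]
rr = rr_intervals(peaks)
hr = heart_rate_bpm(rr)
hrv_sdnn = sdnn_ms(rr)
hrv_rmssd = rmssd_ms(rr)
```

Because these metrics also respond to physical activity, as noted above, they would likely be combined with other markers rather than used alone.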

Another way of recording peripheral activity is to use an eye-tracker, which records ocular activity. With this device, one can for instance extract Blink Frequency (BF; i.e. number of blinks per minute), Fixation Duration (FD; i.e. amount of time the eyes fixate a particular area) and Blink Latency (BL; i.e. amount of time between two blinks), which vary with engagement (Heard et al., 2018a; Heard et al., 2018b).
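The three ocular metrics defined above can be computed from event lists as in the following sketch. It assumes that the eye-tracker software already provides blink timestamps and labeled fixation intervals; the data layout, function names, and example values are hypothetical.

```python
def blink_frequency_per_min(blink_times_s, duration_s):
    """BF: number of blinks per minute over a recording of duration_s."""
    return 60.0 * len(blink_times_s) / duration_s


def blink_latencies_s(blink_times_s):
    """BL: time between consecutive blinks, in seconds."""
    ordered = sorted(blink_times_s)
    return [b - a for a, b in zip(ordered, ordered[1:])]


def fixation_duration_by_area(fixations):
    """FD: total fixation time per area.

    fixations: iterable of (start_s, end_s, area_name) tuples.
    """
    totals = {}
    for start, end, area in fixations:
        totals[area] = totals.get(area, 0.0) + (end - start)
    return totals


# Hypothetical one-minute recording
blinks = [1.0, 4.0, 9.0, 10.0]
fixations = [(0.0, 1.5, "map"), (1.5, 2.0, "alerts"), (2.0, 4.0, "map")]

bf = blink_frequency_per_min(blinks, duration_s=60.0)
bl = blink_latencies_s(blinks)
fd = fixation_duration_by_area(fixations)
```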

4 THE PROPOSED APPROACH

Machine intelligence still does not have far reaching

capabilities to match human intelligence and abilities,

in particular to work in unpredictable and continu-

ously changing environments. Since the human brain

does have far reaching capabilities, a better integra-

tion of its capabilities with machines could bring un-

matched results.

As highlighted above, research has already been conducted to achieve high levels of autonomy in UAVs and to make their control systems (in terms of flying) capable enough to handle flight parameters without much human intervention. Therefore, this work is not directed towards controlling the dynamics and autonomy of the UAVs. Nor is it directed towards building a smart interface through which human operators could supervise several UAVs.

Rather, this work focuses on unveiling and esti-

mating human pilot’s mental states involved in the

mission to enhance Human-UAVs interaction. Sec-

ondly, it focuses on developing decisional systems

that understand the situation and choose appropriate

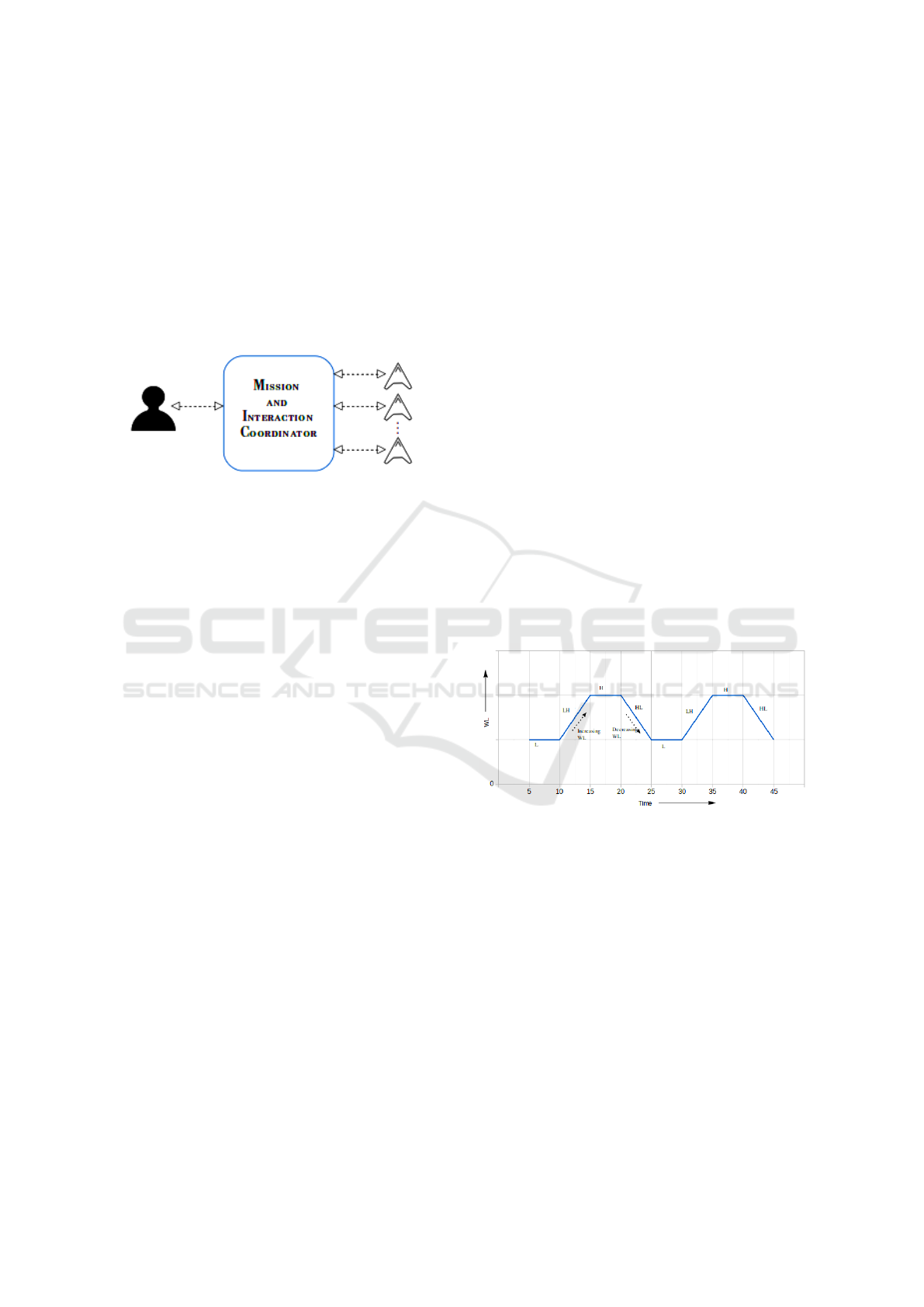

Figure 1: Project layout.

actions. Here, understanding the situation means how

the overall system’s coordinator estimates the state of

both human and UAV units, being able to predicts its

influence on mission achievement. The appropriate

actions could be: managing the amount of work the

human pilot could (or should) handle; managing the

tasks that could done by the UAV; when and how in-

formation should be transferred to the human pilot;

and how tasks should be shared between human and

UAVs. These concepts should bring to a coordinative

approach towards mission success.

Researchers have been trying to improve mission success by focusing on three main problems associated with Unmanned Aerial Vehicles (UAVs): high accident rates (Haddal and Gertler, 2010), a high human-to-machine ratio (Gangl et al., 2013b), and state awareness of the human counterpart (Schulte et al., 2015). The approach proposed here will try to tackle these issues by increasing state awareness for both human and machine, eventually decreasing human errors and increasing the system's performance. The aim is to turn the current high human-to-machine ratio into a high machine-to-human ratio.

The overall system will contain a human pilot and several UAVs working as a team (MUM-T) on a common mission, see Figure 1. There will be a main system, the Mission and Interaction Coordinator (MIC), that has knowledge of the overall mission plan and goals, see Figure 2. A search and rescue mission (Souza et al., 2016; Gateau et al., 2016) will be the core of the scenario. In such a mission, several actions would be considered: UAV requests to perform identification and confirmation of possible targets; deciding which agent should visit dangerous or accidental zones; communicating the targets' positions; presenting or not presenting the information to the human pilot; etc. The challenge is to choose when to launch a request to the pilot. The system's coordinator should decide based on the availability of the human pilot, i.e. the inverse of workload (Gateau et al., 2016), on ethical commitment (Souza et al., 2016), or on degraded mental states like attentional tunneling (de Souza et al., 2015).

Figure 2: Project architecture.

On this basis, the overall mission coordinator has to estimate the human pilot's mental state, assign tasks to UAVs, and change the level of autonomy to help maintain the human pilot's engagement within a suitable window, i.e. neither too high nor too low (Ewing et al., 2016), while maintaining acceptable system performance to achieve the mission's goal(s).
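To make the idea of keeping engagement within a suitable window concrete, the following Python sketch uses a simple threshold rule. This is a deliberately simplified stand-in, not the long-term decisional framework envisioned in this paper: the window bounds, the discrete autonomy levels, and all names are hypothetical, and the engagement estimate is assumed to be normalized to [0, 1].

```python
from dataclasses import dataclass

# Hypothetical bounds of the "suitable window"; the paper gives no values.
ENGAGEMENT_LOW = 0.3
ENGAGEMENT_HIGH = 0.7
# Hypothetical discrete autonomy levels (0 = most manual, 3 = most autonomous).
MIN_AUTONOMY = 0
MAX_AUTONOMY = 3


@dataclass
class Decision:
    autonomy_level: int
    send_request_to_pilot: bool


def coordinate(engagement, autonomy_level, pending_request):
    """One coordination step based on the estimated engagement level."""
    if engagement > ENGAGEMENT_HIGH:
        # Pilot is overloaded: raise UAV autonomy and hold back requests.
        return Decision(min(autonomy_level + 1, MAX_AUTONOMY), False)
    if engagement < ENGAGEMENT_LOW:
        # Pilot is under-engaged: lower autonomy so more work reaches the pilot.
        return Decision(max(autonomy_level - 1, MIN_AUTONOMY), pending_request)
    # Within the window: keep the current autonomy, forward pending requests.
    return Decision(autonomy_level, pending_request)


overloaded = coordinate(0.9, autonomy_level=1, pending_request=True)
under_engaged = coordinate(0.1, autonomy_level=0, pending_request=True)
nominal = coordinate(0.5, autonomy_level=2, pending_request=False)
```

A purely reactive rule like this ignores mission state and future consequences, which is precisely what a predictive coordinator would add.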

4.1 Research Milestones

This research will be carried out in three phases: i)

data collection, ii) implementation, and iii) closed-

loop validation.

In the first phase, a hard-coded experiment should allow behavioral and physiological data collection from the human pilot, equipped with an EEG, an ECG, and an eye-tracker (ET). For this phase, we therefore consider a controlled environment where a human pilot will handle a simulated flight along with interactions with the UAVs. The experiment is designed around four experimental conditions: low workload (L), high workload (H), low to high workload transition (LH), and high to low workload transition (HL) (see Fig. 3 for an example). The four conditions will be ordered in a pseudo-random manner, L-LH-H-HL or H-HL-L-LH, and are expected to bring up engagement-workload variations. The human pilot's workload level will be manipulated by means of:

• choosing way-points from time to time to meet the requirement of staying within a given distance range that allows communication with the UAVs to be maintained. These way-points will be transformed into Air Traffic Control (ATC) instructions for flying the plane and will be given to the pilot in the form of audio messages. Risser et al. (2002) and Gateau et al. (2018) showed that, depending on their length and complexity, recalling ATC instructions (e.g. speed, altitude, heading) can create a high cognitive load;

• answering pop-ups containing UAVs' requests that concern the identification and recognition of detected targets, as in (Gateau et al., 2016). Particularly in a search and rescue mission, where lives are at stake (Souza et al., 2016), errors of identification or recognition should be avoided, which implies an important human involvement;

• and performing checklists related, for instance, to malfunctioning of flight equipment or UAVs' embedded systems. Note that interruptions during checklists can be considered an issue (Loukopoulos et al., 2001), and can potentially increase the workload while decreasing performance (Loft and Remington, 2010).
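The two condition orders named before the list (L-LH-H-HL or H-HL-L-LH) could be assigned to participants as in the sketch below. Balancing the two orders across participants is an assumption added for illustration, as are the function name and the seed; the protocol itself is still being defined.

```python
import random

# The two admissible condition orders given in the text.
ORDERS = (("L", "LH", "H", "HL"), ("H", "HL", "L", "LH"))


def assign_orders(participant_ids, seed=0):
    """Shuffle participants, then alternate between the two orders.

    Alternating after a seeded shuffle keeps the assignment reproducible
    and splits the orders as evenly as possible (an assumed design choice).
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: ORDERS[i % 2] for i, pid in enumerate(ids)}


# Hypothetical cohort of ten participants
plan = assign_orders(range(10), seed=1)
```

The resulting condition labels can then be attached to each recording segment and used as labels for the collected data.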

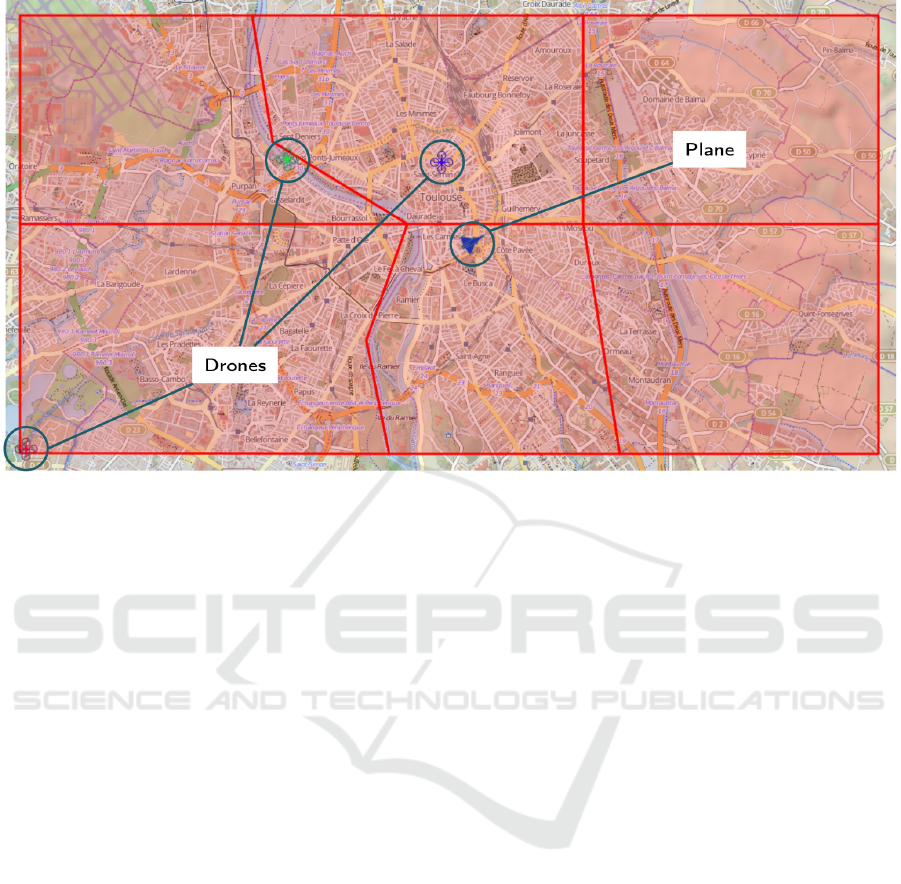

We have designed the application shown in Figure 4, which lets the human pilot interact with UAVs during the experiment (e.g. sending requests for areas to search, or answering UAV queries for validation of recognized objects).

Figure 3: Experiment structure with conditions designed to

elicit variations of pilots’ engagement.

Following this first experiment (data collection) under the conditions listed, we expect to design a smart tool (implementation phase) that could estimate the mental states of human pilots in real time based on the physiological markers reviewed in Sec. 3. This real-time estimation would serve as an input to an overall system state estimator (i.e. mental state of the human and UAVs' states).

Once the overall system state estimator is designed, a decisional framework, called the Mission and Interaction Coordinator (MIC), would reason over the long term. In other words, it will predict future states of the whole system (i.e. agents' and mission's states) and will have to choose an appropriate action, bringing coordination between all involved agents (i.e. human and UAVs) while ensuring mission success. This will constitute the closed-loop phase of this research. The evaluation of the closed-loop framework will be handled with an experiment similar to the one described above.

Figure 4: Developed application that enables the human pilot to interact with UAVs (e.g. to choose regions to search, to answer UAVs' requests, or to define way-points to meet).

5 CONCLUSION AND FUTURE WORK

This paper presents our current research towards a multi-UAV and human interaction driving system that would exploit the estimation of the human's mental state. The main idea is to integrate the latest advances in physiological computing into a high-level mission coordinator. State-of-the-art approaches were presented, as well as promising physiological markers. A mission scenario was also proposed, in which a human pilot should coordinate his or her actions with UAVs' requests (MUM-T). In this scenario, four conditions would be evaluated in order to study the variations of the human pilot's engagement. In particular, such conditions would provide labels for the collected data, therefore allowing the design of the subsequent smart estimation system.

The next step of this work is to define the experimental protocol of the proposed mission scenario in detail and to implement a rigorous experimental setup, hence ensuring the validity of the expected results. Such results will be used in the forthcoming stages of this work, in which an intelligent artificial system will have to reason over the long term. In other words, it should predict future states of agents (i.e. mental state of the human pilot, and UAVs' states) and requirements of the overall mission (i.e. needs and possible future actions of the human pilot or of the UAVs) in order to take optimal actions to balance the load between the human pilot and the UAVs while maximizing the system's performance.

REFERENCES

Berka, C., Levendowski, D. J., Lumicao, M. N., Yau,

A., Davis, G., Zivkovic, V. T., Olmstead, R. E.,

Tremoulet, P. D., and Craven, P. L. (2007). Eeg corre-

lates of task engagement and mental workload in vig-

ilance, learning, and memory tasks. Aviation, space,

and environmental medicine, 78(5):B231–B244.

Brisset, P. and Drouin, A. (2004). Paparadziy: do-it-yourself uav. Journées Micro Drones, Toulouse, France.

Brisset, P., Drouin, A., Gorraz, M., Huard, P.-S., and Tyler,

J. (2006). The paparazzi solution. In MAV 2006, 2nd

US-European competition and workshop on micro air

vehicles.

Brisset, P. and Hattenberger, G. (2008). Multi-uav control

with the paparazzi system. In HUMOUS 2008, con-

ference on humans operating unmanned systems.

Byrne, E. A. and Parasuraman, R. (1996). Psychophysiol-

ogy and adaptive automation. Biological psychology,

42(3):249–268.


Casper, J. and Murphy, R. R. (2003). Human-robot interac-

tions during the robot-assisted urban search and res-

cue response at the world trade center. IEEE Transac-

tions on Systems, Man, and Cybernetics, Part B (Cy-

bernetics), 33(3):367–385.

Chaouachi, M. and Frasson, C. (2012). Mental workload,

engagement and emotions: an exploratory study for

intelligent tutoring systems. In International Con-

ference on Intelligent Tutoring Systems, pages 65–71.

Springer.

de Souza, P. E. U., Chanel, C. P. C., and Dehais, F. (2015).

Momdp-based target search mission taking into ac-

count the human operator’s cognitive state. In Tools

with Artificial Intelligence (ICTAI), 2015 IEEE 27th

International Conference on, pages 729–736. IEEE.

Donath, D., Rauschert, A., and Schulte, A. (2010). Cog-

nitive assistant system concept for multi-uav guid-

ance using human operator behaviour models. HU-

MOUS’10.

Drougard, N., Carvalho Chanel, C., Roy, R., and Dehais,

F. (2017a). An online scenario for mixed-initiative

planning considering human operator state estimation

based on physiological sensors. In IROS Workshop in

Synergies Between Learning and Interaction (SBLI).

Drougard, N., Ponzoni Carvalho Chanel, C., Roy, R. N., and

Dehais, F. (2017b). Mixed-initiative mission planning

considering human operator state estimation based on

physiological sensors.

Eggemeier, F. T., Wilson, G. F., Kramer, A. F., and Damos,

D. L. (1991). Workload assessment in multi-task en-

vironments. Multiple Task Performance, page 207.

Ewing, K. C., Fairclough, S. H., and Gilleade, K. (2016).

Evaluation of an adaptive game that uses eeg mea-

sures validated during the design process as inputs to a

biocybernetic loop. Frontiers in human neuroscience,

10:223.

Fairclough, S. H. (2008). Fundamentals of physiological

computing. Interacting with computers, 21(1-2):133–

145.

Franchi, A., Secchi, C., Ryll, M., Bulthoff, H. H., and Gior-

dano, P. R. (2012). Shared control: Balancing auton-

omy and human assistance with a group of quadro-

tor uavs. IEEE Robotics & Automation Magazine,

19(3):57–68.

Fu, S. and Parasuraman, R. (2007). Event-related potentials

(erps) in neuroergonomics. In Neuroergonomics the

brain at work, pages 32–50, New York. Oxford Uni-

versity Press.

Gangl, S., Lettl, B., and Schulte, A. (2013a). Management

of multiple unmanned combat aerial vehicles from

a single-seat fighter cockpit in manned-unmanned

fighter missions. In AIAA Infotech@ Aerospace (I@

A) Conference, page 4899.

Gangl, S., Lettl, B., and Schulte, A. (2013b). Single-seat

cockpit-based management of multiple ucavs using

on-board cognitive agents for coordination in manned-

unmanned fighter missions. In International Confer-

ence on Engineering Psychology and Cognitive Er-

gonomics, pages 115–124. Springer.

Gateau, T., Ayaz, H., and Dehais, F. (2018). In silico ver-

sus over the clouds: On-the-fly mental state estima-

tion of aircraft pilots, using a functional near infrared

spectroscopy based passive-bci. Frontiers in human

neuroscience, 12:187.

Gateau, T., Chanel, C. P. C., Le, M.-H., and Dehais, F.

(2016). Considering human’s non-deterministic be-

havior and his availability state when designing a col-

laborative human-robots system. In Intelligent Robots

and Systems (IROS), 2016 IEEE/RSJ International

Conference on, pages 4391–4397. IEEE.

Gopher, D. and Donchin, E. (1986). Workload-an exam-

ination of the concept. handbook of perception and

human performance, vol ii, cognitive processes and

performance.

Haddal, C. C. and Gertler, J. (2010). Homeland security:

Unmanned aerial vehicles and border surveillance.

Heard, J., Harriott, C. E., and Adams, J. A. (2018a). A sur-

vey of workload assessment algorithms. IEEE Trans-

actions on Human-Machine Systems.

Heard, J., Heald, R., Harriott, C. E., and Adams, J. A.

(2018b). A diagnostic human workload assessment

algorithm for human-robot teams. In Companion

of the 2018 ACM/IEEE International Conference on

Human-Robot Interaction, pages 123–124. ACM.

Hettinger, L. J., Branco, P., Encarnacao, L. M., and Bon-

ato, P. (2003). Neuroadaptive technologies: apply-

ing neuroergonomics to the design of advanced inter-

faces. Theoretical Issues in Ergonomics Science, 4(1-

2):220–237.

Jiang, S. and Arkin, R. C. (2015). Mixed-initiative human-

robot interaction: definition, taxonomy, and survey. In

Systems, Man, and Cybernetics (SMC), 2015 IEEE In-

ternational Conference on, pages 954–961. IEEE.

Loft, S. and Remington, R. W. (2010). Prospective mem-

ory and task interference in a continuous monitoring

dynamic display task. Journal of Experimental Psy-

chology: Applied, 16(2):145.

Loukopoulos, L. D., Dismukes, R., and Barshi, I. (2001).

Cockpit interruptions and distractions: A line obser-

vation study. In Proceedings of the 11th international

symposium on aviation psychology, pages 1–6. Ohio

State University Columbus.

Maza, I., Caballero, F., Capitán, J., Martínez-de Dios, J. R., and Ollero, A. (2011). Experimental results in multi-uav coordination for disaster management and civil security applications. Journal of intelligent & robotic systems, 61(1-4):563–585.

McMahan, T., Parberry, I., and Parsons, T. D. (2015).

Evaluating player task engagement and arousal us-

ing electroencephalography. Procedia Manufactur-

ing, 3:2303–2310.

Mehta, R. K. and Parasuraman, R. (2013). Neuroer-

gonomics: a review of applications to physical and

cognitive work. Frontiers in human neuroscience,

7:889.

Mueller, J. B., Miller, C., Kuter, U., Rye, J., and Hamell, J.

(2017). A human-system interface with contingency

planning for collaborative operations of unmanned

aerial vehicles. In AIAA Information Systems-AIAA

Infotech@ Aerospace, page 1296.

Ollero, A., Lacroix, S., Merino, L., Gancet, J., Wiklund, J.,

Remuss, V., Perez, I., Gutierrez, L., Viegas, D., Ben-

itez, M., et al. (2005). Architecture and perception is-

sues in the comets multi-uav project. multiple eyes in

the skies. IEEE Robotics and Automation Magazine,

12:46–57.

Perez, D., Maza, I., Caballero, F., Scarlatti, D., Casado, E.,

and Ollero, A. (2013). A ground control station for a

multi-uav surveillance system. Journal of Intelligent

& Robotic Systems, 69(1-4):119–130.

Pope, A. T., Bogart, E. H., and Bartolome, D. S. (1995).

Biocybernetic system evaluates indices of operator en-

gagement in automated task. Biological psychology,

40(1-2):187–195.

Risser, M. R., McNamara, D. S., Baldwin, C. L., Scerbo,

M. W., and Barshi, I. (2002). Interference effects on

the recall of words heard and read: Considerations

for atc communication. In Proceedings of the Human

Factors and Ergonomics Society Annual Meeting, vol-

ume 46, pages 392–396. SAGE Publications Sage CA:

Los Angeles, CA.

Roy, R. N., Bonnet, S., Charbonnier, S., and Campagne,

A. (2016a). Efficient workload classification based on

ignored auditory probes: a proof of concept. Frontiers

in human neuroscience, 10:519.

Roy, R. N., Bovo, A., Gateau, T., Dehais, F., and Chanel,

C. P. C. (2016b). Operator engagement during pro-

longed simulated uav operation. IFAC-PapersOnLine,

49(32):171–176.

Roy, R. N., Charbonnier, S., Campagne, A., and Bonnet,

S. (2016c). Efficient mental workload estimation us-

ing task-independent eeg features. Journal of neural

engineering, 13(2):026019.

Scherer, J., Yahyanejad, S., Hayat, S., Yanmaz, E., Andre,

T., Khan, A., Vukadinovic, V., Bettstetter, C., Hell-

wagner, H., and Rinner, B. (2015). An autonomous

multi-uav system for search and rescue. In Proceed-

ings of the First Workshop on Micro Aerial Vehicle

Networks, Systems, and Applications for Civilian Use,

pages 33–38. ACM.

Schulte, A., Donath, D., and Honecker, F. (2015). Human-

system interaction analysis for military pilot activ-

ity and mental workload determination. In Systems,

Man, and Cybernetics (SMC), 2015 IEEE Interna-

tional Conference on, pages 1375–1380. IEEE.

Schurr, N., Marecki, J., and Tambe, M. (2009). Im-

proving adjustable autonomy strategies for time-

critical domains. In Proceedings of The 8th Interna-

tional Conference on Autonomous Agents and Multia-

gent Systems-Volume 1, pages 353–360. International

Foundation for Autonomous Agents and Multiagent

Systems.

Senoussi, M., Verdiere, K. J., Bovo, A., Ponzoni Car-

valho Chanel, C., Dehais, F., and Roy, R. N. (2017).

Pre-stimulus antero-posterior eeg connectivity pre-

dicts performance in a uav monitoring task.

Singh, G., Bermúdez i Badia, S., Ventura, R., and Silva,

J. L. (2018). Physiologically attentive user inter-

face for robot teleoperation: real time emotional state

estimation and interface modification using physiol-

ogy, facial expressions and eye movements. In 11th

International Joint Conference on Biomedical Engi-

neering Systems and Technologies, pages 294–302.

SCITEPRESS-Science and Technology Publications.

Souza, P. E., Chanel, C. P. C., Dehais, F., and Givigi, S.

(2016). Towards human-robot interaction: A framing

effect experiment. In Systems, Man, and Cybernet-

ics (SMC), 2016 IEEE International Conference on,

pages 001929–001934. IEEE.

Valavanis, K. P. and Vachtsevanos, G. J. (2015). Future of

unmanned aviation. In Handbook of unmanned aerial

vehicles, pages 2993–3009. Springer.

Williams, K. W. (2004). A summary of unmanned aircraft

accident/incident data: Human factors implications.

Technical report, DTIC Document.
