Eye Gesture in a Mixed Reality Environment

Almoctar Hassoumi and Christophe Hurter

French Civil Aviation University, ENAC, Avenue Edouard Belin, Toulouse, France

Keywords:

Eye-movement, Interaction, Eye Tracking, Smooth Pursuit, Mixed Reality, Accessibility.

Abstract:

Using a simple approach, we demonstrate that eye gestures could provide a highly accurate interaction modality in a mixed reality environment. Such interaction has previously been proposed for desktop and mobile devices. Recently, gaze gestures have gained special interest in Human-Computer Interaction and have opened up new interaction possibilities, particularly for accessibility. We introduce a new approach to investigate how gaze tracking technologies could help people with ALS or other motor impairments to interact with computing devices. In this paper, we propose a touch-free, eye movement based entry mechanism for mixed reality environments that can be used without any prior calibration. We evaluate the usability of the system with 7 participants, describe the implementation of the method and discuss its advantages over traditional input modalities.

1 INTRODUCTION

The standard hand gesture interactions in a mixed re-

ality environment have been successfully used in a

great number of applications (Chaconas and Höllerer, 2018; Piumsomboon et al., 2013), for example in virtual text entry (Figure 1). Yet, despite their effectiveness, many questions still persist. For example, how could we extend these interactions for accessibility? In addition, the input methods proposed in traditional systems are tedious, uncomfortable and often suffer from poor spatial positioning accuracy (Kytö et al., 2018).

Not surprisingly, people with body weakness, poor coordination, lack of muscle control or motor impairments cannot rely on these conventional hand gestures to communicate with computing devices. For these users, other means of input are required. Generally, the eye muscles are not affected and can be used for interacting with systems (Zhang et al., 2017). In this work, we focus on a touch-free gesture interaction that builds on smooth pursuit eye movement. Smooth pursuit is an eye movement that keeps a moving object on the fovea. Its advantage is that users can perform it voluntarily, in contrast to other types of eye movements, i.e., saccades and fixations (Collewijn and Tamminga, 1984). Blinking, for example, has also been proposed as an interaction technique (Mistry et al., 2010); however, humans often blink subconsciously in order to protect the eyes from external irritants or to spread tears across the cornea. To implement our approach, we leverage two simple modules: the detection of the user’s pupil center location, and the user interface that draws the trajectories of the moving stimuli. Pupil images are captured using a Pupil Labs eye camera (Kassner et al., 2014). The Hololens serves as the mixed reality device. Therefore, the user interface is

displayed in the Hololens field of view (FOV). Let $p_i \in \mathbb{R}^2$ be the x, y-coordinates of the i-th pupil center position in the camera frame I. Then the vector $P = (p_1^\top, p_2^\top, \ldots, p_n^\top)^\top \in \mathbb{R}^{2n}$ denotes the pupil center positions in I during a smooth pursuit movement.

Notice that we do not use the world camera of the

eye tracker and no prior calibration is performed. We

will comment shortly on the pupil center detection algorithm. The second module is the evolution of the moving stimuli with time. We only consider the case of a constant speed v = const, although the stimuli could also accelerate or decelerate. Pearson’s correlation coefficient is used to measure the correlation between the pupil center locations and the positions of the target stimuli (Velloso et al., 2018). The results show that our approach is not affected by geometrical transformations (scaling, rotation and translation), at least when the trajectories are

in the field of view of the user. To illustrate our ap-

proach, we investigate the specific case of PIN entry.

PINs are traditionally entered using key pressing or

touch input. In a mixed reality environment, a virtual

keyboard appears in the FOV and is used as an in-

put modality. People with motor disabilities are often

helped by an assistant, even for entering a password

in a system. This reduces considerably their privacy.

Hassoumi, A. and Hurter, C.

Eye Gesture in a Mixed Reality Environment.

DOI: 10.5220/0007684001830187

In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2019), pages 183-187

ISBN: 978-989-758-354-4

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORKS

This work builds upon recent studies in eye move-

ment research. The use of eye movement in Human-

Computer interfaces systems such as PDAs, ATMs,

smartphones, and computers has been well studied

(Feit et al., 2017). Smooth pursuit, scanpath, sac-

cades, and vestibulo-ocular reflex are some of the

common ways to use gaze gestures in order to inter-

act with a system. Recently, there has been a great

variety of cheap eye-tracking systems that enable es-

timating user gaze position accurately (Kassner et al.,

2014). Eye gaze interactions have been proposed as

a reliable input modality (Zhang et al., 2017), espe-

cially for people with motor impairments. The Dwell

method which allows selecting a target after a pre-

defined time is one of the most used methods (Mott

et al., 2017). However, this approach requires a prior

calibration session where the user fixates on a series

of stimuli placed at different locations (Santini et al.,

2017; Hassoumi et al., 2018). The most accurate systems require a 9-point calibration before the gaze direction can be estimated accurately (Kassner et al., 2014).

In this work, we investigate a novel calibration-free

approach that leverages the potential of smooth pur-

suit eye movement to select a target by following its

movement. In addition, the dwell approach is limited by its time threshold. For example, if the threshold is set to 200 ms, the user cannot fixate on a target for more than 200 ms without triggering a selection. Recently, smooth pursuit eye movement has enabled calibration-free gaze-based interaction. In

SmoothMoves, Esteves et al. (2015) computed the

correlation between targets on-screen movements and

user’s head movement for selection. Delamare et al.

(2017) proposed G3, a system for selecting different

tasks based on the relative movements of the eyes. Or-

bits (Esteves et al., 2015) and PathWord (Almoctar

et al., 2018) allowed selecting a target by matching its

movement. Subsequently, a great number of applica-

tions using smooth pursuit have been proposed. See

(Esteves et al., 2015) for a review.

3 IMPLEMENTATION

We use a Microsoft Hololens with a Pupil Labs eye tracker. The device is equipped with one eye camera (sampling rate: 120 Hz, resolution: 640 × 480 pixels). The computer vision algorithms used to detect and track the pupil center positions reduced the frame rate by 5%. A C# desktop application was built using EmguCV 3.1 (an OpenCV 3.1 wrapper for C#) on a 64-bit Dell XPS 15 9530 laptop with an Intel(R) Core(TM) i7-4712HQ CPU at 2.30 GHz (4 cores, 8 logical processors), 16 GB of RAM and 2 GB of swap memory.

Figure 1: A keyboard interface in a mixed reality environment. Selection is made with Air Tap and Bloom gestures.

Figure 2: Our proposed PIN entry interface, which uses the user’s relative smooth pursuit eye movement for selection.

Figure 3: A user trying to select the digit 2. (A) The user’s eye under infrared light, captured by the camera. Note how the pupil is darker than the other features of the eye. (B) The digit being selected.

3.1 Overview of the Method

Digits provide a useful and simple way to enter a pass-

word in a system. The method has been efficiently im-

plemented on mobile devices to protect users against

shoulder surfing and smudge attacks (Almoctar et al.,

2018). We examine the method in a mixed reality en-

vironment. Numbers from zero to nine are drawn on

the user interface using simple mathematical formu-

las (Circles and Lines equation only, see Figure 5).

For instance, the digit 3 consists of two half cir-

cles and the digit 2 is drawn using a three-quarter

circle, another quarter circle and a segment between

HUCAPP 2019 - 3rd International Conference on Human Computer Interaction Theory and Applications


two points (Figure 5). The remaining digits are drawn

similarly. A moving stimulus (blue circle in Figure 2) is displayed on each digit and moves along the trajectory defined by the digit. Whenever the user needs to select a digit, they carefully follow the moving stimulus (Figure 3B) as it travels along the digit’s shape. While following the stimulus, the user’s pupil center moves accordingly and implicitly draws the shape of the digit. The 2D points representing the pupil trajectory $P = (p_1^\top, p_2^\top, \ldots, p_n^\top)^\top \in \mathbb{R}^{2n}$ are compared against every digit in order to select the best match. The digit whose points are most correlated with the pupil trajectory in both the x and y axes is likely to be the selected number.

However, to avoid false activation and subsequent er-

rors, the correlations must exceed a predefined thresh-

old. On a scale of -1 to 1, we set the threshold to 0.82

based on a pilot study with participants. Remarkably,

since the stimuli are moving constantly, the probabil-

ity to start following it, at the start of its trajectory is

very low. In most cases, the user will start follow-

ing the stimulus after it has already started its move-

ment. However, the simple mathematical shapes used

to draw the digits allow obtaining a unique represen-

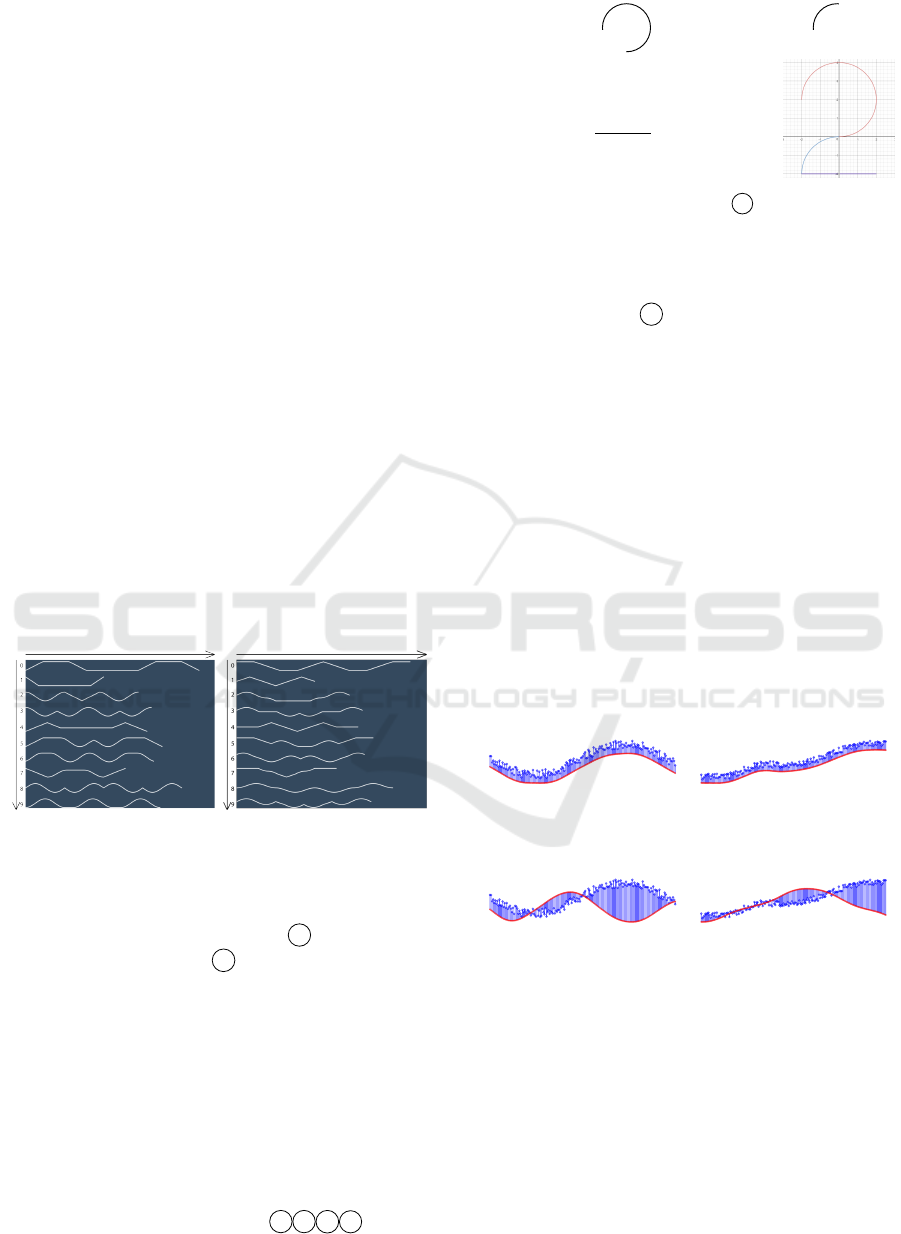

tation for every digit. Figure 4 below shows the rep-

resentation of the digits in the x-axis and y-axis sepa-

rately. Notice how the shapes are different from each

other in both axes separately.

Figure 4: Representation of the digits from zero to nine in the x-axis (left) and y-axis (right). Axis labels: number of points; positions of x-values (left panel) and positions of y-values (right panel).
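To make the composition of a digit from circles and segments concrete, the following Python sketch samples a digit-2-like path at an approximately constant spacing, which corresponds to a stimulus moving at constant speed. It is only an illustration: the centers, radii, angles and step size are hypothetical values and not the parameters of the prototype, and the helper names `arc`, `segment` and `sample_path` are introduced here for the example.

```python
import math


def arc(cx, cy, r, a0_deg, a1_deg):
    """Circular arc from angle a0 to a1 (degrees); returns (length, point_at)."""
    a0, a1 = math.radians(a0_deg), math.radians(a1_deg)
    length = abs(a1 - a0) * r

    def point_at(t):  # t in [0, 1]
        a = a0 + t * (a1 - a0)
        return (cx + r * math.cos(a), cy + r * math.sin(a))

    return length, point_at


def segment(p, q):
    """Straight segment from p to q; returns (length, point_at)."""
    length = math.dist(p, q)

    def point_at(t):  # t in [0, 1]
        return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))

    return length, point_at


def sample_path(pieces, step):
    """Sample consecutive pieces with a spacing of roughly `step` along the path."""
    points = []
    for length, point_at in pieces:
        n = max(1, round(length / step))
        start = 1 if points else 0  # skip the joint shared with the previous piece
        for k in range(start, n + 1):
            points.append(point_at(k / n))
    return points


# Hypothetical geometry for a "2": three-quarter circle (C1), quarter circle (C2),
# and a bottom segment (S1). The values are illustrative only.
DIGIT_2 = [
    arc(0.0, 1.0, 0.5, 180, -90),       # C1: ends at (0, 0.5)
    arc(0.0, 0.0, 0.5, 90, 180),        # C2: from (0, 0.5) to (-0.5, 0)
    segment((-0.5, 0.0), (0.5, 0.0)),   # S1: bottom line
]

trajectory = sample_path(DIGIT_2, step=0.05)
xs = [p[0] for p in trajectory]  # per-axis representation, x
ys = [p[1] for p in trajectory]  # per-axis representation, y
```

Plotting `xs` and `ys` against the sample index gives per-axis curves of the kind shown in Figure 4.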

The length of each digit in the x and y axes is different, that is, the number of points used to draw each digit differs. For instance, the digit 1 is drawn with fewer points than the digit 8. The unique form of the shapes in one dimension (x- or y-axis only) is sufficient to obtain accurate results; however, to make the algorithm more robust, we use both axes, that is, the correlations along both the x and y axes are calculated.
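The matching step can be sketched as follows. This is a minimal illustration under stated assumptions rather than the prototype’s code (which is written in C#): pupil and stimulus samples are assumed to be time-aligned and of equal length, the two per-axis coefficients are combined by taking their minimum, and the image axes of the eye camera are assumed to be oriented like those of the display. The function names are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def select_digit(pupil, stimuli, threshold=0.82):
    """Return the digit whose stimulus best matches the pupil path, or None.

    pupil:   list of (x, y) pupil centers in the eye-camera frame
    stimuli: dict mapping a digit label to its list of (x, y) stimulus positions
    Assumption: a digit is accepted only if BOTH per-axis correlations exceed
    the threshold, implemented here as min(r_x, r_y) > threshold.
    """
    px = [p[0] for p in pupil]
    py = [p[1] for p in pupil]
    best_digit, best_score = None, threshold
    for digit, points in stimuli.items():
        rx = pearson(px, [q[0] for q in points])
        ry = pearson(py, [q[1] for q in points])
        score = min(rx, ry)
        if score > best_score:
            best_digit, best_score = digit, score
    return best_digit


# Toy data: a circular stimulus ("0") and a vertical line ("1").
n = 60
circle = [(math.cos(2 * math.pi * k / n), math.sin(2 * math.pi * k / n)) for k in range(n)]
line = [(0.0, 1.0 - k / (n - 1)) for k in range(n)]
stimuli = {"0": circle, "1": line}

# A pupil path that follows "0" but is scaled and shifted, as in an uncalibrated camera frame.
pupil_following_0 = [(3.0 * x + 100.0, 2.0 * y + 50.0) for x, y in circle]
pupil_at_rest = [(120.0, 80.0)] * n  # no movement: nothing should be selected

selected = select_digit(pupil_following_0, stimuli)
idle = select_digit(pupil_at_rest, stimuli)
```

In this toy example the scaled and shifted pupil path still matches the circular stimulus, while a pupil that does not move triggers no selection, which is the behavior the threshold is meant to guarantee.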

3.2 Interaction and Metric

Figure 2 shows the user interface of the proposed approach. The user selects a single digit by following, with their eyes, the blue stimulus moving along its shape. For example, entering the PIN 2187 begins by following the blue stimulus moving on the shape defined by the digit 2 in the user interface (Figure 3). The subsequent digits are then selected similarly. Since the blue circle moves gradually at a constant velocity, the pupil positions of the user change accordingly in the eye camera imaging frame. The positions of the blue circles on all digits, along with the positions of the pupil centers, are stored for further processing. We then compute a measure of the strength of the linear association between two sets of data: the Pearson product-moment correlation coefficient (Vidal et al., 2013), named in honor of the English statistician Karl Pearson (1857-1936). Previous work used this metric and, to the best of our knowledge, Vidal et al. (2013) were the first to apply it to eye tracking interaction. Examples of high (Figure 6) and low (Figure 7) pupil-target stimulus correlations are shown below.

Figure 5: Composition of the digit 2 using simple mathematical formulas. (C1) represents a three-quarter circle, (C2) a quarter of a second circle and (S1) is the segment representing the bottom line of the digit. [Figure panels: parametric equations in x and y for (C1), (C2) and (S1); the digit is the union (C1) ∪ (C2) ∪ (S1).]

Figure 6: Illustration of a high similarity between pupil center positions (blue circles) and moving stimulus positions (red circles). Axis labels: x-values, y-values.

Figure 7: Illustration of a dissimilarity between pupil center positions (blue circles) and moving stimulus positions (red circles). Axis labels: x-values, y-values.
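For reference, the coefficient can be written out explicitly. The notation below is introduced only for illustration: $a_1, \ldots, a_n$ denotes one coordinate (x or y) of the pupil centers and $b_1, \ldots, b_n$ the same coordinate of a stimulus over the same time samples, with $\bar{a}$ and $\bar{b}$ their means.

```latex
\[
r_{ab} \;=\;
\frac{\sum_{i=1}^{n} (a_i - \bar{a})(b_i - \bar{b})}
     {\sqrt{\sum_{i=1}^{n} (a_i - \bar{a})^2}\;\sqrt{\sum_{i=1}^{n} (b_i - \bar{b})^2}},
\qquad -1 \le r_{ab} \le 1 .
\]
```

Because $r_{ab}$ is unchanged when $a_i$ is replaced by $\alpha a_i + \beta$ with $\alpha > 0$, a per-axis correlation does not depend on the scale or offset of the pupil coordinates in the camera frame, which is consistent with using the method without prior calibration.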

3.3 Pupil Detection

Our system uses the eye camera of a Pupil Labs eye tracker. The camera is fitted with an infrared-pass filter. A near-infrared LED illuminator located in the immediate vicinity of the camera illuminates the eye, which produces corneal reflections in the subject’s eye image. The influence of visible light is thereby suppressed, and it becomes easier to separate the pupil from the iris. The pupil then appears as a darker circular blob in the eye image.

Initially, the pupil center of the subject is detected and tracked by the infrared camera. Accurate pupil center detection is essential in this prototype. The pupil detection algorithm implemented in this study locates the features of the dark pupil present in the IR-illuminated eye camera frame. The algorithm is implemented so that users can move their head freely; we therefore do not use the pupil-corneal reflection to compensate for small head movements. A 640 × 480 frame is grabbed from the IR-illuminated eye camera. The pixel colors are then converted from the 3-channel RGB color space to a 1-channel gray intensity value. The grayscale image is afterward used for automatic pupil area detection. We call this part automatic thresholding: starting with a user-defined threshold value T = 21, chosen experimentally, thresholding is used to create a binary image. T must lie between 0 and 255. Thresholding consists of replacing each pixel of a grayscale image with black or white. A pixel whose gray intensity value is smaller than the defined threshold (I(i,j) < T) is set to black, and a pixel whose gray intensity value is greater than the threshold (I(i,j) > T) is set to white. Since people have different pupil darkness, the user is allowed to define a range for the number of black pixels that will define their pupil pixels. By default, we set a range r = [2000, 4000] (chosen empirically). The algorithm checks whether the number of black pixels falls within that range. If so, the next step of the pupil detection process is executed; otherwise, the threshold value is incremented and the check is repeated until the number of black pixels falls within the range.
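The automatic thresholding loop described above can be sketched in a few lines of Python. This is an illustration, not the prototype’s EmguCV code: the image is a plain list of rows of gray values, pixels equal to T are treated as white (the text does not specify this case), and the early exit when the count overshoots the range is our addition, since raising T can only add black pixels. The function name `auto_threshold` is hypothetical.

```python
def auto_threshold(gray, t_start=21, black_range=(2000, 4000), t_max=255):
    """Raise T from t_start until the number of black pixels falls in black_range.

    gray: list of rows, each a list of gray intensities in 0..255.
    Returns (T, black_pixel_count, binary_image) or None if no T qualifies.
    """
    low, high = black_range
    for t in range(t_start, t_max + 1):
        # A pixel darker than T becomes black.
        black = sum(1 for row in gray for value in row if value < t)
        if low <= black <= high:
            binary = [[0 if value < t else 255 for value in row] for row in gray]
            return t, black, binary
        if black > high:
            break  # assumption: stop early, a larger T cannot reduce the count
    return None


# Synthetic 100 x 100 test frame: a dark disc (gray value 30) on a bright background (200).
gray = [
    [30 if (x - 50) ** 2 + (y - 50) ** 2 <= 31 ** 2 else 200 for x in range(100)]
    for y in range(100)
]
t, black, binary = auto_threshold(gray)
```

On this synthetic frame the loop stops at the first threshold above the disc’s gray value, once the disc’s pixels are counted as black and the count lies inside the default range.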

However, it is important to note that choosing a high value for the range’s maximum may increase the number of false black pixels attributed to the pupil. Choosing a small value for the range’s minimum may yield a small pupil area, thus providing an inaccurate pupil center. If a pupil area is found, the algorithm detects closed contours in the thresholded image (Figure 8). Using the algorithm of Fitzgibbon et al. (1999), the ellipse that best fits, in a least-squares sense, each contour found in the thresholded image is computed (step 4 of Figure 8), and its center is saved. The convex hull (Sklansky, 1982) of the points representing each contour is then found. The convex hull that has the highest isoperimetric quotient, i.e., the best circularity, is considered to be the one representing the pupil. Its corresponding best-fit ellipse center is stored as the pupil center for this frame.

Figure 8: Illustration of the pupil tracking and detection pipeline.
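The circularity criterion can be illustrated with a short Python sketch. The isoperimetric quotient of a closed shape with area A and perimeter P is Q = 4πA / P², which equals 1 for a circle and is smaller for any other shape. The sketch makes two simplifications that should be read as assumptions: the convex hull is computed with Andrew’s monotone chain rather than Sklansky’s algorithm, and the ellipse fitting step is omitted, so only the index of the most circular contour is returned. All function names are hypothetical.

```python
import math


def convex_hull(points):
    """Convex hull (Andrew's monotone chain), vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def isoperimetric_quotient(polygon):
    """Q = 4*pi*A / P^2 for a closed polygon given as a list of vertices."""
    n = len(polygon)
    if n < 3:
        return 0.0
    area2 = 0.0      # twice the signed area (shoelace formula)
    perimeter = 0.0
    for i in range(n):
        x0, y0 = polygon[i]
        x1, y1 = polygon[(i + 1) % n]
        area2 += x0 * y1 - x1 * y0
        perimeter += math.hypot(x1 - x0, y1 - y0)
    if perimeter == 0:
        return 0.0
    return 4 * math.pi * (abs(area2) / 2) / perimeter ** 2


def most_circular(contours):
    """Index of the contour whose convex hull has the highest isoperimetric quotient."""
    scores = [isoperimetric_quotient(convex_hull(c)) for c in contours]
    return max(range(len(contours)), key=scores.__getitem__)


# Toy contours: a square (with one interior point), a 32-gon approximating a circle,
# and a thin rectangle such as an eyelash-like artifact might produce.
square = [(0, 0), (2, 0), (2, 2), (0, 2), (1, 1)]
circle_like = [(5 + 3 * math.cos(2 * math.pi * k / 32), 5 + 3 * math.sin(2 * math.pi * k / 32))
               for k in range(32)]
thin_rect = [(0, 0), (10, 0), (10, 1), (0, 1)]

best = most_circular([square, circle_like, thin_rect])
```

In the full pipeline, the center of the ellipse fitted to the selected contour would then be kept as the pupil center for the frame.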

4 PILOT STUDY RESULTS

We investigated the perceived task load of the technique using a NASA-TLX questionnaire. Seven healthy participants (4 females) were recruited for the experiment. A 5-minute familiarization period was given to the participants so that they could become familiar with the interaction. The tasks were counterbalanced to reduce learning effects. The preliminary results indicate that, among the dimensions tested, Frustration received the lowest workload, as shown in Figure 9 ($\mu = 4.16$, $\sigma = 3.81$). The highest load was obtained for Effort ($\mu = 11.66$, $\sigma = 3.72$). During the individual interviews, participants explained that they reported a high effort load because this was their first attempt at smooth pursuit interaction. The remaining perceived loads were as follows: $\mu_{\text{Mental}} = 9.0$ ($\sigma_{\text{Mental}} = 4.33$), $\mu_{\text{Physical}} = 8.33$ ($\sigma_{\text{Physical}} = 6.15$), $\mu_{\text{Temporal}} = 8.83$ ($\sigma_{\text{Temporal}} = 4.21$), $\mu_{\text{Performance}} = 7.5$ ($\sigma_{\text{Performance}} = 4.41$). The effort could be reduced by changing different parameters of the algorithm, for example the size or the orientation of the targets. In addition, the speed could be adjusted for each user.

Figure 9: Results of the pilot study evaluation. Mean load index (axis from 0 to 15) for Effort, Frustration, Mental Demand, Performance, Physical Demand and Temporal Demand.

5 LIMITATIONS

Our approach suffers from commonly known issues. For example, lighting conditions, head poses and eyelashes reduce the accuracy of the pupil detection. Moreover, the digits must have an acceptable size; otherwise, the pupil center positions will appear to be static or unchanged. This problem may also occur when the user interface is displayed far from the user’s FOV. We tested our approach with 7 participants and, although the initial feedback is positive and encouraging, we plan to conduct an experiment in real-world scenarios and report the results in follow-up studies. As we improve the system, we are implementing algorithms and methods to detect the digits faster. In addition, the algorithm was only tested with users with full mobility. Additional evaluation will help to understand the effectiveness of the approach in real-world scenarios with subjects restricted in their motor skills.

6 CONCLUSION

In this paper, we presented a novel eye-based entry technique that uses smooth pursuit eye movement. A key point of this paradigm is that a selection implies that the user has followed a moving target, thus eliminating the Midas touch problem. Other applications may benefit from this interaction technique, for example entering a flight level in a virtual Air Traffic Control simulator. Future work will explore digit recognition time and investigate the potential of this method for alphanumeric characters.

REFERENCES

Almoctar, H., Irani, P., Peysakhovich, V., and Hurter, C.

(2018). PathWord: A multimodal password entry method for ad-hoc authentication based on digits’

shape and smooth pursuit eye movements. In Pro-

ceedings of the 20th ACM International Conference

on Multimodal Interaction, ICMI ’18, pages 268–277,

New York, NY, USA. ACM.

Chaconas, N. and Höllerer, T. (2018). An evaluation of bimanual gestures on the Microsoft HoloLens. In 2018

IEEE Conference on Virtual Reality and 3D User In-

terfaces (VR), pages 1–8.

Collewijn, H. and Tamminga, E. P. (1984). Human smooth

and saccadic eye movements during voluntary pursuit

of different target motions on different backgrounds.

The Journal of Physiology, 351(1):217–250.

Esteves, A., Velloso, E., Bulling, A., and Gellersen, H.

(2015). Orbits: Gaze interaction for smart watches

using smooth pursuit eye movements. In Proceedings

of the 28th Annual ACM Symposium on User Interface

Software Technology, UIST ’15, pages 457–466, New

York, NY, USA. ACM.

Feit, A. M., Williams, S., Toledo, A., Paradiso, A., Kulka-

rni, H., Kane, S., and Morris, M. R. (2017). Toward

everyday gaze input: Accuracy and precision of eye

tracking and implications for design. In Proceedings

of the 2017 CHI Conference on Human Factors in

Computing Systems, CHI ’17, pages 1118–1130, New

York, NY, USA. ACM.

Fitzgibbon, A., Pilu, M., and Fisher, R. B. (1999). Di-

rect least square fitting of ellipses. IEEE Transac-

tions on Pattern Analysis and Machine Intelligence,

21(5):476–480.

Hassoumi, A., Peysakhovich, V., and Hurter, C. (2018). Un-

certainty visualization of gaze estimation to support

operator-controlled calibration. Journal of Eye Move-

ment Research, 10(5).

Kassner, M., Patera, W., and Bulling, A. (2014). Pupil: An

open source platform for pervasive eye tracking and

mobile gaze-based interaction. In Proceedings of the

2014 ACM International Joint Conference on Perva-

sive and Ubiquitous Computing: Adjunct Publication,

UbiComp ’14 Adjunct, pages 1151–1160, New York,

NY, USA. ACM.

Kytö, M., Ens, B., Piumsomboon, T., Lee, G. A., and

Billinghurst, M. (2018). Pinpointing: Precise head-

and eye-based target selection for augmented reality.

In Proceedings of the 2018 CHI Conference on Hu-

man Factors in Computing Systems, CHI ’18, pages

81:1–81:14, New York, NY, USA. ACM.

Mistry, P., Ishii, K., Inami, M., and Igarashi, T. (2010).

Blinkbot: Look at, blink and move. In Adjunct Proceedings of the 23rd Annual ACM Symposium on

User Interface Software and Technology, UIST ’10,

pages 397–398, New York, NY, USA. ACM.

Mott, M. E., Williams, S., Wobbrock, J. O., and Morris,

M. R. (2017). Improving dwell-based gaze typing

with dynamic, cascading dwell times. In Proceed-

ings of the 2017 CHI Conference on Human Factors in

Computing Systems, CHI ’17, pages 2558–2570, New

York, NY, USA. ACM.

Piumsomboon, T., Clark, A., Billinghurst, M., and Cock-

burn, A. (2013). User-defined gestures for augmented

reality. In CHI ’13 Extended Abstracts on Human Fac-

tors in Computing Systems, CHI EA ’13, pages 955–

960, New York, NY, USA. ACM.

Santini, T., Fuhl, W., and Kasneci, E. (2017). Calibme:

Fast and unsupervised eye tracker calibration for gaze-

based pervasive human-computer interaction. In Pro-

ceedings of the 2017 CHI Conference on Human Fac-

tors in Computing Systems, CHI ’17, pages 2594–

2605, New York, NY, USA. ACM.

Sklansky, J. (1982). Finding the convex hull of a simple

polygon. Pattern Recogn. Lett., 1(2):79–83.

Velloso, E., Coutinho, F. L., Kurauchi, A., and Morimoto,

C. H. (2018). Circular orbits detection for gaze inter-

action using 2d correlation and profile matching algo-

rithms. In Proceedings of the 2018 ACM Symposium

on Eye Tracking Research & Applications, ETRA ’18,

pages 25:1–25:9, New York, NY, USA. ACM.

Vidal, M., Bulling, A., and Gellersen, H. (2013). Pur-

suits: spontaneous interaction with displays based on

smooth pursuit eye movement and moving targets. In

Proceedings of the 2013 ACM international joint con-

ference on Pervasive and ubiquitous computing, pages

439–448. ACM.

Zhang, X., Kulkarni, H., and Morris, M. R. (2017).

Smartphone-based gaze gesture communication for

people with motor disabilities. In Proceedings of the

2017 CHI Conference on Human Factors in Comput-

ing Systems, CHI ’17, pages 2878–2889, New York,

NY, USA. ACM.
