Specification of a UX Process Reference Model towards the Strategic Planning of UX Activities

Suzanne Kieffer¹, Luka Rukonic¹, Vincent Kervyn de Meerendré¹ and Jean Vanderdonckt²

¹ Institute for Language and Communication, Université Catholique de Louvain, Louvain-la-Neuve, Belgium

² Louvain Research Institute in Management and Organizations, Université Catholique de Louvain, Louvain-la-Neuve, Belgium

Keywords: User Experience (UX), UX Process Reference Model, UX Methods, UX Artifacts.

Abstract: In this conceptual paper, we present a UX process reference model (UXPRM), explain how it builds on the

related work and report our experience using it. The UXPRM includes a description of primary UX lifecycle

processes, and a classification of UX methods and artifacts. This work draws an accurate picture of UX base

practices and allows the reader to compare and select methods for different purposes. Building on that basis,

our future work consists of developing a UX Capability/Maturity Model (UXCMM) intended for UX activity

planning according to the organization’s UX capabilities. Ultimately, the UXCMM aims to facilitate the in-

tegration of UX processes in software engineering, which should contribute to reducing the gap between UX

research and UX practice.

1 INTRODUCTION

To date there is no consensual definition of User Ex-

perience (UX). While the origin of the term is gener-

ally attributed to Norman et al. (1995), the relevant

literature reports numerous perspectives on and defi-

nitions of UX (Hassenzahl, 2003, 2008; Hassenzahl

and Tractinsky, 2006; ISO 9241-210, 2008; Law et

al., 2009). The International Organization for Standardization (ISO) defines UX as “a person’s perceptions and responses that result from the use or anticipated use of a product, system or service” (ISO 9241-210, 2008). Law et al. (2009) surveyed the views of

275 UX researchers and practitioners on their under-

standing of UX and its key characteristics. Respond-

ents not only reported varying opinions about the na-

ture and scope of UX but they also expressed mixed

reactions to the ISO UX definition: according to respondents, although the definition integrates the aspects of subjectivity and usage well, the concepts of object (e.g. ‘product’) and context (e.g. social context and temporality) need clarification. A recent analysis of the ISO UX definition based on formal logic

illustrates similar inconsistencies and ambiguities in

its formulation and structure (Mirnig et al., 2015).

The lack of consensus on the definition of UX has

led to confusion over UX measurement and UX

evaluation methods. Whether UX measures should

integrate usability is a question that divides the UX

community (Law et al., 2008, 2014). As pointed out

by Bargas-Avila and Hornbæk (2011), UX research

has become dichotomic between those who focus on

the hedonic aspects of UX such as visual aesthetics,

beauty, joy of use or personal growth, and those who

focus on the pragmatic characteristics of the

interactive product such as usability, utility or safety.

The relevant Human-Computer Interaction (HCI)

literature reports two approaches for UX

measurement: either as a variation of the satisfaction

construct of usability within a ‘traditional’ HCI

approach focused on task-oriented, instrumental

goals (Bevan, 2008; Grandi et al., 2017; ISO 13407,

1999; Albert and Tullis, 2013) or as a set of hedonic

qualities different from usability within a ‘new paradigm’ in HCI focused on non-task-oriented, non-instrumental goals (Hassenzahl, 2003, 2008;

Hassenzahl and Tractinsky, 2006). Furthermore,

whether UX measurement should follow a qualitative

or a quantitative approach is another question that

divides the UX community. Bargas-Avila and

Hornbæk (2011) showed in their review of 66

empirical UX studies that 50% were qualitative, 33%

quantitative and 17% combined both approaches.

Lallemand et al. (2015) conducted a replication of the

survey of Law et al. (2009) amongst 758 practitioners


Kieffer, S., Rukonic, L., Kervyn de Meerendré, V. and Vanderdonckt, J.

Specification of a UX Process Reference Model towards the Strategic Planning of UX Activities.

DOI: 10.5220/0007693600740085

In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2019), pages 74-85

ISBN: 978-989-758-354-4

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

and researchers. The authors found no clear answer

on respondents’ attitude towards UX measurement

although they reported a higher preference for

qualitative approaches in industry, which seems to be

consistent with the UX trend depicted in (Bargas-

Avila and Hornbæk, 2011). Interestingly, despite the

aforementioned division between traditional and new

HCI paradigms, the UX community employs mostly

traditional HCI/usability evaluation methods such as

survey research, interview, observation and

experimentation (Bargas-Avila and Hornbæk, 2011;

Daae and Boks, 2015; Gray, 2016; Roedl and

Stolterman, 2013; Vermeeren et al., 2010).

The questionnaire is the prevailing technique supporting

UX data collection (Bargas-Avila and Hornbæk,

2011; Law et al., 2014; Venturi et al., 2006). The

questionnaires used are either validated (e.g.

AttrakDiff, Flow State Scales, Game Experience

Questionnaire, Self-assessment Manikin, CSUQ,

SUS) or self-developed. Bargas-Avila and Hornbæk

(2011) also report the emergence of constructive

methods such as probes, collage/drawings, or

photographs, and express concerns about the validity

of such new methods.

Nevertheless, from a buzzword in the late 1990s,

UX has become a core concept of HCI, leading to the

proliferation of UX methods intended to support and

improve both UX activities and system development

(Venturi et al., 2006). Yet, the relevant literature

consistently highlights contrasting perspectives on

UX methods between academia and industry

(Lallemand et al., 2015; Law et al., 2009, 2014).

While academia mainly focuses on the development and testing of new UX methods, industry documents recommendations for their use in industrial contexts, promoting design thinking as a strategy for innovation. Gray (2016) interviewed 13

UX practitioners about their use of UX methods.

Participants reported adapting and combining UX

methods according to the design situation, revealing

a UX practice that is ad hoc rather than based on

codified, deterministic procedures. According to

earlier findings (Roedl and Stolterman, 2013), this

pattern in UX practice results from issues with

research outputs such as the over-generalization of

design situations, the disregard for the complexity of

group decision-making or for time and resource

constraints at the workplace.

In this conceptual paper, we present a UX process

reference model (UXPRM), explain how it builds on

the related work and report our experience using it.

The UXPRM includes a description of primary UX

lifecycle processes, and a classification of UX

methods and artifacts. This work draws an accurate

picture of UX base practices and allows the reader to

compare and select methods for different purposes.

Building on that basis, our future work consists of

developing a UX Capability/Maturity Model

(UXCMM) intended for UX activity planning

according to the organization’s UX capabilities.

Ultimately, the UXCMM aims to facilitate the

integration of UX processes in software engineering,

which should contribute to reducing the gap between

UX research and UX practice.

2 RELATED WORK

In this section, we define the concept of process ref-

erence model and discuss three methodologies related

to UX practice: Usability Engineering (UE), User-

Centered Design (UCD) and Agile User-Centered

Design Integration (AUCDI). We have selected these

three methodologies as they involve UCD methods

articulated across a lifecycle, which fits the definition

of UX of Law et al. (2009): “UX must be part of HCI

and grounded in UCD practice”.

2.1 Process Reference Model

A process reference model describes a set of pro-

cesses and their interrelations within a process lifecy-

cle (ISO 15504-1, 2004, 2012). The description of

each process includes its objectives and its outcomes.

Outcomes, also referred to as work products, are the

artifacts associated with the execution of a process.

Process reference models are refined into base prac-

tices that contribute to the production of work prod-

ucts (ISO 15504-1, 2012). A primary process is a

group of processes that belong to the same category

and are associated with the same objectives. Usually,

a process reference model is associated with a process

assessment model, which is a measurement structure

for the assessment of the capability or performance of

organizations to implement processes (ISO 15504-1,

2004, 2012). Together, a process reference model and

a process assessment model constitute a capability/maturity model (CMM). Typically, CMMs include five maturity levels that describe the level of

maturity of a process: initial (level 1), repeatable

(level 2), defined (level 3), managed (level 4) and op-

timized (level 5). The purpose of such models is to

support organizations moving from lower to higher

maturity levels. In a CMM, both base practices and

work products serve as indicators of the capabil-

ity/maturity of processes.
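To make the vocabulary above concrete, the following minimal sketch represents a process reference model as a data structure. It is an illustration under stated assumptions, not part of ISO 15504: the class names, field names and the `next_level` helper are hypothetical and chosen only to mirror the terms used in this section (processes, objectives, base practices, work products, interrelations and the five maturity levels).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import IntEnum


class MaturityLevel(IntEnum):
    """The five maturity levels named in the text."""
    INITIAL = 1
    REPEATABLE = 2
    DEFINED = 3
    MANAGED = 4
    OPTIMIZED = 5


@dataclass
class Process:
    """A process described by its objectives, base practices and work products."""
    name: str
    objectives: list[str]
    base_practices: list[str] = field(default_factory=list)
    work_products: list[str] = field(default_factory=list)


@dataclass
class ProcessReferenceModel:
    """A set of processes and their interrelations within a lifecycle."""
    processes: dict[str, Process] = field(default_factory=dict)
    # Directed edges: (source process name, target process name).
    interrelations: list[tuple[str, str]] = field(default_factory=list)

    def add(self, process: Process) -> None:
        self.processes[process.name] = process

    def relate(self, source: str, target: str) -> None:
        if source not in self.processes or target not in self.processes:
            raise KeyError("both processes must be registered first")
        self.interrelations.append((source, target))

    def indicators(self, name: str) -> list[str]:
        """Base practices and work products serve as capability indicators."""
        process = self.processes[name]
        return process.base_practices + process.work_products


def next_level(level: MaturityLevel) -> MaturityLevel:
    """Return the next maturity level, staying at OPTIMIZED once reached."""
    return MaturityLevel(min(level + 1, MaturityLevel.OPTIMIZED))
```

In this sketch, an assessment model would be a separate component that reads `indicators` and assigns a `MaturityLevel`; that part is deliberately left out, since this paper specifies only the reference model.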

For the record, this conceptual paper focuses on

the specification of a UX process reference model and


not on that of a UX process assessment model. To

date, there is, to the best of our knowledge, no process

reference model for the UX process. Lacerda and

Gresse van Wangenheim (2016) recently conducted a

systematic literature review of usability capability/maturity models. Out of the 15 relevant models they identified, five were UXCMMs. None of the five UXCMMs explicitly defined a UXPRM.

2.2 Usability Engineering

UE is a set of activities that take place throughout a

product lifecycle and focus on assessing and improv-

ing the usability of interactive systems (Mayhew,

1999; Nielsen, 1993). There are small differences between Mayhew’s and Nielsen’s product lifecycles. Mayhew groups the methods into three phases: requirements analysis; design, development, testing; installation. Nielsen advocates 11 stages in the UE lifecycle

ranging from the achievement of process objectives

(e.g. know the user or collect feedback from field use)

to the use of methods (e.g. prototyping or empirical

testing). Yet, both authors argue for conducting anal-

ysis activities as early as possible in the UE lifecycle,

before design activities, in order to specify User Requirements (UR). In line with this recommendation, additional references demonstrate the significance of such early-stage activities (Bias and Mayhew, 2005; Force, 2011).

2.3 User-Centered Design

Also referred to as Human-Centered Design (HCD),

UCD aims to develop systems with high usability by

incorporating the user’s perspective into the software

development process (Jokela, 2002). There are five

processes in the UCD life cycle: plan UCD process,

understand and specify context of use, specify user

and organizational requirements, produce designs and

prototypes, and carry out user-based assessment (ISO

13407, 1999). The specification of User Require-

ments (UR) is critical to the success of interactive sys-

tems and is refined iteratively throughout the lifecy-

cle: most work products and findings from the five

UCD processes directly feed into the UR specifica-

tion (Maguire, 2001; Maguire and Bevan, 2002).

Many business and industrial sectors such as telecom-

munications, financial services, education or

healthcare have adopted UCD (Venturi et al., 2006).

Regarding the healthcare sector, a Healthcare Infor-

mation and Management Systems Society taskforce

developed a Health Usability Maturity Model (Force,

2011).

2.4 Agile User-Centered Design

Also referred to as User-Centered Agile Software De-

velopment (UCASD), AUCDI is concerned with the

integration of UCD/usability into agile software de-

velopment methods. Agile UCD is different from

non-agile UCD. Begnum and Thorkildsen (2015)

compared agile versus non-agile UCD and found systematic differences in methodological practices between the two approaches in terms of breadth of methods used, degree of user contact and type of strategies

employed. The scientific consensus on AUCDI re-

ported in two recent, independent studies (Brhel et al.,

2015; Salah et al., 2014) is the following: UCD and

agile activities should be iterative and incremental,

organized in parallel tracks, and continuously involve

users. Both studies also report two main challenges

associated with AUCDI: the lack of time for carrying

out upfront UCD activities such as user research or

design, and the difficulty of optimizing the work dynamics between developers and UCD practitioners. Regarding the first challenge, da Silva et al. (2015) also

noticed that it is difficult for agile organizations to

perform usability testing due to the tight schedules

and the iterative nature inherent to agile. Regarding

the second challenge, Garcia et al. (2017) identified a

series of artifacts that can serve as facilitators in com-

munication between developers and designers. These

artifacts are prototypes, user stories and cards.

The first published works analyzing the possible

benefits associated with AUCDI appeared in the late

2000s. Since then, the number of publications about

AUCDI has steadily increased demonstrating a strong

interest of the agile community in this research topic

(Brhel et al., 2015). Several models have been pro-

posed for supporting the management of the AUCDI

process (Forbrig and Herczeg, 2015; Losada et al.,

2013).

In line with the aforementioned paradigm shift

from usability to UX, Peres et al. (2014) proposed a

reference model for integrating UX in agile method-

ologies in small companies willing to achieve level 2

maturity. The proposed model includes practices, rec-

ommendations, and UX techniques and artifacts in

four process areas: requirements management; pro-

ject planning; process and product quality assurance;

measuring and assessment. However, the proposed

model does not include any lifecycle describing the

interrelations between the four process areas, the

terms UX and usability are used in an interchangeable

way in the recommendations and base practices sec-

tions, and the suggested UX techniques and artifacts

are exclusively traditional HCI ones.

HUCAPP 2019 - 3rd International Conference on Human Computer Interaction Theory and Applications


2.5 Product Development Lifecycles

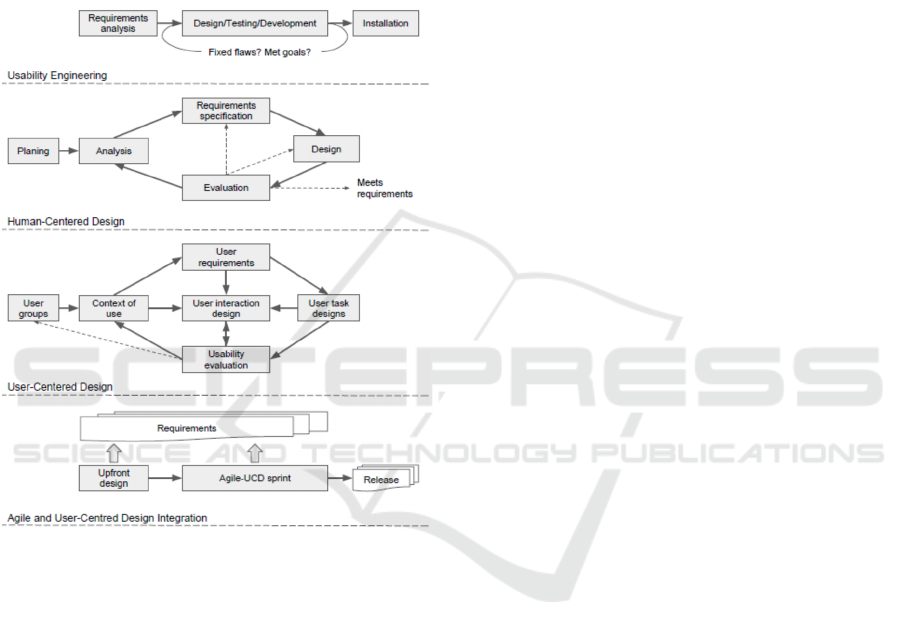

Figure 1 compares the product development lifecycles found in the related work, namely

(Mayhew, 1999; Nielsen, 1993) for UE, (ISO 13407,

1999; Maguire, 2001; Maguire and Bevan, 2002) for

HCD, (Jokela, 2002) for UCD, and (Begnum and

Thorkildsen, 2015; Forbrig and Herczeg, 2015; Salah

et al., 2014) for AUCDI.

Figure 1: Comparison between the lifecycles found in the

related work. Gray rectangles represent primary processes,

black arrows their temporal interrelations, and gray arrows

show how primary processes feed into process outcomes,

i.e., multilayered white rectangles.

As can be seen from Figure 1, product development

lifecycles are very similar:

- They all are iterative (see for example the UE lifecycle where Design/Testing/Development is an iterative process or the AUCDI lifecycle where the Agile-UCD sprint is also iterative);

- They use a similar terminology: requirements, analysis, design, testing or evaluation;

- Except for the AUCDI lifecycle, they follow a similar sequence of processes: analysis, design and evaluation.

The main difference between these product devel-

opment lifecycles lies in the perspective on the re-

quirements. Requirements correspond to a primary

process in UE (see requirement analysis), HCD (see

requirements specification) and in UCD (see user re-

quirements). By contrast, requirements correspond in

AUCDI to a process outcome fed throughout the de-

velopment lifecycle by the primary processes.

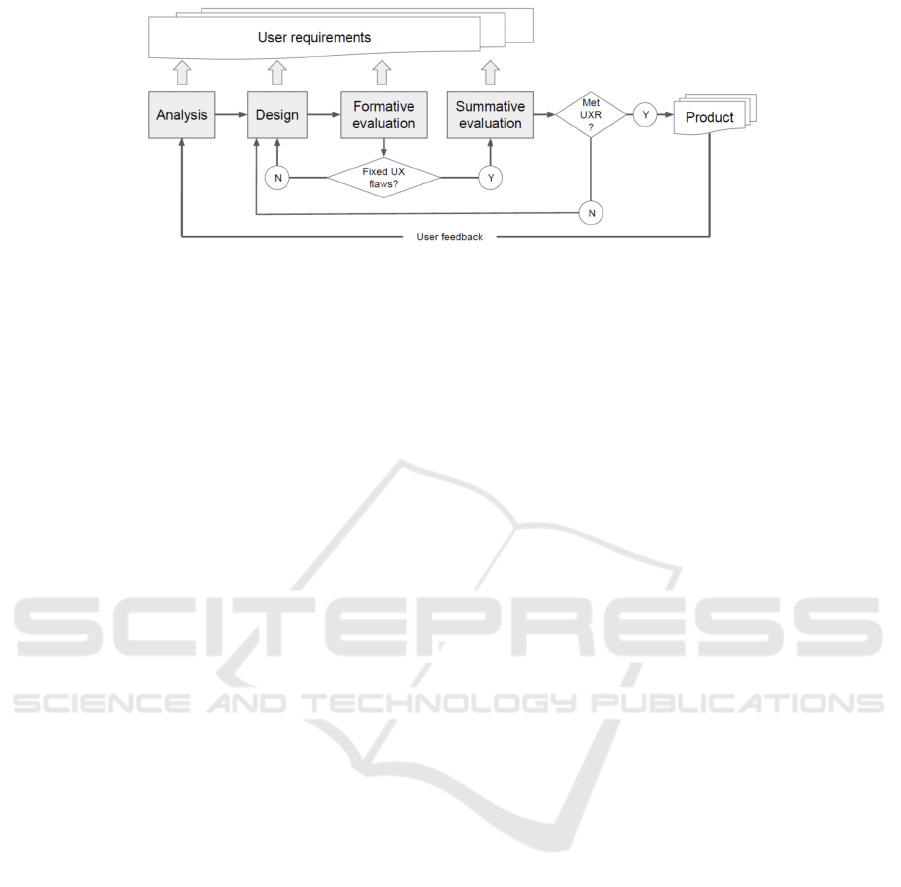

3 PROPOSED UX LIFECYCLE AND PRIMARY PROCESSES

Figure 2 depicts the proposed UX lifecycle and its pri-

mary processes. Based on the related work, the pro-

posed UX lifecycle is iterative and includes four pri-

mary processes (analysis, design, formative and sum-

mative evaluation) and produces two outcomes (user

requirements and product). We chose the names of the primary processes and outcomes according to their frequency in the related work. We aligned the four primary processes with the sequence (analysis, design

and evaluation) identified in the related work.

3.1 Analysis

The analysis process primarily aims to render a first

account of the UR. The objectives of this process are

to specify the context of use, to gather and analyze

information about the user needs, and to define UX

goals. Maguire (2001) proposes a set of five elements

to specify the context of use: user group, tasks, tech-

nical, physical and organizational environment. The

analysis of user needs consists of defining which key

functionalities users need to achieve their UX goals.

UX goals include pragmatic goals (success rate, exe-

cution time or pragmatic satisfaction) and hedonic

goals (pleasure, aesthetic or hedonic satisfaction)

(Bevan, 2008). The success of this process relies on

the early involvement of users, as it improves the

completeness and accuracy of UR specification (Bai-

ley et al., 2006).

3.2 Design

The design process primarily aims to turn design

ideas into testable prototypes. The objective of this

process is to provide the software development team

with a model to follow during coding.


Figure 2: Primary UX lifecycle processes.

This model includes Information Architecture (IA)

design, Interaction Design (IxD), User Interface (UI)

design, visual and graphic design. Calvary et al.

(2003) recommend modeling the UI incrementally

according to three levels of abstraction (abstract, con-

crete and final), which correspond to similar levels

recommended by Mayhew (1999) (conceptual model

design, screen design standards and detailed UI). An-

other approach consists of reasoning according to the

level of fidelity (low, medium and high) of prototypes

(Lim et al., 2008; McCurdy et al., 2006; Walker et al.,

2002). At the end of the design process, work prod-

ucts such as conceptual models or screen design

standards directly feed into UR, while testable proto-

types become inputs of evaluation.

3.3 Evaluation

The evaluation process primarily aims to check

whether the design solution meets the UX goals doc-

umented in the UR. The objective of this process is to

measure the UX with the testable prototype and to

compare results against UX goals. The evaluation of

earlier design solutions relies on formative evalua-

tion, which refers to the iterative improvement of the

design solutions. On the other hand, the evaluation of

later design solutions typically involves summative

evaluation, which refers to finding out whether peo-

ple can use the product successfully. Together, form-

ative and summative evaluation form the evaluation

process. At the end of the evaluation process, design

solutions documented in the UR are updated, while

low- or high-fidelity prototypes become inputs of

coding/programming if they meet the UX goals doc-

umented in the UR.

3.4 Iterative and Incremental Release of Product

Design and evaluation are intertwined within an iter-

ative and incremental test-and-refine process that

aims to improve the product. While formative evalu-

ation supports the detection of UX design flaws, the

design process supports the production of redesign

solutions that fix those UX flaws. The product devel-

opment team repeats this cycle until UX flaws are

fixed. Once they are fixed, the redesigned solution

passes through the summative evaluation process to

check whether users can use the product successfully

before programming. The relevant literature (Calvary

et al., 2003; Forbrig and Herczeg, 2015; Holtzblatt et

al., 2004; Mayhew, 1999; Peres et al., 2014) is con-

sistent regarding this iterative and incremental aspect

of the design process. In addition, formative evalua-

tion requires low investment in resources and effort,

which efficiently supports decision-making throughout the design process and significantly helps reduce late design changes (Albert and Tullis, 2013; Arnowitz et al., 2010; Bias and Mayhew, 2005; Mayhew, 1999; Nielsen, 1993).
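The test-and-refine cycle described in this section can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not a prescribed procedure: the function name `refine_until_usable`, its callback parameters and the `max_iterations` guard are hypothetical, and a prototype is represented by an opaque string purely for the sake of the example.

```python
from typing import Callable, List


def refine_until_usable(
    prototype: str,
    find_flaws: Callable[[str], List[str]],
    redesign: Callable[[str, List[str]], str],
    summative_ok: Callable[[str], bool],
    max_iterations: int = 10,
) -> str:
    """Alternate formative evaluation and redesign, then run summative evaluation."""
    flaws = find_flaws(prototype)               # formative evaluation
    iterations = 0
    while flaws:
        if iterations == max_iterations:
            raise RuntimeError("UX flaws remain after max_iterations")
        prototype = redesign(prototype, flaws)  # design process
        flaws = find_flaws(prototype)           # formative evaluation again
        iterations += 1
    # Summative evaluation only happens once the known flaws are fixed.
    if not summative_ok(prototype):
        raise RuntimeError("summative evaluation failed; return to design")
    return prototype
```

The iteration cap is an assumption added for safety in code; the text itself only states that the team repeats the cycle until UX flaws are fixed.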

3.5 Iterative and Incremental Specification of UR

The cornerstone of the proposed UX lifecycle is the

iterative and incremental specification of UR. As can

be seen from Figure 2, the outcomes of each of the

four processes directly feed into UR. The work prod-

ucts resulting from the analysis process (typically,

summary information learned) document a first ver-

sion of the UR, which is later completed and/or re-

fined as the other process areas take place. In other

words, the specification of UR consists of concatenat-

ing UX work products and artifacts delivered and re-

fined by the product development team throughout

the UX lifecycle. The UR typically include the fol-

lowing sections: the specification of the context of

use, the specification of UX goals, the general design

principles, the screen design standards and strategies

for the prevention of user errors.
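As a rough illustration of this incremental specification, the sketch below accumulates work products into the UR sections listed above as each process delivers them. The class `UserRequirements`, its methods and the short section labels are hypothetical names introduced for this example; the section list and the four processes are taken from the text.

```python
UR_SECTIONS = (
    "context of use",
    "UX goals",
    "general design principles",
    "screen design standards",
    "prevention of user errors",
)
PROCESSES = ("analysis", "design", "formative evaluation", "summative evaluation")


class UserRequirements:
    """Accumulates work products into UR sections across iterations."""

    def __init__(self) -> None:
        self.sections = {name: [] for name in UR_SECTIONS}
        self.log = []  # (process, section, work product), in order of arrival

    def feed(self, process: str, section: str, work_product: str) -> None:
        """Record a work product delivered or refined by one of the processes."""
        if process not in PROCESSES:
            raise ValueError(f"unknown process: {process}")
        if section not in self.sections:
            raise ValueError(f"unknown UR section: {section}")
        # A refined work product is logged again but stored only once.
        if work_product not in self.sections[section]:
            self.sections[section].append(work_product)
        self.log.append((process, section, work_product))

    def missing_sections(self) -> list:
        """Sections that no process has fed yet."""
        return [name for name, items in self.sections.items() if not items]
```

A call such as `ur.feed("analysis", "context of use", "user group profile")` would document a first version of the UR, and `missing_sections()` would then show what later processes still need to complete.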


Table 1: References collected through the TLR.

| Field or discipline | Books, proceedings, technical reports | Papers |
| --- | --- | --- |
| Agile and AUCDI | (Patton and Economy, 2014) | (Brhel et al., 2015; da Silva et al., 2015; Garcia et al., 2017; Wautelet et al., 2016) |
| Cognitive science and psychology | (Crandall et al., 2006; Fowler Jr, 2013; Hutton et al., 1997; Lavrakas, 2008) | (Cooke, 1994; Trull and Ebner-Priemer, 2013) |
| HCI | (Albert and Tullis, 2013; Arnowitz et al., 2010; Bailey et al., 2006; Card et al., 1983; Carter and Mankoff, 2005; Ghaoui, 2005; Holtzblatt et al., 2004; Mayhew, 1999; McCurdy et al., 2006; Nielsen, 1993; Theofanos, 2007) | (Calvary et al., 2003; Grandi et al., 2017; Khan et al., 2008; Lim et al., 2008; Mackay et al., 2000; Maguire, 2001; Maguire and Bevan, 2002; Markopoulos, 1992; Rieman, 1993; Tsai, 1996; Vanderdonckt, 2008, 2014; Walker et al., 2002) |
| UX | (Law et al., 2008, 2007) | (Bargas-Avila and Hornbæk, 2011; Bevan, 2008; Law et al., 2014; Vermeeren et al., 2010) |

4 SUPPORTING UX METHODS AND ARTIFACTS

4.1 Identification

To identify the supporting UX methods, we ran a Tar-

geted Literature Review (TLR) instead of conducting a

Systematic Literature Review (SLR). An SLR usually aims to address a predefined research question by collecting, extensively and completely, all the references related to this question, using absolute inclusion and exclusion criteria. Inclusion criteria retain references that fall in scope of the research question, while exclusion criteria reject irrelevant or non-rigorous references. The TLR, which is a non-systematic, in-depth and informative literature review, is expected to retain only the most rigorous references while minimizing selection bias.

We chose this method for the following four reasons:

1. Translating our research question into a representative syntactical query to be applied on digital libraries is not straightforward and may lead to many irrelevant references (Mallett et al., 2012);

2. If applied, such a query may result in a very large set of references that actually use a UX method, but which do not define any UX method or contribution to such a method;

3. The set of relevant references is quite limited and stems from a knowledgeable selection of high-quality, easy-to-identify references on UX methods, as opposed to an all-encompassing list of irrelevant references;

4. TLR is better suited to describing and understanding UX methods one by one, comparing them, and understanding the trends of the state of the art.

The TLR allowed us to collect 41 references listed

in Table 1 and the following on-line resources: http://www.allaboutUX.org, http://www.nngroup.com and

http://UXpa.org.

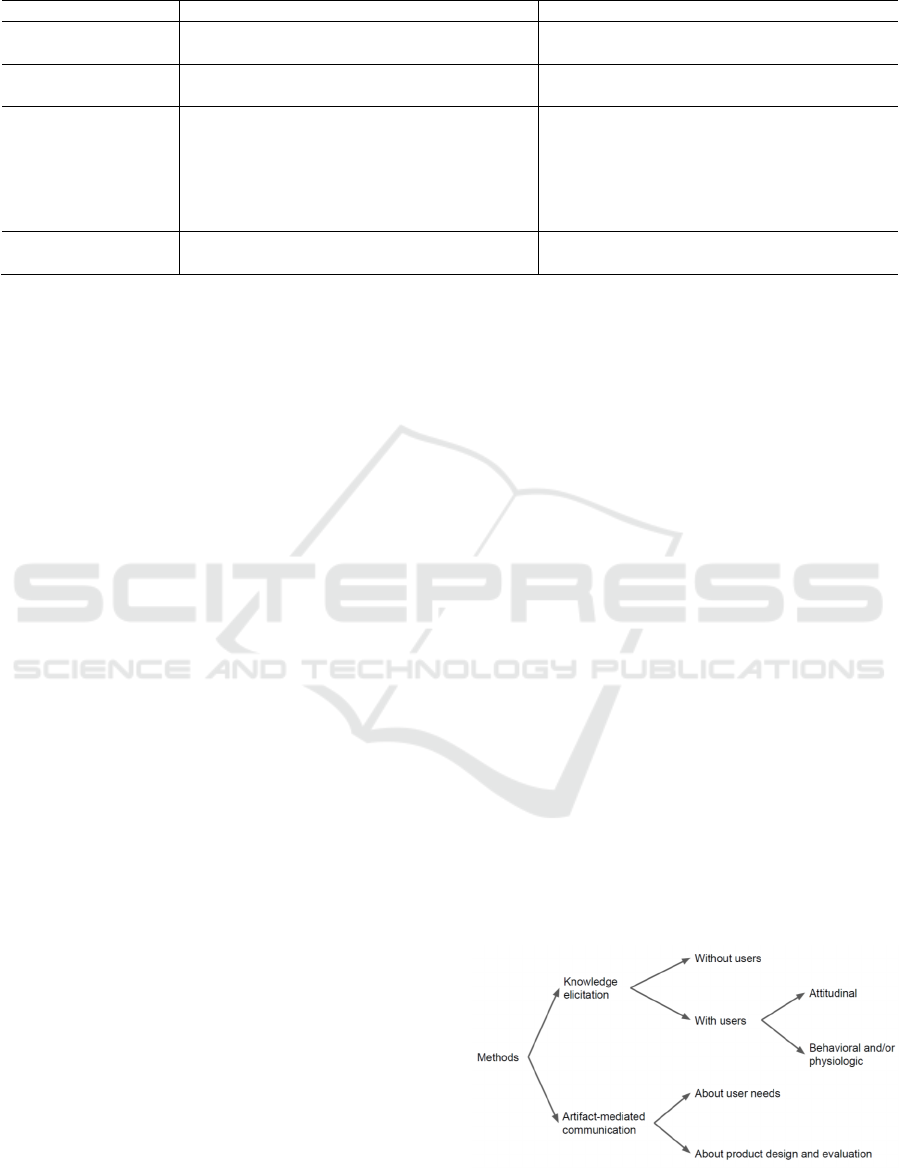

4.2 Classification

To classify UX methods (Figure 3), we first distin-

guished between methods that focus on knowledge

elicitation and methods that focus on artifact-medi-

ated communication, as they serve a different pur-

pose. Knowledge elicitation methods aim to describe

and document knowledge (Cooke, 1994) while arti-

fact-mediated communication methods aim to facili-

tate the communication and collaboration between

stakeholders (Brhel et al., 2015; Garcia et al., 2017).

Then, within knowledge elicitation methods, we dis-

tinguished between those involving users versus

those not involving users, as they also differ in terms

of purpose and planning. Methods not involving users

(Table 2) aim to predict the use of a system. These

methods do not involve user data collection; instead,

they rely on the opinion or expertise of an expert.

Methods involving users aim to incorporate the user’s

perspective into software development and as such,

rely on user data collection.

Figure 3: Classification of UX methods.


Table 2: Knowledge elicitation methods not involving users.

| Method | Techniques | Objectives | UX activities |
| --- | --- | --- | --- |
| GOMS | GOMS CMN-GOMS, CPM-GOMS, NGOMSL, Keystroke-Level Model | to produce quantitative and qualitative predictions of how people will use a proposed system | UX evaluation |
| hierarchical task analysis | hierarchical task analysis | to identify the cognitive skills, or mental demands, needed to perform a task proficiently | cognitive task analysis |
| inspection | cognitive walkthrough; design or expert review; heuristic evaluation | to predict the learnability of a system; to predict usability and UX problems | UX evaluation |
| literature review | (systematic) literature review; systematic mapping | to locate, analyze, synthetize relevant published and/or unpublished work about a topic; to understand the current thinking and the state of the marketplace about a topic | context-of-use, stakeholder analysis; user research |

The methods for user data collection include:

- Attitudinal methods (Table 3) focused on capturing self-reported data about how users feel;

- Behavioral methods (Table 4) focused on capturing data about/measuring what users do and/or user physiologic state.

We distinguished artifact-mediated methods fo-

cused on communicating about user needs (Table 5)

from those focused on communicating about product

design and evaluation (Table 6).
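The classification can be read as a small decision tree with five leaves, one per table. The sketch below encodes that tree; it is an illustration only, and the enum `MethodClass`, the function `classify` and its boolean parameters are hypothetical names introduced here rather than terms from the classification itself.

```python
from enum import Enum


class MethodClass(Enum):
    """The five leaves of the classification, keyed by the table that lists them."""
    WITHOUT_USERS = "Table 2"
    ATTITUDINAL = "Table 3"
    BEHAVIORAL = "Table 4"
    ABOUT_USER_NEEDS = "Table 5"
    ABOUT_DESIGN_AND_EVALUATION = "Table 6"


def classify(
    elicits_knowledge: bool,
    involves_users: bool = False,
    self_reported: bool = False,
    about_user_needs: bool = False,
) -> MethodClass:
    """Walk the classification tree from purpose down to a leaf class."""
    if elicits_knowledge:
        # Knowledge elicitation: split on user involvement first.
        if not involves_users:
            return MethodClass.WITHOUT_USERS
        # With users: self-reported data is attitudinal, otherwise behavioral.
        return MethodClass.ATTITUDINAL if self_reported else MethodClass.BEHAVIORAL
    # Artifact-mediated communication: split on what is communicated.
    if about_user_needs:
        return MethodClass.ABOUT_USER_NEEDS
    return MethodClass.ABOUT_DESIGN_AND_EVALUATION
```

For instance, inspection (no users) maps to `WITHOUT_USERS`, a survey (self-reported user data) to `ATTITUDINAL`, and observation (what users do) to `BEHAVIORAL`.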

4.3 Knowledge Elicitation Methods

Tables 2-4 include four columns: the identification of

the method, the related techniques used as base prac-

tice for carrying out the method, the objectives of the

method, and the related UX activities. To feed these

tables, we adopted a bottom-up approach:

1. We extracted UX methods and techniques from the resources identified during the TLR;

2. We described each technique in terms of related methods, objectives and UX activities;

3. We grouped the techniques into categories according to the description of their objectives;

4. We removed duplicates;

5. We labeled each technique category with the name of the method it relates to in (Cooke, 1994; Gvero, 2013; Albert and Tullis, 2013; Vermeeren et al., 2010) and then compared the names resulting from this first round against the remainder of the methods identified in step 1 to check for and fix inconsistencies;

6. We assigned each method a class amongst without users, attitudinal, behavioral and/or physiologic.

To distinguish between methods and techniques,

we complied with the hierarchical arrangement be-

tween approach, method and technique defined in

(Anthony, 1963): “The organizational key is that

techniques carry out a method which is consistent

with an approach”. For example, heuristic evaluation and expert review are techniques to carry out the inspection method, while brainstorming and focus group are

techniques to carry out the group interview method.
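The grouping and de-duplication steps of this bottom-up approach can be approximated in a few lines. The sketch below is a simplification under stated assumptions: the function `group_techniques` is hypothetical, duplicates are detected by case-insensitive name matching (the actual procedure compared descriptions of objectives), and the technique-to-method mapping is supplied by hand, as it was derived manually from the literature.

```python
from collections import defaultdict


def group_techniques(extracted, method_of):
    """Group extracted technique names under their method and drop duplicates.

    extracted: technique names as found in the sources, possibly repeated.
    method_of: mapping from normalized technique name to method name.
    Returns (methods, unlabeled): a dict of method -> sorted unique techniques,
    and a sorted list of techniques with no method yet (to be checked by hand).
    """
    methods = defaultdict(set)
    unlabeled = set()
    for technique in extracted:
        key = technique.strip().lower()  # assumption: name-based de-duplication
        method = method_of.get(key)
        if method is None:
            unlabeled.add(key)
        else:
            methods[method].add(key)
    return {m: sorted(t) for m, t in methods.items()}, sorted(unlabeled)
```

With the examples given above, `heuristic evaluation` and `expert review` would fall under `inspection`, and `brainstorming` and `focus group` under `group interview`; anything left in `unlabeled` corresponds to the inconsistencies that were checked and fixed manually.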

4.4 Artifact-Mediated Communication Methods

Tables 5-6 include three columns: the identification of

the artifact, the objectives of the artifact, and the re-

lated UX activities. To feed these tables, we adopted

a bottom-up approach:

1. We extracted UX artifacts from (Bargas-Avila and Hornbæk, 2011; Garcia et al., 2017; Holtzblatt et al., 2004; Mayhew, 1999) as they are representative of the contrasting perspectives on UX of the relevant communities;

2. We described each artifact in terms of its related objectives and UX activities;

3. We removed duplicates;

4. We checked for and fixed inconsistencies with the remainder of the TLR literature;

5. We assigned each artifact a class: either about user needs or about product design and evaluation.

Tables 5-6 do not include any column for the methods, as artifact-mediated communication methods go by the name of their resulting artifact (e.g. persona is the artifact resulting from the method entitled "creating personas").


Table 3: Attitudinal methods.

| Method | Techniques | Objectives | UX activities |
| --- | --- | --- | --- |
| cards | cards; emocards; emotion cards | to identify user mood and reactions about their interaction with a system | UX evaluation |
| experience sampling | daily or repeated-entry diary | to identify user thoughts, feelings, behaviors, and/or environment on multiple occasions over time | job/task analysis; contextual inquiry; user research; UX evaluation |
| group interview | brainstorming; group discussion; focus group; questionnaire | to identify users and stakeholders who may be impacted by the system; to improve existing ideas or generate new ideas | context-of-use analysis; job analysis; stakeholder analysis; user research |
| prospective interview | contextual, in person or remote interview; questionnaire; role-play; twenty questions | to identify key users, user characteristics, user goals, user needs; to identify user behavior; to improve existing ideas or generate new ideas | job/task analysis; contextual inquiry; user research; UX evaluation |
| retrospective interview | cognitive or elicitation interview | to gain insights into particular aspects of cognitive performance during user past experience with a system | cognitive task analysis; contextual inquiry; UX evaluation |
| survey | interview; questionnaire | to assess thoughts, opinions, and feelings of a sample population about a system | user research; UX evaluation |
| think-aloud | co-discovery; talk-aloud protocol; (retrospective) think-aloud protocol | to gain insights into the participant’s cognitive processes (rather than only their final product); to make thought processes as explicit as possible during task performance | job/task analysis; contextual inquiry; user research; UX evaluation |

Table 4: Behavioral methods.

| Method | Techniques | Objectives | UX activities |
|---|---|---|---|
| automated experience sampling | automated interaction logs | to gain insights into the user experience with a system based on automatic logging of user actions | job/task analysis; contextual inquiry; user research; UX evaluation |
| constructive | collage/drawings; photographs; probes | to identify unexpected uses of a system or concept | formative UX evaluation |
| experiment | A/B testing; controlled experiment; remote experiment | to support, refute, or validate a hypothesis about sample population, task, system; to establish cause-and-effect relationships | job/task analysis; user research; UX evaluation |
| instrument-based experiment | experiment with calibrated instrument (biometrics, eye tracker, sensors, etc.) | to gain insights into user behavioral, emotional and physiologic responses with a system (e.g. gaze, happiness, stress, etc.) | cognitive task analysis; UX evaluation |
| observation | field observation; systemic observation (from afar) | to identify how users perform tasks or solve problems in their natural setting | contextual inquiry; user research; UX evaluation |
| simulation | paper-and-pencil evaluation; Wizard of Oz experiment | to detect UX problems; to identify the use and effectiveness of a system which has not been implemented yet | formative UX evaluation |


Table 5: Artifact-mediated communication methods about user needs.

| Artifact | Objectives | UX activities |
|---|---|---|
| customer journey map | to depict key interactions users have with the system over time (i.e., touchpoints); to map touchpoints with user thoughts, feelings and emotional responses | specification of the context of use |
| service blueprint | to depict relationships between different service components (front-end, back-end and organizational processes) that are directly tied to touchpoints in a specific customer journey | specification of the context of use |
| persona | to depict key user profiles (personality, roles, goals and motivations, frustrations, etc.) | specification of the context of use |
| work model | to depict the current work organization of users; to depict intents, triggers, breakdowns in the tasks (problems, errors and workarounds) | specification of the context of use |
| UX goals | to establish specific qualitative and quantitative UX goals that will drive UX design | UX goals setting |

Table 6: Artifact-mediated communication methods about product design and evaluation.

| Artifact | Objectives | UX activities |
|---|---|---|
| affinity diagram | to organize and cluster user data (typically from contextual inquiry or brainstorming) based on their natural relationships | design ideation |
| concept map | to organize and explain relationships between concepts and ideas from knowledge elicitation | design ideation |
| card sort: closed or open card sort | to organize and label topics into categories that make sense to users | IA design; UX evaluation |
| user scenario: full-scale or task-based scenarios | to describe how users achieve their goals with the system, identifying various possibilities and potential barriers | UX design; UX evaluation |
| user story and epic | to capture a description of a software feature from the user’s perspective | functional requirements |
| task model | to describe the tasks that the user and the system carry out to achieve user goals; to review relationships between tasks | UX design |
| low-fidelity prototype: paper, sketch, wireframe or video | to turn design ideas into testable mock-ups; to test-and-refine design ideas; to fix UX problems early in the product lifecycle | UX design; formative UX testing |
| high-fidelity prototype: coded, wireframe or WOz | to turn mockups into highly-functional and interactive prototypes; to evaluate how well the prototype meets UX requirements | summative UX testing |
| general design principles: Gestalt theory, visual techniques, guidelines and standards | to arrange screens in such a way that they are aesthetic and consistent and communicate ideas clearly (color schemes; fonts; interactors; semiotics) | graphic and/or visual design |

5 USE OF THE UXPRM

We currently use the UX process reference model (UXPRM) to plan UX activities in two industrial projects. Our mission in each project is to support the integration of UX practice in an organization whose core business lies in the energy sector (Project 1) or the automotive sector (Project 2). Both organizations use an agile approach to software development.

In both projects, we use the UXPRM in two ways. On the one hand, we use the proposed UX lifecycle to communicate about primary UX lifecycle processes, especially to advocate for the integration of analysis activities as early as possible in the product development lifecycle.

On the other hand, we use the classifications of UX methods and artifacts to roughly assess the UX capabilities of our industrial partners. In particular, we use Tables 2-6 as an interview guide or checklist during semi-structured interviews to identify the UX methods consistently employed, and the artifacts consistently delivered, by the development teams. Although rough, this assessment of UX capabilities has given us insights into the current organization of software development. In addition, we were able to identify potential barriers (e.g. limited access to users) and opportunities (e.g. a strong need for better UX in products) regarding the integration of UX. As a result, we were able to better scope and plan UX activities by aligning them with the UX capabilities of the organization.
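As an illustration only, the checklist use of the classification could be sketched as follows. The sketch assumes that a handful of rows from Tables 3 and 4 are encoded as records and that the interview yields the set of methods a team reports using consistently. The names `UXMethod`, `CATALOG`, `coverage_by_activity` and `uncovered_activities` are hypothetical and not part of the UXPRM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UXMethod:
    """One row of the method classification (hypothetical encoding)."""
    name: str
    family: str            # "attitudinal" (Table 3) or "behavioral" (Table 4)
    activities: frozenset  # UX activities the method supports


# A few rows transcribed from Tables 3 and 4; not the full classification.
CATALOG = [
    UXMethod("survey", "attitudinal",
             frozenset({"user research", "UX evaluation"})),
    UXMethod("think-aloud", "attitudinal",
             frozenset({"job/task analysis", "contextual inquiry",
                        "user research", "UX evaluation"})),
    UXMethod("observation", "behavioral",
             frozenset({"contextual inquiry", "user research",
                        "UX evaluation"})),
    UXMethod("simulation", "behavioral",
             frozenset({"formative UX evaluation"})),
]


def coverage_by_activity(catalog, employed):
    """Map each UX activity to the catalog methods reported as employed."""
    coverage = {}
    for method in catalog:
        for activity in method.activities:
            used = coverage.setdefault(activity, [])
            if method.name in employed:
                used.append(method.name)
    return coverage


def uncovered_activities(catalog, employed):
    """List the UX activities that no employed method supports."""
    coverage = coverage_by_activity(catalog, employed)
    return sorted(a for a, used in coverage.items() if not used)


if __name__ == "__main__":
    # Hypothetical interview outcome: the team consistently uses two methods.
    employed = {"survey", "observation"}
    print(coverage_by_activity(CATALOG, employed))
    print(uncovered_activities(CATALOG, employed))
```

Activities left uncovered in such a summary point to candidate gaps to discuss with the team. They are not a capability rating in themselves.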

The UXPRM, we believe, can provide practitioners with a basic tool for assessing UX capability and planning UX activities, and can therefore help better answer the needs and expectations of the industry. We also believe that our conceptual and methodological approach is a promising research avenue to explore further.

6 CONCLUSION

The lack of consensus on the definition of UX has led to confusion over UX processes and UX practice, which results in sharply contrasting perspectives on UX between the traditional HCI community and the UX community as well as between academia and industry. To contribute to reducing this gap, we propose a UX process reference model (UXPRM), which depicts the primary UX lifecycle processes and a set of UX methods and artifacts to support UX activities. The UXPRM draws an accurate picture of the UX base practices and methods supporting UX activities. The contribution of this paper is twofold:

- Conceptual, as it specifies a complete UX process reference model including both the description of primary UX lifecycle processes and a set of UX methods and artifacts that serve as UX base practices. To the best of our knowledge, no such UX process reference model exists to date.

- Methodological, as it can support researchers and practitioners in planning UX activities based on a rough assessment of the UX capabilities of an organization. This is a first step towards the strategic planning of UX activities.

7 FUTURE WORK

Building on the promising usefulness of the proposed UXPRM for supporting UX practice, our future work consists of developing a UX capability/maturity model (UXCMM) in order to facilitate the integration of UX activities into software development. In turn, this aims to reduce the gap between UX research and UX practice. We argue that planning the most profitable and appropriate UX methods to achieve specific UX goals depends on the alignment between the capability of an organization to perform UX processes consistently and the capability of UX methods to support the achievement of UX goals cost-efficiently. Accordingly, our future work also includes developing a UX process assessment model (UXPAM), which is a measurement structure for the assessment of UX processes. Typically, a UXPAM specifies indicators, scales and levels of achievement of UX processes, together with measurement tools such as questionnaires or models.
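To make the notion of indicators, scales and levels concrete, the following is a minimal sketch of what such a measurement structure might look like. It is not the UXPAM itself, which remains future work. As an assumption made purely for illustration, it borrows the four-point N/P/L/F achievement scale of ISO/IEC 15504 and treats each indicator as a yes/no observation. The process names and the functions `rate` and `assess` are hypothetical.

```python
# Achievement bands borrowed from the ISO/IEC 15504 rating scale
# (illustrative assumption; the paper does not fix the UXPAM scale):
#   N: 0-15%, P: >15-50%, L: >50-85%, F: >85-100%
BANDS = [(85.0, "F"), (50.0, "L"), (15.0, "P")]


def rate(achievement_pct):
    """Convert a percentage of achievement into an N/P/L/F rating."""
    if not 0.0 <= achievement_pct <= 100.0:
        raise ValueError("achievement must be between 0 and 100")
    for lower_bound, label in BANDS:
        if achievement_pct > lower_bound:
            return label
    return "N"


def assess(indicators):
    """Rate each process from its list of observed (True/False) indicators."""
    results = {}
    for process, observed in indicators.items():
        pct = 100.0 * sum(observed) / len(observed) if observed else 0.0
        results[process] = (round(pct, 1), rate(pct))
    return results


if __name__ == "__main__":
    # Hypothetical checklist outcome for two UX lifecycle processes.
    print(assess({
        "analysis": [True, False, False, False],
        "evaluation": [True, True, True, True],
    }))
```

A real assessment model would also need to define the indicators themselves and how evidence for them is collected, for instance through the questionnaires mentioned above.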

Together, the UXPRM and the UXPAM form the intended UXCMM, which will support the assessment of the UX capability/maturity of an organization and the identification of the UX methods that best align with the organization’s capabilities and maturity. The UXCMM, we believe, will ultimately allow UX practitioners and researchers to deliver better UX activity plans.

ACKNOWLEDGEMENTS

The authors acknowledge the support of the projects HAULOGY 2021 and VIADUCT, funded by Service public de Wallonie (SPW), Belgium, under references 7767 and 7982.

REFERENCES

Albert, W. and Tullis, T., 2013. Measuring the user experi-

ence: collecting, analyzing, and presenting usability met-

rics. Newnes.

Anthony, E.M., 1963. Approach, method and technique.

English language teaching, 17(2), pp. 63-67.

Arnowitz, J., Arent, M. and Berger, N., 2010. Effective pro-

totyping for software makers. Elsevier.

Bailey, R.W., Barnum, C., Bosley, J., Chaparro, B., Dumas,

J., Ivory, M.Y., John, B., Miller-Jacobs, H. and Koyani,

S.J., 2006. Research-based web design & usability guide-

lines. Washington, DC: US Dept. of Health and Human

Services.

Bargas-Avila, J.A. and Hornbæk, K., 2011, May. Old wine

in new bottles or novel challenges: a critical analysis of

empirical studies of user experience. In Proceedings of

the SIGCHI conference on human factors in computing

systems (pp. 2689-2698). ACM.

Begnum, M.E.N. and Thorkildsen, T., 2015. Comparing

User-Centred Practices In Agile Versus Non-Agile De-

velopment. In Norsk konferanse for organisasjoners

bruk av IT (NOKOBIT).

Bevan, N., 2008, June. Classifying and selecting UX and us-

ability measures. In International Workshop on Mean-

ingful Measures: Valid Useful User Experience Meas-

urement (Vol. 11, pp. 13-18).

Bias, R.G. and Mayhew, D.J., 2005. Cost-justifying usability:

an update for an Internet age. Elsevier. Second edition.

Brhel, M., Meth, H., Maedche, A. and Werder, K., 2015. Ex-

ploring principles of user-centered agile software devel-

opment: A literature review. Information and Software

Technology, 61, pp. 163-181.

Calvary, G., Coutaz, J., Thevenin, D., Limbourg, Q., Bouil-

lon, L. and Vanderdonckt, J., 2003. A unifying reference

framework for multi-target user interfaces. Interacting

with computers, 15(3), pp. 289-308.


Card, S. K., Newell, A. and Moran, T.P., 1983. The Psychology of Human-Computer Interaction. L. Erlbaum Associates Inc., Hillsdale, NJ, USA.

Carter, S. and Mankoff, J., 2005, April. When participants do

the capturing: the role of media in diary studies. In Pro-

ceedings of the SIGCHI conference on Human factors in

computing systems (pp. 899-908). ACM.

Cooke, N. J., 1994. Varieties of knowledge elicitation tech-

niques. International Journal of Human-Computer Stud-

ies, 41(6), pp. 801-849.

Crandall, B., Klein, G.A. and Hoffman, R.R., 2006. Working minds: A practitioner's guide to cognitive task analysis. MIT Press.

da Silva, T. S., Silveira, M. S. and Maurer, F., 2015, January.

Usability evaluation practices within agile development.

In Proceedings of the 48th Hawaii International Confer-

ence on System Sciences (HICSS), (pp. 5133-5142).

IEEE.

Daae, J. and Boks, C., 2015. A classification of user research

methods for design for sustainable behaviour. Journal of

Cleaner Production, 106, pp. 680-689.

Forbrig, P. and Herczeg, M., 2015, September. Managing the

Agile process of human-centred design and software de-

velopment. In INTERACT (pp. 223-232).

Force, H.U.T., 2011. Promoting Usability in Health Organi-

zations: initial steps and progress toward a healthcare us-

ability maturity model. Health Information and Manage-

ment Systems Society.

Fowler Jr, F.J., 2013. Survey research methods. Sage Publications.

Garcia, A., da Silva, T.S. and Selbach Silveira, M., 2017,

January. Artifacts for Agile User-Centered Design: A

Systematic Mapping. In Proceedings of the 50th Hawaii

International Conference on System Sciences (HICSS).

IEEE.

Ghaoui, C., 2005. Encyclopedia of human computer interac-

tion. IGI Global.

Grandi, M.P.F. and Pellicciari, M., 2017, July. A Reference

Model to Analyse User Experience in Integrated Product-

Process Design. In Transdisciplinary Engineering: A

Paradigm Shift: Proceedings of the 24th ISPE Inc. Inter-

national Conference on Transdisciplinary Engineering,

July 10-14, 2017 (Vol. 5, p. 243). IOS Press.

Gray, C. M., 2016, May. It's more of a mindset than a method:

UX practitioners' conception of design methods. In Pro-

ceedings of the 2016 CHI Conference on Human Factors

in Computing Systems (pp. 4044-4055). ACM.

Gvero, I., 2013. Observing the user experience: a practition-

er's guide to user research by Elizabeth Goodman, Mike

Kuniavsky, and Andrea Moed. ACM SIGSOFT Software

Engineering Notes, 38(2), pp. 35-35.

Hassenzahl, M., 2003. The thing and I: understanding the re-

lationship between user and product. In Blythe M.A.,

Overbeeke K., Monk A.F., Wright P.C. (Eds.), Funol-

ogy: From Usability to Enjoyment (pp. 31-42). Springer.

Hassenzahl, M., 2008, September. User experience (UX): to-

wards an experiential perspective on product quality. In

Proceedings of the 20th Conference on l'Interaction

Homme-Machine (pp. 11-15). ACM.

Hassenzahl, M. and Tractinsky, N., 2006. User experience-a

research agenda. Behaviour & information technology,

25(2), pp. 91-97.

Holtzblatt, K., Wendell, J.B. and Wood, S., 2004. Rapid con-

textual design: a how-to guide to key techniques for user-

centered design. Elsevier.

Hutton, R.J.B., Militello, L.G. and Miller, T.E., 1997. Ap-

plied Cognitive Task Analysis (ACTA) instructional

software: A practitioner's window into skilled decision

making. In Proceedings of the Human Factors and Er-

gonomics Society. Annual Meeting (Vol. 2, p. 896). Sage

Publications Ltd.

ISO 13407, 1999. Human-centred design processes for inter-

active systems. Standard. International Organization for

Standardization, Geneva, CH.

ISO 15504-1, 2004. Information Technology — Software

Process Assessment - Part 1: Concepts and Introductory

Guide. Standard. International Organization for Stand-

ardization, Geneva, CH.

ISO 15504-5, 2012. Information Technology - Software Process Assessment - Part 5: An Assessment Model and Indicator Guidance. Standard. International Organization for Standardization, Geneva, CH.

ISO 9241-210, 2008. Ergonomics of human system interac-

tion-Part 210: Human-centred design for interactive sys-

tems. Standard. International Organization for Standard-

ization, Geneva, CH.

Jokela, T., 2002, October. Making user-centred design com-

mon sense: striving for an unambiguous and communi-

cative UCD process model. In Proceedings of the second

Nordic conference on Human-computer interaction (pp.

19-26). ACM.

Khan, V. J., Markopoulos, P., Eggen, B., IJsselsteijn, W. and

de Ruyter, B., 2008, September. Reconexp: a way to re-

duce the data loss of the experiencing sampling method.

In Proceedings of the 10th international conference on

Human computer interaction with mobile devices and

services (pp. 471-476). ACM.

Lacerda, T. C. and von Wangenheim, C.G., 2017, June. Sys-

tematic literature review of usability capability/maturity

models. Computer Standards & Interfaces, 55, pp. 95-

105.

Lallemand, C., Gronier, G. and Koenig, V., 2015. User expe-

rience: A concept without consensus? Exploring practi-

tioners’ perspectives through an international survey.

Computers in Human Behavior, 43, pp. 35-48.

Lavrakas, P. J., 2008. Encyclopedia of survey research meth-

ods. Sage Publications.

Law, E., Bevan, N., Christou, G., Springett, M. and Larusdottir, M., 2008. Meaningful measures: valid useful user experience measurement-VUUM Workshop 2008, Reykjavik. In COST Action.

Law, E. L. C., Roto, V., Hassenzahl, M., Vermeeren, A. P.

and Kort, J., 2009, April. Understanding, scoping and de-

fining user experience: a survey approach. In Proceed-

ings of the SIGCHI conference on human factors in com-

puting systems (pp. 719-728). ACM.


Law, E. L. C., van Schaik, P. and Roto, V., 2014. Attitudes

towards user experience (UX) measurement. Interna-

tional Journal of Human-Computer Studies, 72(6), pp.

526-541.

Law, E. L. C., Vermeeren, A. P., Hassenzahl, M. and Blythe,

M., 2007, September. Towards a UX manifesto. In Pro-

ceedings of the 21st British HCI Group Annual Confer-

ence on People and Computers: HCI... but not as we

know it-Volume 2 (pp. 205-206). BCS Learning & De-

velopment Ltd.

Lim, Y. K., Stolterman, E. and Tenenberg, J., 2008. The anat-

omy of prototypes: Prototypes as filters, prototypes as

manifestations of design ideas. ACM Transactions on

Computer-Human Interaction (TOCHI), 15(2), p. 7.

Losada, B., Urretavizcaya, M. and Fernández-Castro, I.,

2013. A guide to agile development of interactive soft-

ware with a “User Objectives”-driven methodology. Sci-

ence of Computer Programming, 78(11), pp. 2268-2281.

Mackay, W. E., Ratzer, A. V. and Janecek, P., 2000, August.

Video artifacts for design: Bridging the gap between ab-

straction and detail. In Proceedings of the 3rd conference

on Designing interactive systems: processes, practices,

methods, and techniques (pp. 72-82). ACM.

Maguire, M., 2001. Methods to support human-centred de-

sign. International journal of human-computer studies,

55(4), pp. 587-634.

Maguire, M. and Bevan, N., 2002. User requirements analy-

sis: a review of supporting methods. In Usability (pp.

133-148). Springer, Boston, MA.

Mallett, R., Hagen-Zanker, J., Slater, R. and Duvendack, M.,

2012. The benefits and challenges of using systematic re-

views in international development research. Journal of

development effectiveness, 4(3), pp. 445-455.

Markopoulos, P., 1992. Adept: a task based design environment. In Proceedings of the 25th Hawaii International Conference on System Sciences (HICSS), (pp. 587-596). IEEE.

Mayhew, D. J., 1999. The Usability Engineering Lifecycle: A

Practitioner’s Handbook for User Interface Design.

Morgan Kaufmann Publishers Inc.

McCurdy, M., Connors, C., Pyrzak, G., Kanefsky, B. and

Vera, A., 2006, April. Breaking the fidelity barrier: an

examination of our current characterization of prototypes

and an example of a mixed-fidelity success. In Proceed-

ings of the SIGCHI conference on Human Factors in

computing systems (pp. 1233-1242). ACM.

Mirnig, A. G., Meschtscherjakov, A., Wurhofer, D., Me-

neweger, T. and Tscheligi, M., 2015, April. A formal

analysis of the ISO 9241-210 definition of user experi-

ence. In Proceedings of the 33rd Annual ACM Confer-

ence Extended Abstracts on Human Factors in Compu-

ting Systems (pp. 437-450). ACM.

Nielsen, J., 1993. Usability engineering. Elsevier.

Norman, D., Miller, J. and Henderson, A., 1995, May. What

you see, some of what's in the future, and how we go

about doing it: HI at Apple Computer. In Conference

companion on Human factors in computing systems (p.

155). ACM.

Patton, J. and Economy, P., 2014. User story mapping: discover the whole story, build the right product. O'Reilly Media, Inc.

Peres, A. L., da Silva, T.S., Silva, F.S., Soares, F.F., De

Carvalho, C.R.M. and Meira, S.R.D.L., 2014, July. AG-

ILEUX model: towards a reference model on integrating

UX in developing software using agile methodologies. In

Agile Conference (AGILE), 2014 (pp. 61-63). IEEE.

Rieman, J., 1993, May. The diary study: a workplace-ori-

ented research tool to guide laboratory efforts. In Pro-

ceedings of the INTERACT'93 and CHI'93 conference on

Human factors in computing systems (pp. 321-326).

ACM.

Roedl, D. J. and Stolterman, E., 2013, April. Design research

at CHI and its applicability to design practice. In Pro-

ceedings of the SIGCHI Conference on Human Factors

in Computing Systems (pp. 1951-1954). ACM.

Salah, D., Paige, R. F. and Cairns, P., 2014, May. A system-

atic literature review for agile development processes and

user centred design integration. In Proceedings of the

18th international conference on evaluation and assess-

ment in software engineering (p. 5). ACM.

Theofanos, M. F., 2007. Common Industry Specification for Usability--Requirements (No. NIST Interagency/Internal Report (NISTIR)-7432).

Trull, T. J. and Ebner-Priemer, U., 2013. Ambulatory assessment. Annual review of clinical psychology, 9, pp. 151-176.

Tsai, P., 2006. A survey of empirical usability evaluation

methods. GSLIS Independent Study, pp. 1-18.

Vanderdonckt, J., 2008. Model-driven engineering of user in-

terfaces: Promises, successes, failures, and challenges. In

Proceedings of ROCHI, 8, p. 32.

Vanderdonckt, J., 2014. Visual design methods in interactive

applications. In Content and Complexity (pp. 199-216).

Routledge.

Venturi, G., Troost, J. and Jokela, T., 2006. People, organi-

zations, and processes: An inquiry into the adoption of

user-centered design in industry. International Journal of

Human-Computer Interaction, 21(2), pp. 219-238.

Vermeeren, A. P., Law, E. L. C., Roto, V., Obrist, M., Hoon-

hout, J. and Väänänen-Vainio-Mattila, K., 2010, Octo-

ber. User experience evaluation methods: current state

and development needs. In Proceedings of the 6th Nordic

Conference on Human-Computer Interaction: Extending

Boundaries (pp. 521-530). ACM.

Walker, M., Takayama, L. and Landay, J.A., 2002, September. High-fidelity or low-fidelity, paper or computer? Choosing attributes when testing web prototypes. In Proceedings of the human factors and ergonomics society annual meeting (Vol. 46, No. 5, pp. 661-665). Los Angeles, CA: SAGE Publications.

Wautelet, Y., Heng, S., Kolp, M., Mirbel, I. and Poelmans, S., 2016, June. Building a rationale diagram for evaluating user story sets. In Proceedings of the 10th International Conference on Research Challenges in Information Science (pp. 1-12). IEEE.
