Comparative Study of a Commercial Tracking Camera and ORB-SLAM2 for Person Localization

Safa Ouerghi, Nicolas Ragot, Remi Boutteau and Xavier Savatier

Normandie Univ., UNIROUEN, ESIGELEC, IRSEEM, 76000 Rouen, France

Keywords:

Intel T265, ORB-SLAM2, Benchmarking, Person Localization.

Abstract:

Localizing persons in industrial sites is a major concern in the development of the factory of the future. In recent years, progress has been made in several active research domains targeting the localization problem, among which the vision-based Simultaneous Localization and Mapping (SLAM) paradigm. This has led to the development of multiple algorithms in this field, such as ORB-SLAM2, regarded as the most complete method as it incorporates the majority of state-of-the-art techniques. Recently, new commercial, low-cost systems that can estimate the 6-DOF motion have also emerged on the market. In particular, we refer here to the Intel RealSense T265, a standalone 6-DOF tracking sensor that runs a visual-inertial SLAM algorithm and that, according to Intel, accurately estimates the 6-DOF motion. In this paper, we present an evaluation of the Intel T265 tracking camera by comparing its localization performance to that of the ORB-SLAM2 algorithm. This benchmarking fits within a specific use case: person localization in an industrial site. The experiments were conducted on a platform equipped with a VICON motion capture system, whose physical structure is similar to one that could be found in an industrial site. The VICON system is made of fifteen high-speed tracking cameras (100 Hz), which provide highly accurate poses that were used as ground-truth reference. The sequences were recorded using both an Intel RealSense D435 camera, whose stereo images are used with ORB-SLAM2, and the Intel RealSense T265. The two sets of timestamped poses (VICON and the ones provided by the cameras) were time-aligned and then calibrated using the point set registration method. The Absolute Trajectory Error, the Relative Pose Error and the Euclidean Distance Error metrics were employed to benchmark the localization accuracy of ORB-SLAM2 and the T265. The results show a competitive accuracy of both systems for a handheld camera in an indoor industrial environment, with better reliability for the T265 tracking system.

1 INTRODUCTION

Improving performance and safety conditions in industrial sites represents a major challenge that requires, in particular, tracking people to verify in real time that they are authorized to carry out the task they are performing. To fulfill such a high-level task, the pose of humans in the industrial space has to be accurately known.

Within the context of localization and tracking, progress has been made in several active research fields such as SLAM (Simultaneous Localization and Mapping), computer vision, Augmented Reality (AR), Virtual Reality (VR) and indoor Geographic Information Systems (GIS). Nowadays, visual SLAM (V-SLAM) for tracking is a system-level problem. The core algorithms have become mature, but success still relies on a complete and robust hardware-software solution that fits the application.

For instance, the localization issue has primarily been tackled in traditional industrial applications and in autonomous vehicles, which involve robots with limited mobility and a well-defined kinematic model. However, in most studies, the SLAM used by humans and humanoid robots is not specifically optimized for their motion characteristics. Such studies rather directly run experiments and evaluate the results of existing SLAM modules. Hence, despite the maturity of SLAM, new applications imply that additional experiments have to be carried out.

Furthermore, over the last few years, new sensors such as Time-of-Flight (TOF) and RGB-D cameras have significantly pushed the boundaries of robot perception (Zollhöfer et al., 2018). The maturity of V-SLAM has also contributed to the emergence of low-cost systems on the market, such as the Intel RealSense T265 tracking camera, which estimates the 6-DOF motion (Intel, 2019). It is therefore worth investigating such commercially available visual sensors and discussing their usability for reliable tracking of a human, for instance in the context of industrial environments.

Ouerghi, S., Ragot, N., Boutteau, R. and Savatier, X.
Comparative Study of a Commercial Tracking Camera and ORB-SLAM2 for Person Localization.
DOI: 10.5220/0008980703570364
In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 4: VISAPP, pages 357-364
ISBN: 978-989-758-402-2; ISSN: 2184-4321
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

This paper aims at evaluating the tracking performance of a new imaging and tracking system, the Intel RealSense T265 released by Intel in 2019, by comparing it to ORB-SLAM2 (Mur-Artal and Tardos, 2016). ORB-SLAM2 was chosen because it is one of the most accurate open-source V-SLAM algorithms and integrates the majority of state-of-the-art techniques, including multi-threading, loop-closure detection, relocalization, bundle adjustment and pose graph optimization. The context of the benchmarking is a camera hand-held by a person moving in an industrial environment.

The paper is organized as follows: Section 2 presents related work on V-SLAM and new imaging systems. Section 3 gives details about the sensors used in this study as well as the evaluation metrics used to assess their performance. Section 4 describes the calibration method used to express the camera estimates and the VICON measurements in the same reference frame. Finally, Section 5 presents a comparative study between the RealSense T265 tracking camera and stereo ORB-SLAM2, followed by a discussion of the findings and conclusions.

2 RELATED WORK

Our work is related to a fundamental and heavily researched problem in computer vision, visual SLAM, through the comparison of the performance of the new low-cost RealSense T265 tracking sensor and the RealSense D435 coupled with the ORB-SLAM2 algorithm running in stereo mode. SLAM has been researched for over 30 years, and the models for solving the SLAM problem can be divided into two main categories: filtering-based methods and graph-optimization-based methods. Filtering-based methods usually use the Extended Kalman Filter (EKF), the Unscented Kalman Filter (UKF) or the Particle Filter (PF). These methods first predict both the pose and the 3D features in the map and then update them when a measurement is acquired. Key state-of-the-art filtering-based methods are MonoSLAM (Davison et al., 2007), which uses an EKF, and FastSLAM (Montemerlo et al., 2002), which uses a PF. Methods based on graph optimization generally use bundle adjustment to simultaneously optimize the camera poses and the 3D points of the map. A key method is PTAM, proposed by Klein and Murray (Klein and Murray, 2009), which introduced the separation of the localization and mapping tasks into different threads and performs bundle adjustment on keyframes in order to meet the real-time constraint. ORB-SLAM uses multi-threading and keyframes as well (Mur-Artal et al., 2015) and can be considered an extension of PTAM. On top of these functionalities, ORB-SLAM performs loop closing and pose-graph optimization. ORB-SLAM was first introduced for monocular cameras and was subsequently extended to stereo and RGB-D cameras in (Mur-Artal and Tardos, 2016). It therefore represents the most complete approach among state-of-the-art methods and has been used as a reference method in several works. Moreover, a popular research axis in SLAM is visual-inertial SLAM (VI-SLAM), based on the fusion of vision sensor measurements with those of an Inertial Measurement Unit (IMU). Like visual SLAM, VI-SLAM methods can be divided into filtering-based and optimization-based approaches. A review of the main VI-SLAM methods is presented in (Chang et al., 2018).

In addition, new camera technologies have been investigated in the context of visual SLAM. RGB-D cameras have been extensively used in recent years and several works document their performance. In (Weng Kuan et al., 2019), a comparison of three RGB-D sensors that use near-infrared (NIR) light projection to obtain depth data is presented. The sensors are evaluated outdoors, where sunlight strongly interferes with the NIR light. Three kinds of sensors were used: a TOF RGB-D sensor, the Microsoft Kinect v2; a structured-light (SL) RGB-D sensor, the Asus Xtion Pro Live; and an active stereo vision (ASV) sensor, the Intel RealSense R200. These three sensors were also compared in the context of indoor 3D reconstruction, and the authors concluded that the Kinect v2 performs better, returning less noisy points and denser depth data. In (Yao et al., 2017), a spatial resolution comparison is presented between the Asus Xtion Pro, Kinect v1, Kinect v2 and the R200. This comparison showed that the Kinect v2 performs better indoors than both the Primesense sensors and the Intel R200. In (Halmetschlager-Funek et al., 2019), ten depth cameras were evaluated. The experiments covered several evaluation criteria, including bias, precision, lateral noise, different lighting conditions, materials and multiple sensor setups in indoor environments. The authors reported that the Microsoft Kinect v2 behaves significantly differently from the other sensors: it outperforms all sensors regarding bias, lateral noise and precision for d > 2 m, and is less precise than the structured-light sensors in the range 0.7 m < d < 2 m.

Recently, Intel released the T265 tracking sensor (Intel, 2019) as part of the Intel RealSense product line. The T265 is a standalone tracking camera that uses a proprietary visual-inertial SLAM algorithm for accurate and low-latency tracking, targeting multiple applications such as robotics, drones, augmented reality (AR) and virtual reality (VR). The current literature does not seem to include any research work that directly compares the performance of the T265 tracking camera with an existing state-of-the-art algorithm. Our investigation is therefore centered on comparing the T265 RealSense camera with stereo ORB-SLAM2.

3 MATERIALS AND EVALUATION METRICS

This section briefly describes the operating principles of the camera sensors used, namely the Intel RealSense D435 and the Intel RealSense T265. Then, it presents the evaluation metrics used to benchmark the performance of the T265 tracking system against ORB-SLAM2.

3.1 Characteristics of the Used Sensors

3.1.1 The Intel D435 RealSense Depth Camera

The Intel RealSense D400 depth camera series represents an important milestone as it introduces inexpensive, easy-to-use 3D cameras for both indoor and outdoor use. The Intel D435 is the successor of the D415 depth camera. Both are stereo cameras with an infrared (IR) projector to obtain a good fill rate, as well as an RGB camera. The difference between them is that the D435 has a wider field of view. The RealSense D400 series supports depth output and enables capturing the disparity between images at up to 1280 × 720 resolution and up to 90 fps (Intel, 2017).

3.1.2 The Intel T265 Tracking Camera

The Intel RealSense Tracking Camera T265 is a standalone 6-DOF tracking sensor that runs a visual-inertial SLAM algorithm onboard. It can additionally integrate wheel odometry for greater robustness in robotics. The T265 uses inputs from dual fisheye cameras and an IMU, along with the processing capabilities of the Movidius MA215x ASIC, making it a low-power, high-performance device suitable for embedded systems. The SLAM algorithm running onboard is proprietary and fuses images, inertial data, sparse depth and, if available in the embedded system, wheel odometry. It uses a sparse Kalman filtering approach, provides poses at 200 Hz and performs appearance-based relocalization. Intel claims that the loop-closure error is below 1% of the path length. Intel states that the T265 tracking camera is intended for use with drones, robots and AR/VR applications. The two fisheye cameras provide a large field of view for robust tracking even with fast motion. However, unlike previous Intel RealSense cameras such as the D400 series, the T265 is not a depth camera. Intel does note that it is possible to use the image feed from the two fisheye lenses and sensors to compute dense depth, but the results would be poor compared to other RealSense depth cameras, as the lenses are optimized for a wide tracking field of view rather than depth precision, and no texture is projected onto the environment to aid depth fill. Nevertheless, the T265 can be paired with a RealSense D400 camera for increased capabilities, with the tracking camera and the depth camera used in combination, for instance for occupancy mapping and obstacle avoidance (Intel, 2019).

3.2 Evaluation Metrics

To evaluate trajectory accuracy, we employ the Absolute Trajectory Error (ATE) and the Relative Pose Error (RPE), as presented by Sturm et al. (Sturm et al., 2012). We additionally use the Euclidean Error (EE) to benchmark the T265 tracking performance.

3.2.1 Absolute Trajectory Error

This metric evaluates the global consistency of the estimated trajectory by comparing the absolute distances between the estimated and the ground-truth poses. It was introduced in (Sturm et al., 2012) and consists first in aligning the two trajectories and then in evaluating the root mean squared error over all time indices of the translational components. The alignment finds the rigid-body transformation $S$, corresponding to the least-squares solution, that maps the estimated trajectory $P_{1:n}$ onto the ground-truth trajectory $Q_{1:n}$, where $n$ is the number of poses. Hence, the absolute trajectory error $F_i$ at time step $i$ can be computed as

$$F_i = Q_i^{-1} S P_i. \tag{1}$$

The root mean squared error over all time indices of the translational components is then given by

$$\mathrm{RMSE}(F_{1:n}) = \left( \frac{1}{n} \sum_{i=1}^{n} \left\| \mathrm{trans}(F_i) \right\|^2 \right)^{1/2}. \tag{2}$$
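As an illustration of Eq. (2), the following minimal Python sketch computes the translational RMSE for two trajectories that are assumed to be already time-associated and aligned (that is, the transformation S has already been applied to the estimate). Under that assumption, the norm of trans(F_i) reduces to the distance between the two positions at step i. The function name `ate_rmse` and the toy coordinates are illustrative assumptions and not the evaluation code used in this study.

```python
import math

def ate_rmse(estimated_xyz, ground_truth_xyz):
    """RMSE of the position differences between two aligned trajectories.

    Both inputs are sequences of (x, y, z) tuples of equal length, with the
    i-th entries referring to the same time step.
    """
    if len(estimated_xyz) != len(ground_truth_xyz) or not estimated_xyz:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est, gt))
        for est, gt in zip(estimated_xyz, ground_truth_xyz)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Toy data (hypothetical): three poses, errors of 0.3 m, 0.4 m and 0 m.
ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
estimate = [(0.0, 0.3, 0.0), (1.0, 0.0, 0.4), (2.0, 0.0, 0.0)]
ate = ate_rmse(estimate, ground_truth)
print(f"ATE RMSE: {ate:.4f} m")
```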

3.2.2 Relative Pose Error

The RPE measures the local accuracy of a trajectory over a fixed time interval. It therefore corresponds to the drift of the trajectory, which makes it suitable for evaluating visual odometry systems. While the ATE assesses only the translational errors, the RPE evaluates both the translational and the rotational errors. Therefore, the RPE is always greater than the ATE (or equal if there is no rotational error). The RPE metric thus gives a way to combine rotational and translational errors into a single measure. The instantaneous RPE at time step $i$ is defined as

$$E_i = \left( Q_i^{-1} Q_{i+\Delta} \right)^{-1} \left( P_i^{-1} P_{i+\Delta} \right), \tag{3}$$

where $\Delta$ is a fixed time interval that needs to be chosen. For instance, for a sequence recorded at 30 Hz, $\Delta = 30$ gives the drift per second, which is useful for visual odometry systems as previously stated. From a sequence of $n$ camera poses, we obtain in this way $m = n - \Delta$ individual relative pose errors along the sequence. From these errors, the RMSE over all time indices of the translational component is computed:

$$\mathrm{RMSE}(E_{1:n}, \Delta) = \left( \frac{1}{m} \sum_{i=1}^{m} \left\| \mathrm{trans}(E_i) \right\|^2 \right)^{1/2}. \tag{4}$$

In fact, it has been reported in (Sturm et al., 2012) that the comparison by translational errors is sufficient, as rotational errors show up as translational errors when the camera is moved. For SLAM systems, this metric is used by averaging over all possible time intervals, by computing

$$E_{1:n} = \frac{1}{n} \sum_{\Delta=1}^{n} \mathrm{RMSE}(E_{1:n}, \Delta). \tag{5}$$
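The relative pose error can be sketched in a few lines of Python. The example below is a minimal illustration, assuming poses are given as 4×4 homogeneous rigid-body matrices stored as nested lists; the helper names (`mat_mul`, `rigid_inverse`, `rpe_rmse`, `rpe_all_intervals`, `translation`) and the toy trajectory are hypothetical. The averaging function only uses intervals from 1 to n − 1, since an interval of n leaves no pose pair to compare; this is an implementation assumption.

```python
import math

def mat_mul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
            for r in range(4)]

def rigid_inverse(T):
    """Inverse of a rigid transform [R t; 0 1], i.e. [R^T, -R^T t; 0 1]."""
    rot_t = [[T[c][r] for c in range(3)] for r in range(3)]
    trans = [-sum(rot_t[r][k] * T[k][3] for k in range(3)) for r in range(3)]
    return [rot_t[r] + [trans[r]] for r in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def rpe_rmse(gt_poses, est_poses, delta):
    """Translational RMSE of the relative pose errors for a fixed interval."""
    n = len(gt_poses)
    if len(est_poses) != n or not 0 < delta < n:
        raise ValueError("need equal-length trajectories and 0 < delta < n")
    squared = []
    for i in range(n - delta):
        gt_motion = mat_mul(rigid_inverse(gt_poses[i]), gt_poses[i + delta])
        est_motion = mat_mul(rigid_inverse(est_poses[i]), est_poses[i + delta])
        error = mat_mul(rigid_inverse(gt_motion), est_motion)
        squared.append(sum(error[r][3] ** 2 for r in range(3)))
    return math.sqrt(sum(squared) / len(squared))

def rpe_all_intervals(gt_poses, est_poses):
    """Average of rpe_rmse over the intervals 1 .. n-1 (assumption, see text)."""
    n = len(gt_poses)
    return sum(rpe_rmse(gt_poses, est_poses, d) for d in range(1, n)) / (n - 1)

def translation(x):
    """Pure translation along X (toy pose without rotation)."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]

# Toy data: ground truth moves 1 m per step, the estimate 1.1 m per step.
gt = [translation(float(i)) for i in range(5)]
est = [translation(1.1 * i) for i in range(5)]
drift_per_step = rpe_rmse(gt, est, delta=1)
averaged = rpe_all_intervals(gt, est)
print(f"RPE (delta=1): {drift_per_step:.3f} m, averaged: {averaged:.3f} m")
```

In this toy case the estimate drifts by 0.1 m per step, so the error grows linearly with the chosen interval.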

3.2.3 Euclidean Error

We use the root-mean-squared Euclidean Distance Error as an additional evaluation metric. As we are targeting the localization of a person, we use this metric to evaluate the pose error on the ground plane. We define the root-mean-squared Euclidean Error (EE) $\varepsilon$ as

$$\varepsilon = \mathrm{RMSE}(T_{1:n}) = \left( \frac{1}{n} \sum_{i=1}^{n} T_i^2 \right)^{1/2}, \tag{6}$$

where $T_i$ is the magnitude of the Euclidean distance error along the horizontal plane between the estimated and the ground-truth pose at instant $i$.
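A minimal Python sketch of this ground-plane metric is given below. It assumes that the trajectories are already aligned and time-associated, and that the horizontal plane is spanned by the X and Y axes (Z vertical); this axis convention, the function name `euclidean_error_rmse` and the toy values are assumptions made for illustration only.

```python
import math

def euclidean_error_rmse(estimated_xyz, ground_truth_xyz):
    """RMSE of the horizontal (X-Y) distance between paired positions."""
    if len(estimated_xyz) != len(ground_truth_xyz) or not estimated_xyz:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = [
        (est[0] - gt[0]) ** 2 + (est[1] - gt[1]) ** 2  # the Z component is ignored
        for est, gt in zip(estimated_xyz, ground_truth_xyz)
    ]
    return math.sqrt(sum(squared) / len(squared))

# Toy data: the first pose is off by 0.5 m in the plane (and 4 m vertically,
# which does not contribute); the second pose is exact.
ground_truth = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)]
estimate = [(0.3, 0.4, 5.0), (1.0, 0.0, 1.0)]
ee = euclidean_error_rmse(estimate, ground_truth)
print(f"EE RMSE: {ee:.4f} m")
```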

4 GEOMETRIC CALIBRATION BETWEEN THE CAMERA AND THE VICON

The extrinsic calibration consists in estimating the rel-

ative pose between the camera and the VICON mo-

tion capture system. The camera sensors D435, T265

and the markers tracked by the VICON system are

rigidly attached to the same support. The knowledge

of this rigid transformation between the camera’s op-

tical center and the VICON’s reference is essential in

order to express the camera estimate in the VICON’s

reference frame. This implies first the time alignment

of the poses and then the estimation of the rigid body

transformation.

4.1 Time Alignment

This step is essential in order to synchronize the timestamped data of the two sensors. An open-source method presented in (Furrer et al., 2017) has been used. This method first resamples the poses at the lower of the two pose-signal frequencies and then correlates the angular velocity norms of the two signals.
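The idea of correlating angular velocity norms can be illustrated with a simplified Python sketch. It is not the implementation of (Furrer et al., 2017); it assumes that both signals have already been resampled to a common rate and simply searches, by brute force, for the integer sample shift that maximizes the correlation of the mean-removed signals. The function name `best_lag`, the toy signals and the 100 Hz rate used to convert the lag to seconds are illustrative assumptions.

```python
def best_lag(reference, delayed, max_lag):
    """Shift (in samples) of `delayed` relative to `reference` that maximizes
    the correlation of the two mean-removed signals."""
    def centered(signal):
        mean = sum(signal) / len(signal)
        return [value - mean for value in signal]

    a, b = centered(reference), centered(delayed)
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Sum products over the overlapping part of the two signals only.
        score = sum(a[i] * b[i + lag]
                    for i in range(len(a)) if 0 <= i + lag < len(b))
        if score > best_score:
            best, best_score = lag, score
    return best

# Toy angular velocity norms: the second signal is the first delayed by 2 samples.
reference = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
delayed = [0.0, 0.0, 0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.0]
lag = best_lag(reference, delayed, max_lag=4)
offset_s = lag / 100.0  # assuming both signals are sampled at 100 Hz
print(f"lag: {lag} samples, offset: {offset_s:.2f} s")
```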

4.2 Rigid Transformation

The transformation is calculated using corresponding point set registration. Consider two sets of 3D points, $\mathrm{Set}_{vicon}$ and $\mathrm{Set}_{camera}$, with $\mathrm{Set}_{vicon}$ given in the VICON's reference frame and $\mathrm{Set}_{camera}$ given in the camera's coordinate frame. Solving for $R$ and $t$ from

$$\mathrm{Set}_{vicon} = R \, \mathrm{Set}_{camera} + t \tag{7}$$

yields the rotation matrix $R$ and the translation vector $t$ that transform the points from the camera's frame to the VICON's frame. This amounts to finding the optimal rigid transformation. First, the centroids of the two datasets are found using

$$\mathrm{centroid}_{vicon} = \frac{1}{N} \sum_{i=1}^{N} P^{i}_{vicon}, \tag{8}$$

$$\mathrm{centroid}_{camera} = \frac{1}{N} \sum_{i=1}^{N} P^{i}_{camera}, \tag{9}$$

where $N$ is the number of corresponding points in the two datasets, $P_{vicon}$ is a 3D point in the VICON's frame and $P_{camera}$ is the corresponding point in the camera's frame, with $P = [x \; y \; z]^T$.

The rotation matrix $R$ is found by singular value decomposition (SVD), where the matrix $H$ is first calculated as

$$H = \sum_{i=1}^{N} \left( P^{i}_{camera} - \mathrm{centroid}_{camera} \right) \left( P^{i}_{vicon} - \mathrm{centroid}_{vicon} \right)^T. \tag{10}$$

Then,

$$[U, S, V] = \mathrm{SVD}(H) \tag{11}$$

gives the rotation matrix through

$$R = V U^T. \tag{12}$$

The translation vector is then obtained from

$$t = -R \, \mathrm{centroid}_{camera} + \mathrm{centroid}_{vicon}. \tag{13}$$

Using the rotation matrix and the translation vector, the coordinates expressed in the camera's frame can be transformed to the VICON's frame.
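The registration steps above can be sketched with NumPy as follows. This is a minimal illustration rather than the code used in the study: the function name `rigid_transform` and the toy points are assumptions, H is built with the camera points as the first factor so that R = V U^T maps camera coordinates to VICON coordinates, and the determinant check that guards against a reflection is a common safeguard that is not mentioned in the text.

```python
import numpy as np

def rigid_transform(camera_pts, vicon_pts):
    """Least-squares R, t such that vicon is close to R @ camera + t.

    Both inputs are N x 3 arrays of corresponding points.
    """
    camera_pts = np.asarray(camera_pts, dtype=float)
    vicon_pts = np.asarray(vicon_pts, dtype=float)
    centroid_camera = camera_pts.mean(axis=0)
    centroid_vicon = vicon_pts.mean(axis=0)
    # 3x3 matrix: sum of outer products of the centered point pairs.
    H = (camera_pts - centroid_camera).T @ (vicon_pts - centroid_vicon)
    U, _, Vt = np.linalg.svd(H)  # numpy returns V transposed
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:     # reflection case (safeguard, an assumption)
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = centroid_vicon - R @ centroid_camera
    return R, t

# Toy data: rotate four non-coplanar points by 90 degrees about Z and shift them.
R_true = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
t_true = np.array([1.0, 2.0, 3.0])
camera = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
vicon = camera @ R_true.T + t_true
R_est, t_est = rigid_transform(camera, vicon)
print(np.round(R_est, 3), np.round(t_est, 3))
```

Once R and t are known, a camera-frame trajectory stored as an N × 3 array `p` can be expressed in the VICON frame with `p @ R.T + t`.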

5 EXPERIMENTS AND RESULTS

5.1 Experimental Setup

We considered a real environment covered by the 15 cameras of the VICON system. A snapshot of the hall, which resembles an industrial environment, is shown in Figure 1. As previously stated, the VICON measurements serve as ground-truth references as they are highly accurate (Merriaux et al., 2017). The experiments were conducted with ROS (Melodic version) on a Linux computer with an Intel Core i7-4710 CPU. The open-source implementation of ORB-SLAM2 was used with a moving stereo camera, the Intel D435. In order to emulate human localization in an industrial environment, 6 sequences were recorded, as described in Table 1. The first two were recorded using the D435 stereo camera only, the next two using the T265 tracking camera only, and the last two using both the D435 and T265 cameras rigidly fixed on the same support.

Figure 1: Snapshot from the environment of the recorded

sequences.

Table 1: Experimental Sequences.

Sequence Camera 3D length(m)

Seq1 D435 34.99

Seq2 D435 13.15

Seq3 T265 24.95

Seq4 T265 31.99

Seq5 D435 and T265 58.10

Seq6 D435 and T265 43.16

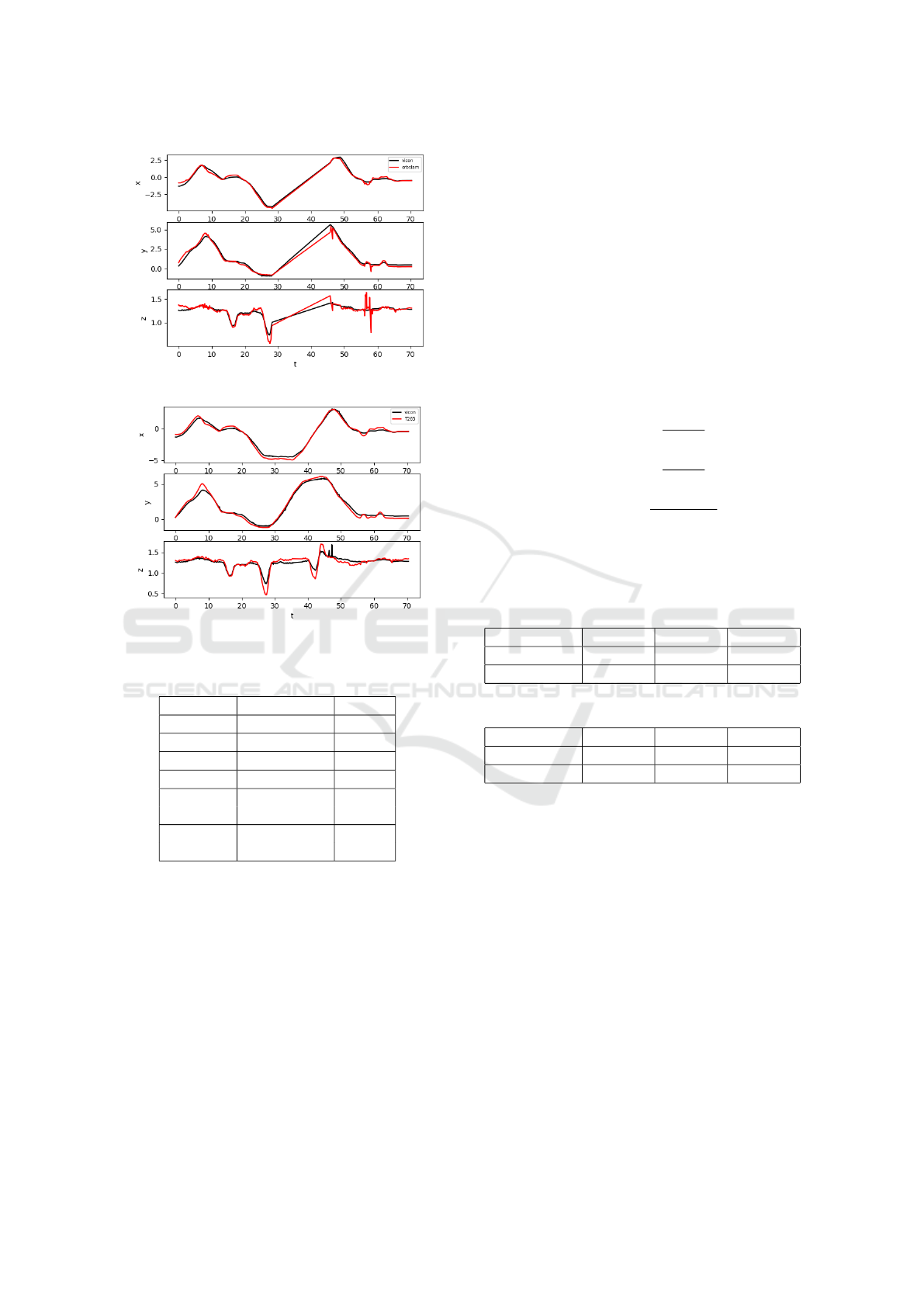

5.2 Experiments

The experiments considered a localization task with a hand-held moving camera in an industrial environment. The metrics presented in Section 3 were employed to benchmark the Intel T265 tracking camera. Table 2 compares the Absolute Trajectory Error (ATE) for the sequences listed in Table 1. We also compare, in Table 3, the Relative Pose Error (RPE) averaged over all possible time intervals and, in Table 4, the Euclidean Error (EE) in the ground plane. The values of ATE, RPE and EE are root mean squared. We also show the aligned trajectories along the X, Y and Z directions for Sequence 6: the ORB-SLAM2 estimate in Figure 2 and the T265 estimate in Figure 3.

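Table 2: ATE with ORB-SLAM2 and T265.

| Sequence | System | ATE [m] | % of sequence length |
|---|---|---|---|
| Seq1 | ORB-SLAM2 | 0.2597 | 0.74 |
| Seq2 | ORB-SLAM2 | 0.2511 | 1.9 |
| Seq3 | T265 | 0.4007 | 1.6 |
| Seq4 | T265 | 0.5217 | 1.63 |
| Seq5 | ORB-SLAM2 | 0.4591 | 0.79 |
| Seq5 | T265 | 0.4262 | 0.73 |
| Seq6 | ORB-SLAM2 | 0.3762 | 0.87 |
| Seq6 | T265 | 0.4303 | 0.99 |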
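The last column of Table 2 relates the ATE to the 3D length of the sequence given in Table 1. The short Python sketch below shows this arithmetic for two entries; the helper name `ate_percent` is an assumption introduced for illustration.

```python
def ate_percent(ate_m, length_m):
    """ATE expressed as a percentage of the travelled 3D length."""
    return 100.0 * ate_m / length_m

seq1_orbslam2 = round(ate_percent(0.2597, 34.99), 2)  # Seq1, ORB-SLAM2
seq5_t265 = round(ate_percent(0.4262, 58.10), 2)      # Seq5, T265
print(seq1_orbslam2, seq5_t265)  # prints: 0.74 0.73
```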

Table 3: RPE with ORB-SLAM2 and T265.

| Sequence | System | RPE [m] |
|---|---|---|
| Seq1 | ORB-SLAM2 | 2.8047 |
| Seq2 | ORB-SLAM2 | 1.7381 |
| Seq3 | T265 | 3.0803 |
| Seq4 | T265 | 3.8270 |
| Seq5 | ORB-SLAM2 | 3.9459 |
| Seq5 | T265 | 3.8996 |
| Seq6 | ORB-SLAM2 | 2.6213 |
| Seq6 | T265 | 3.1742 |

Based on Table 2, the ORB-SLAM2 algorithm and the T265 tracking camera perform almost equivalently in terms of accuracy. The rotational error calculated in degrees per second presented in Table 3 corroborates this observation. The rotational error is larger than the translational one, as shown by the RPE values, which encode both translational and rotational errors, compared to the ATE values, which reflect only the translational errors.

Figure 2: ORB-SLAM2 vs VICON over X, Y and Z axes.

Figure 3: T265 vs VICON over X, Y and Z axes.

Table 4: Root-mean-squared EE with ORB-SLAM2 and T265.

| Sequence | System | EE [m] |
|---|---|---|
| Seq1 | ORB-SLAM2 | 0.4761 |
| Seq2 | ORB-SLAM2 | 0.4456 |
| Seq3 | T265 | 0.5939 |
| Seq4 | T265 | 0.6997 |
| Seq5 | ORB-SLAM2 | 0.5818 |
| Seq5 | T265 | 0.5614 |
| Seq6 | ORB-SLAM2 | 0.5616 |
| Seq6 | T265 | 0.6262 |

We analyse Sequences 5 and 6 more thoroughly in Figure 4, where several statistical parameters are compared, namely the RMSE, the mean, the median, the standard deviation (std), the maximum value (max) and the minimum value (min). We note that, for the estimation of these parameters, 1065 poses were used for ORB-SLAM2 against 4093 poses for the T265 for Sequence 5 (Figure 4a). For Sequence 6, 817 aligned poses for ORB-SLAM2 against 4754 poses for the T265 were used (Figure 4b). Indeed, the VICON system used in these experiments runs at 100 Hz, the ORB-SLAM2 algorithm outputs estimates at around 20 Hz, and the T265 outputs estimates at a much higher frequency of around 200 Hz. This explains why the number of camera poses aligned with the VICON differs between the two systems despite the use of the same sequences. Thus, in order to better compare the results obtained from Sequences 5 and 6, we normalize the RMSE. Various methods of RMSE normalization have been reported in the literature, including but not limited to normalization by the mean, by the standard deviation (std) and by the difference between the maximum and minimum values, as follows:

$$\mathrm{NRMSE}_1 = \frac{\mathrm{RMSE}}{\mathrm{mean}} \tag{14}$$

$$\mathrm{NRMSE}_2 = \frac{\mathrm{RMSE}}{\mathrm{std}} \tag{15}$$

$$\mathrm{NRMSE}_3 = \frac{\mathrm{RMSE}}{\max - \min} \tag{16}$$
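The three normalizations can be computed from a list of per-pose errors as in the following minimal Python sketch. The function name `nrmse_variants`, the dictionary keys and the toy error values are illustrative assumptions; the population standard deviation is used here, which is also an assumption since the text does not specify which estimator was applied.

```python
import math
import statistics

def nrmse_variants(errors):
    """RMSE of a list of per-pose errors and its three normalized variants."""
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    mean = statistics.mean(errors)
    std = statistics.pstdev(errors)       # population standard deviation
    spread = max(errors) - min(errors)    # max - min
    return {
        "rmse": rmse,
        "nrmse_mean": rmse / mean,
        "nrmse_std": rmse / std,
        "nrmse_range": rmse / spread,
    }

# Toy per-pose errors in metres (hypothetical values).
result = nrmse_variants([0.1, 0.2, 0.3, 0.4])
for name, value in result.items():
    print(f"{name}: {value:.4f}")
```

Because these ratios are dimensionless and do not depend on the number of poses, they are convenient for comparing systems that output poses at different rates.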

The normalized RMSE values (NRMSE) according to

the stated normalization methods for the Sequences 5

and 6 are presented in Table 5 and Table 6.

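Table 5: Normalized RMSE for Sequence 5.

| Method | $\mathrm{NRMSE}_1$ | $\mathrm{NRMSE}_2$ | $\mathrm{NRMSE}_3$ |
|---|---|---|---|
| ORB-SLAM2 | 1.057 | 3.0777 | 0.5979 |
| T265 | 1.0660 | 2.8914 | 0.5832 |

Table 6: Normalized RMSE for Sequence 6.

| Method | $\mathrm{NRMSE}_1$ | $\mathrm{NRMSE}_2$ | $\mathrm{NRMSE}_3$ |
|---|---|---|---|
| ORB-SLAM2 | 1.0995 | 2.4056 | 0.2499 |
| T265 | 1.0619 | 2.9713 | 0.5112 |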

5.3 Discussion

From these results, it can be deduced that ORB-SLAM2 and the T265 tracking camera give competitive accuracy. However, the T265 provides pose estimates at a 10× higher frequency. It should be noted that we relied on a simple stereo system for ORB-SLAM2, as industrial environments may contain either indoor or outdoor sites, and the use of depth (RGB-D) cameras to reconstruct large outdoor environments is not feasible due to lighting conditions and low depth range. Despite the competitive accuracy, we consider the localization provided by the T265 more reliable for two main reasons. On the one hand, the localization provided by the T265 tracking camera is at a much higher frequency (200 Hz vs an average of 17 Hz for ORB-SLAM2). On the other hand, the statistical parameters in Figure 4 show higher maximum error values with ORB-SLAM2, and mean and median errors that are closer to each other for the T265 than for ORB-SLAM2, which better fits a Gaussian error distribution and indicates more robustness against outliers. However, the T265 camera is dedicated solely to the localization task, whereas using the stereo output of the D435 with ORB-SLAM2 allows person localization as well as other functionalities such as mapping and localizing objects using the depth information.

Figure 4: Benchmark evaluation over two sequences using different parameters. (a) Sequence 5. (b) Sequence 6.

6 CONCLUSION

In this paper, we proposed a benchmarking of the RealSense T265 tracking camera for person localization in an industrial environment. The presented work is based on a comparative study between the T265 camera and ORB-SLAM2, known to be the most complete up-to-date visual SLAM method as it includes the majority of state-of-the-art techniques. The study consisted of an experimental evaluation comparing the localization performance of both systems against the very accurate VICON motion capture system used as ground truth. The estimated and ground-truth trajectories were first time-synchronized and then compared using metrics from the literature, namely the Absolute Trajectory Error and the Relative Pose Error, as well as the Euclidean distance Error (EE) used to evaluate the error on the ground plane. The experimental evaluation showed that both vision-based localization systems provide competitive accuracy, but that the localization provided by the Intel RealSense T265 is more reliable. Furthermore, the Intel RealSense Tracking Camera T265 complements Intel's RealSense D400 series cameras, and the data from both devices can be coupled for advanced applications such as occupancy mapping, advanced 3D scanning and improved navigation and crash avoidance in indoor environments.

ACKNOWLEDGEMENTS

This work was carried out as part of the COPTER

research project, and is co-funded by the European

Union and the Region Normandie. Europe is involved

in Normandy with the European Regional Develop-

ment Fund (ERDF).

REFERENCES

Chang, C., Zhu, H., Li, M., and You, S. (2018). A review of visual-inertial simultaneous localization and mapping from filtering-based and optimization-based perspectives. Robotics, 7:45.

Davison, A. J., Reid, I. D., Molton, N. D., and Stasse, O. (2007). MonoSLAM: Real-Time Single Camera SLAM. IEEE TPAMI, 29(6):1052–1067.

Furrer, F., Fehr, M., Novkovic, T., Sommer, H., Gilitschenski, I., and Siegwart, R. (2017). Evaluation of Combined Time-Offset Estimation and Hand-Eye Calibration on Robotic Datasets. Springer International Publishing.

Halmetschlager-Funek, G., Suchi, M., Kampel, M., and Vincze, M. (2019). An empirical evaluation of ten depth cameras: Bias, precision, lateral noise, different lighting conditions and materials, and multiple sensor setups in indoor environments. IEEE Robotics Automation Magazine, 26(1):67–77.

Intel (2017). Intel® RealSense™ Depth Camera D400-Series. 0.7 edition.

Intel (2019). Intel® RealSense™ Tracking Camera T265 datasheet. 001 edition.

Klein, G. and Murray, D. W. (2009). Parallel tracking and mapping on a camera phone. pages 83–86.

Merriaux, P., Dupuis, Y., Boutteau, R., Vasseur, P., and Savatier, X. (2017). A study of vicon system positioning performance. Sensors, 17:1591.

Montemerlo, M., Thrun, S., Koller, D., and Wegbreit, B. (2002). Fastslam: A factored solution to the simultaneous localization and mapping problem. In Proceedings of the AAAI National Conference on Artificial Intelligence, pages 593–598. AAAI.

Mur-Artal, R., Montiel, J. M. M., and Tardós, J. D. (2015). Orb-slam: a versatile and accurate monocular slam system. CoRR, abs/1502.00956.

Mur-Artal, R. and Tardos, J. (2016). Orb-slam2: an open-source slam system for monocular, stereo and rgb-d cameras. IEEE Transactions on Robotics, PP.

Sturm, J., Engelhard, N., Endres, F., Burgard, W., and Cremers, D. (2012). A benchmark for the evaluation of rgb-d slam systems. pages 573–580.

Weng Kuan, Y., Oon Ee, N., and Sze Wei, L. (2019). Comparative study of intel r200, kinect v2, and primesense rgb-d sensors performance outdoors. IEEE Sensors Journal, PP:1–1.

Yao, H., Ge, C., Xue, J., and Zheng, N. (2017). A high spatial resolution depth sensing method based on binocular structured light. Sensors (Switzerland), 17.

Zollhöfer, M., Stotko, P., Görlitz, A., Theobalt, C., Nießner, M., Klein, R., and Kolb, A. (2018). State of the art on 3d reconstruction with rgb-d cameras. Computer Graphics Forum, 37:625–652.
