Dynamic Detectors of Oriented Spatial Contrast from Isotropic Fixational Eye Movements

Simone Testa¹, Giacomo Indiveri²ᵃ and Silvio P. Sabatini¹ᵇ

¹ Department of Informatics, Bioengineering, Robotics and Systems Engineering, University of Genoa, Genoa, Italy

² Institute of Neuroinformatics, University of Zürich and ETH Zürich, Zürich, Switzerland

Keywords: Active Vision, Fixational Eye Movements, Event-based Sensor, Neuromorphic Computing, Receptive Fields, Spiking Neural Networks.

Abstract:

Proficient vision and a complex repertoire of eye movements frequently coexist. Even during fixation, our eyes keep moving in a microscopic and erratic fashion, which prevents retinal adaptation and thus keeps stationary scenes from fading perceptually. We artificially replicate this functionality of biological vision by exploiting the same active strategy with an event-based camera. The resulting neuromorphic active system redistributes the low temporal frequency power of a static image into a range the sensor can detect and encode in the timing of events. A spectral analysis of its output attested both the whitening and the amplification effects already postulated in biology, depending on whether or not the stimulus contrast matched the 1/k falloff typical of natural images. Further evaluations revealed that the isotropic statistics of fixational eye movements are crucial for equalizing the response of the system to all possible stimulus orientations. Finally, the design of a biologically realistic spiking neural network allowed the detection of the local orientation of the stimulus by anisotropic spatial summation of synchronous activity with both ON/OFF polarities.

1 INTRODUCTION

Visual perception is a fundamentally active process. Humans and many other mammals are endowed with a specific and complex set of eye movements through which they incessantly scan the environment (Land, 2019). This active strategy has long been known to overcome the loss of vision during fixation of static objects (Ditchburn and Ginsborg, 1952) thanks to a peculiar ensemble of oculomotor mechanisms known as Fixational Eye Movements (FEMs) (Martinez-Conde and Macknik, 2008). In particular, while the desensitization of retinal ganglion cells to unchanging stimuli would lead to perceptual fading of stationary objects during retinal stabilization, FEMs refresh neural responses by inducing temporal transients (Riggs and Ratliff, 1952). The fact that visual systems depend so strongly on temporal changes suggests that the still-camera model of the eye and the idea of purely spatial coding are at best incomplete. Indeed, in order to extract and code spatial information, a combination of spatial sampling and temporal processing is required (Rucci et al., 2018). The performance gap between artificial and biological visual systems could therefore depend on substantial differences in how information is acquired and encoded. Biological evidence (Gollisch and Meister, 2008) indicates that retinal ganglion cell outputs are massively parallel, data-driven (asynchronous) and have high temporal resolution. Here, a temporal encoding scheme is adopted, where information is carried in the timing of activation, as opposed to purely spatial encoding schemes, which are based solely on the identity of the activated receptors.

a https://orcid.org/0000-0002-7109-1689

b https://orcid.org/0000-0002-0557-7306

Similar to a biological retina, a neuromorphic camera, such as the Dynamic Vision Sensor (DVS), responds only to temporal transients in the visual scene, converting them into a stream of asynchronous events based uniquely on time variations of luminance contrast (Lichtsteiner et al., 2008). In addition to the position and the timing of the brightness change, each event carries information about its polarity, i.e. ON or OFF events, which represent dark-to-light or light-to-dark transitions, respectively. By interfacing these sensors to mixed-signal analog-digital neuromorphic electronic processors, such as the DYnamic Neuromorphic Asynchronous Processor (DYNAP) (Moradi et al., 2017), real-time and energy-efficient vision processing systems can be implemented. However, despite the outstanding capabilities of neuromorphic sensors with respect to classical frame-based cameras, their intrinsic blindness to stationary images limits their application in several real-world scenarios, as a continuous relative motion between the scene and the sensor is required. A commonly adopted workaround is to use moving stimuli displayed on a monitor, which however introduces recording artifacts due to video refreshing (Orchard et al., 2015). By emulating the FEMs of biological vision systems instead, we are able to extract information from otherwise undetectable static stimuli and simultaneously avoid such artifacts.

Testa, S., Indiveri, G. and Sabatini, S. Dynamic Detectors of Oriented Spatial Contrast from Isotropic Fixational Eye Movements. DOI: 10.5220/0009170606740681. In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 5: VISAPP, pages 674-681. ISBN: 978-989-758-402-2; ISSN: 2184-4321. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

In this paper, non-saccadic FEMs were modeled by a Brownian motion and physically induced on the sensor by using a pan-tilt unit (PTU). The resulting active approach proved to be effective for making event-based sensors responsive to static scenes, thus closely matching a strategy that biology has evolved for similar tasks. The spatial frequency characterization of our sensing system confirmed behaviors postulated in biological studies (Rucci and Victor, 2015). Further analysis revealed that the spatial statistical distribution of the small random movements (particularly their isotropy) equalizes sensor activity with respect to the orientation of visual stimuli, preserving the efficacy of subsequent feature extraction stages. Specifically, a Spiking Neural Network (SNN) was designed to discriminate stimulus orientation at a local scale. The detection mechanism exploits highly synchronized events of both polarities, emitted by jittery pixels aligned with oriented edges and collected by means of a push-pull configuration of anisotropic spatial kernels that resemble the receptive fields of simple cells in the primary visual cortex.

The rest of the paper is organized as follows. Section 2 introduces both the set-up considered for FEM reproduction on the DVS and the experiments conducted, and describes how information is encoded by the system. Section 3 details the spectral analysis of the overall behavior of the active sensing system and the influence of isotropic random-like motion on the response to differently oriented stimuli. Section 4 presents the SNN for the detection of local orientation and, finally, Section 5 draws the conclusions.

2 MATERIALS AND METHODS

2.1 Active Vision System and Stimuli

Inducing bio-inspired fixational eye movements on a sensor requires, first of all, a mathematical model of such motion based on its characteristics in natural viewing. To this aim, we used a Brownian motion model to approximate some peculiar components of FEMs (Kuang et al., 2012). Four different Brownian motion paths, one per random seed (with a number of steps varying between 30 and 50), were generated through Python simulations. The resulting paths are meant to preserve the erratic aspect, mean frequency and size of biological FEMs, with the size adapted to the characteristics of neuromorphic sensors.

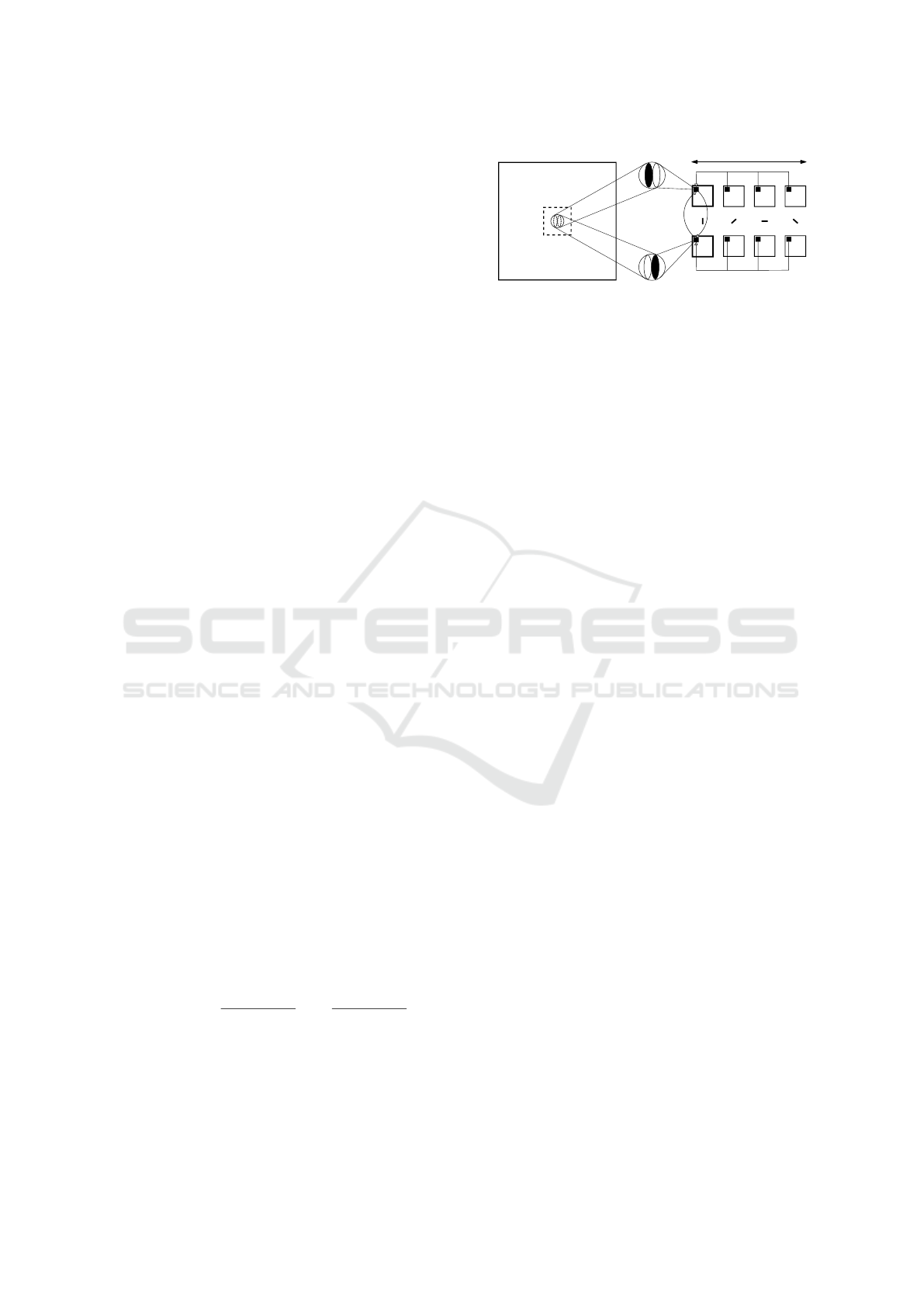

The overall experimental set-up is shown in Fig. 1a. It is mainly composed of neuromorphic sensing hardware and a remotely controlled motorized unit for the generation of precise pan and tilt rotations of the camera. Specifically, we used a DVS128 neuromorphic sensor (having a 128 × 128 pixel array) with a C-mount TV lens F1.4-16 (6 mm focal length) and a PTU-D46-17 from Directed Perception Inc. (chosen for its resolution, as fine as 3.1 arcmin). In order to obtain reproducible results, all acquisitions were conducted under controlled lighting conditions in a dedicated, dimly lit room. Stimuli were generated using PsychoPy and displayed on a 19-inch Philips LCD monitor with a resolution of 1440 × 900 pixels and a refresh rate of 60 Hz. The distance between sensor and screen was kept at 50 cm for all the experiments. The event-based data, provided by the sensor in the Address-Event Representation (AER) protocol, were visualized on a computer screen and saved to disk for off-line processing.

Figure 1: Set-up and consequence of fixational movements. (a) The experimental set-up: the DVS-PTU system is placed in front of a monitor for active scanning of artificial visual stimuli. Driving commands (in ASCII code) are sent from a host computer's serial port to the PTU controller for generation of pan and tilt rotations. Conversely, data coming from the sensor, in AER protocol, are sent to the computer via USB interface. (b) Example of a natural visual scene and the related ON/OFF events generated by a single pixel of the DVS during fixational eye movements (green path). The inset shows the results of the isotropy analysis of the movement (green polar histogram) with respect to a perfectly isotropic motion (black circle, representing the reference probability value of ∼ 8.3%, as 12 directions are considered). Red boxes represent the image portions falling in the FOV of the pixel at three different times.

The same custom Python script that simulates the Brownian motion model converts the whole FEM-like path into a set of commands encoded in ASCII format. These commands were sent to the servo-motors of the PTU through a terminal emulator, which serves as the interface to the controller, in order to drive the position of the PTU over time according to the simulated path. As the field of view (FOV) of a single DVS pixel (∼ 22.9 arcmin, leading to a maximum discernible spatial frequency of ∼ 1.3 cyc/deg) differs strongly from that of human photoreceptors (∼ 0.6 arcmin in the fovea), typical sizes of natural FEMs have been scaled up accordingly. Thus, the maximum step amplitude for the artificial FEMs induced on the DVS is ∼ 190 arcmin (corresponding to 5 arcmin of biological motion), while the minimum is ∼ 3.1 arcmin (corresponding to 5 arcsec of biological motion). Lastly, the speed of both rotations was also controlled, in order to keep the whole motion at a mean temporal frequency between 40 and 50 Hz.
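The pixel field of view, the resolution limit and the scaled FEM amplitudes quoted above can be cross-checked with a few lines of arithmetic. The sketch below assumes a pixel pitch of 40 µm for the DVS128, a value that is not stated in this text and should be checked against the sensor documentation; all variable names are illustrative.

```python
import math

# Assumption: 40 um pixel pitch for the DVS128 (not stated in the text).
PIXEL_PITCH_MM = 0.040
FOCAL_LENGTH_MM = 6.0      # lens focal length given in the text
CONE_FOV_ARCMIN = 0.6      # human foveal photoreceptor FOV given in the text

# Angle subtended by a single pixel, in arcmin.
pixel_fov_arcmin = math.degrees(math.atan(PIXEL_PITCH_MM / FOCAL_LENGTH_MM)) * 60.0

# Sampling limit: one grating cycle needs at least two pixels.
max_spatial_freq_cpd = 1.0 / (2.0 * pixel_fov_arcmin / 60.0)

# Factor used to scale biological FEM amplitudes up to the sensor.
scale = pixel_fov_arcmin / CONE_FOV_ARCMIN
max_step_arcmin = 5.0 * scale            # 5 arcmin of biological motion
min_step_arcmin = (5.0 / 60.0) * scale   # 5 arcsec of biological motion

print(f"pixel FOV: {pixel_fov_arcmin:.1f} arcmin")
print(f"max spatial frequency: {max_spatial_freq_cpd:.2f} cyc/deg")
print(f"step range: {min_step_arcmin:.1f} to {max_step_arcmin:.0f} arcmin")
```

Under the stated assumption, the computed values fall close to the ones reported in the text, which suggests that the scaling was a simple ratio between the two receptor fields of view.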

By varying the seed of the random process, different FEMs were induced on the sensor while it was exposed to various stimuli, and the corresponding data streams were recorded. Visual experiments were conducted with artificial grating stimuli of varying contrast, spatial frequency and orientation. A first set of stimuli was composed of 180 gratings with unitary contrast, spatial frequency k evenly spaced between 0.2 and 1.6 cyc/deg (15 discrete values), and orientation θ evenly spaced between 0 and 165 deg (12 discrete values, with a 15 deg step). An additional set of 180 gratings was considered, having the same values of k and θ but a contrast adjusted for every tested spatial frequency according to the 1/k falloff of the amplitude spectrum of natural images (Field, 1987).
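The two stimulus sets can be enumerated as a parameter grid. The following is a minimal sketch, not the PsychoPy script used for the experiments; in particular, normalizing the 1/k contrast so that the lowest frequency has unit contrast is an assumption, since the text does not give the scaling constant.

```python
from itertools import product

import numpy as np

# 15 spatial frequencies (cyc/deg) and 12 orientations (deg), as in the text.
spatial_freqs = np.linspace(0.2, 1.6, 15)
orientations = np.arange(0, 180, 15)

# First set: unitary contrast for every grating.
unit_set = [(k, theta, 1.0) for k, theta in product(spatial_freqs, orientations)]

# Second set: contrast following a 1/k falloff.
# Assumption: normalized so that the lowest frequency keeps contrast 1.
natural_set = [
    (k, theta, spatial_freqs[0] / k)
    for k, theta in product(spatial_freqs, orientations)
]

print(len(unit_set), len(natural_set))
```

Each tuple holds (spatial frequency, orientation, contrast) and could be passed to whatever routine draws the grating.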

2.2 Spatiotemporal Coding

Figure 1b shows an example of how static spatial in-

formation is temporally encoded in the activity of the

neuromorphic sensor as a result of the induced fix-

ational movements. As a matter of fact, simulated

FEMs ensure that the spatial structure of a static im-

age is encoded both in space and time, as both the po-

sition and the timing of an activated pixel is informa-

tive. Specifically, camera movements transform a sta-

tionary spatial scene into a spatiotemporal luminance

flow, thus redistributing its power in the (non-zero)

temporal frequency domain which is capable of acti-

vating sensors pixels. Spatial luminance discontinu-

ities are now converted into synchronous firing activ-

ity due to the combined effect of microscopic camera

movements and the event-based (i.e., non-redundant)

acquisition process in DVS pixels (see also Section 4).

It is worth noting that a similar role of natural FEMs

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications

676

Space

Visual Scene

Time Response Spectral Amplification

Spike Count

(a)

Space

Visual Scene

Time Response Spectral Whitening

Spike Count

(b)

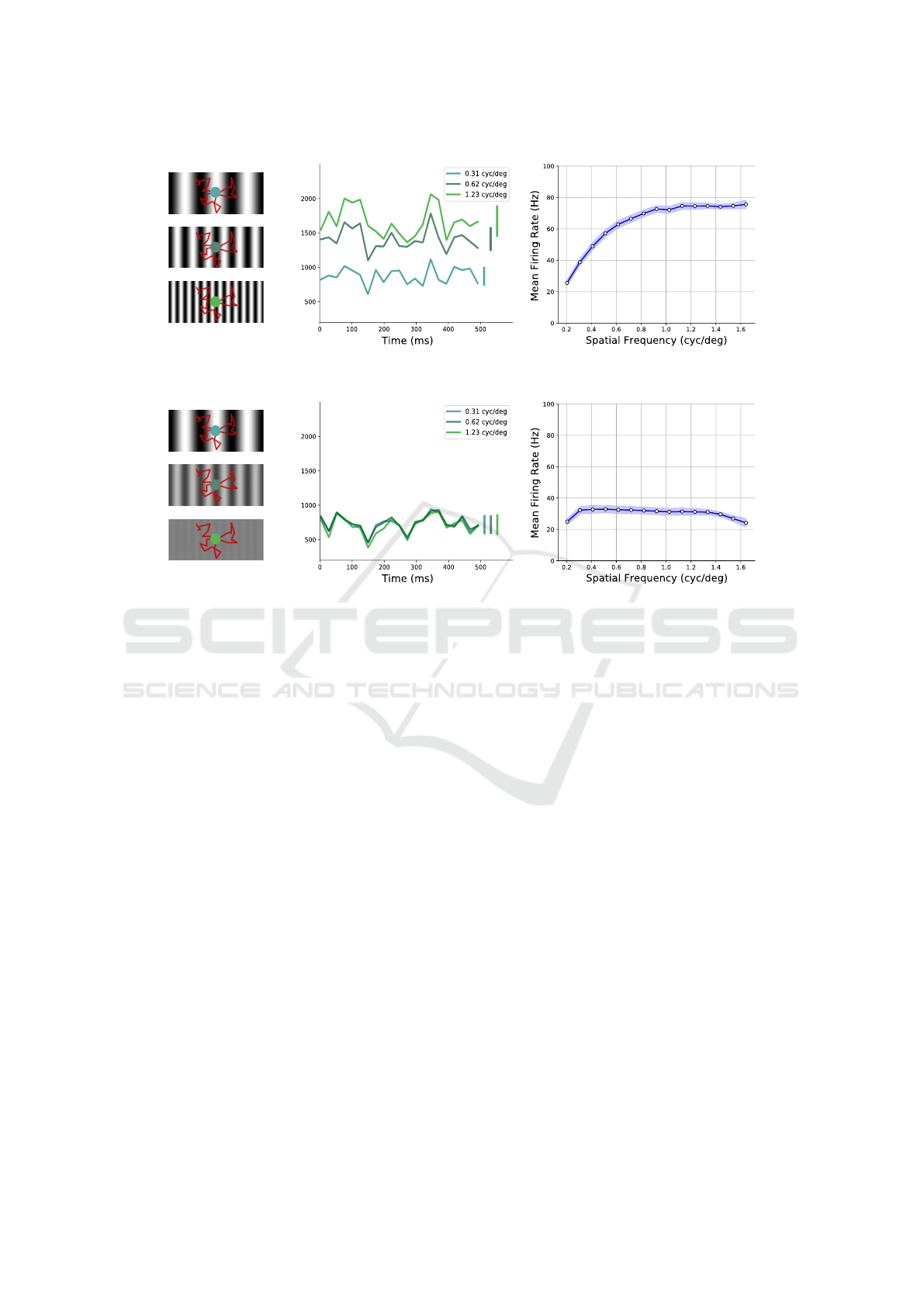

Figure 2: Sensors spectral characterization, for comparison with the results in (Rucci and Victor, 2015). (a) On the left,

examples of unit-valued contrast gratings with 3 different spatial frequencies. FEM-like movements, induced on the sensor,

are superimposed (red path). The central plot presents the resulting activity fluctuations exhibited by the 30 × 30 central pixels

of the DVS during 500 ms of recording: response is measured in number of events occurring in time windows of ∼ 24 ms.

On the right, the amplification of the system to high-frequency grating stimuli with unitary contrast; standard deviation

for different orientations of the gratings is represented by light-blue shaded regions. (b) On the left, gratings having same

frequencies as in (a) but with contrast adjusted according to the 1/k falloff of natural images. Resulting activity fluctuations

of the neuromorphic active system are shown in the central plot, and spectral whitening effect to all frequencies on the right

(shaded regions as in (a), right plot).

in biological early visual processing has been pos-

tulated both in (Greschner et al., 2002) and (Kuang

et al., 2012), for initiating edge extraction and provid-

ing redundancy reduction of spatial information to-

wards an economical representation of the image sig-

nal. Furthermore, phase shifts of activation in nearby

pixels, that are subject to the same movement, should

reflect spatial variations of luminance discontinuities

impinging on nearby receptors, as proposed in the

dynamic theory of vision presented in (Ahissar and

Arieli, 2001).
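The response measure used in Figure 2 (event counts of the 30 × 30 central pixels in windows of about 24 ms) can be sketched as a simple histogram over event timestamps. The function below is illustrative only: the array layout of the events and the zero-based pixel indexing are assumptions, not the format of the recorded AER files.

```python
import numpy as np

def fovea_event_counts(x, y, t_ms, window_ms=24.0, duration_ms=500.0,
                       array_size=128, fovea_size=30):
    """Count events from the central fovea_size x fovea_size pixels per time window.

    x, y  : pixel coordinates of each event (assumed zero-based)
    t_ms  : event timestamps in milliseconds from the start of the recording
    """
    x, y, t_ms = np.asarray(x), np.asarray(y), np.asarray(t_ms, dtype=float)
    lo = (array_size - fovea_size) // 2        # first central pixel (49 for 128/30)
    hi = lo + fovea_size                       # one past the last central pixel
    in_fovea = (x >= lo) & (x < hi) & (y >= lo) & (y < hi)
    # Window edges cover the whole recording; the last window may extend past it.
    edges = np.arange(0.0, duration_ms + window_ms, window_ms)
    counts, _ = np.histogram(t_ms[in_fovea], bins=edges)
    return counts
```

Events of both polarities are pooled here; separating ON and OFF counts would only require an extra boolean mask on the polarity field.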

It is worth noting that, as the DVS is sensitive only to temporal changes of luminance, anisotropic movements are undesirable: they disadvantage all stimuli whose orientation coincides with the directional bias of the FEMs. Hence, to better investigate the effects of the erratic motion on the perception of such variously oriented stimuli, an isotropy analysis was conducted. To this aim, the possible angles that each step of the Brownian motion could take were limited to multiples of 15 deg (as for the stimuli). Results of the analysis (further described in Section 3.2) for one particular realization of Brownian motion are shown in the polar plot in the inset of Fig. 1b. We anticipate that oriented luminance transitions are the feature that benefits from this property of FEM-like movements, which supplies the system with an effective method for unbiased perception of local elements in the image signal.
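A random walk with step directions restricted to multiples of 15 deg can be sketched as follows. This is not the authors' script: the step-length distribution (a Rayleigh draw clipped to the amplitude range quoted in Section 2.1) and its scale are assumptions made only to obtain a runnable example.

```python
import numpy as np

def fem_like_path(n_steps=35, min_step=3.1, max_step=190.0,
                  angle_step_deg=15, length_scale=40.0, seed=0):
    """Return (path, steps) of a 2D random walk, in arcmin.

    Directions are drawn uniformly among multiples of angle_step_deg.
    Assumption: step lengths are Rayleigh-distributed with an arbitrary
    scale, then clipped to [min_step, max_step].
    """
    rng = np.random.default_rng(seed)
    n_dirs = 360 // angle_step_deg
    angles = np.deg2rad(angle_step_deg * rng.integers(0, n_dirs, size=n_steps))
    lengths = np.clip(rng.rayleigh(scale=length_scale, size=n_steps),
                      min_step, max_step)
    steps = np.column_stack((lengths * np.cos(angles), lengths * np.sin(angles)))
    # Cumulative sum of the steps, starting from the origin (pan, tilt) = (0, 0).
    path = np.vstack(([0.0, 0.0], np.cumsum(steps, axis=0)))
    return path, steps

path, steps = fem_like_path()
```

Changing `seed` gives a different realization, which mirrors the use of several random seeds described in Section 2.1.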

3 SYSTEM RESPONSE CHARACTERIZATION

Taking inspiration from neuroscience studies (Rucci and Victor, 2015), we characterize the behavior of our active sensing system with respect to the spatial frequency of the stimulus, i.e. we examine the sensor response to individual spatial gratings under different contrast conditions (either constant or matching the statistics of natural images). The process of event generation in the DVS, the structure of the visual stimulus, and the specific camera movement adopted collectively affect this behavior at any moment. We therefore expect the activity elicited in each receptor of our artificial retina to comply with the statistical properties of natural images in the same way as in the biological one (Kuang et al., 2012). Furthermore, isotropic FEMs (i.e., those for which all directions are visited with equal probability at each step) should provide unbiased orientation information in the output signal. Accordingly, we analyzed the mean response of the sensor with respect to this visual feature by systematically using gratings of different orientations. It is worth noting that all the results shown in the following refer to one particular Brownian motion sequence, but equivalent results were obtained regardless of the random seed considered.

3.1 Spectral Analysis

If we compute the mean firing rate of the sensor's pixels for each tested spatial frequency k, averaging over all orientations, we obtain a measure of the overall spectral response of the system. For stimuli with maximum contrast, the mean pixel activity increases with spatial frequency (see Fig. 2a, rightmost panel): stimuli with higher frequencies elicit stronger responses in the system, and a plateau is reached approximately when k approaches the spatial resolution limit of the pixel array. Indeed, as k increases, each randomly moving receptor scans an increasing number of light-dark transitions and thus elicits an increasing number of events. Remarkably, when the contrast of the gratings is adjusted according to the 1/k falloff of the amplitude spectrum of natural images (Field, 1987), the frequency response obtained with the same drift trajectory has a roughly constant profile over the whole range of discernible spatial frequencies (see Fig. 2b, rightmost panel). Hence, the active system intrinsically opposes the 1/k trend of the natural image spectrum, counterbalancing it and yielding a whitened response.
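The averaging step and the counterbalancing argument can be illustrated with a toy model. The sketch below assumes, purely for illustration, that the mean firing rate grows in proportion to the product of spatial frequency and contrast; this proportionality is a hypothetical simplification (it ignores the plateau near the resolution limit) and is not a model fitted to the recorded data.

```python
import numpy as np

spatial_freqs = np.linspace(0.2, 1.6, 15)   # cyc/deg, as in the stimulus sets
orientations = np.arange(0, 180, 15)        # deg

def toy_rates(contrast):
    """Hypothetical rates of shape (n_freqs, n_orientations): rate ~ k * contrast."""
    return (spatial_freqs * contrast)[:, None] * np.ones(len(orientations))

def spectral_response(rates):
    """Mean and standard deviation across orientations, for each spatial frequency."""
    return rates.mean(axis=1), rates.std(axis=1)

# Unit contrast: the toy response grows with k (amplification).
unit_mean, unit_std = spectral_response(toy_rates(np.ones_like(spatial_freqs)))

# 1/k contrast (normalized to 1 at the lowest frequency): the growth is cancelled.
natural_mean, natural_std = spectral_response(toy_rates(spatial_freqs[0] / spatial_freqs))
```

With measured data, `rates` would simply be the table of mean pixel firing rates for the 15 × 12 gratings, and the same `spectral_response` call would produce the curve and the shaded band of the rightmost panels in Figure 2.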

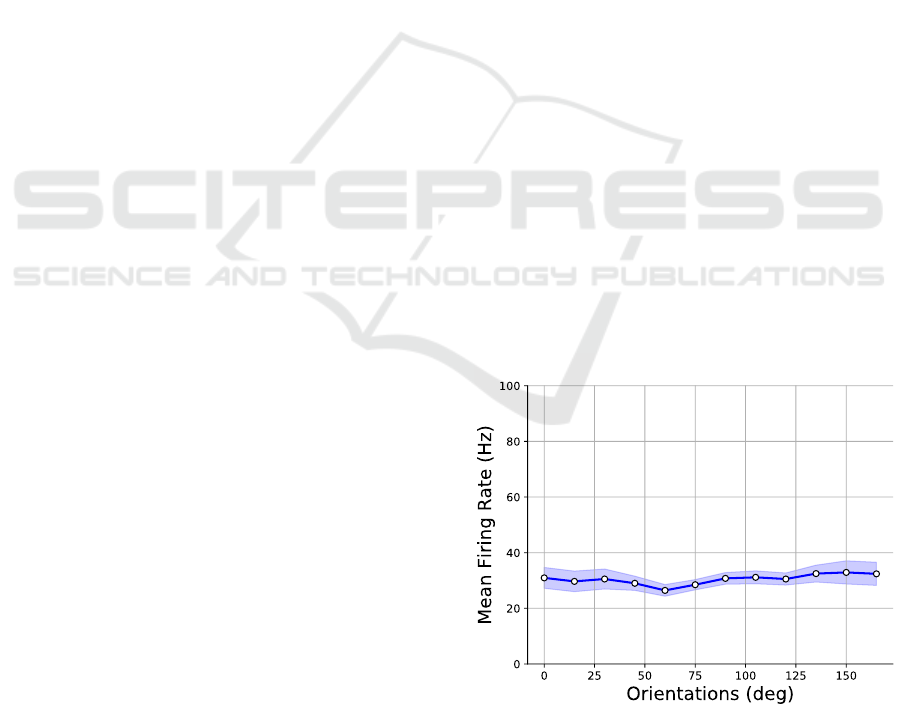

3.2 Orientation Analysis

First, we computed the circular statistics of the Euclidean distances travelled in each of the 12 directions considered for the stimuli, relative to the whole path length. The aim was to quantify the isotropy of the FEM-like movements of our active system, in relation to the effect that each step of the movement has on the perception of a specific stimulus orientation (this is why the isotropy was computed in the 0 − π range rather than on the full circle). Results for a FEM-like motion sequence of 35 steps (e.g., see the inset of Fig. 1b, where the 12 probability values were remapped onto a full circle for a more intuitive graphical representation) indicate that the maximum deviation from circularity is 3%, a good approximation of isotropic behavior. It is worth noting that the longer the duration of active fixation, the better the isotropy achieved. Indeed, considering 80 Brownian motion sequences with an increasing number of steps (up to 400), the maximum discrepancy from isotropy decreases with this number (reaching a minimum of ∼ 1%), in line with the fact that vision improves for longer fixational periods. By analogy with the previous analysis, we then computed the mean firing rate of the sensor's pixels as a function of the stimulus orientation θ, averaging across spatial frequencies. As the sensor moves in an isotropic fashion, we expect the mean response of the system not to change across differently oriented gratings, as evidenced in Fig. 3, which refers to 1/k falloff contrast gratings. Similar behavior was obtained for constant contrast gratings as well (not shown). This equalized activity, in the form of spike sequences from each DVS pixel, provides a convenient input to a subsequent stage for unbiased detection of orientation. Thus, we designed and tested a bio-inspired spiking neural network for the detection of the orientation of static objects on a local scale during active fixation.

Figure 3: Average sensor response (mean pixel firing rate) to differently oriented gratings (blue curve). The light-blue shaded region represents the standard deviation of the pixel firing rate across spatial frequencies.
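The isotropy measure described in this section (distance travelled per direction, folded onto the 0 − π range and normalized by the whole path length) can be sketched as below. Measuring the deviation as the largest absolute difference from the uniform value 1/12 is an assumption; the text does not specify how the deviation from circularity was computed.

```python
import numpy as np

def orientation_fractions(steps, n_bins=12):
    """Fraction of the path length travelled along each of n_bins axes in [0, 180) deg.

    steps : array of shape (n_steps, 2) holding the (x, y) displacement of each step
    """
    steps = np.asarray(steps, dtype=float)
    lengths = np.hypot(steps[:, 0], steps[:, 1])
    # Fold directions onto [0, 180): opposite directions share the same axis.
    axes_deg = np.rad2deg(np.arctan2(steps[:, 1], steps[:, 0])) % 180.0
    bins = np.round(axes_deg / (180.0 / n_bins)).astype(int) % n_bins
    return np.bincount(bins, weights=lengths, minlength=n_bins) / lengths.sum()

def max_deviation(fractions):
    """Largest absolute difference from a perfectly isotropic distribution."""
    return np.max(np.abs(fractions - 1.0 / len(fractions)))

# Sanity check: one unit step in each of the 24 directions is perfectly isotropic.
angles = np.deg2rad(np.arange(0, 360, 15))
uniform_steps = np.column_stack((np.cos(angles), np.sin(angles)))
uniform_fractions = orientation_fractions(uniform_steps)
```

For a recorded or simulated path stored as an array of positions, the `steps` argument would be `np.diff(path, axis=0)`.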


4 ORIENTATION DETECTION

Orientation detectors consist of spiking neurons organized in a specific network architecture and fed with the event-based data provided by the neuromorphic camera. A spiking neural network is a biologically realistic computational model in which neurons and synapses are described by differential equations that represent the dynamics of membrane and synaptic currents (or voltages), respectively (Maass, 1997). SNNs are therefore well suited to exploiting both the spatial and the temporal information of events from a jittery sensor. Besides, they can be implemented on neuromorphic electronic processors (namely, the DYNAP) for bio-inspired, highly efficient and real-time computing systems. To this purpose, we had to take hardware limitations into account in both the design and the simulation phases. Accordingly, the neuron model used for software simulations of the network is the Differential Pair Integrator (DPI) (Indiveri et al., 2011), a variant of the generalized integrate-and-fire model, which is implemented by the silicon neurons in the DYNAP. Likewise, the DPI model of synapses (Bartolozzi and Indiveri, 2007) was considered.

4.1 Network Architecture

The custom-designed detectors aim to mimic the functionality of simple cells in the primary visual cortex (V1). At any given spatial scale, detection is achievable by means of a 2D spatial kernel, i.e. a receptive field (RF) profile that defines the (feedforward) synaptic weights between the DVS pixels and a single V1-like spiking neuron. The selectivity of a neuron to edges of a specific orientation is determined by the direction of elongation of its kernel (θ), i.e. by the anisotropic spatial summation of the events provided by the sensor. In particular, we used a difference of 2D offset elongated Gaussians that model two sub-fields organized in a push-pull configuration (i.e. adjacent excitatory and inhibitory regions) for antagonistic effects of ON and OFF events, as supported by experimental evidence, e.g., see (Hirsch and Martinez, 2006). We specifically used RF sub-fields with an approximate size of 10 × 3 pixels and orientations (θ) evenly spaced between 0 and 165 deg (as for the stimuli). The kernel was defined as:

$$h(x_\theta, y_\theta) = e^{-\frac{(x_\theta+\sigma)^2 + (y_\theta/p)^2}{2\sigma^2}} - e^{-\frac{(x_\theta-\sigma)^2 + (y_\theta/p)^2}{2\sigma^2}} \qquad (1)$$

where $x_\theta, y_\theta$ define a reference frame rotated with respect to $x, y$, σ represents the standard deviation (in number of pixels) along $x_\theta$, and p > 1 defines the elongation of the RF along $y_\theta$. The specific values of the parameters are σ = 1.08 and p = 4. It is worth noting that, in order to restrict the number of neurons in the network to a size that fits on the DYNAP board, only the central 30 × 30 pixel region of the DVS was considered as input to the network, which is comparable to the biological fovea. Furthermore, in order to satisfy the constraints imposed by the DYNAP on the maximum number of connections available for each neuron, synaptic weights with absolute values lower than 0.2 were set to 0, thus limiting the actual size of the kernel.

Figure 4: Schematic representation of the network organization. The 30 × 30 central region (fovea) of the whole 128 × 128 DVS pixel array provides input to the SNN. Each neuron of a 9 × 9 orientation column receives excitatory synaptic connections from the fovea through specific anisotropic spatial kernels acting as receptive fields (RFs). These RFs are characterized by two adjacent sub-regions selective to ON and OFF events respectively, and this assignment is interchanged for neurons in the two hypercolumns. The push-pull configuration is achieved via reciprocal inhibitory connections between corresponding neurons in the hypercolumns. Within a given hypercolumn, recurrent inhibition between different orientation columns is also considered.
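Equation (1) and the weight cut-off can be sketched as follows. The rotation convention (how θ maps to image axes) and the size of the sampling grid are assumptions made for this example; only σ = 1.08, p = 4 and the 0.2 cut-off come from the text.

```python
import numpy as np

def oriented_kernel(theta_deg, sigma=1.08, p=4.0, half_size=7, cutoff=0.2):
    """Difference of two offset elongated Gaussians, as in Eq. (1).

    Assumptions: the kernel is sampled on a (2*half_size+1)^2 pixel grid and
    the rotated frame is x_t = x cos(theta) + y sin(theta),
    y_t = -x sin(theta) + y cos(theta).
    """
    theta = np.deg2rad(theta_deg)
    coords = np.arange(-half_size, half_size + 1)
    x, y = np.meshgrid(coords, coords)          # x varies along columns
    x_t = x * np.cos(theta) + y * np.sin(theta)
    y_t = -x * np.sin(theta) + y * np.cos(theta)
    lobe_a = np.exp(-((x_t + sigma) ** 2 + (y_t / p) ** 2) / (2 * sigma ** 2))
    lobe_b = np.exp(-((x_t - sigma) ** 2 + (y_t / p) ** 2) / (2 * sigma ** 2))
    h = lobe_a - lobe_b
    h[np.abs(h) < cutoff] = 0.0                 # drop weak synaptic weights
    return h

# One kernel per orientation column (0, 15, ..., 165 deg).
kernels = {theta: oriented_kernel(theta) for theta in range(0, 180, 15)}
```

The two lobes have opposite signs, so positive weights can be read as connections from one event polarity and negative weights as connections from the other, which is one way to realize the two adjacent sub-fields.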

A schematic (non-exhaustive) overview of the network organization can be found in Fig. 4. The whole SNN consists of two neuronal populations, which we call "hypercolumns", each one comprising 12 "orientation columns" (for the sake of clarity, only 4 are shown for each hypercolumn in Fig. 4). A single column is a group of neurons having the same tuning selectivity to a specific orientation of the stimulus; the name relates to the columnar-like structures in which cortical orientation-selective cells are known to be organized in V1 (Hubel and Wiesel, 1974). The center of each RF was shifted by 3 pixels for nearby neurons belonging to the same column (i.e. their RFs share the same θ but have different centers on the receptor array). Thereby, each orientation column includes 81 neurons, i.e. 9×9, starting from position (3,3) of the DVS fovea and ending at (27,27). The set of all orientation columns within the same hypercolumn amounts to 972 neurons (81×12), which can then be contained in a single chip of the DYNAP board. Finally, the distinction between the two hypercolumns is given by the relative organization of their neuronal receptive fields. In particular, if one sub-region of an RF is selective to ON events, the adjacent sub-field is selective to OFF events only, and this selectivity is interchanged between neurons in the two hypercolumns. The push-pull configuration is then achieved by means of reciprocal inhibition between neurons sharing the same location in the two hypercolumns (and therefore sensitive to opposite contrast polarity). Furthermore, recurrent connectivity within both hypercolumns was considered, with the aim of optimizing discrimination by lateral inhibition (Blakemore et al., 1970) between neurons having different orientation selectivity but RFs centered at the same location of the pixel array. The weight of such connections was, in absolute value, 10 times higher than that used for feedforward synapses. Note that, in order to stabilize the network, an inhibitory neuron (not shown in Fig. 4), spiking according to a Poisson process (with an 80 Hz firing rate), was also connected to all other neurons.
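The neuron counts given above follow from the RF spacing and can be checked with a short calculation. The per-chip capacity used in the last line (1024 neurons) is an assumption that is not stated in this text and should be verified against the DYNAP documentation.

```python
# RF centres along one axis of the 30 x 30 fovea: 3, 6, ..., 27 (3-pixel shift).
centres = list(range(3, 28, 3))
neurons_per_column = len(centres) ** 2          # 9 x 9 grid of RF centres

n_orientations = 12                              # 0, 15, ..., 165 deg
neurons_per_hypercolumn = neurons_per_column * n_orientations
total_tuned_neurons = 2 * neurons_per_hypercolumn   # ON/OFF and OFF/ON hypercolumns

# Assumption: 1024 neurons available per chip (not stated in the text).
ASSUMED_NEURONS_PER_CHIP = 1024
fits_on_one_chip = neurons_per_hypercolumn <= ASSUMED_NEURONS_PER_CHIP

print(neurons_per_column, neurons_per_hypercolumn, total_tuned_neurons, fits_on_one_chip)
```

The additional Poisson inhibitory neuron is not included in these counts.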

4.2 Simulation Results

Python simulations of the SNN were performed on the event-based data corresponding to the second set of grating stimuli, with a spatial frequency of ∼ 0.5 cyc/deg and all possible orientations. It is worth noting that the movement of the sensor induces synchronized activity of the DVS receptors, as also postulated for biological FEMs (Greschner et al., 2002; Kuang et al., 2012), and the spatial structure of the static image is therefore temporally encoded in the relative activity of nearby pixels. By exploiting this attribute through the designed kernels and the timing properties of the neurons, we can achieve effective discrimination. At any given time, only the membrane potential of neurons that integrate synchronized events (i.e. from pixels that are aligned with the edge) will exceed the threshold for generating a spike, and thus an output response. The same behavior cannot be observed in neurons collecting events from pixels that are not aligned with the edge, as their activities are not synchronous. Figure 5 illustrates this mechanism.

Figure 5: (Top) A cartoon illustrating the spiking-dependent spatial summation of ON and OFF events. A simple visual scene is shown on the left, where 3 pixels aligned (blue) or not aligned (red) with the edge are displayed. The FEM-like path is superimposed (green). (Bottom) An example of the membrane potential of neurons taking inputs from the blue or red retinal neurons is also shown: only the blue pixels, thanks to their synchronized activity (for both ON/OFF polarities), are able to elicit a response in the target V1 model neuron, whose membrane potential crosses the threshold (TH).
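The coincidence-detection principle can be illustrated with a deliberately simplified neuron. The sketch below uses a plain leaky integrate-and-fire unit rather than the DPI model adopted in the paper, and all parameters (weight, time constant, threshold, input times) are arbitrary values chosen for the example.

```python
import numpy as np

def lif_spike_count(input_times_ms, weight=0.4, tau_ms=5.0, threshold=1.0,
                    dt_ms=0.1, t_end_ms=100.0):
    """Count output spikes of a leaky integrator driven by input events.

    Each input event instantly raises the membrane variable by `weight`;
    the variable decays with time constant tau_ms and is reset to 0 after a spike.
    """
    n = int(round(t_end_ms / dt_ms))
    drive = np.zeros(n)
    for t in input_times_ms:
        idx = int(round(t / dt_ms))
        if 0 <= idx < n:
            drive[idx] += weight
    decay = np.exp(-dt_ms / tau_ms)
    v, count = 0.0, 0
    for k in range(n):
        v = v * decay + drive[k]
        if v >= threshold:
            count += 1
            v = 0.0
    return count

# Three pixels aligned with an edge fire almost together; misaligned ones do not.
synchronous_inputs = [20.0, 20.2, 20.4]
asynchronous_inputs = [20.0, 45.0, 70.0]
sync_spikes = lif_spike_count(synchronous_inputs)
async_spikes = lif_spike_count(asynchronous_inputs)
```

With these illustrative parameters, three inputs are needed within a fraction of the time constant to reach the threshold, so the same number of events spread over time leaves the neuron silent.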

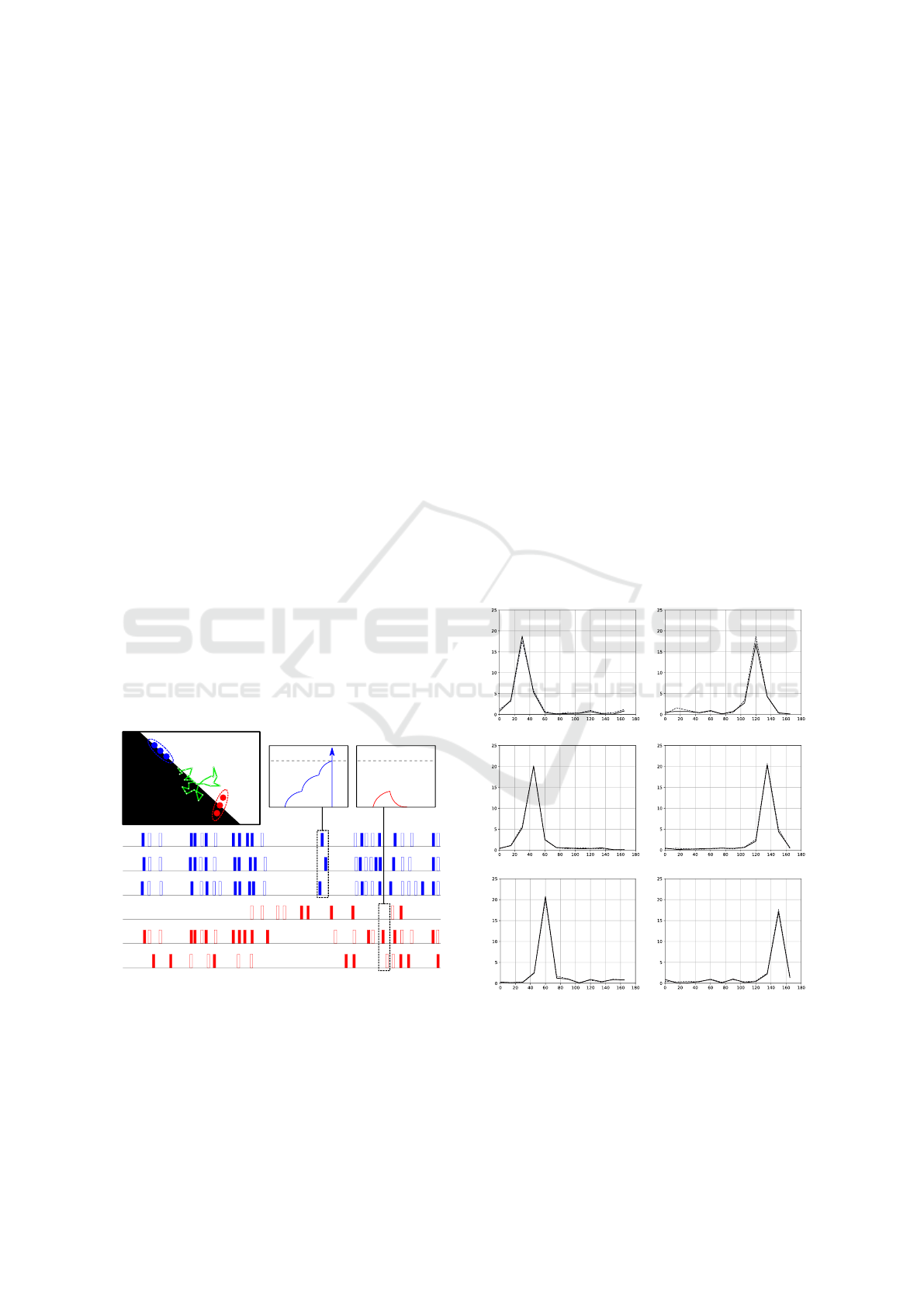

To present the detection results of our network, we computed the mean activity of all neurons within a single column when exposed to a set of differently oriented gratings. In this way, we derived the tuning curve of each neuronal group. Figure 6 shows, in 6 different plots, the simulation results for the same number of distinct orientation columns in both hypercolumns. As expected, each tuning curve peaks at the θ value of the corresponding RFs. Hence, we can infer that the designed SNN is capable of a reliable estimation of local orientations. Finally, it is worth noting that the trend is very similar for the two curves in all plots. This could be due to the fact that random-like movements of a receptor across an edge trigger almost the same number of ON and OFF events.

Figure 6: Resulting tuning curves (mean firing rate in Hz versus stimulus orientation in deg). They describe, for both hypercolumns (dashed and solid black curves), the firing activity with respect to all tested orientations of the stimuli for 6 different orientation columns (with tuning of 30, 45 and 60 deg from top to bottom on the left, and 120, 135 and 150 deg on the right).

5 CONCLUSIONS

Bio-inspired fixational eye movements can transform a static scene into a spatiotemporal luminance signal at the input of the event-based camera. As a consequence, the low temporal frequency power of a static scene is shifted into a range that the DVS can properly detect. Besides preventing "perceptual fading" of static scenes, we show that FEMs can play a central role in event-based vision by providing an efficient strategy for acquiring and processing information from natural images, both enhancing the perception of fine spatial details in the scene and facilitating the extraction of important features. In particular, due to camera motion, edges in the visual scene provoke highly time-correlated activity in nearby pixels. Due to the randomness of such motion, events of both polarities can be elicited over time in each pixel as a result of the same spatial luminance discontinuity. Therefore, synchronized events of both polarities eventually encode the spatial structure of the underlying static image. The push-pull configuration in which the network operates exploits the distinction between event polarities, inducing appropriate excitation or inhibition by ON and OFF events to improve detection performance. The whole artificial neural architecture proposed is fully bio-inspired, both at the single unit (neuron model) and at the network level, and is entirely conceived to satisfy the constraints imposed by ultra low-power mixed-signal analog-digital neuromorphic processors, in view of a future hardware implementation.

ACKNOWLEDGEMENTS

This project has received funding from the European

Research Council under the Grant Agreement No.

724295 (NeuroAgents).

REFERENCES

Ahissar, E. and Arieli, A. (2001). Figuring space by time.

Neuron, 32(2):185–201.

Bartolozzi, C. and Indiveri, G. (2007). Synaptic dynamics

in analog vlsi. Neural computation, 19:2581–2603.

Blakemore, C., Carpenter, R. H., and Georgeson, M. A.

(1970). Lateral inhibition between orientation detec-

tors in the human visual system. Nature, 228:37–39.

Ditchburn, R. and Ginsborg, B. (1952). Vision with a stabi-

lized retinal image. Nature, 170(4314):36.

Field, D. J. (1987). Relations between the statistics of natu-

ral images and the response properties of cortical cells.

Journal of the Opt. Soc. of America, 4:2379–2394.

Gollisch, T. and Meister, M. (2008). Rapid neural coding

in the retina with relative spike latencies. Science,

319(5866):1108–1111.

Greschner, M., Bongard, M., Rujan, P., and Ammermüller, J. (2002). Retinal ganglion cell synchronization by fixational eye movements improves feature estimation. Nature neuroscience, 5(4):341.

Hirsch, J. A. and Martinez, L. M. (2006). Circuits that

build visual cortical receptive fields. Trends in neu-

rosciences, 29(1):30–39.

Hubel, D. H. and Wiesel, T. N. (1974). Sequence regular-

ity and geometry of orientation columns in the mon-

key striate cortex. Journal of Comparative Neurology,

158(3):267–293.

Indiveri, G., Linares-Barranco, B., Hamilton, T. J., Van Schaik, A., Etienne-Cummings, R., Delbruck, T., Liu, S.-C., Dudek, P., Häfliger, P., Renaud, S., et al. (2011). Neuromorphic silicon neuron circuits. Frontiers in neuroscience, 5:73.

Kuang, X., Poletti, M., Victor, J. D., and Rucci, M. (2012).

Temporal encoding of spatial information during ac-

tive visual fixation. Current Biology, 22(6):510–514.

Land, M. (2019). Eye movements in man and other animals.

Vision research, 162:1–7.

Lichtsteiner, P., Posch, C., and Delbruck, T. (2008). A

128×128 120 dB 15µs latency asynchronous tempo-

ral contrast vision sensor. IEEE journal of solid-state

circuits, 43(2):566–576.

Maass, W. (1997). Networks of spiking neurons: the third

generation of neural network models. Neural net-

works, 10(9):1659–1671.

Martinez-Conde, S. and Macknik, S. L. (2008). Fixational

eye movements across vertebrates: comparative dy-

namics, physiology, and perception. Journal of Vision,

8:1–18.

Moradi, S., Qiao, N., Stefanini, F., and Indiveri, G. (2017).

A scalable multicore architecture with heterogeneous

memory structures for dynamic neuromorphic asyn-

chronous processors (DYNAPs). IEEE transactions

on biomedical circuits and systems, 12(1):106–122.

Orchard, G., Jayawant, A., Cohen, G. K., and Thakor, N.

(2015). Converting static image datasets to spiking

neuromorphic datasets using saccades. Frontiers in

neuroscience, 9:437.

Riggs, L. and Ratliff, F. (1952). The effects of counteracting

the normal movements of the eye. 42:872–873.

Rucci, M., Ahissar, E., and Burr, D. (2018). Temporal

coding of visual space. Trends in cognitive sciences,

22(10):883–895.

Rucci, M. and Victor, J. D. (2015). The unsteady eye: an

information-processing stage, not a bug. Trends in

neurosciences, 38(4):195–206.
