Quote Surfing in Music and Movies with an Emotional Flavor

Vasco Serra and Teresa Chambel

LASIGE, Faculdade de Ciências, Universidade de Lisboa, Portugal

Keywords: Songs, Lyrics, Movies, Quotes, Time, Memory, Emotions, Explore, Compare, Interactive Media Access, Synchronicity and Serendipity.

Abstract: We have all experienced hearing a quote from a movie or from song lyrics and, without giving it much thought, immediately knowing where it comes from, like an instant and often emotional memory. It is also very common to be in the opposite scenario, where we try hard to remember where we know these words from, want to discover their source, and find it interesting to see them quoted in different contexts. These situations remind us of the importance of quotes in movies and music, which sometimes become more popular than the movie or song they belong to. In fact, quotes, music and movies are among the contents we treasure most, for their emotional impact and their power to entertain and inspire us. In this paper, we present the motivation for, and the interactive support of, quotes in As Music Goes By, giving users the chance to search and surf quotes in music and movies. Users can find, explore and compare related contents, and access quotes in a contextualized way in the movies or song lyrics where they appear. The preliminary user evaluation results, focusing on perceived usefulness, usability and user experience, were very encouraging, supporting the concept and informing refinements and new developments that are already being addressed. Users valued the search, navigation, contextualization and emotional flavor most: being able to access and compare quotes in movies and in lyrics, to navigate across movies and songs, and the emotional dimension and its representation, also for quotes. Future work will take us further, focusing on rich, flexible and contextualized interactive access to quotes, music and movies, aiming for an increased understanding of their meaning and relations, chances for serendipitous discoveries, and opportunities to get inspired and moved by these media that we treasure.

1 INTRODUCTION

Quotes have been treasured and used for a long time, and they come from different sources and contexts. It is common for people to identify themselves with certain lyrics or quotes, which inspire them or remind them of personal experiences. People remember quotes and their origin when they induce strong emotions (Flintlock, 2017; Jenkins, 2014). Phrases that tend to become popular and quoted (Danescu-Niculescu-Mizil et al., 2012) often use less common words but common syntactic patterns, and work in many different scenarios, making them “portable” or quotable. The context in which a phrase is proffered can greatly influence its degree of memorability, making it relevant to provide users with the opportunity to access quotes in their original context, such as songs and movies.

People value music primarily because of the emotions it evokes (Juslin and Vastfjall, 2008), and music is often accompanied by lyrics that tell stories with overt emotional messages. Lyrics play a relevant role in conveying emotions in songs, tending to emphasize the negative ones (like sad and angry) and to detract from the more positive ones (like happy and calm), although melodies are often more dominant than lyrics in eliciting emotions (Ali and Peynircioğlu, 2006). Chou et al. (2010) demonstrated the relevance of song lyrics in music advertising, finding that previously heard old songs have positive effects by evoking good moods or favorable nostalgic thoughts, adding to the idea that songs can be very impactful, with the potential to alter the behavior of listeners (Dickens, 1998).

Movies are also very impactful (Oliveira et al., 2013). In fact, music and movies have a significant presence and impact in our lives, and they have been playing together since the early days of cinema, even in silent movies (almost always accompanied by live music on piano or by small orchestras), allowing them to convey a shared meaning, strongly through their lyrics and subtitles, which are often quoted and significant to many people. But this relation between music and movies has not been much explored in media access.

Serra, V. and Chambel, T.

Quote Surfing in Music and Movies with an Emotional Flavor.

DOI: 10.5220/0009177300750085

In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 2: HUCAPP, pages 75-85

ISBN: 978-989-758-402-2; ISSN: 2184-4321

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In our previous work, MovieClouds (Chambel et al., 2013) allowed users to access, explore and watch movies based on their content, mainly in audio and subtitles, with a focus on the emotions expressed in the subtitles, in the mood of the music, and felt by the users. Subtitles were processed and synchronized with movie watching, but for the songs we focused on mood based on the audio, not on lyrics. In As Music Goes By (Moreira and Chambel, 2019), the aim is to access and explore music in its versions (or covers), and the movies where it appears, over time, also with a focus on emotional impact. Now, as described in this paper, it is being enriched with support for quotes in both music and movies, allowing users to find, explore and compare relevant related contents, and to access, watch and listen to them in context, with the ability to inform and inspire us. We hypothesized that this support for quotes, their contextualized access in movies and lyrics, and their emotional dimension are relevant, and went on to research, design and test it.

This paper discusses the motivation, relevance and means for accessing quotes in the context of music and movies. It describes the background of related and previous work in section 2, and presents the support we are designing and developing for quotes in the As Music Goes By interactive web application in section 3. A preliminary user evaluation is presented next, followed by conclusions and future work.

2 BACKGROUND

Quotes have been treasured and used for a long time. With the advent of the web, sites have appeared to make them available, based on curators or user contributions. BrainyQuote (.com), e.g., relies on curators to select quotations and collections to inspire, motivate and entertain, with the aim of creating a rewarding experience for its users. Some of its quotes have historical, political or cultural relevance, while others are for fun, coming from diverse contexts and often by famous people. Goodreads (.com) is more specialized in quotes from literature. Other, more specific quotes appear, e.g., in the context of self-development, with the aim of inspiring and helping people on their path, as in the case of louisehay (.com), presenting Louise Hay’s affirmation (quote) of the day and providing access to many more, presented in different visual styles to help convey their mood. The concept of a quote of the day is also adopted by the previous sites, and some have categories (e.g. BrainyQuote: love, art, nature, funny, and many other topics; while LouiseHay is more focused on forgiveness, happiness, health, love, inspiration, etc.). But most of these sites do not include many quotes from movies or songs, and though one may find movies among the topics, the quotes are then usually about movies, not from them. The approaches described next are more focused on songs and movies, usually separately.

Many quotes come from song lyrics. In the old days, listeners got the lyrics from listening to the songs, but it became increasingly common to publish the lyrics in record sleeves or booklets, and several authors and fans already publish songs in videos that present the lyrics as the video plays. To make lyrics more available and searchable for a vast number of songs, there have been lyrics-dedicated websites and platforms since the early 2000s, and since 2014 Google has even displayed lyrics in searches for songs or lyrics, using Google Play. From the early days, AZLyrics (.com), e.g., allows users to browse by artist and to search by free text, getting results organized by artist, album and song, and has a special section dedicated to soundtracks. MetroLyrics (.com) has a similar purpose, but has evolved to include more information, like top songs, videos and news about songs and artists, and allows users to add meanings. In Songmeanings (.com), users contribute lyrics and discuss interpretations. People tend to find meaning in lyrics and enjoy knowing the authors’ and other people’s perspectives. Genius (.com), possibly the world's biggest collection of lyrics and musical knowledge, supports song meanings from users and artists.

With a closer connection to the actual song, Musixmatch (.com) supports lyrics translations, and has extensions to synchronize lyrics with music for YouTube and Spotify, among others, aligned with research that has been carried out in this area (Wang et al., 2004). Approaches like Shazam (.com), on the other hand, process audio content to identify a song from its audio, in situations where a song is playing and a user wants to find out its name, lyrics and artist, and more recently they also support TV programs and ads. Besides this practical benefit of music identification, Typke et al. (2005) had already identified that finding musical scores similar to a query could help musicologists find out how composers influence one another, or how their works are related to earlier works of their own or by other artists.

In a related perspective, relying on textual analysis of lyrics, Logan et al. (2004) described a tool to characterize songs’ semantics and determine artist similarities, concluding that it was better than random but inferior to acoustic similarity. LyricsRadar (Sasaki et al., 2014) is a more recent lyric retrieval system that enables users to browse song lyrics by analyzing their topics, taking into account the context in which words are used (e.g. “tears” can be of sadness or joy), to help users navigate to lyrics of interest.

HUCAPP 2020 - 4th International Conference on Human Computer Interaction Theory and Applications

The support for quotes in IMDb, possibly the most relevant platform about movies, reinforces their relevance in movies as well. It has a quotes section for each movie, in which users can find dialogue scenes highlighting quotes from the movie; users can also search quotes by text and get a list of movies and quotes that contain the text. The longer the input, the more precise the result. A similar search is provided, e.g., by QuoDB (.com), with a different database and filters for movie title and genre. And there are sites that organize the most popular quotes by topic or in top lists, e.g. the Top 100 quotes of the American Film Institute (afi.com).

Song lyrics are a very rich resource, and many types of textual features can be extracted from them (Hu et al., 2009; Jenkins, 2014), serving as motivation to explore a new lyrics analysis and comparison system, be it comparing songs with each other or songs with dialogue from films, which is also relevant in our approach. But none of the approaches above relate movie quotes to lyrics. In our previous work, we have been addressing the crossroads of movies and music, as highlighted in the introduction, and are now extending it to address and support quotes in movies and lyrics, reinforcing the bridges between them.

3 QUOTES ACROSS LYRICS AND MOVIES IN “AS MUSIC GOES BY”

As Music Goes By is an interactive web application that allows users to explore movies and music individually but, more importantly, together (Moreira and Chambel, 2019). It allows users to search, visualize and explore music and movies from complementary perspectives that highlight the music in its different versions, the artists, and the movie soundtracks it belongs to, over time. We are now designing and developing the support for quotes in both music and movies, allowing users to find and explore richer and more relevant related contents, and to watch and listen to them in context. Users can search for quotes and obtain details about them, be it to be reminded of a movie or song they have known for a long time, or of something new that caught their curiosity, and go on navigating, relating media through similar quotes and experiencing them in the context of the music and movies they appear in. This can help them really understand what these quotes mean, and may surprise them with unexpected coincidences and discoveries in serendipitous moments.

Relevant properties like time, music genre and emotional impact are highlighted, as in the previous version, and the previous features are still available, now extended with quotes. Next, we present the main features following the navigation illustrated in the figures, and refer to the main upgrades made after the preliminary evaluation reported in section 4.

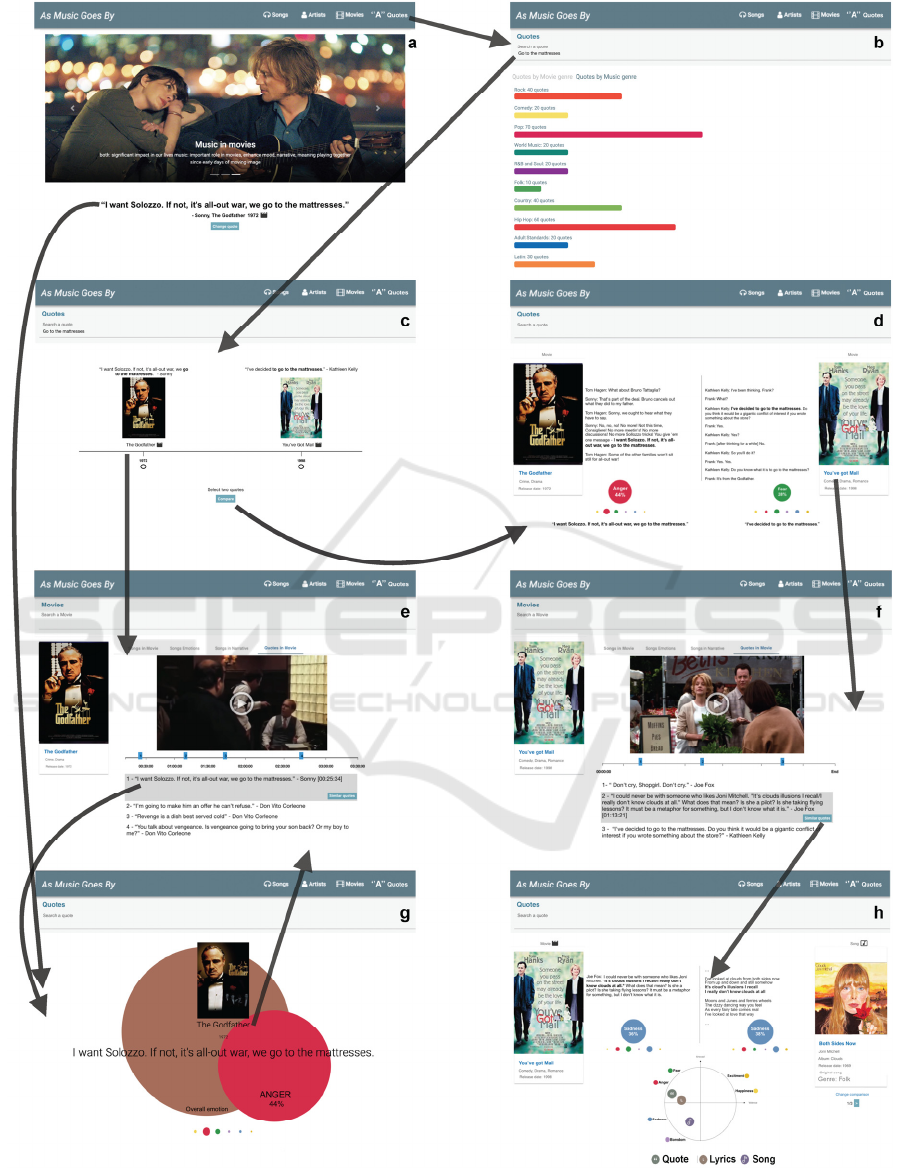

3.1 Homepage and Random Quotes

In the Homepage (Fig.1a), the user is presented with a carousel of images highlighting the main features of the application, and a random quote, identifying the movie or song it is from. This creates opportunities to find unexpected information through quotes that may happen to be relevant to the user, in serendipitous encounters (Chambel, 2011). A click on the quote leads to the Quote View with more details (Fig.1g), a click on the movie leads to the movie page with more quotes from that movie (Fig.1e), and the “change the quote” button picks another random quote. This feature provides a discovery factor for users who have no search in mind and just want to explore the platform.
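A minimal sketch of the “change the quote” behavior, assuming quotes are held in memory as plain strings and that the button should avoid showing the same quote twice in a row (the text only says it picks another random quote). `pick_random_quote` is a hypothetical helper, not the application’s code.

```python
import random
from typing import Optional, Sequence


def pick_random_quote(quotes: Sequence[str], current: Optional[str] = None,
                      rng: Optional[random.Random] = None) -> str:
    """Pick a random quote, avoiding an immediate repeat of the one on screen."""
    if not quotes:
        raise ValueError("no quotes available")
    rng = rng or random.Random()
    # Exclude the quote currently shown, unless it is the only one available.
    candidates = [q for q in quotes if q != current] or list(quotes)
    return rng.choice(candidates)
```

Passing a seeded `random.Random` makes the choice reproducible in tests, while the default draws a fresh generator.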

3.2 Quotes overView

Entering the Quotes View (from the top menu), users can explore quotes in overviews, or search for quotes and browse the results, analyzing and accessing them individually or simultaneously, to compare quotes from different songs and movies. Fig.1b presents a chart overview representing how prolific each music or movie genre (selectable as an alternate view through the title above) is in generating quotes. Post-evaluation: in line with the user evaluation, we are also considering ordering the bars by different criteria (e.g. genre and amount), and other representations (e.g. based on circles). In a study, Condit-Schultz and Huron (2015) found that different music genres have different levels of lyric memorability, and we would expect similar results from movie genres.
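The bar chart in Fig.1b essentially counts quotes per genre. The sketch below assumes a hypothetical `Quote` record with a `genre` field and shows the two bar orderings mentioned above (by amount and by genre); it illustrates the aggregation and is not the application’s implementation.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Quote(NamedTuple):
    """Hypothetical quote record; the field names are illustrative."""
    text: str
    source_title: str
    genre: str  # movie or music genre of the quote's source


def quotes_per_genre(quotes: Iterable[Quote], order: str = "amount") -> list[tuple[str, int]]:
    """Count quotes per genre and return (genre, count) pairs for the bars."""
    counts = Counter(q.genre for q in quotes)
    if order == "amount":
        # Most quoted genre first; ties broken alphabetically for a stable order.
        return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    if order == "genre":
        # Alphabetical by genre name.
        return sorted(counts.items())
    raise ValueError(f"unknown order: {order!r}")
```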


Figure 1: Movie Quotes and Song Lyrics in As Music Goes By - navigation example.


3.3 Searching for a Quote

Searching for a quote can return the results in a list (Fig.2, evaluated) or in a timeline (Fig.1c, post-evaluation), showing the different songs or movies in which the searched text was found. The timeline currently shows thumbnails, but its representation is to change when the number of results is high, adopting circles like those used for the music versions in As Music Goes By. In the results (and in all the views where quotes are displayed), users can click on a quote to go to the Quote View.
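A minimal sketch of the search behavior described above, assuming a hypothetical in-memory list of `QuoteRecord` items with a `year` field used to order the timeline. Matching is a case-insensitive substring test with normalized whitespace, and `MAX_THUMBNAILS` is an invented cut-off standing in for “when the number of results is high”.

```python
from typing import NamedTuple


class QuoteRecord(NamedTuple):
    """Hypothetical record; the fields are illustrative, not the app's schema."""
    text: str
    source_title: str
    kind: str  # "movie" or "song"
    year: int


# Hypothetical cut-off between thumbnails and circles on the timeline.
MAX_THUMBNAILS = 20


def search_quotes(query: str, quotes: list[QuoteRecord]) -> list[QuoteRecord]:
    """Case-insensitive substring search, sorted chronologically for the timeline."""
    needle = " ".join(query.casefold().split())
    if not needle:
        return []
    hits = [q for q in quotes if needle in " ".join(q.text.casefold().split())]
    return sorted(hits, key=lambda q: (q.year, q.source_title))


def timeline_representation(n_results: int) -> str:
    """Thumbnails for few results, circles when the timeline gets crowded."""
    return "thumbnails" if n_results <= MAX_THUMBNAILS else "circles"
```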

Figure 2: As Music Goes By - Quote search results in list format.

3.4 Quote View and Emotions

In this view (Fig.1g), the quote text is presented along with the title of its source (movie or song) and a date. There are different alternate views, chosen as in a carousel, that can help convey the message and the emotional mood in different ways. The one exemplified and evaluated has as background the cover/poster image representing the movie, and circles colored in accordance with the dominant emotions, as we describe next. Other alternatives would include: a frame from the movie scene where the quote appears as the image; an image associated with the mood of the quote; no image; or no emotional information.

As Music Goes By supports the emotional dimension in songs, based on the Valence (polarity, on the horizontal axis) and Energy (intensity, on the vertical axis) values provided by Spotify (SpotifyFtrs-ref), in accordance with the two-dimensional model of emotions by Russell (1980). In this model, emotions can be represented in a continuous 2D circumplex, where discrete categorical emotions can be placed as a reference, in a wheel of emotions (like the one in Fig.1h). In As Music Goes By, we are adopting 12 emotions as a reference, 3 per quadrant.
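To make the quadrant layout concrete, the sketch below maps Spotify valence and energy (both reported on a 0 to 1 scale) onto a centered 2D plane and returns which of 12 equal angular sectors, 3 per quadrant, a song falls into. The equal 30° sectors and the counter-clockwise numbering are assumptions, and the 12 reference emotion labels are not listed here, so the helper returns only sector indices.

```python
import math


def circumplex_position(valence: float, energy: float) -> tuple[float, float]:
    """Map valence/energy in [0, 1] to a point in [-1, 1]^2 (x = valence, y = energy)."""
    return 2.0 * valence - 1.0, 2.0 * energy - 1.0


def emotion_sector(valence: float, energy: float, n_sectors: int = 12) -> int:
    """Index (0..n_sectors-1) of the angular sector containing the point.

    Sectors are counted counter-clockwise from the positive valence axis, so with
    12 sectors, 0-2 lie in quadrant I (positive valence, high energy), 3-5 in
    quadrant II, 6-8 in quadrant III and 9-11 in quadrant IV.
    """
    x, y = circumplex_position(valence, energy)
    angle = math.atan2(y, x) % (2 * math.pi)  # angle in [0, 2*pi)
    return int(angle // (2 * math.pi / n_sectors)) % n_sectors
```

A perfectly neutral song (valence and energy both 0.5) has no defined angle; `atan2(0, 0)` returns 0, so by this convention it falls in sector 0.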

For the quotes, we are adopting a set of 6 emotions, the ones supported by the emotional text processing approach we are currently using for quotes and lyrics (ParDots-ref; Singh, 2017): Happiness, Sadness, Anger, Fear, Excitement, and Boredom. The first 4 also belong to Ekman’s 6 basic emotions (Ekman, 1992) (the others being disgust and surprise, which for some authors are not as basic as the other 4), and the other 2 (excitement and boredom) add emotions with high and low energy (arousal) to the set. All these emotions exist in Plutchik’s (1980) model, from which they inherited the color map for their representation in As Music Goes By (Fig.1d,g,h): Happiness-Yellow, Sadness-Blue, Anger-Red, Fear-Green, Excitement-Golden Yellow, and Boredom-Light Purple.

For the Quote View (Fig.1g), the emotions associated with the quote are detected (ParDots-ref; Singh, 2017) (more details in section 3.9), with a percentage associated with each of the 6 emotions in the model. These are represented beneath by small colored circles, using the adopted color map, with each circle’s size reflecting the emotion’s percentage in the quote. In the example, the highest percentage is for anger (44%) in red, followed by fear in green. The name and percentage of each emotion are presented on hover.

Highlighted above, in a larger size and as a background to the quote, there are two colored circles: the larger one represents the global emotion, in a color calculated as the average of the 6 basic colors weighted by their corresponding percentages (brownish in the example, Fig.1g); whereas the smaller circle represents the dominant emotion, in its original color, with an area that represents its percentage (in this case: anger in red, 44% of the larger circle’s area). This way, the highlight goes to the overall and to the most dominant emotion, with the opportunity to know the % of all the emotions in detail. Post-evaluation: labels were added to the larger circles to make their meaning and values visible without user action.
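The two highlighted circles can be sketched as follows, under stated assumptions: the RGB values are hypothetical stand-ins for the named colors in the color map, and the global color is a per-channel average weighted by the emotion percentages. Because the dominant circle’s area (not its radius) encodes the percentage, its radius scales with the square root of the share; for anger at 44%, the inner radius is about 0.66 of the outer one.

```python
import math

# Hypothetical RGB values for the named colors of the Plutchik-based color map.
EMOTION_COLORS = {
    "happiness": (255, 230, 0),    # yellow
    "sadness": (30, 90, 200),      # blue
    "anger": (220, 30, 30),        # red
    "fear": (30, 150, 60),         # green
    "excitement": (255, 190, 0),   # golden yellow
    "boredom": (200, 170, 230),    # light purple
}


def global_emotion_color(percentages: dict[str, float]) -> tuple[int, int, int]:
    """Blend the basic colors per channel, weighting each by its emotion percentage."""
    total = sum(percentages.values())
    if total <= 0:
        raise ValueError("percentages must not all be zero")
    channels = [0.0, 0.0, 0.0]
    for emotion, pct in percentages.items():
        for i, c in enumerate(EMOTION_COLORS[emotion]):
            channels[i] += c * pct / total
    r, g, b = (round(c) for c in channels)
    return r, g, b


def dominant_circle_radius(percentages: dict[str, float],
                           outer_radius: float) -> tuple[str, float]:
    """Dominant emotion and the radius giving it an area share of the outer circle."""
    dominant, pct = max(percentages.items(), key=lambda kv: kv[1])
    share = pct / sum(percentages.values())
    return dominant, outer_radius * math.sqrt(share)
```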

3.5 Movie and Song Views

The image or title (of the movie or song) in the Quote View leads to the Movie or Song View (Fig.1g-e). These views can also be accessed after searching for movies (or from anywhere a movie appears in the application), as in task 4 of the evaluation, leading to Fig.1f. The exemplified Movie Views (The Godfather in Fig.1e, You’ve Got Mail in Fig.1f) have the Quotes tab open, to access each quote in the context of the movie scene where it appears (or of the song lyrics, in Song Views). Clicking on a quote navigates to the Quote View (Fig.1e-g). Quotes include their timestamp and, to increase contextualization, post-evaluation, also the character who proffered the quote. The timestamps will also be used as an index into the video (when a quote is clicked, the movie plays from the time when the quote appears), and quotes will be synchronized with the movie while it is playing, becoming highlighted when their time comes (in the list and in the timeline). Other tabs (above the video) give access to other views (e.g. Songs in Movie is a view of the movie soundtrack (Moreira and Chambel, 2019)).

3.6 Comparing Movie Quotes or Song Lyrics in Context

From the results view (Fig.2, Fig.1c-d), the user can also select two results and compare them by exploring the dialogues in which the similar quotes appear: The Godfather and You’ve Got Mail in the example, for the searched quote “Go to the mattresses” (comparing the lyrics of 2 songs works similarly). Post-evaluation: to ease the comparison, the quotes with the searched text are highlighted in the context of the dialogues where they appear, contributing to the comprehension of their meaning.
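Highlighting the searched text inside a dialogue can be done with a case-insensitive regular expression built from the escaped query. This sketch assumes the result is rendered as HTML, wraps matches in `<b>` tags and escapes the rest of the dialogue; `highlight` is a hypothetical helper, and it does not match text whose punctuation or spacing differs from the query.

```python
import html
import re


def highlight(dialogue: str, query: str) -> str:
    """HTML-escaped dialogue with every case-insensitive match of query in <b> tags."""
    if not query.strip():
        return html.escape(dialogue)
    pattern = re.compile(re.escape(query.strip()), re.IGNORECASE)
    parts, last = [], 0
    for m in pattern.finditer(dialogue):
        parts.append(html.escape(dialogue[last:m.start()]))
        # Keep the dialogue's original casing inside the highlight.
        parts.append("<b>" + html.escape(m.group(0)) + "</b>")
        last = m.end()
    parts.append(html.escape(dialogue[last:]))
    return "".join(parts)
```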

Beneath each dialogue, users find the emotional information for the corresponding quote, highlighting the colored circle of the dominant emotion and, as in the Quote View (Fig.1g), the other circles beneath, representing all the emotions, with their size proportional to their percentages. More information can be found on hover, revealing the name and % of each emotion. In this example, an expression from a dialogue in one movie (The Godfather) is quoted in another movie (You’ve Got Mail), so the dominant emotions could be expected to be quite similar, but the results reveal some differences. In fact, only part of the sentence is the same: “go to the mattresses”. The quote is proffered in a more aggressive context in The Godfather: “I want Sollozzo. If not, it’s all out war, we go to the mattresses”, leading to a dominance of anger (second dominant: fear); whereas in You’ve Got Mail, “I’ve decided to go to the mattresses” was classified with a dominance of fear (second dominant: sadness), which makes sense in their context.

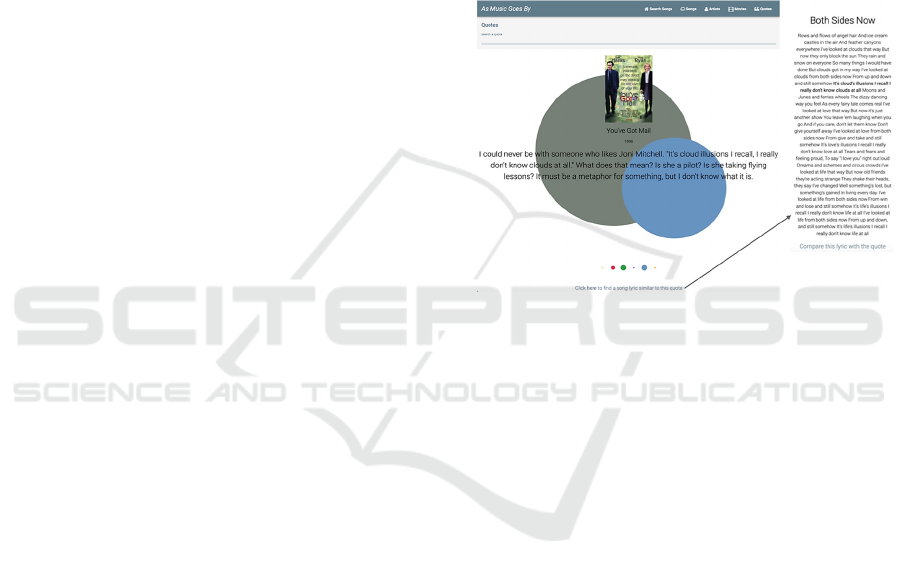

3.7 Comparing Movie Quotes with Song Lyrics in Context

In the Quote View, users could compare the quote with a related song (Fig.3). The lyrics are shown on the right as a close-up in the figure, but appear below the quote on the screen. In the evaluation (task 4), this feature was considered less useful and less easy to use than the ones where the comparisons were made in the context of both movies (task 7.1) or both songs (task 8). So, post-evaluation, these comparisons (illustrated in Fig.1f-h) are done in the context of both the movie and the song (Fig.1h). Here the quote “It’s cloud illusions I recall” in a dialogue from the movie You’ve Got Mail is compared with the lyrics of Joni Mitchell’s song “Both Sides Now”, which include this quote, with the common text highlighted in bold. As in the other comparisons, the user will also be able to view and cycle through other results featuring the searched quote or part of it (exemplified by “Change comparison 1/3 >” beneath the song).

Figure 3: Comparing movie quote with song lyrics.

The emotional information of the quotes is presented beneath the movie dialogue and the song lyrics by colored circles, as in the previous comparison views. In this example (Fig.1h), the dominant emotion is the same in the movie quote and in the song lyrics: sadness, with similar percentages (36% and 38%). In the movie, the 2nd dominant emotion is fear and the 3rd is anger, while the 2nd and 3rd dominant emotions in the song are the same but in the opposite order.
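Comparing two emotion profiles, as done here for the movie quote and the song lyrics, amounts to ranking the emotions by percentage and comparing the top positions. The sketch below is a hypothetical helper operating on non-empty `{emotion: percentage}` dictionaries; it reports whether the dominant emotion matches and whether the top-k emotions form the same set in the same or in a different order, which is the situation described above for fear and anger.

```python
def ranked_emotions(percentages: dict[str, float]) -> list[str]:
    """Emotion names from most to least dominant (ties broken alphabetically)."""
    return [e for e, _ in sorted(percentages.items(), key=lambda kv: (-kv[1], kv[0]))]


def compare_profiles(a: dict[str, float], b: dict[str, float], k: int = 3) -> dict:
    """Compare the top-k emotions of two non-empty emotion profiles."""
    top_a, top_b = ranked_emotions(a)[:k], ranked_emotions(b)[:k]
    return {
        "same_dominant": top_a[0] == top_b[0],
        "same_top_k_set": set(top_a) == set(top_b),
        "same_top_k_order": top_a == top_b,
        "top_a": top_a,
        "top_b": top_b,
    }
```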

In addition, following the appreciation users showed for the emotional dimension, post-evaluation this view illustrates the comparison of the emotions associated with the movie quote, the song lyrics, and the music itself, in the emotional wheel (in the middle, which can be shown or hidden in these comparison views), where the 6 basic emotions are represented as a reference around the wheel. For the movie quote and the song lyrics, the position and color of the circle are the weighted average of the positions and colors of the basic emotions in the wheel; for the music, the position is defined directly by the valence and energy values from Spotify, and the color is calculated by interpolation, taking into account the relative position to the basic colored emotions in the wheel. In addition to a color, the circles have an icon identifying their content type (a quotation mark (”) for movie quotes, L for lyrics and a musical note for music). In the example, all 3 have a negative valence, aligned with the dominance of sadness, but the music itself was considered a bit less energetic than the movie quote and the song lyrics, in a more nostalgic mood than what is conveyed by the text alone, which we could recognize and perceive when listening to the music.
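The wheel placement can be sketched as follows. The angles of the 6 basic emotions are assumptions (they are not listed here); text positions are percentage-weighted averages of the basic emotions’ unit vectors, and music positions come straight from valence and energy. Inverse-distance weighting is one possible choice for the “interpolation taking into account the relative position” used to color the music circle, not necessarily the one used in the application; its weights can blend the basic colors in the same way the percentages do for quotes.

```python
import math

# Hypothetical wheel angles in degrees (valence on x, energy on y).
BASIC_ANGLES = {
    "happiness": 20, "excitement": 70, "anger": 110,
    "fear": 140, "sadness": 210, "boredom": 260,
}


def unit(angle_deg: float) -> tuple[float, float]:
    """Point on the unit wheel at the given angle."""
    a = math.radians(angle_deg)
    return math.cos(a), math.sin(a)


def text_position(percentages: dict[str, float]) -> tuple[float, float]:
    """Percentage-weighted average of the basic emotions' wheel positions."""
    total = sum(percentages.values())
    if total <= 0:
        raise ValueError("percentages must not all be zero")
    x = y = 0.0
    for emotion, pct in percentages.items():
        ex, ey = unit(BASIC_ANGLES[emotion])
        x += ex * pct / total
        y += ey * pct / total
    return x, y


def music_position(valence: float, energy: float) -> tuple[float, float]:
    """Valence/energy in [0, 1] mapped directly onto the wheel's axes."""
    return 2 * valence - 1, 2 * energy - 1


def interpolation_weights(point: tuple[float, float], eps: float = 1e-9) -> dict[str, float]:
    """Normalized inverse-distance weights of a point w.r.t. the basic emotions."""
    px, py = point
    raw = {}
    for emotion, angle in BASIC_ANGLES.items():
        ex, ey = unit(angle)
        raw[emotion] = 1.0 / (math.hypot(px - ex, py - ey) + eps)
    total = sum(raw.values())
    return {e: w / total for e, w in raw.items()}
```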

3.8 More Quotes

In addition to the quotes shown (based on MovieQuotes (MQuotes-ref)), there might be other relevant ones that can be found in the subtitles. For example, in the movie You’ve Got Mail, “Go to the mattresses” is shown twice in the dialogue between Kathleen and Frank, but other occurrences of the same quote in the dialogue she previously had with Joe were not identified, and not all (other) quotes from The Godfather were identified. Also, Kathleen is a fan of Joni Mitchell, and quotes from another of her songs also appear, but only this one is registered as a quote.

Multiple occurrences of a quote could be detected in the subtitles, providing better contextualization and additional connections to explore. For different movie quotes, for now we are relying on external sources rather than considering any text in the subtitles as a potential quote, whereas the full lyrics text is being considered for songs (acceptable since lyrics are shorter and more focused in each song than subtitles are in movies). This way, through the quotes found, we can discover relations with movies, and by processing their subtitles, we can find and explore richer characterizations and relations in movies.
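Detecting further occurrences of a known quote in a movie’s subtitles could start from a simple scan of an SRT file. This sketch assumes the standard SRT layout (index line, `start --> end` timing line, text lines), normalizes case and punctuation before matching, and only finds quotes contained within a single subtitle cue; a quote split across two cues would be missed. All names are hypothetical.

```python
import re
from typing import NamedTuple

TIME = re.compile(r"(\d+):(\d{2}):(\d{2})[,.](\d{3})")


class Cue(NamedTuple):
    start: float  # seconds
    text: str


def to_seconds(stamp: str) -> float:
    """Convert an SRT timestamp such as 00:01:02,500 to seconds."""
    h, m, s, ms = map(int, TIME.match(stamp).groups())
    return h * 3600 + m * 60 + s + ms / 1000


def parse_srt(srt_text: str) -> list[Cue]:
    cues = []
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = block.strip().splitlines()
        # Expected layout: index line, timing line, then one or more text lines.
        if len(lines) < 3 or "-->" not in lines[1]:
            continue
        start = to_seconds(lines[1].split("-->")[0].strip())
        cues.append(Cue(start, " ".join(line.strip() for line in lines[2:])))
    return cues


def normalize(s: str) -> str:
    """Lowercase, replace punctuation with spaces, collapse whitespace."""
    return " ".join(re.sub(r"[^\w\s]", " ", s.casefold()).split())


def find_occurrences(quote: str, cues: list[Cue]) -> list[float]:
    """Start times of the cues that contain the (normalized) quote."""
    q = normalize(quote)
    return [c.start for c in cues if q and q in normalize(c.text)]
```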

3.9 Behind the Curtain

As Music Goes By has a three-tier architecture based on the MEAN stack, using AngularJS and D3 in the Presentation Layer, and external REST APIs to collect data. In the first phase, it used APIs from: Spotify, for music and artists; SecondHandSongs, for original and cover versions; YouTube, for the video links; and WhatSong, for song information in movies.

In this new phase, which adds movie quotes and lyrics with an emotional perspective, other services were explored. For song lyrics, Genius (Gen-ref) was studied for its focus on information about music and its lyrics, but the lyrics are not readily accessible through its API. Instead, we adopted musiXmatch (MxMatch-ref), with approx. 13 million songs. For movie quotes, the MovieQuotes API (MQuotes-ref) was used, with quotes for more than 500 movies, adequate for our proof of concept, although limited for building a full service in the future.

To detect emotions in quotes and lyrics, we are using the ParallelDots Emotion API (version 4) (ParDots-ref; Singh, 2017). It uses convolutional neural networks, adequate for feature detection, trained on datasets annotated with emotional terms, adopting deep learning techniques that have been adequate for this type of classifier. We are also following up on our previous content processing research (Chambel et al., 2013), mainly for subtitles and audio, to complement these approaches and address other types of characteristics and content.

4 PRELIMINARY USER EVALUATION

A preliminary user evaluation was conducted to assess how users would perceive the Quote features in As Music Goes By in terms of usefulness, satisfaction and ease of use, how they would use them, and their opinions about the interface and the functionalities. The results of this evaluation are already being considered to refine and evolve the application, as discussed in sections 3 and 4.3.

4.1 Methodology

After explaining the evaluation purpose and procedure, asking some demographic questions and briefly introducing the application to the subjects, they performed a set of pre-defined tasks with the different features. In this task-oriented evaluation, we observed users performing the tasks and, for each one, we annotated success, speed of completion, errors, hesitations, comments and suggestions. The Usefulness, Satisfaction and Ease of use of each feature were evaluated in a questionnaire after each task, based on USE (Lund, 2001).

Table 1: Tasks in the User Evaluation.

T1: Read the “random” quote on the homepage (Fig.1a-g).

T2.1: Which is the dominant emotion in its Quote View? (Fig.1g)

T2.2: Which is its least dominant emotion? With what %? (Fig.1g)

T3: Access this quote in its Movie View (Fig.1g-e). At what time does it appear? (at evaluation: only in the quotes, no timeline)

T4: Access the You’ve Got Mail movie, then the quote “I could never…”, and compare it with related song lyrics. (Fig.1f, Fig.3)

T5: In the quotes (over)view, how many quotes are there in comedy movies? And in hip-hop songs? (Fig.1b)

T6: Search for quotes with a string and check the results in the list. (Fig.2)

T7.1: Select 2 movies and compare their quotes. (Fig.1c-d)

T7.2: Which is the dominant quote emotion, and the movie genre? (Fig.1d)

T8: Search for quotes (as in T6) and compare the lyrics of 2 songs.


After the tasks, the users provided a global appreciation of the application, also through a USE rating, and were asked to refer to the features they liked the most and to leave suggestions about things they would like to see improved or included in the future. They were also asked to characterize the application with the most relevant perceived ergonomic, hedonic and appeal quality aspects (Hassenzahl et al., 2000), selecting pre-defined terms.

4.2 Participants

Ten subjects participated in the user evaluation: 8 male and 2 female, 23-55 years old (mean 31.1, SD 12.1); 2 with high school, 6 with BSc and 2 with MSc degrees; from diverse backgrounds, such as marketing, informatics, management, international relations, entrepreneurship and video production; all having their first contact with this application, allowing us to perceive most usability issues and a tendency in user satisfaction. About their habits and motivations in this context: all users listen to music on a daily basis (all use Spotify, 4 YouTube, 1 Apple Music, 1 SoundCloud, 1 CD); 2 watch movies daily, 6 weekly and 2 two to three times a month (9 use Netflix, 2 HBO, 1 YouTube, Prime Video, TV, or cinema); 5 search for information about music and movies 2-3 times a month, 3 daily and 2 weekly (for movies: 8 use IMDb, 1 Rotten Tomatoes, Netflix, Instagram, TV box; for music: 10 Google, 4 Spotify, 3 Genius). Regarding movie quotes and song lyrics: 3 consider them very interesting, 3 interesting, 3 of medium interest, and 1 not very interesting. Main motivations: to know the lyrics of a song that is playing, and to discuss movies and be able to remember and use their main quotes. To access information about quotes, 5 use IMDb and 2 Google; to access song lyrics, 8 use Google, 3 Genius, and 2 AZLyrics.

4.3 Results

All users completed the tasks successfully, quite fast and without many hesitations, and reported having enjoyed using the application, although they had their preferences for different features. The results are presented in the following table and figure, and briefly commented on in the text, along with the suggestions made by the users.

Table 2: USE evaluation of Quotes in As Music Goes By. Likert scale 0-4, where X = Useful (U), Satisfactory (S) or Easy to use (E): 0: Not X; 1: Not much X; 2: Medium (*); 3: X; 4: Very X. (*) Usefulness was rated without the Medium (2) value. M = Mean; SD = Standard Deviation.

| Task | Feature | U M | U SD | S M | S SD | E M | E SD |
|---|---|---|---|---|---|---|---|
| 1 | Home: Random quotes | 3.1 | 0.3 | 3.0 | 0.7 | 3.3 | 0.7 |
| 2.1 | Quote View: dom. emotion | 2.8 | 1.0 | 2.9 | 0.6 | 2.4 | 0.7 |
| 2.2 | less dominant emotion | 2.7 | 0.9 | 2.9 | 0.6 | 3.0 | 0.9 |
| 3 | Movie View: quote & time | 3.0 | 0.8 | 3.0 | 0.7 | 2.9 | 0.6 |
| 4 | Compare m.quote & lyrics | 2.7 | 0.9 | 3.0 | 0.5 | 2.7 | 0.9 |
| 5 | Quotes overView | 3.0 | 0.8 | 3.1 | 0.6 | 3.1 | 0.6 |
| 6 | Quote Search | 3.2 | 0.9 | 3.2 | 0.6 | 3.6 | 0.5 |
| 7.1 | Compare 2 movies’ quotes | 3.0 | 1.2 | 3.0 | 0.5 | 3.3 | 0.7 |
| 7.2 | dominant emotion & genre | 3.3 | 0.9 | 3.1 | 0.7 | 3.5 | 0.5 |
| 8 | Compare 2 songs’ lyrics | 2.9 | 1.4 | 2.9 | 0.6 | 3.0 | 0.7 |
| Global Evaluation | | 2.9 | 0.7 | 3.1 | 0.6 | 3.0 | 0.5 |
| Total per Task (mean) | | 3.0 | 0.9 | 3.0 | 0.6 | 3.1 | 0.7 |

Overall, participants were satisfied with their user experience in the application, and also found it useful and easy to use. The global USE rating assigned to the application at the end (U: 2.9; S: 3.1; E: 3.0) is very similar to the average of the scores across the individual tasks (U: 3.0; S: 3.0; E: 3.1), with a slight shift favoring satisfaction over usefulness and ease of use. Table 2 and Fig. 4 summarize these results.
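As a quick arithmetic check of this comparison, the Python sketch below recomputes the per-task average from the means transcribed from Table 2 and contrasts it with the global rating. It assumes that the "Total per Task (mean)" row is the unweighted mean of the ten task rows, which is consistent with the reported values.

```python
# Per-task USE means (U, S, E) transcribed from Table 2.
TASK_MEANS = {
    "1": (3.1, 3.0, 3.3),
    "2.1": (2.8, 2.9, 2.4),
    "2.2": (2.7, 2.9, 3.0),
    "3": (3.0, 3.0, 2.9),
    "4": (2.7, 3.0, 2.7),
    "5": (3.0, 3.1, 3.1),
    "6": (3.2, 3.2, 3.6),
    "7.1": (3.0, 3.0, 3.3),
    "7.2": (3.3, 3.1, 3.5),
    "8": (2.9, 2.9, 3.0),
}
GLOBAL_RATING = (2.9, 3.1, 3.0)  # final global evaluation row of Table 2


def per_task_average(task_means):
    """Unweighted mean of the per-task means for each USE dimension, to 1 decimal."""
    n = len(task_means)
    return tuple(
        round(sum(means[dim] for means in task_means.values()) / n, 1)
        for dim in range(3)
    )


average = per_task_average(TASK_MEANS)
# Positive values mean the global rating is above the per-task average.
shift = tuple(round(g - a, 1) for g, a in zip(GLOBAL_RATING, average))
print("Per-task average (U, S, E):", average)
print("Global minus per-task average:", shift)
```

Only satisfaction comes out slightly higher in the global rating, which is the fluctuation noted above.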

Figure 4: USE evaluation of Quotes in As Music Goes By in stacked bar charts. Likert Scale (0-4), same as in Table 2.

HUCAPP 2020 - 4th International Conference on Human Computer Interaction Theory and Applications

It is worth noting that the first time users interpreted the emotions, in the Quote View (T2.1), they did not find it as easy as the other tasks (E: 2.4). Yet this was one of their favorite features, and the subsequent views with emotions were found easier. One of the reasons for this perception was the need to hover over the emotions to see their meaning, which inspired adjustments in the labeling, as reported in Section 3.4. Similarly, the first quote comparison (T4) was not found as easy as the following ones, and quote comparison is also among the favorite features. Note also that the Usefulness scale differed slightly: it had no middle value (2: Medium), to force a more explicit positive or negative opinion. As a result, the lowest U scores went a bit lower than the lowest S and E scores, although the difference is not very noticeable on average (those who would have chosen Medium (2) may have split almost evenly between 1 and 3). Most of the lowest scores were assigned by one participant who is not very fond of this type of application and does not usually access media online, preferring to watch movies on TV and in the cinema; this participant would be considered outside our target audience.
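The effect of removing the middle value can be illustrated with a small simulation. The sketch below uses hypothetical responses (not the study data) and assumes that every would-be Medium (2) answer is split alternately between 1 and 3; under that assumption the mean is unchanged, while the lowest score drops and the spread grows, matching the pattern described above.

```python
from statistics import mean, pstdev


def split_midpoint(scores):
    """Hypothetical: replace each Medium (2) answer by alternating 1s and 3s,
    simulating a scale without a middle value."""
    out, send_low = [], True
    for s in scores:
        if s == 2:
            out.append(1 if send_low else 3)
            send_low = not send_low
        else:
            out.append(s)
    return out


# Hypothetical answers from 10 participants on the 0-4 scale (not study data).
with_mid = [2, 2, 3, 3, 4, 2, 3, 4, 2, 3]
without_mid = split_midpoint(with_mid)

print("mean:", mean(with_mid), mean(without_mid))
print("lowest:", min(with_mid), min(without_mid))
print("std dev:", round(pstdev(with_mid), 2), round(pstdev(without_mid), 2))
```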

As for their favorite features and functionalities, and in line with their previous scores, most users chose: searching and comparing quotes in movies and in lyrics; navigating across movies and songs; having emotions in quotes; the color mix based on the emotions in a quote (i.e. the global emotion, with a weighted average of the individual emotions' colors); and accessing all the quotes in the context of a movie. Some users also highlighted the flexibility of the search, with few or several words for more general or more specific queries. These results are aligned with the high scores for the search and comparison tasks, but they also reveal a preference for the global emotion, in a feature whose scores did not stand out (T2.1 and T2.2). Users favor the dominant and global emotions, the ones we highlight in the application, over the less dominant ones, but like to know them all in detail when exploring. Our design options are therefore aligned with their preferences.
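The color mix can be sketched as a weighted average in RGB space, with each emotion's score as its weight. The palette, the emotion labels and the example scores below are hypothetical, and averaging RGB channels is only one possible blending choice; the application may mix colors differently.

```python
# Hypothetical palette: labels and RGB values are illustrative assumptions,
# not the colors used in As Music Goes By.
EMOTION_COLORS = {
    "happy": (255, 215, 0),
    "excited": (255, 120, 0),
    "angry": (220, 20, 60),
    "fear": (110, 40, 150),
    "sad": (30, 80, 200),
    "bored": (150, 150, 150),
}


def mix_color(scores):
    """Global color of a quote: average of emotion colors weighted by their scores."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("need at least one positive emotion score")
    return tuple(
        round(sum(w * EMOTION_COLORS[e][ch] for e, w in scores.items()) / total)
        for ch in range(3)
    )


def to_hex(rgb):
    """Format an (R, G, B) tuple as a CSS-style hex string."""
    return "#{:02x}{:02x}{:02x}".format(*rgb)


# Made-up emotion scores for one quote (e.g. from a text-emotion classifier).
quote_scores = {"happy": 0.6, "excited": 0.3, "sad": 0.1}
print(to_hex(mix_color(quote_scores)))
```

Dividing by the total weight means the scores do not need to sum to 1.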

As suggestions, some participants mentioned highlighting more relevant content (which we are already addressing in the contextualization of quote comparison, as reported in Sections 3.6 and 3.7) and some minor adjustments (which we are also addressing), such as repositioning a couple of buttons to avoid scrolling, increasing the font size where it was too small, and making it more evident what is clickable. One participant suggested an alarm clock that wakes users up by playing songs or movie scenes they had been interested in.

As a final classification, users summarized their appreciation of the application by choosing the most relevant perceived hedonic (7 positive | 7 negative, i.e. opposite, terms), ergonomic (8+ | 8-) and appeal (8+ | 8-) quality aspects from (Hassenzahl et al., 2000).

Table 3: Quality terms users chose for As Music Goes By. H: Hedonic; E: Ergonomic; A: Appeal; Clear (+) vs Confusing (-); Predictable (+) vs Unpredictable (-).

Term             Type  #     Term             Type  #
Simple           E     7     Good             A     3
Interesting      H     6     Sympathetic      A     3
Comprehensible   E     6     Impressive       H     2
Pleasant         A     6     Inviting         A     2
Original         H     4     Controllable     E     1
Aesthetic        A     4     Familiar         E     1
Innovative       H     3     Desirable        A     1
Trustworthy      E     3     Motivating       A     1
Clear            E     3     Confusing        E     1
Attractive       A     3     Unpredictable    E     1

Simple was the most chosen term. Interesting, Comprehensible and Pleasant were also chosen by more than half of the participants. Only two negative terms were chosen, Confusing and Unpredictable, each only once. Users more often chose their opposite (Clear: 3 users), or terms that in a way balance them out: Comprehensible (6 users), Original (4 users) and Innovative (3 users), since originality and innovation tend to bring about some unpredictability. Users made a reasonably balanced choice of terms across the 3 categories, with more emphasis on Ergonomic and Appeal (H: 4+ terms (out of 7+), chosen 15 times; E: 6+ (out of 8+), 21 times, and 2- (out of 8-), 2 times; A: all 8+ terms, 23 times), confirming and complementing the feedback they had provided during the evaluation.
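The per-category counts above can be reproduced from Table 3 with a short tally. The sketch transcribes the table and marks Confusing and Unpredictable as the only negative terms chosen.

```python
from collections import defaultdict

# (term, category, polarity, times chosen), transcribed from Table 3.
# Categories: H = Hedonic, E = Ergonomic, A = Appeal.
CHOSEN_TERMS = [
    ("Simple", "E", "+", 7), ("Interesting", "H", "+", 6),
    ("Comprehensible", "E", "+", 6), ("Pleasant", "A", "+", 6),
    ("Original", "H", "+", 4), ("Aesthetic", "A", "+", 4),
    ("Innovative", "H", "+", 3), ("Trustworthy", "E", "+", 3),
    ("Clear", "E", "+", 3), ("Attractive", "A", "+", 3),
    ("Good", "A", "+", 3), ("Sympathetic", "A", "+", 3),
    ("Impressive", "H", "+", 2), ("Inviting", "A", "+", 2),
    ("Controllable", "E", "+", 1), ("Familiar", "E", "+", 1),
    ("Desirable", "A", "+", 1), ("Motivating", "A", "+", 1),
    ("Confusing", "E", "-", 1), ("Unpredictable", "E", "-", 1),
]


def tally(rows):
    """Map (category, polarity) to (distinct terms chosen, total times chosen)."""
    acc = defaultdict(lambda: [0, 0])
    for _term, category, polarity, times in rows:
        acc[(category, polarity)][0] += 1
        acc[(category, polarity)][1] += times
    return {key: tuple(value) for key, value in acc.items()}


for (category, polarity), (terms, times) in sorted(tally(CHOSEN_TERMS).items()):
    print(f"{category}{polarity}: {terms} terms, chosen {times} times")
```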

5 CONCLUSIONS AND PERSPECTIVES

This paper presented the inclusion of quotes in As Music Goes By, for both song lyrics and movies, allowing users to find, explore and compare related contents, and to access quotes in a contextualized way in the movies or songs where they appear, highlighting their emotional dimension, with the aim of contributing to an increased awareness and understanding of their meaning and impact. It allows users to access the quotes they search for, or to discover them accidentally, increasing the chances for significant and unexpected serendipitous discoveries (Chambel, 2011), and to experience in a more conscious way the movies and songs that touch us the most.

The preliminary evaluation showed that the concept was valued and appreciated. Users found the application and its features useful, satisfying and easy to use, and particularly enjoyed the flexible search, the contextualized access to and comparison of quotes in movies and in lyrics, the navigation across movies and songs, and the emotions, in line with what we had hypothesized. Simple, Interesting, Comprehensible and Pleasant were the most frequently perceived qualities, followed by Original and Aesthetic. Overall, the results were encouraging and are informing our new developments.
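The flexible search, where few words give general results and more words narrow them down, can be approximated by a word-level AND match. The sketch below is an assumption about one simple way to obtain this behavior, not the application's actual search; the helper names and the small quote collection are illustrative.

```python
import re


def tokens(text):
    """Lower-case word tokens, with surrounding quote marks stripped."""
    return {w.strip("'") for w in re.findall(r"[a-z0-9']+", text.lower())} - {""}


def search_quotes(query, quotes):
    """Return the quotes containing every query word (AND semantics):
    one or two words give a general query, more words a more specific one."""
    wanted = tokens(query)
    if not wanted:
        return []
    return [q for q in quotes if wanted <= tokens(q["text"])]


# Small illustrative collection mixing movie quotes and song lyrics.
QUOTES = [
    {"text": "Here's looking at you, kid.", "source": "Casablanca", "kind": "movie"},
    {"text": "Play it, Sam. Play 'As Time Goes By'.", "source": "Casablanca", "kind": "movie"},
    {"text": "You must remember this, a kiss is just a kiss",
     "source": "As Time Goes By", "kind": "song"},
]

for query in ("you", "you kid", "time goes by"):
    print(query, "->", [q["source"] for q in search_quotes(query, QUOTES)])
```

Adding "kid" to "you" drops the song and keeps only the movie quote, the narrowing effect users described.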

For the future, we plan to further refine, re-evaluate and extend the interactive features. Directions include creating more visualizations with integrated overviews, beyond the relation between genres and the number of quotes, and enriching relations, especially between movies and songs with similar phrases, the same actors, or similar emotional impact.
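One simple way to detect movies and songs with similar phrases is to compare word n-grams. The sketch below uses Jaccard similarity over word bigrams; the threshold, the helper `related_pairs` and the example texts are hypothetical choices, and more robust approaches (e.g. stemming or semantic embeddings) could replace it.

```python
import re


def word_ngrams(text, n=2):
    """Set of word n-grams (tuples) of a lower-cased text."""
    words = [w.strip("'") for w in re.findall(r"[a-z0-9']+", text.lower())]
    words = [w for w in words if w]
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def phrase_similarity(a, b, n=2):
    """Jaccard similarity (0..1) between the word n-gram sets of two texts."""
    ga, gb = word_ngrams(a, n), word_ngrams(b, n)
    if not ga or not gb:
        return 0.0
    return len(ga & gb) / len(ga | gb)


def related_pairs(movie_quotes, song_lines, threshold=0.2, n=2):
    """Hypothetical helper: (quote, lyric, score) pairs at or above a threshold, best first."""
    pairs = [(q, s, phrase_similarity(q, s, n)) for q in movie_quotes for s in song_lines]
    return sorted((p for p in pairs if p[2] >= threshold), key=lambda p: p[2], reverse=True)


print(related_pairs(
    ["You must remember this."],
    ["You must remember this, a kiss is just a kiss", "Moonlight and love songs"],
))
```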

Quote Surfing in Music and Movies with an Emotional Flavor


New developments in content processing (e.g. subtitles, lyrics, quotes, and audio) and emotional impact (automatic or based on self-assessment) could also further enrich and automate the discovery of relations that contribute to a better understanding of these contents. In Section 3 we already exemplified some directions, mainly with subtitles (for enriched and multiple quotes) and emotions. One of our goals is also to reach a unified model for the emotions that are relevant in the context of music and movies. In this proof of concept, we use two sources of emotion classification, for quotes and for music (ParallelDots and Spotify), which are based on different models. The representation of the emotions in the same circumplex, based on arousal and valence (Fig. 2h), already goes in the direction of a coherent unified model and representation, and is aligned with our research in content and user emotion detection (Chambel et al., 2013; Oliveira et al., 2013; Bernardino et al., 2016).
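As an illustration of placing both sources in one valence-arousal circumplex (Russell, 1980), the sketch below maps Spotify's `valence` and `energy` audio features (both in the 0-1 range) to the plane, assuming energy as a proxy for arousal, and places text emotions through score-weighted direction vectors. The emotion labels and their angles are hypothetical, not the ParallelDots model or the positions used in Fig. 2h.

```python
import math


def spotify_to_circumplex(valence, energy):
    """Map valence and energy (0..1) to a point in [-1, 1] x [-1, 1],
    using energy as an assumed proxy for arousal."""
    for v in (valence, energy):
        if not 0.0 <= v <= 1.0:
            raise ValueError("expected values in [0, 1]")
    return (2 * valence - 1, 2 * energy - 1)


# Hypothetical angles (degrees, counter-clockwise from positive valence).
EMOTION_ANGLES = {"happy": 20, "excited": 60, "angry": 120,
                  "fear": 135, "sad": 220, "bored": 250}


def text_emotions_to_circumplex(scores):
    """Place a quote at the score-weighted vector sum of its emotion directions."""
    x = sum(w * math.cos(math.radians(EMOTION_ANGLES[e])) for e, w in scores.items())
    y = sum(w * math.sin(math.radians(EMOTION_ANGLES[e])) for e, w in scores.items())
    return (x, y)


def quadrant(point):
    """Describe which circumplex quadrant a point falls in."""
    x, y = point
    return ("positive" if x >= 0 else "negative") + " valence, " + \
           ("high" if y >= 0 else "low") + " arousal"


print(quadrant(spotify_to_circumplex(0.9, 0.8)))
print(quadrant(text_emotions_to_circumplex({"happy": 0.7, "excited": 0.3})))
```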

Different modalities and contexts of use could also be taken into account to access information in a richer and more flexible way, possibly mediated by conversational and intelligent agents. For example, a song that is playing, or what a character is saying in the movie being watched, could be identified to direct users to the corresponding information, to other related content, and to content related to the situation they are living in the moment.
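Assuming a speech recognizer (or a conversational agent) already provides the text of what a character said, that line could be located in the movie's subtitles to recover its time. The sketch below parses a minimal SRT excerpt and uses fuzzy matching from Python's `difflib`; the function names, the 0.6 threshold and the invented subtitles are hypothetical.

```python
import re
from difflib import SequenceMatcher

CUE_TIME = re.compile(r"(\d{2}):(\d{2}):(\d{2}),(\d{3}) -->")


def parse_srt(srt_text):
    """Parse a minimal SRT string into a list of (start_seconds, text) cues."""
    cues = []
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = block.strip().splitlines()
        if len(lines) < 3:
            continue
        match = CUE_TIME.match(lines[1].strip())
        if not match:
            continue
        h, m, s, ms = map(int, match.groups())
        cues.append((h * 3600 + m * 60 + s + ms / 1000, " ".join(lines[2:])))
    return cues


def normalize(text):
    """Lower-case and keep only word characters, so punctuation does not affect matching."""
    return " ".join(re.findall(r"[a-z0-9']+", text.lower()))


def locate_utterance(utterance, cues, min_ratio=0.6):
    """Best-matching cue (start_seconds, text, ratio) for a heard phrase, or None."""
    target = normalize(utterance)
    best = max(
        ((start, text, SequenceMatcher(None, target, normalize(text)).ratio())
         for start, text in cues),
        key=lambda cue: cue[2],
        default=None,
    )
    return best if best is not None and best[2] >= min_ratio else None


# Invented subtitle excerpt, for illustration only.
SAMPLE_SRT = """1
00:00:05,000 --> 00:00:07,250
I'll meet you at the station.

2
00:12:41,500 --> 00:12:44,000
Play it once more,
for old times' sake.
"""

print(locate_utterance("play it once more for old times sake", parse_srt(SAMPLE_SRT)))
```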

Regarding quotes, and as a complement to the automatic detection of the underlying emotions, users could identify, from their own perspective, the emotions they associate with them (what they feel and what makes them memorable and valuable), and quotes (from movies and songs) could be suggested or collected in personal journals as inspirational sources, in line with the more recent developments in (Chambel and Carvalho, 2020). Designs for quotes in the Quote View (Fig. 1g) and in users' personal journals could be created automatically based on the colors of the movie scenes and the emotions conveyed, with an approach similar to (Kim and Suk, 2016), or in styles created or selected by the users for inspiration and self-expression (Nave et al., 2016).
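A very simple stand-in for extracting a key color from a movie scene is to quantize pixel colors into coarse bins and average the most populated bin. This is a dominant-color heuristic chosen for illustration, not the method of Kim and Suk (2016); the function name and the bin count are assumptions.

```python
from collections import Counter


def key_color(pixels, levels=4):
    """Average RGB of the most populated coarse color bin.
    Simple dominant-color heuristic, not the method of Kim and Suk (2016)."""
    if not pixels:
        raise ValueError("no pixels")
    step = 256 // levels  # bin width per channel

    def bin_of(p):
        return (p[0] // step, p[1] // step, p[2] // step)

    top_bin, _count = Counter(bin_of(p) for p in pixels).most_common(1)[0]
    members = [p for p in pixels if bin_of(p) == top_bin]
    return tuple(round(sum(p[c] for p in members) / len(members)) for c in range(3))


# Pixels would come from a decoded movie frame; here a tiny made-up sample.
frame = [(240, 20, 20), (250, 30, 10), (0, 0, 255)]
print(key_color(frame))
```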

ACKNOWLEDGEMENTS

This work was partially supported by FCT through funding of the AWESOME project, ref. PTDC/CCI/29234/2017, and LASIGE Research Unit, ref. UIDB/00408/2020.

REFERENCES

Ali, S. O., Peynircioğlu, Z. F., 2006. Songs and emotions: are lyrics and melodies equal partners? Psychology of Music, 34(4), pp. 511-534.

Bernardino, C., Ferreira, H. A., Chambel, T., 2016. Towards Media for Wellbeing. In Proc. of ACM TVX 2016, ACM, pp. 171-177.

Chambel, T., 2011. Towards Serendipity and Insights in Movies and Multimedia. In Proc. of International Workshop on Encouraging Serendipity in Interactive Systems, Interact 2011, pp. 12-16.

Chambel, T., Carvalho, P., 2020. Memorable and Emotional Media Moments: reminding yourself of the good things! In Proceedings of VISIGRAPP 2020 (HUCAPP: International Conference on Human Computer Interaction Theory and Applications), 13 pgs.

Chambel, T., Langlois, T., Martins, P., Gil, N., Silva, N., Duarte, E., 2013. Content-Based Search Overviews and Exploratory Browsing of Movies with Movie-Clouds. International Journal of Advanced Media and Communication, 5(1), pp. 58-79.

Chou, H. Y., Lien, N. H., 2010. Advertising effects of songs' nostalgia and lyrics' relevance. Asia Pacific Journal of Marketing and Logistics, 22(3), pp. 314-329.

Condit-Schultz, N., Huron, D., 2015. Catching the lyrics: intelligibility in twelve song genres. Music Perception: An Interdisciplinary Journal, 32(5), pp. 470-483.

Danescu-Niculescu-Mizil, C., Cheng, J., Kleinberg, J., Lee, L., 2012. You had me at hello: How phrasing affects memorability. In Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics: Long Papers-Volume 1, pp. 892-901. Association for Computational Linguistics.

Dickens, E., 1998. Correlating Teenage Exposure to Rock/Rap Themes with Associated Behaviors and Thought Patterns.

Ekman, P., 1992. Are there basic emotions? Psychological Review, 99(3), pp. 550-553.

Flintlock, S., 2017. The Importance of Song Lyrics: why lyrics matter in songs. Beat, Vocal Media. https://beat.media/the-importance-of-song-lyrics

Gen-ref: Genius API. https://docs.genius.com/

Hassenzahl, M., Platz, A., Burmester, M., Lehner, K., 2000. Hedonic and Ergonomic Quality Aspects Determine a Software's Appeal. In Proc. of ACM CHI 2000, The Hague, The Netherlands, pp. 201-208.

Hu, X., Downie, J. S., Ehmann, A. F., 2009. Lyric text mining in music mood classification. American music, 183 (5,049), 2-209.

Jenkins, T., 2014. Why does music evoke memories? Culture, BBC. http://www.bbc.com/culture/story/20140417-why-does-music-evoke-memories

Juslin, P. N., Vastfjall, D., 2008. Emotional responses to music: The need to consider underlying mechanisms. Behavioral and Brain Sciences, 31(5), pp. 559-575.

Kim, E., Suk, H. J., 2016. Key Color Generation for Affective Multimedia Production: An Initial Method and Its Application. In Proceedings of ACM Multimedia, ACM, pp. 1316-1325.

Logan, B., Kositsky, A., Moreno, P., 2004. Semantic analysis of song lyrics. In IEEE International Conference on Multimedia and Expo (ICME) (IEEE Cat. No. 04TH8763), Vol. 2, pp. 827-830. IEEE.

Lund, A. M., 2001. Measuring usability with the USE questionnaire. Usability Interface, 8(2), pp. 3-6.

Maehner, J., 2015. Under The Covers: Second Hand Songs That Matter. Cuepoint. https://medium.com/cuepoint/under-the-covers-5ffe85ac96d0

Moreira, A., Chambel, T., 2019. This Music Reminds Me of a Movie, or Is It an Old Song? An Interactive Audiovisual Journey to Find out, Explore and Play. In Proc. of VISIGRAPP 2019 (GRAPP: International Conference on Computer Graphics Theory and Applications, Interactive Environments Area), pp. 145-158.

MQuotes-ref: Movie Quotes API. https://juanroldan.com.ar/movie-quotes-api/

MxMatch-ref: musiXmatch API. https://rapidapi.com/musixmatch.com/api/musixmatch/details

Nave, C., Correia, N., Romão, T., 2016. Exploring Emotions through Painting, Photography and Expressive Writing: an Early Experimental User Study. In Proceedings of the 13th International Conference on Advances in Computer Entertainment Technology (ACE'16), ACM, 8 pgs.

Oliveira, E., Martins, P., Chambel, T., 2013. Accessing Movies Based on Emotional Impact. ACM/Springer Multimedia Systems Journal, 19(6), pp. 559-576.

ParDots-ref: ParallelDots, Emotion Detection API. http://apis.parallel-dots.com/text_docs/index.html#emotion

Plutchik, R., 1980. Emotion: A psychoevolutionary synthesis. Harpercollins College Division.

Russell, J. A., 1980. A circumplex model of affect. Journal of Personality and Social Psychology, 39(6), pp. 1161-1178.

Sasaki, S., Yoshii, K., Nakano, T., Goto, M., Morishima, S., 2014. LyricsRadar: A Lyrics Retrieval System Based on Latent Topics of Lyrics. In ISMIR, pp. 585-590.

Singh, A., 2017. Emotion Detection Using Machine Learning. ParallelDots Blog, April 21. https://blog.parallel-dots.com/product/emotion-detection-using-machine-learning/

SpotifyFtrs-ref: Spotify Audio Features. https://developer.spotify.com/web-api/get-audio-features/

Typke, R., Wiering, F., Veltkamp, R. C., 2005. A survey of music information retrieval systems. In Proceedings of the 6th International Conference on Music Information Retrieval, pp. 153-160. Queen Mary, University of London.

Wang, Y., Kan, M. Y., Nwe, T. L., Shenoy, A., Yin, J., 2004. LyricAlly: automatic synchronization of acoustic musical signals and textual lyrics. In Proceedings of the 12th International Conference on Multimedia, ACM, pp. 212-219.
