Heuristics and Usability of a Video Assessment Evaluation Tool for

Teachers

Sara Cruz¹ᵃ, Clara Coutinho²ᵇ and José Alberto Lencastre²ᶜ

¹School of Technology, Polytechnic Institute of Cávado and Ave, Campus Barcelos, 4750-810, Barcelos, Portugal

²Institute of Education, University of Minho, Campus Gualtar, 4710-057, Braga, Portugal

Keywords: Technology-enhanced Learning, Deeper Understanding, Usability.

Abstract: The article presents the design and development of an organized multimedia Web tool to help teachers

evaluate videos produced by students according to the JuxtaLearn learning process. We use a development

research methodology, fulfilling the following phases of the protocol: (1) preliminary investigation, (2)

theoretical embedding, (3) empirical testing and (4) documentation, analysis and reflection on process and

outcomes. We started with the exploratory analysis phase, which aimed to identify the scientificity and

pedagogical potential of the videos. Based on the data obtained in this phase, the tool was designed and further

developed. Usability evaluation tests were carried out with experts and the target audience in order to adapt

the product. Based on the results of usability testing, we can say that the prototype responded to the teachers'

needs, arousing their interest in promoting video production with their students.

1 INTRODUCTION

Video editing by students can lead to reflection on

their own learning (Otero et al., 2013; Adams et al.,

2013). The editing process can improve the quality of

reflection around concepts (Fadde, Aud, & Gilbert,

2009; Forman, 2000). Such reflection is considered

an important factor for learning (Novak, 2010). By

stimulating creativity through the video creation

process, students have the opportunity to reflect on

the information they collect and clarify possible

mistakes (Adams et al., 2013; Otero et al., 2013) and

identify doubts about the concepts addressed (Hechter

& Guy, 2010). Video construction allows a creative

process for success in understanding concepts,

leading to a shared understanding of a potentially

difficult theme or concept (Fuller & Magerko, 2011).

The use of video in an educational context has

aroused the interest of some researchers, but the

difficulty of evaluating the videos constructed by

students led us to think of a point-based evaluation

instrument that allows the teacher to estimate the

student's level of understanding just by viewing the

video. This reflection, coupled with the guidelines

obtained from the literature on video in education

and on knowledge assessment, triggered the

construction of an instrument that allows a teacher

who accompanied a student's video editing process to

estimate, by viewing the video, the student's level of

understanding of the content.

a https://orcid.org/0000-0002-9918-9290

b https://orcid.org/0000-0002-2309-4084

c https://orcid.org/0000-0002-7884-5957

In this article, we present the design and

development of a video assessment tool to be used by

teachers who applied the JuxtaLearn learning process.

The development process of this assessment

instrument took place over the following phases: (1)

preliminary investigation, (2) theoretical embedding,

(3) empirical testing and (4) documentation, analysis

and reflection on process and outcomes.

We started with an exploratory analysis phase and

based on the data obtained and the existing theoretical

references on Bloom's Digital Taxonomy, we

proceeded to the design and subsequent construction

of an instrument for observing the videos produced

with the JuxtaLearn methodology that we present

here. Throughout this process, evaluation tests were

carried out with experts and with the target audience

in order to adapt it to that audience.

Cruz, S., Coutinho, C. and Lencastre, J.

Heuristics and Usability of a Video Assessment Evaluation Tool for Teachers.

DOI: 10.5220/0009570504450454

In Proceedings of the 12th International Conference on Computer Supported Education (CSEDU 2020) - Volume 2, pages 445-454

ISBN: 978-989-758-417-6

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORK

2.1 The JuxtaLearn

The lack of motivation, coupled with a certain social

predisposition to accept poor results in the STEM

areas, does not help students to overcome obstacles.

Creative video editing is one possible approach

(Adams et al., 2013; Otero et al., 2013), and it can

make an important contribution to giving the

student a clearer view of the concepts. The

JuxtaLearn project focuses on the use of video to

stimulate students' curiosity about difficult concepts,

called Threshold Concepts in the literature, leading to

deep learning by the student (Adams et al., 2013).

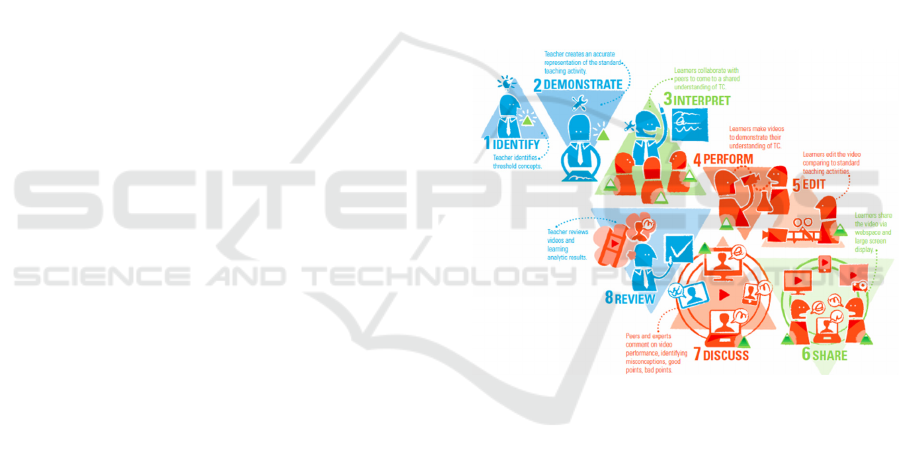

The JuxtaLearn learning process is a cyclical

process consisting of eight stages, centered on the

student (Martín et al., 2015).

In step 1, based on his or her previous experience

with students, the teacher identifies concepts that are

difficult for students to understand. Each of these concepts

can be divided into simpler concepts, called

stumbling blocks.

In step 2, the teacher creates one or more

activities around the identified stumbling blocks. In

this phase, the teacher also creates a diagnostic quiz

where each question is constructed in order to focus

on one or more of the identified stumbling blocks.

In step 3, the teacher applies the diagnostic

questionnaire to students to determine their level of

understanding about the concept identified. Then, the

result and responses to the diagnostic questionnaire

are analysed by the teacher and each student.

In step 4, the teacher proposes that students,

organized in groups, create a storyboard to explain a

concept. The storyboard is a framework for the

development of ideas and the overall visual design of

a video (Hartnett, Malzahn & Goldsmith, 2014). Its

construction assumes planning of sequentially related

actions, promoting a different view of the concept.

In step 5, students, based on the storyboard they

created, capture an image, choose sound and edit the

video. The greater the student's involvement in video

editing, the greater the didactic effectiveness of this

process (Cruz, 2019). The editing process can

improve the quality of this reflection, since it

structures the reflection and encourages students to focus on the

content itself (Fadde, Aud, & Gilbert, 2009; Adams

et al., 2013).

In step 6, students share their work and reflect

together. Reflection is considered an important factor

for learning (Novak, 2010) and leads to a deep

understanding of scientific concepts allowing

students to identify misinterpretations and doubts

about the concepts (Hechter & Guy, 2010).

In step 7, discussion is promoted between

students and teachers allowing the social construction

of knowledge, a better understanding of concepts, the

presentation of videos, debate on the methodologies

adopted and possible improvements for their

implementation.

In step 8, students fill out the diagnostic

questionnaire again to verify their knowledge of the

concept and to evaluate any improvement.
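
The eight steps above form a cycle, which can be sketched as a small data structure. This is only an illustrative sketch: the short stage labels and the helpers `next_stage` and `stage_name` are hypothetical names introduced here, not part of the JuxtaLearn project.

```python
# Illustrative sketch of the eight-step JuxtaLearn cycle described above.
# The stage labels are shorthand invented for this example.
JUXTALEARN_STAGES = [
    "identify stumbling blocks",   # step 1 (teacher)
    "create activities and quiz",  # step 2 (teacher)
    "apply diagnostic quiz",       # step 3
    "create storyboard",           # step 4 (student groups)
    "capture and edit video",      # step 5
    "share and reflect",           # step 6
    "discuss with teachers",       # step 7
    "repeat diagnostic quiz",      # step 8
]


def _check(step: int) -> None:
    """Raise ValueError unless `step` is a valid 1-based step number."""
    if not 1 <= step <= len(JUXTALEARN_STAGES):
        raise ValueError("step must be between 1 and 8")


def next_stage(step: int) -> int:
    """Return the step that follows `step`; step 8 wraps around to step 1."""
    _check(step)
    return step % len(JUXTALEARN_STAGES) + 1


def stage_name(step: int) -> str:
    """Return the shorthand label for a 1-based step number."""
    _check(step)
    return JUXTALEARN_STAGES[step - 1]
```

Wrapping from step 8 back to step 1 reflects the cyclical nature of the process: the repeated diagnostic questionnaire can feed a new round of stumbling-block identification.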

The JuxtaLearn process is a way to support

students in the deep understanding of a concept

throughout a creative process in a stimulating and

flexible approach, characteristic of the teaching of

threshold concepts. The use and editing of the video

is a natural process and can play a relevant

educational role (Adams et al., 2013). Figure 1

presents a representation of this

process.

Figure 1: The JuxtaLearn Process.

2.2 Bloom's Taxonomy

Bloom's taxonomy emerged as a result of work

developed by several universities in the United States,

led by Benjamin S. Bloom. Bloom organized a

hierarchical structure made up of educational

objectives. This structure makes it possible to classify

learning into three broad domains with different levels

of depth: cognitive domain, affective domain and

psychomotor domain.

Bloom's Digital Taxonomy is a tool that follows the

thinking process and structures the cognitive

domain at levels of increasing complexity. So, to

understand a concept, it is necessary to first remember

it and, in order to apply the knowledge, it is necessary

to understand it (Churches, 2009). The categorization

used in Bloom's digital taxonomy not only presents

learning outcomes but also a dependency relationship

between learning levels, because the results are

cumulative. Cognitive development benefits from

hierarchical structuring that allows students to be able

to transfer, in a multidisciplinary way, the knowledge

acquired (Ferraz & Belhot, 2010). In Bloom's

perspective (taxonomy), lower levels of learning

provide a basis for higher levels of knowledge and

higher-order thinking involves overcoming

difficulties and the ability to relate and combine new

information from a given context (King, Goodson &

Rohani, 1998). This cognitive development makes it

possible to reach Higher Order Thinking

Skills (HOTS). The concept of Higher Order

Thinking Skills arises from learning taxonomies,

namely Bloom's Taxonomy, where achieving higher

order thinking involves the acquisition of complex

skills, such as critical thinking and problem solving.

Bloom's Digital Taxonomy is a hierarchical structure

that classifies learning objectives in the cognitive

domain at six levels (Doyle & Senske, 2017). According to

Ferraz and Belhot (2010), using Bloom's taxonomy in

an educational context supports the development of

assessment tools and the organization of differentiated

strategies in order to stimulate performance in

knowledge acquisition levels.
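
The cumulative dependency between levels described above can be illustrated with a short sketch. The level names are the six levels of Bloom's Digital Taxonomy; the `BloomLevel` enum and the `prerequisites` helper are hypothetical names used only for illustration, and the strict linear ordering is a simplifying assumption.

```python
from enum import IntEnum
from typing import List


class BloomLevel(IntEnum):
    """Six cognitive levels, ordered from lowest to highest."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYSE = 4
    EVALUATE = 5
    CREATE = 6


def prerequisites(level: BloomLevel) -> List[BloomLevel]:
    """Return every lower level, since results are treated as cumulative."""
    return [lower for lower in BloomLevel if lower < level]
```

For example, `prerequisites(BloomLevel.APPLY)` returns the levels `REMEMBER` and `UNDERSTAND`, mirroring the idea that applying knowledge first requires remembering and understanding it.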

Rahbarnia, Hamedian and Radmehr (2014), in a

study of the relationship between each of the multiple

intelligences and the solving of mathematical

problems, performed multiple intelligence scans

based on Bloom's Digital Taxonomy. They

concluded that Bloom's Taxonomy is a useful tool, as

it makes it possible to go beyond individual cognitive

processes and focus math assessment on complex aspects of

learning and thinking. The results of these authors

show that intelligences such as mathematical logic

and spatial visualization are positively related to

solving mathematical problems. Abstraction of

content develops through the cognitive

transition from concrete, real

situations to abstract ones (Ferraz & Belhot,

2010).

2.3 Construction of Videos by Students

The creation of video content and its integration in

learning activities with students are extremely

important for teachers in the twenty-first century (Kumar &

Vigil, 2011). The use of a cell phone or tablet opens

the possibility of new pedagogical approaches using

video, because it makes it possible to record video, edit it and

share it (Müller, Otero, Alissandrakis & Milrad,

2014). The use of video in a pedagogical environment

can facilitate the understanding of content, involving

students in the teaching process itself, as it favours

their participation in the learning context (Cruz,

Lencastre, Coutinho, José, Clough & Adams, 2017).

The creation of videos through meaningful

experiences for students allows the creation of

engaging and favourable learning moments for the

acquisition of knowledge (Otero, Alissandrakis,

Müller, Milrad, Lencastre, Casal & José, 2013).

Dadzie, Muller, Alissandrakis and Milrad (2016)

reported two student-centered studies for social and

constructive learning of concepts through creative,

collaborative and reflective video composition. In

their study, they explored the influence of software

designed to increase students' reaction and

collaboration in video editing. The results obtained

by these authors led us to suggest the use of mobile

devices to access shared information, to increase

students' ability to follow the constructive learning

process.

Aspects such as the elements of the video design,

the pedagogical component involved in the process

and ethics are fundamental for the integration of this

technology in the school environment. There are

several ways to integrate video into the learning

process, helping students to build strong cognitive

structures for understanding (Dadzie, Muller,

Alissandrakis & Milrad, 2016). Video thus

becomes an enriching resource for the school

environment, capable of offering a clear focus,

experiences from the natural world, historical

retrospectives and an understanding of current issues,

of clarifying concepts and of providing unique

visual experiences that favour the teaching

process and the acquisition of skills by students.

Creative performance through the creation of

participatory videos is a way to involve students in

science, technology, engineering and mathematics

(STEM), arousing the curiosity of students and the

public (Hartnett, Malzahn, & Goldsmith, 2014). The

video presents a narrative structure that manages to

captivate the viewer's attention and encourages

constructive learning (Adams, Hartnett, Clough,

Grand & Goldsmith, 2014). In addition to the

motivational feature that characterizes it, video

editing can be a good form of assessment, as students

tend to think more carefully about what is presented.

Video editing should focus on the process, not the

product (Adams, Rogers, Coughlan, Vander-Linden,

Clough, Martin, Haya & Collins, 2013). This process

involves students so that they are an active part of the

learning process. The greater the student's

involvement in video editing, towards creative

manipulation and the discovery of solutions, the

greater the didactic effectiveness of this process.

Sengül and Dereli (2013) conducted a study to

investigate the effect of using

cartoons on students' attitudes towards mathematics

when cartoons are used to teach integers. The authors

involved sixty-one students and concluded that

teaching through cartoons positively influenced the

students' affective characteristics and their attitude

towards the discipline of mathematics. Creative

performance, through the creation of participatory

videos, encourages deeper reflection and

understanding (Hartnett, Malzahn, & Goldsmith,

2014). The data obtained by Sengül and Dereli (2013)

are also consistent with the finding that it is

important to use conceptual cartoons to teach abstract

disciplines, such as mathematics, and to develop

students' affective characteristics in relation to this

discipline. Loch, Jordan, Lowe and Mestel

(2014) also carried out image capture work with

mathematical concepts, through which they

investigated whether short video recordings

explaining mathematical concepts, which are

prerequisites for certain content, are a useful tool for

improving student learning. They concluded that

viewing short explanatory videos can be useful in

reviewing concepts that are prerequisites for

mathematics. Lencastre, Coutinho, Cruz, Magalhães,

Casal, José, Clough and Adams (2015) developed a

study that involved the organization of a video

contest, in which students were guided in the creation

of videos around specific curricular topics. The

results obtained suggest that students are receptive to

video creation.

3 METHOD

To understand how creating and producing a video on

a concept helps students to decode and

understand it, we based our analysis on the learning

objectives described in Bloom's Digital Taxonomy.

We believed that creative editing and video

production about a concept that students find difficult

could foster the development of knowledge at a

higher level.

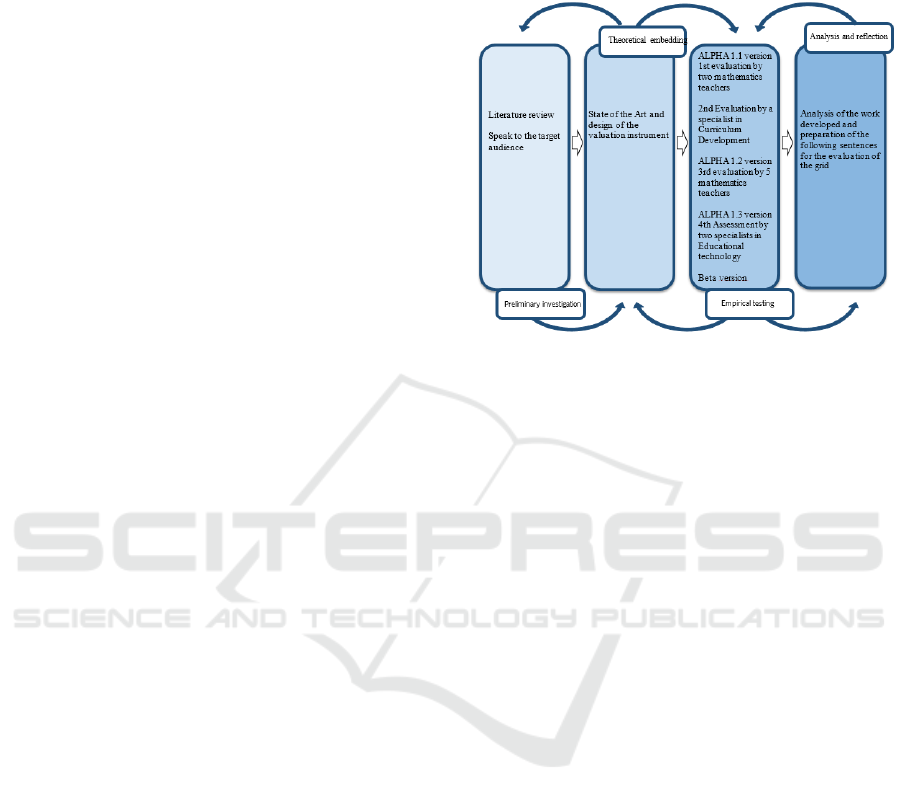

The development and evaluation process of the

explanatory video analysis grid followed a

Development Research methodology.

Development Research combines practical

and theoretical approaches, allowing not only the

analysis of a phenomenon but also the grounded

construction of a model. The construction of the

evaluation instrument made it possible to analyse the impact

of its use in an educational environment (Lencastre,

2012). Our methodology is summarized in

Figure 2.

Figure 2: Methodology adopted for developing the tool.

3.1 Participants

In the first phase, preliminary investigation, we had

the participation of nine teachers from various subject

areas, who scientifically and pedagogically

validated the content presented in the videos, and of eight

teachers, one from each subject area of the

videos produced. As our work is about students

editing video on math concepts, in the empirical

testing phase three mathematics teachers were

involved, essentially in the construction of the various

versions, so that their profile would be similar to the

target audience. Three experts also took part, one in

Curriculum Development and two in Educational

Technology.

3.2 Development of the Tool

In the first phase, the literature on Bloom's Taxonomy

was consulted and the informal opinions of some

teachers were gathered regarding the use of this assessment tool,

their perspectives and, in particular, its application in

practice.

Google Forms technology was used to build the

online version of the tool. Throughout the process of

designing the multimedia prototype, evaluation tests

with experts and users were carried out

simultaneously with the bibliographic research, in

order to achieve usability conditions.

3.2.1 Preliminary Investigation

The exploratory test with the target audience was

divided into two phases and aimed to survey and

identify the knowledge of a group of teachers about

the scientificity and pedagogical potential of the

video, to understand their difficulties and

suggestions.

The first stage of the test was carried out through

a questionnaire survey, which allows estimating

attitudes, gauging opinions or collecting other

information from respondents. The questions were

designed to establish: (i) whether the

video is scientifically correct, (ii) whether it has pedagogical

potential and (iii) whether the teacher would use the video

in his or her classes. This questionnaire included closed

response items and open response items, using a

5-point Likert scale (from 1 =

strongly disagree to 5 = strongly agree). We opted for

the Likert scale to measure the teachers' evaluation of

the videos. This survey was applied to a

group of teachers who accompanied a group of

students in the making of an explanatory video, all

from the disciplinary area of the content covered in

the video. We chose these teachers because they are

aware of the process carried out during creative

editing and because they have pedagogical and

scientific mastery of the content covered in the video.

With this test we intended to infer the scientific

and pedagogical value given by the teacher to the

videos whose execution he or she followed. Following

Tuckman's guidelines (2000), we applied the

questionnaire to a group of teachers who are part of

the population of teachers under study. A copy of the

online questionnaire was sent to each of the

teachers. These teachers were chosen because they

had participated in an initiative

promoted by the Portuguese team of the

ANONYMOUS project and followed a group of

students during the process of creating an explanatory

video. After the first validation phase already

described, there was a need to change some aspects.

Thus, we have divided the domain of scientific

evaluation into two subdomains: (i) scientific

correction and (ii) information correction.
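
A minimal sketch of how responses on the 5-point Likert scale described above could be summarized per question. The function name `summarize_item`, the item labels and all response values are hypothetical and invented for illustration; they are not the data collected in this study.

```python
from collections import Counter
from statistics import median

LIKERT_MIN, LIKERT_MAX = 1, 5  # 1 = strongly disagree, 5 = strongly agree


def summarize_item(responses):
    """Return the median and the share of agreement (scores of 4 or 5)."""
    for score in responses:
        if not LIKERT_MIN <= score <= LIKERT_MAX:
            raise ValueError(f"score {score} is outside the 1-5 scale")
    counts = Counter(responses)
    agree = counts[4] + counts[5]
    return {"median": median(responses), "agreement": agree / len(responses)}


# Made-up responses for illustration only; these are NOT the study's data.
example = {
    "scientifically correct": [5, 4, 5, 4, 5],
    "pedagogical potential": [4, 4, 5, 3, 5],
    "would use in class": [5, 2, 4, 4, 5],
}
summary = {item: summarize_item(scores) for item, scores in example.items()}
```

The median is used rather than the mean because Likert scores are ordinal; this is a design choice of the sketch, not a statement about the analysis performed by the authors.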

The second stage of the test was done by filling in a

grid. In this grid, the questions were designed

to allow a scientific assessment under two

subdomains: (i) scientific correction and (ii)

information correction. In this phase we involved

eight teachers, each of whom had to score each of the

dimensions from 1 to 4. In this step, the same

videos from the previous stage were evaluated. We

chose teachers from the same school and from the

subject area of the content of the videos produced

who had not followed the process of creating the

videos, so that we could compare the results.

The results obtained by this test constituted the

basis for the collection of information in the literature

and for the creation of an instrument that allows a

teacher to estimate the understanding achieved with

the production of a video with the JuxtaLearn

methodology.

3.2.2 ALPHA 1.1 Version

Based on the literature and Bloom's Digital

Taxonomy, a first version of the evaluation

instrument was built with the aim of detecting errors

in its construction and identifying situations to

improve.

Bloom's Digital Taxonomy contemplates the

following phases: remember, understand, apply,

analyse, evaluate and create. The general idea of this

taxonomy is that lower levels of cognition support

higher levels (Doyle & Senske, 2017). We intend to

carry out the analysis of explanatory videos on math

concepts created by students under the supervision of

a teacher. So, the assessment tool provides a method

that can support teachers / educators in this analysis.

Thus, this assessment instrument was organized

according to the dimensions of Bloom's Digital

Taxonomy. The ALPHA 1.1 version was designed to

evaluate six dimensions: (i) create, (ii) evaluate, (iii)

analyse, (iv) apply, (v) understand and (vi) remember.

We used the six levels of cognitive processes

in Bloom's Digital Taxonomy to name the

dimensions. Each of these dimensions was in turn

subdivided into two sub-dimensions: thinking and

communication. Each of these sub-dimensions was

also divided into indicators.
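
The structure of the ALPHA 1.1 grid (six dimensions, each with the sub-dimensions thinking and communication, each holding indicators) can be sketched as a nested mapping. The helper names `empty_grid` and `add_indicator` are hypothetical, and the indicator text is a placeholder, because the allocation of indicators to cells is not given here.

```python
DIMENSIONS = ["create", "evaluate", "analyse", "apply", "understand", "remember"]
SUB_DIMENSIONS = ["thinking", "communication"]


def empty_grid():
    """Build a dimension -> sub-dimension -> indicator list mapping."""
    return {dim: {sub: [] for sub in SUB_DIMENSIONS} for dim in DIMENSIONS}


def add_indicator(grid, dimension, sub_dimension, text):
    """Attach an indicator to one cell of the grid."""
    grid[dimension][sub_dimension].append(text)


grid = empty_grid()
# Placeholder wording: the source does not say which indicator belongs where.
add_indicator(grid, "create", "thinking", "<indicator text>")
```

Keeping the same two sub-dimensions under every dimension matches the description above; each cell then holds as many indicators as the instrument defines for it.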

3.2.3 Heuristic Assessment Tests

Heuristic evaluation allows a continuous

evaluation of the whole process, involving

mathematics teachers and specialists who evaluated the tool

based on a set of usability principles, called

heuristics. It is an accessible method that seems to

predict the problems of the end user (Mack &

Nielsen, 1994).

The ALPHA 1.1 version was evaluated only by

mathematics teachers, in two phases. With the

usability tests we intended to find difficulties in the use

of the evaluation instrument and problems of application

with the teachers, and to make recommendations that

would improve it. Following the guidelines of

Lencastre and Chaves (2007), we carried out this

process throughout the design and development of the

assessment instrument.

The first heuristic assessment test was carried out

informally with mathematics teachers, who viewed

and filled in the tool while we recorded their

observations and the difficulties they encountered,

complemented by the researcher's self-observation.

The teachers explored the evaluation instrument

freely, without defined criteria and in an order of

their choosing, so that we could more easily detect

mistakes that could be corrected. The information collected during the

heuristic evaluation was used to reformulate the tool

according to the observations made by the teachers,

the recommendations of the experts and the needs

diagnosed by us.

The second heuristic assessment test was done by

a specialist in Curriculum Development. The test was

previously scheduled, during which the specialist

made a free exploration of the video evaluation

instrument.

The third heuristic assessment test was carried out

with five math teachers who had accompanied some of

their students in the creative editing of videos under

the JuxtaLearn methodology.

The fourth test was carried out by specialists in

the field of educational technology. One of the

specialists is male and the other female. The two

experts have a PhD in Education, specializing in

Educational Technology. The evaluation by experts is

essential to allow the detection of mistakes that can

be altered and corrected (Lencastre & Chaves, 2007).

These tests were previously scheduled with each of

the specialists and their responses were recorded. In both

heuristic evaluation tests, we used paper and writing

material to make the records and the computer to

search for anything that was needed.

The evaluation test with the educational

technology experts resulted in the transition from a

paper version of the evaluation instrument to a digital

version.

3.2.4 ALPHA 1.2 Version

In this version we adjusted the order of the

information presented, clarified the text of the

indicators and better adapted the indicators to

Bloom's Digital Taxonomy and the JuxtaLearn

methodology. The tool was reformulated and, with

the 3rd heuristic evaluation test, we intended to find

difficulties in using the tool, to detect situations to

correct and to consider opinions in order to improve it

and make it more suitable for use. With this test we

intended to bring it closer to the target audience, so we

involved six teachers, all of whom were familiar with

the process.

The test was previously scheduled with the group

of teachers and was held at the school where they

taught in a room equipped with computers. Each

teacher had access to a computer and the printed

assessment instrument. We asked them to choose one of

the videos made by their students and evaluate it by

filling in the tool. We also asked them to tell us their

opinion about the assessment instrument, listing

mistakes to correct and ambiguous or hard-to-interpret

words, in order to improve them. With this heuristic

evaluation test, we also intended to understand the

receptivity and acceptability of the evaluation

instrument with teachers who accompanied their

students in preparing the video, in order to improve it.

Heuristic evaluation is a simple, fast and relatively

inexpensive method for obtaining results that

help to improve a product. During

the test, some questions were asked to highlight some

of the potential of the grid in the analysis of the

information transmitted by the video. Throughout the

test, notes were taken in the logbook, which made it

possible to complement the collection of information

during the test. In this way, we tried to perceive

possible difficulties in the interpretation of the

information provided in the evaluation instrument

and weaknesses in the instrument's ability to evaluate

the student's creative video editing work. We also

intended to evaluate the consistency of the indicators of

each dimension with the JuxtaLearn learning process

implemented by the student and the prevention of

errors.

3.2.5 ALPHA 1.3 Version

In the construction of the ALPHA 1.3 version, we

took into account the suggested changes and corrected

the errors indicated. This version continued to be built

on paper based on Bloom's Digital Taxonomy and in

addition to some term simplifications, the main

changes in this version were structural.

We started by reorganizing the information

related to each dimension, which initially appeared all

at the beginning of the assessment instrument and

now appears when it is needed. The dimensions are

also separated, and the dimension name is no longer

beside the indicators but appears at the

top, immediately before the description.

With the 4th heuristic evaluation test, with

experts in Educational Technology, we intended to find

difficulties in the use of the evaluation instrument,

namely in the interpretation of information and

problems in adapting the indicators to the video

evaluation. We also intended to detect errors or

situations where the usability criteria are not met,

which can be improved. Thus, the video evaluation

instrument was subjected to an evaluation by two

specialists in the field of Educational Technology.

The information collected with this heuristic

assessment served to reformulate the video

assessment tool based on the experts'

recommendations.

This test was previously scheduled with the two

specialists, on a date and place established for that

purpose. Data were collected using the

think-aloud method: while the experts were

analysing the video evaluation instrument, they

talked and we recorded what was said for

later review. The experts had a paper version of the

evaluation instrument and performed a free

exploration of the document, without previously

defined criteria, in order to detect anomalies that we

could correct. We asked them to analyse the video evaluation

tool and verbalize the strengths, weaknesses and

suggestions for improvement. The material used in

this test was written material on paper for registration,

the printed video evaluation instrument and the

computer for viewing videos created by the students.

Throughout this test, the experts made recommendations and

suggestions to clarify the information present in the

indicators and detected errors, which were corrected in

the following version (Beta).

3.2.6 Beta Version

With the results of the heuristic evaluation tests

carried out and the experts' guidelines, we made the

necessary adaptations and changes, going forward to

the Beta version of the video evaluation instrument.

In the heading of the new version we present the

objective of the evaluation instrument, and we also

replaced the title with the one suggested.

To make the video evaluation tool accessible, it

became available online through a Google Forms

form. This led to some changes in the initial structure.

We chose to place the first dimension to be evaluated

[Remember] next to the header of the evaluation

instrument and the dimensions on separate pages to

facilitate the analysis and completion by the

evaluating teacher. Going online allows the teacher,

after filling in the form, to submit the assessment;

the data are then organized in a single document that

allows further analysis. In this way, the same teacher

can evaluate several videos and, in the end, analyse

the results obtained.
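
As a rough sketch of the further analysis mentioned above, submitted assessments could be exported as CSV text and averaged per video. The column names, the sample rows and the function `mean_scores_by_video` are all hypothetical and invented for illustration; they do not describe the actual form or any collected data.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical export: one row per submitted assessment. Column names and
# scores are invented for this example.
EXPORT = """video,remember,understand,apply
video A,4,3,2
video B,2,2,1
video A,3,3,3
"""


def mean_scores_by_video(csv_text):
    """Average each numeric column over all assessments of the same video."""
    rows_by_video = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        video = row.pop("video")
        rows_by_video[video].append({k: int(v) for k, v in row.items()})
    return {
        video: {col: mean(r[col] for r in rows) for col in rows[0]}
        for video, rows in rows_by_video.items()
    }


results = mean_scores_by_video(EXPORT)
```

Grouping by video lets a teacher who has assessed several videos, or several teachers who assessed the same video, compare the scores dimension by dimension.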

4 RESULTS

In the first stage of the preliminary investigation, all

teachers agreed that the videos were scientifically

correct and that they had pedagogical potential. Only

five teachers indicated that they totally agreed with the

scientificity and pedagogical potential of the video.

Only one of the teachers showed no desire to use the

video in their classes.

In the second stage of the preliminary

investigation, with regard to scientific correction, the

teachers reported that most of the videos showed an

excellent mastery of concepts. Regarding the

correction of information, the teachers reported that

the videos presented well-articulated information,

without grammatical errors or scientific

language inaccuracies. Teachers said that the videos

were scientifically correct and had educational

potential. They pointed out only some inaccuracies in

the explanation of the information, while

recognizing that the information presented by students

in the videos was well articulated.

In the 1st heuristic evaluation test with two

mathematics teachers, the teachers were informed that

the test consisted of assessing the clarity of the

instructions in relation to the mathematical

concept covered. The evaluators started by viewing a

video on divisibility criteria and reading the text of

the evaluation tool. We noticed that the way the

sub-dimensions were presented and the fact that they were

the same in each dimension created some confusion

in the interpretation of the grid. Doubts also arose

regarding the meaning of the grid's dimensions.

Teachers questioned us about what each

dimension meant and what it implied for the video

evaluation process. We realized that we needed to

further adjust the information contained in the

indicators to the stages of the JuxtaLearn learning

process, to facilitate its interpretation for the user. We

also noticed that some of the indicators were not clear

enough to allow a quick response. Teachers were

unable, for example, to respond to the indicators

“plan a coherent explanation structure”, “formulate

hypotheses” and “list the essential aspects of the

information presented”. The indicator “marking the

key aspects of the concept” and the indicator “shows

understanding about the concept” also raised doubts

and different interpretations regarding what they

referred to and how they were intended to be marked. The

indicator “use mathematically correct and clear

terminology” in the opinion of one of the teachers

should have only the word “correct”, because

according to this teacher, the word “clear” is

understood to be correct already.

In the 2nd heuristic evaluation test, with an expert

in Curriculum Development, the information

collected served to reformulate the

tool according to his recommendations. In this test,

previously scheduled, we presented the expert with the

ALPHA 1.1 version on paper. We also presented an

example of a video created by a student so that he

could explore it. The expert read, analysed and

performed a free exploration without previously

defined criteria, in order to detect mistakes and

irregularities in the evaluation instrument to be

corrected by us. The test was carried out using the

think aloud data collection method. In this way, the

expert commented aloud on his observations while

analysing each of the dimensions of the evaluation

instrument. During the test, the analysis verbalized by

the expert and his reaction to the information

presented were recorded for later analysis.

The second expert holds a doctorate in Education, is a

specialist in curriculum development and the author of

several presentations in the field of assessment. He

currently works as a teacher in a group of schools in

the north of the country and as coordinator of several

projects at school level. The specialist started by

reading the video evaluation instrument, then viewed

the example of one of the videos made by the students

and then analysed each of the dimensions of the tool.

He identified some problems, notably the lack of an

explanation of what each of the dimensions presented

throughout the assessment instrument means. A

different organization of the indicators was

suggested, organizing them in order of execution in

the action. He also suggested the removal of the side

numbering in each of the indicators and that we add

at the top of each dimension the phrase [The student

can…], to make it clearer what we want to evaluate.

He suggested that we standardize the categories,

putting them in the same verb tense, for example

[Describes], [Understands]. The expert also

suggested that the last indicator of each dimension

should use the word of the dimension itself

corresponding to a more advanced level of

understanding, for example, [remember] in dimension

"A" and [understand] in dimension "B".

Throughout this test, the expert made suggestions that

were addressed in the next version of the

video assessment instrument. At the beginning of the

tool we present a brief description of each of the

dimensions. We reorganized the indicators according

to the sequence of steps in the JuxtaLearn learning

process. We removed the numbering in each of the

indicators. We have standardized some terms used in

the text of the indicators. We reviewed the verbal

forms used in each of them and added to each dimension

an indicator with the word used to characterize the

dimension. We also simplified the presentation of

each of the dimensions, presenting in the new version

only its name.

With the 3rd heuristic evaluation test, we realized

that we would have to better clarify some of the

indicators. The indicator [clarifies the obstacles] of

the [remember] dimension, the indicators [Focuses

presentation on information about the concept],

[informs about the concept] and [deconstructs the

concept] of the [Analyze] dimension raised doubts

regarding their interpretation, namely what was

expected to be evaluated with these indicators. As the

test progressed, we also noticed that some teachers

had difficulty distinguishing the indicator [presenting

information for the perception of the concept, without

which it would not be noticeable]. Still in this

dimension, three of the teachers asked us what the

difference was between the indicator [makes

generalizations in relation to mathematical ideas and

procedures] and the indicator [makes inferences

about the information presented]. Similarly, in the

dimension [Create] the difference between the

indicator [building a connection of ideas capable of

exemplifying the concept] and the indicator

[producing an explanation of the concept] also

generated in the teachers the feeling that they were

evaluating the same thing.

In the 4th heuristic evaluation test, the experts in

Educational Technology detected some mistakes and

suggested moving the video evaluation instrument

from paper to digital format. It was also suggested

that the title should become just [Video assessment

based on Bloom Digital Taxonomy]. Regarding the

supporting information that accompanied each of the

dimensions, they suggested that it be just [For each

statement, check the option that best applies to the

video you are evaluating]. In the response options,

they suggested replacing [Not applicable] with [I

don't know] to simplify the task for the evaluator. In

the [Recall] dimension, they proposed replacing the

word [Recognizes] in the first indicator with the word

[Identifies]. They suggested replacing the second

indicator with [Recognizes the obstacles that make up

the concept], removing the last two indicators and

adding the following [Recalls information related to

the content]. In the [Understand] dimension, the

experts suggested reducing the number of indicators

presented. In the [Apply] dimension, they proposed

replacing the word [uses] with [makes] and removing

the third indicator. In the [Analyse] dimension, they

suggested replacing the first indicator with

[deconstructs the concept into simpler components]

and eliminating the second, third and eighth indicators.

In the [Evaluate] dimension, experts suggested

replacing the indicators with the following:

[formulate hypotheses for explaining the concept],

[try an explanatory hypothesis], [judge the solution

found], [making value judgments about it and

evaluate the solution found]. In the [Create]

dimension, the experts suggested replacing the

indicator [underlining the supporting information,

without which the concept would not be perceived]

with [idealizing a coherent answer to explain the

concept], the indicator [building a link of ideas able

to exemplify the concept] with the indicator [draws a

logical sequence of ideas capable of explaining the

concept], and the indicator [build a correct

logical-mathematical explanation] with the indicator [build a

correct explanation from the scientific point of view].

They also pointed out the need to change the word

[created] to the word [create] in the last indicator.

The results obtained in this test led to the next

version in digital format. In the new version, we

corrected the title, supporting information and the text

of the indicators according to the suggestions that

were made by the experts. In the [Understanding]

dimension, we replaced the indicators with: Interpret

the concept, compare the concept with related

information, exemplify the concept in similar

situations and understand the essential aspects to

apply the concept.

5 DISCUSSIONS AND CONCLUSION

Seeking to answer the problem of the lack of an

instrument that allows evaluating videos produced by

the students themselves according to the JuxtaLearn

process (Cruz et al., 2017), this study aimed to

design and develop a tool capable of assisting

teachers in this task.

Throughout this article we describe the various

stages of developing a tool to evaluate the videos

produced according to the JuxtaLearn process.

Throughout the tool's creation stages, we followed

Nielsen's (1993) guidelines, according to which products

must be appealing and intuitive, and must allow

use with (i) ease of learning, (ii) efficiency in

performing tasks and (iii) satisfaction.

In the Analyse phase, in addition to a study of the

state of the art, we applied an exploratory test with the

target audience in order to understand their

characteristics, needs and interests. Then, in the

Design phase, we developed the content and drafted

the ALPHA version that we thought would meet the

needs of our target audience. We carried out the

heuristic evaluation by experts in order to detect

possible errors and solve them before the tool

was tested with the target audience. After correcting the

detected errors, in the Develop phase we applied the

tool to teachers similar to the target audience to see whether

they could easily learn to use the video evaluation tool

and to understand whether it was a resource they needed.

The pedagogical system is in need of a new

paradigm, to which the traditional school is unable to

respond, and of an active search for possibilities for

change that put the development of the

individual first, instead of the memorization of an infinity of

facts. Society is becoming dependent on technology

and new ways of integrating it into the learning

process, where being able to learn and adapt to the

new training skills needed is a basic skill (Laal, 2013).

It is necessary to learn based on research carried out

in the area of learning and focus teaching on

understanding and practice (Dadzie, Benton, Vasalou

& Beale, 2014). In general, all teachers were able to

evaluate videos produced by their students with the

tool. The application of the Beta version allowed us

to realize that the prototype was useful for teachers

who used it and may be useful for teachers in general.

It allows quick assessment of student knowledge.

It also seems appropriate to analyse in future

research whether the level of reflection achieved with the

use of the evaluation instrument for videos created with

the JuxtaLearn methodology contributes to changing

teachers' professional practice.

ACKNOWLEDGEMENTS

We would like to thank the teachers of the Palmeira

School who collaborated in the tests. We would also

like to thank the experts for their contributions in

improving the assessment tool.

REFERENCES

Adams, A., Rogers, Y., Coughlan, T., Van-der-Linden, J.,

Clough, G., Martin, E., & Collins, T. (2013), Teenager

needs in technology enhanced learning. Workshop on

Methods of Working with Teenagers in Interaction

Design, CHI 2013, Paris, France, ACM Press.

Adams, A., Hartnett, E., Clough, G., Grand, A., &

Goldsmith, R. (2014). Artistic participatory video-

making for science engagement, CHI 2014 annual

ACM SIGCHI Conference on Human Factors in

Computing Science, Toronto, Canada.

Cruz, S., Lencastre, J. A., Coutinho, C., José, R., Clough,

G., & Adams, A. (2017). The JuxtaLearn process in the

learning of maths’ tricky topics: Practices, results and

teacher’s perceptions. In Paula Escudeiro, Gennaro

Costagliola, Susan Zvacek, James Uhomoibhi and

Bruce M. McLaren (ed) Proceedings of CSEDU2017,

9th International Conference on Computer Supported

Education, Volume 1 (pp. 387-394). Porto, PT:

SCITEPRESS.

Cruz, S. M. A. (2019). A edição criativa de vídeo como

estratégia pedagógica na compreensão de threshold

concepts. (Doctoral thesis), Braga, Portugal.

Churches, A. (2009). Bloom's Digital Taxonomy.

Educational Origami. Retrieved from

http://edorigami.wikispaces.com/Bloom's+Digital+Taxonomy

Dadzie, A. S., Benton, L., Vasalou, A., & Beale, R. (2014).

Situated design for creative, reflective, collaborative,

technology-mediated learning. In Proceedings of the

2014 conference on Designing interactive systems (pp.

83-92). ACM.

Dadzie, A. S., Müller, M., Alissandrakis, A., & Milrad, M.

(2016). Collaborative Learning through Creative Video

Composition on Distributed User Interfaces. In State-

of-the-Art and Future Directions of Smart Learning

(pp. 199-210). Springer, Singapore.

Doyle, S., & Senske, N. (2017). Between Design and

Digital: Bridging the Gaps in Architectural Education.

Charrette, 4(1), pp. 101-116.

Fadde, P., & Sullivan, P. (2013). Using Interactive Video to

Develop Pre-Service Teachers’ Classroom Awareness.

Contemporary Issues in Technology and Teacher

Education, 13(2), pp. 156-174.

Ferraz, A. P. D. C. M., & Belhot, R. V. (2010). Bloom's

taxonomy and its adequacy to define instructional

objective in order to obtain excellence in teaching.

Gestão & Produção, 17(2), pp. 421-431.

Fuller, D., & Magerko, B. (2011, November). Shared

mental models in improvisational theatre. In

Proceedings of the 8th ACM conference on Creativity

and cognition (pp. 269-278). ACM.

Hartnett, E., Malzahn, N., & Goldsmith, R. (2014). Sharing

video making objects to create, reflect & learn. In

Learning through Video Creation and Sharing (LCVS

2014), September, Graz, Austria.

Hechter, R., & Guy, M. (2010). Promoting Creative

Thinking and Expression of Science Concepts Among

Elementary Teacher Candidates Through Science

Content Movie Creation and Showcasing.

Contemporary Issues in Technology and Teacher

Education, 10(4), pp. 411-431.

King, F. J., Goodson, L., & Rohani, F. (1998). Higher order

thinking skills. Retrieved January, 31, 2011.

Kumar, S., & Vigil, K. (2011). The net generation as

preservice teachers: Transferring familiarity with new

technologies to educational environments. Journal of

Digital Learning in Teacher Education, 27(4), pp. 144-

153.

Laal, Marjan. (2013). Lifelong learning and technology.

Procedia-Social and Behavioral Sciences 83, pp. 980-

984.

Lencastre, José Alberto & Chaves, José Henrique (2007).

Avaliação Heurística de um Sítio Web Educativo: o

Caso do Protótipo "Atelier da Imagem". In Dias, P.;

Freitas, C.; Silva, B.; Osório, A. & Ramos, A. (org).

Actas da V Conferência Internacional de Tecnologias

de Informação e Comunicação na Educação -

Challenges 2007. Braga: Universidade do Minho.

1035-1043. ISBN: 978-972-8746-52-0.

Lencastre, J. A. (2012). Metodologia para o

desenvolvimento de ambientes virtuais de

aprendizagem: development research. In Educação

Online: Pedagogia e aprendizagem em plataformas

digitais. Angélica Monteiro, J. António Moreira & Ana

Cristina Almeida (org.). Santo Tirso: DeFacto Editores.

pp. 45-54.

Lencastre, J. A., Coutinho, C., Cruz, S., Magalhães, C.,

Casal, J., José, R., Clough, G., & Adams, A. (2015). A

video competition to promote informal engagement

with pedagogical topics in a school community. In

Markus Helfert, Maria Teresa Restivo, Susan Zvacek

and James Uhomoibhi (ed.), Proceedings of CSEDU

2015, 7th International Conference on Computer

Supported Education, Volume 1, (pp. 334-340),

Lisbon: SCITEPRESS – Science and Technology

Publications.

Mack, R. L., J. Nielsen. 1994. Executive summary. J.

Nielsen and R. L. Mack, eds. Usability Inspection

Methods. John Wiley and Sons, New York, 1–24.

Martín, E., Gértrudix, M., Urquiza‐Fuentes, J., & Haya, P.

A. (2015). Student activity and profile datasets from an

online video‐based collaborative learning experience.

British Journal of Educational Technology, 46(5), pp.

993-998.

Mestel, E., Malzahn, N., & Goldsmith, R. (2014). Sharing

video making objects to create, reflect & learn. In

Learning through Video Creation and Sharing (LCVS

2014), September, Graz, Austria.

Müller, M., Otero, N., Alissandrakis, A., & Milrad, M.

(2014, November). Evaluating usage patterns and

adoption of an interactive video installation on public

displays in school contexts. In Proceedings of the 13th

International Conference on Mobile and Ubiquitous

Multimedia (pp. 160-169). ACM.

Nielsen, J. (1993). Usability Engineering. New Jersey:

Academic Press.

Novak, J. D. (2010). Learning, creating, and using

knowledge: Concept maps as facilitative tools in

schools and corporations. Taylor & Francis.

Retrieved from

http://rodallrich.com/advphysiology/ausubel.pdf.

Otero, N., Müller, M., Alissandrakis, A., and Milrad, M.

(2013). Exploring video-based interactions around

digital public displays to foster curiosity about science

in schools. Proceedings of ACM International

Symposium on Pervasive Displays, 4-5 June, 2013 -

Mountain View, California.

Tuckman, B. W. (2000). Manual de Investigação em

Educação. Fundação Calouste Gulbenkian.

Urquiza-Fuentes, J., Hernán-Losada, I., & Martín, E. (2014,

October). Engaging students in creative learning tasks

with social networks and video-based learning. In

Frontiers in Education Conference (FIE), 2014 IEEE

(pp. 1-8). IEEE.

CSEDU 2020 - 12th International Conference on Computer Supported Education
