User Reception of Babylon Health’s Chatbot

Daniela Azevedo(a), Axel Legay(b) and Suzanne Kieffer(c)

Université Catholique de Louvain, Louvain-la-Neuve, Belgium

Keywords:

User Experience, mHealth, Babylon Health, Chatbot, Artificial Intelligence.

Abstract:

Over the past decade, renewed interest in artificial intelligence systems prompted a proliferation of human-computer studies. These studies uncovered several factors impacting users’ appraisal and evaluation of AI systems. One key finding is that users consistently evaluated AI systems performing a given task more harshly than human experts performing the same task. This study aims to uncover another finding: by presenting an mHealth app either as AI or without the AI label and asking participants to perform a task, we evaluated whether users still consistently evaluate AI systems more harshly. Moreover, by picking young and well-educated participants, we also open new research avenues to be further studied.

1 INTRODUCTION

Upcoming breakthroughs in critical elements of tech-

nology will accelerate the development of artificial

intelligence (AI) and multiply its application to face

global economic, social, and ecological crises. Understanding AI’s capabilities as well as its limits is essential to progress towards context-mindful

models. Yet, little is known regarding everyday users’

reception of this thrilling and intimidating technology.

The worsening healthcare crisis deriving from an ageing population coupled with a global pandemic and a decrease in public health funding urges practitioners and administrators alike to find new solutions to provide affordable care. AI-powered systems are not

only cheaper in the long run, but also exponentially

increase the accuracy and computability required to

produce strategies individually tailored to each pa-

tient, making precision medicine possible and avail-

able to a majority of the population on a day-to-day

basis. With the help of AI, healthcare systems can

move away from one-size-fits-all types of care that

produce ineffective treatment strategies, which some-

times result in untimely deaths (Buch et al., 2018).

Recent years have seen a sharp increase in mHealth applications, both as websites and as mobile applications. The services offered by mHealth apps range from disease-specific, doctor-prescribed apps to generalist, occupation-based apps. However, consumers’ general reluctance to engage with AI for sensitive subjects makes implementing AI-based solutions complicated. User Experience (UX) offers a considerable arsenal of tools to measure the reception of such applications and improve them to increase adoption rates (Lew and Schumacher, 2020). By analysing the reception of chatbots, a particularly popular technology (Cameron et al., 2018), we aim to shed some light on this subject and thus facilitate further research.

(a) https://orcid.org/0000-0003-0426-3206

(b) https://orcid.org/0000-0003-2287-8925

(c) https://orcid.org/0000-0002-5519-8814

2 PREVIOUS RESEARCH

2.1 AI-based Technologies in Medicine

Artificial Intelligence (AI) is an umbrella term that refers to technologies ranging from machine learning and natural language processing to robotic process automation (Davenport et al., 2020). The idea behind the term AI is that programs, algorithms, systems and machines demonstrate or exhibit aspects of

intelligence and mimic intelligent human behaviour.

Several technological breakthroughs are expected to

galvanize the AI-researchers community and signifi-

cantly transform fields in which it is applied (Gruson

et al., 2019; Campbell, 2020). In particular, chatbots

are predicted to soon become users’ preferred inter-

face, over traditional webpages or mobile applications

(Cameron et al., 2018). In the United Kingdom, even before the pandemic, over a million users already preferred to use a chatbot app called Babylon – an app that uses a question-based interaction with users regarding their disease symptoms to establish a diagnosis – rather than contacting their National Health Service (NHS) (Chung and Park, 2019).

Azevedo, D., Legay, A. and Kieffer, S. User Reception of Babylon Health’s Chatbot. DOI: 10.5220/0010803000003124. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 2: HUCAPP, pages 134-141. ISBN: 978-989-758-555-5; ISSN: 2184-4321. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Despite reservations regarding the implementa-

tion of AI emerging from both users and healthcare

professionals (Zeitoun and Ravaud, 2019; Lew and

Schumacher, 2020), healthcare slowly departs from

an expert-led approach towards a patient-centred,

independent and self-sufficient one. Since the

democratisation of the internet, patients are increas-

ingly looking to (re)gain control over their health-

care choices (Dua et al., 2014) and the number

of people turning to websites and social media in

search of healthcare information is only increasing

(Chowriappa et al., 2014). AI-systems could offer a

more suitable alternative to expert-led healthcare, as

opposed to the raw healthcare information currently

available on the internet. Using AI-enabled applica-

tions allows users to become more active participants

in their own health, reduce costs, improve patient ex-

perience, physician experience and the health of pop-

ulations (Campbell, 2020).

User resistance increases as AI moves towards

context awareness (Davenport et al., 2020). Therefore, for AI to be successfully implemented, lay users’

perceptions and beliefs need to evolve. Researchers

in the early 2000s were already calling for greater at-

tention to consumer resistance regarding technology

alternatives (Edison and Geissler, 2003) – the more

reservations users express, the less likely they are to

adopt AI-based products. Somat (Distler et al., 2018)

describes the process of implementing and adopting

a new product as a journey through an acceptability-acceptation-appropriation continuum. The process spans subjective evaluations made before use (acceptability), after use (acceptation) and once the product has become a part of daily life (appropriation).

Holmes et al. (2019) argue that interacting with a

chatbot is more natural and more intuitive than con-

ventional methods for human-computer interactions.

A chatbot is an intelligent interactive platform that

enables users to interact with AI through a chatting

interface (Chung and Park, 2019) using natural lan-

guage (written or spoken) aiming to simulate human

conversation (Denecke and Warren, 2020). De Gen-

naro et al. (2020, p. 3) note that ‘chatbots with

more humanlike appearance make conversations feel

more natural, facilitate building rapport and social

connection, as well as increase perceptions of trust-

worthiness, familiarity, and intelligence, besides be-

ing rated more positively’. To decrease complexity,

most chatbots opt to restrict user input to selectable

predefined items (Denecke and Warren, 2020). There

are already chatbots in the market that provide ther-

apeutics or counselling, disease or medication man-

agement, screening or medical history collection or

even symptoms collection for triage purposes (De-

necke and Warren, 2020). These AI applications can

instantly reach large numbers of users (de Gennaro et al., 2020), reduce the need for medical staff, as well

as assist patients regardless of time and space (Chung

and Park, 2019).

2.2 UX’s Role in AI Adoption

UX plays a pivotal role in the acceptance process

(Lew and Schumacher, 2020). UX is a compound

of emotions and perceptions of instrumental and non-

instrumental qualities that arise from users’ interac-

tion with a technical device. To achieve a successful

UX, AI products, like any other product, need to meet

essential elements of utility, usability and aesthetics

(Lew and Schumacher, 2020). Wide-spread consumer

adoption requires good usability (Campbell, 2020),

which is currently lacking and thus undermining ef-

forts to deliver integrated patient-centred care.

UX is composed of many constructs, which are

complex and sometimes impossible to measure holis-

tically (Law et al., 2014). For example, trust, which

can be defined as ‘the attitude that an agent will help

achieve an individual’s goals in a situation charac-

terized by uncertainty and vulnerability’ (Lee and

See, 2004, p. 54), requires a balanced calibration to

be struck, especially regarding health-related issues.

Overtrust results in users’ trust exceeding system capabilities, while distrust leads users to not fully take

advantage of the system’s capabilities. Two funda-

mental elements define the basis for trust: the focus

of what is to be trusted and the information regarding

what is to be trusted.

Low adoption of AI-systems is linked to low

trust rates (Sharan and Romano, 2020), because trust

strongly influences perceived success (Lew and Schumacher, 2020). One of the main reasons behind AI’s failure to build user trust is that its output is often

off-point, thus lacking in accuracy. Trust is primarily

linked to UX pragmatic qualities, in particular usabil-

ity and utility. Aesthetics influences the perception

of both usability and utility through a phenomenon

called the aesthetic-usability effect. This refers to

users’ tendency to perceive the better-looking product

as more usable, even when they have the exact same

functions and controls (i.e., same utility and usability)

(Norman, 2005).


2.3 UX Challenges

Davenport et al. (2020) distinguish four main challenges for AI adoption: (1) users hold AI to a higher standard and are thus less tolerant of error; (2) users tend to be less willing to use AI applications for tasks involving subjectivity, intuition or affect, because they believe having those characteristics is necessary to successfully complete the task; (3) users are more reluctant to use AI for consequential tasks, such as driving a car as opposed to choosing a movie, because it involves a higher risk; and (4) user characteristics (e.g., gender) also influence perception, evaluation and adoption of AI. When AI is perceived as a risk, women tend to adopt it less since they are more risk-averse than men (Gustafson, 1998). Individuals’ attitude towards technologies is another key factor (Edison and Geissler, 2003). Furthermore, having little technical or digital literacy ultimately influences users’ perception of AI.

According to Araujo et al. (2020), level of ed-

ucation and programming knowledge influence per-

ception of AI: people with a lower level of education

show a stronger negative attitude towards algorithmic

recommendations and participants with higher lev-

els of programming knowledge show a higher per-

ceived fairness level. In general, people with domain-

specific knowledge, belief in equality and online self-

efficacy tend to have more positive attitudes about the

usefulness, the fairness and risk of decisions made

by AI and evaluate those decisions on par with, and sometimes better than, those of human experts. However, when

users strongly identify with the domain activity, they

are less inclined to adopt AI for that activity (Daven-

port et al., 2020).

Another concern is uniqueness neglect. In a study carried out by Longoni et al. (2019), users had reservations about AI because they generally believed that AI only operates in a standardized manner and is calibrated for the ‘average’ person. Consequently, AI-systems are perceived as less able to identify and account for users’ unique characteristics, circumstances and symptoms, leading them to be neglected. Yet, because the same disease can show very different symptoms depending on a patient’s health condition or lifestyle (Chung and Park, 2019), it is human doctors who too often misdiagnose patients. AI is able to simultaneously take into account a patient’s medical records and family history, and even their genome, warn about disease risk and design unique treatment pathways tailored for them (Panesar, 2019). AI-systems could help prevent misdiagnoses by presenting doctors with alternative diagnoses and information, thus supporting and enhancing them.

3 HYPOTHESES

H1. Men and women evaluate products differently. When AI is perceived as a risk, women tend to adopt it less since they are more risk-averse than men (Gustafson, 1998). Since then, gender dynamics have evolved. Therefore, women today may no longer tend to evaluate AI – a technology perceived to involve more risk – significantly differently than their male counterparts.

H2. Products’ hedonic (Attractiveness, Novelty) and pragmatic qualities (Content Quality, Trustworthiness of Content, Efficiency, Perspicuity, Dependability, Usefulness) are evaluated more negatively when the product is explicitly labelled AI. Merely labelling a product as AI may trigger in laypeople a variety of heuristics based on stereotypes about the operation of the application (Sundar, 2020). The outcome and the extent of these triggers on subjective evaluation are yet to be determined.

H3. User characteristics (attitude towards technology, English proficiency, domain-specific knowledge) interact with the treatment and significantly change users’ subjective evaluation of the product. User characteristics are an overarching factor of subjective evaluation. However, we do not know if users’ evaluation differs significantly depending on the nature of the product. For example, users’ attitude towards technology underlies the overall reception of and resistance towards technologies (Edison and Geissler, 2003); language proficiency plays a pivotal role as it can hinder users’ ability to understand the system; users’ domain-specific knowledge (e.g., programming knowledge or products’ domain of activity) influences the evaluation of related AI products (Araujo et al., 2020).

4 METHOD

4.1 Why Babylon Health?

We chose Babylon Health, a free-to-use web app that provides various healthcare services. We focused on their Chatbot Symptom Checker. According to Babylon Health’s website, their chatbot is powered by an AI that understands the symptoms users input and provides them with relevant health and triage information. Chung and Park (2019) confirm that users receive responses depending on their input, which are based on data contained in a large disease database. After the symptom check, users receive a diagnosis and a suggested path of action (e.g., appointment with GP). Since the scientific literature suggests trust in AI-systems often correlates positively with higher degrees of anthropomorphism (Sharan and Romano, 2020), we deliberately chose an AI-system that has no human-like element (behavioural or visual), to avoid biasing the results with features which are more prone to positive reception.

HUCAPP 2022 - 6th International Conference on Human Computer Interaction Theory and Applications

4.2 Participants

Typically, the last population segment to adopt inno-

vations are older people and people with lesser ed-

ucational attainment and lower socioeconomic status

(Dorsey and Topol, 2020). Therefore, to avoid biasing

the results by obtaining a sample with too many vari-

ables, we recruited exclusively young, well-educated

participants. We did not accept participants who had

previously engaged with either Babylon or very simi-

lar applications, such as Ada Health, because past ex-

periences with AI-systems may significantly alter the

trust formation process (Hoff and Bashir, 2015).

4.3 Data to Collect

Regarding gender (H1), participants self-identified as either (1) male, (2) female or (3) non-binary.

Regarding pragmatic and hedonic constructs (H2), we adopted a mixed approach (Law et al., 2014). As a quantitative method, we used the UEQ+ (Schrepp and Thomaschewski, 2019), a questionnaire intended for evaluating UX through the lens of up to 26 UX constructs. Since Babylon qualifies both as a word processing device and an information website, we selected the UX constructs of dependability, efficiency, perspicuity, content quality and trustworthiness of content. Since perceived usefulness is paramount to adoption of AI (Araujo et al., 2020), we included a usefulness scale. Furthermore, to measure at least two hedonic qualities, we added an attractiveness scale, a well-rounded staple of UX, and, because the product is based on AI, a novelty scale. Since perspicuity and dependability are jargon words, we provided a definition. We also asked participants to rank the eight UX constructs to establish a hierarchical scale. As a qualitative method, we used an interview to ask participants to explain their ranking in their native language (French).

Regarding user characteristics (H3), we administered the questionnaire assessing attitude towards technology found in Edison and Geissler (2003). The questionnaire measures technophobia with a ten-statement agreement scale. To facilitate user input, we continued using a 7-point Likert scale instead of the proposed 5-point one. We calculated ‘measured technophobia’ as follows: mean scores between 1 and 2.49 are considered Highly Technophobic, from 2.5 to 3.99 Moderately Technophobic, from 4 to 5.49 Mildly Technophobic and from 5.5 to 7 Not Technophobic. To determine if participants were laypeople, we asked them if they either study, work or are interested in either health or IT. This corresponds to their domain-specific knowledge. We evaluated participants’ English proficiency by assessing the nature and frequency of their vocabulary questions. We also took into account participants’ self-reported proficiency.
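The ‘measured technophobia’ bands described above can be illustrated with a minimal Python sketch. The function name `classify_technophobia` is hypothetical, and two points are assumptions rather than details given in the text: the bands are treated as half-open intervals (for example, a mean of exactly 2.5 falls in the Moderately Technophobic band), and the ten answers are assumed to be already oriented so that lower values indicate more technophobia.

```python
from statistics import mean

# Upper bounds of the first three bands, taken from the cut-offs quoted in the
# text. Treating each band as a half-open interval is an assumption.
BANDS = [
    (2.5, "Highly Technophobic"),
    (4.0, "Moderately Technophobic"),
    (5.5, "Mildly Technophobic"),
]


def classify_technophobia(item_scores):
    """Return the technophobia band for ten answers on a 7-point scale."""
    if len(item_scores) != 10:
        raise ValueError("expected ten item scores")
    if any(score < 1 or score > 7 for score in item_scores):
        raise ValueError("scores must lie between 1 and 7")
    mean_score = mean(item_scores)
    for upper_bound, label in BANDS:
        if mean_score < upper_bound:
            return label
    # Means from 5.5 up to 7 fall in the last band.
    return "Not Technophobic"
```

With ten items on an integer scale, the mean is always a multiple of 0.1, so no value can fall in the gaps between the quoted bands (for instance between 2.49 and 2.5).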

4.4 Method of Collection

We conducted two rounds of experiments. Due to

COVID-19, experiment 1 (XP1) took place on Zoom,

a cloud-based video communication app that allows

virtual video and audio set up, screen-sharing and

recording. Participants were at home and used their

personal computer. Experiment 2 (XP2) took place

on university campus, and a computer was put at the

participants’ disposal. The computer recorded the

screen, webcam and sound. In both experiments, we

used LimeSurvey to conduct the survey and to give

the instructions.

We used a randomized, post-test-only experimen-

tal design to test our hypotheses since these designs

are well suited to detect differences and cause-effect

relationships. Since today most people are unwilling to take a 30-minute survey and participants’ attention and accuracy decline over time (Lew and Schumacher, 2020; Smyth, 2017), we decreased question

and task complexity as the experiment progressed.

We broke down the experiment into four parts: (1)

a user test (10 minutes), (2) a UX questionnaire and

ranking of UX constructs (12 minutes), (3) a short

interview (4 minutes) and (4) an attitude towards

technologies test followed by a sociodemographic

questionnaire (5 minutes).

To understand the influence of the term ‘Artifi-

cial Intelligence’ on users’ experience, we divided

our participants into two groups. Group 1 (No Treat-

ment Group, also known as control group) received

an introduction of the product that did not mention

AI. Group 2 (Treatment Group) received almost the same introduction, except that we mentioned AI 14 times: twice explicitly when we presented the experiment

orally and when we introduced the app in the written

instructions, and then 12 times implicitly by remind-

ing them of this element in the title of the survey and

the subtitles above each section of the survey. These

were the sole differences in treatment between the two

groups.
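The paper does not describe how participants were allocated to the two groups, so the following is only a sketch of one common way to implement random assignment in a post-test-only design. The function name `assign_groups`, the group labels and the balanced split are all assumptions made for illustration.

```python
import random


def assign_groups(participant_ids, seed=None):
    """Randomly split participants into a control and a treatment group."""
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    # With an odd number of participants, the extra one goes to the control group.
    half = (len(shuffled) + 1) // 2
    return {
        "no_treatment": shuffled[:half],  # introduction without the AI label
        "treatment": shuffled[half:],     # introduction mentioning AI
    }
```

Shuffling the full list and cutting it in two keeps group sizes balanced, which simple per-participant coin flips would not guarantee.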


4.5 Procedure

First, we briefly explained how the experiment would

unfold. Then, we asked participants to share their

screen (XP1) or gave them the survey directly on

the computer, with the recording activated and their

screen duplicated on a monitor (XP2). Participants

then read a brief description of Babylon Health. They

proceeded to read a situation in which they were

asked to use Babylon Health to find a diagnosis. We

instructed them to use the product according to the

symptoms they were given. We told them that if a

symptom or an act was not mentioned, then it had not

happened in that situation. Participants were allowed

to read the situation as many times as they wished,

while they were using the product and inputting their

symptoms. They were instructed to return to the sur-

vey once they had reached the result page and had

read the results to their liking.

Then, we asked them to share their impressions

and subjectively evaluate the product by completing

the UEQ+. Next, we asked participants to rank UX

constructs by order of importance. We followed up on their ranking answers with a semi-structured interview conducted to obtain a more precise account of their thoughts and impressions. We asked participants (1) to explain the reasoning behind their ranking, (2) to describe their experience, (3) whether the product met their expectations, (4) if something worked differently than they expected, and (5) after they stopped sharing their screen, to answer questions related to their attitude towards technology and to rate their level of technophobia.

We then asked participants to tell us if they noticed that Babylon relied on AI and, if so, to disclose the moment they knew the app was AI-enabled and to discuss the impact this new information had on

their overall impression of the product. They could

either have a more negative opinion, have a more pos-

itive opinion or not change their impression at all. We

only report the answers of the No Treatment group,

since the Treatment group knew it all along.

Finally, we asked them to disclose their knowledge of Babylon Health to see if participants had previous knowledge of the app and to see if participants in the Treatment Group had noticed Babylon was AI-enabled. We also asked them to input their sociodemographic information (age, gender, highest degree of education, current employment status, field of work/study and interests), as this information is closely related to other social constructs pertaining to users’ characteristics, and therefore influences their user experience.

5 RESULTS

5.1 Experiment 1

Experiment 1 took place remotely between February

24th and April 18th 2021 and involved 29 partici-

pants (10 males), aged from 21 to 34 (mean 24.1),

among whom 21 (72%) were enrolled bachelor’s and master’s students, while eight (28%) had already obtained a master’s degree, mostly in communication

studies (69%). Twelve out of 29 participants (41%)

barely had sufficient mastery of English to follow the

instructions and interact with the application. Further,

ten participants strongly deviated from the expected

path in the application, by inputting symptoms at the

beginning of the interaction, which were either very

broad or incorrect, and led to unrelated questions and

results from Babylon.

Participants receiving no treatment overwhelmingly (80%) reported that knowing the app is AI-enabled did not influence their subjective evaluation of the

product, while two participants (13%) reported hav-

ing a better impression of the product after receiv-

ing this information and one (7%) perceived it to be

worse. Regardless of treatment, participants evalu-

ated the product positively (mean ≥ 5.3 out of 7). Per-

spicuity and Content Quality got a particularly posi-

tive mean score (≥ 6), while Novelty got a mean score

of 4.85. Constructs scores varied from a minimum

of 2.25 points (Content Quality), to a maximum of

4.75 points (Novelty), which indicates the presence

of strong outliers.

H1. Data showed no statistically significant difference between male and female participants. We used a Student’s t-test when groups were normally distributed and a Mann-Whitney test when they were not. Further, the Multivariate Test showed no significant result for the Treatment x Gender interaction, F(8,18)=.585, p=.777, partial η²=.206. Given these results, we do not differentiate participants by gender.
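As a consistency check, the reported partial η² can be recovered from the reported test statistic. The sketch below treats the multivariate statistic (.585 with 8 and 18 degrees of freedom) as an F ratio and applies the standard conversion, partial η² = F·df_effect / (F·df_effect + df_error). The function name is hypothetical, and the paper does not state how its software computed the value.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Convert an F ratio and its degrees of freedom into partial eta squared."""
    explained = f_value * df_effect
    return explained / (explained + df_error)


# Values reported for the Treatment x Gender interaction in Experiment 1.
eta_sq = partial_eta_squared(0.585, 8, 18)
print(round(eta_sq, 3))  # 0.206
```

The result agrees with the reported value of .206, which supports reading the two-parameter statistic as an F ratio.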

H2. Data showed no statistically significant difference in the evaluation of the product based on treatment (Mann-Whitney U-test, Student’s t-test, Multivariate test). However, five constructs (Attractiveness, Perspicuity, Usefulness, Novelty, Trustworthiness of Content) had a small effect size (d ≤ 0.2) and three (Efficiency, Dependability, Content Quality) only had a medium effect size (d ≤ 0.5).

H3. A Multivariate Test indicated no significant interaction between treatment and participants’ characteristics (English language skills, Domain-specific Knowledge, Self-reported Technophobia, Measured Technophobia). Overall, we obtained a large effect size for all interactions (partial η² was always ≥ .14).


5.2 Experiment 2

Experiment 2 took place on campus between August 24th and 31st 2021 and involved 11 PhD students (10 females) from Université catholique de Louvain, aged from 25 to 35 (mean 28). Three participants had an interest in either Health or IT. Participants’ English

proficiency was always deemed sufficient and only

three encountered some difficulty. They all took ap-

propriate paths in the application. All participants in

No Treatment Group reported believing that knowing

the product is AI-enabled did not influence their sub-

jective evaluation of the product. Participants evalu-

ated the product positively, with seven out of the eight

UX constructs reaching a mean ≥ 5.27 (only Novelty

obtained a mean of 4.93).

H1 + H3. There were insufficient participants to properly categorise them by their characteristics (gender, English proficiency, domain-related knowledge, attitude towards technologies).

H2. We were, however, able to measure if participants evaluated the product differently according to treatment. A Student’s t-test showed that participants in the Treatment Group (M=5.65, SD=0.57) evaluated Content Quality more negatively than those in the No Treatment Group (M=6.33, SD=0.26), t(9)=2.629, p=.027, d=1.53. Other constructs had neither significant results nor large effect sizes.

5.3 Experiment 1 + 2

Participants from XP1 and XP2 ranked the level

of importance of UX constructs similarly (Table 1).

Trustworthiness of Content and Content Quality continue to be designated as the most important qualities for a health application, whereas Attractiveness

and Novelty are cited as the least important. Inter-

views showed that Content Quality is regarded as one

of the most important qualities more frequently than

written responses suggest. When asked to explain

their ranking, participants focused on aspects linked

to Trustworthiness and Content Quality. Trust was a

word that came up particularly often and was treated

at length. Aspects related to Content Quality and De-

pendability often intertwined with this ‘trust’ concept.

A Student’s t-test showed no statistically significant difference between XP1 and XP2. Furthermore, Levene’s test

indicated that the variances for all constructs in the

two groups were equal. Therefore, we were able to

combine the results from the two experiments to gain

more statistical power.

H1. We found no significant difference between genders. However, since women outnumbered men 3 to 1, this analysis bears no scientific value.

Figure 1: Population Pyramid Frequency with Bell Curve

of Content Quality by Treatment, positive scale (4 to 7).

H2. Data showed a significant difference between treatment groups in their evaluation of Babylon’s Content Quality (t(38)=2.111, p=.041, d=.664). Specifically, the No Treatment Group (N=21, M=6.238, SD=.527) scored Content Quality higher than the Treatment Group (N=19, M=5.842, SD=.657). This means participants in the No Treatment Group found the app to be more up-to-date, interesting, well prepared and comprehensible. No other construct obtained a significant result, and their effect sizes were also much smaller. Usefulness, Novelty and Trustworthiness of Content had a very small effect size (d ≤ 0.1), Attractiveness, Efficiency and Perspicuity had a small effect size (d ≤ 0.2), and Dependability had a medium effect size (d ≤ 0.5).
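The Content Quality comparison can be approximately reproduced from the summary statistics reported above. The sketch below assumes a pooled-variance Student’s t-test and Cohen’s d based on the pooled standard deviation. The function names are hypothetical, and small deviations from the published values are expected because the inputs are rounded.

```python
import math


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Standardised mean difference using the pooled standard deviation."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)


def student_t(m1, sd1, n1, m2, sd2, n2):
    """Equal-variance t statistic with n1 + n2 - 2 degrees of freedom."""
    return cohens_d(m1, sd1, n1, m2, sd2, n2) / math.sqrt(1 / n1 + 1 / n2)


# No Treatment Group vs Treatment Group, Content Quality (combined experiments).
no_treatment = (6.238, 0.527, 21)
treatment = (5.842, 0.657, 19)
d = cohens_d(*no_treatment, *treatment)
t = student_t(*no_treatment, *treatment)
```

Under these assumptions both values land within about 0.01 of the reported t(38)=2.111 and d=.664.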

H3. User characteristics (English proficiency, domain-specific knowledge, attitude towards technology) did not significantly interact with the treatment.

6 DISCUSSION

H1. Our study results indicate female and male participants assess their UX with Babylon similarly, which contradicts previous work on the importance of gender in subjective evaluation of AI, according to which women are more risk-averse than men (Gustafson, 1998). This may indicate that the gender-related association to risk might be outdated and needs to be revisited. Differences between genders might be eroding in millennials and Gen Z. Another explanation is that millennials

and Gen Z do not perceive AI as a risk. This is plau-

sible if we consider that participants are not entering

real symptoms to find a real diagnosis to a real illness.

Rather, they are entertaining a fictional scenario sim-

ply because we asked them to do so. Thus, they might

not perceive the use of Babylon as an increase in risk.

The outcome being imaginary and not translated into

actual consequences might simply cancel the risk per-

ception. If so, then participants may also not feel con-

cerned by uniqueness neglect (Longoni et al., 2019),

because they only perceive themselves as unique and

not others, even when those ‘others’ are their hypo-

thetical sick selves.


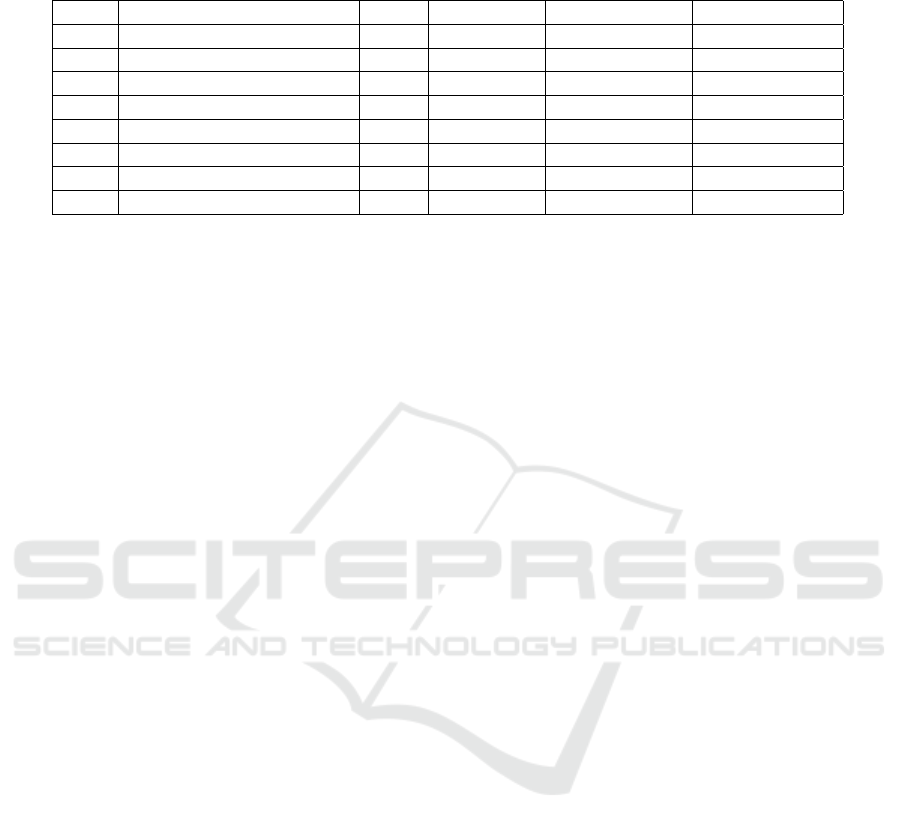

Table 1: Constructs ranked by importance. We calculated scores by weighting the position of each construct in the ranking with points (1st = 8 pts, 2nd = 7 pts, ...) and the Mean Rank from their average points.

| Rank | Construct | Score | Mean Rank | Most important | Least important |
|---|---|---|---|---|---|
| 1 | Trustworthiness of Content | 197 | 6.64 | 33 | 0 |
| 2 | Dependability | 167 | 5.70 | 9 | 0 |
| 3 | Usefulness | 159 | 5.25 | 8 | 0 |
| 4 | Perspicuity | 143 | 4.82 | 9 | 0 |
| 5 | Content Quality | 142 | 4.71 | 21 | 0 |
| 6 | Efficiency | 140 | 4.70 | 7 | 2 |
| 7 | Attractiveness | 75 | 2.35 | 0 | 21 |
| 8 | Novelty | 58 | 2.05 | 0 | 25 |
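The position-weighting scheme in the caption of Table 1 can be sketched as follows. The rankings used here are invented for illustration and are not the study data, the function name `ranking_scores` is hypothetical, and the sketch assumes every participant ranks all eight constructs, which the paper does not confirm.

```python
from collections import defaultdict


def ranking_scores(rankings):
    """Score constructs from rankings ordered from most to least important.

    The first of n constructs earns n points, the second n - 1, and so on.
    Returns (total points, mean points per participant) for each construct.
    """
    totals = defaultdict(int)
    for ranking in rankings:
        n = len(ranking)
        for position, construct in enumerate(ranking):
            totals[construct] += n - position
    means = {construct: total / len(rankings) for construct, total in totals.items()}
    return dict(totals), means


# Two hypothetical participants ranking the eight constructs.
example = [
    ["Trustworthiness of Content", "Dependability", "Usefulness", "Perspicuity",
     "Content Quality", "Efficiency", "Attractiveness", "Novelty"],
    ["Content Quality", "Trustworthiness of Content", "Dependability", "Usefulness",
     "Perspicuity", "Efficiency", "Attractiveness", "Novelty"],
]
totals, means = ranking_scores(example)
```

In this toy example Trustworthiness of Content collects 8 + 7 = 15 points (mean 7.5), while Novelty collects 1 + 1 = 2 points.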

H2. We require more participants to see whether labelling a product as ‘AI’ changes the UX with the product. However, the significant difference regarding Content Quality suggests that further research on this construct might be of interest. Further, our results are consistent with previous findings: the most important aspect in UX is trust, a concept without which participants said they would never adopt an app; users hold AI systems to a higher standard than other applications; and users evaluate AI systems that fail more harshly.

H3. The lack of interaction with the treatment might indicate that user characteristics influence users’ reception of products, regardless of the ‘AI’ label. The non-significant interaction between treatment and participants’ attitude towards technology may be due to

participants’ profile. Young and well-educated peo-

ple are not typically resistant to adopting new tech-

nologies, and might have equal reservations towards

both AI and non-AI-systems regarding health. As

for domain-specific knowledge, the mere interest in a

subject is not enough to significantly alter users’ sub-

jective evaluation of a product. Thus, assuming previ-

ous research findings apply to the younger generation,

participants need to either study or work in the related

domain to alter their evaluation of AI. Lastly, lan-

guage skills did not significantly interact with treat-

ment, suggesting other key factors are at the origin of

difference in results between treatments.

Limits and Future Research. Further research involving a larger number of participants with similar characteristics should be conducted to fully test our hypotheses, since the small effect size prevents us from drawing definitive conclusions. About 100 participants per treatment group should be recruited to detect a meaningful effect size at a 95% confidence level, assuming a normal distribution. To be representative and useful to practitioners, this experiment should also be replicated with less educated or older populations and extended to different apps. In addition, to achieve action fidelity (Kieffer, 2017), similar research should be conducted on users who are genuinely sick with the same disease at the time of the experiment, to avoid artificial conditions and decrease the error rate related to symptom input. The product would likely seem more intuitive, since users would not have to follow a scenario. Reservations regarding effects such as users’ uniqueness neglect concerns, if applicable, would then manifest themselves.
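To make the sample-size recommendation above more concrete, the following sketch applies the standard normal-approximation formula for a two-sided comparison of two group means. The paper states neither the target effect size nor the statistical power, so both the 80% power and the standardized effect sizes (Cohen's d) used here are assumptions chosen for illustration, and `participants_per_group` is a hypothetical helper name.

```python
import math
from statistics import NormalDist


def participants_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-sample comparison.

    Uses the normal approximation n = 2 * ((z_{1-alpha/2} + z_{power}) / d)^2,
    where d is the standardized difference between group means (Cohen's d).
    alpha=0.05 corresponds to a 95% confidence level; power=0.80 is an
    assumption, not a value reported in the paper.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Assumed effect sizes, for illustration only.
for d in (0.3, 0.4, 0.5):
    print(f"d = {d}: {participants_per_group(d)} participants per group")
```

Under these assumptions, an effect size of d = 0.4 requires 99 participants per group, which is in line with the figure of about 100 given above. Smaller effects would require substantially more.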

7 CONCLUSION

Our results contribute to research in two ways. First, Gustafson’s theory on gender biases pertaining to risk assessment might need to be revisited. Differences between genders are eroding and becoming less relevant and appropriate to use when dealing with younger generations. They may also be much more context-dependent than previously thought: a hot topic such as health during a pandemic may soften or override gender-based variation in perception to such an extent that it might even become irrelevant. Second, though XP1 was conducted remotely, we were still able to obtain actionable data: XP1 and XP2 showed very similar results, suggesting that conducting UX experiments out of the lab is possible without irreparably damaging the results. Remote experiments may, however, require more participants to reach the same conclusions. This finding is encouraging, as we are likely to enter an era of pandemics that will force us to change how we conduct experiments while continuing to produce valid scientific work.

Healthcare systems require substantial help from AI to properly meet current and future challenges, such as an increase in age-related illnesses due to ageing populations. Detecting the differences in behaviour, perception and treatment remains an important subject to explore, since this information enables UX practitioners to design counter-strategies tailored to medical AI apps’ unique needs.

UX research would benefit from clear, flexible and extensive frameworks that can be used in a plethora of contexts to evaluate users’ perceptions. Moreover, these need to be widely employed, because gaining insights from cross-study comparisons, and thus having large bodies of comparable work, is essential to properly interpret the components that influence users’ subjective evaluation.

HUCAPP 2022 - 6th International Conference on Human Computer Interaction Theory and Applications

REFERENCES

Araujo, T., Helberger, N., Kruikemeier, S., and de Vreese, C. H. (2020). In AI we trust? Perceptions about automated decision-making by artificial intelligence. AI & SOCIETY, 35(3):611–623.

Buch, V. H., Ahmed, I., and Maruthappu, M. (2018). Artificial intelligence in medicine: current trends and future possibilities. British Journal of General Practice, 68(668):143–144.

Cameron, G., Cameron, D. W., Megaw, G., Bond, R. R., Mulvenna, M., O’Neill, S. B., Armour, C., and McTear, M. (2018). Best practices for designing chatbots in mental healthcare – a case study on iHelpr. In Proceedings of the 32nd International BCS Human Computer Interaction Conference.

Campbell, J. L. (2020). Healthcare experience design: A conceptual and methodological framework for understanding the effects of usability on the access, delivery, and receipt of healthcare. Knowledge Management & E-Learning, 12(4):505–520.

Chowriappa, P., Dua, S., and Todorov, Y. (2014). Introduction to machine learning in healthcare informatics. In Dua, S., Acharya, U. R., and Dua, P., editors, Machine Learning in Healthcare Informatics, pages 1–23. Springer Berlin Heidelberg.

Chung, K. and Park, R. C. (2019). Chatbot-based heathcare service with a knowledge base for cloud computing. Cluster Computing, 22:1925–1937.

Davenport, T., Guha, A., Grewal, D., and Bressgott, T. (2020). How artificial intelligence will change the future of marketing. Journal of the Academy of Marketing Science, 48(1):24–42.

de Gennaro, M., Krumhuber, E. G., and Lucas, G. (2020). Effectiveness of an empathic chatbot in combating adverse effects of social exclusion on mood. Frontiers in Psychology, 10:3061.

Denecke, K. and Warren, J. (2020). How to evaluate health applications with conversational user interface? Studies in Health Technology and Informatics, 270:970–980.

Distler, V., Lallemand, C., and Bellet, T. (2018). Acceptability and acceptance of autonomous mobility on demand: The impact of an immersive experience. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, CHI ’18, pages 1–10, New York, NY, USA. Association for Computing Machinery.

Dorsey, E. R. and Topol, E. J. (2020). Telemedicine 2020 and the next decade. The Lancet, 395(10227):859.

Dua, S., Acharya, U. R., and Dua, P., editors (2014). Machine Learning in Healthcare Informatics, volume 56 of Intelligent Systems Reference Library. Springer Berlin Heidelberg.

Edison, S. and Geissler, G. (2003). Measuring attitudes towards general technology: Antecedents, hypotheses and scale development. Journal of Targeting, Measurement and Analysis for Marketing, 12:137–156.

Gruson, D., Helleputte, T., Rousseau, P., and Gruson, D. (2019). Data science, artificial intelligence, and machine learning: Opportunities for laboratory medicine and the value of positive regulation. Clinical Biochemistry, 69:1–7.

Gustafson, P. E. (1998). Gender differences in risk perception: Theoretical and methodological perspectives. Risk Analysis, 18(6):805–811.

Hoff, K. A. and Bashir, M. (2015). Trust in automation: Integrating empirical evidence on factors that influence trust. Human Factors, 57(3):407–434. PMID: 25875432.

Kieffer, S. (2017). Ecoval: Ecological validity of cues and representative design in user experience evaluations. AIS Transactions on Human-Computer Interaction, 9(2):149–172.

Law, E. L.-C., van Schaik, P., and Roto, V. (2014). Attitudes towards user experience (UX) measurement. International Journal of Human-Computer Studies, 72(6):526–541.

Lee, J. D. and See, K. A. (2004). Trust in automation: Designing for appropriate reliance. Human Factors, page 31.

Lew, G. and Schumacher, R. M. (2020). AI and UX: Why Artificial Intelligence Needs User Experience. Apress.

Longoni, C., Bonezzi, A., and Morewedge, C. K. (2019). Resistance to medical artificial intelligence. Journal of Consumer Research, 46(4):629–650.

Norman, D. A. (2005). Emotional Design: Why We Love (or Hate) Everyday Things. Basic Books, 3rd edition.

Panesar, A. (2019). Machine Learning and AI for Healthcare: Big Data for Improved Health Outcomes. Apress, 1st edition.

Schrepp, M. and Thomaschewski, J. (2019). Design and validation of a framework for the creation of user experience questionnaires. International Journal of Interactive Multimedia and Artificial Intelligence, 5(7):88.

Sharan, N. N. and Romano, D. M. (2020). The effects of personality and locus of control on trust in humans versus artificial intelligence. Heliyon, 6(8):e04572.

Smyth, J. D. (2017). The SAGE Handbook of Survey Methodology, pages 218–235. SAGE Publications Ltd.

Sundar, S. S. (2020). Rise of Machine Agency: A Framework for Studying the Psychology of Human–AI Interaction (HAII). Journal of Computer-Mediated Communication, 25(1):74–88.

Zeitoun, J.-D. and Ravaud, P. (2019). L’intelligence artificielle et le métier de médecin. Les Tribunes de la santé, 60(2):31–35.
