Data-Driven Fingerprint Reconstruction from Minutiae Based on Real

and Synthetic Training Data

Andrey Makrushin, Venkata Srinath Mannam and Jana Dittmann

Department of Computer Science, Otto von Guericke University, Universitaetsplatz 2, 39106 Magdeburg, Germany

Keywords:

Fingerprint Reconstruction, Minutiae Map, GAN, Pix2pix.

Abstract:

Fingerprint reconstruction from minutiae performed by model-based approaches often lead to fingerprint pat-

terns that lack realism. In contrast, data-driven reconstruction leads to realistic fingerprints, but the reproduc-

tion of a fingerprint’s identity remain a challenging problem. In this paper, we examine the pix2pix network

to fit for the reconstruction of realistic high-quality fingerprint images from minutiae maps. For encoding

minutiae in minutiae maps we propose directed line and pointing minutiae approaches. We extend the pix2pix

architecture to process complete plain fingerprints at their native resolution. Although our focus is on biomet-

ric fingerprints, the same concept fits for synthesis of latent fingerprints. We train models based on real and

synthetic datasets and compare their performances regarding realistic appearance of generated fingerprints and

reconstruction success. Our experiments establish pix2pix to be a valid and scalable solution. Reconstruction

from minutiae enables identity-aware generation of synthetic fingerprints which in turn enables compilation

of large-scale privacy-friendly synthetic fingerprint datasets including mated impressions.

1 INTRODUCTION

Fingerprint is a widely accepted and broadly used

means of biometric user authentication. Applications

making use of fingerprint authentication range from

unlocking mobile phones to access control to finan-

cial and governmental services. Hence, further devel-

opment and continuous improvement of fingerprint

matching systems cannot be overrated. The validity

of fingerprint processing and matching algorithms is

assessed empirically in experiments with large-scale

fingerprint datasets. Taking into account the current

trend of using machine learning and in particular deep

convolutional neural networks, an abundant amount

of training and validation samples is an indispensable

part of the development process.

Recent cross-border regulations on protection

of private data are a hurdle that make usage of real

biometric datasets difficult. For instance, the article

9 of the EU General Data Protection Regulation

(GDPR) prohibits processing of biometric data for

the purpose of uniquely identifying a natural person,

however with some exceptions. In general, biometric

data are seen as a special case of private data imply-

ing that collection, processing and sharing of such

data are under strong regulation. Many biometric

datasets have been recently removed from the public

access due to possible conflicts with regulations. The

prominent examples are the NIST fingerprint datasets

SD4, SD14 and SD27. The documentation of the

new NIST fingerprint dataset SD300 confirms that

all subjects whose biometrics appear in the dataset

are deceased (https://www.nist.gov/itl/iad/image-

group/nist-special-database-300). A straightforward

way to overcome the restrictions is introduction of

virtual individuals and synthesis of biometric samples

which belong to them. The synthetic fingerprints

should possess the same characteristics as real ones,

but it should be impossible to link them to any natural

person.

Fingerprint synthesis is a special case of realis-

tic image synthesis that has recently been solved by

generative adversarial networks (GAN). Generation

of random realistic fingerprints which inherit visual

characteristics of fingerprints in a training dataset is

not challenging looking at the current state of tech-

nologies. For instance, the established NVIDIA GAN

architectures such as StyleGAN (Karras et al. 2018)

can easily be trained to solve this task (Seidlitz et al.

2021 and Bahmani et al. 2021). The challenging

part is synthesis of mated impressions which requires

identity-aware conditional generation and a mecha-

nism for simulating intra-class variations.

Based on the fact that the majority of algorithms

rely on minutiae for fingerprint matching, it can be

stated that the fingerprint identity is de facto given

Makrushin, A., Mannam, V. and Dittmann, J.

Data-Driven Fingerprint Reconstruction from Minutiae Based on Real and Synthetic Training Data.

DOI: 10.5220/0011660800003417

In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 4: VISAPP, pages

229-237

ISBN: 978-989-758-634-7; ISSN: 2184-4321

Copyright

c

2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

229

by minutiae co-allocation. Minutiae are the local

characteristics of fingerprint ridges e.g. bifurcations

where a line splits up into two or terminations where

a line ends. A list of extracted minutiae is referred

to as minutiae template. There are several standards

describing the structure of a minutiae template e.g.

ISO/IEC 19794-2:2011 or ANSI INCITS 381-2004.

Hence, the straightforward way to control the

identity of synthetic fingerprints is reconstruction

from a minutiae template. On the one hand, recon-

struction from minutiae is clearly an ill-posed prob-

lem, on the other hand, minutiae locations reveal in-

formation about the ridge flow so that a reconstructed

fingerprint has both minutiae at correct locations and

a proper basic pattern. Note that reconstruction from

pseudo-random minutiae helps to fulfill requirements

on anonymity and diversity of synthetic fingerprints

(Makrushin et al. 2021) and also enables synthesis of

mated impressions.

As stated in (Mistry et al. 2020), fingerprints re-

constructed from minutiae based on mathematical

modeling lack realism. Recently, realistic finger-

prints have been reconstructed by applying condi-

tional GAN (Makrushin et al. 2022, Bouzaglo and

Keller 2022 and Wijewardena et al. 2022). Although

the identity control is a challenging part of such a

data-driven approach, it has been demonstrated that

the vast majority of reconstructed fingerprints match

the reference fingerprints.

Here, we further investigate the application of

the pix2pix network (Isola et al. 2017) to fingerprint

reconstruction from minutiae focusing on 512x512

pixel images with an optical resolution of 500 ppi.

Both, the generator and discriminator of the original

network are extended by one convolutional layer to

handle the aforementioned image size. Motivated by

(Kim et al. 2019, Makrushin et al. 2022 and Bouza-

glo and Keller 2022) we explore several minutiae en-

coding schemes for the optimal reconstruction. Last

but not least, we train the reconstruction (generative)

models not only from real but also from realistic syn-

thetic fingerprints to figure out the suitability of our

previously generated synthetic dataset for this task.

Since visual characteristics of GAN-synthesized fin-

gerprints are inherited from training samples, such a

synthesis approach is applicable not only for biomet-

ric (plain) or forensic (latent) fingerprints but also for

style transfer: plain to latent or latent to plain.

Our contribution can be summarized as follows:

• Modification of the pix2pix architecture to pro-

cess 512x512 pixel images

• Introduction of a dataset of 50k synthetic finger-

prints generated by our StyleGAN2-ada model

trained from the Neurotechnology CrossMatch

fingerprint dataset

• Training of pix2pix models from real and syn-

thetic datasets with two different types of minu-

tiae encoding: directed line and pointing minutiae

• Comparing synthetic and real datasets for the pur-

pose of training pix2pix models

• Comparing directed line and pointing minutiae

encoding approaches

Hereafter, the paper is organized as follows: Section

2 outlines the related work. Section 3 introduces our

concept of applying pix2pix to fingerprint reconstruc-

tion. Training of our generative models is described in

Section 4. Our experiments are in Section 5. Section

6 concludes the paper with the summary of results.

2 RELATED WORK

2.1 Model-Based Reconstruction

The de facto state-of-the-art approach to model-

based fingerprint synthesis is implemented in the

commercial tool SFinGe (Cappelli 2009). In or-

der to create realistic patterns the physical charac-

teristics of fingers, the contact between finger and

the sensor surface as well as sensor characteristics

are simulated. An open source implementation of a

model-based fingerprint generator similar to SFinGe

is called Anguli (Ansari 2011) and is available at

https://dsl.cds.iisc.ac.in/projects/Anguli/.

The most prominent study on inversion of finger-

print templates is (Cappelli et al. 2007). Based on the

zero-pole model (Sherlock and Monro 1993), the lo-

cations of singular points are estimated from the given

minutiae followed by estimation of orientation and

ridge frequency maps. Alternatively, ridge patterns

can be reconstructed using the minutiae triplet model

(Ross et al. 2007) or the AM-FM model (Feng and

Jain 2009 and Li and Kot 2012) which makes use of

eight neighbouring minutiae. An approach proposed

in (Cao and Jain 2015) reconstructs ridge patterns

based on patch dictionaries which allows for gener-

ating idealistic ridge patterns clearly lacking realism.

2.2 Data-Driven Reconstruction

Recently, fingerprint synthesis using GAN or a com-

bination of GAN and autoencoder has become a trend.

An identity-aware synthesis requires, however, a con-

ditional GAN in which the network is guided to gen-

erate specific data by conditioning over some mean-

ingful information rather than feeding a random latent

vector as proposed in the original GAN.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications

230

As originally shown in (Kim et al. 2019) the task

of fingerprint reconstruction from minutiae can be

replaced by the image-to-image translation so that

minutiae points are drawn on an image called minu-

tiae map and then the minutiae map is translated to a

fingerprint image. The original pix2pix network has

been applied as one delivering state-of-the-art results

in image-to-image translation. The experiments are

conducted with an in-house fingerprint database. The

generalization ability of pix2pix in application to fin-

gerprint reconstruction from minutiae is challenged in

(Makrushin et al. 2022) by conducting cross-sensor

and cross-dataset experiments. The major limitation

of the original pix2pix is that it is tuned to process

images of 256x256 pixels or lower. The pix2pixHD

extension (Wang et al. 2018) is a cumbersome solu-

tion to process larger images.

In (Bouzaglo and Keller 2022) a convolutional

minutiae-to-vector encoder is used in combination

with StyleGAN2 (Karras et al. 2019) for identity-

preserving, attributes-aware fingerprint reconstruc-

tion from minutiae. The study in (Wijewardena et al.

2022) extends fingerprint reconstruction from minu-

tiae to reconstruction from deep network embeddings.

The inversion attack performances of both reconstruc-

tion schemes are evaluated and compared qualita-

tively and quantitatively.

To the best of our knowledge, in none of studies on

data-driven fingerprint reconstruction the generative

models are trained based on synthetic samples.

3 OUR CONCEPT

Let I be a fingerprint image and L : L

i

= (x

i

, y

i

, t

i

, θ

i

)

be a set of minutiae where (x

i

, y

i

) is a location of the

i-th minutiae, t

i

is a type (either bifurcation or ending)

and θ

i

is a direction. Our task is to train a conditional

GAN that is capable of generating a fingerprint im-

age I

∗

from L. The resulting synthetic fingerprint I

∗

should appear realistic and be biometrically as similar

as possible to the original fingerprint I.

3.1 Minutiae Encoding

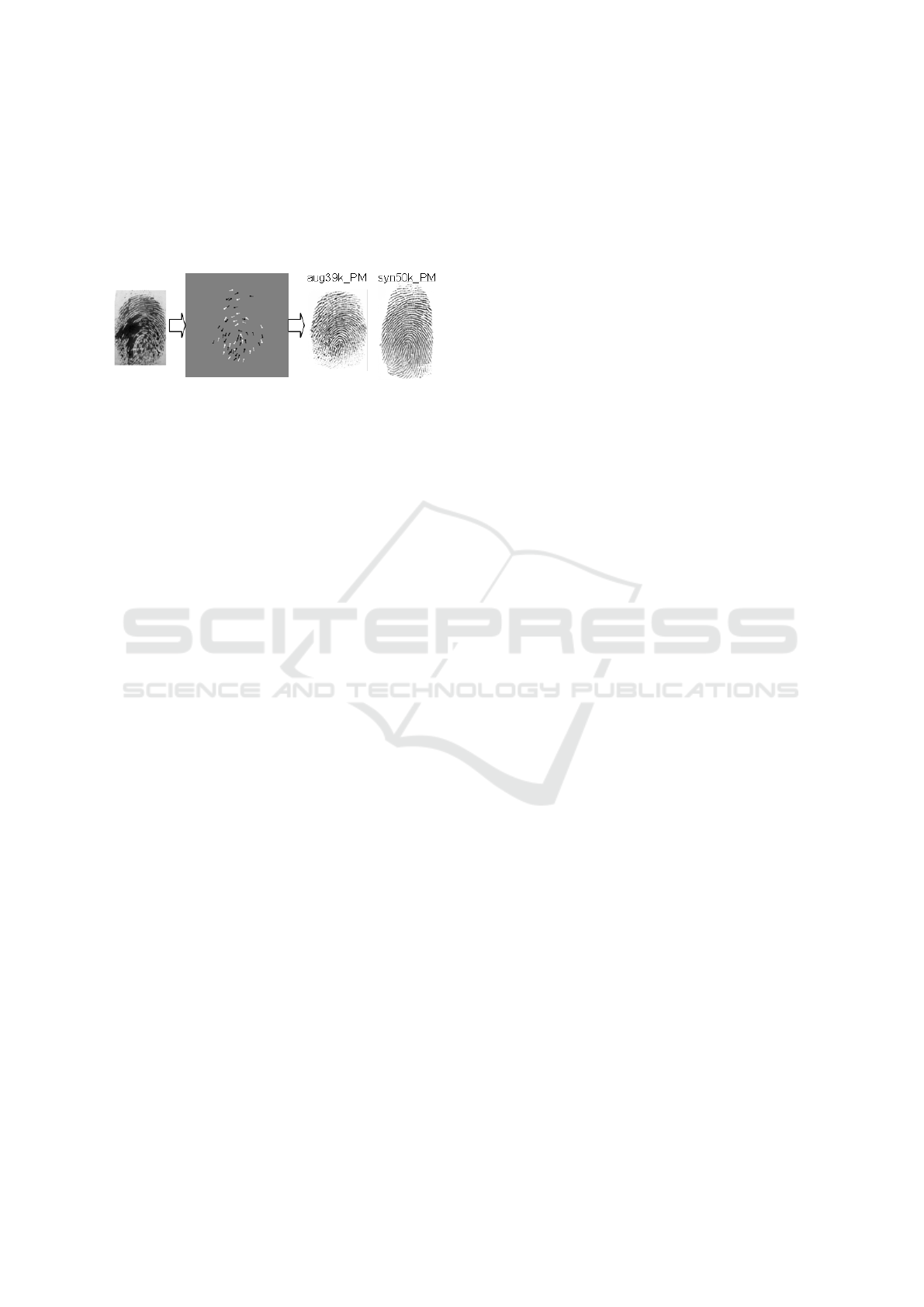

Construction of a minutiae map is visualized in Fig-

ure 1. It starts with minutiae extraction which can be

done with an arbitrary tool. We use Neurotechnology

VeriFinger SDK v12.0 (https://www.neurotechnology

.com/verifinger.html). Next, the resulting list of minu-

tiae is encoded into a minutiae map. We address three

encoding schemes: encoding by gray squares, by di-

rected lines and by pointing minutiae.

Gray Squares. Encoding minutiae by gray squares

is originally proposed in (Kim et al. 2019). For each

minutiae L

i

from the minutiae list L a gray square

of a fixed size is drawn with a center at (x

i

, y

i

). The

shade of gray encodes the minutiae angle θ

i

. In order

to differentiate between endings and bifurcations, we

use colors from 0 to 127 to quantize the directions of

endings and colors from 129 to 255 to quantize the

directions of bifurcations. The background color of a

minutiae map is set to 128. For 500 ppi fingerprints

depicted on 512x512 pixel images, the square size is

set to 13x13.

Directed Lines. As proposed in (Makrushin et al.

2022) each minutiae L

i

from the minutiae list L is

encoded by a directed line which starts at (x

i

, y

i

) and

is drawn to the direction given by the angle θ

i

. Bifur-

cations are encoded by white lines (color=255) and

endings by black lines (color=0). The background

color is set to 128. It is stated that such a color

selection emphasizes the dualism of bifurcations and

endings and the directed line encoding is superior to

a gray square encoding. It is also stated that shades

of gray used as direction encoding may dilute during

convolutions. For 500 ppi fingerprints, we set the line

length to 15 pixels and the line width to 4 pixels.

Pointing Minutiae. The idea of using pointing minu-

tiae is derived from (Bouzaglo and Keller 2022).

We define a pointing minutiae as a combination of

a square centered at (x

i

, y

i

) and a line pointing in

the minutiae direction θ

i

. Similar to directed line

encoding, bifurcations are encoded by white lines

(color=255) and endings by black lines (color=0).

The background color is set to 128. Directed line and

pointing minutiae encoding schemes perfectly reflect

the complimentary nature of endings and bifurcations

and therefore are robust to color inversion. For 500

ppi fingerprints the line length is set to 15 pixels, the

line width to 4 pixels and the square size to 7x7 pixels.

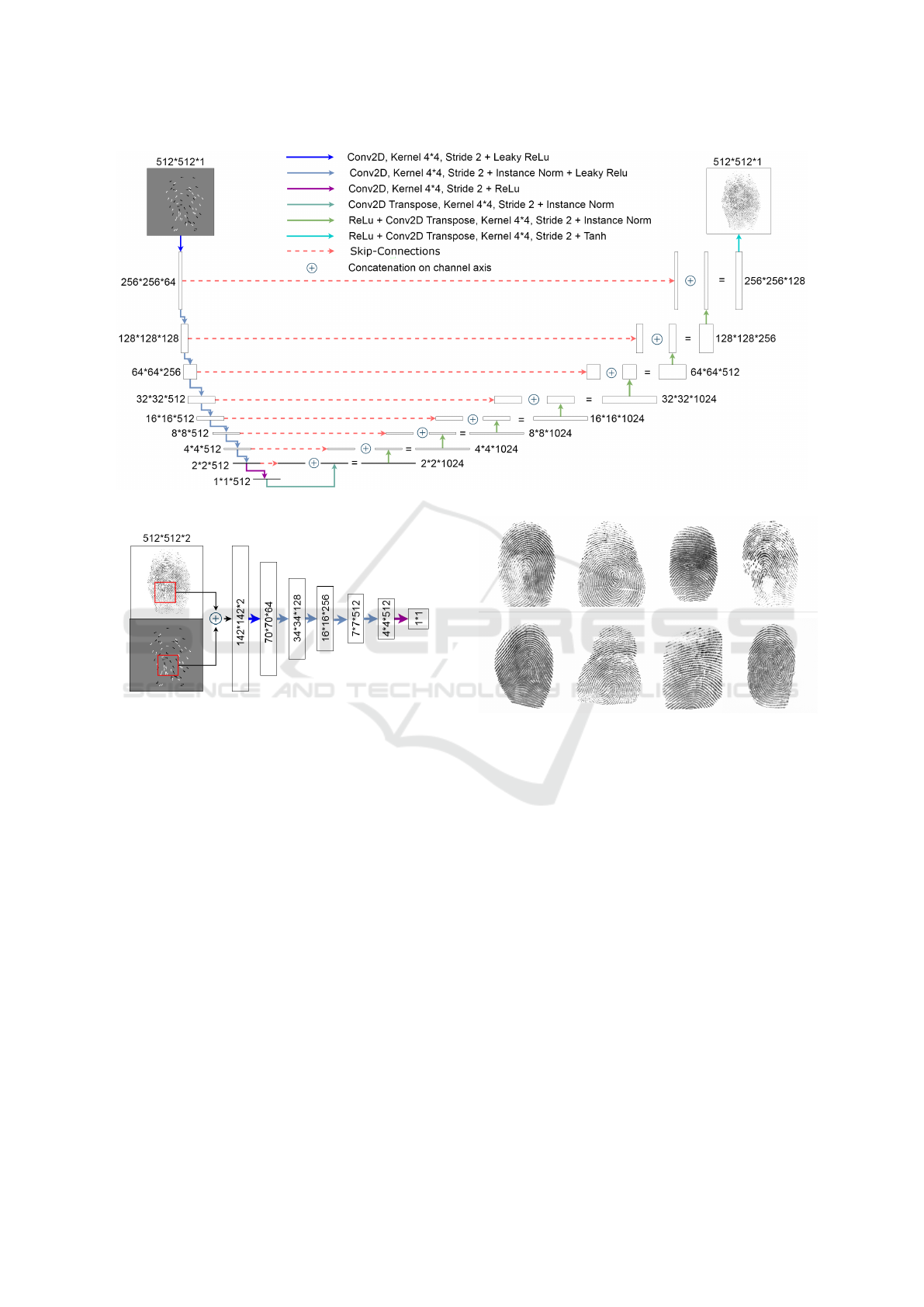

3.2 Pix2pix Architecture

The pix2pix network (Isola et al. 2017) applied in our

experiments is a conditional GAN consisting of gen-

erator and discriminator networks which are trained in

an adversarial manner. The generator produces realis-

tic images while the discriminator tells synthetic and

real images apart. In our setup, the generator trans-

lates a minutiae map into a fingerprint image and the

discriminator makes a decision for a tensor made of a

fingerprint image and a minutiae map which is taken

as a condition. After training is finished, we make no

use of the discriminator and the generator is used for

fingerprint reconstruction.

Data-Driven Fingerprint Reconstruction from Minutiae Based on Real and Synthetic Training Data

231

Figure 1: Minutiae map construction: minutiae extraction

followed by minutiae encoding (gray squares, directed lines

and pointing minutiae).

The original pix2pix architecture is designed for

256x256 pixel images which would require down-

scaling of a fingerprint image to make a complete fin-

gerprint fit into it. In order to support a fingerprint-

native resolution of 500 ppi and enable training with

images of 512x512 pixels, we extend both generator

and discriminator by one convolutional layer.

Generator. The generator architecture is based on

the U-Net originally proposed in (Ronneberger et al.

2015). In contrast to other approaches based on

encoder-decoder architecture used for solving the

image-to-image translation problem, the U-Net pass

information via skip connections to subsequent paral-

lel layers as shown in the Figure 2. Indeed, the stan-

dard encoder-decoder networks first gradually down-

sample a given input at each layer into a compressed

representation called bottleneck and then gradually

up-sample from the bottleneck at each layer to the

original size. Hence, such networks fully rely on the

bottleneck layer implying that it preserves all the in-

formation about the input. If it is not the case, the

reconstructed image might miss important details.

Discriminator.The convolutional patch-based dis-

criminator utilized in pix2pix classifies the given in-

put as synthetic or real at a patch level. It means that

the the network simultaneously makes a decision for

each image patch and the final decision is a majority

voting over all patches. The discriminator is a series

of convolution layers with an input of shape LxL and

the output of shape RxR. Each neuron at RxR clas-

sifies a single portion in LxL. The value of L at the

network input layer is set to 512 and the value of R at

the network output layer is 30 as in the original Patch-

GAN. A discriminator with a focus on single patch

classification is shown in Figure 3. Even though the

code is not explicitly written in a way to work at patch

level it happens implicitly due to the nature of a con-

volution operation. In contrast to the original work

where the receptive field (patch) size is 70x70 pixels,

the addition of a convolutional layer has led to the en-

largement of the receptive field to 142x142 pixels. It

can be thought as 142x142 patch convolves over the

given input image 30 times in each direction so that

each 142x142 patch of an input image is classified

by the corresponding bit in a 30x30 output. Finally

the majority voting is done for 900 single patch votes.

Note that the input of the discriminator is a tensor

comprised of the minutiae map given to the genera-

tor and the real or synthetic fingerprint. It is justified

that low frequencies can be captured by L1 loss and

an improvement is needed for capturing variations at

high-frequencies.

3.3 Training Datasets

The focus of this work is on generation of realistic

plain biometric fingerprints. Hence, for training our

generative models we selected high fidelity plain fin-

gerprints captured by optical biometric sensors such

as Cross Match Verifier 300. Our training dataset is

comprised of:

• The Neurotechnology CrossMatch dataset

that includes 408 samples and is provided on

https://www.neurotechnology.com/download.html

• The DB1 A+B dataset used for the Second

International Fingerprint Verification Competi-

tion (FVC2002) that includes 880 samples,

http://bias.csr.unibo.it/fvc2002/databases.asp

• The DB1 A+B dataset used for the Third

International Fingerprint Verification Competi-

tion (FVC2004) that also includes 880 samples,

http://bias.csr.unibo.it/fvc2004/databases.asp

Note that images in the FVC2002 DB1 A+B have

been collected with the TouchView II scanner by

Identix. The total number of samples is 2168.

Since, the selection of training data is the only

factor that predetermines the appearance of recon-

structed fingerprints, our concept can be equally well

applied to reconstitution of forensic fingerprints by

using a dataset of exemplars or latents for training.

Data Augmentation is performed aiming at increas-

ing the amount of training data as well as its variabil-

ity. We horizontally flip and rotate images with eight

rotation angles: +/-5°, +/-10°, +/-15°and +/-20°. In

doing so we increase the number of training samples

by the factor of 18 resulting in 39024 samples. This

dataset is further referred to as ”aug39k”.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications

232

Figure 2: Generator architecture.

Figure 3: Discriminator architecture - single patch classifi-

cation; for color encoding of layer transforms see Figure 2.

Synthetic Dataset Generation. In order to check

whether training of a reliable pix2pix model can be

done solely based on synthetic fingerprints, we train

the StyleGAN2-ada network (Karras et al. 2020) from

scratch based on 408 samples from the Neurotech-

nology CrossMatch dataset padded to 512x512 pix-

els with the built-in augmentation. Then we apply

the StyleGAN2-ada generator to create 50000 random

fingerprints based on the seeds from 1 to 50000. The

truncation value has been set to 0.5. This dataset is

further referred to as ”syn50k”.

Due to the low number of unique identities in the

training dataset, the synthetic samples lack diversity

and it is not assured that no identity leakage happens

in regard to the training data. However, subjectively,

the visual quality of synthetic samples is very high

making them almost indistinguishable from real sam-

ples. Figure 4 shows several examples of real finger-

prints from the Neurotechnology CrossMatch dataset

together with synthetic fingerprints generated by our

StyleGAN2-ada model.

Figure 4: CrossMatch Verifier 300 fingerprints (top row) vs.

our StyleGAN2-ada generated fingerprints (bottom row).

4 IMPLEMENTATION

The pix2pix network used in our study is cloned from:

https://github.com/junyanz/pytorch-CycleGAN-and-

pix2pix/ The architectures of generator and discrim-

inator networks are modified to fit our concept as

presented in 3.2. Training is performed using the

desktop PC with the AMD Ryzen 9 3950X 16-Core

3.5 GHz CPU and 128 GB RAM with two Nvidia

Titan RTX GPUs with 24GB VRAM each.

Training Hyperparameters. Aiming at making our

generative models comparable to each other we use

the same learning rate of 0.002 and train the networks

for 60 epochs plus 60 epochs with a learning rate

decay. After training, we have realized that non of

the final models outperforms the earlier model snap-

shots. Hence, we have picked the model snapshots

Data-Driven Fingerprint Reconstruction from Minutiae Based on Real and Synthetic Training Data

233

after 15, 30 and 55 training epochs for the evaluation

to check whether more epochs produce better visual

results or lead to a better reconstruction. We first

trained the models with batch normalization (batch

size of 64) which resulted in noisy fingerprint pat-

terns with a lot of noise especially on image margins

which should contain white pixels only. As suggested

in (Ulyanov et al. 2016) batch normalization is re-

placed by instance normalization. It helps to avoid

noise but sometimes has a negative effect on a real-

ism of ridge lines. All fingerprint images in which no

single minutiae has been detected were excluded from

the training.

Resulting Generative Models. After several rounds

of training we have ended up with four models all pro-

ducing visually convincing fingerprint images:

• aug39k DL, DL = Directed Line

• aug39k PM, PM = Pointing Minutiae

• syn50k

DL

• syn50k PM

The first two models have been trained with the

augmented CrossMatch dataset (aug39k) with minu-

tiae encoded first by directed lines and then by point-

ing minutiae. The other two models have been

trained with the StyleGAN2-ada generated dataset

(syn50k) with minutiae also encoded by directed

lines and pointing minutiae. Here, we train no

models with minutiae encoded by gray squares be-

cause this encoding scheme has been demonstrated

to underperform directed line encoding (Makrushin

et al. 2022) and our preliminary training results

have also confirmed it. After paper publication all

our models together with generated synthetic fin-

gerprints will be made public at https://gitti.cs.uni-

magdeburg.de/Andrey/gensynth

Figure 5: Anguli (left) vs. URU (right) fingerprint.

5 EVALUATION

5.1 Test Datasets

The two test datasets used for evaluation are com-

pletely detached from the training datasets. Each test

dataset contains 880 samples.

The first dataset has been created using the open

source tool Anguli (Ansari 2011). With this dataset,

we expect that a minutiae extraction tool make no

errors. Hence, the fingerprint reconstruction perfor-

mance should be seen as idealistic.

The second dataset is the DB2 A+B dataset from

the Third International Fingerprint Verification Com-

petition (FVC2004) which contains real fingerprints

collected using a URU 4500 scanner. In contrast to

Anguli fingerprints, URU fingerprints are very chal-

lenging for any minutiae extractor. Moreover, the fin-

gerprints from URU scanners are dramatically differ

from those of CrossMatch scanners used for training

of reconstruction models. Figure 5 shows an Anguli

vs a URU sample.

5.2 Metrics

The realistic appearance of reconstructed finger-

prints is evaluated using NFIQ2 scores (https://

www.nist.gov/services-resources/software/nfiq-2).

Although NFIQ2 is designed to predict the utility

of a fingerprint meaning its effectiveness for a user

authentication process, NFIQ2 is known to correlate

well with the visual quality and therefore can be seen

as an indicator of realistic appearance. The scores

span from 0 to 100 with higher values for higher

utility. The scores higher than 35 indicate good

fingerprints just as higher than 45 perfect ones. The

scores lower than 6 indicate useless patterns.

The fingerprint reconstruction success is mea-

sured by the ratio of fingerprint pairs (target vs. re-

constructed) whose matching scores exceed a certain

threshold in all tested fingerprint pairs. This mea-

sure is identical to the True Acceptance Rate (TAR)

of a fingerprint matcher. Following the state-of-the-

art studies, we calculate Type1 TAR - matching the

reconstructed fingerprint against the finger impres-

sion from which the minutiae are extracted. Calcu-

lation of Type2 TAR (matching the reconstructed fin-

gerprint against a different finger impression to that

from which the minutiae are extracted) will be ad-

dressed in future work. Fingerprint matching scores

are similarity scores from 0 to infinity produced by the

VeriFinger SDK v12.0. The matching algorithm be-

hind VeriFinger is proprietary, but is known to mostly

rely on minutiae. The decision threshold is an inher-

ent parameter of a biometric matcher. It is defined

based on the required security level of a biometric sys-

tem which in turn is defined by expected False Accept

Rate (FAR) of a matcher. The common levels for FAR

are 0.1%, 0.01% and 0.001%, the lower the more se-

cure. The decision thresholds of VeriFinger for those

FAR are 36, 48 and 60 correspondingly.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications

234

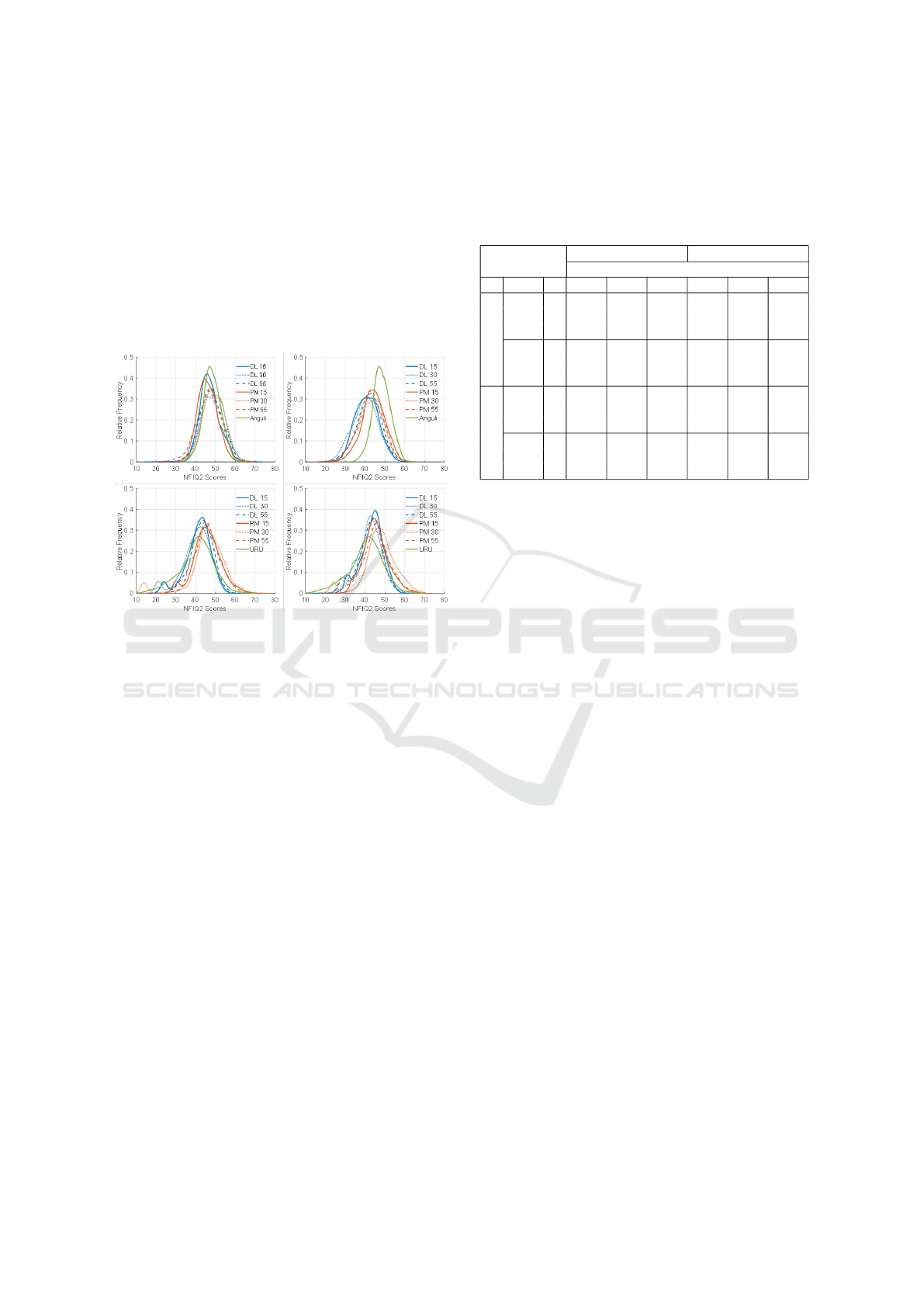

5.3 Results

Realistic Appearance. Figure 6 shows the distribu-

tions of NFIQ2 scores. In the first row, the minu-

tiae maps are derived from the Anguli dataset and in

the second row from the FVC2004 DB2 A+B dataset.

The left column represents models trained with the

aug39k dataset and the right column models trained

with the syn50k dataset. The NFIQ2 scores of origi-

nal images are taken as reference. We compare model

snapshots after 15, 30 and 55 training epochs.

Figure 6: Distributions of NFIQ2 scores for Anguli (top

row) and URU fingerprints (bottom row). The left column -

aug39k models, right column - syn50k models.

Our main observation is that the visual quality of

reconstructed fingerprints rather depends on training

samples than on samples from which minutiae have

been extracted. Indeed, NFIQ2 scores of original An-

guli samples are on average higher than that of re-

constructed samples no matter which model has been

used. In contrast, NFIQ2 scores of URU samples are

significantly lower than that of reconstructed samples

for all models.

In bottom diagrams the URU distributions have

tails towards lower NFIQ2 scores indicating the pres-

ence of several low quality samples in the dataset. For

such samples minutiae cannot be reliably extracted

leading to incomplete or messed up patterns in recon-

structed samples which explains the second peak in

reconstructed fingerprint distributions in the area of

low NFIQ2 values.

Models with PM encoding in comparison to mod-

els with DL encoding seem to produce on average fin-

gerprints with slightly higher NFIQ2 scores except for

the aug39k models tested on the Anguli dataset.

From diagrams, no clear conclusion can be drawn

which number of training epochs lead to the best vi-

sual quality. For instance, with URU test samples and

PM encoding, models trained with 30 epochs show

the best results. With URU test samples and DL en-

coding, the best aug39k model is obtained after 55

training epochs.

Table 1: Fingerprint reconstruction success (in %).

Anguli fingerprints URU fingerprints

Type1 TAR @ FAR of

Enc DB Ep 0.1% 0.01% 0.001% 0.1% 0.01% 0.001%

DL

aug39k

15 100.00 100.00 99.77 87.84 82.61 76.47

30 100.00 99.77 99.20 83.29 75.11 65.90

55 99.43 98.52 97.50 79.09 71.81 59.31

syn50k

15 97.38 97.63 86.47 78.86 71.36 59.20

30 92.50 84.88 70.56 68.52 55.79 42.84

55 93.18 86.25 74.31 72.38 62.15 48.18

PM

aug39k

15 100.00 100.00 100.00 95.45 95.00 93.52

30 100.00 100.00 100.00 95.11 94.31 93.29

55 99.88 99.43 98.86 93.52 91.36 88.86

syn50k

15 99.88 99.88 99.77 94.88 94.43 92.95

30 99.88 99.88 99.65 94.77 93.18 91.59

55 99.65 98.97 98.52 93.29 90.56 87.38

Fingerprint Reconstruction Success. Table 1 shows

the results of fingerprint reconstruction with the ide-

alistic Anguli images (upper bound of reconstruc-

tion rates) as well as with URU fingerprints from the

FVC2004 DB2 A+B dataset (realistic reconstruction

performance). The URU fingerprints for which the

VeriFinger minutiae extractor fails to find even a sin-

gle minutiae are excluded from the experiment as use-

less. Our observations regarding the reconstruction

rates can be summarized as follows:

• All models trained with aug39k are better than

their counterparts trained with syn50k.

• PM encoding outperforms DL.

• Model snapshots after 15 training epochs have the

best reconstruction performance.

• For PM encoding, the difference between 15

epochs and 30 epochs is almost negligible, while

with 55 epochs there is a considerable perfor-

mance loss.

• For DL encoding, the aug39k snapshot after 15

training epochs is better than that after 30 epochs

which is in turn better than that after 55 epochs,

but unexpectedly the syn50k snapshot after 15

epochs is better than that after 55 epochs which

is in turn better than that after 30 epochs. This ap-

plies for both test datasets Anguli and FVC2004

DB2 A+B.

Our most important finding is that with PM encoding

the performance drop between aug39k and syn50k is

in most cases lower than 1% and in no cases higher

than 1,7%. It indicates that StyleGAN2-ada finger-

prints can be perfectly used for training pix2pix mod-

els aiming at translating minutiae maps to fingerprint

images. Figure 7 shows a reconstruction example

Data-Driven Fingerprint Reconstruction from Minutiae Based on Real and Synthetic Training Data

235

with both models aug39k and syn50k. The 15 epoch

snapshots are utilized. Examples with 30 and 55

epoch snapshots can be found on our website. The

images show that our models perform a style transfer

i.e. the appearance of resulting fingerprints is similar

to those captured with a CrossMatch sensor.

Figure 7: Reconstruction example of a URU fingerprint.

Although the ridge patterns in reconstructed sam-

ples are not exactly the same as in target fingerprints,

the minutiae co-allocation is reproduced accurately

enough to enable matching with the source of minu-

tiae. Hence, we state that pix2pix in conjunction with

PM or DL encoding is a valid approach for fingerprint

reconstruction from minutiae. We have also shown

that the pix2pix architecture is scalable to larger im-

ages and training with 512x512 pixel images can be

done within a reasonable time frame.

6 CONCLUSION

Reconstruction of realistic fingerprints from minu-

tiae is an important step towards controlled genera-

tion of high-quality datasets of synthetic fingerprints.

Since, the minutiae co-allocation defines the finger-

print’s identity, reconstruction from pseudo-random

minutiae maps ensures anonymity and diversity of re-

sulting patterns and enables synthesis of mated fin-

gerprints. This paper introduces and compares four

pix2pix models trained with fingerprint images of

512x512 pixels at fingerprint-native resolution from

real and synthetic datasets with two types of minu-

tiae encoding. Our experiments show that a pix2pix

network is a valid solution to the reconstruction prob-

lem with a scalable architecture enabling training

with 512x512 pixel images, that reconstructed ridge

patterns appear realistic, that pointing minutiae en-

coding is superior to directed line encoding, that an

augmented dataset of 39k real fingerprints used for

training is superior to a dataset of 50k synthetic fin-

gerprints, but if pointing minutiae encoding is ap-

plied, the difference in reconstruction performances

between real and synthetic training data is lower than

1.7%. Future work will be devoted to compilation of

a large-scale synthetic fingerprint dataset appropriate

for evaluation of fingerprint matching algorithms.

ACKNOWLEDGEMENTS

This research has been funded in part by the Deutsche

Forschungsgemeinschaft (DFG) through the research

project GENSYNTH under the number 421860227.

REFERENCES

Ansari, A. H. (2011). Generation and storage of large syn-

thetic fingerprint database. M.E. Thesis, Indian Insti-

tute of Science Bangalore.

Bahmani, K., Plesh, R., Johnson, P., Schuckers, S., and

Swyka, T. (2021). High fidelity fingerprint generation:

Quality, uniqueness, and privacy. In Proc. ICIP’21,

pages 3018–3022.

Bouzaglo, R. and Keller, Y. (2022). Synthesis and recon-

struction of fingerprints using generative adversarial

networks. CoRR, abs/2201.06164.

Cao, K. and Jain, A. K. (2015). Learning fingerprint re-

construction: From minutiae to image. IEEE TIFS,

10:104–117.

Cappelli, R. (2009). SFinGe. In Li, S. Z. and Jain, A., ed-

itors, Encyclopedia of Biometrics, pages 1169–1176.

Springer US, Boston, MA.

Cappelli, R., Maio, D., Lumini, A., and Maltoni, D. (2007).

Fingerprint image reconstruction from standard tem-

plates. IEEE PAMI, 29:1489–1503.

Feng, J. and Jain, A. (2009). FM model based fingerprint re-

construction from minutiae template. In Proc. ICB’09,

pages 544–553.

Isola, P., Zhu, J.-Y., Zhou, T., and Efros, A. A. (2017).

Image-to-image translation with conditional adversar-

ial networks. In Proc. CVPR’17, pages 5967–5976.

Karras, T., Aittala, M., Hellsten, J., Laine, S., Lehtinen, J.,

and Aila, T. (2020). Training generative adversarial

networks with limited data. CoRR, abs/2006.06676.

Karras, T., Laine, S., and Aila, T. (2018). A style-based

generator architecture for generative adversarial net-

works. CoRR, abs/1812.04948.

Karras, T., Laine, S., Aittala, M., Hellsten, J., Lehtinen, J.,

and Aila, T. (2019). Analyzing and improving the im-

age quality of StyleGAN. CoRR, abs/1912.04958.

Kim, H., Cui, X., Kim, M.-G., and Nguyen, T.-H.-B.

(2019). Reconstruction of fingerprints from minu-

tiae using conditional adversarial networks. In Proc.

IWDW’18, pages 353–362.

Li, S. and Kot, A. C. (2012). An improved scheme for

full fingerprint reconstruction. IEEE TIFS, 7(6):1906–

1912.

Makrushin, A., Kauba, C., Kirchgasser, S., Seidlitz, S.,

Kraetzer, C., Uhl, A., and Dittmann, J. (2021). Gen-

eral requirements on synthetic fingerprint images for

biometric authentication and forensic investigations.

In Proc. IH&MMSec’21, page 93–104.

Makrushin, A., Mannam, V. S., B.N, M. R., and Dittmann,

J. (2022). Data-driven reconstruction of fingerprints

from minutiae maps. In Proc. MMSP’22.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications

236

Mistry, V., Engelsma, J. J., and Jain, A. K. (2020). Finger-

print synthesis: Search with 100 million prints. CoRR,

abs/1912.07195.

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-

Net: Convolutional networks for biomedical image

segmentation. CoRR, abs/1505.04597.

Ross, A., Shah, J., and Jain, A. K. (2007). From template

to image: Reconstructing fingerprints from minutiae

points. IEEE PAMI, 29:544–60.

Seidlitz, S., J

¨

urgens, K., Makrushin, A., Kraetzer, C., and

Dittmann, J. (2021). Generation of privacy-friendly

datasets of latent fingerprint images using generative

adversarial networks. In Proc. VISIGRAPP’21, Vol.4:

VISAPP, pages 345–352.

Sherlock, B. and Monro, D. (1993). A model for in-

terpreting fingerprint topology. Pattern Recognition,

26(7):1047–1055.

Ulyanov, D., Vedaldi, A., and Lempitsky, V. (2016). In-

stance normalization: The missing ingredient for fast

stylization. CoRR, abs/1607.08022.

Wang, T.-C., Liu, M.-Y., Zhu, J.-Y., Tao, A., Kautz, J.,

and Catanzaro, B. (2018). High-resolution image

synthesis and semantic manipulation with conditional

GANs. In Proc. CVPR’18.

Wijewardena, K. P., Grosz, S. A., Cao, K., and Jain, A. K.

(2022). Fingerprint template invertibility: Minutiae

vs. deep templates. CoRR, abs/2205.03809.

Data-Driven Fingerprint Reconstruction from Minutiae Based on Real and Synthetic Training Data

237