Specification Based Testing of Object Detection for Automated Driving Systems via BBSL

Kento Tanaka¹ ᵃ, Toshiaki Aoki¹ ᵇ, Tatsuji Kawai¹ ᶜ, Takashi Tomita¹ ᵈ, Daisuke Kawakami² and Nobuo Chida²

¹ Japan Advanced Institute of Science and Technology, 1-1, Asahi-dai, Nomi, Ishikawa, 923-1292, Japan

² Advanced Technology R&D Center, Mitsubishi Electric Corporation, 8-1-1, Tsukaguchi-Honmachi, Amagasaki, Hyogo, 661-8661, Japan

Keywords: Automated Driving, Machine Learning, Deep Learning, Object Detection, Testing, Formal Specification, Image Processing.

Abstract: Automated driving systems (ADS) are a major trend, and the safety of such critical systems has become one of the most important research topics. However, ADS are complex systems that involve various elements. Moreover, it is difficult to ensure safety using conventional testing methods because of the diversity of driving environments. Deep neural networks (DNN) are effective for object detection, which takes diverse driving environments as input. Such a DNN can be tested with a measure such as Intersection over Union (IoU), which sets a threshold on the discrepancy between the bounding box of the inference result and the bounding box of the ground-truth label. However, these tests make it difficult to judge to what extent the DNN meets the specifications of ADS. Therefore, we propose a method for converting formal specifications of ADS written in Bounding Box Specification Language (BBSL) into tests for object detection. BBSL is a language that can mathematically describe the specification of OEDR (Object and Event Detection and Response), one of the tasks of ADS. Using these specifications, we define specification based testing of object detection for ADS. We then show in an evaluation that this test is more safety-conscious for ADS than tests using IoU.

1 INTRODUCTION

Automated driving systems (ADS) are actively developed by several manufacturers, and their failure can cost human life (Devi et al., 2020). Therefore, ensuring their safety has become one of the most important research topics. However, ADS are complex systems that include various elements, such as machine learning, route search algorithms and sensing technology. Furthermore, the driving environment surrounding those systems is diverse. It is therefore difficult to design specifications and test against them as in general software development methods. To solve this problem, government agencies in various countries are researching frameworks for designing and testing ADS by defining and designing multiple scenarios of driving environments, systematizing use cases, setting safety standards, and establishing evaluation frameworks. For example, the National Highway Traffic Safety Administration (NHTSA) in the United States first determines the level of automation of the automated driving system to be developed, and then clarifies the Operational Design Domain (ODD) and the Object and Event Detection and Response (OEDR). ODD is the specific conditions under which ADS or its functions are designed to operate, such as road types, speed limits, lighting conditions, weather conditions, and other operational constraints. The various driving environments are then classified into somewhat abstract scenarios, such as "merging into another lane at low speed," and OEDRs are designed based on these scenarios to monitor the driving environment and respond appropriately to these objects and events (Thorn et al., 2018). These levels of automation, ODDs, and OEDRs are defined in the SAE J3016 standard (Committee, 2021).

a https://orcid.org/0000-0002-3532-6954

b https://orcid.org/0000-0002-1209-6375

c https://orcid.org/0000-0003-1247-5663

d https://orcid.org/0000-0003-1249-7862

Among the components of ADS with the above

characteristics, our research focuses on the object de-

250

Tanaka, K., Aoki, T., Kawai, T., Tomita, T., Kawakami, D. and Chida, N.

Specification Based Testing of Object Detection for Automated Driving Systems via BBSL.

DOI: 10.5220/0011997400003464

In Proceedings of the 18th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE 2023), pages 250-261

ISBN: 978-989-758-647-7; ISSN: 2184-4895

Copyright

c

2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

tection process. In object detection for ADS, deep

neural networks (DNN) are used to cope with large

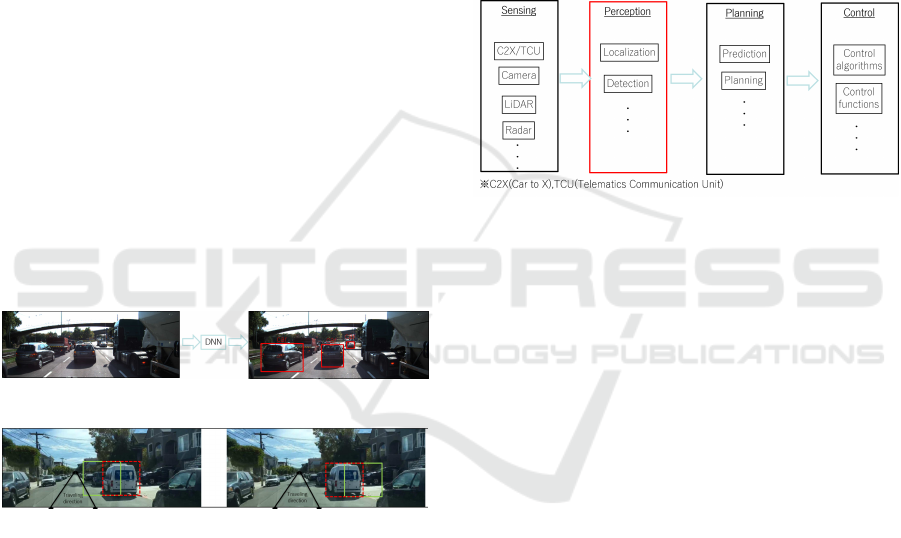

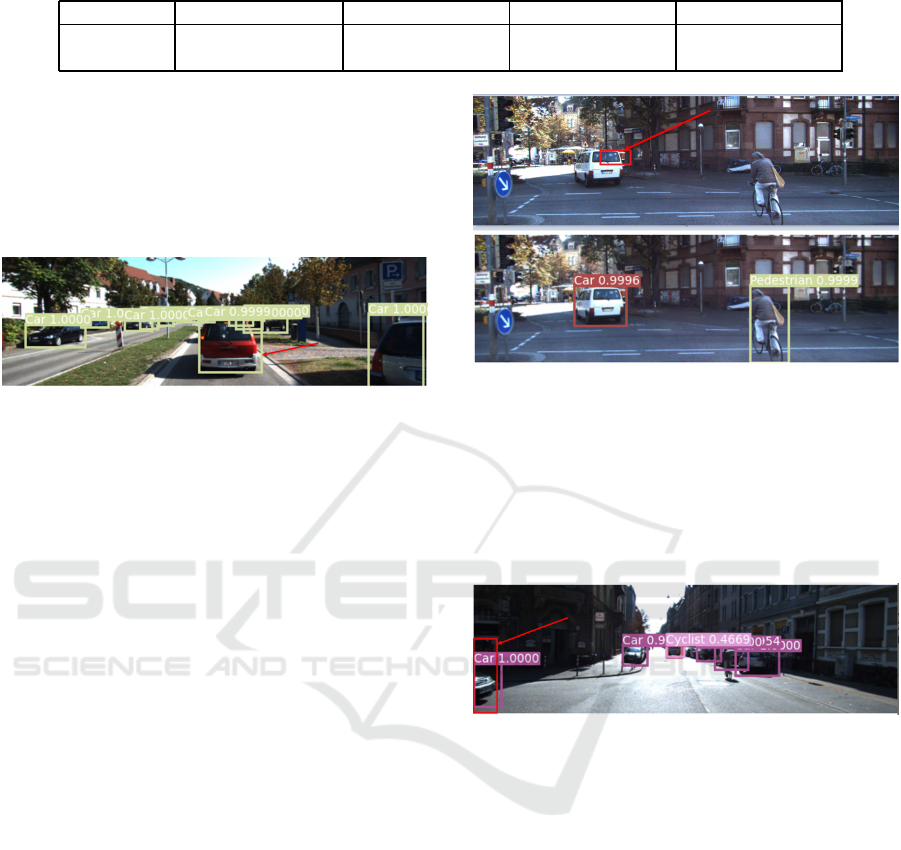

amounts of input. As shown in Figure 1, this DNN

typically takes an image as input and a labeled rect-

angle c alled bounding box as the ou tput of the infer-

ence result. The general approach to testing such a

DNN is to match the inferred label by the DNN with

the ground-truth label, given an image. However, it

is difficult for DNN to detect the position of a par-

ticular object in perfect agreement with its ground-

truth label. Therefore, in pr a ctice, it is tested using a

threshold called Intersection over Union (IoU) (Ever-

ingham and Winn, 20 12). IoU is a number that quan-

tifies the degree of overlap between two boxes. In

the case of object detection, IoU evalua te s the over-

lap of the ground -truth label and inferred labe l. For

example, Figure 2 shows two images with a ground-

truth la bel (red bounding box) and an inferred label

(green bounding box). In this case, the IoU is about

1/3 in both images. However, given a safety require-

ment to stop when there is a vehicle in the direction of

travel, the DNN that inf e rs the gree n bounding box in

the left image violates the safety requirement, while

the DNN that infers the green bounding box in the

left image satisfies it. The above shows that the test

of object detection using IoU is at variance with the

specifications for AD S. Therefore, it is necessary to

study specificatio n-based testing methods for ADS.

Figure 1: DNN inputs and outputs.

Figure 2: Problems when thresholds are defined in the IoU.
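The IoU argument above can be reproduced with a small calculation. The following is a minimal sketch, not the evaluation code of this paper: the `Box` type, the `iou` helper and the three boxes are hypothetical and chosen only so that two differently displaced inferred boxes share the same IoU of 1/3 with one ground-truth box.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box in pixel coordinates (hypothetical encoding)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def area(box: Box) -> float:
    """Area of a box; an empty (inverted) box has area 0."""
    width = max(0.0, box.x_max - box.x_min)
    height = max(0.0, box.y_max - box.y_min)
    return width * height


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two boxes, a value in [0, 1]."""
    intersection = Box(
        max(a.x_min, b.x_min), max(a.y_min, b.y_min),
        min(a.x_max, b.x_max), min(a.y_max, b.y_max),
    )
    inter_area = area(intersection)
    union_area = area(a) + area(b) - inter_area
    return inter_area / union_area if union_area > 0 else 0.0


# Hypothetical boxes: one ground truth, and an inferred box displaced
# by the same amount to the left or to the right.
ground_truth = Box(0, 0, 4, 2)
inferred_left = Box(-2, 0, 2, 2)
inferred_right = Box(2, 0, 6, 2)

print(iou(ground_truth, inferred_left))
print(iou(ground_truth, inferred_right))
```

Both calls give the same value, so an IoU threshold alone cannot tell the two displacements apart, even when only one of them moves the box out of the direction of travel.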

In order to achieve specification based testing of the object detection process, a rigorously defined specification of how the system should operate in a given driving environment is required. For OEDR, one of the tasks of automated driving systems, there is a language called BBSL for writing specifications (Tanaka et al., 2022). BBSL is a language that can describe specifications mathematically by representing objects such as other vehicles and pedestrians as bounding boxes and using positional relationships between bounding boxes. This paper proposes specification based testing of the object detection process, which has a particular impact on safety among the components of automated driving systems. Using a simple example specification, we show that the test is specification based and covers important safety contexts that cannot be considered in conventional IoU based testing methods.

2 RELATED WORK

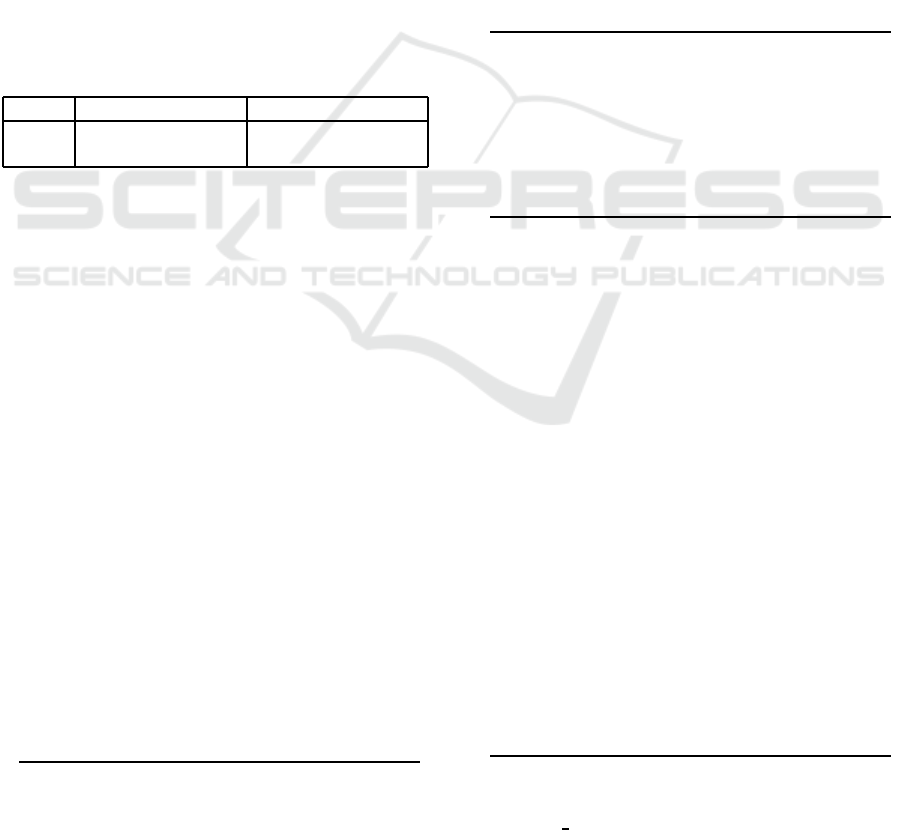

A common ADS is composed of four functional modules, namely the sensing module, the perception module, the planning module and the control module, as shown in Figure 3. The purpose of our study is to judge whether a bug exists in the perception module among these.

Figure 3: Typical architecture of an ADS.

The general approach to testing a DNN in the perception module is to match the label inferred by the DNN with the ground-truth label, given an image. Usually, these ground-truth labels are obtained by manual labeling (Sun et al., 2019) (Kondermann et al., 2016). Then IoU, also known as the Jaccard index, is used with a threshold to determine whether the positions of the ground-truth label and the inferred label match. IoU computes the discrepancy between the bounding box of the inference result and the bounding box of the ground-truth label, whereas in our study the judgment is based on whether the specification of ADS is satisfied.

In perception testing, because of the huge input space of DNN models, it is a great challenge to specify the oracles for all the input images. One solution to this problem is metamorphic testing. Metamorphic testing was introduced to tackle the problem of an absent test oracle in traditional software testing (Chen et al., 2020). It describes the system functionality in terms of generic relations (metamorphic relations) between inputs rather than as mappings between input and output. In ADS, various metamorphic relations have been proposed over images and over frames in a scenario. For example, in object detection, there is a metamorphic relation that objects detected in the original image should also be detected in the synthetic images (Shao, 2021), and for LiDAR based object detection, there is a metamorphic relation over the image that the noise points outside the Region of Interest (ROI) should not affect the detection of objects within the ROI (Zhou and Sun, 2019). Also, two metamorphic relations over frames in a scenario have been proposed for identifying temporal and stereo inconsistencies, respectively, that exist in different frames of a scenario (Ramanagopal et al., 2018). The temporal metamorphic relation says that an object detected in a previous frame should also be detected in a later frame, and the stereo metamorphic relation is defined in a similar way, for regulating the spatial consistency of the objects in different frames of a scenario.

Recently, formal specifications based on temporal logics have been adopted in the monitoring of the perception module of ADS. In general, temporal logics are a family of formalisms used to express temporal properties of systems. For example, Timed Quality Temporal Logic (TQTL) is a new formalism that can be used to express temporal properties that should be held by the perception module during object detection (Dokhanchi et al., 2018). Conceptually, the properties expressed by TQTL are similar to those in the metamorphic relations mentioned above (Ramanagopal et al., 2018). However, by adopting such a formal specification to express these properties, one can synthesize a monitor that automatically checks whether the system execution satisfies them. TQTL was later extended to Spatio-Temporal Quality Logic (STQL) (Balakrishnan et al., 2021), which has an enriched syntax to express more refined properties over the bounding boxes used in object detection. In our study, we test the perception module of ADS using a formal specification language called BBSL. BBSL does not currently use temporal logics, so temporal properties cannot be expressed. However, BBSL can express properties related to positions of bounding boxes in more detail than STQL.

3 BOUNDING BOX SPECIFICATION LANGUAGE

The specification-based test proposed in this paper uses specifications written in a formal specification language called BBSL (Tanaka et al., 2022). BBSL is a language that describes objects in an image as bounding boxes and the positional relationships between the bounding boxes at a level of abstraction that can be defined manually. By defining a bounding box as a two-dimensional interval in interval analysis (Moore et al., 2009), BBSL strictly describes positional relationships on an image as relationships on a bounding box (or set of bounding boxes). In addition, special relations and functions are defined for positional relations that cannot be described by interval analysis. In this section, we show the types and relations of BBSL and outline the specifications of ADS written in BBSL.

First, we explain the basic types used in BBSL, interval and bb. The interval type represents an interval such as the decelerating distance in Figure 4. It has the same definition as in interval analysis, as shown in Definition 1.

Figure 4: Visual representation of the driving environment and abstract specifications written in BBSL.

Definition 1. Let $\underline{a}, \overline{a}$ be real numbers. Then an interval $a$ is defined as follows:

$$a = [\underline{a}, \overline{a}] = \{x \in \mathbb{R} : \underline{a} \le x \le \overline{a}\}$$

Objects in the image, such as vehicles, are represented by bounding boxes defined by a two-dimensional interval. The bb type represents a bounding box, which has the same definition as in interval analysis. This is shown in Definition 2.

Definition 2. Let $a_i$, $i = 1, \ldots, n$ be intervals. Then a multidimensional interval $a$ is defined as follows:

$$a = (a_1, \ldots, a_n)$$

In particular, when $n = 2$, the multidimensional interval $a$ is called a bounding box.

The magnitude relationship of intervals has the same definition as in interval analysis. However, since interval analysis is designed for calculating real values with rounding errors and measurement errors, many of its relationships cannot be applied to the description of positional relationships. Therefore, BBSL provides its own operations and relationships that are useful for describing image specifications.

First, we introduce the basic relations used to describe the positional relationship between intervals. These definitions are the same as those used in interval analysis and are shown in Definition 3 and Definition 4.

Definition 3. Let $a, b$ be intervals. Then the binary relation $<$ on two intervals is defined as follows:

$$a < b \Leftrightarrow \overline{a} < \underline{b}$$

Definition 4. Let $a, b$ be intervals. Then the equivalence relation $=$ on two intervals is defined as follows:

$$a = b \Leftrightarrow \underline{a} = \underline{b} \text{ and } \overline{a} = \overline{b}$$


With these relationships, it is possible to represent the positional relationship between the vehicle's y-coordinate interval and the stoppingDistance, defined as the distance to be maintained, as shown in Figure 5.

Figure 5: Example of simple positional relationships.

The definition of the inclusion relationship between intervals is shown in Definition 5.

Definition 5. Let $a, b$ be intervals. Then the inclusion relation $\subseteq$ on two intervals is defined as follows:

$$a \subseteq b \Leftrightarrow \underline{b} \le \underline{a} \text{ and } \overline{a} \le \overline{b}$$

For the positional relationship between these intervals, ≈ is introduced as a shorthand notation to express the relationship where two intervals overlap, which frequently occurs in the specification of automated driving systems. This is shown in Definition 6.

Definition 6. Let $a, b$ be intervals. Then the overlap relation $\approx$ on two intervals is defined as follows:

$$a \approx b \Leftrightarrow \underline{b} \le \overline{a} \text{ and } \underline{a} \le \overline{b}$$
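The interval relations of Definitions 3 to 6 translate directly into comparisons on the interval endpoints. The following is a minimal sketch under our own naming: the `Interval` class and the function names `lt`, `eq`, `subset` and `overlaps` are assumptions for illustration and are not part of BBSL, and the numeric intervals are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Closed interval [lo, hi] as in Definition 1 (names are assumptions)."""
    lo: float
    hi: float

    def __post_init__(self) -> None:
        if self.lo > self.hi:
            raise ValueError("lower bound must not exceed upper bound")


def lt(a: Interval, b: Interval) -> bool:
    """Definition 3: a < b iff the upper end of a is below the lower end of b."""
    return a.hi < b.lo


def eq(a: Interval, b: Interval) -> bool:
    """Definition 4: both endpoints coincide."""
    return a.lo == b.lo and a.hi == b.hi


def subset(a: Interval, b: Interval) -> bool:
    """Definition 5: a lies inside b."""
    return b.lo <= a.lo and a.hi <= b.hi


def overlaps(a: Interval, b: Interval) -> bool:
    """Definition 6: a and b share at least one point."""
    return b.lo <= a.hi and a.lo <= b.hi


# Hypothetical intervals on one image axis.
stopping = Interval(275, 375)
near = Interval(300, 360)
far = Interval(100, 200)

print(lt(far, stopping), overlaps(far, stopping))
print(subset(near, stopping), overlaps(near, stopping))
```

For any two valid intervals exactly one of `lt(a, b)`, `lt(b, a)` and `overlaps(a, b)` holds, which is what later makes a case split on these relations both exhaustive and exclusive.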

In addition, a function called the PROJ function is provided to map an object to its intervals on the x- and y-axis. This is shown in Definition 7.

Definition 7. Let $a$ be a bounding box $(a_x, a_y)$. Then the projection function $\mathrm{PROJ}_i$ from bounding boxes to intervals is defined as follows:

$$\mathrm{PROJ}_i(a) = a_i, \quad \underline{\mathrm{PROJ}}_i(a) = [\underline{a_i}, \underline{a_i}], \quad \overline{\mathrm{PROJ}}_i(a) = [\overline{a_i}, \overline{a_i}], \quad i \in \{x, y\}$$

BBSL can describe various positional relationships strictly by applying the PROJ function and the interval relationships described above to the bounding boxes representing objects. For example, Figure 6 shows some examples of positional relationships for two bounding boxes a and b described in BBSL.
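How PROJ combines with the interval relations can be illustrated as follows. This is a minimal sketch: the two relations `left_of_same_row` and `intersects` are hypothetical examples of our own and are not necessarily the ones drawn in Figure 6, and the class and function names are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    lo: float
    hi: float


@dataclass(frozen=True)
class BoundingBox:
    """Two-dimensional interval (a_x, a_y) as in Definition 2."""
    x: Interval
    y: Interval


def proj(box: BoundingBox, axis: str) -> Interval:
    """PROJ_i(a) = a_i for i in {x, y}."""
    if axis == "x":
        return box.x
    if axis == "y":
        return box.y
    raise ValueError("axis must be 'x' or 'y'")


def lt(a: Interval, b: Interval) -> bool:
    return a.hi < b.lo


def overlaps(a: Interval, b: Interval) -> bool:
    return b.lo <= a.hi and a.lo <= b.hi


def left_of_same_row(a: BoundingBox, b: BoundingBox) -> bool:
    """Hypothetical relation: a is strictly left of b and they share y values."""
    return lt(proj(a, "x"), proj(b, "x")) and overlaps(proj(a, "y"), proj(b, "y"))


def intersects(a: BoundingBox, b: BoundingBox) -> bool:
    """Hypothetical relation: the boxes overlap on both axes."""
    return overlaps(proj(a, "x"), proj(b, "x")) and overlaps(proj(a, "y"), proj(b, "y"))


a = BoundingBox(Interval(0, 10), Interval(0, 10))
b = BoundingBox(Interval(20, 30), Interval(5, 15))
c = BoundingBox(Interval(5, 25), Interval(8, 12))

print(left_of_same_row(a, b), intersects(a, b), intersects(a, c))
```

Treating each axis separately is what lets a specification distinguish, for instance, a box that is displaced sideways from one that is displaced along the direction of travel.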

Figure 6: Examples of various positional relationships that can be distinguished using the PROJ function.

By using such types and functions, BBSL can describe OEDR specifications for ADS strictly from an image perspective. Listing 1 shows an example of a specification described in BBSL. This specification defines the cases in which ADS should or should not stop, depending on the position of a single vehicle. A specification described in BBSL is divided into three blocks, as shown in this specification. The first block, the external function block (lines 1-8 of Listing 1), declares functions that supply the values needed to write the specification. For example, this specification declares a function to check for the presence of a vehicle, a function to return the bounding box surrounding the vehicle, and a function to return an interval representing the stopping distance.

The second block, the precondition block (lines 10-12 of Listing 1), describes the conditions for applying the specification. For example, it states that the specification is written on the assumption that there will always be a vehicle of some kind.

The third block, the case block (lines 14-19 and 21-25 of Listing 1), is the main part of the specification. It describes a case for each reaction of the ADS and the conditions under which the system should react. In Listing 1, the specification strictly describes the need to stop if a vehicle is before or overlaps the stoppingDistance from the viewpoint of the forward camera image, as in Figure 5.

Listing 1: Specifications of ADS response to the distance to the vehicle.

     1  exfunction
     2    //Judge the existence of the vehicle. True if it exists.
     3    vehicleExists():bool
     4    //Calculate the bounding box that surrounds the vehicle.
     5    vehicle():bb
     6    //Calculate the interval that represents the range to be stopped.
     7    stoppingDistance():interval
     8  endexfunction
     9
    10  precondition
    11    [vehicleExists() = true]
    12  endprecondition
    13
    14  case stop
    15    let vehicle : bb = vehicle(),
    16        stoppingDistance : interval = stoppingDistance() in
    17      PROJ_y(vehicle) ≈ stoppingDistance
    18      or PROJ_y(vehicle) < stoppingDistance
    19  endcase
    20
    21  case NOT stop
    22    let vehicle : bb = vehicle(),
    23        stoppingDistance : interval = stoppingDistance() in
    24      PROJ_y(vehicle) > stoppingDistance
    25  endcase
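The case conditions of Listing 1 can be evaluated mechanically once the y-interval of the vehicle and the stopping distance are known. The following is a minimal sketch, not the implementation used in the experiment. The names are our own, the relation > is read as the mirror image of Definition 3 (an assumption, since only < is defined above), and the numeric intervals are abstract values that do not assume any particular image coordinate orientation.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Interval:
    lo: float
    hi: float


def lt(a: Interval, b: Interval) -> bool:
    """Definition 3: a < b."""
    return a.hi < b.lo


def gt(a: Interval, b: Interval) -> bool:
    """Assumption: a > b is read as b < a."""
    return lt(b, a)


def overlaps(a: Interval, b: Interval) -> bool:
    """Definition 6: a ≈ b."""
    return b.lo <= a.hi and a.lo <= b.hi


def s1_cases(vehicle_y: Interval, stopping_distance: Interval) -> FrozenSet[str]:
    """Return the names of all cases of Listing 1 whose condition holds."""
    cases = set()
    if overlaps(vehicle_y, stopping_distance) or lt(vehicle_y, stopping_distance):
        cases.add("stop")
    if gt(vehicle_y, stopping_distance):
        cases.add("NOT stop")
    return frozenset(cases)


sd = Interval(10, 20)  # hypothetical stopping distance
for y in (Interval(0, 5), Interval(15, 30), Interval(25, 40)):
    print(y, sorted(s1_cases(y, sd)))
```

Returning a set of case names rather than a single name keeps the function usable for specifications in which no case, or more than one case, applies to an input.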

4 SPECIFICATION BASED TESTING

As described in the previous section, specifications for ADS written using BBSL are unambiguous and rigorously described. Therefore, specifications written in BBSL can be used to rigorously define specification-based tests for object detection processing. In this section, we make some preparations and define specification-based tests.

A specification written in BBSL is interpreted over images with multiple labeled bounding boxes, as shown in Figure 7. First, the set of images with such labeled bounding boxes is defined in Definition 8.

Figure 7: Examples of images with multiple labeled bounding boxes.

Definition 8. Let $I$ be a set of images, $BB$ be a set of bounding boxes, and $L$ be a set of labels such as vehicle and pedestrian. The set of images $I_{BB}$ with multiple labeled bounding boxes is defined as follows:

$$I_{BB} = I \times 2^{BB \times L}$$

Next, a specification written in BBSL for use in testing is defined in Definition 9.

Definition 9. A specification written in BBSL is defined as a pair $S = (C, f)$.

• $C$ is the set of cases. For example, in Listing 1, $C = \{\text{stop}, \text{NOT stop}\}$.

• $f$ is a function $f : I_{BB}^{-} \to 2^{C}$, where $I_{BB}^{-} = \{ i_{BB} \in I_{BB} \mid i_{BB} \text{ satisfies the precondition} \}$.

Hereafter, $I_{BB}^{-}$ of $f$ in the specification $S = (C, f)$ is denoted as $\mathrm{dom}(f)$.
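Definitions 8 and 9 can be made concrete with a small data model. The following is a sketch under several assumptions of our own: a box is encoded as a tuple `(x_lo, x_hi, y_lo, y_hi)`, each labeled image in the example contains at most one vehicle, the stopping distance is an arbitrary constant, and the case conditions are those of Listing 1. None of these names come from BBSL itself.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

Box = Tuple[float, float, float, float]  # (x_lo, x_hi, y_lo, y_hi), assumed encoding
LabeledBox = Tuple[Box, str]


@dataclass(frozen=True)
class LabeledImage:
    """Element of I_BB: an image together with a set of labeled boxes."""
    image_id: str
    boxes: FrozenSet[LabeledBox]


STOPPING_DISTANCE = (10.0, 20.0)  # hypothetical constant exfunction value


def in_domain(img: LabeledImage) -> bool:
    """Precondition of Listing 1: a vehicle exists in the image."""
    return any(label == "vehicle" for _, label in img.boxes)


def f(img: LabeledImage) -> FrozenSet[str]:
    """Map a labeled image in dom(f) to the set of cases that apply."""
    if not in_domain(img):
        raise ValueError("precondition not satisfied: image is outside dom(f)")
    # Assumption: the image holds a single vehicle; take its box.
    box = next(b for b, label in sorted(img.boxes) if label == "vehicle")
    y_lo, y_hi = box[2], box[3]
    sd_lo, sd_hi = STOPPING_DISTANCE
    overlap = sd_lo <= y_hi and y_lo <= sd_hi
    before = y_hi < sd_lo
    after = sd_hi < y_lo
    cases = set()
    if overlap or before:
        cases.add("stop")
    if after:
        cases.add("NOT stop")
    return frozenset(cases)


frame = LabeledImage("frame_0", frozenset({
    ((3.0, 7.0, 12.0, 18.0), "vehicle"),
    ((0.0, 1.0, 30.0, 40.0), "pedestrian"),
}))
no_vehicle = LabeledImage("frame_1", frozenset({((0.0, 1.0, 30.0, 40.0), "pedestrian")}))

print(sorted(f(frame)), in_domain(no_vehicle))
```

Raising an error outside the domain mirrors the fact that $f$ is only defined on images that satisfy the precondition.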

For the purpose of preparing a test covering all images or defining a unique test, three types of properties of a specification written in BBSL are defined in Definition 10.

Definition 10. For a specification $S = (C, f)$ written in BBSL, three types of properties are defined as follows:

$$S \text{ is an exhaustive specification} \Leftrightarrow \forall i \in \mathrm{dom}(f).\ (f(i) \neq \emptyset)$$

$$S \text{ is an exclusionary specification} \Leftrightarrow \forall i \in \mathrm{dom}(f), \exists c \in C.\ (f(i) = \{c\} \text{ or } f(i) = \emptyset)$$

$$S \text{ is a non-redundant specification} \Leftrightarrow \forall c \in C, \exists i \in \mathrm{dom}(f).\ (c \in f(i))$$

Thus, the specification of Listing 1 is an exhaustive, exclusionary, and non-redundant specification. In this paper, unless otherwise specified, a specification S written in BBSL is an exhaustive, exclusionary, and non-redundant specification.

Next, the definitions of the basic elements necessary to define a test are given in Definition 11.
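The three properties of Definition 10 quantify over all of dom(f), so a program can only check them on a finite sample. The following sketch does exactly that and should be read as a sanity check, not a proof. The interval encoding, the sample, the constant `SD` and the deliberately broken variant `s1_broken` are all hypothetical.

```python
from typing import Callable, FrozenSet, Sequence, Tuple

Interval = Tuple[float, float]  # (lower, upper), assumed encoding
Spec = Callable[[Interval], FrozenSet[str]]

SD: Interval = (10.0, 20.0)  # hypothetical stopping distance


def s1(y: Interval) -> FrozenSet[str]:
    """Case conditions of Listing 1 on the vehicle's y-interval."""
    overlap = SD[0] <= y[1] and y[0] <= SD[1]
    before = y[1] < SD[0]
    after = SD[1] < y[0]
    cases = set()
    if overlap or before:
        cases.add("stop")
    if after:
        cases.add("NOT stop")
    return frozenset(cases)


def s1_broken(y: Interval) -> FrozenSet[str]:
    """Hypothetical variant that forgets the 'before' condition."""
    overlap = SD[0] <= y[1] and y[0] <= SD[1]
    after = SD[1] < y[0]
    cases = set()
    if overlap:
        cases.add("stop")
    if after:
        cases.add("NOT stop")
    return frozenset(cases)


def is_exhaustive(f: Spec, sample: Sequence[Interval]) -> bool:
    """Every sampled input falls into at least one case."""
    return all(len(f(i)) > 0 for i in sample)


def is_exclusionary(f: Spec, sample: Sequence[Interval]) -> bool:
    """No sampled input falls into more than one case."""
    return all(len(f(i)) <= 1 for i in sample)


def is_non_redundant(cases: Sequence[str], f: Spec, sample: Sequence[Interval]) -> bool:
    """Every case is hit by at least one sampled input."""
    covered = set()
    for i in sample:
        covered |= f(i)
    return set(cases) <= covered


CASES = ("stop", "NOT stop")
SAMPLE = [(0.0, 5.0), (5.0, 15.0), (12.0, 18.0), (20.0, 30.0), (25.0, 40.0)]

for spec in (s1, s1_broken):
    print(spec.__name__, is_exhaustive(spec, SAMPLE),
          is_exclusionary(spec, SAMPLE), is_non_redundant(CASES, spec, SAMPLE))
```

On this sample the broken variant fails only the exhaustiveness check, because the interval lying entirely before the stopping distance matches no case.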

Definition 11. The test data $Td$ is a subset of the set of images $I$. The object detection system to be tested is exactly the DNN shown in Figure 1, which takes an image as input and returns an image with inferred labels as output. This is defined by the function $SUT : I \to I_{BB}$. In addition, assume that the ground-truth label is given by the function $GT : I \to I_{BB}$.

Using the above definitions, a test case is defined

in Definition 12.

Definition 12. Given an exhaustive, exclusionary, and non-redundant specification $S = (C, f)$, test data $Td$ and ground-truth data $GT$, the test case $CASE$ is defined as follows:

$$CASE = \{(td, f(GT(td))) \mid td \in Td\}$$

In Definition 12, $f(GT(td))$ plays the role of a specification-based pseudo-oracle.

Finally, the decision conditions for the specification-based test are defined in Definition 13.

Definition 13. Given an exhaustive, exclusionary, and non-redundant specification $S = (C, f)$, $SUT$ and a test case $CASE$, the test decision condition $P : CASE \to \{T, F\}$ is defined for any case $(td, c) \in CASE$ as follows:

$$P(td, c) = \begin{cases} T & \text{if } f(SUT(td)) = c \\ F & \text{otherwise} \end{cases}$$

The above definitions enable specification-based testing using specifications written in BBSL.
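Definitions 12 and 13 amount to a short test harness. The following is a minimal sketch on toy data: images are represented by identifiers, `GT` and `SUT` are hypothetical lookup tables that return only the y-interval of a single vehicle, and `f` encodes the conditions of Listing 1 for an assumed stopping distance.

```python
from typing import Callable, Dict, FrozenSet, List, Sequence, Tuple

Interval = Tuple[float, float]  # (lower, upper), assumed encoding
Cases = FrozenSet[str]

SD: Interval = (10.0, 20.0)  # hypothetical stopping distance


def f(y: Interval) -> Cases:
    """Listing 1 on a y-interval: 'overlaps or before' reduces to y_lo <= sd_hi."""
    return frozenset({"stop"}) if y[0] <= SD[1] else frozenset({"NOT stop"})


def build_cases(test_data: Sequence[str],
                gt: Callable[[str], Interval]) -> List[Tuple[str, Cases]]:
    """Definition 12: pair each test input with the pseudo-oracle f(GT(td))."""
    return [(td, f(gt(td))) for td in test_data]


def decide(td: str, expected: Cases, sut: Callable[[str], Interval]) -> bool:
    """Definition 13: T iff the inferred label falls into the expected case."""
    return f(sut(td)) == expected


# Hypothetical ground truth and inference results.
GT: Dict[str, Interval] = {"img_a": (12.0, 18.0), "img_b": (22.0, 30.0)}
SUT: Dict[str, Interval] = {"img_a": (14.0, 25.0), "img_b": (19.0, 28.0)}

cases = build_cases(["img_a", "img_b"], GT.__getitem__)
results = {td: decide(td, expected, SUT.__getitem__) for td, expected in cases}
print(results)
```

In this toy data the inferred interval for `img_a` deviates from the ground truth but stays in the same case, so the test passes, whereas the smaller deviation for `img_b` crosses the boundary of the stopping distance and the test fails.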


5 EXPERIMENT

In this section, we prepare an object detection system for ADS, a ground truth dataset with two-dimensional bounding boxes, and specifications written in BBSL, and compare the proposed test method with the IoU method.

5.1 Preparations

First, we used the KITTI dataset (Geiger et al., 2013) for the two test data sets $Td_1$ and $Td_2$ ($Td_1 \cap Td_2 = \emptyset$) and for the ground truth labels $GT$. As shown in Table 1, these are 349 and 1300 images, respectively, from the forward camera of ADS. Each image contains one or more vehicles, and the number of vehicles in the data sets is 2736 and 5644, respectively. The ground truth labels are given by enclosing each vehicle in a two-dimensional bounding box.

Table 1: Test Data Details.

| Name | Number of images | Number of vehicles |
|---|---|---|
| $Td_1$ | 349 | 2736 |
| $Td_2$ | 1300 | 5644 |

Next, two object detection systems to be tested are prepared as $SUT_1$ and $SUT_2$, respectively. Both object detection algorithms used in these systems are based on YOLOv3 (Redmon and Farhadi, 2018), and the DNN network used is darknet53. In our study, we prepared the two object detection systems from publicly available weight files, without training them ourselves: yolov3-kitti.weights (https://drive.google.com/file/d/1BRJDDCMRXdQdQs6-x-3PmlzcEuT9wxJV/view) for $SUT_1$ and yolov3.weights (https://github.com/patrick013/Object-Detection---Yolov3/blob/master/model/yolov3.weights) for $SUT_2$.

In addition, we prepared four simple specifications written in BBSL, $S_1$, $S_2$, $S_3$ and $S_4$, on which to base our tests. $S_1$ is Listing 1, already described above as an example, which defines the cases in which ADS should or should not stop depending on the distance of the vehicle in front. $S_2$ is shown in Listing 2. This specification defines the cases in which ADS should and should not stop depending on whether the target vehicle encroaches into the linear distance of the own vehicle or not.

Listing 2: Specifications of ADS response to the x-axis position of the vehicle.

    exfunction
      //Judge the existence of the vehicle. True if it exists.
      VehicleExists():bool
      //Calculate the bounding box that surrounds the vehicle.
      Vehicle():bb
      //Calculate the interval that represents the range to be stopped.
      directionAreaDistance():interval
    endexfunction

    precondition
      [VehicleExists() = true]
    endprecondition

    case stop
      let Vehicle : bb = Vehicle(),
          directionAreaDistance : interval = directionAreaDistance() in
        PROJ_x(Vehicle) ≈ directionAreaDistance
    endcase

    case NOT stop
      let Vehicle : bb = Vehicle(),
          directionAreaDistance : interval = directionAreaDistance() in
        not(PROJ_x(Vehicle) ≈ directionAreaDistance)
    endcase

$S_3$ is shown in Listing 3. This specification combines $S_1$ and $S_2$, and specifies a case of Stop if the distance between vehicles is close and the vehicle has entered the travel direction, and a case of Not Stop otherwise.

Listing 3: Specifications with two cases combining $S_1$ and $S_2$.

    exfunction
      vehicleExists():bool
      vehicle():bb
      directionAreaDistance():interval
      stoppingDistance():interval
    endexfunction

    precondition
      [vehicleExists() = true]
    endprecondition

    case stop
      let vehicle : bb = vehicle(),
          directionAreaDistance : interval = directionAreaDistance(),
          stoppingDistance : interval = stoppingDistance() in
        PROJ_x(vehicle) ≈ directionAreaDistance
        and PROJ_y(vehicle) ≈ stoppingDistance
    endcase

    case NOT stop
      let vehicle : bb = vehicle(),
          directionAreaDistance : interval = directionAreaDistance() in
        not(PROJ_x(vehicle) ≈ directionAreaDistance) or
        not(PROJ_y(vehicle) ≈ stoppingDistance)
    endcase

Finally, $S_4$ is shown in Listing 4. Like $S_3$, this specification is a combination of $S_1$ and $S_2$. The condition of x_ystop is the same as that of Stop in $S_3$, but the condition of Not Stop is divided in more detail into ysafe_xwarning, xsafe_ywarning and NOT warning, depending on the relationship between the y and x coordinates on the image.

Listing 4: Specifications with four cases combining $S_1$ and $S_2$.

    exfunction
      vehicleExists():bool
      vehicle():bb
      directionAreaDistance():interval
      stoppingDistance():interval
    endexfunction

    precondition
      [vehicleExists() = true]
    endprecondition

    case x_ystop
      let vehicle : bb = vehicle(),
          directionAreaDistance : interval = directionAreaDistance(),
          stoppingDistance : interval = stoppingDistance() in
        PROJ_x(vehicle) ≈ directionAreaDistance
        and PROJ_y(vehicle) ≈ stoppingDistance
    endcase

    case ysafe_xwarning
      let directionAreaDistance : interval = directionAreaDistance(),
          stoppingDistance : interval = stoppingDistance() in
        PROJ_x(vehicle) ≈ directionAreaDistance
        and not(PROJ_y(vehicle) ≈ stoppingDistance)
    endcase

    case xsafe_ywarning
      let directionAreaDistance : interval = directionAreaDistance(),
          stoppingDistance : interval = stoppingDistance() in
        not(PROJ_x(vehicle) ≈ directionAreaDistance)
        and PROJ_y(vehicle) ≈ stoppingDistance
    endcase

    case NOT warning
      let vehicle : bb = vehicle(),
          directionAreaDistance : interval = directionAreaDistance() in
        not(PROJ_x(vehicle) ≈ directionAreaDistance) and
        not(PROJ_y(vehicle) ≈ stoppingDistance)
    endcase
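Since the four cases of Listing 4 are the four combinations of two overlap tests, the case of a bounding box can be read off from two booleans. The following is a minimal sketch, not the implementation used in the experiment: the function and type names are our own, the two constants are the values reported for stoppingDistance() and directionAreaDistance() in Table 2, and the example boxes are hypothetical.

```python
from typing import NamedTuple


class Interval(NamedTuple):
    lo: float
    hi: float


class BoundingBox(NamedTuple):
    x: Interval
    y: Interval


def overlaps(a: Interval, b: Interval) -> bool:
    """Definition 6: a ≈ b."""
    return b.lo <= a.hi and a.lo <= b.hi


STOPPING_DISTANCE = Interval(275, 375)         # sD() as given in Table 2
DIRECTION_AREA_DISTANCE = Interval(420, 821)   # dA() as given in Table 2


def s4_case(vehicle: BoundingBox) -> str:
    """Return the name of the single case of Listing 4 that applies."""
    in_x = overlaps(vehicle.x, DIRECTION_AREA_DISTANCE)
    in_y = overlaps(vehicle.y, STOPPING_DISTANCE)
    if in_x and in_y:
        return "x_ystop"
    if in_x:
        return "ysafe_xwarning"
    if in_y:
        return "xsafe_ywarning"
    return "NOT warning"


# Hypothetical vehicle boxes in a 1242 x 375 image.
examples = [
    BoundingBox(Interval(500, 700), Interval(200, 300)),
    BoundingBox(Interval(500, 600), Interval(170, 230)),
    BoundingBox(Interval(0, 200), Interval(280, 370)),
    BoundingBox(Interval(100, 300), Interval(150, 220)),
]
for box in examples:
    print(box, s4_case(box))
```

Because every box satisfies exactly one of the four boolean combinations, this reading of the specification is exhaustive and exclusionary by construction.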

Each of these specifications is interpreted by implementing the conditions in Python using BBSL semantics. Since all of the specifications described here state conditions for a single vehicle object, the test is interpreted for one vehicle at a time in each image that contains multiple vehicles. Therefore, the number of test cases is 2736 and 5644, which is the number of vehicle objects in $Td_1$ and $Td_2$, respectively.

The implementation of each exfunction was given as a constant. The values are coordinates with the upper left corner as 0 in all images (size is 1242 × 375), and they are shown in Table 2. In Table 2, sD() in the Given Exfunctions column stands for stoppingDistance() and dA() for directionAreaDistance().

Table 2: Details of all tests.

| Test ID | S | Given Exfunctions | Td | SUT |
|---|---|---|---|---|
| ID1 | $S_1$ | sD() = [275, 375] | $Td_1$ | $SUT_1$ |
| ID2 | $S_1$ | sD() = [275, 375] | $Td_1$ | $SUT_2$ |
| ID3 | $S_1$ | sD() = [250, 375] | $Td_1$ | $SUT_1$ |
| ID4 | $S_1$ | sD() = [300, 375] | $Td_1$ | $SUT_1$ |
| ID5 | $S_2$ | dA() = [420, 821] | $Td_1$ | $SUT_1$ |
| ID6 | $S_3$ | sD() = [275, 375], dA() = [420, 821] | $Td_1$ | $SUT_1$ |
| ID7 | $S_4$ | sD() = [275, 375], dA() = [420, 821] | $Td_1$ | $SUT_1$ |
| ID8 | $S_3$ | sD() = [275, 375], dA() = [420, 821] | $Td_1$ | $SUT_2$ |
| ID9 | $S_4$ | sD() = [275, 375], dA() = [420, 821] | $Td_1$ | $SUT_2$ |
| ID10 | $S_1$ | sD() = [275, 375] | $Td_2$ | $SUT_1$ |
| ID11 | $S_2$ | dA() = [420, 821] | $Td_2$ | $SUT_1$ |

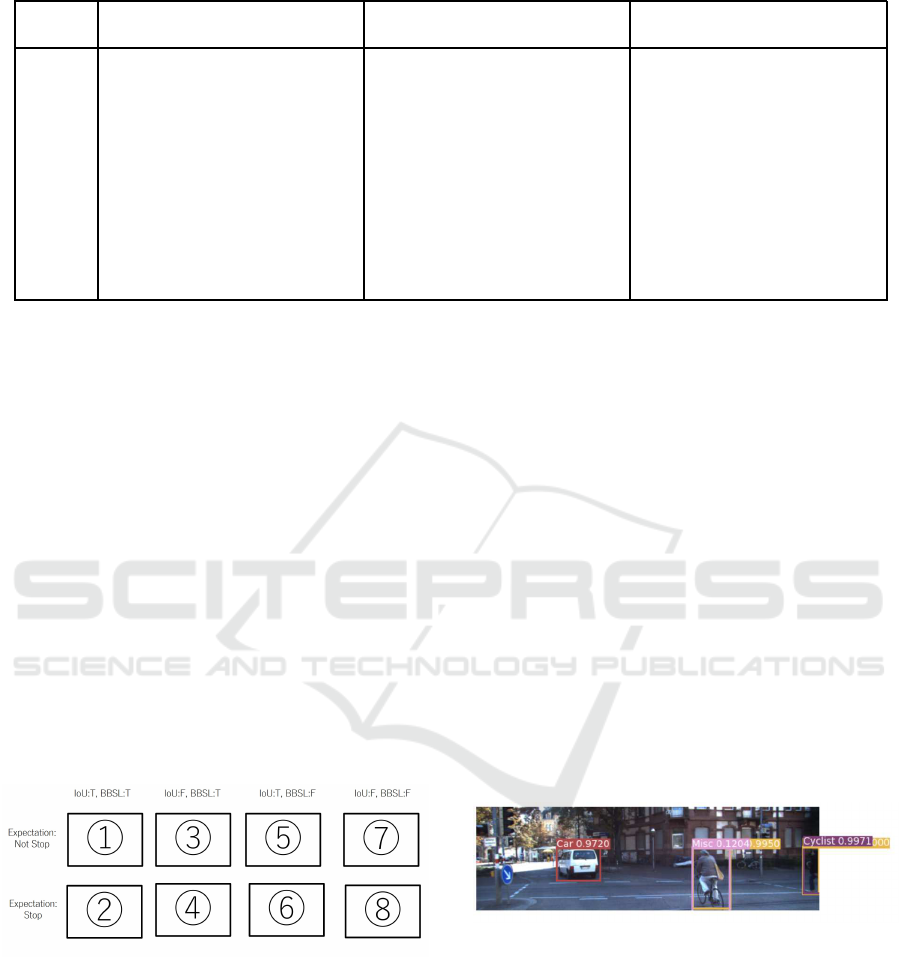

5.2 Evaluation

The ju dgment results of the propo sed test and the

judgment results with IoU

0.6

and IoU

0.8

on 11 differ-

ent tests are shown in Table 3. It can be seen that,

unlike the IoU ca lc ulated only from the test data an d

SUT, the proposed meth od changes its judgment de-

pending on the given specification. In addition, even

though the test data sets T d

1

and T d

2

were not pre-

pared artificially, it is clear that the re is a large dis-

crepancy between the I oU test an d th e proposed test.

Furthermore, for test ID1, we measured the judg-

ment result in the case of IoU

0.6

and the judgment

ENASE 2023 - 18th International Conference on Evaluation of Novel Approaches to Software Engineering

256

Table 3: Tests results.

Test ID

IoU

0.6

(T /T + F)

IoU

0.8

(T /T + F)

suggestion test

(T /T + F)

ID1 2180/(2180 + 556) = 79.7% 1432/(1432 + 1304) = 52.3% 2527/(2527 + 209) = 92.4%

ID2 1221/(1221 + 1515) = 44.6% 791/(791 + 1945) = 28.9% 1929/(1929 + 807) = 70.5%

ID3 2180/(2180 + 556) = 79.7% 1432/(1432 + 1304) = 52.3% 2500/(2500 + 236) = 91.4%

ID4 2180/(2180 + 556) = 79.7% 1432/(1432 + 1304) = 52.3% 2524/(2524 + 212) = 92.3%

ID5 2180/(2180 + 556) = 79.7% 1432/(1432 + 1304) = 52.3% 2591/(2591 + 145) = 94.7%

ID6 2180/(2180 + 556) = 79.7% 1432/(1432 + 1304) = 52.3% 2604/(2604 + 132) = 95.2%

ID7 2180/(2180 + 556) = 79.7% 1432/(1432 + 1304) = 52.3% 2502/(2502 + 234) = 91.4%

ID8 1221/(1221 + 1515) = 44.6% 791/(791 + 1945) = 28.9% 2065/(2065 + 671) = 75.5%

ID9 1221/(1221 + 1515) = 44.6% 791/(791 + 1945) = 28.9% 1867/(1867 + 869) = 68.2%

ID10 4842/(4842 + 802) = 85.8% 3182/(3182 + 2462) = 56.4% 5299/(5299 + 345) = 93.9%

ID11 4842/(4842 + 802) = 85.8% 3182/(3182 + 2462) = 56.4% 5314/(5314 + 330) = 94.2%

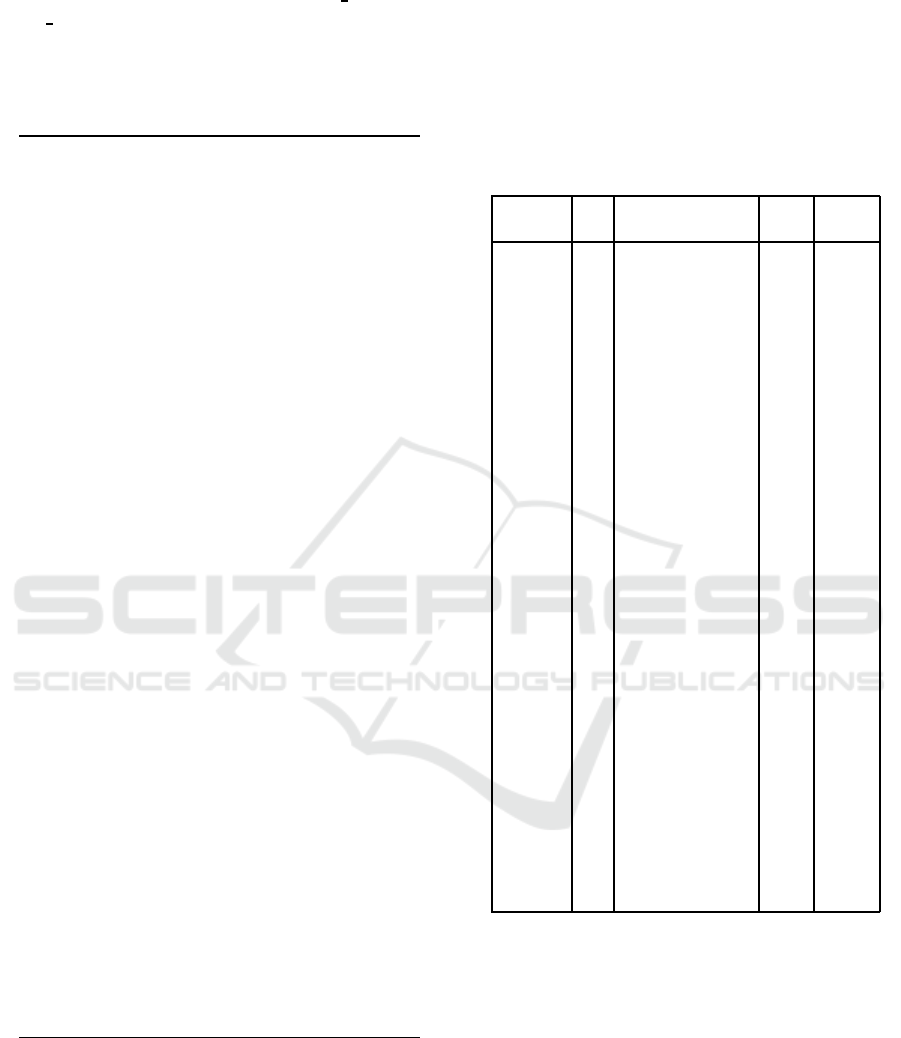

result in the case of the proposed test for each case

of expected value as shown in Figure 8. The aggre-

gate re sults are shown in Ta ble 4. The numbers to

the r ight of

1

to

8

shown in Table 4 are the number

of applicable test cases, corresp onding to

1

to

8

in

Figure 8, respectively. In Ta ble 4 and Figure 8, BBSL

refers to the proposed test, and expe ctation is c in this

test case (td,c). As can be seen fr om the results, al-

though the number of test cases is biased, th ere are

test cases that corre spond to all of them. In particular,

the existence of test cases corresponding to

3

,

4

,

5

,

and

6

indicates that there are cases in which the pro-

posed meth od makes decisio ns that differ fr om those

of IoU. In additio n, the existence of test cases corre-

sponding to

6

and

8

indicates that the proposed test

detects malfunctions such as not being able to stop

when ADS sould be stop based on the specification.

The above ind icates that th e proposed method detects

the test cases where the IoU is inadequate if these data

are valid.

Figure 8: How to classify test cases in Test ID1.
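The classification into (1) to (8) and the aggregation in Table 4 can be sketched as follows. This is a minimal illustration, not the implementation used in the experiments: the names `CLASS_OF`, `classify`, and `pass_rate` are hypothetical, and only the counts are taken from Table 4. Summing the classes judged T by each test gives 2180/2736 for IoU with threshold 0.6 and 2527/2736 for the proposed test, which agrees with the ID1 row of Table 3.

```python
from collections import Counter

# Class labels (1)-(8) as laid out in Table 4, keyed by
# (expected value, IoU_0.6 verdict, proposed-test verdict).
CLASS_OF = {
    ("Not stop", True, True): 1,
    ("Stop", True, True): 2,
    ("Not stop", False, True): 3,
    ("Stop", False, True): 4,
    ("Not stop", True, False): 5,
    ("Stop", True, False): 6,
    ("Not stop", False, False): 7,
    ("Stop", False, False): 8,
}


def classify(expectation, iou_ok, bbsl_ok):
    """Return the class number (1)-(8) for one test case."""
    return CLASS_OF[(expectation, iou_ok, bbsl_ok)]


# Number of test cases per class for test ID1, copied from Table 4.
TABLE4 = Counter({1: 1744, 2: 404, 3: 345, 4: 34, 5: 4, 6: 28, 7: 74, 8: 103})


def pass_rate(counts, verdict_index):
    """Share of test cases judged T by one test.

    verdict_index selects the verdict inside the key:
    1 = IoU_0.6, 2 = proposed (BBSL) test.
    """
    passed = sum(counts[cls] for key, cls in CLASS_OF.items() if key[verdict_index])
    return passed / sum(counts.values())


iou_rate = pass_rate(TABLE4, 1)
bbsl_rate = pass_rate(TABLE4, 2)
# Classes (3)-(6) are the ones where the two tests disagree.
disagreements = sum(TABLE4[c] for c in (3, 4, 5, 6))
print(f"IoU_0.6: {iou_rate:.1%}, proposed test: {bbsl_rate:.1%}, "
      f"disagreements: {disagreements}")
```

Keeping the eight classes in a single lookup table makes the correspondence with Table 4 explicit, so the same table drives both per-case classification and the aggregate rates.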

6 DISCUSSION

As shown in Table 3, the proposed test can be performed on various test data sets as long as their ground truth data are two-dimensional bounding boxes. Since many test data sets for object detection systems have two-dimensional bounding boxes as ground truth data, the proposed test can be performed on many existing data sets. In addition, the proposed method determines the tolerance for misalignment using an algorithm that is clearly different from IoU, in that it determines whether a test case is acceptable or not based on the specification written in BBSL.

Furthermore, we focus on each of the data in Ta-

ble 4 and d iscuss its effectiveness based on each ex-

ample. First, we discussed test case to

1

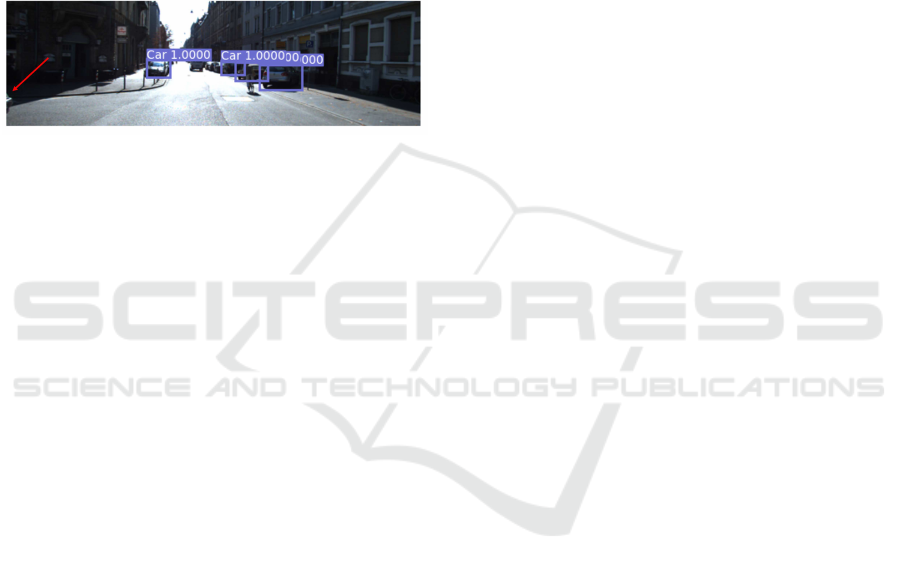

on Figure 9,

in other words, a case where the expected value is Not

Stop in the specification, and where both the proposed

test and IoU

0.6

are judged as T. The white vehicle in

Figure 9 is the re levant test case, and the bounding

box of the inferred r e sult is indicated. Since this white

vehicle has a sufficient distance from its own vehicle,

the expe cted value is ”Not Stop” based on th e specifi-

cation of Listing 1 . The bounding box in the inferred

result is determined to be T with IoU

0.6

because it is

detected with almost no deviation. In such a case,

there is clearly no defect in the specification of ADS,

and the proposed test is judged to be T, which is rea-

sonable.

Figure 9: Example of a test case corresponding to (1) in Figure 8.

Next, we discuss the test case classified as (2), in other words, a case where the expected value is Stop in the specification. Figure 10 is an example where the proposed test and IoU_0.6 both determine T. The red vehicle in Figure 10 is the relevant test case, and the bounding box of the inferred result is shown. Since this vehicle is very close to the own vehicle, the expected value is Stop based on the specification of Listing 1. The bounding box in the inferred result has some visible deviation, but because the vehicle is a large object in the image, the IoU is calculated to be high, and it is judged to be T with IoU_0.6. Since the misalignment is in the lateral direction and the distance recognition is correct, there is no defect with respect to the specification of the ADS, and the proposed test also judges T, which is reasonable.

Table 4: Test case classification results for test ID1.

| expectation | IoU_0.6: T, BBSL: T | IoU_0.6: F, BBSL: T | IoU_0.6: T, BBSL: F | IoU_0.6: F, BBSL: F |
|---|---|---|---|---|
| Not stop | (1) 1744 | (3) 345 | (5) 4 | (7) 74 |
| Stop | (2) 404 | (4) 34 | (6) 28 | (8) 103 |

Figure 10: Example of a test case corresponding to (2) in Figure 8.

Next, we discuss the test case classified as (3), in other words, a case where the expected value is Not stop in the specification, which is judged as T by the proposed test and F by the IoU_0.6 test. The vehicle surrounded by the red bounding box of the ground-truth label, hidden by a white vehicle and a building in the upper image of Figure 11, is the corresponding test case, and the bounding box of the inferred result is shown in the lower image. Since this vehicle is sufficiently far from the own vehicle, the expected value is Not stop based on the specification of Listing 1. Since the object detection system under test cannot recognize the vehicle in the back and only detects the white car in the front, the IoU is very low and is determined to be F with IoU_0.6. However, if the white vehicle in the front can be correctly recognized and judged to have a sufficient distance, there is no defect with respect to the specification of the ADS, and the proposed test judges T, which is reasonable.

Figure 11: Example of a test case corresponding to (3) in Figure 8.

Next, we discuss the test case classified as (4), in other words, a case where the expected value is Stop in the specification, which is judged as T by the proposed test and F by the IoU_0.6 test. The black vehicle surrounded by a red bounding box in the image in Figure 12 is the relevant test case, and the inferred result is indicated by the purple bounding box. Since this vehicle is very close to the own vehicle, the expected value is Stop based on the specification of Listing 1. Since only part of the vehicle is shown in the image, the object detection system recognizes the vehicle as smaller than the actual size indicated by the red bounding box, and the IoU is very low and is determined to be F at IoU_0.6. However, most of the discrepancy is in the height of the vehicle, and there is almost no discrepancy in the recognized distance from the own vehicle. Therefore, there is no defect with respect to the specification of the ADS, and the proposed test determines T, which is reasonable.

Figure 12: Example of a test case corresponding to (4) in Figure 8.

Next, we discuss the test case classified as (5), in other words, a case where the expected value is Not stop in the specification and the proposed test determines F while IoU_0.6 determines T. The red vehicle in the image in Figure 13 is the corresponding test case, and the inferred result is indicated by the purple bounding box. Since this vehicle is far away from the own vehicle, the expected value is Not stop based on the specification of Listing 1. Since this inferred result shows a slight downward misalignment but almost no upward or lateral misalignment, the IoU is not low and is determined to be T at IoU_0.6. However, because of the downward misalignment, the vehicle may stop in a situation where it is not necessary to stop, owing to the error in the recognized distance between the vehicles. Therefore, this is a fault with respect to the specification that reduces the reliability of the ADS, and the proposed test judges it as F, which is reasonable.

Unlike judging by IoU, the proposed test can reflect the direction of misalignment in the judgment criteria by using the specification written in BBSL. Therefore, as with the red vehicle in Figure 10, even if the degree of misalignment of the inferred result is large, it can be judged to be T on the specification basis. Conversely, even when the degree of misalignment of the inferred result is small, as in the case of the vehicle in Figure 13, it can be determined to be F on the specification basis. This is an important feature of the proposed test.

Figure 13: Example of a test case corresponding to (5) in Figure 8.
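The contrast between IoU and a direction-sensitive, specification-based judgment can be illustrated with a small sketch. Listing 1 is not reproduced here, so the decision rule below is an assumption made only for illustration: a vehicle is treated as requiring a stop once the bottom edge of its bounding box reaches a hypothetical image row `STOP_ROW`. The box coordinates are likewise invented. Under these assumptions, a large lateral shift of a nearby vehicle fails IoU with threshold 0.6 but keeps the same decision, while a small downward shift of a distant vehicle passes IoU but flips the decision.

```python
from typing import NamedTuple, Tuple


class Box(NamedTuple):
    """Axis-aligned bounding box in image coordinates (y grows downward)."""
    left: float
    top: float
    right: float
    bottom: float


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two boxes."""
    w = max(0.0, min(a.right, b.right) - max(a.left, b.left))
    h = max(0.0, min(a.bottom, b.bottom) - max(a.top, b.top))
    inter = w * h
    area_a = (a.right - a.left) * (a.bottom - a.top)
    area_b = (b.right - b.left) * (b.bottom - b.top)
    return inter / (area_a + area_b - inter)


# Hypothetical stand-in for the stop condition of Listing 1 (an assumption):
# the lower the bottom edge is in the image, the closer the vehicle.
STOP_ROW = 300.0


def decision(box: Box) -> str:
    return "Stop" if box.bottom >= STOP_ROW else "Not stop"


def judge(truth: Box, inferred: Box) -> Tuple[bool, bool]:
    """Return (IoU verdict at threshold 0.6, specification-based verdict)."""
    return iou(truth, inferred) >= 0.6, decision(truth) == decision(inferred)


# Nearby vehicle, inference shifted sideways by 100 pixels.
near_truth = Box(100, 200, 400, 400)
near_shifted = Box(200, 200, 500, 400)

# Distant vehicle, inference shifted downward by 6 pixels across STOP_ROW.
far_truth = Box(300, 250, 360, 295)
far_shifted = Box(300, 256, 360, 301)

print(judge(near_truth, near_shifted))  # IoU fails, decision unchanged
print(judge(far_truth, far_shifted))    # IoU passes, decision flipped
```

The point of the sketch is only that the two verdicts are computed from different quantities: IoU from overlapping area, and the specification-based verdict from the decision that each box produces.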

Next, we discuss the test case classified as (6), in other words, a case where the expected value is Stop in the specification and the proposed test determines F while IoU_0.6 determines T. The vehicle surrounded by the red bounding box in the upper image of Figure 14 is the corresponding test case, and the bounding box of the detection result is shown in the lower image. Since this vehicle is close to the own vehicle, the expected value is Stop based on the specification of Listing 1. The bounding box in the inferred result is determined to be T with IoU_0.6 because it is detected with almost no deviation. However, since the subtle misalignment in the distance between the vehicles crosses the boundary of the stop condition in the specification, it is a defect with respect to the specification that reduces the safety of the ADS, and it is judged as F by the proposed test, which is reasonable. Thus, the proposed test strictly determines T or F for test cases around the boundary of a case condition described in the specification. Since the boundary of a case condition in the specification is a part that should be functionally tested especially carefully, it is an important feature of the proposed test that this part is rigorously tested.

Figure 14: Example of a test case corresponding to (6) in Figure 8.

Next, we discuss the test case classified as (7), in other words, a case where the expected value is Not stop in the specification and where the proposed test and IoU_0.6 both judge F. The red vehicle partially hidden by a building in the image in Figure 15 is the relevant test case. This vehicle is sufficiently far from the own vehicle, so the expected value is Not stop based on the specification of Listing 1. Since the object detection system under test cannot recognize this red vehicle at all and there is no overlap with the inferred result of the white vehicle in front of it, the IoU is 0 and IoU_0.6 is determined to be F. This is an example where the ground truth data satisfy Not stop under Listing 1 because the vehicle is sufficiently far away, while the inference result does not satisfy the conditions of the precondition block of Listing 1.

Figure 15: Example of a test case corresponding to (7) in Figure 8.

Finally, we discuss the test case classified as (8), shown in Figure 16, in other words, a case where the expected value is Stop in the specification and where both the proposed test and IoU_0.6 determine F. The vehicle on the far left in Figure 16 is the relevant test case, and since this vehicle is very close to the own vehicle, the expected value is Stop based on the specification of Listing 1. Since the object detection system under test does not recognize this vehicle at all, the IoU is 0 and IoU_0.6 is determined to be F. This is an example of a situation in which the specification of Listing 1 yields Stop for the ground truth data, but the car does not exist in the inferred result, so the condition of the precondition block of the Listing 1 specification is no longer satisfied.

The examples shown in Figures 15 and 16 are both cases where the inferred results fall outside the scope of the specification. In the first example, the white vehicle in front of the vehicle is correctly recognized and judged to have a sufficient distance, so there is no defect, and it would be reasonable to judge the case as T in the proposed test. However, in the second example, the system is not able to detect a vehicle at a position where it should stop because of the short distance between the own vehicle and the vehicle, and this is a defect that reduces the safety of the ADS. Thus, there are cases in which the inferred result is outside the scope of the specification even if the image is subject to the specification and the expected value can be defined, and it was not possible to clarify how to give an accurate judgment to these cases. Therefore, the proposed test gives priority to safety and defines both cases to be judged as F.

Figure 16: Example of a test case corresponding to (8) in Figure 8.
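The safety-first treatment of inferred results that fall outside the scope of the specification can be sketched as below. This is an assumption-laden illustration rather than the implementation used in the experiments: the function `spec_verdict`, the way a missing detection is represented (`None`), and the decision rule `decide` with its threshold of 300 are all hypothetical, and how detections are matched to ground-truth objects is left out.

```python
from typing import Callable, Optional, Tuple

# (left, top, right, bottom) in image coordinates, y grows downward.
Box = Tuple[float, float, float, float]


def spec_verdict(expected: str,
                 inferred: Optional[Box],
                 decide: Callable[[Box], str]) -> bool:
    """Judge one test case (td, c) on the specification basis.

    expected: c, the value the specification yields for the ground truth.
    inferred: the detection for the ground-truth object, or None when the
              detector produced no such box.
    decide:   maps a box to "Stop" or "Not stop".
    """
    if inferred is None:
        # The inferred result is outside the scope of the specification,
        # so the case is judged F whatever the expected value is.
        return False
    return decide(inferred) == expected


# Hypothetical decision rule standing in for Listing 1 (an assumption).
def decide(box: Box) -> str:
    return "Stop" if box[3] >= 300.0 else "Not stop"


# Missing detection: F for both expected values, as in classes (7) and (8).
missed_far = spec_verdict("Not stop", None, decide)
missed_near = spec_verdict("Stop", None, decide)
# Detection present and consistent with the expected value: T.
detected_near = spec_verdict("Stop", (100.0, 200.0, 400.0, 400.0), decide)
print(missed_far, missed_near, detected_near)
```

Returning F before the decision rule is consulted keeps the conservative choice in one place, which would make it easy to revisit if a more accurate judgment for such cases is defined later.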

Based on the above discussion, the proposed test returns a valid decision result as a test of safety and reliability based on the specification. This is clearly different from test methods used to evaluate the performance of object detection systems, such as IoU. Furthermore, it is an important test when incorporating an object detection system into a large piece of software that requires high reliability and high safety, such as an ADS.

7 CONCLUSIONS

By using the proposed test method, the object detection system of an ADS can be tested based on the specifications. Since the test is based on the degree to which the object detection system under test meets the specification when it is incorporated into an ADS with the relevant specification, the test is able to detect defects that impair safety or reliability and that are not detected by conventional testing methods. For these reasons, our test is an important and innovative test for incorporating object detection systems into complex and safety-critical software such as ADS.

Finally, we describe three directions for future work. The first is to formally verify specifications written in BBSL using theorem proving. Since BBSL has not yet been formalized in a theorem proving system and no parser has been prepared, the tests in this study were programmed in Python so that the implementation would be equivalent to the specification used in the experiments. This work is important for testing in larger, more realistic environments and will contribute to the development of real-time monitoring tools for object detection systems. The second is to extend specification-based testing to more complex ADS specifications described in BBSL. The tests in our study used only a simple specification for the relationship between a single object in the image and the own vehicle. However, the descriptive capability of BBSL discussed in this paper is only part of the picture, and in practice it can describe the positional relationships of multiple objects and objects of complex shapes. We think that extending the tests to handle these specifications will contribute to the development of even safer ADS. The third is to propose and evaluate coverage that correlates with the quality of the specification-based tests proposed in our study. It is not known how many and what kind of test cases are needed for the specification-based test proposed in our study to be sufficient. To increase the utility of this test, we believe it is necessary to propose validity indices for the test, for example, coverage of positions in the image and coverage of the conditions of the specification written in BBSL.
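To make the two example indices concrete, the following sketch shows one possible reading of them. It is not a metric proposed or evaluated in this study: the functions `position_coverage` and `condition_coverage`, the grid partition of the image, and the sample values are all hypothetical. Position coverage is taken here as the share of grid cells that contain at least one ground-truth box center, and condition coverage as the share of specification outcomes that appear as an expected value in the test set.

```python
from typing import Iterable, Tuple


def position_coverage(centers: Iterable[Tuple[float, float]],
                      width: float, height: float,
                      cols: int, rows: int) -> float:
    """Share of grid cells containing at least one box center."""
    hit = set()
    for x, y in centers:
        # Clamp so that points on the right or bottom border stay in the grid.
        col = min(int(x * cols / width), cols - 1)
        row = min(int(y * rows / height), rows - 1)
        hit.add((col, row))
    return len(hit) / (cols * rows)


def condition_coverage(expected_values: Iterable[str],
                       conditions: Iterable[str]) -> float:
    """Share of specification outcomes exercised by the test cases."""
    all_conditions = set(conditions)
    return len(set(expected_values) & all_conditions) / len(all_conditions)


# Four hypothetical box centers in a 640x480 image, on a 4x3 grid.
centers = [(10, 10), (630, 470), (320, 240), (15, 12)]
pos_cov = position_coverage(centers, 640, 480, cols=4, rows=3)

# A test set whose expected values never include "Not stop".
cond_cov = condition_coverage(["Stop", "Stop"], ["Stop", "Not stop"])
print(pos_cov, cond_cov)
```

Whether indices of this kind correlate with the quality of the tests is exactly the open question stated above, so the sketch should be read only as a starting point for such an evaluation.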

REFERENCES

Balakrishnan, A., Deshmukh, J., Hoxha, B., Yamaguchi, T., and Fainekos, G. (2021). Percemon: Online monitoring for perception systems. CoRR, abs/2108.08289.

Chen, T. Y., Cheung, S. C., and Yiu, S. (2020). Metamorphic testing: A new approach for generating next test cases. CoRR, abs/2002.12543.

Committee, O.-R. A. D. O. (2021). Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles.

Devi, S., Malarvezhi, P., Dayana, R., and Vadivukkarasi, K. (2020). A comprehensive survey on autonomous driving cars: A perspective view. Wirel. Pers. Commun., 114(3):2121–2133.

Dokhanchi, A., Amor, H. B., Deshmukh, J. V., and Fainekos, G. (2018). Evaluating perception systems for autonomous vehicles using quality temporal logic. In Colombo, C. and Leucker, M., editors, Runtime Verification, pages 409–416, Cham. Springer International Publishing.

Everingham, M. and Winn, J. (2012). The pascal visual object classes challenge 2012 (voc2012) development kit. Pattern Anal. Stat. Model. Comput. Learn., Tech. Rep, 2007:1–45.

Geiger, A., Lenz, P., Stiller, C., and Urtasun, R. (2013). Vision meets robotics: The kitti dataset. The International Journal of Robotics Research, 32(11):1231–1237.

Kondermann, D., Nair, R., Honauer, K., Krispin, K., Andrulis, J., Brock, A., Güssefeld, B., Rahimimoghaddam, M., Hofmann, S., Brenner, C., and Jähne, B. (2016). The hci benchmark suite: Stereo and flow ground truth with uncertainties for urban autonomous driving. In 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pages 19–28.

Moore, R. E., Kearfott, R. B., and Cloud, M. J. (2009). Introduction to Interval Analysis. Society for Industrial and Applied Mathematics.

Ramanagopal, M. S., Anderson, C., Vasudevan, R., and Johnson-Roberson, M. (2018). Failing to learn: Autonomously identifying perception failures for self-driving cars. IEEE Robotics and Automation Letters, 3(4):3860–3867.

Redmon, J. and Farhadi, A. (2018). Yolov3: An incremental improvement.

Shao, J. (2021). Testing object detection for autonomous driving systems via 3d reconstruction. In 2021 IEEE/ACM 43rd International Conference on Software Engineering: Companion Proceedings (ICSE-Companion), pages 117–119.

Sun, P., Kretzschmar, H., Dotiwalla, X., Chouard, A., Patnaik, V., Tsui, P., Guo, J., Zhou, Y., Chai, Y., Caine, B., Vasudevan, V., Han, W., Ngiam, J., Zhao, H., Timofeev, A., Ettinger, S., Krivokon, M., Gao, A., Joshi, A., Zhang, Y., Shlens, J., Chen, Z., and Anguelov, D. (2019). Scalability in perception for autonomous driving: Waymo open dataset. CoRR, abs/1912.04838.

Tanaka, K., Aoki, T., Kawai, T., Tomita, T., Kawakami, D., and Chida, N. (2022). A formal specification language based on positional relationship between objects in automated driving systems. In 2022 IEEE 46th Annual Computers, Software, and Applications Conference (COMPSAC), pages 950–955.

Thorn, E., Kimmel, S. C., and Chaka, M. (2018). A framework for automated driving system testable cases and scenarios.

Zhou, Z. Q. and Sun, L. (2019). Metamorphic testing of driverless cars. Commun. ACM, 62(3):61–67.
