The Exploration of Small Sample-Oriented Object Detection

Technology in the Field of Electric Power

Yanjun Dong

1

, Shigeng Wang

2

, Xiaoyu Yin

1

, Xi Chen

1

and Jiao Peng

1

1

State Grid Hebei Electric Power Company Co., Ltd Information & Telecommunication Branch, Shijiazhuang, China

2

Beijing University of Posts and Telecommunications, Beijing, China

Keywords: Power Technology, Small Sample, Target Detection.

Abstract: In view of the difficulties, low efficiency and large amount of data in current grid patrol inspection, this

project plans to study a small sample patrol inspection method based on dual-core. Firstly, based on the

object recognition method of FASTERRCNN, a two-person network model of image and query image is

constructed. Then, the improved regional proposal network (RPN) module is used to generate a higher

quality proposal; finally, the regional boundary of the supporting image and the query image is matched to a

new regional boundary. The experiments show that the method can detect the“Bird's nest” and“Insulator” in

the power network with only 10 support maps in the EPD database established by ourselves, its detection

index mAP value can reach 18.92% . Compared with other algorithms, the detection model of small sample

based on binary star network proposed in this project has better performance and greater lightweight

advantage under the condition of small sample, it can provide reference for the research of new power

detection methods.

1

INTRODUCTION

Power Transmission Equipment in outdoor operation,

the long-term operation of the stability of higher

requirements, its outdoor operation will be affected

by many uncertain factors, or even damage it. The

failure of power grid, such as insulator damage,

voltage balance ring damage and fall off, bird

invasion and shock hammer damage, has brought

huge security hidden trouble to power grid operation

and security operation of power grid. With the rapid

development of UAV technology, UAV detection is

gradually replacing manual detection. The

unmanned aerial vehicle (UAV) has the advantages

of convenient carrying, quick response, easy

operation and large amount of image data collection.

With the development of electric power industry to

smart grid, the traditional mathematical model has

been difficult to adapt to the new requirements of

grid operation and maintenance. The introduction of

deep learning technology in power industry can

effectively solve this problem. In-depth learning

extracts Galway's failure features from low-level

data layer by layer, which can effectively

circumvent the artificial features' selective

preference for data information (Shenzhen., 2021).

Aiming at the fault diagnosis of synchronous motor,

a ReLU-DBN oil sample analysis method based on

the volatile gas in transformer oil sample is proposed

in this paper (Wen Haorapido, 2022), and the

method is improved. For transmission line fault,

double Softmax classifier is used in reference (Xu

Weili, 2022), and a method of fault identification

and phase selection for transmission line is proposed

based on CNN, thus the problem of non-independent

classification of internal and external fault

judgement and phase selection is solved.

2

TWINNED NETWORK MODEL

ALGORITHM FLOW

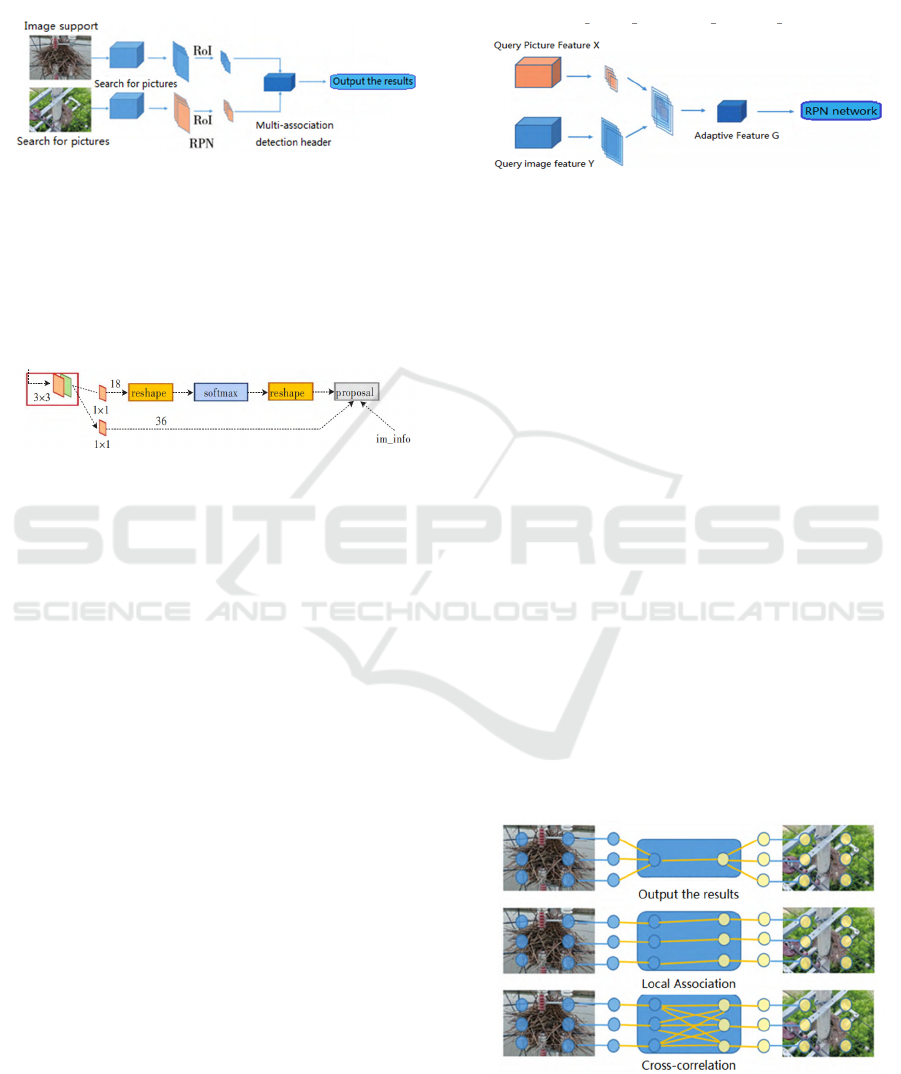

The entire process of the model is shown in Figure 1.

The image input is from the support image and the

query image input at the same time, they enter a

binary network with the same weight. The RPN

algorithm sifts for possible objects in the retrieval

image based on the relevant information of the

supporting image contained in the image (Yang

Xuejie, 2022), and then classifies and regresses the

position by the final detection head. Due to the

simplicity of the image, only 1 branch of the binary

network supporting the image is drawn. In practical

220

Dong, Y., Wang, S., Yin, X., Chen, X. and Peng, J.

The Exploration of Small Sample-Oriented Object Detection Technology in the Field of Electric Power.

DOI: 10.5220/0012278100003807

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 2nd International Seminar on Artificial Intelligence, Networking and Information Technology (ANIT 2023), pages 220-223

ISBN: 978-989-758-677-4

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

application, for N categories, each category has its

own branch, and the RPN in this branch is used to

screen the potential priority of the corresponding

classification.

Figure 1: Overall structure of

twinned

network model.

The input of the improved proposal generation

policy RPN is the output of the background, and the

output of the background is a set of predicted values,

each set of predicted values represents a set of

predicted values. Figure 2 shows the overall

structure of an RPN.

Figure 2: The Overall Structure of The RPN.

In theory, the function of RPN in object detection is

to generate a potential precursor for subsequent

classification and regression work. Ideally, when an

RPN generates a candidate object, it will be

measured by its support for the object contained in

the image, rather than simply pre-and post-binary

classification (that is, binary classification).

However, the current RPN used by FASTERRCNN

can only search blindly on the image and the feature

layer of the image, resulting in the image containing

objects that do not belong to the supporting set

image, which leads to the existence of a large

number of redundant objects in the image, thus,

more work is added to the following classification

and regression calculation. At the same time,

because the number of target classes in the support

set is much smaller than that in the actual scene, the

effective prefix takes up a smaller proportion in the

prefix generated by RPN, and the quality of the

result is poor, may have a greater impact on the

subsequent classification work. This is mainly due to

the RPN algorithm in the process of proposal

generation, did not make full use of the

characteristics of supporting the centralized image,

resulting in the generation of a large number of

unrelated proposals. On this basis, an RPN model

based on adaptive target classification is proposed.

In the pre-processing, the model focuses on the

target classification related to the support set, and

eliminates the irrelevant classes, thus, the number of

pre-processing is reduced and the workload of

subsequent processing is reduced. Figure 3

illustrates the principle of adaptive improvement.

Figure 3: Principle of adaptive improvement in RPN.

Detection head classification and localization

after RPN generation of a proposal, the probe is

generally required to perform a target class score on

the proposal and then classify it. This is the most

important step in a dual-core network. A good model

requires a single detector to distinguish between

different types of data in a small sample (Zhang

Ziqian, 2022). In the binocular network, the

supporting image and the queried image are input

into the feature extraction network. After generating

the image, the feature association and matching are

completed on the probe head. The multiple

correlation probes used are shown in Figure 4.

The algorithm includes three modules: global

correlation, local correlation and cross correlation.

Among them, the global association represents the

global feature matching of the support set and the

query set on the scene scale, and the local

association represents the one-to-one feature

matching of the support set and the query set on the

pixel level and the channel The cross-correlation

represents a one-to-many pixel-level matching

between the support and query scenarios, and is used

to solve the spatial mismatch between the support

and query scenarios.

Figure 4: Multi-association detection header.

The Exploration of Small Sample-Oriented Object Detection Technology in the Field of Electric Power

221

Loss function and model training process this

paper uses a dual-card

NVIDIAGEFORCERTX3060TIGPU hardware

environment, using Python 3.7, Pytorch1.9.0,

Torchvision0.10.0, CUDA11.1, Cudnn8.0.5.

The loss function differs from the image

classification of machine vision in that object

detection requires not only the classification of

objects, but also the regression of coordinates of

objects in rectangular position boxes. On this basis,

two parallel output layers are used to implement the

corresponding output variables. The output of the

first output layer is a discrete category possibility

confidence, where p=(p0,p1,…, pK) , corresponding

to the K categories, there are (K+1 ) outputs,

which include the confidence of the K categories

and the confidence that the proposal belongs to the

background. In this case, confidence p is the(K+1)

FC layer output of the (K+1) FC layer obtained by

the software maximum, which is the offset of the

destination position boundary box. For class K

targets, the deviation is t

=t

,t

,t

,t

, where t

does not refer to the absolute position coordinates of

the regression target boundary box, but to the

corresponding position coordinates generated by

RPN.

Each trained RoI (area boundary) has a true

classification marker u and a true locator box

regression vector g. For the object detection task

described above, we use the multi-task loss function,

with RoI as the unit, to train the classification marks

and the border regression:

L=L

+L

1

L

𝑚, 𝑢, 𝑝

, 𝑔

=L

𝑚, 𝑢

+ λ

u ≥ 1

L

p

,g

2

L

𝑚, 𝑢

= −log 𝑚

is the log loss for the

category label u.

For the regression loss of the boundary box, it

was defined as: the truth variable g=(g

x

, g

y

, g

w

, g

h

)

defining the target category U boundary box and the

predicted boundary box location variable p

=

(p

,p

,p

,p

)

𝑢≥1

=

1, 𝑢≥1

0, 𝑜𝑡ℎ𝑒𝑟𝑖𝑠𝑒

(

3

)

Where u is the result of the goal category

prediction, and U = 0 is the goal framed by the

proposal in the training sample that does not support

the goal category in the set, but rather the

background, that is, a deviation has occurred in the

first classification task, so the regression error here

is meaningless.

2.2 in the process of model training, the

commonly used indexes to measure the training

effect include Precision, Recall, AP and mAP. TP

was defined as a positive sample for correct

prediction, FN as a negative sample for false

prediction, FP as a positive sample for false

prediction, and the above assessment measures were

defined as:

Prcesion =

𝑇𝑃

𝑇𝑃 + 𝐹𝑃

#

(

4

)

Recall =

𝑇𝑃

𝑇𝑃 + 𝐹𝑁

#

(

5

)

AP =

∑

𝑝𝑖

𝑛

(

6

)

mAP =

∑

𝐴𝑃

𝑘

(

7

)

3

CONCLUSION

The learning process of twin networks is divided

into two stages. In the pre-training phase, we will

improve the model's ability of multi-class image

recognition by repeated training based on the

existing standard large sample. In this paper, it is

evaluated by using the existing mAP evaluation

criteria, and it is pre-trained to reach 24.0 in the

COCO data set. In the second step, the parameters of

neural network are adjusted according to EDP data.

The basic structure of ResNet is composed of four

layers. In the retraining stage, the weights of the first

two layers of ResNet are frozen, and the information

of EDP data set is utilized, the latter two layers and

the full-connection classification layer are adjusted

to migrate the dataset.

The improved RPN algorithm is used to

recognize the small sample of twin networks. In this

method, the features of the supporting image set are

extracted by dual-core network, and the object-

related features are generated. There is still a big gap

between the twin-sub-network small sample model

proposed in this project and the existing large data

object detection technology. In the future, we will

further explore how to eliminate the interference of

complex environment, explore new model

evaluation methods, and how to make up the

difference between training samples and test

samples.

ANIT 2023 - The International Seminar on Artificial Intelligence, Networking and Information Technology

222

ACKNOWLEDGEMENTS

This work was supported by the National Key

Research and Development Program of China under

Grant 2020AAA0107500.

REFERENCES

Shenzhen. Research on power inspection based on small

sample machine learning [J]. Microcontroller and

embedded system applications, 2021, 21(8) : 4.

Wen Hao, Zhang Guozhi, Xiao Li, etc. Study on

transformer PD planar localization based on small

sample UHF signal [J]. Transformers, 2022, 59(4) : 6.

Xu Weili, Yuan Hegang and Kai Dong Yue, Mai Xiaoqing.

Small sample generation based on antagonism neural

network [J]. Electronic testing, 2022, 36(18) : 64-65.

Yang Xuejie, Song Kai, Cao Fuyong, etc. . Research on

the application of front-end target detection technology

in electric power patrol inspection [J]. Shandong

Electric Power Technology, 2022, 49(1) : 6.

Zhang Ziqian, Xiong Zaili, Zhang Biao, etc. Small target

detection in remote sensing image based on super-

resolution reconstruction technology[J]. Journal of

Northeast Electric Power University, 2022(002): 042.

Qinzhen Hu, Hongtao Su, Shenghua Zhou, Ziwei Liu,

Yang Yang, Jun Liu. Two-stage constant false alarm

rate detection for distributed multiple-input–multiple-

output radar[J]. IET Radar, Sonar & Navigation. 2016,

10(2), pp 264-271

Bing Wang,Guolong Cui,Wei Yi, Lingjiang Kong,Xiaobo

Yang. Constant false alarm rate performance prediction

for non-independent and non-identically distributed

gamma fluctuating targets[J]. IET Radar, Sonar &

Navigation. 2016, 10(5), pp 992-1000

Wenjing Zhao,Deyue Zou,Wenlong Liu, Minglu Jin. New

matrix constant false alarm rate detectors for radar

target detection[J]. The Journal of Engineering. 2019,

2019(19), pp 5597-5601

The Exploration of Small Sample-Oriented Object Detection Technology in the Field of Electric Power

223