Enhanced Image Restoration Techniques Using Generative Facial Prior Generative Adversarial Networks in Human Faces in Comparison of PSNR with GPEN

D. Shravan and G. Ramkumar

Department of ECE, Saveetha School of Engineering, SIMATS, Chennai, Tamil Nadu, India

Keywords: Novel Generative Facial Prior Generative Adversarial Networks (GFPGAN), Image Restoration, GAN Prior Embedded Network (GPEN), Facial Prior, Peak Signal-to-Noise Ratio (PSNR), Generative Adversarial Networks (GAN), Technology.

Abstract: The primary objective of this study is to determine whether Novel Generative Facial Prior Generative Adversarial Networks (GFPGAN) can properly reconstruct human portraits from damaged face photographs. The effectiveness of the restoration is assessed by comparing the quality of the restored images, measured by the Peak Signal-to-Noise Ratio (PSNR), with the results of the Generative Adversarial Network (GAN) Prior Embedded Network (GPEN) approach. A total of 464 samples were collected and split into two groups of 232 samples each. Group 1 uses the Novel GFPGAN approach, and Group 2 uses the GPEN method. The pre-trained models are imported as part of the study, and the Novel GFPGAN code is run in Google Colab. The sample size was determined with an online statistical tool (clincalc.com) by combining the F-score data obtained from earlier research with the results of the current study; the pretest power was held constant at 80% and alpha was set to 0.05. In the simulation, the Novel GFPGAN approach achieved the higher mean PSNR value of 0.32, while GPEN achieved 0.30. The difference between the two algorithms is statistically significant, with a significance value of 0.003 (p < 0.05). In terms of PSNR, the Novel GFPGAN performs better than the GPEN approach on the dataset used.

1 INTRODUCTION

The technology that powers mobile phones and cameras has advanced significantly over the last several years. At the same time, the ubiquity of cameras has multiplied the problems encountered when photographing people at formal or informal events. Such photographs are often worth preserving for future use, yet capturing them well can be difficult because of noise and of blur caused by camera shake (R. R. Sankey and M. E. Read, 1973). Strong sunlight can push the exposure value too high and overexpose an image, while insufficient light causes the camera sensor to produce noise (J. Q. Anderson, 2005). Image restoration methods can be used to correct these faults and recover the quality of the images.
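The degradations described above (blur from camera shake, sensor noise, and loss of resolution) are commonly simulated when preparing pairs of clean and damaged images. The following is a minimal numpy sketch, assuming a Gaussian blur kernel, integer downsampling, and additive Gaussian noise on a grayscale image; the function names (`gaussian_kernel`, `blur`, `degrade`) and parameter values are hypothetical and are not the degradation pipeline used in this study.

```python
import numpy as np

def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Return a normalized 2-D Gaussian blur kernel of shape (size, size)."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()

def blur(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Filter a 2-D grayscale image with a square kernel using reflect padding.

    The Gaussian kernel is symmetric, so this correlation equals convolution.
    """
    pad = kernel.shape[0] // 2
    padded = np.pad(image, pad, mode="reflect")
    out = np.zeros(image.shape, dtype=np.float64)
    h, w = image.shape
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def degrade(image: np.ndarray, scale: int = 4, sigma: float = 2.0,
            noise_std: float = 5.0, seed: int = 0) -> np.ndarray:
    """Hypothetical degradation: blur, downsample by `scale`, add noise, clip to 8 bits."""
    rng = np.random.default_rng(seed)
    blurred = blur(image.astype(np.float64), gaussian_kernel(7, sigma))
    low_res = blurred[::scale, ::scale]  # simple decimation
    noisy = low_res + rng.normal(0.0, noise_std, size=low_res.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)

if __name__ == "__main__":
    hq = np.tile(np.linspace(0, 255, 64), (64, 1))  # synthetic 64x64 gradient
    lq = degrade(hq)
    print(hq.shape, lq.shape)  # (64, 64) (16, 16)
```

Real face restoration pipelines typically add further effects such as JPEG compression; these are omitted here to keep the sketch dependent on numpy only.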

Human portraits, and in particular the facial region, are the primary focus of this paper. Blind face restoration uses a Generative Adversarial Network (GAN) that takes advantage of the rich and diverse priors contained in a pretrained face GAN. The Generative Facial Prior (GFP) technique is employed in this study to achieve realistic face restoration (T. Yang, P. Ren, X. Xie, and L. Zhang, 2021). Blind face restoration aims to recover high-quality faces from low-quality inputs that may be degraded, blurred, noisy, or affected by compression artifacts or other problems of a similar kind.

Applying blind face restoration to real-world images is exceedingly challenging, because real photographs contain complex degradations and a broad variety of poses and expressions (Z. Teng, X. Yu, and C. Wu, 2022).

Shravan, D. and Ramkumar, G.

Enhanced Image Restoration Techniques Using Generative Facial Prior Generative Adversarial Networks in Human Faces in Comparison of PSNR with GPEN.

DOI: 10.5220/0012561700003739

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Artificial Intelligence for Internet of Things: Accelerating Innovation in Industry and Consumer Electronics (AI4IoT 2023), pages 557-563

ISBN: 978-989-758-661-3

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Most techniques rely on face-specific priors to restore accurate details in the facial feature maps. These priors are estimated from the input images, which are usually of very low quality, so they provide only limited information about the texture that must be repaired. Other strategies use reference priors taken from high-quality face images or from facial component dictionaries to generate realistic outputs. However, such a dictionary covers only certain facial components, and its limited diversity is a problem. A pretrained face Generative Adversarial Network (GAN), by contrast, can generate high-quality faces because it captures rich information about facial texture, color, lighting, and other attributes (T. Yang, P. Ren, X. Xie, and L. Zhang, 2021). Incorporating such priors into the restoration process poses several obstacles. In the past, most techniques relied on GAN inversion, which, although able to create realistic results, usually produced images with low fidelity to the input face.

The last ten years have witnessed the development of a wide variety of image restoration procedures and algorithms, each with its own strengths and weaknesses. Many of them, including SwinIR, GFPGAN, ESRGAN, and GPEN, are indexed in recognized databases such as IEEE Xplore, ResearchGate, and Google Scholar. Around 17,200 publications on face image restoration can be found in Google Scholar, 3,945 in IEEE Xplore, and 14,552 in Springer. The ESRGAN study (X. Wang et al., 2019) has been cited 2,339 times, the GFPGAN study (S. G and R. G., 2022) 72 times, and the GPEN study (T. Yang, P. Ren, X. Xie, and L. Zhang, 2021) 115 times, while a study that used SwinIR to restore images (J. Liang, J. Cao, G. Sun, K. Zhang, L. Van Gool, and R. Timofte, 2021) has been cited 429 times. These articles are among the most cited in their respective disciplines. Of these, the GFPGAN research is the most relevant to readers of this paper, because it closely parallels the present study and informs the procedures that need to be carried out.

Because low-resolution images do not convey sufficient information about the colors and textures of the photographed subjects, they limit the restoration that current methods can achieve. The primary purpose of this research is to recover blurry low-resolution photos and deblur them while preserving as much of the original photograph's texture and color information as is realistically possible.

2 PROPOSED METHODOLOGY

Each group contains 232 samples. For Group 1, a sample of 232 test images was prepared and processed with the Novel GFPGAN. The GFPGAN combines the capabilities of traditional GANs with facial priors, which are the standard facial structures and characteristics present in most faces. This technique helps create faces with a more natural and lifelike appearance (H. Lithgow et al.).
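The abstract states that the group size was obtained with an online calculator (clincalc.com) at 80% power and alpha = 0.05. Calculators of this kind commonly use the normal-approximation formula for two independent means, n = 2(z₁₋α/₂ + z₁₋β)²σ²/Δ² per group. The sketch below assumes that formula with equal group sizes and equal standard deviations; the function name `n_per_group` is hypothetical, and the inputs in the usage line are placeholders, not the values the authors used.

```python
import math
from statistics import NormalDist

def n_per_group(sd: float, diff: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size for a two-sided comparison of two independent means.

    Assumes the normal approximation, equal group sizes, and a common
    standard deviation `sd`; `diff` is the smallest mean difference of interest.
    """
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)           # about 0.84 for 80% power
    n = 2 * ((z_alpha + z_beta) * sd / diff) ** 2
    return math.ceil(n)                 # round up to whole samples

if __name__ == "__main__":
    # Placeholder inputs: a difference of half a standard deviation.
    print(n_per_group(sd=1.0, diff=0.5))  # 63
```

Tools that use the t distribution instead of the normal approximation return slightly larger sizes for small samples.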

For Group 2, a total of 232 samples are prepared following the methodology currently used by GPEN. GPEN is used to tackle image restoration under a wide variety of degradations, such as noise, blur, and compression artifacts. The system is based on a deep neural network and uses a pretrained generative adversarial network (GAN) as a prior embedded in the restoration process.

The research was carried out on a device with a display resolution of 1920 × 1080 pixels, an x64 CPU, and a 64-bit operating system. The commands and the model were executed in Google Colab (“Google Colaboratory”). According to nvidia-smi, the Colab notebook runs on a Tesla K80 GPU (compute capability 3.7, 2496 CUDA cores, 12 GB GDDR5 VRAM) together with a single-core, hyper-threaded 2.3 GHz Xeon CPU and 13 GB of RAM. Kaggle, a free platform for hosting datasets (J. Li, 2018), is used to import the dataset into the notebook once it has been launched.

The Novel GFPGAN and GPEN are then trained on the dataset, and the evaluation is carried out with the trained models. The PSNR values produced by the GFPGAN technique are compared with those obtained by the GPEN method, and performance is evaluated from the resulting PSNR values. Data visualization followed the completed analysis.

Generative Adversarial Networks (GANs) have been shown to be effective tools for a variety of image restoration tasks, including those involving faces. A GAN consists of two separate neural networks, the generator and the discriminator, which are trained concurrently in competition with each other. GANs have been used effectively for tasks such as image inpainting, super-resolution, and denoising, and they can also be adapted to face image restoration using generative facial priors. GAN-based approaches can be used to restore face images in the following ways.

For inpainting, GANs can restore missing or damaged parts of face images. During training, the generator learns to fill in missing regions of an input image, while the discriminator learns to distinguish real images from inpainted ones. The generator thus learns to produce content that is both realistic and consistent with its context, which is helpful when parts of a person's face are absent or concealed.

For facial super-resolution, GANs enhance low-resolution face photographs into higher-resolution images. The generator is trained to map low-resolution images to their high-resolution equivalents, which lets it capture finer facial detail. This is useful both for improving low-resolution photos and for zooming in on particular facial features to examine them more closely.

For denoising, GANs can be trained on noisy examples to learn the underlying clean structure of faces. The generator aims to remove unwanted noise while preserving the essential facial characteristics, which is especially helpful when face photographs are captured in low light or with noisy sensors.

GANs can also improve specific aspects of face photographs, such as lighting, skin tone, or facial expression. When trained to adjust particular attributes while preserving the overall facial structure, they can improve the aesthetic quality of face images.

GANs can create face images that show an individual at an older or younger age. The generator can be trained to produce faces of varying ages while preserving the individual's distinctive features.

GANs can also synthesize facial expressions on still photos. Because the generator has learned the correlations among facial expressions, it can alter facial features to portray a particular emotion while preserving the integrity of the rest of the face.

GAN-based strategies for facial image restoration require a high-quality training dataset that contains both damaged face images and their matching clean counterparts, because the networks learn from the differences between the two. The effectiveness of these approaches depends strongly on the quality and variety of the training data. In addition, the GAN architecture and the training parameters must be tuned to obtain the best possible results for a given restoration task.
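The competitive training of the generator and discriminator described above is usually expressed through binary cross-entropy losses on the discriminator's output probabilities. The following minimal numpy sketch shows the standard discriminator loss and the commonly used non-saturating generator loss; the function names are illustrative, and this is only the adversarial objective, not the GFPGAN or GPEN training code, which (as far as we know) combine adversarial terms with further losses such as reconstruction losses.

```python
import numpy as np

EPS = 1e-12  # guards against log(0)

def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Batch mean of -[log D(x) + log(1 - D(G(z)))].

    d_real: discriminator probabilities on real images.
    d_fake: discriminator probabilities on generated images.
    """
    return float(np.mean(-np.log(d_real + EPS) - np.log(1.0 - d_fake + EPS)))

def generator_loss(d_fake: np.ndarray) -> float:
    """Non-saturating generator loss: batch mean of -log D(G(z))."""
    return float(np.mean(-np.log(d_fake + EPS)))

if __name__ == "__main__":
    # A discriminator that cannot tell real from fake outputs 0.5 everywhere.
    d_real = np.full(8, 0.5)
    d_fake = np.full(8, 0.5)
    print(round(discriminator_loss(d_real, d_fake), 4))  # 1.3863 (= 2 ln 2)
    print(round(generator_loss(d_fake), 4))              # 0.6931 (= ln 2)
```

The generator loss falls as the discriminator assigns higher "real" probability to generated images, which is what drives the generator toward realistic outputs.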

The Novel GFP-GAN achieves both realism and fidelity through a carefully balanced design. The model incorporates a degradation removal module and a pretrained GAN that serves as the facial prior, and the two are connected through direct latent code mapping and channel-split spatial feature transform layers. The restoration loss is computed after the degradation removal module identifies the regions of the face that have been damaged by image deterioration. The facial prior from the pretrained GAN is then used to restore the facial features, with the channel-split spatial features acting as a helper. Using this high-quality facial prior, the generator synthesizes facial features from the supplied input images, and discriminators assess whether the facial features are genuine or fake.
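The channel-split spatial feature transform mentioned above can be illustrated in a few lines. In the GFPGAN design, the prior features from the pretrained GAN are split along the channel axis: one half passes through unchanged (favoring realism) and the other half is modulated element-wise by spatial scale and shift maps, α and β, derived from the degraded input (favoring fidelity). This is a minimal numpy sketch under that description; the function name `cs_sft` is hypothetical, α and β are passed in directly instead of being predicted by convolution layers, and the shapes are illustrative.

```python
import numpy as np

def cs_sft(prior_feat: np.ndarray, alpha: np.ndarray, beta: np.ndarray) -> np.ndarray:
    """Channel-split spatial feature transform on a (C, H, W) feature map.

    The first C // 2 channels are kept as-is; the remaining channels are
    modulated as alpha * F + beta, where alpha and beta have shape
    (C // 2, H, W). In a real network alpha and beta would be predicted
    from degraded-image features; here they are supplied directly.
    """
    c = prior_feat.shape[0]
    if c % 2 != 0:
        raise ValueError("channel count must be even")
    identity_half = prior_feat[: c // 2]
    modulated_half = alpha * prior_feat[c // 2:] + beta
    return np.concatenate([identity_half, modulated_half], axis=0)

if __name__ == "__main__":
    feat = np.ones((4, 2, 2))             # toy prior features
    alpha = np.full((2, 2, 2), 2.0)       # spatial scale map
    beta = np.full((2, 2, 2), 0.5)        # spatial shift map
    out = cs_sft(feat, alpha, beta)
    print(out[:, 0, 0].tolist())  # [1.0, 1.0, 2.5, 2.5]
```

Keeping half of the channels untouched is what lets the prior's realistic detail survive while the modulated half is pulled toward the input face.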

The current technique is concerned solely with the face region, so an existing approach (Real-ESRGAN) is integrated into the GFPGAN to enhance the background and restore the texture of the complete image. The GFPGAN outscale value has been increased to 4x from the previous value of 3.5x. This GFPGAN configuration can recover images that are extremely blurry, which is a significant advance. Several enhancements were made to the GFPGAN method to increase the PSNR values on the supplied custom dataset. After the Novel GFPGAN technique has been trained, the PSNR values are retrieved for analysis. The same custom dataset is then used to train the GPEN method and obtain the corresponding PSNR values. Finally, the two sets of PSNR values are compared with the Independent Samples T-test, and the difference is considered valid if the significance value is lower than 0.05.
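For reference, the following is a minimal sketch of the standard PSNR definition, PSNR = 10·log10(MAX²/MSE) in decibels, assuming 8-bit images (MAX = 255), together with the per-dataset averaging used for a group comparison. The function names are illustrative; the normalization behind the PSNR values reported in this study is not stated, so the sketch is not expected to reproduce them.

```python
import numpy as np

def psnr(reference: np.ndarray, restored: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    ref = reference.astype(np.float64)
    res = restored.astype(np.float64)
    mse = np.mean((ref - res) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

def mean_psnr(pairs) -> float:
    """Average PSNR over an iterable of (reference, restored) image pairs."""
    values = [psnr(ref, res) for ref, res in pairs]
    return float(np.mean(values))

if __name__ == "__main__":
    ref = np.full((8, 8), 100, dtype=np.uint8)
    res = ref.copy()
    res[0, 0] = 110  # one pixel off by 10, so MSE = 100 / 64
    print(round(psnr(ref, res), 2))  # 46.19
```

Per-image values such as these would form each group's sample in the Independent Samples T-test.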

2.1 Statistical Analysis

IBM SPSS Statistics 26 (K. McCormick and J. Salcedo, 2017) was used to evaluate the mean PSNR value of the proposed method and of the previous method. The Independent Samples T-test is used for testing. In this investigation, the mean PSNR values are the dependent variable, while the blurriness of the face photos is the independent variable. The mean, the standard deviation, and the standard error of the mean were used as inputs for the analysis.
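The t statistics and degrees of freedom in Table 2 can be reproduced from the group statistics in Table 1. The following minimal sketch computes the pooled-variance (Student) and Welch independent-samples t-tests from means, standard deviations, and group sizes, using only the Python standard library; the function names are illustrative, and the sketch returns t and df only, not the p-value.

```python
import math

def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's t-test assuming equal variances; returns (t, df)."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df  # pooled variance
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))               # std. error of difference
    return (m1 - m2) / se, df

def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's t-test (equal variances not assumed); returns (t, df)."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))  # Welch-Satterthwaite
    return t, df

if __name__ == "__main__":
    # Group statistics from Table 1: (mean, std. deviation, N).
    gfpgan = (0.32694, 0.008760, 232)
    gpen = (0.30177, 0.007200, 232)
    t, df = pooled_t(*gfpgan, *gpen)
    print(f"pooled: t = {t:.1f}, df = {df}")          # t = 33.8, df = 462
    t_w, df_w = welch_t(*gfpgan, *gpen)
    print(f"Welch:  t = {t_w:.1f}, df = {df_w:.1f}")  # t = 33.8, df = 445.3
```

With equal group sizes the two t statistics coincide, and only the degrees of freedom differ, which matches the two rows of Table 2.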

3 RESULTS

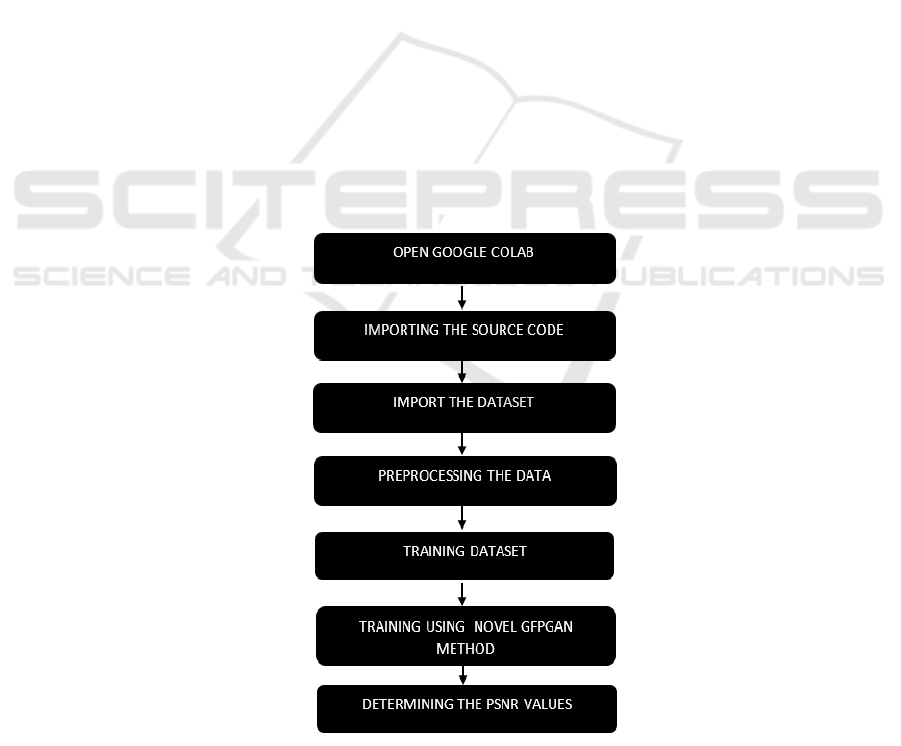

Figure 1 depicts the workflow for running the Novel GFPGAN method, from importing the source code and datasets to reporting the mean PSNR.

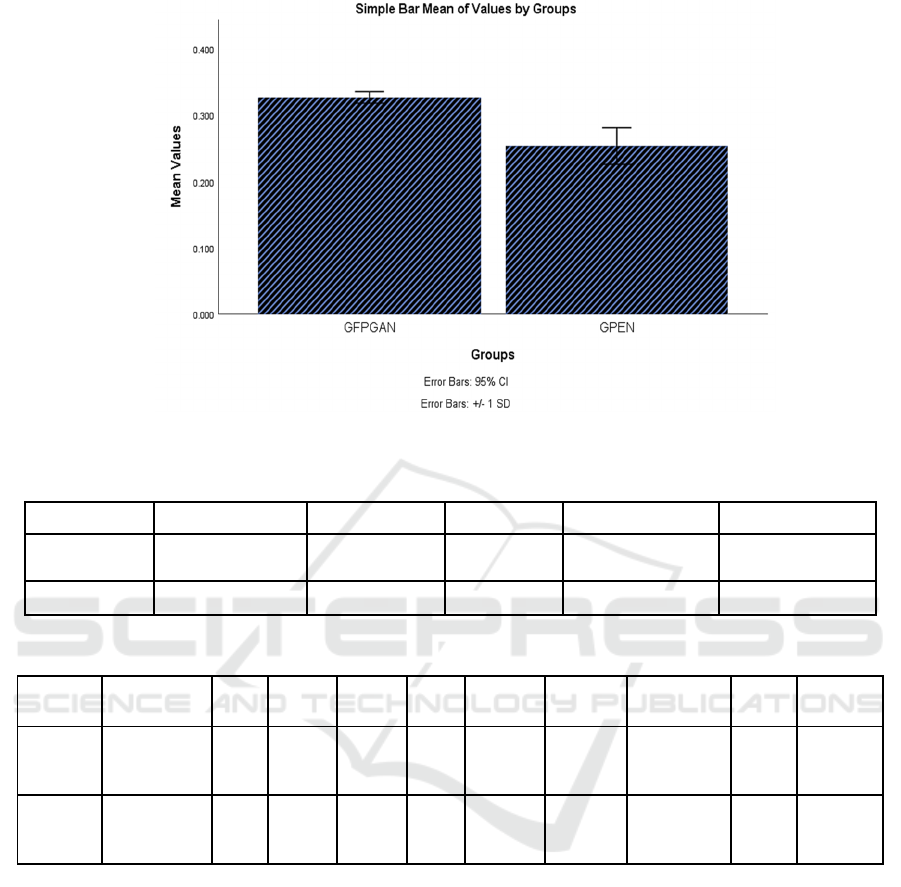

Figure 2 shows a simple bar chart of the mean PSNR by group. The PSNR of each image in the dataset is recorded after restoration with both the Novel GFPGAN and GPEN, and the mean PSNR values are compared using the independent samples T-test in IBM SPSS Statistics 26. The mean PSNR is 0.32694 for the Novel GFPGAN and 0.30177 for GPEN, with error bars at 95% confidence.

Table 1 shows the group statistics obtained from the Independent Samples T-test in IBM SPSS Statistics 26: the mean PSNR is 0.326940 (standard deviation 0.008760) for the Novel GFPGAN and 0.301770 (standard deviation 0.007200) for GPEN.

Table 2 presents the results of the independent samples t-test performed on the two groups in SPSS. Levene's test for equality of variances gives F = 16.1 with a significance of .000, and the two-tailed significance of the difference in PSNR between the two groups is .003, which is less than 0.05.

Figure 1: Process flowchart for determining the mean PSNR.


Figure 2: Simple bar chart of mean PSNR by group.

Table 1: Group statistics obtained from performing Independent Samples T-test.

| Groups | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|
| PSNR Novel GFPGAN | 232 | .32694 | .008760 | .000575 |
| PSNR GPEN | 232 | .30177 | .007200 | .000473 |

Table 2: Independent samples t-test.

| Accuracy | Levene's F | Levene's Sig. | t | df | Sig. (2-tailed) | Mean Difference | Std. Error Difference | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|---|---|
| Equal variances assumed | 16.1 | .000 | 33.813 | 462 | .003 | .025172 | .000744 | .02370 | .026635 |
| Equal variances not assumed | | | 33.813 | 445.3 | .003 | .025172 | .000744 | .02370 | .026636 |

4 DISCUSSION

The findings of the study indicate that the Novel GFPGAN is superior to GPEN in terms of PSNR when restoring face images from the provided dataset, with a mean PSNR difference of 0.025172 and a significance of 0.003, which indicates that the findings can be considered reliable. These findings demonstrate that the Novel GFPGAN recovers face images from a user-specified dataset more accurately and successfully than the existing restoration method. Consistent with the results shown here, studies by other authors, which are also included here, have reported that this technology is 86% more successful than other technologies at restoring low-resolution face images to high-resolution face images while maintaining the subject's identity.

Guided blind face restoration of a corrupted image is investigated with GFRNet (X. Li, M. Liu, Y. Ye, W. Zuo, L. Lin, and R. Yang, 2018). VQFR, a face restoration approach inspired by both conventional dictionary-based methods and recent advances in vector quantization (VQ), recreates realistic facial details with the help of high-quality low-level feature banks extracted from high-quality faces, which is a significant advantage of the method (Y. Gu et al., 2022).
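The vector-quantization step that VQFR builds on replaces each feature vector with its nearest entry in a learned codebook. The following minimal numpy sketch shows that lookup, assuming Euclidean distance and a small hand-written codebook; the function name `quantize` is illustrative, and the sketch covers only the quantization idea, not VQFR's dictionary learning or decoder.

```python
import numpy as np

def quantize(features: np.ndarray, codebook: np.ndarray):
    """Map each row of `features` (N, D) to its nearest codebook row (K, D).

    Returns the chosen indices (N,) and the quantized vectors (N, D).
    """
    # Squared Euclidean distance between every feature and every code: (N, K).
    dists = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    indices = dists.argmin(axis=1)
    return indices, codebook[indices]

if __name__ == "__main__":
    codebook = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
    features = np.array([[0.1, 0.2], [4.0, 4.5], [0.9, 1.2]])
    idx, q = quantize(features, codebook)
    print(idx.tolist())  # [0, 2, 1]
```

Because every output vector comes from the codebook, a codebook learned from high-quality faces can only emit high-quality feature patterns, which is the property dictionary-based restoration relies on.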

DeblurGAN-v2 is an end-to-end generative adversarial network (GAN) for single-image motion deblurring that aims to substantially improve the quality, flexibility, and efficiency of existing deblurring approaches (O. Kupyn, T. Martyniuk, J. Wu, and Z. Wang, 2019). HiFaceGAN is a multi-stage framework made up of several nested CSR units, which progressively add facial details using the hierarchical semantic guidance collected by the front-end content-adaptive suppression modules (Kumar M, M., Sivakumar, V. L., Devi V, S., Nagabhooshanam, N., & Thanappan, S. 2022).

Another line of research finds that well-trained GANs can serve as effective priors for a variety of image processing applications when used with multi-code GAN priors (mGANprior). The authors use multiple latent codes to invert a given GAN model precisely, and they compose the feature maps generated from these codes at an intermediate layer of the generator using adaptive channel importance. The resulting high-fidelity image reconstruction allows trained GAN models to be applied to a wide variety of real-world tasks, including image colorization, super-resolution, image inpainting, and semantic manipulation (J. Gu, Y. Shen, and B. Zhou, 2020).

Putting the GFPGAN blind face restoration idea into practice can also be difficult. Photographs of faces taken in the wild usually suffer from a variety of degradations, such as compression, blur, and noise. Such images are very hard to recover, because the information lost to degradation means that infinitely many high-quality (HQ) outputs could correspond to the same low-quality (LQ) input. The difficulty is amplified in blind restoration, where the exact degradation is unknown. Despite the breakthroughs brought about by deep learning, learning an LQ-HQ mapping in the huge image space remains intractable, which results in the mediocre restoration quality of previous approaches. CodeFormer is a prediction network built on Transformers (G. Ramkumar, R. Thandaiah Prabu, Ngangbam Phalguni Singh, U. Maheswaran, 2021).

One essential aspect to take into account is the size of the training set: around 3000 photos were used to train GFPGAN. Training the model on user-supplied data and photos allows high-quality facial information to be used to restore any damaged or corrupted elements of an image. However, extremely poor-quality photos cannot be recovered when they contain no information about texture. Future work should therefore target the restoration of extremely low-quality images that carry no texture or color information.

5 CONCLUSION

From the obtained results, the Novel GFPGAN performs better than GPEN and delivers more accurate and realistic human face restoration in the facial region, with a mean PSNR difference of 0.02517.

REFERENCES

R. R. Sankey and M. E. Read (1973). “Camera Image Degradation Due to the Presence of High Energy Gamma Rays in 123I-Labeled Compounds,” Southern Medical Journal, vol. 66, no. 11, p. 1328. doi: 10.1097/00007611-197311000-00053.

J. Q. Anderson (2005). Imagining the Internet: Personalities, Predictions, Perspectives. Rowman & Littlefield Publishers.

T. Yang, P. Ren, X. Xie, and L. Zhang (2021). “GAN Prior Embedded Network for Blind Face Restoration in the Wild,” 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). doi: 10.1109/cvpr46437.2021.00073.

Z. Teng, X. Yu, and C. Wu (2022). “Blind Face Restoration Multi-Prior Collaboration and Adaptive Feature Fusion,” Front. Neurorobot., vol. 16, p. 797231, Feb.

X. Wang et al. (2019). “ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks,” Lecture Notes in Computer Science, pp. 63–79. doi: 10.1007/978-3-030-11021-5_5.

S. G and R. G (2022). “Automated Breast Cancer Classification based on Modified Deep learning Convolutional Neural Network following Dual Segmentation,” 2022 3rd International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, pp. 1562–1569. doi: 10.1109/ICESC54411.2022.9885299.

J. Liang, J. Cao, G. Sun, K. Zhang, L. Van Gool, and R. Timofte (2021). “SwinIR: Image Restoration Using Swin Transformer,” 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW). doi: 10.1109/iccvw54120.2021.00210.

H. Lithgow et al. “Protocol for a randomised controlled trial to investigate the effects of vitamin K2 on recovery from muscle-damaging resistance exercise in young and older adults-the TAKEOVER study,” Trials, vol. 23, no. 1, p. 1026, Dec.

“Google Colaboratory.” https://colab.research.google.com/ (accessed Mar. 15, 2023).

J. Li (2018). “CelebFaces Attributes (CelebA) Dataset.” Jun. 01, 2018. Accessed: Mar. 15, 2023. [Online]. Available: https://www.kaggle.com/jessicali9530/celeba-dataset

K. McCormick and J. Salcedo (2017). SPSS Statistics for Data Analysis and Visualization. John Wiley & Sons.

X. Li, M. Liu, Y. Ye, W. Zuo, L. Lin, and R. Yang (2018). “Learning Warped Guidance for Blind Face Restoration,” Computer Vision – ECCV 2018, pp. 278–296. doi: 10.1007/978-3-030-01261-8_17.

Y. Gu et al. (2022). “VQFR: Blind Face Restoration with Vector-Quantized Dictionary and Parallel Decoder,” Lecture Notes in Computer Science, pp. 126–143. doi: 10.1007/978-3-031-19797-0_8.

O. Kupyn, T. Martyniuk, J. Wu, and Z. Wang (2019). “DeblurGAN-v2: Deblurring (Orders-of-Magnitude) Faster and Better,” 2019 IEEE/CVF International Conference on Computer Vision (ICCV). doi: 10.1109/iccv.2019.00897.

Kumar M, M., Sivakumar, V. L., Devi V, S., Nagabhooshanam, N., & Thanappan, S. (2022). “Investigation on Durability Behavior of Fiber Reinforced Concrete with Steel Slag/Bacteria beneath Diverse Exposure Conditions,” Advances in Materials Science and Engineering, 2022.

J. Gu, Y. Shen, and B. Zhou (2020). “Image Processing Using Multi-Code GAN Prior,” 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). doi: 10.1109/cvpr42600.2020.00308.

G. Ramkumar, R. Thandaiah Prabu, Ngangbam Phalguni Singh, U. Maheswaran (2021). “Experimental analysis of brain tumor detection system using Machine learning approach,” Materials Today: Proceedings, ISSN 2214-7853. https://doi.org/10.1016/j.matpr.2021.01.246.
