Detection of Bone Fractures in Upper Extremities Using XceptionNet and Comparing the Accuracy with Convolutional Neural Network

Trisha Veronica M. Y. and P. V. Pramila

Department of Computer Science and Engineering, Saveetha University, India

Keywords: Bone Fractures, Convolutional Neural Networks, Deep Learning, Health, Novel XceptionNet Model, Upper Extremity.

Abstract: The goal of this study is to compare a novel XceptionNet deep learning (DL) model with convolutional neural networks (CNN) for recognizing bone fractures in the upper extremities of the hands with considerably higher accuracy. Materials and Methods: To improve the accuracy of bone fracture recognition in the upper extremity regions of the hands, two deep learning techniques, the novel XceptionNet model (N=10) and convolutional neural networks (N=10), were each evaluated over 10 iterations. The study used the "Bone Fracture Detection Using X-Rays" image dataset, obtained from Kaggle. The dataset contains a total of 9463 x-ray images and is 181 MB in size. It is split into train and validation (val) sets: the val set has 633 images and the train set has 8987 images. These were used to calculate the accuracy of the two groups with a G-power of 80%. Results and Discussion: The novel XceptionNet model reached a classification accuracy of 88.74%, significantly higher than the 72.50% accuracy of the CNN model for bone fracture detection on the x-ray image dataset. The difference between the novel XceptionNet model and convolutional neural networks is statistically significant (p<0.001, two-tailed; significance threshold p<0.05). Conclusion: This paper applied two deep learning models, the novel XceptionNet and convolutional neural networks, to bone fracture detection. The results demonstrate the usefulness of these methods for identifying bone fractures in the upper extremities and their value for early-stage fracture prediction.

1 INTRODUCTION

As is well known, bones act as the body's framework and as attachment points for muscles. As society advances, people's propensity for bone fractures increases. Broken bones can impair the blood supply to the bone and cause further complications, and they can also injure the affected limb and the surrounding soft tissues. Delays in the diagnosis and medical management of injuries can lead to fracture complications. Because broken bones can seriously harm a person's body, a clear and efficient diagnosis of injuries is vital (Pranata et al., 2019).

New trends and technologies in radiology have advanced the analysis and treatment of many diseases (Sa, Mohammed and Hefny, 2020). However, the number of patients and radiological scans each year is 7.5 times the number of radiologists. Because each of these images must be analyzed, detection needs to be completed in as short a time as possible (Li et al., 2020). To reduce the frequency of these issues and improve diagnostic effectiveness, computer-aided diagnosis (CAD) has been recommended in recent years. CAD systems can serve as a second perspective that supports and assists doctors' decisions. Numerous studies have developed CAD systems for a range of medical contexts, including the identification of cancer, breast lesions, brain tumors, and, in particular, cracks and fractures of the upper extremity (Lee and Fujita, 2020). In contrast to traditional approaches, deep learning can automatically discover strong, complex features from unprocessed data, eliminating the need for manual feature engineering. The use of advanced machine learning on genetics, electronic health records, and medical images, as well as in pharmaceutical discovery, is said to offer clear advantages in making full use of biomedical data and improving health (S. Yang et al., 2021).

Y., T. and Pramila, P.

Detection of Bone Fractures in Upper Extremities Using XceptionNet and Comparing the Accuracy with Convolutional Neural Network.

DOI: 10.5220/0012772300003739

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Artificial Intelligence for Internet of Things: Accelerating Innovation in Industry and Consumer Electronics (AI4IoT 2023), pages 360-366

ISBN: 978-989-758-661-3

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

The topic of detecting bone fractures in the upper extremity regions has been covered in 4 articles published by IEEE and in over 16,800 papers indexed by Google Scholar over the past five years. The study by Agarwal et al. (2022) concentrates on classifying X-ray images and assessing lower limb fractures using a variety of techniques: image enhancement, unsharp masking, and Harris corner detection for feature extraction, followed by classification methods such as a decision tree (DT) to determine whether a bone is fractured or unfractured and a KNN algorithm to determine the particular type of fracture, with accuracies of 92% and 83%, respectively. Abbas et al. (2020) proposed a Faster R-CNN deep learning (DL) model, a region-based convolutional neural network (R-CNN) accelerated by a Region Proposal Network (RPN), for diagnosing and categorizing fractured bones. The top layer of the model, which is built on the Inception v2 (version 2) network architecture, was retrained on 50 x-rays. They assessed the effectiveness of the proposed framework for both classification and detection; the overall accuracy of their system for classification and detection is 94%.

Because bone fractures produce different mechanical vibration responses, the research by Yoon et al. (2021) investigates the transverse vibration responses of bones. A criterion based on the modal assurance technique was developed to evaluate the longitudinal resonance responses of both intact and damaged joints and was used to detect bone fractures. Raisuddin et al. (2021) developed and evaluated a state-of-the-art DL-based tool called DeepWrist for diagnosing wrist fractures, testing it on two test sets: one drawn from the general population and one difficult test set containing only cases that required CT confirmation. Their findings show that a widely used and effective method such as DeepWrist performs almost perfectly on the general independent test set but far worse on the hard test set: average precision of 0.99 (0.99–0.99) vs. 0.64 (0.46–0.83). In the authors' view, the strongest of these studies is "Bone Fracture Detection in X-Ray Images Using Convolutional Neural Network" (Bagaria et al., 2022).

Convolutional neural networks (CNNs) perform remarkably well when classifying images that closely resemble their training data. However, they typically struggle to categorize images that are even slightly tilted or rotated. This work examined that problem and addressed it by training the system with data augmentation (also called image augmentation). The primary objective of this research is to determine the effectiveness of two deep learning (DL) models, the novel XceptionNet and a CNN, in predicting upper extremity bone fractures.
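The paper does not list the augmentation transforms it used. As a minimal sketch, assuming a grayscale x-ray stored as a list of pixel rows, the following applies random 90-degree rotations and horizontal flips; the function names are hypothetical. Framework layers such as Keras `RandomRotation` and `RandomFlip` play the same role in practice and also support small, arbitrary rotation angles.

```python
import random

# A grayscale x-ray modelled as a list of pixel rows (hypothetical representation).
Image = list[list[int]]


def rotate90(img: Image) -> Image:
    """Rotate an image 90 degrees clockwise."""
    # Reversing the row order and then transposing gives a clockwise rotation.
    return [list(row) for row in zip(*img[::-1])]


def hflip(img: Image) -> Image:
    """Mirror an image left to right."""
    return [row[::-1] for row in img]


def augment(img: Image, rng: random.Random) -> Image:
    """Return a copy rotated by a random multiple of 90 degrees, flipped with probability 0.5."""
    out = [row[:] for row in img]
    for _ in range(rng.randrange(4)):
        out = rotate90(out)
    if rng.random() < 0.5:
        out = hflip(out)
    return out


if __name__ == "__main__":
    xray = [[1, 2],
            [3, 4]]
    print(rotate90(xray))  # [[3, 1], [4, 2]]
    print(hflip(xray))     # [[2, 1], [4, 3]]
    rng = random.Random(42)
    print([augment(xray, rng) for _ in range(4)])
```

Because each transform only rearranges pixels, the image's label (fractured or not fractured) is unchanged, so every augmented copy can be added to the training set with the original label.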

2 MATERIALS AND METHOD

The proposed experiment was carried out in the programming lab of the Saveetha School of Engineering, SIMATS. Convolutional neural networks and XceptionNet are the two deep learning methods selected for this research. The experiment was repeated 10 times with each algorithm, giving a sample size of 10 per group. The G-power for the available data samples was calculated to be 80%, with alpha set to 0.05.

The study was run on a Lenovo IdeaPad Flex 15 IWL with 8.00 GB of RAM, an 8th-generation Intel Core i7 processor, 475 GB of storage, and Windows 11.

The open-source dataset used in this research project is "Bone Fracture Detection Using X-Rays", obtained from Kaggle (Sairam 2022). It includes multiple joints in the upper extremity regions of the hands. The dataset contains a total of 9463 x-ray images and is 181 MB in size. It is split into train and val sets: the val set contains 633 images and the train set contains 8987 images. The train set is further divided into fractured and not-fractured subcategories. The not-fractured subcategory contains x-ray images of healthy bone, while the fractured subcategory contains images of bone fractures in the upper extremities.
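A quick way to check split and class sizes like those reported above is to count image files per folder. The sketch below assumes a hypothetical `<root>/<split>/<class>/` layout with folders such as `train/fractured` and `train/not fractured`; the actual folder names in the Kaggle download may differ.

```python
from collections import Counter
from pathlib import Path

# Assumed (hypothetical) layout: <root>/<split>/<class>/<image file>,
# e.g. root/train/fractured/img001.jpg or root/val/not fractured/img002.png.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images(root: Path) -> Counter:
    """Count image files per (split, class) pair under the dataset root."""
    counts: Counter = Counter()
    for path in root.glob("*/*/*"):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            split, label = path.parent.parent.name, path.parent.name
            counts[(split, label)] += 1
    return counts


def split_totals(counts: Counter) -> dict:
    """Sum the per-class counts into one total per split (e.g. train, val)."""
    totals: dict = {}
    for (split, _label), n in counts.items():
        totals[split] = totals.get(split, 0) + n
    return totals


if __name__ == "__main__":
    import sys

    if len(sys.argv) > 1:  # pass the dataset root as the first argument
        counts = count_images(Path(sys.argv[1]))
        for (split, label), n in sorted(counts.items()):
            print(f"{split:>5} / {label:<14} {n}")
        print(split_totals(counts))
```

Non-image files (for example, notes or license files) are skipped by the suffix filter, so only x-ray images contribute to the totals.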

2.1 XceptionNet

XceptionNet is a deep convolutional neural network architecture, created by researchers at Google, that is built from depthwise separable convolutions. According to its designers, the Inception modules used in convolutional neural networks act as an intermediate step between regular convolution and depthwise separable convolution (a depthwise convolution followed by a pointwise convolution).
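The saving that motivates depthwise separable convolutions can be checked with simple arithmetic. The sketch below counts the weights (biases ignored) of a standard k×k convolution and of its depthwise separable counterpart; the layer sizes are illustrative assumptions, not values taken from XceptionNet or from this paper. In Keras, the two layer types correspond to `Conv2D` and `SeparableConv2D`.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution mapping c_in channels to c_out channels (no bias)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise separable convolution (no bias).

    Depthwise step: one k x k filter per input channel    -> k * k * c_in
    Pointwise step: a 1 x 1 convolution mixing channels   -> c_in * c_out
    """
    return k * k * c_in + c_in * c_out


if __name__ == "__main__":
    k, c_in, c_out = 3, 128, 256  # illustrative layer sizes
    std = standard_conv_params(k, c_in, c_out)
    sep = separable_conv_params(k, c_in, c_out)
    print(std, sep, round(std / sep, 2))  # 294912 33920 8.69
```

In general the separable layer needs 1/c_out + 1/k² as many weights as the standard one; for the sizes above that is 1/256 + 1/9, roughly one ninth.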

2.2 Convolutional Neural Networks (CNN)

A convolutional neural network (CNN) is a kind of artificial neural network (ANN) used in deep learning and commonly applied to image, text, and object recognition and categorization. Convolutional neural networks, usually referred to as convnets or CNNs, are a well-known technique in computer vision. They are a class of deep neural networks used to analyze visual imagery, and this kind of architecture is studied for identifying objects in image and video data. CNNs are used in natural language processing (NLP), image and video recognition, and other applications.
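The core operations of a CNN layer can be sketched in plain Python. The following is a minimal illustration, not the paper's model: a single hand-picked 3×3 vertical-edge kernel (a Prewitt-style filter, chosen here as an assumption) is slid over a toy 6×6 "image", followed by a ReLU activation and 2×2 max pooling. As in most deep learning libraries, the "convolution" is computed as cross-correlation (the kernel is not flipped), and in a trained CNN the kernel weights are learned rather than fixed.

```python
Matrix = list[list[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(x: Matrix) -> Matrix:
    """Element-wise ReLU: negative responses are clipped to zero."""
    return [[max(0, v) for v in row] for row in x]


def max_pool2x2(x: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling (an odd trailing row or column is dropped)."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


if __name__ == "__main__":
    # Toy 6x6 "image": dark left half (0), bright right half (1).
    image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
    # Prewitt-style vertical-edge kernel; a real CNN learns these weights.
    kernel = [[-1, 0, 1] for _ in range(3)]
    feature_map = conv2d(image, kernel)
    print(feature_map)                     # [[0, 3, 3, 0], [0, 3, 3, 0], [0, 3, 3, 0], [0, 3, 3, 0]]
    print(max_pool2x2(relu(feature_map)))  # [[3, 3], [3, 3]]
```

The feature map responds only where the window straddles the dark-to-bright boundary, and pooling halves its spatial size while keeping the strongest response in each 2×2 block.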

2.3 Statistical Analysis

The statistical calculations were performed with the Statistical Package for the Social Sciences (SPSS) version 26. SPSS is designed for the statistical analysis of collected data; it currently offers an extensive library of statistical procedures, open-source extensibility, and the ability to compare the average accuracy of different algorithms. The independent variable is the model (novel XceptionNet or CNN), and the dependent variable is accuracy. To determine whether there is empirical evidence that the corresponding populations differ substantially, an independent samples t-test was used to compare the two independent groups, XceptionNet and convolutional neural networks.
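The summary statistics in Table 4 and the accuracy rows of Table 5 can be re-derived from the ten per-iteration accuracies listed in Table 2. The following stdlib-only sketch is an illustrative re-computation, not the SPSS procedure the authors ran. The two-tailed p-value is obtained by numerically integrating the Student t density with Simpson's rule, a choice made here to avoid a SciPy dependency; `scipy.stats.ttest_ind` (with `equal_var=True` for the pooled test or `equal_var=False` for Welch's test) would return the same t statistic for these equal-sized groups.

```python
import math
import statistics

# Per-iteration accuracies (%) copied from Table 2.
XCEPTION = [78.45, 84.40, 87.52, 88.11, 88.31, 88.45, 88.49, 88.60, 88.38, 88.74]
CNN = [59.38, 63.00, 64.44, 64.13, 67.56, 65.33, 67.62, 68.94, 69.25, 72.50]


def describe(xs: list[float]) -> tuple[float, float, float]:
    """Mean, sample standard deviation (n - 1) and standard error of the mean."""
    sd = statistics.stdev(xs)
    return statistics.mean(xs), sd, sd / math.sqrt(len(xs))


def t_pdf(x: float, df: float) -> float:
    """Probability density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t: float, df: float, steps: int = 20_000) -> float:
    """P(|T| >= |t|): integrate the density over [0, |t|] with Simpson's rule (steps even)."""
    b = abs(t)
    h = b / steps
    area = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area *= h / 3
    return max(0.0, 1.0 - 2.0 * area)


def student_t_test(a: list[float], b: list[float]) -> dict[str, float]:
    """Independent samples t-test with pooled variance ("equal variances assumed")."""
    n1, n2 = len(a), len(b)
    pooled = ((n1 - 1) * statistics.variance(a) + (n2 - 1) * statistics.variance(b)) / (n1 + n2 - 2)
    se_diff = math.sqrt(pooled * (1 / n1 + 1 / n2))
    diff = statistics.mean(a) - statistics.mean(b)
    df = n1 + n2 - 2
    t = diff / se_diff
    return {"mean_diff": diff, "se_diff": se_diff, "t": t, "df": df, "p": two_tailed_p(t, df)}


def welch_df(a: list[float], b: list[float]) -> float:
    """Welch-Satterthwaite degrees of freedom ("equal variances not assumed")."""
    q1, q2 = statistics.variance(a) / len(a), statistics.variance(b) / len(b)
    return (q1 + q2) ** 2 / (q1**2 / (len(a) - 1) + q2**2 / (len(b) - 1))


if __name__ == "__main__":
    for name, xs in (("XceptionNet", XCEPTION), ("CNN", CNN)):
        mean, sd, se = describe(xs)
        print(f"{name:<11} mean={mean:.2f} sd={sd:.2f} se={se:.2f}")
    r = student_t_test(XCEPTION, CNN)
    print(f"t({r['df']}) = {r['t']:.2f}, mean diff = {r['mean_diff']:.2f}, "
          f"SE diff = {r['se_diff']:.2f}, p = {r['p']:.1e}")
    print(f"Welch df = {welch_df(XCEPTION, CNN):.2f}")
```

Run as a script, it prints each group's mean, SD and standard error, the pooled t statistic with its degrees of freedom and p-value, and the Welch degrees of freedom; these agree with Tables 4 and 5 to within rounding in the last reported digit.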

Table 1: Pseudocode and steps performed for each model.

Model 1: XceptionNet

Input: Training and testing data

Output: Performance accuracy

1. Import the dataset and required packages.
2. Pre-process the data.
3. Divide the dataset into training and testing data.
4. Define the model.
5. Fit the model on the training and testing datasets.
6. Train and test the model.
7. Evaluate the model.
8. Plot the graph using matplotlib.
9. Derive the confusion matrix for the model's metrics.
10. Report performance.

Model 2: CNN

Input: Training and testing data

Output: Accuracy value

1. Import the dataset and required packages.
2. Pre-process the data.
3. Divide the dataset into training and testing sets.
4. Fit the CNN model on the training and testing datasets.
5. Evaluate the CNN model.
6. Plot the graph using matplotlib.
7. Derive the confusion matrix.
8. Calculate the accuracy score.

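Table 2: Sample accuracy values (%) for the two groups, CNN and the novel XceptionNet model, over 10 iterations.

| S.No | CNN accuracy (%) | XceptionNet accuracy (%) |
|---|---|---|
| 1 | 59.38 | 78.45 |
| 2 | 63.00 | 84.40 |
| 3 | 64.44 | 87.52 |
| 4 | 64.13 | 88.11 |
| 5 | 67.56 | 88.31 |
| 6 | 65.33 | 88.45 |
| 7 | 67.62 | 88.49 |
| 8 | 68.94 | 88.60 |
| 9 | 69.25 | 88.38 |
| 10 | 72.50 | 88.74 |
| Average | 66.21 | 86.94 |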

Table 3: Performance metrics of XceptionNet and the convolutional neural network.

| S.No | Metric | XceptionNet | CNN |
|---|---|---|---|
| 1 | Accuracy | 88.74% | 72.50% |
| 2 | Precision | 88.45% | 88.82% |
| 3 | F1-score | 89.68% | 89.11% |
| 4 | Sensitivity | 90.94% | 89.4% |
| 5 | Specificity | 87.86% | 89.73% |
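The metrics in Table 3 all derive from the binary confusion matrix (Table 1, step 9). The sketch below computes them, treating "fractured" as the positive class; the class name and the counts in the usage example are hypothetical, not the confusion matrices shown in Figs. 4 and 5.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Binary confusion matrix with 'fractured' as the positive class."""
    tp: int  # fractured images predicted as fractured
    fp: int  # not-fractured images predicted as fractured
    fn: int  # fractured images predicted as not fractured
    tn: int  # not-fractured images predicted as not fractured

    def metrics(self) -> dict[str, float]:
        total = self.tp + self.fp + self.fn + self.tn
        precision = self.tp / (self.tp + self.fp)
        sensitivity = self.tp / (self.tp + self.fn)  # also called recall
        specificity = self.tn / (self.tn + self.fp)
        return {
            "accuracy": (self.tp + self.tn) / total,
            "precision": precision,
            "sensitivity": sensitivity,
            "specificity": specificity,
            # F1 is the harmonic mean of precision and sensitivity.
            "f1": 2 * precision * sensitivity / (precision + sensitivity),
        }


if __name__ == "__main__":
    cm = ConfusionMatrix(tp=90, fp=10, fn=5, tn=95)  # hypothetical counts
    for name, value in cm.metrics().items():
        print(f"{name:>11}: {value:.2%}")
```

As a consistency check on Table 3, the harmonic mean of XceptionNet's precision (88.45%) and sensitivity (90.94%) is 2 × 0.8845 × 0.9094 / (0.8845 + 0.9094) ≈ 0.8968, matching the reported F1-score of 89.68%.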

Table 4: Statistical analysis of the samples examined for the novel XceptionNet and CNN deep learning models. The mean accuracy is 86.94% for XceptionNet and 66.21% for CNN; the standard deviation (SD) is 3.24 for XceptionNet and 3.73 for CNN; and the standard error of the mean is 1.02 for XceptionNet and 1.17 for CNN.

| Metric | Group | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|---|
| Accuracy | XceptionNet | 10 | 86.94 | 3.24 | 1.02 |
| Accuracy | CNN | 10 | 66.21 | 3.73 | 1.17 |
| Loss | XceptionNet | 10 | 30.15 | 6.57 | 2.07 |
| Loss | CNN | 10 | 60.26 | 3.40 | 1.07 |

AI4IoT 2023 - First International Conference on Artificial Intelligence for Internet of things (AI4IOT): Accelerating Innovation in Industry

and Consumer Electronics

362

Table 5: Independent samples t-test on the data set, with the confidence interval set at 95%. The groups differ significantly (p<0.001, two-tailed; significance threshold p<0.05).

| Metric | Variance assumption | Levene's F | Levene's Sig. | t | df | Sig. (2-tailed) | Mean difference | Std. error difference | 95% CI lower | 95% CI upper |
|---|---|---|---|---|---|---|---|---|---|---|
| Accuracy | Equal variances assumed | 0.606 | 0.004 | 13.25 | 18 | <.001 | 20.73 | 1.56 | 17.44 | 24.01 |
| Accuracy | Equal variances not assumed | | | 13.25 | 17.66 | <.001 | 20.73 | 1.56 | 17.43 | 24.02 |
| Loss | Equal variances assumed | 1.150 | 0.298 | -12.86 | 18 | <.001 | -30.10 | 2.34 | -35.02 | -25.19 |
| Loss | Equal variances not assumed | | | -12.86 | 13.51 | <.001 | -30.10 | 2.34 | -35.14 | -25.07 |

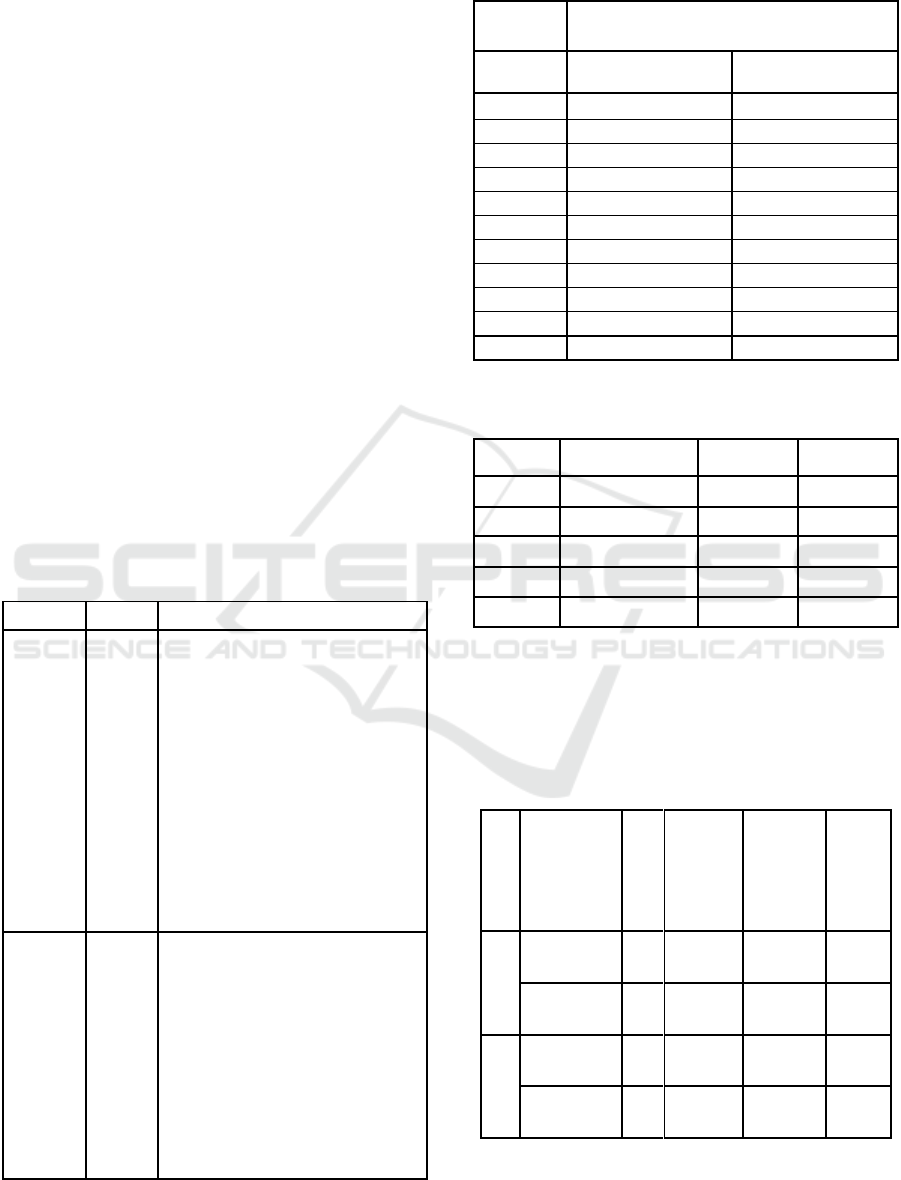

Figure 1: Conceptual representation of XceptionNet Deep learning model’s architecture.

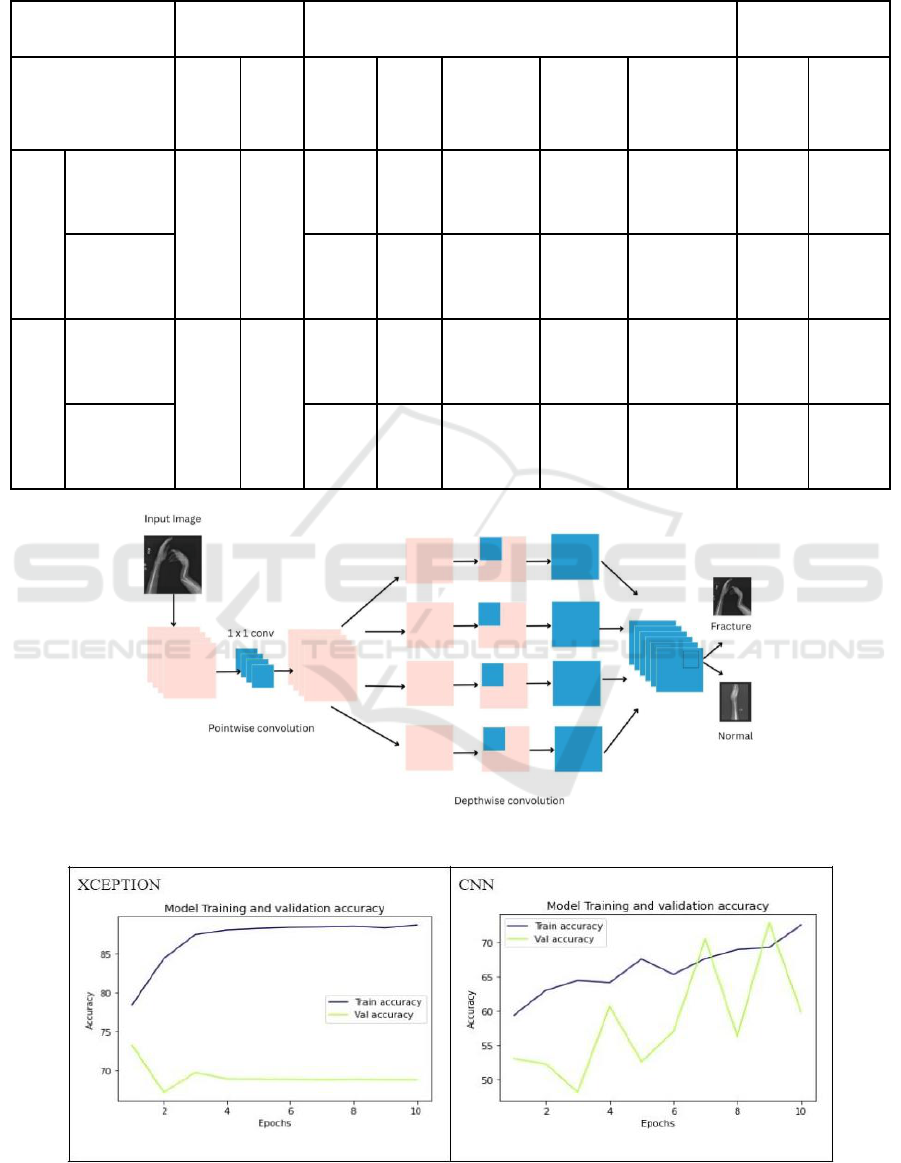

Figure 2: Novel XceptionNet and CNN - training accuracy as well as validation accuracy.


Figure 3: Novel XceptionNet and CNN - training loss and validation loss.

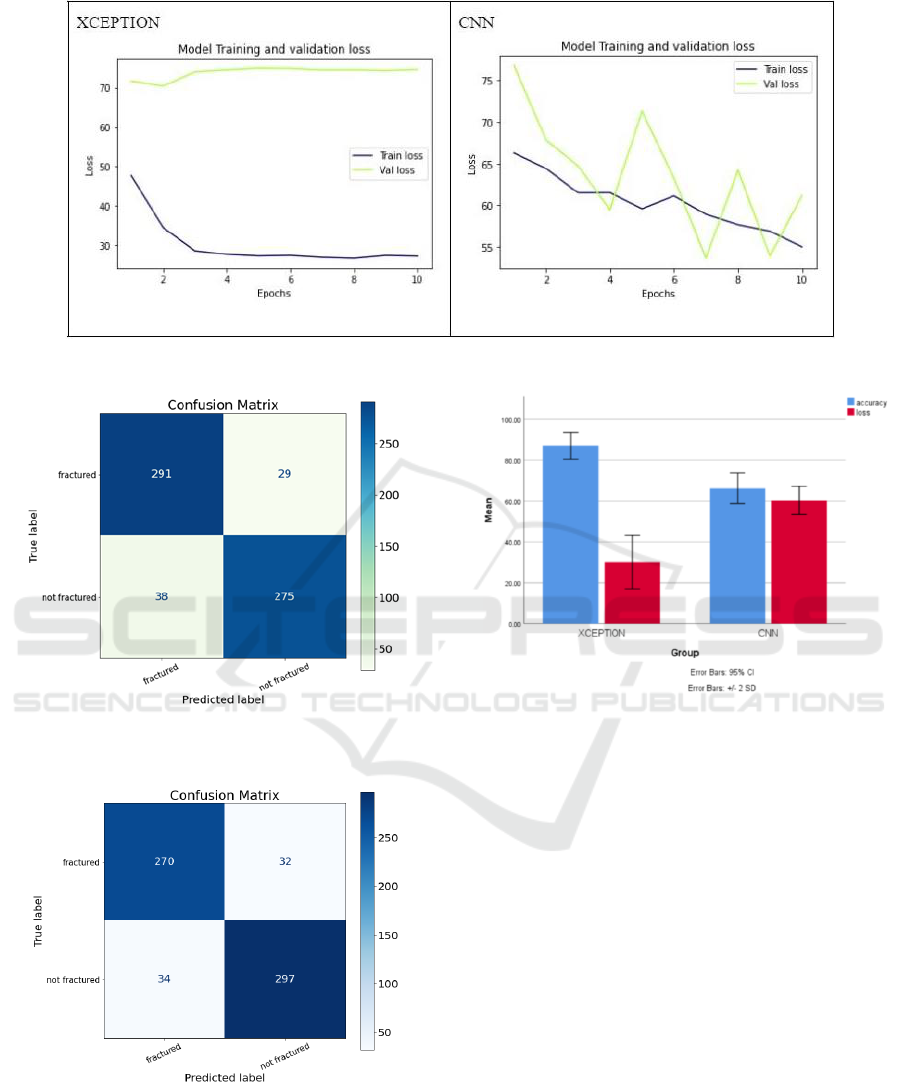

Figure 4: Confusion matrix (CM) for the XceptionNet deep learning model.

Figure 5: Confusion matrix (CM) for the CNN deep learning model.

Figure 6: Bar graph comparing the mean accuracy of the novel XceptionNet and convolutional neural network (CNN) deep learning models for bone fracture detection on the x-ray dataset. XceptionNet achieves the higher mean accuracy. X axis: XceptionNet vs. convolutional neural network; Y axis: mean detection accuracy. Error bars: ±2 SD; confidence interval: 95%.

3 RESULTS

The experimental results are based on the performance of the novel XceptionNet and CNN DL models, both measured in terms of accuracy. XceptionNet achieves an accuracy of 88.74%, whereas the CNN achieves 72.50%. Table 1 lists the pseudocode steps performed for the XceptionNet and CNN models. Table 2 compares the accuracy of the two deep learning models, the novel XceptionNet and convolutional neural networks, across the 10 iterations. Table 3 shows the performance metrics of the XceptionNet and convolutional neural network models.

The precision, F1 score, specificity, and sensitivity values obtained for XceptionNet are 88.45%, 89.68%, 87.86%, and 90.94%, respectively. Table 4 summarizes the statistics of the independent samples for the CNN and XceptionNet deep learning models. The novel XceptionNet model has a mean accuracy of 86.94%, compared with 66.21% for the CNN model. The standard deviation is 3.24 for XceptionNet and 3.73 for CNN, and the standard error of the mean is 1.02 for XceptionNet and 1.17 for CNN. Table 5 presents an independent samples t-test comparing the novel XceptionNet and CNN (convolutional neural network) at a 95% confidence interval (CI). The two-tailed significance value for accuracy is p<0.001 (below the p<0.05 threshold), so the difference between the groups is statistically significant.

Fig. 1 shows a conceptual representation of the XceptionNet deep learning model's architecture. Fig. 2 shows the training and validation accuracy of the novel XceptionNet and CNN deep learning models, with epochs on the x-axis and accuracy on the y-axis. Fig. 3 depicts the training and validation loss of the XceptionNet and CNN (convolutional neural network) deep learning models. Fig. 4 and Fig. 5 show the confusion matrices for the XceptionNet and convolutional neural network models. Fig. 6 is a vertical bar chart of the mean accuracy of the novel XceptionNet and CNN models for identifying bone fractures in the upper extremity regions of the hands using the x-ray image dataset; XceptionNet achieves the higher mean accuracy. X axis: XceptionNet vs. convolutional neural network (CNN); Y axis: mean detection accuracy; error bars: ±2 SD.

4 DISCUSSION

The novel XceptionNet and convolutional neural network (CNN) approaches were applied and compared with the aim of improving the accuracy of bone fracture detection in the upper extremity regions of the hands. The observations revealed that the novel XceptionNet deep learning model performed substantially better than the CNN technique at detecting bone fractures in the x-ray dataset: XceptionNet reached an accuracy of 88.74% for recognizing fractures in the upper extremity regions, compared with 72.50% for the CNN.

In one study, a model combining a block attention module, a convolutional neural network (CNN), Faster R-CNN, and a feature pyramid network (FPN) for classifying fractured and healthy bone reported recall, precision, accuracy, sensitivity, and specificity values of 0.789, 0.894, 0.853, 0.789, and an AUC of 0.920 (T.-H. Yang et al. 2022). In another study, "You Only Look Once" (YOLO) models served as the algorithms for skeletal segmentation and lesion detection, and an accuracy of 90% was reported for bone lesion diagnosis (Faghani et al. 2023). L. Yang et al. (2022) implemented a cascaded deep learning architecture in which a first convolutional neural network (CNN) located the fracture region of interest (ROI) and a second CNN identified and segmented the different types of fragments within the ROI. For segmenting CT images, this model produced an average Dice coefficient of 90.5% and an average mean accuracy of 89.40% for identifying individual fragments. A two-stage classification technique based on the MobileNet network achieved a 73.42% accuracy for bone fracture prediction (El-Saadawy et al. 2020). Another study reported an accuracy of 68.4% for the AC-BiFPN detection method with a modified Ada-ResNeSt backbone network (Lu, Wang, and Wang 2022).

Sometimes the error on the training set and the error on the test set differ greatly. This overfitting occurs in complex models that have too many parameters relative to the number of observations. Future research can therefore assess a model's effectiveness based on its performance on a held-out test set rather than on the training data it was fitted to.
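One common way to act on this is to monitor the validation loss during training and keep the weights from the epoch where it was lowest. The sketch below shows a patience-based early-stopping rule in plain Python; the function name, the loss values, and the patience of 3 epochs are hypothetical. In Keras, the same idea is provided by the `tf.keras.callbacks.EarlyStopping` callback (with `monitor="val_loss"`, `patience`, and `restore_best_weights=True`).

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 3,
                         min_delta: float = 0.0) -> tuple[int, int]:
    """Return (best_epoch, stop_epoch) for patience-based early stopping.

    Training stops once the validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs. Epochs are 0-indexed; if the
    rule never triggers, the last epoch is reported as the stop epoch.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


if __name__ == "__main__":
    # Hypothetical per-epoch losses: training keeps falling while validation turns upward.
    train_loss = [0.68, 0.50, 0.40, 0.32, 0.25, 0.19, 0.14, 0.10]
    val_loss = [0.70, 0.55, 0.48, 0.46, 0.47, 0.49, 0.52, 0.56]
    best, stop = early_stopping_epoch(val_loss, patience=3)
    print(best, stop)  # 3 6
    print(f"train/validation gap at stop: {val_loss[stop] - train_loss[stop]:.2f}")
```

The widening gap between the training and validation curves is the overfitting signal described above; restoring the weights from the best epoch keeps the model that generalized best to unseen data.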

5 CONCLUSION

In this paper, computer-aided diagnostic methods for X-ray image-based bone fracture detection in the upper extremities were demonstrated. The dataset was first used to train the XceptionNet deep learning model, after which the fracture's position is determined. The models were tested on a set of images, and the results were evaluated according to the performance metrics. The analysis showed satisfactory results: the XceptionNet technique achieved a high accuracy of 88.74%, compared with 72.50% for convolutional neural networks (CNN).


REFERENCES

Abbas, Waseem, Syed M. Adnan, M. Arshad Javid, Fahad Majeed, Talha Ahsan, Haroon Zeb, and Syed Saqlian Hassan. (2020). "Lower Leg Bone Fracture Detection and Classification Using Faster RCNN for X-Rays Images." 2020 IEEE 23rd International Multitopic Conference (INMIC). https://doi.org/10.1109/inmic50486.2020.9318052.

Agarwal, Shikha, Manish Gupta, Jitendra Agrawal, and Dac-Nhuong Le. (2022). Swarm Intelligence and Machine Learning: Applications in Healthcare. CRC Press.

Bagaria, Rinisha, Sulochana Wadhwani, and A. K. Wadhwani. (2022). "Bone Fracture Detection in X-Ray Images Using Convolutional Neural Network." SCRS Conference Proceedings on Intelligent Systems, April, 459–66.

El-Saadawy, Hadeer, Manal Tantawi, Howida A. Shedeed, and Mohamed F. Tolba. (2020). "A Two-Stage Method for Bone X-Rays Abnormality Detection Using MobileNet Network." Advances in Intelligent Systems and Computing. https://doi.org/10.1007/978-3-030-44289-7_35.

Faghani, Shahriar, Francis I. Baffour, Michael D. Ringler, Matthew Hamilton-Cave, Pouria Rouzrokh, Mana Moassefi, Bardia Khosravi, and Bradley J. Erickson. (2023). "A Deep Learning Algorithm for Detecting Lytic Bone Lesions of Multiple Myeloma on CT." Skeletal Radiology. https://doi.org/10.1007/s00256-022-04160-z.

Ramkumar, G., R. Thandaiah Prabu, Ngangbam Phalguni Singh, and U. Maheswaran. (2021). "Experimental Analysis of Brain Tumor Detection System Using Machine Learning Approach." Materials Today: Proceedings. ISSN 2214-7853. https://doi.org/10.1016/j.matpr.2021.01.246.

Kumar M, M., V. L. Sivakumar, S. Devi V, N. Nagabhooshanam, and S. Thanappan. (2022). "Investigation on Durability Behavior of Fiber Reinforced Concrete with Steel Slag/Bacteria beneath Diverse Exposure Conditions." Advances in Materials Science and Engineering, 2022.

Lee, Gobert, and Hiroshi Fujita. (2020). Deep Learning in Medical Image Analysis: Challenges and Applications. Springer Nature.

Li, Xuechen, Linlin Shen, Xinpeng Xie, Shiyun Huang, Zhien Xie, Xian Hong, and Juan Yu. (2020). "Multi-Resolution Convolutional Networks for Chest X-Ray Radiograph Based Lung Nodule Detection." Artificial Intelligence in Medicine 103 (March): 101744.

Lu, Shuzhen, Shengsheng Wang, and Guangyao Wang. (2022). "Automated Universal Fractures Detection in X-Ray Images Based on Deep Learning Approach." Multimedia Tools and Applications 81 (30): 44487–503.

Pranata, Yoga Dwi, Kuan-Chung Wang, Jia-Ching Wang, Irwansyah Idram, Jiing-Yih Lai, Jia-Wei Liu, and I-Hui Hsieh. (2019). "Deep Learning and SURF for Automated Classification and Detection of Calcaneus Fractures in CT Images." Computer Methods and Programs in Biomedicine 171 (April): 27–37.

Raisuddin, Abu Mohammed, Elias Vaattovaara, Mika Nevalainen, Marko Nikki, Elina Järvenpää, Kaisa Makkonen, Pekka Pinola, et al. (2021). "Critical Evaluation of Deep Neural Networks for Wrist Fracture Detection." Scientific Reports 11 (1): 6006.

S. G and R. G. (2022). "Automated Breast Cancer Classification Based on Modified Deep Learning Convolutional Neural Network Following Dual Segmentation." 3rd International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 1562–1569. https://doi.org/10.1109/ICESC54411.2022.9885299.

Sa, Abdelaziz Ismael, A. Mohammed, and H. Hefny. (2020). "An Enhanced Deep Learning Approach for Brain Cancer MRI Images Classification Using Residual Networks." Artificial Intelligence in Medicine 102 (January). https://doi.org/10.1016/j.artmed.2019.101779.

Sairam, Vuppala Adithya. (2022). "Bone Fracture Detection Using X-Rays." https://www.kaggle.com/vuppalaadithyasairam/bone-fracture-detection-using-xrays.

"SPSS Software." n.d. Accessed March 31, 2021. https://www.ibm.com/analytics/spss-statistics-software.

Yang, Lv, Shan Gao, Pengfei Li, Jiancheng Shi, and Fang Zhou. (2022). "Recognition and Segmentation of Individual Bone Fragments with a Deep Learning Approach in CT Scans of Complex Intertrochanteric Fractures: A Retrospective Study." Journal of Digital Imaging 35 (6): 1681–89.

Yang, Sijie, Fei Zhu, Xinghong Ling, Quan Liu, and Peiyao Zhao. (2021). "Intelligent Health Care: Applications of Deep Learning in Computational Medicine." Frontiers in Genetics. https://doi.org/10.3389/fgene.2021.607471.

Yang, Tai-Hua, Ming-Huwi Horng, Rong-Shiang Li, and Yung-Nien Sun. (2022). "Scaphoid Fracture Detection by Using Convolutional Neural Network." Diagnostics (Basel, Switzerland) 12 (4). https://doi.org/10.3390/diagnostics12040895.

Yoon, Gil Ho, Yeon-Jun Woo, Seong-Gyu Sim, Dong-Yoon Kim, and Se Jin Hwang. (2021). "Investigation of Bone Fracture Diagnosis System Using Transverse Vibration Response." Proceedings of the Institution of Mechanical Engineers. Part H, Journal of Engineering in Medicine 235 (5): 597–611.
