Real-Time 3D Reconstruction Based on Single-Photon LiDAR for

Underwater Environments

Yifei Chen

Department of Mechanical Engineering, Tsinghua University, Beijing, 100084, China

Keywords: Real-Time 3D Reconstruction, LiDAR, RT3D.

Abstract: This paper compares three real-time 3D reconstruction methods based on single-photon LiDAR in underwater environments: the traditional cross-correlation method; the RT3D method, which combines highly scalable computational techniques from computer graphics with statistical models; and the Ensemble Method, which combines surface detection and distance estimation. In the experimental setup, the target was placed about three meters from the transceiver system, and both were submerged in a 1.8-meter-deep water tank. The transceiver used a picosecond pulsed laser source with a repetition rate of 20 MHz and a center wavelength of 532 nm. The results indicate that in static scenes, as the turbidity of the medium increases, the cross-correlation method exhibits the largest increase in noise and loss of surface detail; the RT3D method shows a smaller noise increase but more pronounced surface loss; and the Ensemble Method performs best overall. In dynamic scenes, the cross-correlation method was much faster than the other two, with the Ensemble Method marginally slower than RT3D, and the processing times of all three methods decreased as the attenuation length increased. Beyond 5.5 attenuation lengths (AL), none of the methods could perform 3D reconstruction, but both RT3D and the Ensemble Method could still produce clusters of points.

1 INTRODUCTION

Real-time 3D reconstruction is a popular area of study within computer vision and is used in robots and other automated equipment. 3D reconstruction is the process of recreating aspects of the natural world in a virtual environment (Ingale 2021). Essentially,

it involves capturing the three-dimensional spatial

coordinates of every point on the surfaces of objects

in real-world scenes. These captured points,

embedded with spatial coordinate information, are

then depicted within a unified 3D coordinate system

using computer simulation software. This process

accurately restores the surface contours of all objects

within the target environment. When 3D reconstruction is required to run in real time, users can interact with the 3D scene as it is captured, which opens up applications such as real-time medical imaging, critical parameter measurement of high-temperature metal components, real-time AR interaction, and mobile robot mapping and navigation (Wollborn et al 2023, Wen et al 2021 & Wang et al 2023). Depending on how depth information is acquired, real-time 3D reconstruction techniques can be divided into two main types: active and passive reconstruction. Active 3D reconstruction uses LiDAR, ToF depth cameras, or structured-light devices such as Microsoft's Kinect camera, which send a predefined signal to the target object and then receive and process the returned signal to obtain the object's depth information. Passive real-time 3D reconstruction typically requires multiple images captured by cameras. The images are then processed to extract object feature information, which is used to compute the object's three-dimensional coordinates.

In the exploration and utilization of marine resources, real-time 3D reconstruction offers valuable insight for understanding and making use of the underwater environment. Underwater 3D maps can help monitor coral reefs, record the shapes of cenotes, and support underwater rescue operations (Williams and Mahon 2004 & Gary et al 2008). However, while real-time 3D reconstruction

technology is rapidly advancing due to the influence

Chen, Y.

Real-Time 3D Reconstruction Based on Single-Photon LiDAR for Underwater Environments.

DOI: 10.5220/0012816800003885

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Data Analysis and Machine Learning (DAML 2023), pages 184-191

ISBN: 978-989-758-705-4

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

of autonomous driving and mobile robot research, underwater 3D reconstruction remains a challenging research problem. Water, unlike air, has a high electrical conductivity and dielectric constant, so GPS signals are difficult to receive underwater. Additionally, the scattering and absorption properties of light in water differ from those in air, and suspended particles in water, whether of human or natural origin, further attenuate light (Menna et al 2018). It is therefore challenging for cameras to extract sufficient information for subsequent feature point detection, matching, camera motion recovery, and depth and disparity estimation of the 3D scene. The physical properties of water also limit the use of light detection and ranging (LiDAR) systems, a fast and reliable 3D reconstruction method on land, by significantly reducing the effective detection range. To minimize the interference of the underwater environment and to improve the real-time performance, accuracy, and robustness of underwater 3D reconstruction, more effective sensors and data processing methods are required.

To perform 3D reconstruction in water and other complex media, researchers have developed single-photon LiDAR imaging sensors in recent years. These sensors offer high surface delineation capability and optical sensitivity. The systems are typically based on the time-of-flight (ToF) method and time-correlated single-photon counting (TCSPC) technology. Single-photon LiDAR uses lasers with short pulse widths and high repetition rates, combined with highly sensitive detectors, to detect and count returned single photons. Using TCSPC, single-photon LiDAR is capable of high-resolution 3D imaging in scattering underwater environments (Maccarone et al 2015). Despite these advantages, capturing enough photon events to establish accurate parameter estimates may require a long acquisition time. To increase the speed of acquiring scene depth data and simplify the optical configuration, researchers have turned to single-photon avalanche diode (SPAD) detector arrays for active imaging. A study by A. Maccarone et al. used a complementary metal-oxide-semiconductor (CMOS) SPAD detector array combined with TCSPC timing electronics, reaching visualization frame rates of 10 Hz in scattering environments at distances of up to 6.7 attenuation lengths (AL) between the moving target and the system (Maccarone et al 2019). However, these studies did not achieve real-time imaging. One of the main limitations lies in data processing: the high data rate of the SPAD array produces larger data volumes, which require longer processing times to estimate the intensity and distance of targets and thus reduce the potential for real-time reconstruction.

To further enhance the real-time capabilities of single-photon 3D reconstruction, researchers have developed custom computational models implemented on graphics processing units (GPUs). J. Tachella et al. introduced a real-time 3D algorithm (RT3D) that uses previously presented point cloud denoising tools in a plug-and-play framework to build fast and robust distance estimation for single-photon LiDAR (Tachella et al 2019). RT3D achieved video rates of 50 Hz with processing times as low as 20 ms. K. Drummond et al. studied the surface detection problem jointly with the depth estimation problem for single-photon LiDAR data, proposing a 3D reconstruction algorithm based on combined surface detection and distance estimation (Drummond et al 2021). S. Plosz et al. introduced a highly robust, fast single-photon LiDAR 3D reconstruction algorithm and applied it to a pre-collected underwater dataset, achieving processing times of 10 milliseconds in environments of up to 4.6 AL (Plosz et al 2023). However, these studies did not combine the acquisition device and the GPU into a complete underwater 3D reconstruction imaging system.

This paper presents the complete underwater 3D reconstruction system proposed by A. Maccarone et al., which is based on a Si-CMOS SPAD detector array and achieves real-time imaging with a GPU-equipped workstation (Maccarone et al 2023). Within this experimental framework, it further compares the performance of the RT3D algorithm with the traditional cross-correlation method and with the recently developed method of Drummond et al., which combines surface detection and distance estimation.

2 METHODS

This section first introduces the fundamental principles of single-photon LiDAR, then the observation model shared by the three methods, and finally the principles of each method: the traditional cross-correlation method, the RT3D algorithm, and the recently developed algorithm that combines surface detection with distance estimation (Ensemble Method). To ensure a fair comparison, the RT3D method is restricted to reconstructing at most one surface per pixel.

2.1 Fundamental Principles of

Single-Photon Lidar

The detection of a single photon by a single-photon detector is not a deterministic event but a probabilistic one. The associated probability is termed the photon detection efficiency (PDE), defined as the likelihood of triggering the detector once a photon enters it. In practical engineering applications, for photons of a fixed wavelength, the PDE is commonly denoted as $\eta$.

According to the research on laser echo characteristics by Goodman, from a statistical perspective, the number of echo photons reflected from a laser pulse by a specular target follows a Poisson distribution (Goodman 1965). For rough targets, however, the echo photon events follow a negative binomial distribution. That is, for specular targets, within the sampling time,

$$P(k) = \int_0^{\infty} \frac{1}{k!}\left(\frac{\eta E}{h\nu}\right)^{k} \exp\left(-\frac{\eta E}{h\nu}\right) p(E)\, dE, \tag{1}$$

where $E$ represents the energy carried by the echo light signal reflected from the specular target, $k$ denotes the number of timing events triggered when the external signal is input to TCSPC, $p(E)$ signifies the probability density function of the detector receiving the energy of the echo light signal, $\nu$ is the frequency of the echo light signal, and $h$ is the Planck constant.

For rough targets, within the sampling time,

$$P(k) = \frac{\Gamma(k + M)}{k!\,\Gamma(M)} \left(1 + \frac{M}{\bar{N}}\right)^{-k} \left(1 + \frac{\bar{N}}{M}\right)^{-M}, \tag{2}$$

where $P(k)$ represents the conditional probability of generating $k$ timing events, $\Gamma(\cdot)$ is the gamma function, $M$ denotes the degrees of freedom of the speckle present in the echo light signal, and $\bar{N}$ signifies the average number of photoelectrons within the sampling time. For photon-counting LiDAR employing TCSPC, $M \gg \bar{N}$, so the expression for non-specular targets also converges to the form of a Poisson distribution.

In summary, the Poisson response model of the single-photon detector to photons within the time interval $(t_1, t_2)$ is

$$P(k; t_1, t_2) = \frac{\bar{N}^{k}}{k!} \exp\left(-\bar{N}\right), \tag{3}$$

$$\bar{N} = \frac{\eta E}{h\nu}, \tag{4}$$

where $E$ represents the energy of the echo signal within the interval and $h\nu$ denotes a single photon's energy.
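The convergence of the rough-target (negative binomial) statistics to the Poisson form can be checked numerically. The following is a minimal sketch, not code from the paper: the function names, the symbols `mean` (average photoelectron count) and `m` (speckle degrees of freedom), and the example values are all illustrative assumptions.

```python
import math

PLANCK_J_S = 6.62607015e-34      # Planck constant (J s)
LIGHT_SPEED_M_S = 299792458.0    # speed of light in vacuum (m/s)


def poisson_pmf(k, mean):
    """Poisson probability of k timing events for a given mean count."""
    return math.exp(k * math.log(mean) - mean - math.lgamma(k + 1))


def negative_binomial_pmf(k, mean, m):
    """Negative binomial probability of k events with m speckle degrees of freedom."""
    log_p = (math.lgamma(k + m) - math.lgamma(k + 1) - math.lgamma(m)
             - k * math.log1p(m / mean) - m * math.log1p(mean / m))
    return math.exp(log_p)


def mean_photoelectrons(energy_j, wavelength_m, pde):
    """Average photoelectron count: PDE times echo energy over single-photon energy."""
    photon_energy = PLANCK_J_S * LIGHT_SPEED_M_S / wavelength_m
    return pde * energy_j / photon_energy


if __name__ == "__main__":
    mean = 0.05  # assumed low return level, typical of a photon-starved regime
    for m in (1, 10, 1000):
        gap = max(abs(negative_binomial_pmf(k, mean, m) - poisson_pmf(k, mean))
                  for k in range(6))
        print(f"M = {m:5d}: largest gap to Poisson = {gap:.2e}")
```

The printed gap shrinks as `m` grows relative to `mean`, which is the regime the text describes for TCSPC-based photon counting.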

2.2 Observation Model

This paper adopts the observation model of Legros et al. (Legros et al 2020). Consider a series of temporal frames with $N$ pixels each, where the data associated with each pixel in a frame consist of a set of reflected photon arrival times (ToFs). It is assumed that the scene is static within each frame and that the detection events caused by dark counts and other background sources are uniformly distributed. For a given pixel, within a temporal window of width $T$, the photon ToFs are $y = \{y_k\}_{k=1}^{K}$, where $y_k \in (0, T)$. The probability density function of a single ToF can then be written as

$$f(y_k \mid d, w) = w\, h_0\!\left(y_k - \frac{2d}{c}\right) + (1 - w)\, \mathcal{U}_{(0,T)}(y_k), \tag{5}$$

where $d$ is the distance between the observed surface and the imaging system, $c$ represents the speed of light in the medium, and $w$ denotes the probability that a recorded ToF value belongs to a photon that was emitted by the laser source, reflected off the target, and returned to the detector. $w$ can alternatively be understood in terms of the pixel's signal-to-background ratio (SBR), which is influenced by the background level and the target reflectance. $h_0(\cdot)$ is the normalized instrumental response function (IRF). The background distribution is denoted $\mathcal{U}_{(0,T)}(\cdot)$, the uniform distribution on $(0, T)$. Assuming the ToFs are mutually independent given $d$ and $w$, the joint probability distribution is

$$f(y \mid d, w) = \prod_{k=1}^{K} f(y_k \mid d, w). \tag{6}$$
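The signal-plus-uniform-background mixture described above can be evaluated directly. The sketch below is illustrative only: it assumes a Gaussian instrumental response (the real IRF is measured), uses `w` for the signal probability, `d` for distance, `T` for the window width, and takes an approximate speed of light in water of 0.225 m/ns. None of these values come from the paper.

```python
import math


def gaussian_irf(t, sigma):
    """Assumed Gaussian stand-in for the normalized instrumental response."""
    return math.exp(-0.5 * (t / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def photon_density(y, d, w, window, speed, sigma):
    """Mixture density of one ToF: signal peak at 2d/speed plus uniform background."""
    signal = gaussian_irf(y - 2.0 * d / speed, sigma)
    background = 1.0 / window if 0.0 <= y <= window else 0.0
    return w * signal + (1.0 - w) * background


def log_likelihood(tofs, d, w, window, speed, sigma):
    """Joint log-likelihood of independent ToFs; requires 0 <= w < 1 so the density stays positive."""
    return sum(math.log(photon_density(y, d, w, window, speed, sigma)) for y in tofs)


if __name__ == "__main__":
    speed_m_per_ns = 0.225   # assumed speed of light in water
    window_ns = 50.0         # assumed window, one period of a 20 MHz source
    tofs_ns = [26.5, 26.7, 26.6, 10.0, 40.0]  # made-up arrival times
    for depth_m in (2.5, 3.0, 3.5):
        value = log_likelihood(tofs_ns, depth_m, 0.5, window_ns, speed_m_per_ns, 0.2)
        print(f"d = {depth_m:.1f} m: log-likelihood = {value:.2f}")
```

With these made-up arrival times, the log-likelihood is largest for the candidate distance whose round-trip time matches the photon cluster, while the isolated photons are absorbed by the background term.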

2.3 Cross-Correlation

Cross-correlation is a common method used in

LiDAR ranging. The normalized waveform template

is denoted as

, and the measured waveform

signal is

. The cross-correlation value of

and

at the time slot k is

.

Initially, the shorter sequence

is zero-padded

on the left starting point to make the new sequence

have the same length as BB. The expression

for cross-correlation then becomes

, (7)

where represents the number of time slots

occupied by the measured waveform signal

.

The time slot position corresponding to the

maximum value of the cross-correlation

is

the approximate location

of the target flight time ,

(8)

To obtain a more refined distance , this paper

calculates the difference between the centroid

of

the waveform

in the interval

and the centroid

of the normalized pulse

template

in the interval

for

the flight time values,

(9)

where

is the Full Width at Half Maximum

(FWHM) pulse width of the echo pulse.

The final distance estimation for the target,

denoted as , can be given by:

, (10)

where represents the speed of light.
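The cross-correlation procedure above (correlate, locate the peak, refine with a centroid difference, convert to distance) can be sketched as follows. This is an illustrative reading under stated assumptions, not the paper's implementation: the centroid window is taken to be one template length starting at the peak lag, whereas the paper ties its interval to the FWHM pulse width, and all names and numbers are hypothetical.

```python
def cross_correlation(template, waveform):
    """C[k] = sum_i template[i] * waveform[k + i]; samples past the end count as zero."""
    n, m = len(waveform), len(template)
    values = []
    for k in range(n):
        total = 0.0
        for i in range(m):
            if k + i < n:
                total += template[i] * waveform[k + i]
        values.append(total)
    return values


def centroid(samples, offset=0):
    """Intensity-weighted mean bin index, or None if the samples sum to zero."""
    total = sum(samples)
    if total == 0:
        return None
    return sum((offset + i) * s for i, s in enumerate(samples)) / total


def estimate_distance(template, waveform, bin_width, speed):
    """Return (peak lag, refined shift in bins, distance) from one histogram."""
    corr = cross_correlation(template, waveform)
    k_max = max(range(len(corr)), key=corr.__getitem__)
    window = waveform[k_max:k_max + len(template)]
    wave_centroid = centroid(window, offset=k_max)
    template_centroid = centroid(template)
    if wave_centroid is None or template_centroid is None:
        shift = float(k_max)  # nothing to refine on an empty window
    else:
        shift = wave_centroid - template_centroid
    time_of_flight = shift * bin_width
    return k_max, shift, speed * time_of_flight / 2.0  # halve for the round trip


if __name__ == "__main__":
    template = [0.25, 0.5, 0.25]
    histogram = [0, 0, 0, 0, 2, 4, 3, 0, 0, 0]  # made-up photon counts per bin
    print(estimate_distance(template, histogram, bin_width=0.1, speed=0.225))
```

The centroid step is what provides sub-bin resolution: an asymmetric return shifts the estimate away from the integer peak lag.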

3 RT3D

J. Tachella et al. introduced a computational framework, referred to as the RT3D algorithm, which combines highly scalable computational techniques from the computer graphics field with statistical models. The algorithm uses proximal alternating linearized minimization (PALM) as its general structure, computing proximal gradient steps on the blocks of background variables, depth variables $t$, and intensity variables. Every update starts with a gradient step with respect to the log-likelihood term, which takes a form similar to equation (6), followed by a denoising step. Specifically, using spheres as local primitives, the algebraic point set surfaces (APSS) algorithm fits a smooth continuous surface to the point set described by the depth variable $t$ in real-world coordinates. The intensity variable is denoised in real-world coordinates using a manifold metric defined by the point cloud. All points are processed in parallel, considering local correlations only, and points with intensities below a given threshold are removed after the denoising step. The proximal operator for the background variable is replaced by a denoising technique based on the fast Fourier transform.

3.1 Ensemble Method

The Ensemble Method, as described by Drummond et al., is a Bayesian approach that performs distance estimation and surface detection simultaneously while quickly delivering conservative uncertainty estimates. The method relies on the discretization of $w$, which is allowed to take only a finite number of values. Thanks to this discretization, the surface detection problem can be reframed as a hypothesis testing problem, and the distance estimation problem is reformulated as an ensemble estimation problem; hence the name Ensemble Method. The approach yields not only a 3D map and a detection map showing the presence or absence of a surface, but also an uncertainty map that gives confidence indications for the estimated distance, intensity, and background profiles. Here, this paper uses the

algorithm with default parameters, such as a value of

for and an existence measurement

probability of

.
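To illustrate how discretizing the signal probability turns surface detection into a hypothesis test, the sketch below compares a "no surface" hypothesis (uniform background only) against a "surface present" hypothesis averaged over a grid of signal probabilities and candidate distances. It is a simplified toy under stated assumptions (Gaussian response, uniform priors, made-up grids and arrival times) and should not be read as the algorithm of Drummond et al.

```python
import math


def gaussian_irf(t, sigma):
    """Assumed Gaussian stand-in for the normalized instrumental response."""
    return math.exp(-0.5 * (t / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def log_mean_exp(values):
    """Numerically stable log of the average of exp(values)."""
    peak = max(values)
    return peak + math.log(sum(math.exp(v - peak) for v in values) / len(values))


def log_evidence_surface(tofs, w_grid, depth_grid, window, speed, sigma):
    """Log evidence of 'surface present', averaging over uniform grids of w and depth."""
    terms = []
    for w in w_grid:          # each w must lie strictly between 0 and 1
        for d in depth_grid:
            terms.append(sum(
                math.log(w * gaussian_irf(y - 2.0 * d / speed, sigma) + (1.0 - w) / window)
                for y in tofs))
    return log_mean_exp(terms)


def surface_probability(tofs, w_grid, depth_grid, window, speed, sigma, prior=0.5):
    """Posterior probability that a surface is present in this pixel."""
    log_h0 = -len(tofs) * math.log(window)  # every photon is uniform background
    log_h1 = log_evidence_surface(tofs, w_grid, depth_grid, window, speed, sigma)
    log_odds = math.log(prior / (1.0 - prior)) + log_h1 - log_h0
    log_odds = max(-700.0, min(700.0, log_odds))  # keep exp() within float range
    return 1.0 / (1.0 + math.exp(-log_odds))


if __name__ == "__main__":
    depths_m = [2.0 + 0.01 * i for i in range(201)]
    w_values = [0.1, 0.3, 0.5, 0.7, 0.9]
    clustered = [26.5, 26.7, 26.6, 26.8, 10.0, 40.0]
    scattered = [3.0, 8.0, 12.0, 40.0, 44.0, 48.0]
    for name, tofs in (("clustered", clustered), ("scattered", scattered)):
        p = surface_probability(tofs, w_values, depths_m, 50.0, 0.225, 0.2)
        print(f"{name}: P(surface) = {p:.3f}")
```

A pixel would then be kept or discarded by comparing this probability with a detection threshold, and the same grid of weights could be reused to summarize the spread of the distance estimate.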

4 RESULTS

The targets used in the experiments are shown in Figure 1. Fig. 1(a) shows a brass pipe connector, while Fig. 1(b) and (c) show 3D-printed cylindrical objects mounted on a black anodized aluminum breadboard; these were used for the three-dimensional reconstruction experiments on stationary targets (Maccarone et al 2023). The brass connector has an inner diameter of 15 mm and exhibits intricate three-dimensional features. In contrast, the 3D-printed rectangular objects have heights of 10 mm, 20 mm, and 30 mm, respectively, and possess simpler three-dimensional characteristics. Fig. 1(d) and (e) show the object used for the three-dimensional reconstruction experiments on moving targets. This object is attached to a 2-meter-long rail via a connector, enabling it to move within a three-dimensional space. The experiment involved submerging the transceiver system and the target object in a 1.8-meter-deep water tank. The target was placed at a distance of about 3 meters. A picosecond pulsed laser source was used, with a central wavelength of 532 nm and a repetition rate of 20 MHz. The measurements were conducted using the average optical powers listed in Table 1 (Maccarone et al 2023).

Figure 1: Detection objects.

Table 1: Average optical power.

| Attenuation lengths | Concentration of scattering agent | Average optical power |
| --- | --- | --- |
| 7.5 ± 0.4 | 0.0046% | 52 mW |
| 7.1 ± 0.4 | 0.004% | 47 mW |
| 5.5 ± 0.4 | 0.003% | 32 mW |
| 3.6 ± 0.4 | 0.002% | 22 mW |
| <0.5 | 0% | 0.96 mW |
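As a worked example of what these attenuation lengths imply, one attenuation length is the distance over which optical power falls to 1/e of its initial value. The sketch below converts the tabulated values into an attenuation coefficient and an unscattered transmission fraction. It assumes the attenuation lengths are counted one way over the roughly 3 m stand-off, which is an assumption of this sketch, and the function names are illustrative.

```python
import math


def attenuation_coefficient(attenuation_lengths, distance_m):
    """Attenuation coefficient (1/m) if the given number of AL spans distance_m."""
    return attenuation_lengths / distance_m


def transmitted_fraction(attenuation_lengths, round_trip=True):
    """Fraction of unscattered light surviving exp(-AL) per pass."""
    passes = 2 if round_trip else 1
    return math.exp(-passes * attenuation_lengths)


if __name__ == "__main__":
    stand_off_m = 3.0  # approximate target distance stated in the text
    for al in (3.6, 5.5, 7.1, 7.5):
        alpha = attenuation_coefficient(al, stand_off_m)
        frac = transmitted_fraction(al)
        print(f"{al} AL: alpha = {alpha:.2f} 1/m, round-trip fraction = {frac:.2e}")
```

The steep exponential drop in the round-trip fraction is consistent with the higher optical powers used at larger attenuation lengths in Table 1.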

4.1 Static Scenes

Three-dimensional reconstruction of the brass pipe

connector was conducted in a static scene. When

employing the Ensemble Method, the threshold for

the surface detection existence probability is set at

. Points that have a probability of

posterior existence less than 0.5 are excluded, with

discarded points being treated as black pixels, setting

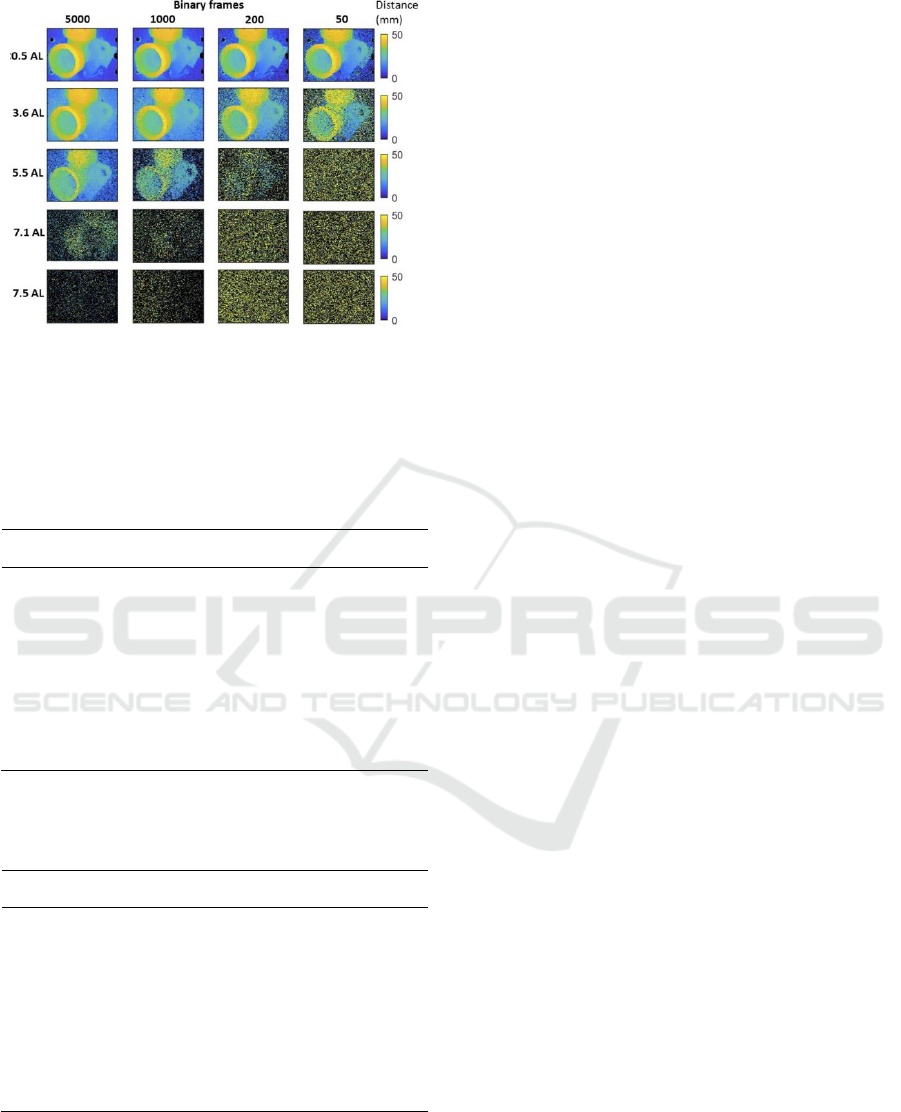

the pixel value to 0. As illustrated in Fig. 2, during the

three-dimensional reconstruction process

(Maccarone et al 2023), we aggregated 5000 binary

frames. All three 3D reconstruction methods

performed well when the attenuation length was less

than 0.5AL. However, as the attenuation length

increased, images obtained from all three methods

exhibited increased noise and surface loss. Among

them, the RT3D method showed less noise increment

but had more pronounced surface loss. This might be

attributed to the smoothing applied to the depth

variable and intensity variable during point cloud

denoising in the RT3D method. The Ensemble

Method exhibited a more significant increase in noise

compared to RT3D, as there is no denoising step in

the Ensemble Method. Overall, the Ensemble Method

showed fewer point losses at an attenuation length of

5.5AL and still maintained some denoising effect at

7.1AL. Hence, in static scenes, the Ensemble Method

offers superior overall performance in three-

dimensional reconstruction.
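The thresholding rule described for the Ensemble Method output, where points with a posterior existence probability below 0.5 become black pixels, can be sketched as a simple mask. The nested-list layout and the function name are assumptions made for illustration.

```python
def mask_depth_map(depth_map, existence_prob, threshold=0.5):
    """Keep a depth value only where the posterior existence probability reaches the threshold."""
    masked = []
    for depth_row, prob_row in zip(depth_map, existence_prob):
        masked.append([depth if prob >= threshold else 0.0
                       for depth, prob in zip(depth_row, prob_row)])
    return masked


if __name__ == "__main__":
    depths_mm = [[12.0, 15.5], [30.2, 8.1]]   # made-up per-pixel depths
    probs = [[0.92, 0.31], [0.50, 0.49]]      # made-up existence probabilities
    print(mask_depth_map(depths_mm, probs))
```

Using 0 as the "no surface" value matches the rendering described in the text, although it cannot then be distinguished from a genuine depth of zero; a separate detection map avoids that ambiguity.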

The Ensemble Method was also used to perform three-dimensional reconstruction of the cylindrical 3D-printed objects. Histograms were gated in time so that the target fell within a time window of 400 bins, and the final image was gated between 0 and 50 mm. The number of binary frames aggregated into each histogram was varied. The reconstruction results are shown in Fig. 3 (Maccarone et al 2023). The color scale represents the distance from the transceiver, measured from an arbitrary origin close to the reference target position. The results show that, with the Ensemble Method, the visual quality of the three-dimensional reconstruction declines as the attenuation length increases or the number of aggregated binary frames decreases, making target features harder to identify.

Figure 2: 3D profiles at different attenuation lengths using

different methods.

Figure 3: Brass pipe connector 3D profiles with different

numbers of binary frames using the ensemble method.

4.2 Dynamic Scenes

Table 2: Average processing time (ms) to obtain a single image frame in the parallel orientation, with standard deviation in brackets.

| Attenuation lengths | Cross-Correlation | RT3D | Ensemble Method |
| --- | --- | --- | --- |
| <0.5 | 1.15(0.11) | 34.53(1.25) | 32.76(1.21) |
| 0.6 | 1.42(0.16) | 35.34(1.20) | 35.78(2.37) |
| 1.4 | 1.27(0.19) | 32.95(1.53) | 33.72(1.92) |
| 2.5 | 1.49(0.24) | 34.53(1.38) | 37.08(2.58) |
| 3.6 | 1.20(0.20) | 31.34(1.39) | 33.05(1.77) |
| 4.8 | 0.97(0.18) | 28.95(1.18) | 29.51(1.32) |
| 5.5 | 0.97(0.13) | 29.53(1.38) | 30.92(0.91) |
| 6.0 | 0.93(0.12) | 28.54(2.28) | 30.12(0.88) |
| 6.6 | 0.93(0.12) | 28.14(1.44) | 29.47(0.81) |

Table 3: Average processing time (ms) to obtain a single image frame in the perpendicular orientation, with standard deviation in brackets.

| Attenuation lengths | Cross-Correlation | RT3D | Ensemble Method |
| --- | --- | --- | --- |
| <0.5 | 1.27(0.19) | 34.67(1.98) | 35.23(2.44) |
| 0.6 | 1.54(0.19) | 36.17(2.80) | 37.19(3.11) |
| 1.4 | 1.35(0.20) | 34.83(1.23) | 34.51(3.15) |
| 2.5 | 1.65(0.27) | 34.86(1.39) | 38.56(2.98) |
| 3.6 | 1.30(0.15) | 32.36(1.74) | 34.22(2.28) |
| 4.8 | 1.02(0.11) | 30.74(1.28) | 30.07(1.19) |
| 5.5 | 0.96(0.09) | 29.55(1.12) | 30.7(0.98) |
| 6.0 | 0.94(0.09) | 28.30(2.77) | 20.54(0.9) |
| 6.6 | 0.91(0.09) | 29.46(4.34) | 29.7(0.9) |

To investigate real-time online three-dimensional reconstruction in dynamic scenes, the T-shaped aluminum connector shown in Fig. 1(d) and (e) was chosen as the target. The "parallel" orientation is the one in which the axis of the connector's connection hole is parallel to the line of sight of the system. The "perpendicular" (vertical) orientation is the one in which the connector is rotated perpendicular to the parallel orientation, so that its entire length is displayed. In the dynamic scene, 50 binary frames were aggregated to generate each histogram. The histograms were gated so that the target fell within a time window of 400 bins. Each pixel was processed in parallel on a GPU to ensure the real-time nature of the 3D reconstruction.
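The aggregation and gating step described above can be sketched as follows. The data layout is an assumption for illustration: each binary frame is a list with one entry per pixel, holding either the time bin of the detected photon or `None` when no photon was detected. The gate start and function name are hypothetical.

```python
def aggregate_frames(frames, num_pixels, gate_start, num_bins=400):
    """Build one gated histogram per pixel from a sequence of binary frames."""
    histograms = [[0] * num_bins for _ in range(num_pixels)]
    for frame in frames:
        for pixel, time_bin in enumerate(frame):
            if time_bin is None:
                continue  # no detection in this pixel for this frame
            index = time_bin - gate_start
            if 0 <= index < num_bins:  # drop detections outside the gate
                histograms[pixel][index] += 1
    return histograms


if __name__ == "__main__":
    # Three made-up frames for a two-pixel sensor; a real run would use 50 frames.
    frames = [[1203, None], [1203, 1210], [None, 1900]]
    hists = aggregate_frames(frames, num_pixels=2, gate_start=1200)
    print(hists[0][3], hists[1][10], sum(hists[1]))
```

Because each pixel's histogram is built independently, this step maps naturally onto the per-pixel GPU parallelism mentioned in the text.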

Experiments were conducted using the cross-correlation, Ensemble, and RT3D methods, with the results presented in Tables 2 and 3 (Maccarone et al 2023). When employing the Ensemble Method, a discrete set of values for $w$ was chosen. The code was constructed in a modular fashion and delegated to three separate GPU cores for processing. Processing first obtained the posterior probability distribution values, then normalized them, and finally derived the results.

Table 2 shows the average processing time for a single image frame in the parallel orientation, and Table 3 shows it for the perpendicular (vertical) orientation. Standard deviations for the different attenuation lengths are given in brackets. As the tables show, the processing time of each method tends to decrease as the attenuation length increases. The cross-correlation method is significantly faster than the other two methods, and the Ensemble Method is in most cases slightly slower than the RT3D method. The decrease in processing time arises because, as the attenuation length grows, photons are scattered more strongly, which reduces the number of returned photons. Consequently, the generated histograms are sparser, leading to reduced processing times.
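To relate these processing times to achievable frame rates, a per-frame time in milliseconds can be converted into an upper bound in hertz. The sketch below uses the clear-water row (<0.5 AL) of Table 2 and assumes, purely for illustration, that processing is the only bottleneck; acquisition and display time are ignored.

```python
def max_frame_rate_hz(processing_time_ms):
    """Upper bound on frame rate if each frame takes processing_time_ms to process."""
    if processing_time_ms <= 0:
        raise ValueError("processing time must be positive")
    return 1000.0 / processing_time_ms


if __name__ == "__main__":
    # Mean processing times (ms) from the <0.5 AL row of Table 2.
    clear_water_ms = {"Cross-Correlation": 1.15, "RT3D": 34.53, "Ensemble Method": 32.76}
    for method, time_ms in clear_water_ms.items():
        print(f"{method}: up to {max_frame_rate_hz(time_ms):.1f} Hz")
```

The same conversion links the 20 ms processing time and 50 Hz video rate quoted for RT3D in the introduction.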

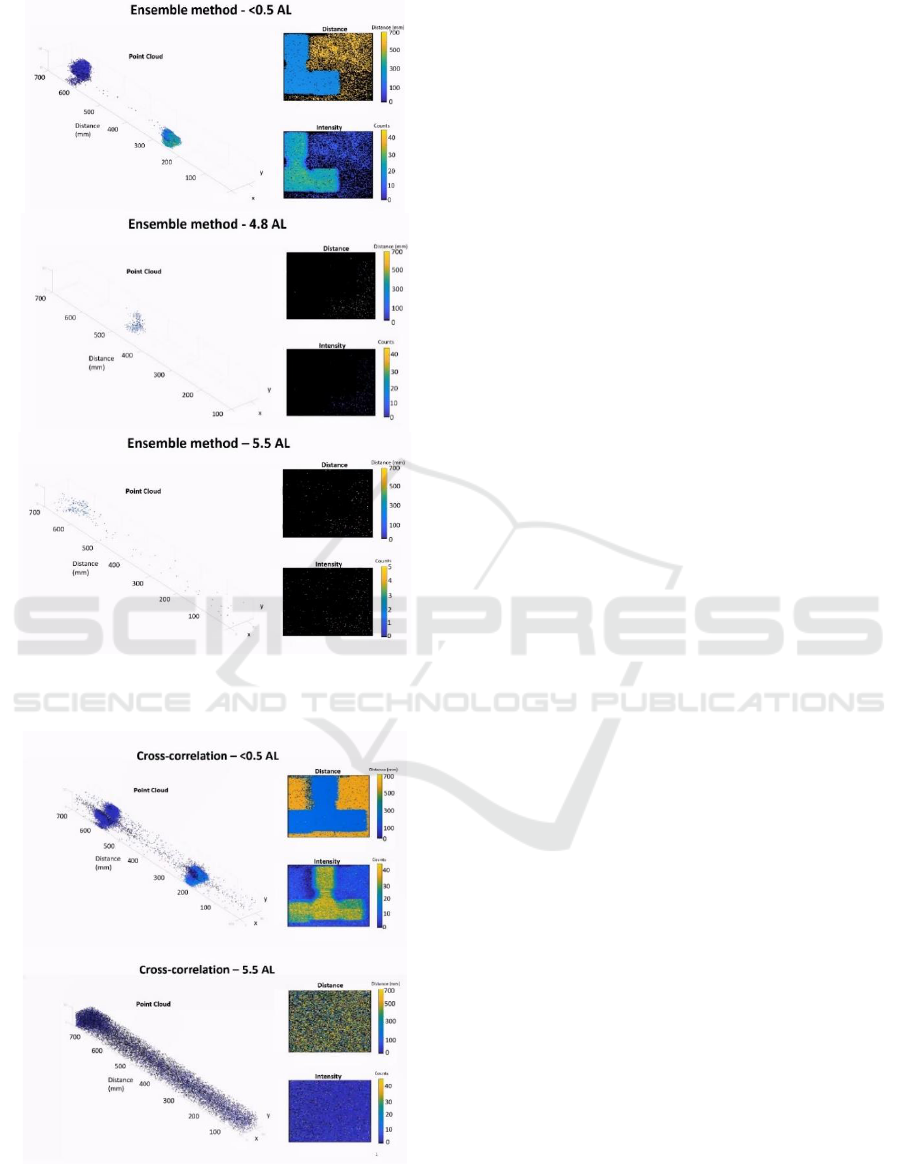

Fig. 4 presents the three-dimensional reconstruction results in clear water and at different attenuation lengths between the target and the system (Maccarone et al 2023). When the attenuation length exceeds 5.5 AL, the target becomes unrecognizable, but clusters of points can still be obtained. This indicates that the RT3D and Ensemble methods cannot perform three-dimensional reconstruction in highly scattering environments, but they can still track targets. In contrast, as shown in Fig. 5 (Maccarone et al 2023), the cross-correlation method can be used in low-scattering environments but is unable to recognize or detect targets in high-scattering environments.

Figure 4: 3D real-time profiles at different attenuation

lengths using ensemble method.

Figure 5: 3D real-time profiles at different attenuation

lengths using cross-correlation method.

5 CONCLUSION

This article reviews underwater real-time 3D reconstruction based on single-photon LiDAR, using the system proposed by A. Maccarone et al. to compare the performance of the Ensemble Method, RT3D, and the traditional cross-correlation method in waters of varying turbidity. The experimental results show that in static scenes, all three 3D reconstruction methods perform well in water with low attenuation lengths, but as attenuation increases, they all exhibit more noise and surface loss. Among them, the cross-correlation method performs the worst; the RT3D method has a smaller noise increase but more obvious surface loss; and the Ensemble Method loses fewer points at 5.5 AL and still suppresses some noise at 7.1 AL, making it the best performer of the three. In dynamic scenes, the cross-correlation method was much faster than the other two, with the Ensemble Method marginally slower than RT3D in most cases. The processing times of all three methods decreased as the attenuation length increased. When the attenuation length exceeds 5.5 AL, none of the methods can perform 3D reconstruction, but the RT3D method and the Ensemble Method can still obtain clusters of points.

REFERENCES

K. Ingale, “Real-time 3D reconstruction techniques applied

in dynamic scenes: A systematic literature review,”

Computer Science Review 39, 100338 (2021).

J. Wollborn, A. Schule, R. D. Sheu, D. C. Shook and C. B.

Nyman, “Real-Time multiplanar reconstruction

imaging using 3-dimensional transesophageal

echocardiography in structural heart interventions,”

Journal of Cardiothoracic and Vascular Anesthesia,

37(4), 570-581 (2023).

X. Wen, J. Wang, G. Zhang and L. Niu, “Three-dimensional

morphology and size measurement of high-

temperature metal components based on machine

vision technology: a review,” Sensors, 21(14), 4680

(2021).

W. Wang, B. Joshi, N. Burgdorfer, K. Batsos, A. Q. Li, P.

Mordohai and I. M. Rekleitis, “Real-Time Dense 3D

Mapping of Underwater Environments”, 2023 IEEE

International Conference on Robotics and

Automation, 5184-5191 (2023).

S. Williams and I. Mahon, “Simultaneous localisation and

mapping on the great barrier reef”, IEEE International

Conference on Robotics and Automation, 2, 1771-

1776 (2004).

M. Gary, N. Fairfield, W. C. Stone, D. Wettergreen, G. Kantor and J. M. Sharp, “3D Mapping and Characterization of Sistema Zacatón from DEPTHX (DEep Phreatic THermal eXplorer),” Sinkholes and the Engineering and Environmental Impacts of Karst, 202-212 (2008).

F. Menna, P. Agrafiotis and A. Georgopoulos, “State of the

art and applications in archaeological underwater 3D

recording and mapping,” Journal of Cultural

Heritage, 33, 231-248 (2018).

A. Maccarone, A. McCarthy, X. Ren, R. E. Warburton, A. M. Wallace, J. Moffat, Y. Petillot and G. S. Buller, “Underwater depth imaging using time-correlated single-photon counting,” Optics Express, 23(26), 33911-33926 (2015).

A. Maccarone, F. M. Della Rocca, A. McCarthy, R. Henderson and G. S. Buller, “Three-dimensional imaging of stationary and moving targets in turbid underwater environments using a single-photon detector array,” Optics Express, 27(20), 28437-28456 (2019).

J. Tachella, Y. Altmann, N. Mellado, A. McCarthy, R.

Tobin, G. S. Buller, J. Y. Tourneret and S.

McLaughlin, “Real-time 3D reconstruction from

single-photon lidar data using plug-and-play point

cloud denoisers,” Nature Communications, 10(1),

4984 (2019).

K. Drummond, S. McLaughlin, Y. Altmann, A.

Pawlikowska and R. Lamb, “Joint surface detection

and depth estimation from single-photon Lidar data

using ensemble estimators,” 2021 Sensor Signal

Processing for Defence Conference (SSPD), pp. 1-5

(2021).

S. Plosz, A. Maccarone, S. McLaughlin, G. S. Buller and

A. Halimi, “Real-Time Reconstruction of 3D Videos

From Single-Photon LiDaR Data in the Presence of

Obscurants,” IEEE Transactions on Computational

Imaging, vol. 9, pp. 106-119 (2023).

A. Maccarone, K. Drummond, A. McCarthy and U. K. Steinlehner, “Submerged single-photon LiDAR

imaging sensor used for real-time 3D scene

reconstruction in scattering underwater

environments,” Optics Express, 31(10), 16690-16708

(2023).

J. W. Goodman, “Some effects of target-induced

scintillation on optical radar performance,”

Proceedings of the IEEE, 53(11), 1688-1700 (1965).

Q. Legros, J. Tachella, R. Tobin, A. McCarthy, S. Meignen, G. S. Buller, Y. Altmann, S. McLaughlin

and M. E. Davies, “Robust 3d reconstruction of

dynamic scenes from single-photon lidar using beta-

divergences,” IEEE Transactions on Image

Processing, 30, 1716-1727 (2020).
