A Knowledge Base of Argumentation Schemes for Multi-Agent Systems

Carlos Eduardo A. Ferreira [1,a], Débora C. Engelmann [2,b], Rafael H. Bordini [3,c], Joel Luis Carbonera [1,d] and Alison R. Panisson [4,e]

[1] Informatics Institute, Federal University of Rio Grande do Sul, Porto Alegre, Brazil

[2] Integrated Faculty of Taquara (FACCAT), Taquara, Brazil

[3] School of Technology, Pontifical Catholic University of Rio Grande do Sul, Porto Alegre, Brazil

[4] Department of Computing, Federal University of Santa Catarina, Araranguá, Brazil

Keywords:

Multi-Agent Systems, Argumentation, Explainable AI.

Abstract:

Argumentation constitutes one of the most significant components of human intelligence. Consequently, argu-

mentation has played a significant role in the community of Artificial Intelligence, in which many researchers

study ways to replicate this intelligent behaviour in intelligent agents. In this paper, we describe a knowledge

base of argumentation schemes modelled to enable intelligent agents’ general (and domain-specific) argumentative capability. To that end, we developed a knowledge base that enables agents to reason and communicate not only with other software agents, using a computational model of arguments, but also with humans, using a natural language representation of arguments that results from natural language templates modelled alongside their respective argumentation schemes. To illustrate our approach, we present a scenario in the legal domain where an agent employs argumentation schemes to reason about a crime, deciding whether the

defendant intentionally committed the crime or not, a decision that could significantly impact the severity of

the sentence handed down by a legal authority. Once a conclusion is reached, the agent provides a natural

language explanation of its reasoning.

1 INTRODUCTION

Multi-agent systems (MAS) are computational sys-

tems where autonomous intelligent entities share an

environment (Wooldridge, 2009). This paradigm has

become popular due to the increasing use of arti-

ficial intelligence (AI) techniques and the need for

distributed intelligent systems such as smart homes,

smart cities and personal assistants. Nowadays, MAS

is a popular paradigm for implementing complex dis-

tributed systems driven by AI techniques.

MAS communication often uses argumentation-based approaches, allowing agents to communicate arguments that support their positions in dialogues. Arguments are built from argumentation schemes (Walton et al., 2008), which represent the reasoning patterns available to agents in that MAS (Panisson et al., 2021b).

a https://orcid.org/0000-0002-2275-7966

b https://orcid.org/0000-0002-6090-8294

c https://orcid.org/0000-0001-8688-9901

d https://orcid.org/0000-0002-4499-3601

e https://orcid.org/0000-0002-9438-5508

Argumentation schemes are considered a central

component in argumentation-based frameworks for

multi-agent systems. They enable agents to rea-

son and communicate arguments automatically, as

demonstrated in recent practical approaches such as

(Panisson et al., 2021b). They also provide explain-

ability within MAS (Panisson et al., 2021a). Agents

can translate arguments from a computational to a

natural language representation using predefined tem-

plates for argumentation schemes. This makes argu-

mentation and explainability in MAS dependent on a

knowledge base containing argumentation schemes.

To illustrate this, we take the argumentation

scheme role_to_know, from (Panisson and Bordini,

2020), as an example. This scheme was included in

a knowledge base of 73 argumentation schemes pro-

posed in this work. In Jason syntax (Bordini et al.,

2007), this scheme can be represented as a defeasible

rule, which goes as follows:

defeasible_rule(Conc,[role(Agent,Role),
    role_to_know(Role,Domain),
    asserts(Agent,Conc),
    about(Conc,Domain)])
    [as(role_to_know)].

Ferreira, C., Engelmann, D., Bordini, R., Carbonera, J. and Panisson, A.
A Knowledge Base of Argumentation Schemes for Multi-Agent Systems.
DOI: 10.5220/0012547800003690
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 26th International Conference on Enterprise Information Systems (ICEIS 2024) - Volume 1, pages 587-594
ISBN: 978-989-758-692-7; ISSN: 2184-4992
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

The argumentation scheme can be explained in

natural language as follows:

⟨“<Agent> is a <Role>, and <Role>s know about <Domain>. <Agent> asserts <Conc>, therefore we should believe that <Conc>.”⟩[as(role_to_know)]

This explanation in natural language can be en-

abled in applications through the templates modelled

with each argumentation scheme. It is important

to provide a clear and concise explanation for each

scheme to increase our understanding of the reason-

ing behind it.

This work includes a knowledge base with argu-

mentation schemes that can be imported individually

or grouped by domain. The natural language tem-

plates for each scheme enable agents to explain their

reasoning to human users. The knowledge base is

practically used in the law domain, where an agent

uses these argumentation schemes to determine the

potential intentionality of a crime and explain its con-

clusion using natural language. Overall, this knowl-

edge base adds transparency to the reasoning process

of MAS and covers general argumentation, as well as

legal and hospital bed allocation domains.

Therefore, our work contributes to the field of ar-

gumentation and dialogue by using templates to gen-

erate natural language arguments and explanations.

This differs from the modular platform introduced

in (Snaith et al., 2020), which uses a Dialogue Utter-

ance Generator to populate abstract moves. Our work

is also distinct from the ones presented in (Lawrence

et al., 2020; Lawrence et al., 2019; Visser et al.,

2019), which focus on recognising and validating ar-

gumentation schemes from text or transcripts. Ad-

ditionally, our work is inspired by previous studies

that classify argumentation schemes according to dif-

ferent typologies and provide guidelines for their ap-

plication in different domains (Visser et al., 2018;

Macagno et al., 2017).

2 ARGUMENTATION SCHEMES

AS A CENTRAL COMPONENT

Argumentation schemes (Walton et al., 2008) have played a central role in defining mechanisms for agent reasoning, decision making and communication (Panisson et al., 2021b; Prakken, 2011), applied together with frameworks such as ASPIC+ (Modgil and Prakken, 2014), DeLP (García and Simari, 2014), and others. Most of those works focus on formal aspects of argumentation.

Recently, Panisson and colleagues (Panisson et al.,

2021b) proposed an argumentation-based framework

in which argumentation schemes are the central com-

ponents, considering all aspects of these reasoning

patterns for developing multi-agent systems. There-

fore, in this work, we follow (Panisson et al., 2021b),

describing this practical framework to implement

multi-agent systems powered by argumentation techniques. We start by contextualising the use of argumentation schemes in multi-agent systems and introducing this practical framework. Later, we describe how the framework has been used in Explainable Artificial Intelligence (XAI), providing agents

with the capability of explaining their reasoning and

decision-making.

2.1 Argumentation Schemes in

Multi-Agent Systems

Argumentation schemes are patterns for arguments

that capture the structure of typical arguments used

in everyday discourse, as well as in specific con-

texts like legal and scientific reasoning (Walton et al.,

2008). They represent forms of arguments that are

defeasible¹, meaning that they may not be strong by

themselves, but they may provide evidence that war-

rants rational acceptance of their conclusion (Toul-

min, 1958). That means conclusions from argu-

mentation schemes can be inferred in uncertain and

knowledge-lacking situations. The reasoner must be

open-minded to new evidence that can invalidate pre-

vious conclusions (Walton et al., 2008).

We use a first-order language for represent-

ing arguments, following (Panisson et al., 2021b),

as most agent-oriented programming languages are

based on logic programming. We use uppercase

letters to represent variables — e.g., Ag and R in

role(Ag,R) — and lowercase letters to represent

terms and predicate symbols — e.g., john, doctor

and role(john,doctor). We use “¬” to represent

strong negation, e.g., ¬reliable(pietro) means

that pietro is not reliable. We also use negation as

failure “not”, e.g., not(reliable(pietro)) means

that an agent does not know if pietro is reliable.

Let us go back to the example of the role_to_know argument discussed in the introduction. Imagine that you are consulting two doctors. One of them, called john in our example argument, says that “smoking causes cancer”. However, another agent also playing the role of doctor, called pietro, asserts that “smoking does not cause cancer”: asserts(pietro, ¬causes(smoking, cancer)). Any agent aware of both assertions, John’s and Pietro’s, is able to construct conflicting arguments for ¬causes(smoking, cancer) and causes(smoking, cancer). However, the agents can question whether john and pietro are reliable (trustworthy) sources, whether they really play the role of doctor, and any other questionable points indicated by the critical questions of the argumentation scheme used, in order to check the validity of that particular conclusion. For example, imagine that an agent has the information that “Pietro is not a reliable source”: ¬reliable(pietro). In that case, the agent is not able to answer the critical question reliable(pietro) positively, so it is rational to think that this instance of the argumentation scheme (i.e., that argument) might not be acceptable for that agent; that is, the argument concluding ¬causes(smoking, cancer) might not be an acceptable instance of the argumentation scheme role_to_know for such a rational agent.

¹ Sometimes called presumptive, or abductive as well.

ICEIS 2024 - 26th International Conference on Enterprise Information Systems
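The critical-question check in the doctors example can be sketched in Python. This is only an illustration under stated assumptions, not the Jason framework of (Panisson et al., 2021b): the encoding of beliefs as tuples, the `("neg", fact)` wrapper for strong negation, and the helper names `role_to_know_arguments` and `acceptable` are all hypothetical.

```python
# Beliefs are tuples; strong negation is modelled as ("neg", fact).
# All names here are illustrative and not part of the Jason framework.

def neg(fact):
    return ("neg", fact)

def role_to_know_arguments(beliefs):
    """Instantiate role_to_know for every assertion whose premises hold."""
    arguments = []
    for fact in beliefs:
        if fact[0] != "asserts":
            continue
        agent, conc = fact[1], fact[2]
        roles = [f[2] for f in beliefs if f[0] == "role" and f[1] == agent]
        for role in roles:
            domains = [f[2] for f in beliefs
                       if f[0] == "role_to_know" and f[1] == role]
            for domain in domains:
                if ("about", conc, domain) in beliefs:
                    arguments.append({"scheme": "role_to_know",
                                      "agent": agent, "conclusion": conc})
    return arguments

def acceptable(argument, beliefs):
    """Critical question: reject if the source is known to be unreliable."""
    return neg(("reliable", argument["agent"])) not in beliefs

causes = ("causes", "smoking", "cancer")
beliefs = {
    ("role", "john", "doctor"),
    ("role", "pietro", "doctor"),
    ("role_to_know", "doctor", "cancer"),
    ("asserts", "john", causes),
    ("asserts", "pietro", neg(causes)),
    ("about", causes, "cancer"),
    ("about", neg(causes), "cancer"),
    neg(("reliable", "pietro")),
}

all_arguments = role_to_know_arguments(beliefs)
accepted = [a["conclusion"] for a in all_arguments if acceptable(a, beliefs)]
```

Both assertions yield an instance of the scheme, but only john's conclusion survives the reliability check, which mirrors the informal reading above. A full framework would also handle the remaining critical questions and the conflict between arguments, which this sketch leaves out.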

2.2 Argumentation Schemes and

Explainability

Argumentation schemes combined with natural lan-

guage templates can be used to translate argu-

ments from a computational representation to a nat-

ural language representation, supporting the develop-

ment of sophisticated multi-agent applications capa-

ble of explaining their decision-making and reason-

ing (Panisson et al., 2021a). For example, the natu-

ral language template for the argumentation scheme

role_to_know is as follows:

⟨“<Agent> is a <Role>, and <Role>s know about <Domain>. <Agent> asserts <Conc>, therefore we should believe that <Conc>.”⟩[as(role_to_know)]

Using the same unification function from the previous examples, {Agent ↦ john, Role ↦ doctor, Domain ↦ cancer, Conc ↦ causes(smoking, cancer)}, it is possible to build the following natural language argument:

⟨“john is a doctor, and doctors know about cancer. john asserts smoking causes cancer, therefore we should believe that smoking causes cancer.”⟩[as(role_to_know)]
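The substitution step can be illustrated with a small Python sketch. It assumes that the term causes(smoking, cancer) has already been rendered as the phrase "smoking causes cancer"; the function name `instantiate` and the constant `ROLE_TO_KNOW` are hypothetical and chosen only for this example.

```python
import re

def instantiate(template, unifier):
    """Replace every <Var> placeholder with its binding in the unifier."""
    return re.sub(r"<(\w+)>", lambda m: unifier[m.group(1)], template)

# Template text taken from the role_to_know scheme.
ROLE_TO_KNOW = ("<Agent> is a <Role>, and <Role>s know about <Domain>. "
                "<Agent> asserts <Conc>, therefore we should believe "
                "that <Conc>.")

# Assumption: Conc is supplied already rendered as a phrase.
unifier = {"Agent": "john", "Role": "doctor", "Domain": "cancer",
           "Conc": "smoking causes cancer"}

sentence = instantiate(ROLE_TO_KNOW, unifier)
print(sentence)
```

A placeholder without a binding raises a `KeyError` here, which seems a reasonable choice for a sketch: a template should only be filled by the unifier that instantiated the argument.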

Thus, when an agent needs to communicate an ex-

planation in natural language, the agent uses the plan

+!translateToNL that implements how agents trans-

late arguments from a computational representation to

natural language, then aggregating those natural lan-

guage arguments into an explanation:

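// Recursive case: translate the first argument and add it to the accumulator.
+!translateToNL([Rule|List],Temp,NLExpl)
  <- !translate(Rule,RT);
     .concat([RT],Temp,NewTemp);
     !translateToNL(List,NewTemp,NLExpl).
// Base case: no arguments left, so the accumulator is the explanation.
+!translateToNL([],Temp,NLExpl)
  <- NLExpl=Temp.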

Note that an explanation might be a sequence of arguments (also regarded as a chained or complex argument). Thus, +!translateToNL receives a list of one or more arguments, each of which is an instance of an argumentation scheme. It then translates each computational argument to its corresponding natural language argument using the plan +!translate, which retrieves the natural language template for the argumentation scheme used to instantiate that particular argument and returns its natural language representation.

For example, the plan +!translate presented be-

low is used to translate arguments instantiated from

the argumentation scheme role_to_know to a natural

language argument, using the same unification func-

tion used to instantiate the argument being translated.

+!translate(defeasible_rule(Conc,
      [role(Ag,R), role_to_know(R,Domain),
       asserts(Ag,Conc), about(Conc,Domain)])
      [as(role_to_know)],TArg)
  <- .concat(Ag," is a ",R,", and ",R,
       "s know about ",Domain,". ",Ag,
       " asserts ",Conc,", therefore we ",
       "should believe that ",Conc,".",TArg).

There will be N different +!translate plans, each one implementing the natural language template of one of the argumentation schemes available in that particular system. Agents select among them by unifying the plan's triggering event with the defeasible rule of the argument being translated. A free variable returns the string built by the internal action .concat, i.e., TArg unifies with the natural language translation of that particular computational argument. Then, execution returns to the +!translateToNL plan, and all arguments used in the explanation are collected using the same internal action .concat, so that the resulting explanation, available in NLExpl, can be communicated by the agent to human users through any human-agent interaction interface, for example, using chatbot technologies (Engelmann et al., 2021a).
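The overall flow (one template per scheme, selection of the matching template, and aggregation into an explanation) can be sketched in Python. This is a simplified stand-in: Jason selects the +!translate plan by unification, whereas the sketch uses a dictionary lookup on the scheme name. The registry `TEMPLATES`, the argument encoding as `(scheme, bindings)` pairs, and the binding values are assumptions made for illustration.

```python
# Hypothetical registry: scheme name -> natural language template.
TEMPLATES = {
    "role_to_know": "{Ag} is a {R}, and {R}s know about {Domain}. "
                    "{Ag} asserts {Conc}, therefore we should believe "
                    "that {Conc}.",
    "asFrBias": "{Ag} is biased about {D}. Therefore, {Ag} is not "
                "credible about {D}.",
}

def translate(argument):
    """Pick the template by scheme name and fill it with the bindings."""
    scheme, bindings = argument
    return TEMPLATES[scheme].format(**bindings)

def translate_to_nl(arguments):
    """Translate each argument in order and collect the sentences."""
    return [translate(arg) for arg in arguments]

# Illustrative input: two arguments forming one explanation.
explanation = translate_to_nl([
    ("asFrBias", {"Ag": "laura", "D": "the case"}),
    ("role_to_know", {"Ag": "john", "R": "doctor", "Domain": "cancer",
                      "Conc": "smoking causes cancer"}),
])
```

Adding a scheme then amounts to adding one entry to the registry, which is the same extension point the N +!translate plans provide in the Jason implementation.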


3 A KNOWLEDGE BASE OF

ARGUMENTATION SCHEMES

Normally, argumentation schemes used in a particu-

lar application are modelled according to the needs

of that particular application domain. For example,

in (Toniolo et al., 2014) argumentation schemes have

been specified for analysing the provenance of in-

formation, in (Parsons et al., 2012) argumentation

schemes have been specified for reasoning about trust,

in (Tolchinsky et al., 2007) argumentation schemes

have been specified for arguing about transplantation

of human organs, in (Panisson et al., 2018) argumen-

tation schemes have been specified for implement-

ing data access control between smart applications,

in (Walton, 2019) argumentation schemes have been

specified for reasoning about the intention of execut-

ing some actions, and so forth. However, some litera-

ture has proposed a set of argumentation schemes for

more general reasoning, for example, those compiled

in Walton’s book (Walton et al., 2008).

In this paper, we present an initiative to build a

knowledge base of argumentation schemes for the ar-

gumentation framework (Panisson et al., 2021b) im-

plemented in Jason (Bordini et al., 2007), according

to the computational representation required by the

framework presented in Section 2.1. Also, concur-

rently with the computational representation of argu-

mentation schemes, we model a natural language tem-

plate for each argumentation scheme in the proposed

knowledge base, according to the approach presented

in Section 2.2. Thus, when importing argumentation

schemes from the proposed knowledge base, agents

are automatically able to use information about its ap-

plication domain to build arguments from those ar-

gumentation schemes available to them, using those

arguments for reasoning and communication, as illus-

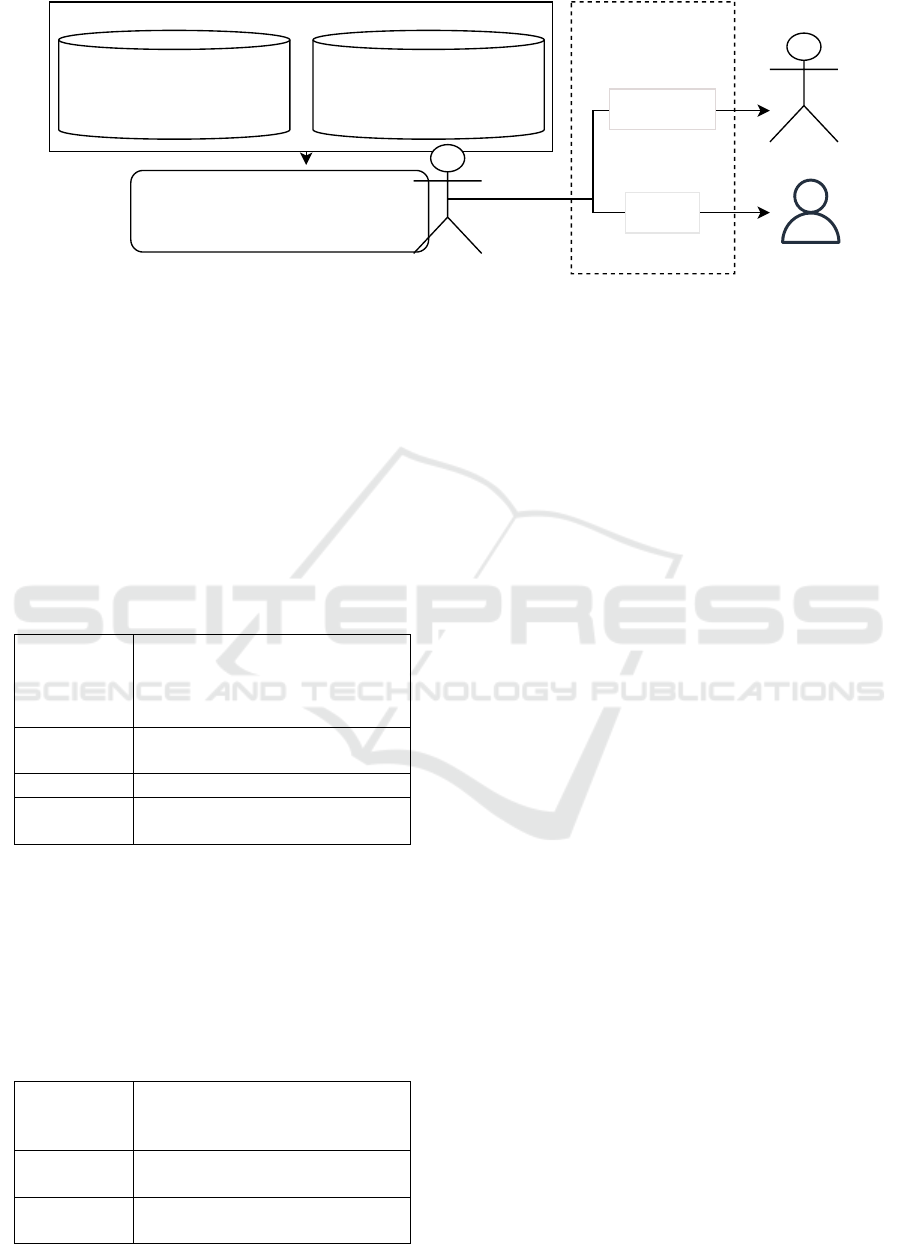

trated in Figure 1.

Furthermore, the proposed knowledge base with

argumentation schemes can be imported by agents ac-

cording to the application need, focusing on only the

modules necessary (or interesting) for that particular multi-agent application. The knowledge base is organised as a module hierarchy: modules containing a single argumentation scheme, modules with argumentation schemes grouped according to application domains (for example, argumentation schemes used by agents to reason about the domain of law), and the global module corresponding to the complete knowledge base, which gives agents a broad argumentation capability over different domains.
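One way to picture the three levels of the hierarchy is the following Python sketch. It is not the layout of the actual knowledge base: the module names, the assignment of schemes to domains, and the function `import_schemes` are invented for illustration.

```python
# Hypothetical layout; the grouping below is invented for illustration.
SCHEME_MODULES = {
    "asFrWT": ["asFrWT"],            # one module per individual scheme
    "asFrM2I": ["asFrM2I"],
    "asFrBias": ["asFrBias"],
    "role_to_know": ["role_to_know"],
}
DOMAIN_MODULES = {
    "law": ["asFrWT", "asFrM2I", "asFrBias"],
    "general": ["role_to_know"],
}

def import_schemes(module):
    """Resolve a module name to the set of schemes it makes available."""
    if module == "all":                      # global module
        return {s for names in SCHEME_MODULES.values() for s in names}
    if module in DOMAIN_MODULES:             # domain-level module
        return {s for m in DOMAIN_MODULES[module]
                for s in SCHEME_MODULES[m]}
    return set(SCHEME_MODULES[module])       # single-scheme module

law_schemes = import_schemes("law")
```

An agent that only reasons about legal cases would import the domain module, while an agent that needs a single scheme would import just that scheme's module.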

Therefore, one of the central elements of this work

consists of the conception of the knowledge base of

argumentation schemes, which proved to be funda-

mental for evaluating the effectiveness of the argu-

mentation framework approach in different domains.

This knowledge base not only allows the implemen-

tation of argumentation schemes in the context of a

multi-agent system but also provides an additional

layer of understanding, facilitating the translation of

these arguments into natural language. Consequently,

users can lucidly and accurately track the flow of rea-

soning within the multi-agent system.

To demonstrate our approach, in particular the

proposed knowledge base, and how it enables agents

to become argumentative (with themselves or with

others), in this paper, we are going to focus on how

agents build arguments supporting their conclusions

in a particular application domain we will present in

Section 3.1, then translating arguments to natural lan-

guage arguments, and providing those arguments as

an explanation.

Currently, the knowledge base presented in this

paper has 73 argumentation schemes extracted

from the following literature:

• 22 general argumentation schemes from Walton’s

book (Walton et al., 2008);

• 40 argumentation schemes for reasoning about the

domain of bed allocation in hospital from (Engel-

mann et al., 2021b);

• 11 argumentation schemes about reasoning in le-

gal cases (Walton, 2019; Gordon and Walton,

2009).

In order to demonstrate the proposed knowledge base, we present three of the available argumentation schemes, including their computational representations and natural language templates. These argumentation schemes are also used in the case study we present in Section 3.1. The argumentation schemes are: (i) the argumentation scheme from witness testimony (Walton et al., 2008), (ii) the argumentation scheme from motive to intention (Walton, 2019), and (iii) the argumentation scheme from bias, adapted from (Walton et al., 2008).

Table 1: Argumentation Scheme from Witness Testimony.

Premise      Witness W is in a position to know about the domain D.
Premise      Witness W states that S is true.
Premise      The statement S is related to domain D.
Premise      Witness W is supposedly telling the truth (as W knows it).
Conclusion   Therefore, S may be plausibly taken to be true.


Figure 1: Our Approach for Argumentation-Based Reasoning and Communication using the Knowledge Base with Argumentation Schemes. [Diagram labels: Data Set with Argumentation Schemes + Natural Language Templates; Information about the Application Domain; Argumentation-Based Reasoning Module; Arguments about the Application Domain; Agent; Computational Language (to Agents); Natural Language (to Users).]

defeasible_rule(S,[position_to_know(W,D),
    states(W,S),is_related_to(S,D),
    is_telling_the_truth(W)])[as(asFrWT)].

cq(cq1,credible(W,D))[as(asFrWT)].

⟨“<W> is in position to know about <D>. <W> states that <S> is true. The statement <S> is related to <D>. <W> is supposedly telling the truth. <W> has credibility to state about <D>. Therefore, it is plausible to conclude that the statement <S> is true.”⟩[as(asFrWT)]

Table 2: Argumentation Scheme from Motive to Intention.

Premise      If agent Ag had a motive M to commit A, then Ag is more likely to have intentionally committed A.
Premise      Ag had M as a motive to commit A.
Premise      Ag committed A.
Conclusion   Therefore, Ag has intentionally committed A.

defeasible_rule(

was_intentional(bring_about(Ag,A)),

[had_motive_to(M,bring_about(Ag,A)),

bring_about(Ag,A)])[as(asFrM2I)].

⟨“<Ag> has <M> as a motive to commit <A>. <Ag> indeed committed <A>. Therefore, <Ag> has intentionally committed <A>.”⟩[as(asFrM2I)]

Table 3: Argumentation Scheme from Bias.

Premise      Agent Ag has no credibility about domain D when it is biased.
Premise      Agent Ag is biased about domain D.
Conclusion   Therefore, agent Ag is not credible about domain D.

defeasible_rule(¬credible(Ag,D),

[is_biased_about(Ag,D)])[as(asFrBias)].

⟨“<Ag> is biased about <D>. Therefore, <Ag> is not credible about <D>.”⟩[as(asFrBias)]

3.1 Case Study

To demonstrate our framework, we will describe a

scenario inspired by the Peter shot George case pre-

sented in (Verheij, 2003). It is essential to emphasise

that this scenario is entirely fictitious, crafted solely

for illustrative purposes. It does not depict real events,

individuals, or legal cases. By presenting this simpli-

fied case, we aim to demonstrate how our approach

can be a valuable tool in decision-making processes,

particularly in contexts where explicit reasoning and

transparency are imperative.

In our scenario, a crime has been committed, and

the testimony of two witnesses will be used to help

decide whether the defendant intentionally commit-

ted the crime or not. Further, extending the analy-

sis by (Verheij, 2003), we include the argument from

motive to intention presented by (Walton, 2019), in

which the agent considers information about a previ-

ous crime (which provides motives to commit the sec-

ond crime), then inferring whether the second crime

was intentional or not. In addition, a given charac-

ter, John, is considered previously accused of steal-

ing a chicken from another character, Joana’s neigh-

bour. The second crime, for which John is in the de-

fendant’s position, is the murder of Joana, where, in

the absence of security camera records or an expert

report, the testimonies of two witnesses who are in a

position to know about this case will be decisive.

Given that Joana witnessed John’s first crime and,

consequently, would testify her witness against him

about the chicken theft, there is an interpretation to

A Knowledge Base of Argumentation Schemes for Multi-Agent Systems

591

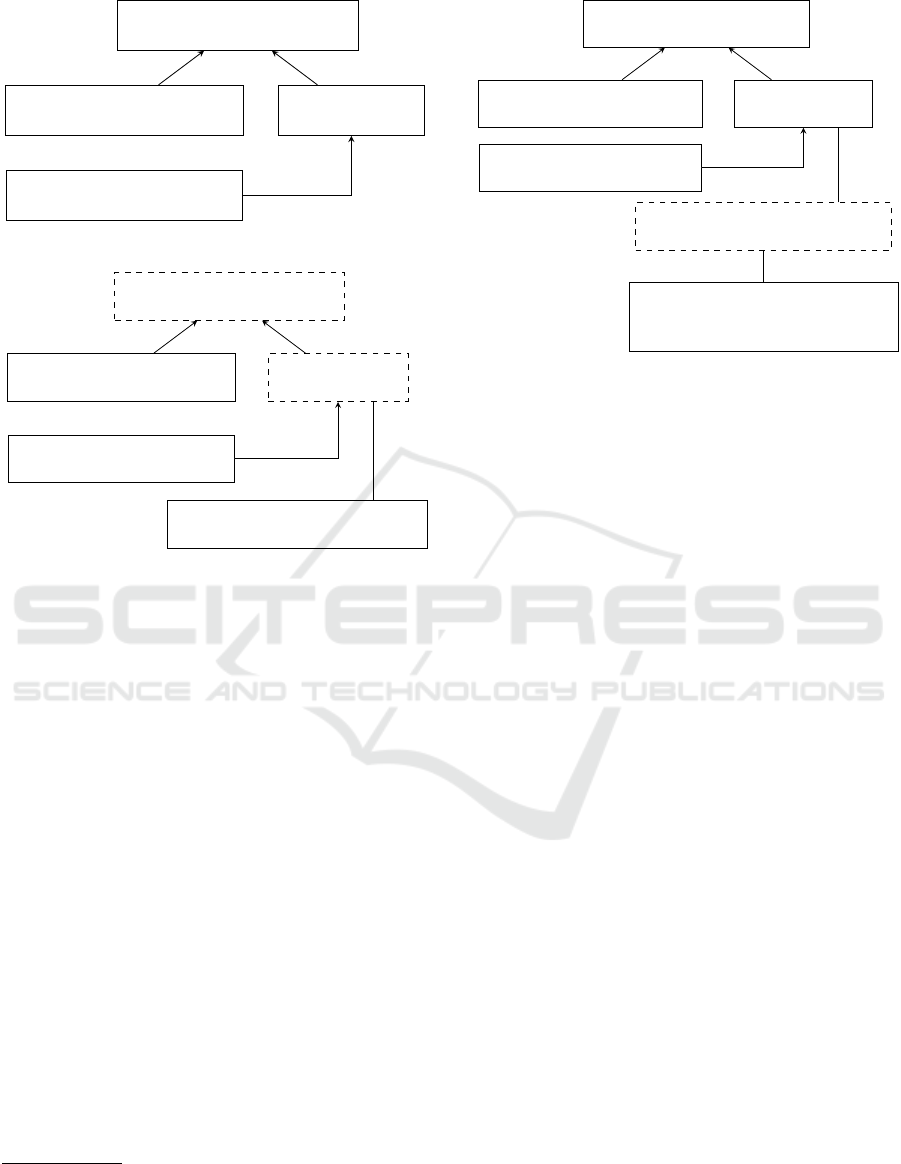

The defendant intentionally

killed the victim

The defendant had

motivation to kill the victim

The defendant

killed the victim

Witness B states that the

defendant killed the victim

Figure 2: Preliminary inference for the case study.

The defendant intentionally

killed the victim

The defendant had

motivation to kill the victim

The defendant

killed the victim

Witness B states that the

defendant killed the victim

Witness A states that the

defendant did not kill the victim

×

Figure 3: Preliminary inference for the case study, adding

information about the witness.

infer that he wanted to prevent this from happening,

as it would be negative for him. Thus, it is known that

the defendant had the motivation to kill the victim

2

,

as shown in Figure 2. Under these circumstances, if

the defendant is found guilty of Joana’s murder, he

will be in a very delicate position, given that the act

he committed has great potential to be considered pre-

meditated, and he can then be accused of intentional

homicide.

Following our scenario, the information that the

defendant killed the victim is supported by the testi-

mony of the witness who saw the defendant kill the

victim. Together, the motivation to kill the victim and

the information that the defendant killed the victim

(supported by a witness) support that the defendant

intentionally killed the victim, an intentional homi-

cide is suggested, as shown in Figure 2.

However, another witness contradicts the accusa-

tion, sating the defendant was with her at the crime

time. With this new information, there is conflicting

information about whether the defendant killed the

victim or not, suggesting that there is no conclusive

decision to support even that the defendant killed the

2

We will assume that there is already enough evidence

for the current defendant to be found guilty in the first crime

so that this information will be provided by a simple system

input.

The defendant intentionally

killed the victim

The defendant had

motivation to kill the victim

The defendant

killed the victim

Witness B states that the

defendant killed the victim

Witness A states that the

defendant did not kill the victim

Defendant and witness A are

married. Therefore, A’s testimony

is not credible in this case

×

×

Figure 4: Final inference suggesting there was an inten-

tional crime.

victim, as shown in Figure 3.

Finally, the agent receives the information that Witness A is married to the defendant, and updates its belief base for this case accordingly. It is crucial to emphasise that her testimony cannot be accepted as impartial and reliable evidence due to the clear conflict of

interest arising from the marriage between the wit-

ness and John. The inherent personal and emotional

bias within this relationship can significantly compro-

mise the witness’s objectivity when testifying on be-

half of John. The credibility of any testimony largely

depends on the witness’s ability to provide impartial

information free from external influences. However,

in the present case, the intimate connection between

the witness and John creates a natural predisposition

to favour the spouse, thereby undermining the testi-

mony’s integrity.

Figure 5 shows the agent’s final explanation in

natural language for the proposed scenario. In our implementation, Witness B was instantiated as Scarlett and Witness A as Laura.
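The three stages of the case study (Figures 2 to 4) can be replayed with a short Python sketch. It is a deliberate simplification rather than the Jason implementation: only the states, is_biased_about and had_motive_to premises are modelled, the function name `judge` and the atoms `avoid_testimony` and `johns_case` are hypothetical, and the step from "married to the defendant" to "biased" is taken as given input.

```python
def judge(facts):
    """Apply the three schemes to a set of tuple-encoded facts."""
    # Scheme from bias: a biased witness is not credible (fails cq1).
    not_credible = {f[1] for f in facts if f[0] == "is_biased_about"}
    # Scheme from witness testimony, kept only for credible witnesses.
    accepted = {f[2] for f in facts
                if f[0] == "states" and f[1] not in not_credible}
    # A statement and its negation, both accepted, leave it undecided.
    decided = {s for s in accepted
               if ("not", s) not in accepted
               and not (s[0] == "not" and s[1] in accepted)}
    # Scheme from motive to intention.
    conclusions = set(decided)
    for f in facts:
        if f[0] == "had_motive_to" and f[2] in decided:
            conclusions.add(("was_intentional", f[2]))
    return conclusions

killed = ("bring_about", "john", "murder_of_joana")

# Figure 2: Witness B (scarlett) and the motive.
step1 = {("states", "scarlett", killed),
         ("had_motive_to", "avoid_testimony", killed)}
# Figure 3: Witness A (laura) contradicts Witness B.
step2 = step1 | {("states", "laura", ("not", killed))}
# Figure 4: Witness A is found to be biased.
step3 = step2 | {("is_biased_about", "laura", "johns_case")}

results = [judge(step1), judge(step2), judge(step3)]
```

With only Witness B the intentional crime is concluded; adding Witness A's contradicting statement removes every conclusion; adding the bias restores the original result. This non-monotonic pattern is what the defeasible rules and the critical question cq1 are meant to capture.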

4 RELATED WORK

In (Snaith et al., 2020), the authors describe a modu-

lar platform for argument and dialogue, in which they

introduce the Dialogue Utterance Generator (DUG)

component. DUG searches for propositional content

to populate the abstract moves present in the modu-

lar platform using a template that fills those abstract

moves. Moves include the “argue” move, in which

an argument is filled. Our work differs from (Snaith

et al., 2020) in that we use templates to generate natural language arguments in order to provide explanations.

[judgeAgent]
- scarlett is in position to know about john’s crime judgment.
- scarlett statement that john committed joana’s murder is true.
- The statement john committed joana’s murder is related to john’s crime judgment.
- scarlett is supposedly telling the truth.
- scarlett has the credibility to state about john’s crime judgment.
- Therefore, it’s plausible to conclude that the statement john committed joana’s murder is true.

[judgeAgent]
- laura is in position to know about john’s crime judgment.
- laura statement that john committed joana’s murder is false.
- The statement john committed joana’s murder is related to john’s crime judgment.
- laura is supposedly telling the truth.
- laura has no credibility to state about john’s crime judgment.

[judgeAgent]
- john has ’avoid joana telling to police about your theft ector’s chicken previous crime’ as a motive to committed joana’s murder.
- john indeed committed joana’s murder.
- Therefore, john has intentionally committed joana’s murder.

Figure 5: Jason agent system output.

In (Lawrence et al., 2020), the authors describe a

decision tree for annotating argumentation schemes

corpora, providing a heuristic decision tree that

aims to clarify Walton’s top-level taxonomy (Wal-

ton et al., 2008) of 60 schemes. That work is

extended with an assistant to annotate argumenta-

tion schemes (Lawrence et al., 2019), and an an-

notated corpus of argumentation schemes is pro-

vided by (Visser et al., 2019). Different from our

work, (Lawrence et al., 2020; Lawrence et al., 2019;

Visser et al., 2019) present components for applica-

tions (for example, argument mining (Lawrence and

Reed, 2016)) that focus on recognising argumentation

schemes from text (or transcripts for natural language

interactions), and validating arguments, instances of

argumentation schemes according to the data avail-

able.

In (Visser et al., 2018), the authors revisit the computational representation of argumentation schemes, providing a guideline for the classification of schemes according to two typologies, namely Walton’s typology (Walton et al., 2008) and Wagemans’ Periodic Table of Arguments (Wagemans, 2016). Also, in (Macagno et al., 2017), the authors study the structure, classification and use of argumentation schemes. Our work on grouping argumentation schemes into modules is inspired by such classifications, in which (i) (Visser et al., 2018) aims at using the classification for corpus annotation, and (ii) (Macagno et al., 2017) discusses the application of argumentation schemes in different domains.

5 CONCLUSION

In this paper, we presented a knowledge base of argu-

mentation schemes that, when provided to agents, al-

low them to become argumentative (with themselves

during reasoning and with others during communica-

tion/dialogues). Also, when aware of such argumen-

tation schemes and their respective natural language

templates, agents are able to explain their reasoning

and decision-making to human users using natural

language arguments.

Currently, the knowledge base has 73 argumentation schemes and their respective natural language templates and has been used to implement sophisticated multi-agent applications in which agents

argue with each other and with human users, for ex-

ample, (Engelmann et al., 2021b). The proposed

knowledge base is organised by modules, ranging

from individual argumentation schemes and their re-

spective natural language templates to argumentation

schemes grouped by application domains³, for example, the law domain. In our future work, we intend

to extend the proposed knowledge base by modelling

a large number of argumentation schemes for diverse

application domains.

³ The knowledge base is available open-source at github.com/cadu08/AS_KB_ICEIS24


REFERENCES

Bordini, R. H., Hübner, J. F., and Wooldridge, M. (2007). Programming Multi-Agent Systems in AgentSpeak using Jason (Wiley Series in Agent Technology). John Wiley & Sons.

Engelmann, D., Damasio, J., Krausburg, T., Borges, O.,

Colissi, M., Panisson, A. R., and Bordini, R. H.

(2021a). Dial4jaca – a communication interface be-

tween multi-agent systems and chatbots. In Dignum,

F., Corchado, J. M., and De La Prieta, F., editors,

Advances in Practical Applications of Agents, Multi-

Agent Systems, and Social Good. The PAAMS Collec-

tion, pages 77–88, Cham. Springer International Pub-

lishing.

Engelmann, D. C., Cezar, L. D., Panisson, A. R., and Bor-

dini, R. H. (2021b). A conversational agent to sup-

port hospital bed allocation. In Britto, A. and Val-

divia Delgado, K., editors, Intelligent Systems, pages

3–17, Cham. Springer International Publishing.

García, A. J. and Simari, G. R. (2014). Defeasible logic programming: DeLP-servers, contextual queries, and explanations for answers. Argument & Computation, 5(1):63–88.

Gordon, T. F. and Walton, D. (2009). Legal reasoning with

argumentation schemes. In Proceedings of the 12th

international conference on artificial intelligence and

law, pages 137–146.

Lawrence, J. and Reed, C. (2016). Argument mining using argumentation scheme structures. In COMMA, pages 379–390.

Lawrence, J., Visser, J., and Reed, C. (2019). An online annotation assistant for argument schemes. In Proceedings of the 13th Linguistic Annotation Workshop, pages 100–107. Association for Computational Linguistics.

Lawrence, J., Visser, J., Walton, D., and Reed, C. (2020). A decision tree for annotating argumentation scheme corpora. In 3rd European Conference on Argumentation: Reason to Dissent.

Macagno, F., Walton, D., and Reed, C. (2017). Argumentation schemes. History, classifications, and computational applications. IfCoLog Journal of Logics and Their Applications, 4(8):2493–2556.

Modgil, S. and Prakken, H. (2014). The ASPIC+ framework for structured argumentation: a tutorial. Argument & Computation, 5(1):31–62.

Panisson, A. R., Ali, A., McBurney, P., and Bordini, R. H. (2018). Argumentation schemes for data access control. In Computational Models of Argument (COMMA), pages 361–368.

Panisson, A. R. and Bordini, R. H. (2020). Towards a computational model of argumentation schemes in agent-oriented programming languages. In IEEE/WIC/ACM International Joint Conference on Web Intelligence and Intelligent Agent Technology (WI-IAT).

Panisson, A. R., Engelmann, D. C., and Bordini, R. H. (2021a). Engineering explainable agents: An argumentation-based approach. In International Workshop on Engineering Multi-Agent Systems (EMAS).

Panisson, A. R., McBurney, P., and Bordini, R. H. (2021b). A computational model of argumentation schemes for multi-agent systems. Argument & Computation, 12(3):357–395.

Parsons, S., Atkinson, K., Haigh, K., Levitt, K., McBurney, P., Rowe, J., Singh, M. P., and Sklar, E. (2012). Argument schemes for reasoning about trust. Computational Models of Argument: Proceedings of COMMA 2012, 245:430.

Prakken, H. (2011). An abstract framework for argumentation with structured arguments. Argument & Computation, 1(2):93–124.

Snaith, M., Lawrence, J., Pease, A., and Reed, C. (2020). A modular platform for argument and dialogue. In COMMA, pages 473–474.

Tolchinsky, P., Atkinson, K., McBurney, P., Modgil, S., and Cortés, U. (2007). Agents deliberating over action proposals using the ProCLAIM model. In International Central and Eastern European Conference on Multi-Agent Systems, pages 32–41. Springer.

Toniolo, A., Cerutti, F., Oren, N., Norman, T. J., and Sycara, K. (2014). Making informed decisions with provenance and argumentation schemes. In Proceedings of the Eleventh International Workshop on Argumentation in Multi-Agent Systems.

Toulmin, S. E. (1958). The Uses of Argument. Cambridge University Press.

Verheij, B. (2003). Artificial argument assistants for defeasible argumentation. Artificial Intelligence, 150(1–2):291–324.

Visser, J., Lawrence, J., Wagemans, J., and Reed, C. (2018). Revisiting computational models of argument schemes: Classification, annotation, comparison. In 7th International Conference on Computational Models of Argument, COMMA 2018, pages 313–324. IOS Press.

Visser, J., Lawrence, J., Wagemans, J., and Reed, C. (2019). An annotated corpus of argument schemes in US election debates. In Proceedings of the 9th Conference of the International Society for the Study of Argumentation (ISSA), 3-6 July 2018, pages 1101–1111.

Wagemans, J. (2016). Constructing a periodic table of arguments. In Argumentation, Objectivity, and Bias: Proceedings of the 11th International Conference of the Ontario Society for the Study of Argumentation (OSSA), Windsor, ON: OSSA, pages 1–12.

Walton, D. (2019). Using argumentation schemes to find motives and intentions of a rational agent. Argument & Computation, 10(3):233–275.

Walton, D., Reed, C., and Macagno, F. (2008). Argumentation Schemes. Cambridge University Press.

Wooldridge, M. (2009). An Introduction to MultiAgent Systems. John Wiley & Sons.

ICEIS 2024 - 26th International Conference on Enterprise Information Systems
