From Data to Insights: Research Centre Performance Assessment Model (PAM)

Oksana Tymoshchuk (a), Monica Silva (b), Nelson Zagalo (c) and Lidia Oliveira (d)

Department of Communication and Art, University of Aveiro, Aveiro, Portugal

Keywords: Assessment Model, Research Project, Digital Media, DigitalOBS, Performance Assessment Model (PAM).

Abstract: This paper presents the Performance Assessment Model (PAM), designed to refine practices for assessing the impact of scientific projects and to make the results easier to understand with the help of information visualisation (InfoVis) tools. The model incorporates three main dimensions (input, output, and impact) to capture the breadth of scientific contributions. PAM supports a holistic analysis of project results and impacts that integrates qualitative and quantitative data. The project team tested the model on ten research projects, which allowed it to be adapted to different project types and ensured a comprehensive assessment of tangible and intangible impact. Data organised with PAM was transferred to Power BI, a software tool for interactive visualisation and detailed data analysis. The model's adaptability and flexibility make it valuable for assessing how effectively scientific projects create positive, enduring impacts on society. The study results indicate that PAM provides a systematic approach to evaluating and enhancing the performance of scientific projects. It is particularly useful for research centre managers who need an effective tool to measure the impacts of their projects. PAM also promotes transparency and accountability in the evaluation process. Ultimately, it can help ensure that scientific projects are carried out effectively and efficiently, maximising their societal benefits.

1 INTRODUCTION

In recent decades, increased funding for scientific research from international and national bodies has aimed to promote innovation, knowledge transfer, and progress towards the United Nations' Sustainable Development Goals (SDGs). Beyond financial support, these initiatives encourage advanced training, research development, the creation of international collaboration networks, effective science communication, and robust links with the private sector (Saenen et al., 2019; Santos, 2022). These efforts have led to the establishment of various research units, such as public-private collaborations, transdisciplinary centres, research networks, and science and technology centres (Science Europe, 2017). These units bring together researchers from different fields, enabling the development of scientific projects aimed at solving complex social problems. However, increased public funding for research projects creates challenges for science management, particularly regarding rigorous evaluation and accountability (Science Europe, 2021).

(a) https://orcid.org/0000-0001-8054-8014

(b) https://orcid.org/0000-0002-5094-7281

(c) https://orcid.org/0000-0002-5478-0650

(d) https://orcid.org/0000-0002-3278-0326

This highlights the importance of sustainable project management, which involves planning, monitoring, and controlling project delivery and support processes. It considers the environmental, economic, and social aspects of the life cycle of a project's resources, processes, outputs, and outcomes. The goal is to benefit stakeholders transparently, fairly, and ethically, including through their proactive participation (Silvius and Schipper, 2020).

To address these challenges, the European Science Foundation (2011) developed a good practice guide to improve the quality and integrity of evaluation processes in Europe. In addition, European national agencies are working to improve their systems and criteria for evaluating scientific projects. In Portugal, for example, the Foundation for Science and Technology I.P. (FCT, I.P.) periodically evaluates Research and Development (R&D) Units with international evaluators, assessing their scientific and technological activities, strategic objectives, activity plans, and future organisation (FCT, 2023).

Tymoshchuk, O., Silva, M., Zagalo, N. and Oliveira, L.

From Data to Insights: Research Centre Performance Assessment Model (PAM).

DOI: 10.5220/0012550600003690

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 26th International Conference on Enterprise Information Systems (ICEIS 2024) - Volume 1, pages 180-188

ISBN: 978-989-758-692-7; ISSN: 2184-4992

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

However, establishing and maintaining adequate procedures to assess the quality of research projects remains a challenge for public and private funding organisations at national and international levels (By et al., 2022). Because each project is scientifically unique and follows its own research methodology, it is difficult to establish clear and simple indicators that account for the complexity and context of research (Patrício et al., 2018; Santos, 2022; Steelman et al., 2021).

The tendency to evaluate research productivity based on "traditional" bibliometric indicators, such as the volume of publications and their citations, has been questioned (Scruggs et al., 2019). For example, metrics such as the SCImago Journal Rank (SJR) and the Journal Impact Factor (JIF, Clarivate) were not originally designed to evaluate research quality (Santos, 2022).

Consequently, several studies argue for a qualitative approach to assessing research quality, emphasising the need for new evaluation approaches that offer a realistic and comprehensive view of the value of research (Patrício et al., 2018; Saenen et al., 2019). As Steelman et al. (2021) note, the results of scientific research go beyond academic work, so their evaluation should also consider contributions to positive changes in economic, social, and/or environmental contexts. Socially relevant knowledge does not always coincide with scientific relevance or a high volume of publications.

Furthermore, some studies argue for considering temporal phases when assessing the short-, medium-, and long-term benefits of research (Trochim et al., 2008). The model proposed by these authors defines short-term markers to assess immediate activities and results, such as training, collaboration, transdisciplinary integration, and financial management. Intermediate markers denote progress in developing science, models, and methods, as well as recognition, publications, communications, and improved interventions. Long-term indicators include the impact of research on professionals, decision-makers, and society in general. This logic model is valuable because it allows patterns of change in research impact to be observed over time.
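As an illustration only, the temporal logic described above can be sketched as a lookup from markers to phases. The marker strings and the `group_by_phase` helper are hypothetical; the mapping simply restates the three groups attributed to Trochim et al. (2008) and is not their instrument.

```python
from collections import defaultdict

# Hypothetical mapping of the markers listed above to the three temporal
# phases of the logic model (short-term, intermediate, long-term).
PHASE_OF_MARKER = {
    "training": "short",
    "collaboration": "short",
    "transdisciplinary integration": "short",
    "financial management": "short",
    "scientific development": "intermediate",
    "models": "intermediate",
    "methods": "intermediate",
    "recognition": "intermediate",
    "publications": "intermediate",
    "communications": "intermediate",
    "improved interventions": "intermediate",
    "impact on professionals": "long",
    "impact on decision-makers": "long",
    "impact on society": "long",
}


def group_by_phase(observed_markers):
    """Group observed markers by phase so change can be followed over time."""
    phases = defaultdict(list)
    for marker in observed_markers:
        # Markers missing from the mapping are set aside rather than guessed.
        phases[PHASE_OF_MARKER.get(marker, "unclassified")].append(marker)
    return dict(phases)
```

Repeating this grouping for successive reporting periods would show how a project's evidence shifts from short-term to long-term markers.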

Many models proposed by the international scientific community aim to make evaluation fairer and more impartial, using quantitative and qualitative approaches to identify "success stories" in research (Patrício et al., 2018; Silvius and Schipper, 2020).

Among the proposed models there are approaches

such as "input-process-output", "input-output-

outcome-impact", "input-activities output-outcome-

impact", or "input-output-impact", which emphasize

measurements and weightings throughout the

knowledge production process (European

Commission, 2009; Frey & Widmer, 2009; Djenontin

& Meadow, 2018). For example, Frey & Widmer

(2009) propose evaluating a project by analysing its

efficiency about the effectiveness of its performance.

This model mainly distinguishes input, process,

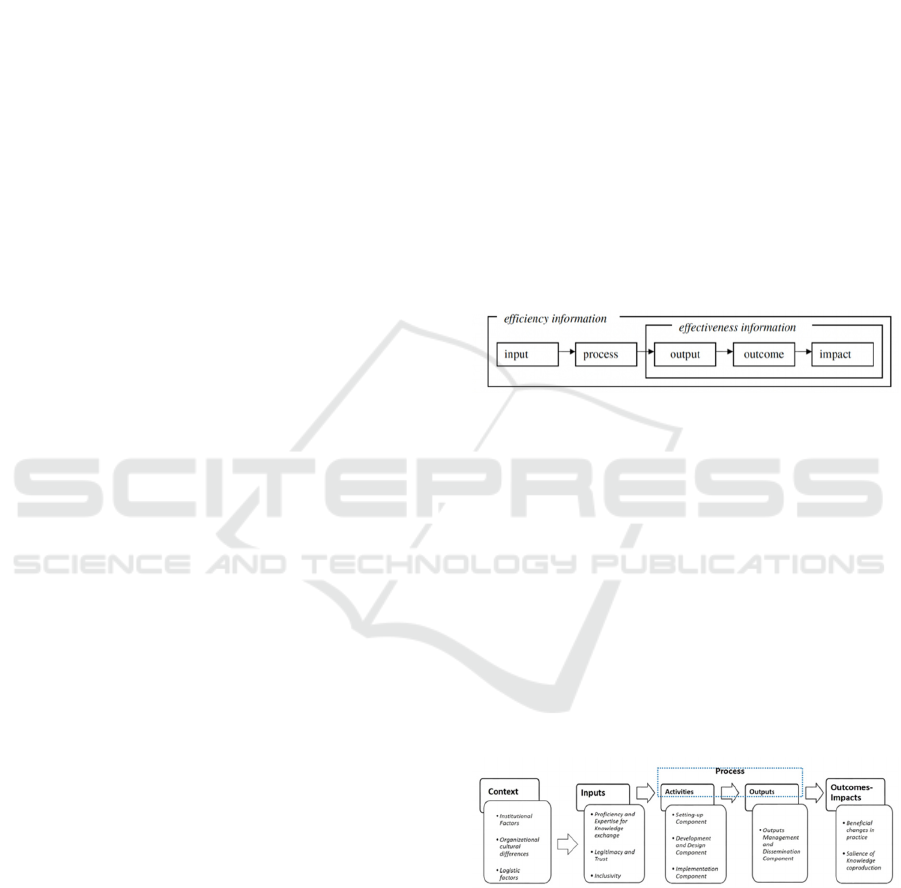

output, outcome, and impact (Figure 1).

Figure 1: Project assessment model by Frey and Widmer

(2009).

This model considers various aspects of the project, including the resources invested (input), the activities carried out (process), the deliverables produced (output), the changes or results achieved (outcome), and the broader effects or influence generated (impact). According to the authors, truly assessing effectiveness requires considering the relationship between efficiency and effectiveness and evaluating the project's performance holistically across all of these dimensions.

Most project study models are focused on biology

and medicine, as depicted in the figure below.

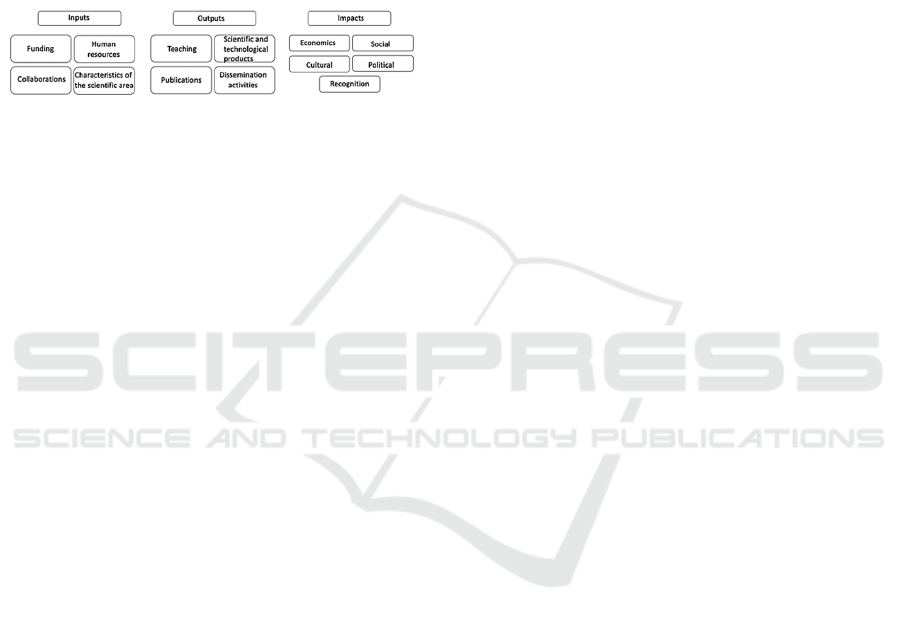

Figure 2: Factors involved in knowledge co-production

activities by Djenontin and Meadow (2018).

The co-production of knowledge applies principles and processes that build on the logic model developed by the Centers for Disease Control and Prevention (CDC, 2004). This model comprises Context, Inputs, Process (Activities and Outputs), and Outcomes-Impacts, and it illustrates the placement and significance of each variable within a project management framework. This approach organises and presents outcomes, enabling researchers and project managers involved in co-production work to critically evaluate each stage of their project cycle. This evaluation helps improve activity planning (Djenontin & Meadow, 2018).

Another model for evaluating scientific projects

was developed in Portugal, validated in three

polytechnic higher education institutions (Patrício et

al., 2018).

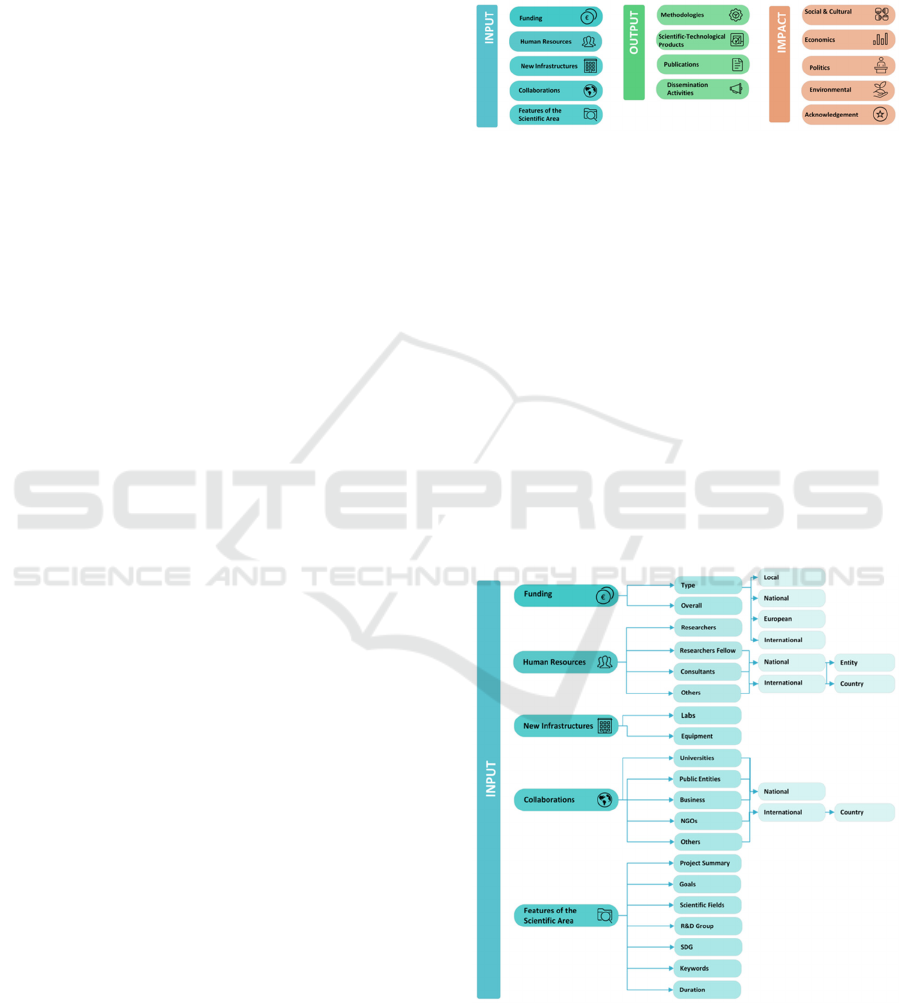

Figure 3: Applied research performance evaluation model

by Patrício et al. (2018) (adapted by authors).

Figure 3 shows the model's three main dimensions:

i) Input - refers to the resources needed to implement interventions, measuring the quantity, quality, and timeliness of these resources, including policies, human resources, materials, and financial resources.

ii) Output - encompasses the most valued results, including scientific publications (articles, books, and book chapters) and scientific communications.

iii) Impact - covers a variety of indicators, considering different dimensions and values, as well as positive, desirable, unforeseen, direct, and indirect effects in the short, medium, and long term (Patrício et al., 2018).

It is crucial to apply these indicators flexibly, considering the peculiarities of each research area, the difficulty of measuring impacts, and the time needed to evaluate results across short-, medium-, and long-term goals (Patrício et al., 2018; Silvius and Schipper, 2020).

Most of the models identified in this study were designed to address a particular issue, such as evaluating projects to allocate funding. The Project Assessment Model (CCA, 2012) is presented as a conceptual framework that defines the objective of the evaluative study. It helps research funders determine the type of contributions they can make to the financing process and the information needed to establish key indicators (Inputs, Outputs, Impacts on Science, and Socio-Economic Impacts), each linked to a contribution pathway, information needs, potential indicators, and potential data sources.

Discovery research activities require inputs, which encompass both current and retrospective measures. These measures are typically dependable and readily accessible, and they can be obtained at various levels of aggregation; the data they draw on have been collected and developed over an extended period (Cvitanovic et al., 2016). Outputs, shaped by the intricate, dynamic, and uncertain nature of the research process, are a valuable source of intermediate data, showing gradual progress and contributions towards scientific advancement as well as the anticipated long-term effects (Castellanos et al., 2013). Impacts indicate the extent to which outcomes align with the stated objectives of the funding initiative. Unlike inputs or outputs, impacts manifest over several time frames, reflecting the intricate, dynamic, and uncertain nature of research (Bautista et al., 2017).

As research becomes increasingly collaborative and interdisciplinary, it is crucial to develop new evaluation methods that capture the full impact and value of scientific contributions (Silvius and Schipper, 2020). Such methods should include traditional metrics, such as citations and publications, while also considering factors such as data sharing, open science practices, and societal impact. By embracing these challenges, we can ensure that the evaluation of scientific research keeps pace with the evolving landscape of digital transformation.

2 METHOD

This study was conducted by the DigitalOBS team at the DigiMedia Research Centre at the University of Aveiro. Using an explanatory methodology, the study aimed to better understand the results and impacts of activities within projects funded by international and national sources and to define the research centre's development strategies. To achieve these goals, a model was developed to evaluate the productivity of a research centre. The PAM model incorporates data analysis and visualisation tools, enabling researchers to present data quickly and easily through interactive reports and dashboards.

The methodology used in this study began with identifying relevant indicators for evaluating the outcomes of funded scientific research projects. Building on the insights of Frey and Widmer (2009), Patrício et al. (2018), Cvitanovic et al. (2016), and Djenontin and Meadow (2018), we recognised the need for a more concise model. The researchers organised all collected data according to the "input-output-impact" model to simplify the evaluation process and minimise bureaucratic obstacles. This model allows qualitative assessments and quantitative indicators to be integrated. Moreover, it facilitates the extraction of pertinent information from existing project reports, reducing administrative complexity. By contrast, the more intricate "input-process-output-outcome-impact" models proved overly complex: they made information difficult to retrieve, particularly for projects that had been concluded some time ago (Frey & Widmer, 2009).
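The input-output-impact organisation described above can be sketched as a simple record type. This is a minimal Python sketch under our own assumptions: the class `ProjectRecord`, its field names, and the rule that text values count as qualitative while numbers count as quantitative are hypothetical choices for illustration, not part of PAM as published.

```python
from dataclasses import dataclass, field
from typing import Dict, Union

# An indicator value is either a number (quantitative) or free text (qualitative).
Value = Union[int, float, str]


@dataclass
class ProjectRecord:
    """One funded project organised along input-output-impact (illustrative)."""
    name: str
    inputs: Dict[str, Value] = field(default_factory=dict)
    outputs: Dict[str, Value] = field(default_factory=dict)
    impacts: Dict[str, Value] = field(default_factory=dict)

    def split_by_kind(self):
        """Separate quantitative and qualitative indicators across all dimensions."""
        quantitative, qualitative = {}, {}
        for dimension, indicators in (("input", self.inputs),
                                      ("output", self.outputs),
                                      ("impact", self.impacts)):
            for indicator, value in indicators.items():
                # Text is treated as qualitative evidence, numbers as quantitative.
                target = qualitative if isinstance(value, str) else quantitative
                target[(dimension, indicator)] = value
        return quantitative, qualitative
```

Keeping both kinds of value in one record mirrors the model's aim of integrating qualitative and quantitative data; a fuller implementation would need richer types (dates, categories, links to evidence).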

Through the analysis of existing evaluation models, two were identified as the most suitable for the intended evaluation: the scientific production model (Patrício et al., 2018) and the Results Logic Model (Trochim et al., 2008). Based on this analysis, three main dimensions of analysis were identified: input, output, and impact. Subcategories and indicators were proposed for each dimension based on the analysed models. These dimensions and indicators are presented in detail in the following section.

The PAM model was then tested by evaluating ten projects with different types of funding (national and international). The test allowed the model to be customised to the requirements of the Research Centre by eliminating indicators deemed non-essential or difficult to gather. For instance, metrics such as "Magnitude of sales/revenues/profits generated from goods or services" and "Operational expenditures" were revised because they required complex data collection.
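The customisation step can be pictured as a filter over candidate indicators, followed by the addition of centre-specific ones. In this hypothetical sketch, the `essential` and `easy_to_collect` flags, the dimension given to each example indicator, and the helper names are assumptions for illustration; the paper describes these decisions qualitatively and does not publish such flags.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Indicator:
    name: str
    dimension: str          # "input", "output" or "impact" (illustrative assignment)
    essential: bool         # hypothetical flag: judged relevant for the Research Centre
    easy_to_collect: bool   # hypothetical flag: data available in project reports


def prune(indicators: List[Indicator]) -> List[Indicator]:
    """Keep only indicators that are both essential and feasible to gather."""
    return [i for i in indicators if i.essential and i.easy_to_collect]


def add_indicators(indicators: List[Indicator], extra: List[Indicator]) -> List[Indicator]:
    """Append centre-specific indicators, skipping names already present."""
    existing = {i.name for i in indicators}
    return indicators + [i for i in extra if i.name not in existing]
```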

However, the specific characteristics of the projects developed at the DigiMedia Research Centre made it necessary to include several additional indicators, such as "New infrastructures" in the input dimension. The added indicators, particularly in the "scientific-technological products" sub-dimension, are crucial for streamlining data collection and help researchers catalogue and organise their data effectively.

The developed model was subsequently applied to evaluate 10 funded projects conducted at the Research Centre, which allowed minor adjustments and validated the model. The model is currently being used, together with the Power BI tool, to analyse the scientific activity of research projects.

3 RESULTS

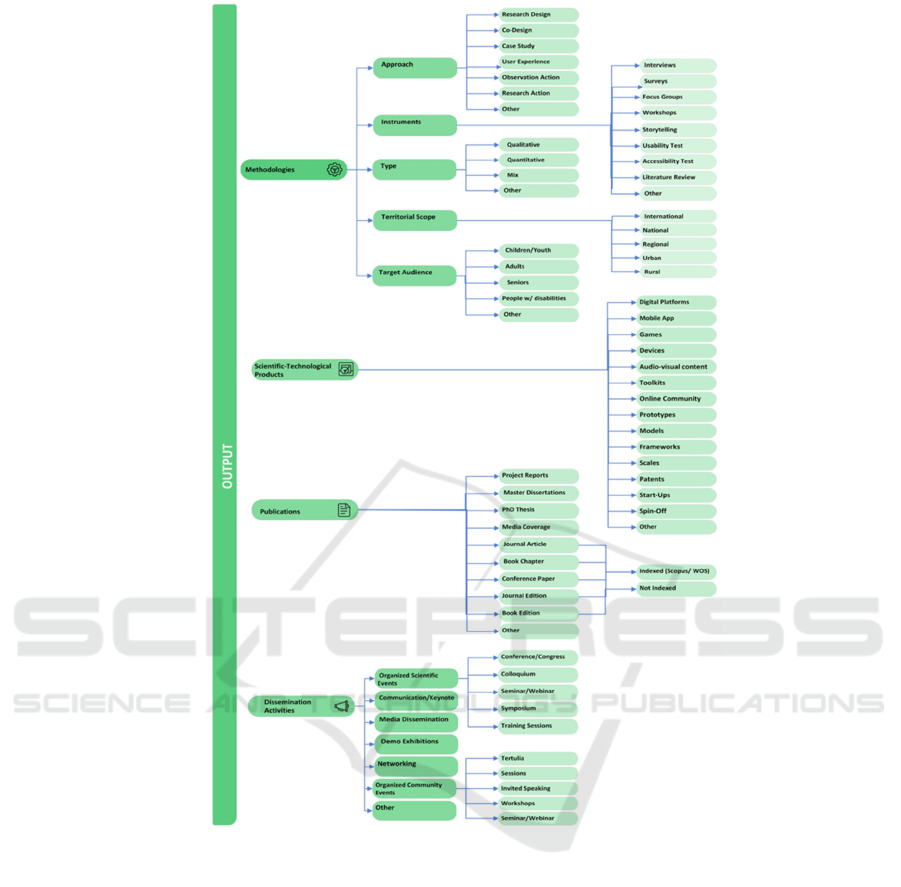

The developed PAM provides a comprehensive conceptual and analytical framework for presenting and evaluating scientific activities, project results, and their potential impacts on society. By identifying the key variables to be measured and integrating the qualitative and quantitative data typically obtained from scientific projects, the model allows for a more thorough understanding and interpretation of the collected data (Figure 4).

Figure 4: Performance Assessment Model (PAM).

Based on the analysis of existing models, three main dimensions of analysis were considered: input, output, and impact (Attachment 1: https://doi.org/10.5281/zenodo.10678914).

3.1 Input Dimension

The initial phase of this model involves a comprehensive analysis of project inputs, which encompass the crucial elements required for the successful implementation of scientific projects. The Input dimension analyses the resources allocated to the project under scrutiny. It includes five main sub-areas: Funding, Human Resources, New Infrastructures, Collaborations, and Features of the Scientific Area (Figure 5).

Figure 5: Input dimension of the PAM model.

Analysis of the "Funding" sub-dimension provides an overview of the main trends in securing financial resources for projects, allowing researchers to make informed decisions and devise strategies to optimise funding opportunities. Evaluating the human resources involved in scientific projects, the focus of the "Human Resources" sub-dimension, is also crucial.

Figure 6: Output dimension of the PAM model.
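To illustrate the kind of trend analysis the Funding sub-dimension supports, the sketch below totals funding per year and funding type. The row layout, the amounts, and the function `funding_by_year_and_type` are hypothetical; PAM does not prescribe this computation.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical funding rows: (project, start year, funding type, amount in EUR).
FundingRow = Tuple[str, int, str, float]


def funding_by_year_and_type(rows: List[FundingRow]) -> Dict[int, Dict[str, float]]:
    """Total funding per start year, split by funding type."""
    totals: Dict[int, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for _project, year, funding_type, amount in rows:
        totals[year][funding_type] += amount
    # Convert the nested defaultdicts to plain dicts, ordered by year.
    return {year: dict(by_type) for year, by_type in sorted(totals.items())}
```

Comparing the yearly totals for national and international sources is one simple way to see where a centre's funding is shifting.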

The "New Infrastructures" sub-dimension involves a detailed analysis of topics directly related to the implementation and management of both physical and technological infrastructures. This analysis gives Research Centre managers insights into the benefits that the projects bring to the overall development and progress of the Centre.

The "Collaborations" sub-dimension provides an understanding of the network of partners established within the project framework. This analysis helps identify key stakeholders, collaborations, and synergies between different organisations and research groups. It allows project managers and decision-makers to foster effective partnerships, leverage existing resources, and enhance the overall impact of projects.
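A minimal way to surface key partners from project data is to count how many projects each partner joins and how often pairs of partners appear together. The sketch assumes a hypothetical mapping from project to partner names; the threshold `min_projects=2` is an arbitrary illustrative choice, not a PAM rule.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Set


def key_partners(partners_by_project: Dict[str, Set[str]], min_projects: int = 2) -> List[str]:
    """Partners involved in at least `min_projects` projects, most frequent first."""
    counts = Counter(p for partners in partners_by_project.values() for p in partners)
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [partner for partner, n in ranked if n >= min_projects]


def co_partnerships(partners_by_project: Dict[str, Set[str]]) -> Counter:
    """Count how often each pair of partners takes part in the same project."""
    pairs = Counter()
    for partners in partners_by_project.values():
        # Sorting makes each pair key order-independent.
        for a, b in combinations(sorted(partners), 2):
            pairs[(a, b)] += 1
    return pairs
```

The pair counts are the edge weights of a simple partner network, which could then be drawn as a network chart in a visualisation tool.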

The model also incorporates a "Features of the Scientific Area" sub-dimension, which focuses on the qualitative analysis of the projects by examining their objectives, keywords, and scientific areas. By analysing these features, organisations can better understand the focus and scope of projects, identify emerging trends and research areas, and align their strategies and resources accordingly.
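Keyword analysis of this kind can start from simple frequency counts. The sketch below is an illustration under stated assumptions: it normalises keywords by trimming and lower-casing, counts each keyword at most once per project, and treats the most frequent ones as candidate trends. The function name and input layout are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def keyword_trends(keywords_by_project: Dict[str, List[str]], top: int = 3) -> List[Tuple[str, int]]:
    """Most frequent keywords across projects (case-insensitive, once per project)."""
    counts = Counter()
    for keywords in keywords_by_project.values():
        # A set prevents counting the same keyword twice within one project.
        counts.update({k.strip().lower() for k in keywords})
    return counts.most_common(top)
```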

3.2 Output Dimension

The output dimension focuses on the direct results of the actions taken, which are directly linked to the project's objectives and can be achieved in the short or medium term (Figure 6). This dimension consists of four main sub-dimensions: methodologies, scientific and technological products, publications, and dissemination activities.

The "Methodologies" sub-dimension aims to identify the main trends in digital media research by mapping in detail the methodological procedures used in the projects. The indicators used to assess the methodologies include the approach type, the specific instruments applied, the research type, the territorial scope of the projects, and the target audience studied. This comprehensive examination allows researchers to gain valuable insights and make informed observations about the evolving landscape of digital media research.
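The five methodology indicators listed above map naturally onto one record per project. In this hypothetical sketch, the `Methodology` class, the helper functions, and the example category values are our own; only the indicator names come from the text.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Methodology:
    """Methodology indicators named in the text; example values are hypothetical."""
    approach_type: str            # e.g. "qualitative", "quantitative", "mixed"
    instruments: Tuple[str, ...]  # e.g. ("survey", "interview")
    research_type: str            # e.g. "exploratory", "applied"
    territorial_scope: str        # e.g. "local", "national", "international"
    target_audience: str


def tally(methods: List[Methodology], indicator: str) -> Dict[str, int]:
    """Count projects per value of one single-valued indicator, e.g. 'approach_type'."""
    return dict(Counter(getattr(m, indicator) for m in methods))


def instrument_usage(methods: List[Methodology]) -> Dict[str, int]:
    """Count how many projects applied each instrument."""
    return dict(Counter(i for m in methods for i in set(m.instruments)))
```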

The "Scientific and Technological Products" sub-dimension encompasses the tangible outcomes of scientific research and technological innovation. These products are created by applying scientific knowledge and methods to foster innovation in various domains and address real-world challenges. The indicators established for this sub-dimension aim to clarify the complexity and purpose of these products and the key trends in their development within scientific projects.

The "Publications" sub-dimension encompasses a broad spectrum of outputs that are highly valued by numerous models and frameworks in the scientific community (Patrício et al., 2018). These publications range from articles, books, and book chapters to reports, PhD theses, master's dissertations, media coverage, and other grey literature such as brochures and information notes. Indicators for the indexing of articles in databases such as Scopus or Web of Science are also included, given their importance to some funding organisations.
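As a hedged illustration of these publication indicators, the sketch below counts publications by type and counts articles indexed in Scopus or Web of Science. The `Publication` class and its fields are hypothetical; in practice, indexing status would have to be verified against the databases themselves.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, FrozenSet, List

INDEXES = {"Scopus", "Web of Science"}


@dataclass(frozen=True)
class Publication:
    title: str
    kind: str  # e.g. "article", "book chapter", "report", "PhD thesis"
    indexed_in: FrozenSet[str] = frozenset()  # e.g. frozenset({"Scopus"})


def publication_summary(pubs: List[Publication]) -> Dict[str, int]:
    """Counts per publication type plus articles indexed in Scopus or Web of Science."""
    summary = dict(Counter(p.kind for p in pubs))
    summary["indexed articles"] = sum(
        1 for p in pubs if p.kind == "article" and p.indexed_in & INDEXES
    )
    return summary
```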

The "Dissemination" sub-dimension evaluates how the knowledge or results of projects are shared, communicated, and made accessible to stakeholders such as researchers, policymakers, and the community. Dissemination strategies and approaches may differ depending on the context, the project goals, and the nature of the information being shared; these factors influence both the choice of dissemination channels and the evaluation of the project's impact and effectiveness.

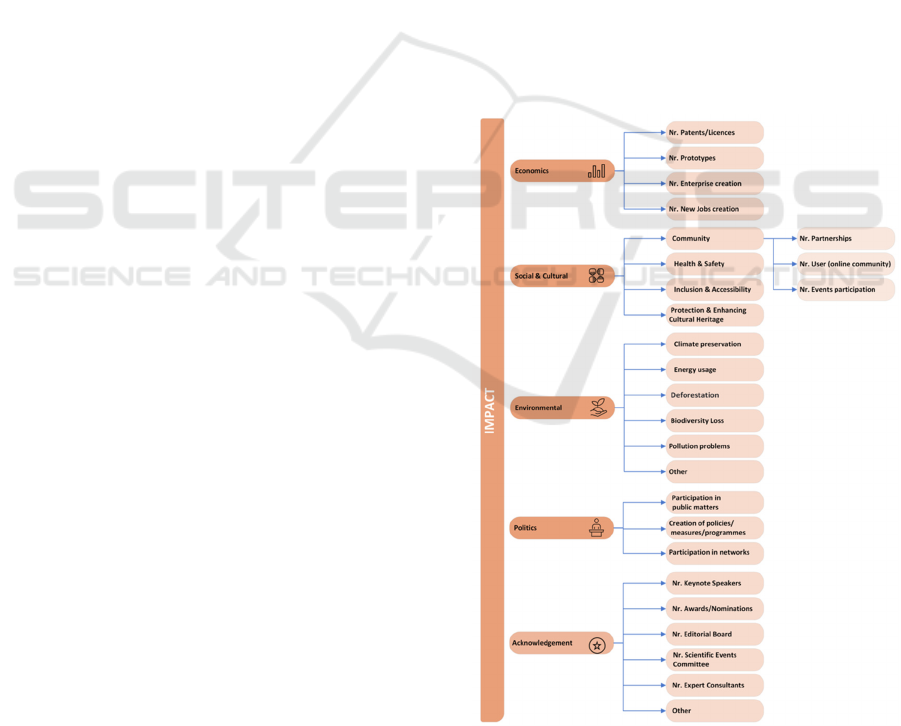

3.3 Impact Dimension

The third dimension, "Impact," focuses on a project's long-term consequences. Attributing an impact exclusively to a single project is challenging, as the outcomes of multiple projects can contribute to the same impact. In digital media, for instance, an impact could be observed as improved digital literacy among senior citizens or enhanced accessibility of mobile applications (Figure 7).

Economic, social, cultural, environmental, and political impacts capture the variability of applied research results. They represent the multifaceted results and effects that research can have on society. Measuring these impacts is crucial to understanding the real value and significance of research contributions. Impacts are not confined to tangible results; they also include intangible benefits and societal changes.

Economic impacts, for instance, extend beyond monetary gains and include benefits such as the development of new or improved products, processes, or services. Social impacts involve changes to social conditions, practices, and habits. Cultural impacts are reflected in the involvement and engagement of communities and in changes to cultural practices and products; they can influence behavioural patterns and shape cultural norms.

Figure 7: Impact dimension of the PAM model.

Political impacts deepen specific social impacts by bringing about changes at the level of political activities and public issues. These effects can lead to transformative changes that benefit individuals, communities, and society.

Environmental impacts can include the promotion of active citizenship, increased participation in public environmental activities, and greater community resilience and sustainability.

Recognition, a more measurable impact, can be quantified through the number of invitations, awards, and other forms of acknowledgement. The aim is to assess project impact as an integral part of the science-society relationship, showcasing the outcomes and effects of applied research.
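The distinction between qualitative impact evidence and countable recognition can be sketched as follows. The tuple of impact types restates the categories discussed in this section; the `ImpactEvidence` class, its `count` field, and the helper functions are hypothetical, and summing counts is only one simple way to quantify recognition.

```python
from dataclasses import dataclass
from typing import Dict, List

IMPACT_TYPES = ("economic", "social", "cultural", "political", "environmental", "recognition")


@dataclass
class ImpactEvidence:
    impact_type: str   # one of IMPACT_TYPES
    description: str   # qualitative evidence, e.g. a change in social practices
    count: int = 0     # quantitative evidence where it exists (e.g. number of awards)

    def __post_init__(self):
        if self.impact_type not in IMPACT_TYPES:
            raise ValueError(f"unknown impact type: {self.impact_type}")


def recognition_score(evidence: List[ImpactEvidence]) -> int:
    """Total invitations, awards and other acknowledgements recorded."""
    return sum(e.count for e in evidence if e.impact_type == "recognition")


def by_type(evidence: List[ImpactEvidence]) -> Dict[str, List[str]]:
    """Qualitative descriptions grouped per impact type."""
    grouped: Dict[str, List[str]] = {t: [] for t in IMPACT_TYPES}
    for e in evidence:
        grouped[e.impact_type].append(e.description)
    return grouped
```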

The PAM model aims to help research centres understand the broad impact of ongoing research activities. This facilitates effective communication not only with society at large but also with science funders. The goal is to foster the creation of knowledge and diverse perspectives, providing decision-makers with the insights they need to make well-informed choices for the Research Centre's optimal progress and expansion.

3.4 Data Presentation Using Power BI

Project results data collected using the PAM model were transferred from an Excel file to Power BI. Using these data, the team developed a Power BI report with the following sections: Projects, Human Resources, Collaborations, Scientific Area, Applied Methodologies, Products, Publications, and Dissemination Activities (Figure 8). Each section was designed to provide precise insights into specific aspects of the projects, supporting decision-making and strategy development (Silva et al., 2024).

Figure 8: Data presentation using Power BI.
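The paper does not describe the layout of the Excel file. One common approach, assumed here rather than taken from the study, is to flatten the nested project/dimension/indicator structure into a long table with one row per indicator value, which a report can then slice by project, dimension, or indicator. The sketch writes CSV with Python's standard `csv` module as a stand-in for the Excel sheet; all names are hypothetical.

```python
import csv
import io
from typing import Dict, List, Union

Value = Union[int, float, str]
FIELDS = ["project", "dimension", "indicator", "value"]


def to_long_rows(projects: Dict[str, Dict[str, Dict[str, Value]]]) -> List[Dict[str, Value]]:
    """Flatten {project: {dimension: {indicator: value}}} into one row per indicator."""
    rows = []
    for project, dimensions in projects.items():
        for dimension, indicators in dimensions.items():
            for indicator, value in indicators.items():
                rows.append({"project": project, "dimension": dimension,
                             "indicator": indicator, "value": value})
    return rows


def to_csv(rows: List[Dict[str, Value]]) -> str:
    """Serialise the long table as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

In a long table, adding a new indicator does not change the column layout; the trade-off is that numeric and text values share one column, so numeric analysis would require filtering or a separate typed column.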

These reports and dashboards support the effective communication of knowledge and conclusions. They present complex information in a simple, understandable way, making it easier for researchers to share their findings, communicate their insights, and influence decision-making. Overall, Power BI changes how researchers handle and present data, making the process more efficient, comprehensive, and communicative.

4 CONCLUSIONS

The Performance Assessment Model (PAM) provides a framework for comprehensively evaluating the results and impacts of funded scientific projects. It simplifies the evaluation process into three main dimensions (input, output, and impact), offering a structured approach to understanding the multifaceted nature of research endeavours.

One of the PAM model's key strengths is its ability to integrate qualitative and quantitative data, enriching the evaluation process. Carefully selected indicators within each dimension provide insight into critical aspects such as funding trends, human resources composition, research methodologies, scientific and technological products, and the dissemination of research results. The model's successful application to research projects and its ongoing use in analysing scientific activities within our research centre highlight its practicality and relevance for research management.

It is vital to adapt evaluation models to the evolving research landscape, including by integrating innovative InfoVis tools. Power BI allows researchers to present project data in a visual, engaging, and interactive way. It enables the analysis of all project results and the creation of project-specific dashboards. By combining the PAM model with this software's features, researchers can create and share interactive reports and dashboards, simplifying analysis and knowledge communication. This streamlined approach not only saves time but also improves data presentation.

However, this study faced limitations related to finding up-to-date models. Many of the models found needed updating to align with current scientific advances, particularly in digital technologies. Additionally, most of the models identified were designed to assess project proposals for funding rather than project outcomes and impacts.

Adapting the PAM model to other research areas could be highly beneficial. Thanks to its clear structure and flexibility, the model can easily be customised to meet the specific needs of various fields, enabling research centres to gain a deeper understanding of their projects and increase their societal contributions.


ACKNOWLEDGEMENTS

This work is financially supported by national funds through FCT – Foundation for Science and Technology, I.P., under the project UIDB/05460/2020.

REFERENCES

Bai, L., Sun, Y., Shi, H., Shi, C., Han, X. (2022). Dynamic assessment modelling for project portfolio benefits. Journal of the Operational Research Society, 73(7), 1596-1619. https://doi.org/10.1080/01605682.2021.1915193

Bautista, S., Llovet, J., Ocampo-Melgar, A., Vilagrosa, A., Mayor, Á., Murias, C., Orr, B. (2017). Integrating knowledge exchange and the assessment of dryland management alternatives - a learning-centered participatory approach. Journal of Environmental Management, 195, 35-45. https://doi.org/10.1016/j.jenvman.2016.11.050

Castellanos, E., Tucker, C., Eakin, H., Morales, H., Barrera, J., Díaz, R. (2013). Assessing the adaptation strategies of farmers facing multiple stressors: lessons from the Coffee and Global Changes project in Mesoamerica. Environmental Science & Policy, 26, 19-28. https://doi.org/10.1016/j.envsci.2012.07.00

CDC [Centers for Disease Control and Prevention] (2004). Evaluation guide: developing and using a logic model. Department of Health and Human Services, Atlanta, GA. https://www.cdc.gov/dhdsp/docs/logic_model.pdf

Council of Canadian Academies (2012). Informing Research Choices: Indicators and Judgment. The Expert Panel on Science Performance and Research Funding. https://cca-reports.ca/reports/informing-research-choices-indicators-and-judgment/

Cvitanovic, C., McDonald, J., Hobday, A. J. (2016). From science to action: principles for undertaking environmental research that enables knowledge exchange and evidence-based decision-making. Journal of Environmental Management, 183, 864-874. https://doi.org/10.1016/j.jenvman.2016.09.038

Djenontin, I., Meadow, A. (2018). The art of co-production of knowledge in environmental sciences and management: lessons from international practice. Environmental Management, 61, 885-903. https://doi.org/10.1007/s00267-018-1028-3

European Science Foundation (2011). European Peer Review Guide: Integrating Policies and Practices into Coherent Procedures. Ireg – Strasbourg. https://www.esf.org/fileadmin/user_upload/esf/European_Peer_Review_Guide_2011.pdf

European University Association (2019). Research Assessment in the Transition to Open Science 2019. https://eua.eu/downloads/publications/research%20assessment%20in%20the%20transition%20to%20open%20science.pdf

FCT (2023). FCT publishes the Notice of the Call of the Evaluation of R&D Units 2023-2024. https://www.fct.pt/en/fct-publica-o-aviso-de-abertura-da-avaliacao-das-unidades-de-id-2023-2024/

Frey, K., Widmer, T. (2009). The role of efficiency analysis in legislative reforms in Switzerland. In 5th ECPR General Conference, Potsdam, Germany. https://www.zora.uzh.ch/id/eprint/26718/8/Frey1.pdf

Patrício, M., Alves, J., Alves, E., Mourato, J., Santos, P., Valente, R. (2018). Avaliação do desempenho da investigação aplicada no ensino superior politécnico: Construção de um modelo [Performance evaluation of applied research in polytechnic higher education: Building a model]. Sociologia, Problemas e Práticas, (86), 69-89. https://journals.openedition.org/spp/4053

Saenen, B., Morais, R., Gaillard, V., Borrell-Damián, L. (2019). Research Assessment in the Transition to Open Science: 2019 EUA Open Science and Access Survey Results. European University Association.

Sandoval, J., Lucero, J., Oetzel, J., Avila, M., Belone, L., Mau, M. (2012). Process and outcome constructs for evaluating community-based participatory research projects: a matrix of existing measures. Health Education Research, 27(4), 680-690. https://doi.org/10.1093/her/cyr087

Santos, J. (2022). On the role and assessment of research at European Universities of Applied Sciences. Journal of Higher Education Theory and Practice, 22(14). https://doi.org/10.33423/jhetp.v22i14.5537

Scruggs, R., McDermott, P., Qiao, X. (2019). A Nationwide Study of Research Publication Impact of Faculty in U.S. Higher Education Doctoral Programs. Innovative Higher Education, 44(1), 37-51. https://doi.org/10.1007/s10755-018-9447-x

Science Europe (2021). Practical Guide to the International Alignment of Research Data Management. https://www.scienceeurope.org/media/4brkxxe5/se_rdm_practical_guide_extended_final.pdf

Silva, M., Duran, L., Bermudez, S., Ferreira, F., Tymoshchuk, O., Oliveira, L., Zagalo, N. (2024). The power of Information Visualization for understanding the impact of Digital Media projects. In Proc. 26th International Conference on Enterprise Information Systems (in press).

Silvius, G., Schipper, R. (2020). Sustainability Impact Assessment on the project level; A review of available instruments. The Journal of Modern Project Management, 8(1). https://doi.org/10.19255/JMPM02313

Steelman, T., Bogdan, A., Mantyka-Pringle, C., Bradford, L., Baines, S. (2021). Evaluating transdisciplinary research practices: insights from social network analysis. Sustainability Science, 16, 631-645. https://doi.org/10.1007/s11625-020-00901-y

Teixeira, A., Sequeira, J. A. (2011). Assessing the influence and impact of R&D institutions by mapping international scientific networks: the case of INESC Porto. Economics and Management Research Projects: An International Journal, 1(1), 8-19.

Trochim, W., Marcus, S., Mâsse, L., Moser, R., Weld, P. (2008). The evaluation of large research initiatives: a participatory integrative mixed-methods approach. American Journal of Evaluation, 29(1), 8-28. https://doi.org/10.1177/1098214007309280

Walter, A., Helgenberger, S., Scholz, R. (2007). Measuring societal effects of transdisciplinary research projects: design and application of an evaluation method. Evaluation and Program Planning, 30(4), 32. https://doi.org/10.1016/j.evalprogplan.2007.08.002
