Virtual3R: A Virtual Collaborative Platform for Animal

Experimentation

Mohamed-Amine Abrache

a

and Lahcen Oubahssi

b

Laboratoire d'Informatique de l'Université du Mans, Le Mans Université, LIUM, EA 4023,

Avenue Messiaen, 72085 Le Mans Cedex 9, France

Keywords: Virtual Reality, Virtual Learning Environments, Collaborative Platform, Animal Experimentation, Virtual3R,

Immersive Learning, Educational Technology.

Abstract: The purpose of this article is to highlight the ongoing work within the framework of a research project named

Virtual3R. The primary objective of this project is to introduce an alternative method, based on Virtual Reality

(Virtual3R platform), to reduce the reliance on live animals for training in biological engineering departments

across France. The overarching goal in this regard is to provide learners with the basic technical procedures

and gestures before engaging in real animal experimentation. The platform emphasizes its pedagogical

contribution by providing a dynamic and collaborative learning environment for both teachers and learners.

The technical framework supporting this perspective is based on an architectural design with different

functional layers. This paper presents an overview of the platform's functional architecture, offering

descriptions for each of its modules. We also present the results of an experiment with the platform,

which indicate learners' overall satisfaction with the virtual platform. The findings support

the platform's efficacy as a user-friendly and collaborative learning environment. These findings also validate

the platform's pedagogical value, demonstrating its beneficial impact on knowledge acquisition and learners'

active participation in the virtual environment.

1 INTRODUCTION

Virtual reality (VR) has become an essential

component of modern technological progress, with

applications in a wide range of fields (Kumari &

Polke, 2019). In this paper, our main focus is on the

use of this technology in the learning context.

Indeed, the overall aim of our research is to

contribute to the design and development of Virtual

Learning Environments (VLEs) in partnership with

teachers, ensuring alignment with their pedagogical

requirements. In addition, we intend to assist and

provide tools for educators for creating and

developing VLEs that are adapted to their

pedagogical needs.

In this educational context, VR has the potential

to significantly improve the learning process by

providing a more practical and engaging experience

compared to traditional learning approaches (Allcoat

& Mühlenen, 2018). Furthermore, VR also supports

a

https://orcid.org/0000-0002-3457-8914

b

https://orcid.org/0000-0002-2933-8780

the development of virtual applications oriented towards

collaborative learning (Affendy & Wanis, 2019;

Zheng et al., 2018). In this regard, collaborative work

on a virtual activity can be highly beneficial for video

conferencing and interactive learning procedures

within virtual worlds (Najjar et al., 2022).

Within the educational landscape, these merits of VR

technology are particularly apparent in

educational disciplines that require authentic and

hands-on engagement (Sala, 2020), such as

Biological Science Education (BSE).

Indeed, the integration of VR in BSE can

transform the way students are trained and educated

in animal experimentation. VR provides learners with

engaging and immersive experiences, allowing them

to develop a better understanding of animal anatomy

and learn how to perform suitable gestures during

animal experiments (Oubahssi & Mahdi, 2021).

Biology education traditionally encounters ethical

concerns regarding animal use in learning settings,

Abrache, M. and Oubahssi, L.

Virtual3R: A Virtual Collaborative Platform for Animal Experimentation.

DOI: 10.5220/0012605500003693

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 1, pages 54-65

ISBN: 978-989-758-697-2; ISSN: 2184-5026

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

necessitating a reduction in such usage (Oakley,

2012). Ormandy et al. (2022) support integrating VR

into BSE to enhance training effectiveness while

upholding ethical considerations. This approach

aligns with the principles of the 3R rule, which

advocates for replacement, reduction, and refinement,

promoting ethical considerations in the educational

process (Lemos et al., 2022).

This article presents the Virtual3R research

project, aimed at proposing a VR-based alternative to

reduce animal usage in biological engineering

departments in France. The goal is to help learners

acquire proficiency in basic technical procedures and

gestures before real animal experimentation. The

platform emphasises instructional contributions by

providing a stimulating and collaborative learning

environment. In this regard, an instructor-centred

iterative approach is adopted, promoting continuous

partnership and feedback loops to address

instructional needs (Garcia-Lopez et al., 2020).

An experiment conducted as part of this study with

students in biological science yielded promising

findings with regard to the platform's user-friendliness

and its effectiveness in facilitating skill development.

This paper is organised as follows: in the next

section, a selected literature review of virtual reality

in education and collaborative virtual learning

environments is presented, along with an

overview of the virtual reality approaches applied to

animal experimentation. Then, the architecture of the

proposed platform is described in Section 3. In

Section 4, we outline the experimental procedure that

was undertaken to assess the platform's utility. The

final section provides a comprehensive conclusion to

this study, as well as perspectives for future research.

2 LITERATURE REVIEW

2.1 VR in Education: Integration and

Pedagogical Approaches

In the educational context, the integration of VR as a

learning modality facilitates knowledge retention,

enhances technical, behavioural and interpersonal

skills, along with creating dynamic learning situations

(Fussell & Truong, 2021; Howard & Gutworth, 2020;

Nassar et al., 2021; Schmid et al., 2018).

The use of VR technology offers active channels

for knowledge transmission and creates captivating

environments that increase learners' confidence in

real-life situations (Young et al., 2020). It also

provides more accessibility than physical classrooms,

breaking down conventional barriers and resulting in

increased engagement, involvement, communication,

and creativity (Wang et al., 2021).

Integration of VR in the educational setting

ensures a secure learning environment, enhances

student motivation, and facilitates the understanding

of complex concepts (King, 2016; Majewska &

Vereen, 2023; Sukmawati et al., 2022).

VR experiences foster comprehension and

investigation of complex concepts through various

learning approaches, enhancing creativity, assistive

technologies, and student involvement (Dailey-

Hebert et al., 2021). The merging of VR technology

with educational practices provides a comprehensive

strategy that improves the quality of learning

experiences and introduces a new era marked by

innovation, openness, safety, and progress (Hickman

& Akdere, 2018).

According to Huang & Liaw (2018), VR has the

potential to support the integration of numerous

learning theories, including constructivism,

connectivism, and Gardner's multiple intelligences.

Previous research has established two theoretical

paradigms governing the use of VR as an

instructional tool: Situated learning and Embodied

learning. Situated learning promotes active

participation in a given topic, focusing on authentic

experiences that closely mirror real-life personal or

professional challenges (Lave, 2012). VR can be

used to connect traditional classrooms with true-to-

life situations (Dawley & Dede, 2014). For example,

students can study virtual specimens in a way that

closely simulates real dissection. Embodied learning,

on the other hand, involves all five senses, making

learning more complete and more efficient

(Skulmowski & Rey, 2018).

The combination of VR and embodied learning

increases learners' sensory engagement by allowing

them to interact with and manipulate virtual objects

and systems (Erkut & Dahl, 2018).

2.2 Collaborative Virtual Learning

Environments (CVLEs)

CVLEs are immersive and interactive pedagogical

environments that promote varied interactions and

differentiated contributions (Konstantinidis et al.,

2009). They offer computer-mediated digital spaces

that enable individuals to meet, interact, and cooperate,

improving educational and collaborative endeavours

(Dumitrescu et al., 2014; Ouramdane et al., 2007).

These environments transform virtual spaces into

dynamic communication contexts, presenting

information in various ways, from simple texts to 3D

graphics (Sarmiento & Collazos, 2012). In this

context, active interaction between learners and the

virtual environment is crucial for enabling

collaborative learning (Beck et al., 2016).

From a theoretical perspective, collaborative

learning is an educational methodology that

emphasises active engagement and cooperative

efforts of learners to collectively attain shared

learning objectives (Laal & Laal, 2012). The primary

goal is to address challenges, achieve desired

outcomes, or deepen understanding within a specific

knowledge domain (Laal, 2013). Successful

implementation of collaboration within VR-based

settings involves learners actively interacting and

manipulating virtual objects to create shared

experiences (Margery et al., 1999).

Temporal dynamics contribute to the

enhancement of the collaborative atmosphere in

CVLEs, facilitating synchronous interactions among

learners regardless of their physical locations (Ellis et

al., 1991). The real-time aspect of this interaction

promotes immediate engagement and cooperation,

improving the collaborative component of the

educational process (Çoban & Goksu, 2022).

Throughout their development, educational

collaborative VR initiatives have seen diversification

and innovation. The VR-LEARNERS project

developed a virtual reality learning environment

centred on digital exhibits from European museums

(Kladias et al., 1998). The Clev-R application is among

the initiatives that broadened the range of educational

applications by facilitating collaborative engagement

in a variety of contexts (McArdle et al., 2008).

In the same direction, Jara et al. (2012)’s work

enhanced the ability for collaboration by

incorporating Virtual and Remote Laboratories

(VRLs) into frameworks for synchronous e-learning.

The use of virtual reality collaboration was expanded,

resulting in a broader range of educational

applications. For instance, Mhouti et al. (2016)

introduce a cloud-based CVLE leveraging cloud

computing to optimise resource management and

meet dynamic learner needs, fostering a flexible and

collaborative learning environment. Platforms such

as the DICODEV platform (Pappas et al., 2006) and

works such as those of Ruiz et al. (2008) and Chen et al.

(2021) illustrate the adaptability and versatility of

collaborative virtual reality environments in a variety

of educational fields.

2.3 Virtual Reality and Animal

Experimentation

VR has significantly improved biological sciences

teaching, with advancements in scene-rendering

technologies, interaction techniques, and

information-sharing mechanisms (Fabris et al., 2019;

Khan et al., 2021; Wu, 2009).

These systems enable the creation of interactive

learning environments for different educational needs

in the field of animal experimentation, allowing

learners to collaborate remotely (Jara et al., 2012;

Quy et al., 2009).

In this regard, VR offers an ethical educational

alternative to traditional animal dissection, allowing

students to acquire skills in animal anatomy while

adhering to the principles of the 3Rs (Zemanova,

2022).

In fact, virtual dissection simulators, such as those

presented by Predavec (2001) and Abdullah (2010), along

with the ViSi tool (Tang et al., 2021), introduce a new

dimension to the study of animal anatomy by

allowing students to explore 3D virtual animal

specimens, practice dissection techniques, and

explore anatomy without relying on real animals.

Vafai & Payandeh (2010) aimed to achieve a

higher level of authenticity during manipulation

by proposing an animal dissection simulator that

uses haptic feedback, providing a multi-sensory

experience and guiding users.

Besides, the VEA platform, developed by

Oubahssi & Mahdi (2021), focuses on learning the

right gestures in animal experimentation while

respecting ethical rules. In this regard, learners can

manipulate virtual objects, move around in a virtual

laboratory, and access educational resources.

Moreover, Sekiguchi & Makino (2021) proposed a

VR system that allows students to participate virtually

in the dissection of vertebrate animals, focusing on

preparation, dissection, observation, and post-

treatment. Each step is designed to teach specific

skills while fostering a deep understanding of animal

anatomy and ethics.

Compared to the tools mentioned above, our

proposal offers a comprehensive virtual laboratory

simulation that emulates the experimental

environment, allowing users to explore its different

sections and participate in preparatory activities.

Virtual3R emphasises collaboration, enabling

learners to engage in collaborative experiments

through synchronised interactions and real-time

communication. In fact, the platform's learning

activities are designed based on a collaborative-

centric approach, ensuring an engaging and

motivating educational experience. The platform is

focused on the efficiency of the learning process, as it

was developed to address the specific educational

needs of the biological engineering departments of

the University Institutes of Technology in France,

CSEDU 2024 - 16th International Conference on Computer Supported Education

identifying and fulfilling requirements that existing

tools failed to meet.

3 Virtual3R: FUNCTIONAL

ARCHITECTURE

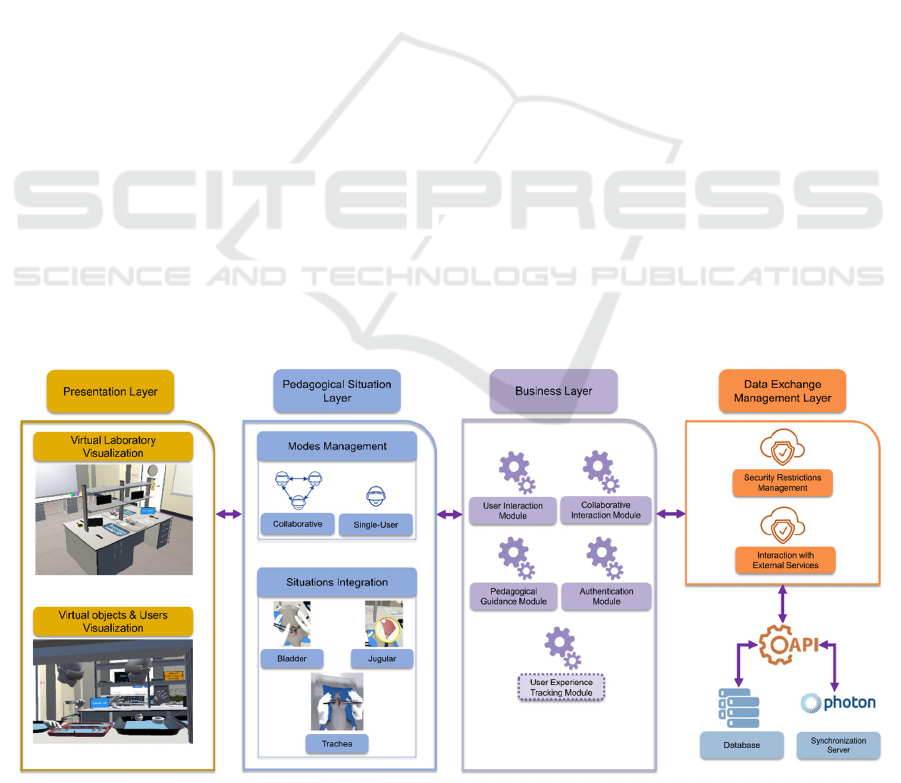

Virtual3R's architecture is based on n-tier architecture

principles, aiming to provide a modular structure with

clear segmentation of functional layers (Figure 1).

This approach ensures architectural flexibility and

allows for system maintenance and evolution without

system perturbation. Each layer plays a specific role

in creating a collaborative and pedagogical virtual

environment.

The presentation layer visually represents virtual

content and participants, providing an intuitive

interface. The Pedagogical Situations layer creates the

virtual pedagogical scenario, delivering immersive,

interactive, and authentic learning experiences.

The business layer manages various functional

modules, including user interaction, collaborative

interaction, pedagogical guidance, user

authentication, and experience tracking. The Data

Exchange Management layer facilitates real-time data

transmission and manages security restrictions for

communication with external services. This layer,

built on an API, ensures transparent connectivity,

enabling synchronised collaboration and effective

access to pedagogical content.

As mentioned in the introduction, the modelling of the platform

was performed in close partnership with the

instructors. They played a critical role in establishing

requirements, validating anatomical representation,

and systematically testing interactions with

anatomical elements during the implementation's

development. This ensures that learner interactions

are as realistic as possible, providing learners with an

immersive and accurate learning experience.

The Virtual3R Platform seamlessly integrates

Unity3D's simulation capabilities with XR

Interaction Toolkit's immersive VR features, offering

engaging learning experiences (Unity Technologies,

2024). By leveraging Photon Engine's

synchronization technology and a purpose-built API,

the platform interacts with remote components like

user administration databases and the Photon Engine

server for efficient data exchange during

collaborative sessions (PhotonEngine, 2024).

3.1 Presentation Layer

The presentation layer of Virtual3R's functional

architecture aims to enhance learners’ awareness

and immersion in the simulated environment,

fostering engagement and motivation throughout

their learning experience.

The layer focuses on two aspects: visualisation of

virtual objects and visualisation of other participants.

The module offers a realistic graphical environment,

allowing learners to explore the simulated laboratory

through captivating virtual scenes. It also facilitates

the visual exploration of resources within the virtual

laboratory's perimeter, including virtual instruments

and tools.

Figure 1: Virtual3R’s Functional Structure.

The module also enables detailed visualisation of

the virtual specimen's anatomical structures, allowing

learners to observe and interact with these structures.

The platform features interactive animations that

simulate the use of instruments at the level of the

anatomical structures, illustrating the practical aspect

and realistic impact of users' virtual actions. In

collaborative mode, avatars represent users within the

shared virtual environment, increasing awareness of

other collaborators and fostering effective

cooperation and active communication.

3.2 Pedagogical Situations Layer

The pedagogical situations layer is the platform's

core, providing an immersive and collaborative

learning experience. It guides learners through

realistic experimental situations while encouraging

individual and collaborative interactions. The

platform offers two user modes: single-user mode,

which allows autonomous application of

experimental protocols, and collaborative mode,

which promotes cooperation and real-time

interaction.

Common actions and activities within the

pedagogical situations layer are crucial aspects of the

learning experience. These include reading protocols,

preparing virtual instruments, and conducting

anaesthesia of the animal (rat). Learners can access a

comprehensive protocol for each situation, learn to

select appropriate tools, simulate intraperitoneal

anaesthesia, and practice specimen fixation.

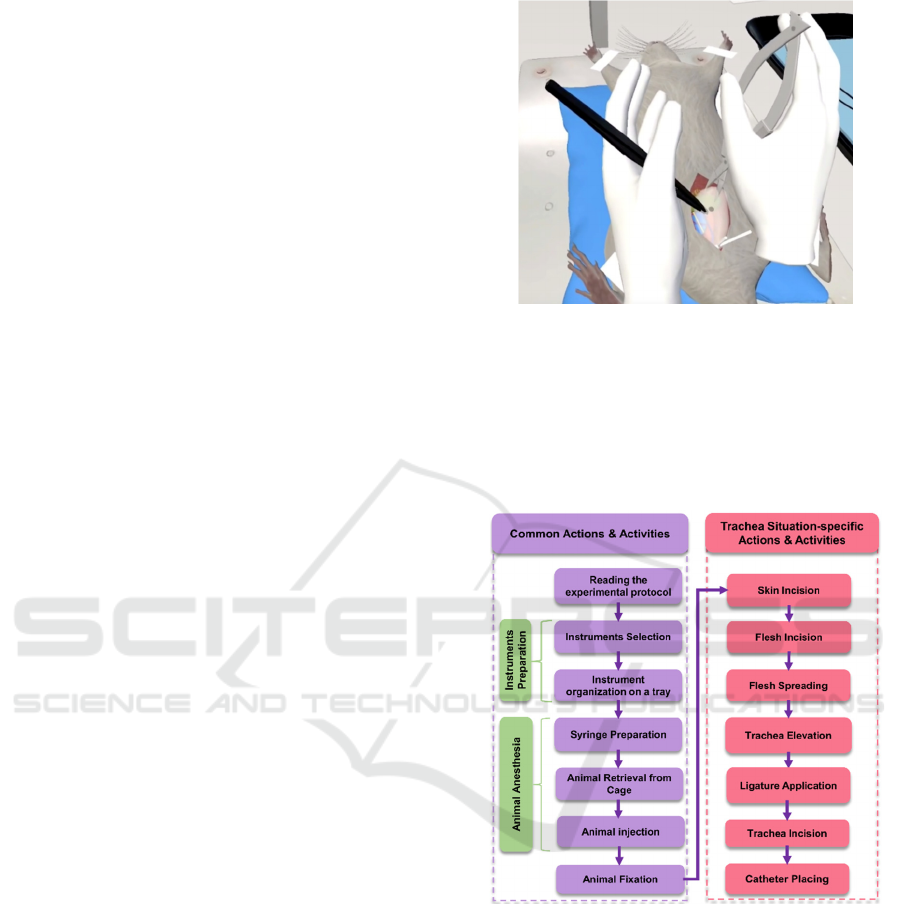

The current version of the platform features three

pedagogical situations: bladder cannulation, jugular

cannulation, and trachea cannulation. Each situation

simulates the execution of a specific cannulation

protocol, with specific actions and activities typically

taking place after immobilising the specimen. The

aim is to faithfully replicate the real experimental

protocol to the greatest extent possible.

For bladder cannulation (Figure 2), learners

execute experimental procedures such as incising the

abdominal region, scraping to elevate the bladder, and

introducing a catheter perpendicularly into its

interior.

For jugular cannulation, users learn sequential

actions such as incising the jugular, fixing the catheter

toward the heart, and applying ligatures around the

jugular. For trachea cannulation, learners practise

virtual tracheotomy according to protocol

instructions, applying ligatures around the trachea,

performing a tracheal incision, and inserting a

catheter in the direction of the lungs.

Figure 2: Educational situation Bladder cannulation.

Figure 3 illustrates the sequence of activities and

actions for the tracheal cannulation situation,

encompassing both common and situation-specific

elements. The required gestures to perform these

actions differ depending on the specific protocol for

each situation.

Figure 3: Tracheal cannulation: activities and action

sequence.
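The combination of common and situation-specific steps described above can be sketched as an ordered checklist. The following is a minimal illustration only, not the platform's implementation: the step names paraphrase the prose of this section, the ordering of the situation-specific steps simply follows the order in which the text lists them, and `SituationTracker` is a hypothetical helper introduced for this example.

```python
from dataclasses import dataclass, field

# Steps shared by every pedagogical situation, paraphrased from Section 3.2.
COMMON_STEPS = [
    "read protocol",
    "prepare instruments",
    "anaesthetise specimen",
    "fix specimen",
]

# Situation-specific steps. The ordering follows the prose and is an assumption.
SPECIFIC_STEPS = {
    "bladder": ["incise abdomen", "elevate bladder", "insert catheter"],
    "jugular": ["incise jugular", "fix catheter toward heart", "apply ligatures"],
    "trachea": ["apply ligatures", "incise trachea", "insert catheter toward lungs"],
}


@dataclass
class SituationTracker:
    """Hypothetical helper that tracks progress through one situation."""

    situation: str
    done: list = field(default_factory=list)

    @property
    def steps(self):
        # Full sequence: common steps first, then the situation-specific ones.
        return COMMON_STEPS + SPECIFIC_STEPS[self.situation]

    def next_step(self):
        """Return the next expected step, or None when the protocol is complete."""
        remaining = self.steps[len(self.done):]
        return remaining[0] if remaining else None

    def perform(self, step):
        """Accept the step only if it is the next expected one."""
        if step != self.next_step():
            return False
        self.done.append(step)
        return True
```

A tracker of this kind could, for instance, feed contextual guidance by telling a learner which gesture is expected next when an out-of-order action is attempted.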

Overall, the pedagogical situations layer serves as

a dynamic core of the platform, ensuring a

comprehensive and engaging learning experience for

learners.

3.3 Business Layer

The Business Layer is a crucial component of the

Virtual3R platform, facilitating the management,

coordination, and flow of interactions within the

educational context. It consists of several functional

modules that contribute to a seamless learning

experience for users.

The User Interaction Module: oversees user

interaction within the virtual environment, allowing

users to manipulate and potentially alter pre-existing

virtual objects. Hand controllers are adopted within

the framework to replicate hand movements and

establish a link between the user's physical actions

and their effects in the virtual environment. Users can

navigate through virtual environments using the same

controllers to explore content and interact with

multimedia objects such as explanatory videos.

The Collaborative Interaction Module: ensures

effective synchronisation, allowing multiple users to

connect concurrently to shared environments and

collaborate in real time. This module enables users to observe

the actions of others and their impact on the

environment in real time (Figure 4). Additionally, it

supports concurrent usage of shared objects within

the environment. The module also allows users to

participate in real-time audio communication,

fostering social interaction and idea exchange. It also

ensures synchronised writing on shared virtual boards

and Post-it notes, contributing to real-time

information sharing.

Figure 4: Learner Interaction on the Virtual3R Platform.
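The synchronisation behaviour attributed to the Collaborative Interaction Module (each participant sees the effect of the others' actions on shared objects) can be illustrated with a toy relay. This sketch is an assumption-laden stand-in: `SharedRoom` and its methods are hypothetical names, it runs in a single process, and it does not use or reproduce the Photon Engine API on which the platform relies.

```python
class SharedRoom:
    """Toy stand-in for a synchronisation server (not the Photon API)."""

    def __init__(self):
        self.state = {}          # object id -> latest known properties
        self.participants = {}   # user name -> list of received messages

    def join(self, user):
        # A late joiner first receives a snapshot of the current shared state.
        self.participants[user] = [("snapshot", dict(self.state))]

    def update(self, sender, object_id, properties):
        # Record the change, then relay it to every other participant.
        self.state[object_id] = properties
        for user, inbox in self.participants.items():
            if user != sender:
                inbox.append((object_id, properties))
```

For example, when a user acting as technician picks up a shared instrument, the assistant's inbox receives the corresponding update, while a learner who joins later starts from a snapshot that already contains it.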

The Pedagogical Guidance Module: provides

continuous and contextual assistance to engaged

learners during their virtual learning experience. This

assistance can take various forms, including

contextual instructions and real-time feedback.

The Authentication Module: focuses on

ensuring the virtual learning environment's security

by implementing robust security protocols and

managing the authentication process.

The User Experience Tracking Module:

represents a perspective for the Virtual3R project,

aiming to continuously improve the learner

experience through user data analysis and

optimisation. The collected data can help identify

components and areas where users struggle to

interact, resulting in a more intuitive, immersive, and

pedagogically enriching learning experience.

3.4 Data Exchange Management Layer

The Data Exchange Management layer is a crucial

component of the Virtual3R architecture, responsible

for coordinating and securing real-time data

exchange. It relies on a dedicated API as a gateway,

ensuring seamless communication between business

modules and external services and managing security

restrictions. The layer oversees security restrictions,

particularly during real-time data exchange, ensuring

system safety. It also facilitates real-time interaction

with the Photon Engine synchronisation server

(PhotonEngine, 2024), promoting seamless

interactivity within the virtual environment.

The layer also serves as an intermediary between

the User Management and Authentication Module

and the database, ensuring secure transmission of

user-related information. In addition, this layer

facilitates the exchange of data related to pedagogical

content, primarily passing through the business layer

to the situation management layer, ensuring its

integrity and availability.

The demonstration videos accessible via the link

below provide a preview of the overall features of the

Virtual3R platform¹.

4 VIRTUAL3R: EXPERIMENT

AND EVALUATION

4.1 Evaluation Context, Method and

Protocol

This experiment was conducted with seventy-four

biology students enrolled in the Biological Technologies

- Biological Engineering program at the Laval

University Institute of Technology (Figure 5). The

objective was to introduce the students to the

operation of an animal facility/laboratory and assess

the effectiveness of experimental protocols using VR

technology.

This experiment was carried out as part of a

Learning and Assessment Situation (LAS) in which

the objectives were to use VR (an alternative method) so that students could: (1) Become familiar with the

1

https://lium-cloud.univ-lemans.fr/index.php/s/jYcoCGzqpXj3aLF

Figure 5: The experimental environment.

environment of an animal facility or laboratory; (2)

Comprehend the anatomy of the specimen (rat) by

using a virtual model of the live anaesthetised animal,

along with the necessary equipment required to

conduct an experimental procedure; (3) Be trained in

the performance of the technical gestures of different

animal dissection experiments by following operating

protocols.

The students used the Virtual3R collaborative

platform to learn about the 3Rs principle, identify

necessary equipment for physiological studies,

master the use of specific equipment, and perform

technical procedures for physiological experiments.

The evaluation of Virtual3R included an

assessment of the platform's ergonomics and user

satisfaction. The study was conducted in two distinct

locations. One location was dedicated to individual

training with an immersive VR game, and the other

was reserved for collaborative training with the

Virtual3R platform.

The study team, consisting of an instructor and two

assistants, deployed four computer workstations,

each equipped with an Oculus Quest 2 or Quest 3 VR

headset. The instructor oversaw the smooth running

of the experiment, contextualised the pedagogical

approach, and supervised its various stages. The

assistants provided ongoing technical and

pedagogical support.

Prior to starting the experiment, the students

received an overview of the training objectives and

pedagogical aspects, as well as an introduction to the

experimental protocols. The participants were then

trained individually with the immersive game to

familiarise themselves with the VR functionalities

(i.e., using VR controllers and a VR headset, moving and

teleporting in a virtual environment, and manipulating

3D objects).

Following this, they engaged in a collaborative

experiment using the Virtual3R platform, alternating

between the roles of technician and assistant. Each

team member also had the opportunity to apply their

skills independently in a second separate pedagogical

situation.

During these experiments, learners received

assistance from the instructor and assistants, who

provided guidance on the steps to follow if necessary

and helped identify and correct errors.

In addition to the evaluation questionnaires filled out

by students, brainstorming sessions, integrated into

each stage, were used to gather their initial

perceptions and to assess their overall experience.

The questionnaire administered to the students

included the System Usability Scale (SUS) inquiries

(Lewis, 2018), along with additional questions. The

SUS questions aimed to evaluate the overall usability

of the Virtual3R platform, while the additional

questions focused on the students' experience from

various perspectives.
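For readers unfamiliar with SUS scoring, the standard procedure can be sketched as follows. The function below implements the usual SUS rule (ten items rated 1 to 5, odd-numbered items contributing the response minus 1, even-numbered items contributing 5 minus the response, and the sum multiplied by 2.5). It is an illustration of that general rule and makes no claim about the tooling actually used in this study; the function name is an assumption.

```python
def sus_score(responses):
    """Compute a System Usability Scale score from ten 1-5 Likert responses."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS expects ten responses between 1 and 5")
    total = 0
    for i, r in enumerate(responses):
        # Items 1, 3, 5, ... (indices 0, 2, 4, ...) are positively worded.
        total += (r - 1) if i % 2 == 0 else (5 - r)
    # The 0-40 raw sum is rescaled to 0-100.
    return total * 2.5
```

Because the raw sum is an integer between 0 and 40, every individual score is a multiple of 2.5 between 0 and 100.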

The participants were requested to evaluate the

ease of movement within the virtual environment, the

intuitiveness of teleportation, the arrangement of 3D

objects, and the ease of interaction with these objects.

The survey also assessed the participants'

performance, perceived effectiveness of their actions,

and their experience with collaborative work in the

3D environment.

Simultaneously, the instructor filled out a skill

validation questionnaire, offering a comprehensive

evaluation of each student's performance, assessing

their level of mastery across various indicators using

a five-point scale:

(1) EX: for Expert – Excellent proficiency;

(2) A: for Acquired – Satisfactory level of mastery;

(3) C: for Confirmation needed for Acquisition – Acceptable level of mastery;

(4) IPA: for In the Process of Acquisition – Approximate level of mastery;

(5) NA: for Not Acquired – Insufficient mastery.

4.2 Results: Analysis & Discussion

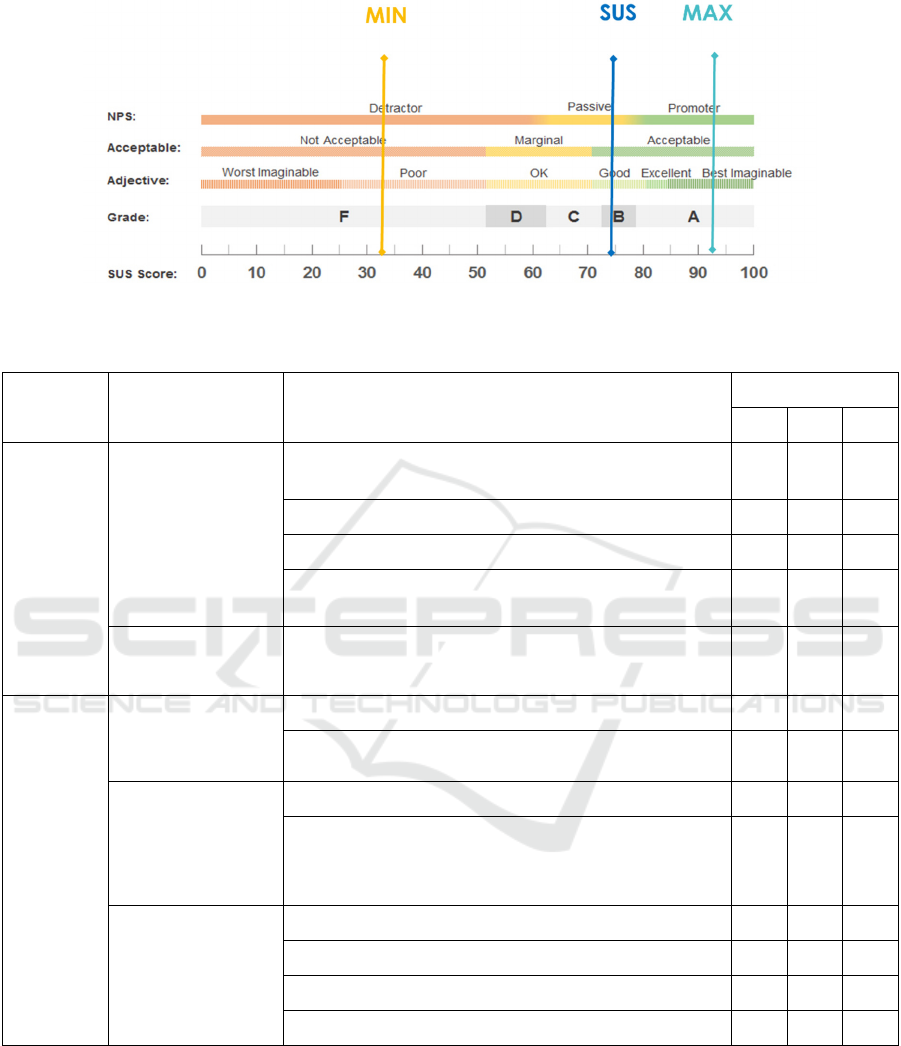

As illustrated in Figure 6, the high average score of

74.22 on the SUS scale reflects learners' overall

satisfaction with Virtual3R. The gap between the

minimum (32.5) and maximum (92.5) scores

emphasises the variety of experiences. The

consistency of responses, as evidenced by the sum of

variances (9.22) and the variance of scores (120.02),

confirms the evaluation's reliability. Cronbach's alpha

coefficient of 1.03 confirms the high internal

reliability of the SUS scale. This consistency

reinforces the credibility of the results, suggesting

that usability evaluation is a strong indicator of

positive user experience. The evaluation of the

students' experience was conducted through three

Figure 6: The SUS Scales Score.

Table 1: Results of the Skills Validation.

| Critical learning aspects | Components of critical learning | Assessment indicators | Evaluation: EX | Evaluation: A | Evaluation: C |
|---|---|---|---|---|---|
| Acquire basic experimental skills on laboratory animals | Understand the operation of an animal shop/animal testing laboratory | Move around the animal experimentation laboratory to understand their professional environment correctly | 96% | 4% | 0 |
| | | Check animal's condition after anaesthesia | 49% | 50% | 1% |
| | | Check the animal's condition during the experimental procedure | 23% | 76% | 1% |
| | | Immobilise the animal at the beginning of the experimental procedure | 100% | 0 | 0 |
| | Analyse an experimental procedure according to the 3R rule | Consider the advantages and disadvantages of virtual reality in animal experimentation | 32% | 68% | 0 |
| Implement experimental physiological study procedures | Inventory the equipment needed to carry out an experimental procedure for physiological studies | Observe the equipment available | 92% | 8% | 0 |
| | | Check that all the necessary equipment is present before starting. | 88% | 12% | 0 |
| | Use properly the equipment and devices necessary for the implementation of an experimental procedure of physiological studies | Correct handling of dissecting equipment (scissors or forceps) | 74% | 26% | 0 |
| | | Coordination of movements / Coordinated use of both hands | 69% | 28% | 3% |
| | Perform the technical gestures required for the implementation of an experimental procedure of physiological studies | Use of technical protocol as training aid (protocol sheet/video) | 46% | 54% | 0 |
| | | Follow protocol steps | 93% | 7% | 0 |
| | | Sequence / fluidity of technical gestures | 62% | 36% | 1% |
| | | Collaborative work / Interaction with partner | 84% | 16% | 0 |

different components, each focused on examining

specific aspects of the user experience.
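The reliability figure quoted earlier in this section can be checked with the standard Cronbach's alpha formula, alpha = k/(k-1) × (1 − Σ item variances / variance of total scores), with k = 10 items for the SUS. The sketch below plugs in the two variances reported in the text. The second computation rests on an assumption that is not stated in the paper: that the score variance (120.02) was measured on the 0-100 SUS scale while the item variances (9.22 in total) were measured on the raw item scale, in which case the score variance would need to be divided by 2.5² before being compared with them.

```python
def cronbach_alpha(k, sum_item_variances, total_variance):
    """Standard Cronbach's alpha from summary variances."""
    return (k / (k - 1)) * (1 - sum_item_variances / total_variance)


# Values as reported in the text; k = 10 is the number of SUS items.
alpha_reported = cronbach_alpha(10, 9.22, 120.02)

# Assumption (not from the paper): 120.02 is on the 0-100 scale, so the
# variance of the raw 0-40 sums would be 120.02 / 2.5**2.
alpha_rescaled = cronbach_alpha(10, 9.22, 120.02 / 2.5**2)

print(round(alpha_reported, 2))  # 1.03
print(round(alpha_rescaled, 2))  # 0.58
```

The first result reproduces the reported coefficient. Since Cronbach's alpha cannot exceed 1 when both variances come from the same data on the same scale, a value of 1.03 suggests a scale mismatch of this kind; the rescaled figure is only indicative and would need to be confirmed against the raw responses.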

In the first component, which assessed

movement and interaction, users generally

responded positively. The majority of participants

(63%) rated the ease of movement positively. In

addition, teleportation was found to be intuitive by a

total of 53% of users. The placement of 3D objects,

such as the specimen (rat) and instruments, received

overwhelming approval from 81% of participants,

indicating its relevance.

The second component examined performance

and perception in virtual educational tasks and

revealed notable successes. An impressive 82% of

users highlighted their ability to successfully handle

specific pedagogical situations. While a small

proportion (7%) reported some difficulties, the vast

majority (93%) found the steps in the virtual

environment easy to perform. However, there were

mixed opinions regarding the application of ligatures,

suggesting a potential need for further support in this

area.

In terms of collaboration, the results were

encouraging. A substantial 79% of users felt that

cooperation was intuitive. In addition, overall

satisfaction with collaborative work was high, with

89% of participants reporting that they were

"completely" or "very" satisfied.

The use of pedagogical indicators varied among participants. Seventy-two percent of users reported using them, but 75% of this group (about 54% of all users) used them only once. This pattern points to differing approaches to these indicators and suggests a need to clarify or improve their integration into the virtual learning process.

In addition, learners emphasised the importance

of the pedagogical support provided by the study

team during the brainstorming sessions, suggesting

its integration into the platform in a way that

automatically adapts to the user’s actions and to the

difficulties encountered in the environment.

Table 1 summarises the findings derived from the skills validation process. It presents the proportion of students who attained a specific level of mastery for each assessment indicator.

The table displays only the values corresponding to the three highest levels of competence, as no learner was assigned a lower level for any of the assessment indicators. The findings discussed in the rest of this section are proportions per learning component, calculated from the values listed in the table.

With regard to the acquisition of basic

experimental gestures, 66.64% of the participating

students exhibited an expert level of skill,

demonstrating an adequate level of knowledge and

manipulative skills.

Regarding the analysis of experimental

procedures conforming to the 3R rule, 32.43%

reached an expert level, reflecting in-depth

comprehension, whereas 67.57% reached an

acquisition level, indicating a strong, but possibly less

thorough grasp of the subject matter.

For the implementation of experimental procedures, the majority of students (89.87%) attained an expert level of proficiency in equipment inventory. In the second component, which relates to the correct use of instruments, 71.62% of students demonstrated a moderate proficiency.
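The per-component proportions quoted in this discussion can be approximately reproduced from the rounded values in Table 1. The sketch below assumes that each component score is the unweighted mean of the expert-level percentages of its indicators; the paper does not state the aggregation rule, and the dictionary keys are shorthand labels introduced here for illustration.

```python
from statistics import mean

# Expert-level percentages per assessment indicator, copied from the
# rounded values shown in Table 1. Keys are illustrative shorthand labels.
expert_share = {
    "3R analysis": [32],
    "equipment inventory": [92, 88],
    "use of equipment": [74, 69],
}

# Assumption: a component score is the unweighted mean of its indicators.
component_mean = {name: mean(values) for name, values in expert_share.items()}

for name, value in component_mean.items():
    print(f"{name}: {value:.2f}% at expert level")
```

Under this assumption the means are 32%, 90% and 71.5%, close to the 32.43%, 89.87% and 71.62% reported in the text; the small gaps are consistent with the table values having been rounded to whole percentages.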

According to the results of the skills assessment,

95.95% of students demonstrated adequate mobility

in the laboratory, highlighting effective engagement

in the experimental context.

In terms of usability evaluation, the SUS analysis confirms overall user satisfaction with Virtual3R, which is perceived to be user-friendly. The range of scores points to an inclusive design, and even the lowest scores indicate a satisfactory experience.
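For readers unfamiliar with how SUS scores are obtained, the following minimal sketch applies the standard SUS scoring rule to a single questionnaire: odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a score between 0 and 100. The function name and the example ratings are hypothetical and are not taken from the study data.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one respondent's ten 1-5 ratings."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten ratings, each between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item_number % 2 == 1 else (5 - rating)
    return total * 2.5


# Hypothetical answers for illustration only, not data from the experiment.
example = [4, 2, 5, 1, 4, 2, 4, 2, 5, 1]
print(sus_score(example))  # prints 85.0
```

Averaging such per-respondent scores over all participants gives the overall SUS score of a system.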

The evaluation highlighted the overall

satisfaction of the users with the virtual educational

platform. However, specific areas require targeted

improvements to further enhance the learning

experience. These include providing further

assistance with some activities and clarifying or

improving the integration of pedagogical indicators.

The results also highlight the importance of incorporating adaptive pedagogical support into the platform to respond to users' actions and difficulties.

With regard to skill validation, the analysis of

competencies revealed significant accomplishments,

particularly in mastering experimental manoeuvres

on laboratory animals and carrying out experimental

protocols for physiological research. The variety of

results demonstrates the overall success of the

pedagogical approach, though specific areas for

improvement were identified. These results also

confirm pedagogical success in terms of knowledge

assimilation and student interaction with the

environment.

To sum up, our experimentation with Virtual3R has demonstrated its efficacy as a user-friendly collaborative learning platform. The SUS and skill evaluation findings suggest that Virtual3R provides a satisfactory user experience while efficiently delivering practical and conceptual skills. While certain aspects could be enhanced, these outcomes serve as a solid foundation for guiding future pedagogical improvements.

5 CONCLUSION

This article presents Virtual3R, an animal experimentation simulation platform that represents an advancement in biological science education and in CVLE design and development. The virtual immersion provided by the platform offers a learning experience that merges VR technology and innovative pedagogy.

CSEDU 2024 - 16th International Conference on Computer Supported Education

The visual interface of Virtual3R enables users

to engage in detailed examination and interaction

with complex anatomical structures, in addition to

manipulating virtual instruments. By promoting

collaboration among learners, the platform

incorporates a social aspect into learning, fostering

engagement, motivation, and the exchange of

experiences between participants.

In addition to immersive technology, Virtual3R adheres to the essential ethical principles of animal experimentation by implementing the 3R rule (Reduce, Refine, Replace). This approach highlights the platform's dedication to responsible and ethical education.

Detailed experimentation has shown the

platform's effectiveness in terms of user-friendliness

and the acquisition of both practical and conceptual

skills. The positive results reinforce the belief that

Virtual3R is not merely an innovative technology

but also an efficient pedagogical solution. Further

experiments could be conducted to explore learners'

subjective experiences, particularly regarding their

sense of presence and co-presence with other

participants.

The development perspectives of Virtual3R are

equally promising. Continuous improvements will

be implemented on the platform, informed by

feedback from instructors and learners who have

experimented with the system.

The expansion of pedagogical situations, such as

carotid cannulation, will enhance learning

experiences and provide a broader array of skills to

acquire. Furthermore, exploring new interaction

techniques, such as hand tracking, lays the

groundwork for even more immersive and realistic

experiences.

Introducing a virtual animated agent to assist learners throughout their experiences would also be a notable advancement. Additionally, adapting learning activities and pedagogical instructions to learners' behaviours and past interactions would help optimize the learning process.

Virtual3R exemplifies the potential of VR

applications in education, offering a structured

architecture that integrates collaborative learning

experiences within immersive virtual environments.

By prioritizing both the pedagogical needs of

instructors and the immersive context of the learning

situation, Virtual3R sets a precedent for future VR

applications aimed at enhancing learning outcomes

across diverse educational settings.

ACKNOWLEDGEMENTS

This work was supported by the University of Le

Mans and the Fondation Nationale IUT de France.

The authors would like to thank the biology teachers

who participated in the creation and experimentation

of the Virtual3R platform.

REFERENCES

Abdullah, L. N. (2010). Virtual Animal Slaughtering and

Dissection via Global Navigation Elements. In

Proceedings of the 2010 Second International

Conference on Computer Research and Development

(pp. 182-185). https://doi.org/10.1109/iccrd.2010.97

Affendy, N., & Wanis, I. (2019). A Review on

Collaborative Learning Environment across Virtual and

Augmented Reality Technology. IOP Conference

Series: Materials Science and Engineering.

https://doi.org/10.1088/1757-899X/551/1/012050.

Allcoat, D., & Mühlenen, A. (2018). Learning in virtual

reality: Effects on performance, emotion and

engagement. Research in Learning Technology.

https://doi.org/10.25304/RLT.V26.2140.

Beck, D., Allison, C., Morgado, L., Pirker, J., Khosmood,

F., Richter, J., & Gütl, C. (2016). Immersive Learning

Research Network, 621. https://doi.org/10.1007/978-3-319-41769-1.

Chen, L., Liang, H.-N., Lu, F., Wang, J., Chen, W., & Yue,

Y. (2021). Effect of Collaboration Mode and Position

Arrangement on Immersive Analytics Tasks in Virtual

Reality: A Pilot Study. Applied Sciences, 11(21),

10473. https://doi.org/10.3390/app112110473

Çoban, M., & Goksu, İ. (2022). Using virtual reality

learning environments to motivate and socialize

undergraduates in distance learning. Participatory

Educational Research, 9(2), 199–218.

https://doi.org/10.17275/per.22.36.9.2

Dailey-Hebert, A., Estes, J., & Choi, D. (2021). This

History and Evolution of Virtual Reality, 1-20.

https://doi.org/10.4018/978-1-7998-4960-5.ch001

Dawley, L., & Dede, C. (2014). Situated Learning in

Virtual Worlds and Immersive Simulations. Handbook

of Research on Educational Communications and

Technology, 723–734. https://doi.org/10.1007/978-1-4614-3185-5_58

Dumitrescu, C., Drăghicescu, L., Petrescu, A., Gorghiu, G.,

& Gorghiu, L. (2014). Related Aspects to Formative

Effects of Collaboration in Virtual Spaces. Procedia -

Social and Behavioral Sciences, 141, 1079-1083.

https://doi.org/10.1016/J.SBSPRO.2014.05.181.

Ellis, C. A., Gibbs, S. J., & Rein, G. (1991). Groupware:

some issues and experiences. Communications of the

ACM, 34(1), 39–58. https://doi.org/10.1145/99977.99987

Erkut, C., & Dahl, S. (2018). Incorporating Virtual Reality

in an Embodied Interaction Course. Proceedings of the

5th International Conference on Movement and

Computing. https://doi.org/10.1145/3212721.3212884.

Fabris, C., Rathner, J., Fong, A., & Sevigny, C. (2019).

Virtual Reality in Higher Education. International

Journal of Innovation in Science and Mathematics

Education. https://doi.org/10.30722/ijisme.27.08.006.

Fussell, S. G., & Truong, D. (2021). Using virtual reality

for dynamic learning: an extended technology

acceptance model. Virtual Reality, 26(1), 249–267.

https://doi.org/10.1007/s10055-021-00554-x.

Garcia-Lopez, C., Mor, E., & Tesconi, S. (2020). Human-

Centered Design as an Approach to Create Open

Educational Resources. Sustainability, 12(18), 7397.

https://doi.org/10.3390/su12187397

Hickman, L., & Akdere, M. (2018). Developing

intercultural competencies through virtual reality:

Internet of Things applications in education and

learning. 2018 15th Learning and Technology

Conference (L&T), 24-28. https://doi.org/10.1109/LT.2018.8368506

Howard, M. C., & Gutworth, M. B. (2020). A meta-analysis

of virtual reality training programs for social skill

development. Computers & Education, 144,

103707. https://doi.org/10.1016/j.compedu.2019.103707

Huang, H., & Liaw, S. (2018). An Analysis of Learners’

Intentions Toward Virtual Reality Learning Based on

Constructivist and Technology Acceptance

Approaches. The International Review of Research in

Open and Distributed Learning, 19, 91-115.

https://doi.org/10.19173/IRRODL.V19I1.2503

Jara, C. A., Candelas, F. A., Torres, F., Dormido, S., &

Esquembre, F. (2012). Synchronous collaboration of

virtual and remote laboratories. Computer Applications

in Engineering Education, 20(1), 124–136.

https://doi.org/10.1002/cae.20380

Khan, M., Bhuiyan, M., & Tania, T. (2021). Research and

Development of Virtual Reality Application for

Teaching Medical Students. 2021 12th International

Conference on Computing Communication and

Networking Technologies (ICCCNT), 01-04.

https://doi.org/10.1109/ICCCNT51525.2021.9580004.

King, H. (2016). Learning spaces and collaborative work:

barriers or supports? Higher Education Research

& Development, 35(1), 158–171.

https://doi.org/10.1080/07294360.2015.1131251

Kladias, N., Pantazidis, T., & Avagianos, M. (1998). A

virtual reality learning environment providing access to

digital museums. In Proceedings of the 1998

MultiMedia Modeling Conference (MMM '98), 193–202. IEEE Computer Society.

https://doi.org/10.1109/MULMM.1998.723002.

Konstantinidis, A., Tsiatsos, Th., & Pomportsis, A. (2009).

Collaborative virtual learning environments: design and

evaluation. Multimedia Tools and Applications, 44(2),

279–304. https://doi.org/10.1007/s11042-009-0289-5

Kumari, S., & Polke, N. (2019). Implementation Issues of

Augmented Reality and Virtual Reality: A Survey.

Lecture Notes on Data Engineering and

Communications Technologies, 853–861.

https://doi.org/10.1007/978-3-030-03146-6_97

Laal, M. (2013). Collaborative Learning; Elements.

Procedia - Social and Behavioral Sciences, 83, 814–818. https://doi.org/10.1016/j.sbspro.2013.06.153

Laal, M., & Laal, M. (2012). Collaborative learning: what

is it? Procedia - Social and Behavioral Sciences, 31,

491–495. https://doi.org/10.1016/j.sbspro.2011.12.092

Lave, J. (2012). Situating learning in communities of

practice. Perspectives on Socially Shared Cognition.,

63–82. https://doi.org/10.1037/10096-003

Lemos, M., Bell, L., Deutsch, S., Zieglowski, L., Ernst, L.,

Fink, D., Tolba, R., Bleilevens, C., & Steitz, J. (2022).

Virtual Reality in Biomedical Education in the sense of

the 3 Rs. Laboratory Animals, 57(2), 160–169.

https://doi.org/10.1177/00236772221128127

Lewis, J. (2018). The System Usability Scale: Past, Present,

and Future. International Journal of Human–Computer

Interaction, 34, 577–590. https://doi.org/10.1080/10447318.2018.1455307.

Majewska, A. A., & Vereen, E. (2023). Using Immersive

Virtual Reality in an Online Biology Course. Journal

for STEM Education Research. https://doi.org/10.1007/s41979-023-00095-9

Margery, D., Arnaldi, B., & Plouzeau, N. (1999). A General

Framework for Cooperative Manipulation in Virtual

Environments. Virtual Environments ’99, 169–178.

https://doi.org/10.1007/978-3-7091-6805-9_17

McArdle, G., Monahan, T., & Bertolotto, M. (2008). Using

Multimedia and Virtual Reality for Web-Based

Collaborative Learning on Multiple Platforms.

Multimedia Technologies, 1125–1155. https://doi.org/10.4018/978-1-59904-953-3.ch079

Mhouti, A., Erradi, A., & Vasquèz, J. (2016). Cloud-based

VCLE: A virtual collaborative learning environment

based on a cloud computing architecture. 2016 Third

International Conference on Systems of Collaboration

(SysCo), 1-6. https://doi.org/10.1109/SYSCO.2016.7831340.

Najjar, N., Ebrahimi, A., & Maher, M. L. (2022). A Study

of the Student Experience in Video Conferences and

Virtual Worlds as a Basis for Designing the Online

Learning Experience. 2022 IEEE Frontiers in

Education Conference (FIE). https://doi.org/10.1109/fie56618.2022.9962374

Nassar, A. K., Al-Manaseer, F., Knowlton, L. M., & Tuma,

F. (2021). Virtual reality (VR) as a simulation modality

for technical skills acquisition. Annals of Medicine and

Surgery, 71, 102945. https://doi.org/10.1016/j.amsu.2021.102945

Oakley, J. (2012). Science teachers and the dissection

debate: perspectives on animal dissection and

alternatives. International Journal of Environmental

and Science Education, 7(2), 253-267.

Ormandy, E., Schwab, J. C., Suiter, S., Green, N., Oakley,

J., Osenkowski, P., & Sumner, C. (2022). Animal

Dissection vs. Non-Animal Teaching Methods. The

American Biology Teacher, 84(7), 399–404.

https://doi.org/10.1525/abt.2022.84.7.399

Oubahssi, L., & Mahdi, O. (2021). VEA: A Virtual

Environment for Animal experimentation. 2021

International Conference on Advanced Learning

Technologies (ICALT). https://doi.org/10.1109/icalt52272.2021.00134

Ouramdane, N., Otmane, S., & Mallem, M. (2007). A New

Model of Collaborative 3D Interaction in Shared

Virtual Environment., 663-672.

https://doi.org/10.1007/978-3-540-73107-8_74.

Pappas, M., Karabatsou, V., Mavrikios, D., &

Chryssolouris, G. (2006). Development of a web-based

collaboration platform for manufacturing product and

process design evaluation using virtual reality

techniques. International Journal of Computer

Integrated Manufacturing, 19(8), 805–814.

https://doi.org/10.1080/09511920600690426

PhotonEngine. (2024). Photon Unity Networking

framework for realtime multiplayer games and

applications. Retrieved from https://www.photonengine.com/en-us/PUN

Predavec, M. (2001). Evaluation of E-Rat, a computer-

based rat dissection, in terms of student learning

outcomes. Journal of Biological Education, 35(2), 75–80. https://doi.org/10.1080/00219266.2000.9655746

Quy, P., Lee, J., Kim, J., Kim, J., & Kim, H. (2009).

Collaborative Experiment and Education Based on

Networked Virtual Reality. 2009 Fourth International

Conference on Computer Sciences and Convergence

Information Technology, 80-85. https://doi.org/10.1109/ICCIT.2009.53.

Ruiz, M. A. G., Edwards, A., Seoud, S. A. E., & Santos, R.

A. (2008). Collaborating and learning a second

language in a Wireless Virtual Reality Environment.

International Journal of Mobile Learning and

Organisation, 2(4), 369. https://doi.org/10.1504/ijmlo.2008.020689

Sala, N. (2020). Virtual Reality, Augmented Reality, and

Mixed Reality in Education. Advances in Higher

Education and Professional Development, 48–73.

https://doi.org/10.4018/978-1-7998-4960-5.ch003

Sarmiento, W., & Collazos, C. (2012). CSCW Systems in

Virtual Environments: A General Development

Framework. 2012 10th International Conference on

Creating, Connecting and Collaborating through

Computing, 15-22. https://doi.org/10.1109/C5.2012.17

Schmid Mast, M., Kleinlogel, E. P., Tur, B., & Bachmann,

M. (2018). The future of interpersonal skills

development: Immersive virtual reality training with

virtual humans. Human Resource Development

Quarterly, 29(2), 125–141. https://doi.org/10.1002/hrdq.21307

Sekiguchi, T., & Makino, M. (2021). A virtual reality

system for dissecting vertebrates with an observation

function. 2021 International Conference on

Electronics, Information, and Communication (ICEIC).

https://doi.org/10.1109/iceic51217.2021.9369824

Skulmowski, A., & Rey, G. D. (2018). Embodied learning:

introducing a taxonomy based on bodily engagement

and task integration. Cognitive Research: Principles

and Implications, 3(1). https://doi.org/10.1186/s41235-018-0092-9

Sukmawati, F., Santosa, E. B., Rejekiningsih, T., Suharno,

& Qodr, T. S. (2022). Virtual Reality as a Media for

Learn Animal Diversity for Students. Jurnal Edutech

Undiksha, 10(2), 290–301. https://doi.org/10.23887/jeu.v10i2.50557

Tang, F. M. K., Lee, R. M. F., Szeto, R. H. L., Cheng, J. K.

K., Choi, F. W. T., Cheung, J. C. T., Ngan, O. M. Y., &

Lau, A. S. N. (2021). A Simulation Design of

Immersive Virtual Reality for Animal Handling

Training to Biomedical Sciences Undergraduates.

Frontiers in Education, 6. https://doi.org/10.3389/feduc.2021.710354

Unity Technologies (2024). Unity XR Development

Documentation. Version 2022.3. Retrieved from

https://docs.unity3d.com/Manual/XR.html

Vafai, N. M., & Payandeh, S. (2010). Toward the

development of interactive virtual dissection with

haptic feedback. Virtual Reality, 14(2), 85–103.

https://doi.org/10.1007/s10055-009-0132-3

Wang, Y., Lin, K., & Huang, T. (2021). An analysis of

learners' intentions toward virtual reality online

learning systems: a case study in Taiwan., 1-10.

https://doi.org/10.24251/HICSS.2021.184

Wu, H. (2009). Research of Virtual Experiment System

Based on VRML. 2009 International Conference on

Education Technology and Computer, 56–59

https://doi.org/10.1109/icetc.2009.39

Young, G., Stehle, S., Walsh, B., & Tiri, E. (2020).

Exploring Virtual Reality in the Higher Education

Classroom: Using VR to Build Knowledge and

Understanding. J. Univers. Comput. Sci., 26, 904-928.

https://doi.org/10.3897/jucs.2020.049

Zemanova, M. A. (2022). Attitudes Toward Animal

Dissection and Animal-Free Alternatives Among High

School Biology Teachers in Switzerland. Frontiers in

Education, 7. https://doi.org/10.3389/feduc.2022.892713

Zheng, L., Xie, T., & Liu, G. (2018). Affordances of Virtual

Reality for Collaborative Learning. 2018 International

Joint Conference on Information, Media and

Engineering (ICIME), 6-10. https://doi.org/10.1109/ICIME.2018.00011
