Exploring Interaction Mechanisms and Perceived Realism in Different Virtual Reality Shopping Setups

Rubén Grande, Javier Albusac, Santiago Sánchez-Sobrino, David Vallejo, José J. Castro-Schez and Carlos González

Department of Technologies and Information Systems, School of Computer Science, University of Castilla-La Mancha,

Paseo de la Universidad 4, 13071 Ciudad Real, Spain

Keywords: Virtual Reality, E-Commerce, Human-Computer Interaction, User Activity Monitoring.

Abstract: Within the e-commerce field, disruptive technologies such as Virtual Reality (VR) are increasingly used to explore new forms of human-computer interaction and to enhance the shopping experience for users. Key to this are the increasingly accurate controller-free, hand-based interaction mechanisms that the user can employ to interact with virtual products and the environment. This study presents an experiment with a set of participants that addresses: (1) users’ evaluation of a set of pre-formalised interaction mechanisms, (2) their preference for a large-scale or small-scale shopping environment and the degree of usability while navigating the large-scale one, and (3) the usefulness of monitoring user activity to infer user preferences. The results show that (i) interaction mechanisms performed with the users’ hands are fluid and natural, (ii) usability is high in both small and large shopping spaces, with users preferring the large one, and (iii) the recorded interactions can be employed for user profiling that improves future shopping experiences.

1 INTRODUCTION

Virtual Reality (VR) is emerging as one of the key technologies in retail innovation and in enhancing the shopping experience for users in the coming years (Grewal et al., 2017). In fact, studies suggest that VR, along with Augmented Reality (AR) and other technologies, is part of the competitive strategy of many retailers (Kim et al., 2023). Since the COVID-19 pandemic, the use of e-commerce services has increased dramatically, not only among younger people but also among individuals of all ages, including older adults who were initially more reluctant.

One way to enhance this competitiveness is by improving the shopping experience, making it more realistic and closer to that of a physical store. Generally, the shopping experience can be described as what arises from the interactions between a consumer and a product in a specific shopping situation or environment over a certain period of time. This definition is directly applicable to a shopping experience in a virtual space. In recent years, thanks to advances in hardware for VR devices, it has become easier to foster greater immersion in a virtual shopping environment with the use of VR headsets (Xi and Hamari, 2021), which provide a much more comprehensive field of view (FOV).

The mechanisms of interaction between the user and virtual elements play a crucial role in the shopping experience and have a direct influence on the sense of realism. However, until recently, interaction mechanisms in these virtual environments were quite limited, focusing on classic input devices like the mouse and keyboard, or on head movements in devices using a smartphone (Speicher et al., 2017). These methods offered a somewhat unnatural and restricted way of exploring displayed products.

Recent advances in hand, body, and even eye tracking in the latest VR headsets, such as the Meta Quest 2, 3, and Pro models, open new avenues for research and design of more complex interaction mechanisms. Previous studies have shown that the shopping experience in an immersive virtual shopping environment provides greater immersion and more natural interactions compared to desktop-based solutions (Schnack et al., 2019).

Thus, we aim to achieve several objectives in this study. The first is to investigate the sensations, fluidity, and comfort that the interaction mechanisms provided by Meta’s SDK and others defined by us, such as the shopping cart, produce in users in immersive shopping environments. In terms of how products should be displayed in a virtual shopping environment, we aim to determine the user’s preference for a large, store-like environment, or a smaller, simpler one with minimal distractions. This will allow us to ascertain how motivated they are to interact in each environment, as well as the usability and navigability of the environment. Lastly, monitoring user activity on current e-commerce websites is very useful in understanding their tastes and preferences. Similarly, we want to investigate the utility of such monitoring in a VR Shopping environment so that the generated data can be used by AI algorithms.

Grande, R., Albusac, J., Sánchez-Sobrino, S., Vallejo, D., Castro-Schez, J. and González, C.

Exploring Interaction Mechanisms and Perceived Realism in Different Virtual Reality Shopping Setups.

DOI: 10.5220/0012626000003690

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 26th International Conference on Enterprise Information Systems (ICEIS 2024) - Volume 2, pages 505-512

ISBN: 978-989-758-692-7; ISSN: 2184-4992

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

To achieve the proposed objectives, we have designed and conducted an experiment with a group of volunteers. Following the study, participants answered a 26-question survey. The results of this survey have helped us determine the preferred layout for virtual shopping environments, their feelings about the presented interaction mechanisms, their preference compared to other forms of shopping, and their tastes based on the products they interacted with.

The remainder of this paper is structured as follows. Section 2 introduces previous work related to interaction mechanisms used in virtual shopping environments and experiments conducted in this context. Then, Section 3 outlines the methodology followed for conducting the experiment, as well as the resources used and other considerations. Subsequently, Section 4 presents the results obtained from the experimentation, and Section 5 discusses the conclusions of our work.

2 RELATED WORK

In their investigation into gesture-based controls within VR shopping environments, Wu et al. (2019) conducted a pair of studies that led to the development of a novel approach for formulating dependable user-defined gestures. The research included an experimental setup where 32 participants engaged with a series of VR shopping tasks, ranging from object selection to color changes and size adjustments, using an HTC Vive headset. Nevertheless, the study’s description lacks specific details on the recording and subsequent analysis of the gesture data.

Peukert et al. (2019) explored the impact of immersive experiences in VR shopping settings on the likelihood of users adopting the technology for future use. To this end, the researchers designed an experimental study utilizing two distinct environments: one with a high level of immersion facilitated by the HTC Vive, and a less immersive version presented on a standard desktop display. In the more immersive setting, the study monitored and captured data on the movements of the participants’ hands and head, including their interactions with products such as grabbing, dropping, or transferring items between hands. Eye-tracking data was also gathered. The findings of Peukert et al. suggest that the immersive quality of the VR environment could shape a user’s decision to reuse the system via two distinct avenues: one hedonic, driven by enjoyment, and the other utilitarian, driven by practicality.

Speicher et al. (2017) explored a VR shopping platform to analyze the effects of user interface modalities on shopping efficiency, user preferences, and behaviours. Initial investigations involved a survey to capture the highs and lows of online shopping, leading to the creation of VR prototypes for both desktop and smartphones, focusing exclusively on voice and head-pointing controls. This work culminated in the proposal of design principles for VR shopping ecosystems. Building upon this, they developed a new VR shopping prototype based on the “Apartment” metaphor (Speicher et al., 2018) to probe into product selection and manipulation strategies, alongside different shopping cart designs. Their study revealed that immersion and a seamless user experience are paramount for users, prompting recommendations to mitigate motion sickness and advice on the product assortments that are most amenable to VR shopping interfaces.

Ricci et al. (2023) compared immersive virtual reality (IVR) and desktop virtual reality (DVR) in the context of virtual fashion stores to assess their influence on the shopping experience. A within-subject experiment with 60 participants was conducted to compare the use of an HTC Vive head-mounted display against a desktop setup. The results revealed that IVR provides a more engaging, pleasurable, and efficient shopping experience, with participants showing a higher intention to use IVR setups in the future due to enhanced hedonic and utilitarian values. The authors underscored the significant potential of IVR in enriching the shopping experience in the fashion industry.

In this section, we have presented works related to VR Shopping and experiments conducted with prototypes in this context. However, none focused on exploring the importance of realism in purchase intention, complex interaction mechanisms with products and environment, or the utility of monitoring user activity in the shopping environment.

3 EXPERIMENT METHODOLOGY AND SETUP

In this section, we describe both the resources used and the methodology followed to execute the experiment. The experiment focused on the following aspects: 1) usability and preference of a large and/or small shopping environment, 2) evaluation of the proposed interaction mechanisms, including interaction with the shopping cart, and 3) the utility of monitoring user activity for profiling and purchase intention. Due to space limitations, the conceptualisation and mathematical formalisation in 3D space of the interaction mechanisms used in the experiment (grabbing, translating, rotating, scaling, teleporting, adding to shopping cart and looking with the eyes), as well as the elements with which to carry out these interactions, are not included here; they can be found in the following GitHub repository: https://github.com/AIR-Research-Group-UCLM/VR-IM-Experiment.

3.1 Setup and Resources

The application, run on the Meta Quest Pro HMD, was developed using the Unity graphics engine, version 2022 LTS, to ensure compatibility with the latest update of the Meta XR All-in-one SDK. The developed application consists of 3 scenes, each corresponding to one step of the experiment. The equipment used for development and execution of the experiment was an MSI Vector GP66 laptop, featuring an i7-12700H CPU, 32 GB of RAM, and an Nvidia GeForce RTX 3060 GPU with 6 GB of VRAM. During the experiment, the laptop was connected to the Meta Quest Pro using a 5-meter Oculus Link cable, in order to provide the smoothest experience possible and minimize any performance degradation. It should be noted that in all samples taken, the frame rate of the application was maintained at 60 FPS.

A 2 m × 2 m space was defined to allow the user some freedom of movement, especially in the small environment, as the large environment required the use of teleportation to move around due to the lack of physical space.

3.2 Participants

The experiment involved 10 participants (8 male, 2 female) from the university campus: eight aged between 22 and 28 years and two aged 58 years. All experimental sessions were conducted on the same day, with each participant taking on average M = 28.50 minutes to complete the experiment and answer the subsequent questionnaire. The questionnaire also included questions about age, gender, and experience with VR, as well as online shopping and related aspects (see Fig. 1). According to the mean and standard deviation results shown in Fig. 1, most volunteers had little experience with VR and the HMD used for the experiment (Meta Quest Pro).

3.3 Scenes Developed for the Experiment

For the execution of the experiment, three scenes were developed in Unity to cover the previously described objectives. The scenes were executed successively, and each participant was notified beforehand of what each scene entailed, what they would encounter, and what they were expected to do.

3.3.1 Second Scene: Small Virtual Shopping Environment

The second scene consists of a single counter that displays the five scanned products, along with a label that provides basic product information: name, short description, and price. Fig. 2a shows the aforementioned scene. In this part of the experiment, participants were expected to interact freely with the displayed products for 2 to 3 minutes. Here, they could move, rotate, and scale the objects. After the interaction time, they were to include in the shopping cart the three products they preferred or would buy.

The purpose of this step is to explore the usability of such environments and the sensations they evoke in users within a simple, distraction-free setting. In addition to the questionnaire, interaction data with the products and the shopping cart were monitored to determine which products were added, along with eye-tracking data to gather results on the participants’ focus of attention. In this way, we aim to determine whether the number or duration of interactions with a product, and the frequency or length of time it is viewed, correlate with the final decision to add it to the shopping cart.

By analyzing the recorded data, we intend to discern whether a simple environment fosters greater user interaction with objects and whether this leads to an increase in the participant’s purchase intention for the products interacted with. While possible layouts for virtual shopping environments have been investigated, some using uncommon metaphors like an apartment (Speicher et al., 2018), most prototypes resort to the concept of a supermarket (Chandak et al., 2022; van Herpen et al., 2016). There is therefore a gap in the exploration of simplified shopping environments. Furthermore, Ricci et al. (2023) proposed using small-sized virtual shopping environments to help avoid cybersickness, so we also include questions on this topic in the post-experiment questionnaire.

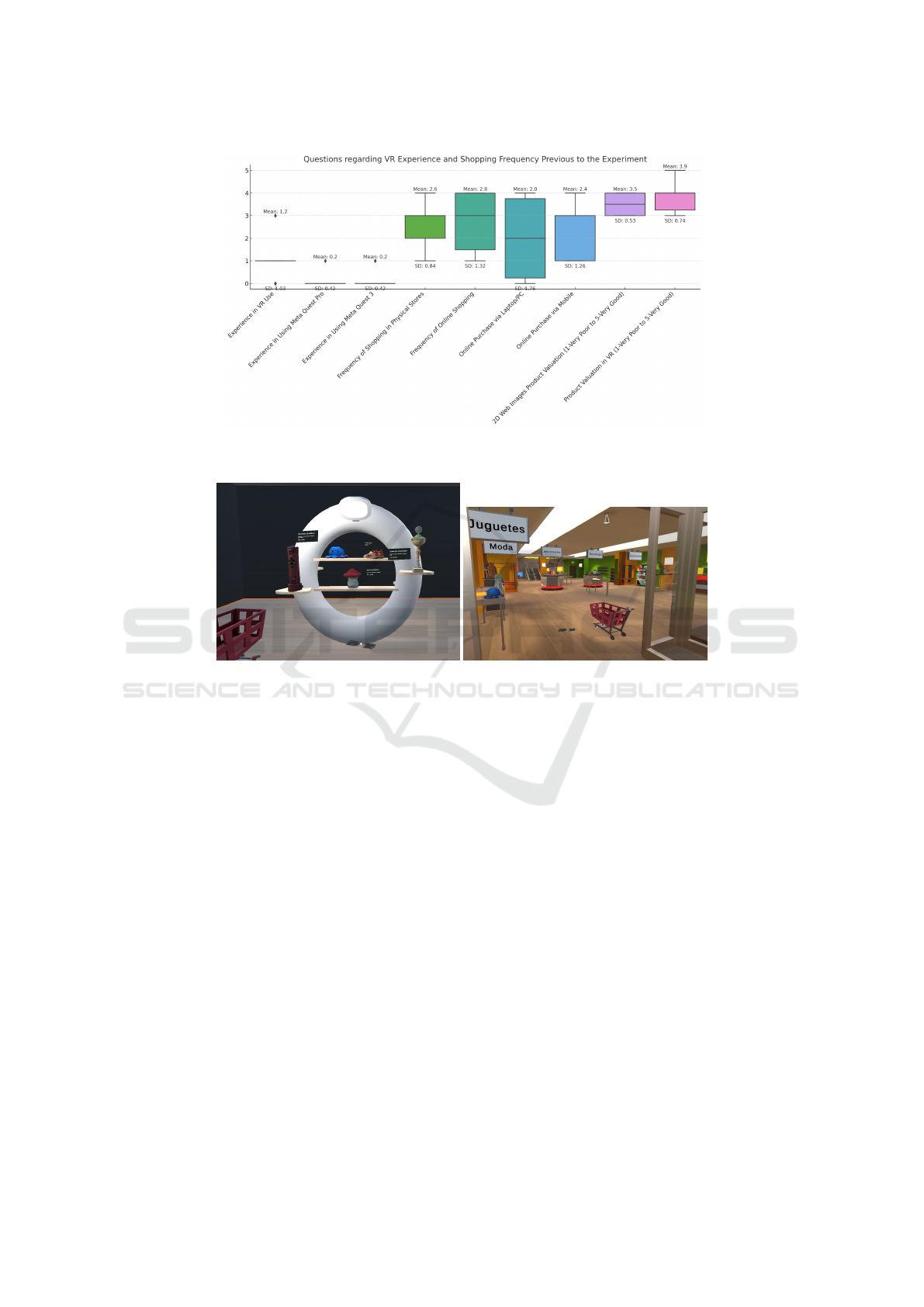

Figure 1: Box plot of responses to questions from the pre-experiment questionnaire. All questions followed a Likert scale from 1 to 5. The first six questions ranged from 1 (No experience) to 5 (Very frequently).

(a) Small environment with a single display counter of products and the shopping cart.

(b) Screenshot of the large environment, consisting of 24 products and 7 counters.

Figure 2: Screenshots of the environments developed for the experiment.

3.3.2 Third Scene: Large Virtual Shopping Environment

The third and final phase of our experiment consisted of a shopping experience in a large environment that recreates a floor of a shopping center, as shown in Fig. 2b. In this last environment, our main objectives were to address the usability and comfort of interactions in a large setting, with a special focus on teleportation, and to gather data to observe the effect of a large environment on the time spent interacting with products. Finally, through a post-experiment questionnaire and the data obtained from interactions, we aim to discern whether monitoring user activity in an immersive shopping environment provides useful data for inferring their tastes and preferences.

All participants began the experiment in the same position. This time, the experience was based on tasks of searching for a product in a specific section. This allowed us to collect data on navigation through the environment and interaction in the sections.

Thus, the first task for each participant was to navigate to the back of the environment, to get accustomed to the teleportation interaction. From there, participants were instructed to visit 3 product sections and then a section of their choice, with the option to revisit one of the first three. In each section visited, the user had to add to the cart the product from that section that they would buy.

Each participant took between 3 and 4 minutes to complete all the steps, having the freedom to interact with the products once they reached the requested section. Following this, they proceeded to complete the questionnaire (see Section 4.4), which took them an average of 10 minutes.

3.4 Data Collected

A series of Unity scripts were developed to save a range of data into .csv files during the execution of the second and third scenes. The generated .csv files contain data on interactions with products, the shopping cart (products saved in each scene and when), eye tracking, and teleportation. Detailed information about the headers of the .csv files, as well as the files generated during the experiment, can be found in the README file and the “csv-Files” directory of the following repository: https://github.com/AIR-Research-Group-UCLM/VR-IM-Experiment.
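To give an idea of how logs of this kind could be processed offline, the sketch below reads a small event log and lists the products added to the cart in one scene. The column names (`timestamp`, `scene`, `event`, `product`) and the rows are hypothetical placeholders chosen for illustration; the actual headers are those documented in the repository README.

```python
import csv
import io

# Hypothetical header and rows for illustration only; the real headers
# are documented in the repository README.
SAMPLE_CSV = """timestamp,scene,event,product
12.4,small,grab,sport_shoes
15.9,small,release,sport_shoes
16.2,small,add_to_cart,sport_shoes
31.0,small,add_to_cart,octopus_teddy
"""


def cart_contents(csv_text, scene):
    """Return the products added to the cart in one scene, in chronological order."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [r for r in reader
            if r["scene"] == scene and r["event"] == "add_to_cart"]
    rows.sort(key=lambda r: float(r["timestamp"]))
    return [r["product"] for r in rows]


print(cart_contents(SAMPLE_CSV, "small"))
```

Keeping one row per event with an explicit timestamp, as assumed here, makes it straightforward to derive both counts and durations later.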

4 RESULTS

Figure 3: Frequency with which each product was selected among the top 5 most realistic products.

4.1 Products Selected as Most Realistic

Fig. 3 shows the frequency with which each prod-

uct was selected as one of the top 5 most realistic by

the 10 participants. Out of a total of 50 responses

(5 products selected by each participant), 50% were

products scanned with Luma AI. Therefore, on aver-

age, 2.5 scanned products were selected by each par-

ticipant. Notably, the most popular products, as ob-

served, were the octopus teddy, followed by the sport

shoes and the burner, which are the visually most at-

tractive objects and were chosen by more than 60%

of the participants in their top 5. Although we were

aware that these scans had lower visual quality due to

being mostly composed of materials that cause reflec-

tions, like metal (the application’s own best practices

guide

1

advises against scanning such objects), we in-

cluded them to cover a wider range of materials.

4.2 Participants Behaviour in the Small

Environment

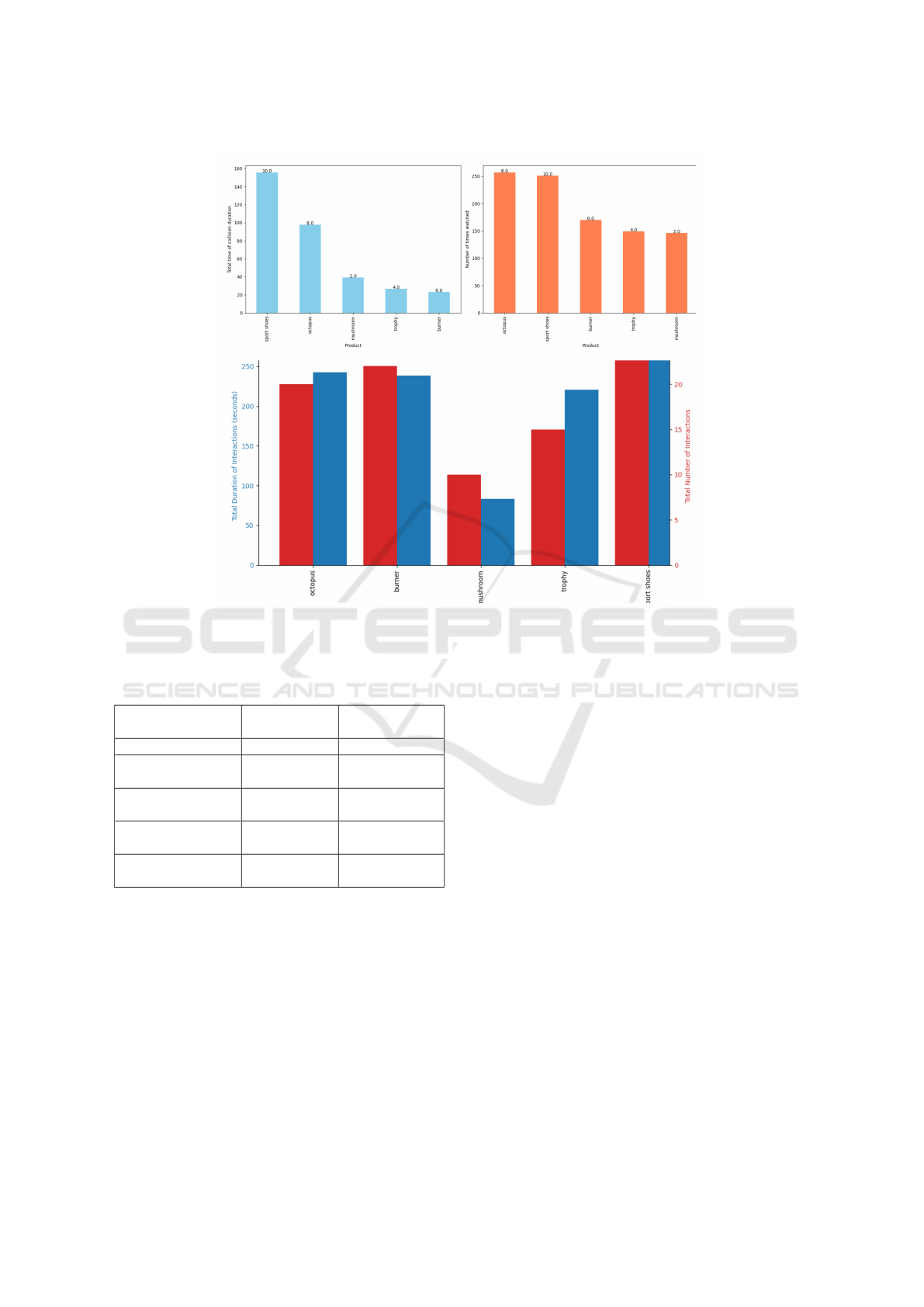

The top graph in Fig. 4 compiles the data from the

ten .csv files of eye tracking. The left graph displays

the total amount of time, in seconds, each object was

viewed (i.e., the rays emitted from the eyes collided

1

https://docs.lumalabs.ai/MCrGAEukR4orR9

with the object’s collider), with an annotation on each

bar indicating the number of times it was added to the

shopping cart. Conversely, the right graph shows the

number of times each product was looked at (counted

by the number of collisions), with the same annota-

tions as the left graph.
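The two quantities plotted in the top graphs can be derived from a stream of gaze-ray hits. The following is a minimal sketch under stated assumptions: samples are time-sorted `(time_s, hit)` pairs, where `hit` is the name of the object whose collider the gaze ray intersects or `None`; a new "view" is counted whenever the gaze enters an object. The function name and the sample values are hypothetical and do not reproduce the scripts used in the experiment.

```python
from collections import defaultdict


def gaze_summary(samples):
    """Summarise gaze samples into viewing time and number of views per object.

    samples: time-sorted list of (time_s, hit); hit is an object name or None.
    Each sample is assumed to hold until the next one, so the final sample
    contributes no duration.
    """
    seconds = defaultdict(float)
    views = defaultdict(int)
    previous_hit = None
    for (t, hit), (t_next, _) in zip(samples, samples[1:]):
        if hit is not None:
            seconds[hit] += t_next - t
            if hit != previous_hit:  # gaze has just entered this object
                views[hit] += 1
        previous_hit = hit
    return dict(seconds), dict(views)


# Hypothetical samples, not data from the experiment.
samples = [(0.0, None), (0.5, "shoes"), (1.0, "shoes"), (1.5, None),
           (2.0, "trophy"), (2.5, "shoes"), (3.0, None)]
seconds, views = gaze_summary(samples)
print(seconds)  # {'shoes': 1.5, 'trophy': 0.5}
print(views)    # {'shoes': 2, 'trophy': 1}
```

Because both measures depend on ray-collider intersections, the shape of each collider directly affects what gets recorded.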

Notably, the products most frequently added to the cart were the sport shoes, the octopus teddy, and the wood burner, followed by the trophy and the mushroom. However, in terms of collision time, the burner registered the least amount of time. This could be due to the object’s shape and the use of capsule colliders, which results in a narrower collider than, for example, that of the shoes and thus affects the data recording. The situation is similar for the trophy. As observed, the burner was the third most viewed product by number of views, which might support this assumption. Regarding the spatial information gathered from the eyes (position and rotation), it is noteworthy that the standard deviation (SD) on the Y-axis is 0.61 for both eyes, while SD = 0.11 and 0.28 for the X and Z axes, respectively. Since gaze varied most along the vertical axis, this indicates that lower counters with less vertical extent might be more comfortable for users.

The bottom graph in Fig. 4 shows a barplot of the total time that each object was interacted with by hand (left Y axis) and the total number of interactions (right Y axis). This graph shows a similar trend to that of the top barplots in Fig. 4: the three objects with the most interactions were the sport shoes, the octopus teddy, and the wood burner, followed by the trophy and the mushroom. An interaction was counted from the moment the user grabbed the object for inspection until the moment they released it. These data seem to support the assumption about the low viewing time of the burner, as users interacted with the burner more than 20 times in total for over 200 seconds. Regarding the time to the first interaction, M = 9.86 and SD = 4.19, suggesting that users take about 10 seconds to visually scan the scene before deciding to interact with a product. Additionally, M = 9.0 and SD = 1.45 for the number of interactions performed by each user, indicating that, on average, users interacted more times than the experiment required. Finally, each participant interacted with the octopus for M = 33.45 seconds (s), with the sport shoes for M = 26.36 s, with the trophy for M = 20.36 s, with the burner for M = 14.85 s, and with the mushroom for M = 9.91 s.
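The per-participant metrics above (time to first interaction, number of interactions, and interaction time) follow from the grab-to-release definition of an interaction. A minimal sketch, assuming a time-sorted log of `(time_s, kind, product)` events with `kind` being `"grab"` or `"release"`; the event format, function name, and sample values are hypothetical.

```python
from statistics import mean, pstdev


def interaction_metrics(events):
    """Return (time_to_first_grab, completed_interactions, seconds_holding).

    events: time-sorted list of (time_s, kind, product), with kind in
    {"grab", "release"}. An interaction runs from a grab of a product
    until the matching release of that product.
    """
    open_grabs = {}
    first_grab = None
    count = 0
    held = 0.0
    for t, kind, product in events:
        if kind == "grab":
            if first_grab is None:
                first_grab = t
            open_grabs[product] = t
        elif kind == "release" and product in open_grabs:
            held += t - open_grabs.pop(product)
            count += 1
    return first_grab, count, held


# Hypothetical logs for two participants, not data from the experiment.
p1 = [(8.0, "grab", "shoes"), (20.0, "release", "shoes"),
      (22.0, "grab", "trophy"), (30.0, "release", "trophy")]
p2 = [(12.0, "grab", "burner"), (18.0, "release", "burner")]

results = [interaction_metrics(p) for p in (p1, p2)]
first_times = [r[0] for r in results]
print(mean(first_times), pstdev(first_times))  # 10.0 2.0
```

The sketch uses the population standard deviation (`pstdev`); whether the population or sample form applies to a given analysis is a choice that should be stated alongside the reported SD.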

Figure 4: At the top, barplots showing the total time (in seconds) and the number of times each item was viewed. At the bottom, barplot showing the total duration of interaction and the total number of interactions for each product.

Table 1: Statistics of metrics extracted from teleportation files.

| Metric | Mean (M) | Standard Deviation (SD) |
|---|---|---|
| First teleportation | 14.76 seconds | 6.10 seconds |
| Number of teleportations | 17.60 | 4.36 |
| Attempts before teleportation | 2.25 | 0.40 |
| Time for teleporting | 8.47 seconds | 2.16 seconds |
| Teleportation distance | 3.74 meters | 1.70 meters |

4.3 Participants’ Behaviour in the Large Environment

In this section, we analyse the recorded data with the same approach as in Section 4.2. First, we present statistics for metrics related to teleportation within the virtual environment, which was the main novelty compared to the previous environment. Table 1 shows the results obtained for these metrics. To successfully perform the first teleportation, participants required an average of M = 14.76 seconds, with an SD = 6.01. This means they needed about 15 seconds on average until they correctly formed the gesture with their hand, pointed to a valid location to teleport, and joined their thumb and index finger. Furthermore, successfully completing a teleportation took users an average of M = 8.47 seconds, SD = 2.16. Although there may be false positives in the data (for example, the HMD’s hand tracking system detecting the pointing gesture for teleportation when the user’s intention is different), and the dimensions of the teleportation zones may also be a factor, we consider the average teleportation time to be moderately high. The teleportation distance is also fairly long (M = 3.74 meters, SD = 1.70 meters), which suggests that participants opted to cover a greater distance with each teleportation; this may in turn have lengthened the teleportation time, as aiming at a more distant target takes longer. Lastly, the number of teleportation attempts before successfully completing one averaged M = 2.25 attempts, SD = 0.4 attempts.
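Metrics such as "attempts before teleportation" and "time for teleporting" can be extracted from a teleportation event log. The sketch below is one possible operationalisation, not necessarily the one used to produce Table 1: it assumes each detected pointing gesture is logged as an `"aim_start"` event and each completed jump as a `"teleport"` event. The event names, function name, and sample values are hypothetical.

```python
from statistics import mean


def teleport_metrics(events):
    """Return one (attempts, seconds) pair per completed teleportation.

    events: time-sorted list of (time_s, kind), kind in {"aim_start", "teleport"}.
    Every "aim_start" counts as one attempt; a "teleport" closes the current
    sequence, and its duration runs from the first attempt to the teleport.
    """
    results = []
    attempts = 0
    first_aim = None
    for t, kind in events:
        if kind == "aim_start":
            attempts += 1
            if first_aim is None:
                first_aim = t
        elif kind == "teleport" and attempts > 0:
            results.append((attempts, t - first_aim))
            attempts, first_aim = 0, None
    return results


# Hypothetical log, not data from the experiment.
log = [(1.0, "aim_start"), (4.0, "aim_start"), (9.0, "teleport"),
       (12.0, "aim_start"), (15.0, "teleport")]
per_teleport = teleport_metrics(log)
print(per_teleport)                        # [(2, 8.0), (1, 3.0)]
print(mean(a for a, _ in per_teleport))    # 1.5
```

Under this definition, gestures detected by mistake inflate the attempt count, which is one way tracking false positives could affect the reported figures.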

In terms of eye tracking data, Fig. 5 displays the number of times products in a section were viewed. It also includes the number of times the information signs of the sections, which guided the user, were viewed (approximately 15% of the total), as well as the number of times the products added to the shopping cart were viewed. As expected, the three most viewed sections were those of the initial searches; however, the fashion section stood out. Moreover, the technology section was also one of the most popular during the last step of free exploration of the environment, with 60% of users choosing a product from this section. This environment also included many computer-designed 3D models. Nevertheless, among the scanned objects, both the sport shoes and the octopus teddy were added to the shopping cart 4 times each, and the trophy 2 times, maintaining moderate interest among the participants. The remaining scanned objects were not included in this environment.

Figure 5: Number of times that each section drew the attention of participants.

Regarding product interactions, participants interacted with 18 of the 24 products included, with the least interaction in the food section. Notably, the average number of interactions in this environment was M = 5.2, SD = 1.23, which is a little over half of that in the small environment (M = 9.0). On the other hand, the average time spent interacting with products per participant was M = 71.06 seconds, SD = 19.0. Compared to the small environment, where the average time was M = 117.16 seconds, SD = 16.02, there was a 39% decrease in interaction time. These data suggest that interaction was primarily limited to the first product chosen by the user in each product search.
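The comparison between environments can be checked directly from the reported means. The short sketch below recomputes the relative change in interaction time and the ratio of interaction counts; only the helper name `percent_change` is introduced here, and all numbers are the means reported in Sections 4.2 and 4.3.

```python
def percent_change(old, new):
    """Relative change from old to new, in percent (negative means a decrease)."""
    return (new - old) / old * 100.0


# Means reported for the small and large environments.
time_change = percent_change(117.16, 71.06)  # interaction time per participant (s)
count_ratio = 5.2 / 9.0                      # interactions per participant

print(f"interaction time change: {time_change:.1f}%")  # -39.3%
print(f"interaction count ratio: {count_ratio:.2f}")   # 0.58
```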

4.4 Questionnaire Answers

The post-experiment questionnaire completed by the users can be consulted in the README of the repository referenced in Section 3.4. We used questions from questionnaires present in the literature on User Experience (UEQ), Immersion (SUS) and Motion Sickness (MSAQ). Most of the questions were formulated following a Likert scale from 1 to 5.
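Questionnaire results in the following subsections are summarised as a mean (M) and standard deviation (SD) over the 10 participants. As a minimal sketch of that summary, the code below computes both values for a hypothetical set of ten 1-to-5 responses. The response list is invented for illustration; it was chosen only because, with the population standard deviation, it yields a pair of the same form as those reported (M = 4.1, SD = 0.54), and it does not claim to be the participants' actual answers.

```python
from statistics import mean, pstdev


def likert_summary(responses):
    """Return (mean, population SD) of 1-to-5 Likert responses, rounded to 2 decimals."""
    if not all(1 <= r <= 5 for r in responses):
        raise ValueError("Likert responses must lie between 1 and 5")
    return round(mean(responses), 2), round(pstdev(responses), 2)


# Hypothetical responses from ten participants, not the recorded answers.
responses = [3, 4, 4, 4, 4, 4, 4, 4, 5, 5]
print(likert_summary(responses))  # (4.1, 0.54)
```

With only ten respondents, a single answer shifts the mean by 0.1, so small differences between items should be read with caution.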

4.4.1 VR Shopping Usage

Participants clearly preferred inspecting products as 3D models rather than 2D images (M = 4.0, SD = 0.63), which positively influenced their purchase intention (M = 3.9, SD = 0.83). They also indicated that their purchase intention would change after examining an object in VR as opposed to just seeing it in 2D images (M = 4.1, SD = 0.54), and they noticed most of the imperfections present in the sport shoes, which 2D images could hide. Despite the data collected, the majority preferred shopping in an environment similar to the large environment (M = 1.7, SD = 0.46). Regarding the usefulness of monitoring user activity to infer their preferences and tastes, 8 out of 10 participants added to the cart a product from the same section they gave as their answer to question Q24.

4.4.2 Immersion and Motion Sickness

Participants indicated very low levels of general malaise (M = 1.6, SD = 0.66), fatigue (M = 1.9, SD = 0.94), and dizziness (M = 1.5, SD = 0.67), suggesting that the color palette of the environments and other graphic aspects are comfortable for users in short shopping sessions. Regarding immersion, participants felt quite immersed in the shopping environments (M = 4.1, SD = 0.54) and felt that time passed quickly during the experiment (M = 4.1, SD = 0.54). However, they were neutral about feeling as if they were in a physical store (M = 3.0, SD = 0.45).

4.4.3 User Experience and Usability

Participants found the environment moderately easy to understand and use (M = 3.7, SD = 0.9), and thought it would be so for most of the public (M = 3.6, SD = 0.92). Regarding the shopping cart, participants valued adding products themselves (M = 3.6, SD = 0.92) and adding them through the user interface button (M = 3.6, SD = 0.8) equally, while opinions regarding the use of gravity in the large environment varied (M = 3.0, SD = 1.18). Additionally, users gave moderately positive ratings to the ease of moving through the large environment using teleportation (M = 3.7, SD = 0.64) and to the fluidity and naturalness of interactions (M = 3.5, SD = 0.92), where the variety of responses was slightly higher. Finally, participants preferred interacting freely with their hands (M = 3.5, SD = 0.81) over the use of controllers (M = 2.6, SD = 0.49).

5 CONCLUSIONS

Based on the presented results, we can conclude that realistic products appear to influence both purchase intention and the time and frequency with which a user interacts with and inspects them. The data shown in Section 4.2 indicate that the most viewed products, and those with which interaction lasted longest, were the ones most often added to the shopping cart. We also identified aspects to improve in future shopping environments, such as the use of colliders that allow for more consistent data recording, as seen in the case of the wood burner.

We also observed differences between a large and a small shopping environment. Although participants generally preferred the large environment, possibly influenced by its novelty and the use of teleportation, we found that a small environment encourages interaction. While only 10 seconds were needed in the small environment to visually scan the scene and start interacting, users in the large environment took on average four times longer to begin interacting, because they needed to become accustomed to teleportation. However, user preference for a large environment indicates that there is flexibility in configuring a virtual shopping environment, which can either focus on product interaction or take the form of a thematic store that gamifies the shopping experience and makes it more enjoyable for the user.

Additionally, participants positively rated the interaction mechanisms included in the environment in the post-experiment questionnaire, with averages close to 4 on the presented Likert scales. Also, the monitored data showed that about 85% of the time spent by each participant in the experiment steps was used to interact with objects, indicating that the interactions were pleasant and natural enough for participants to keep repeating them. Moreover, environment navigability was perceived as simple, although the data shown in Table 1 indicate somewhat elevated times, as participants needed more time to aim at a greater distance. Lastly, the results support the utility of monitoring user activity in these virtual environments: the objects in which the user shows the most interest are those added to the cart, and they correspond to the section given as the answer to question 24 of the questionnaire.

ACKNOWLEDGEMENTS

This work has been funded by the Spanish Ministry of Science and Innovation MICIN/AEI/10.13039/501100000033, and the European Union (NextGenerationEU/PRTR), under the Research Project: Design and development of a platform based on VR-Shopping and AI for the digitalization and strengthening of local businesses and economies, TED2021-131082B-I00.

REFERENCES

Chandak, A., Singh, A., Mishra, S., and Gupta, S. (2022). Virtual Bazar-An Interactive Virtual Reality Store to Support Healthier Food Choices. In 2022 1st International Conference on Informatics (ICI), pages 137–142.

Grewal, D., Roggeveen, A. L., and Nordfält, J. (2017). The future of retailing. Journal of Retailing, 93(1):1–6.

Kim, J.-H., Kim, M., Park, M., and Yoo, J. (2023). Immersive interactive technologies and virtual shopping experiences: Differences in consumer perceptions between augmented reality (AR) and virtual reality (VR). Telematics and Informatics, 77:101936.

Peukert, C., Pfeiffer, J., Meißner, M., Pfeiffer, T., and Weinhardt, C. (2019). Shopping in virtual reality stores: The influence of immersion on system adoption. Journal of Management Information Systems, 36(3):755–788.

Ricci, M., Evangelista, A., Di Roma, A., and Fiorentino, M. (2023). Immersive and desktop virtual reality in virtual fashion stores: a comparison between shopping experiences. Virtual Reality.

Schnack, A., Wright, M. J., and Holdershaw, J. L. (2019). Immersive virtual reality technology in a three-dimensional virtual simulated store: Investigating telepresence and usability. Food Research International, 117:40–49.

Speicher, M., Cucerca, S., and Krüger, A. (2017). VRShop: A mobile interactive virtual reality shopping environment combining the benefits of on- and offline shopping. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol., 1(3).

Speicher, M., Hell, P., Daiber, F., Simeone, A., and Krüger, A. (2018). A virtual reality shopping experience using the apartment metaphor. In Proceedings of the 2018 International Conference on Advanced Visual Interfaces, AVI ’18, pages 1–9, New York, NY, USA. Association for Computing Machinery.

van Herpen, E., van den Broek, E., van Trijp, H. C., and Yu, T. (2016). Can a virtual supermarket bring realism into the lab? Comparing shopping behavior using virtual and pictorial store representations to behavior in a physical store. Appetite, 107:196–207.

Wu, H., Wang, Y., Qiu, J., Liu, J., and Zhang, X. L. (2019). User-defined gesture interaction for immersive VR shopping applications. Behaviour & Information Technology, 38(7):726–741.

Xi, N. and Hamari, J. (2021). Shopping in virtual reality: A literature review and future agenda. Journal of Business Research, 134:37–58.
