Shaping an Adaptive Path on Analytic Geometry with Automatic Formative Assessment and Interactive Feedback

Alice Baranaᵃ, Cecilia Fissoreᵇ, Marina Marchisio Conteᶜ and Michela Tassoneᵈ

Department of Molecular Biotechnology and Health Sciences, University of Turin, Piazza Nizza 44, 10126 Torino, Italy

Keywords: Adaptive Education, Analytic Geometry, Automatic Assessment, Formative Assessment, Digital Learning Environments, Mathematics Education.

Abstract: This paper proposes a contribution to the practice of automatic formative assessment and adaptive education

to highlight how it enhances the teaching and learning of Mathematics in secondary school. In this context

we developed an adaptive learning path on Analytic Geometry, whose potential is discussed in the light of a

theoretical framework, by describing and analyzing some activities. The activities made it possible to introduce, address and explore the circle both as a geometric locus and in its relationship with real situations through contextualized problems. The effectiveness of the path in reaching the learning outcomes was tested through a

quasi-experimental research design. The experimentation involved 98 third-year students from two upper

secondary schools in Turin, Italy. Results were monitored through pre- and post-tests whose outcomes were

compared with those of a control group; in addition, a final questionnaire was administered to the treated

group. The results show the effectiveness of the tested activities in improving mathematical understanding; they also suggest strategies for effectively introducing automatic formative assessment for adaptive learning into a learning path, and they highlight the need for solid pedagogical foundations, which require continuous training of teachers by experts.

1 INTRODUCTION

Adaptive learning is a personalized learning

methodology that is based on the individual

experiences of the learner so as to implement an

educational and training path capable of adapting to

his or her actual needs and aligning with his or her

learning times and styles. Unlike traditional learning

experiences, adaptive learning allows for a fluid and

stimulating study, which progressively helps the

learner to reach and practice his or her actual potential

(Romano et al., 2023).

a https://orcid.org/0000-0001-9947-5580
b https://orcid.org/0000-0001-8398-265X
c https://orcid.org/0000-0003-1007-5404
d https://orcid.org/0009-0005-2365-7317

This paper presents an experiment in mathematics education that contributes to adaptive learning through automatic formative assessment in upper secondary school. The motivation behind this research is to highlight how automatic formative assessment enhances the teaching and learning of Mathematics by presenting its characteristics, peculiarities and potential, its effectiveness for the engagement and interactivity of the activities it allows to be designed, and the possible improvements toward which this mode of assessment remains open. This study is based on the

conceptualization of automatic formative assessment

proposed by the DELTA (Digital Education for

Learning and Teaching Advances) research group of

the University of Turin (Barana et al., 2021). In

particular, we refer to two kinds of adaptive activities:

guided activities, i.e., activities whose resolution

appears to be conducted step by step to show a

possible solving process or example of problem

solving; and activities with interactive feedback that

outline a path that leads the learner to the resolution

of a task after one or more autonomous attempts

allowed (Barana et al., 2021). Students can try the

activities multiple times, and the possibility to

generate algorithmic questions allows for numerically different situations at each attempt.

Barana, A., Fissore, C., Marchisio Conte, M. and Tassone, M.
Shaping an Adaptive Path on Analytic Geometry with Automatic Formative Assessment and Interactive Feedback.
DOI: 10.5220/0012627900003693
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 2, pages 361-372
ISBN: 978-989-758-697-2; ISSN: 2184-5026
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

In

this context we developed an adaptive learning path

whose potential is proposed and discussed in detail in

light of a theoretical framework by describing and

analyzing some examples of digital activities

designed in the context of automatic formative

assessment and involving peer collaboration. The

path deals with the circle from an analytical point of

view. The activities were tested through a teaching

experiment which involved a total of 98 grade 11

students from two scientific lyceums in Turin (Italy),

divided between treated and control classes. The

experimental activities consisted of adaptive digital

activities to be carried out in the classroom and at

home. Results were monitored through a pre-test, a

post-test and a final questionnaire (the latter

administered only to the treated group). Students

involved in adaptive learning were allowed to identify

their level of readiness based on INVALSI (the

national agency in charge of evaluation of the Italian

education system) levels, merged into 3 macro levels

for simplicity. The following sections detail the methodology adopted to structure the experiment and to adapt the same activities to learning contexts that differ in multiple factors. This was achieved thanks to the platform of the Problem Posing & Solving (PP&S) Project of the Ministry of Education and Merit (Brancaccio et al., 2015). The second

section discusses the theoretical framework in which

our research fits and in light of which we have

attempted to analyze and comment on our results. In

the third section we outline the research questions that

guided us in the planning, implementation and

management of the classroom teaching experiment

and in the choice of data collection and analysis

techniques, while the fourth section reports and

discusses the most important results obtained. Lastly,

the conclusions highlight some possible future

developments of the work and some limitations that

emerged from the research, with attached suggestions

to improve the effectiveness of automatic formative

assessment in any future experiments.

2 THEORETICAL FRAMEWORK

2.1 Digital Learning Environment

The traditional learning environment is the classroom, where the teacher explains and the student learns individually or with peers and uses concrete tools such as paper, pen, and a blackboard. The advent of

technology has introduced tablets, PCs and

interactive whiteboards into these environments and

shifted learning to a non-physical dimension: the

Internet. Digital Learning Environments (DLEs)

denote learning ecosystems in which there is a human

component (teacher, students, peers), a technological

one (software, web-conference tools, assessment

tools, smartphones, computers, tablets or interactive

whiteboards, cameras, etc.) and the interactions

between the two (dialogues between members,

human-technology interactions, etc.) (Barana &

Marchisio, 2022). The technological apparatus of a

DLE can support a learning activity in the following

ways (Barana, Conte, et al., 2019; Barana, Marchisio,

et al., 2019): (a) creation, management and editing of

resources (e.g., interactive files, theoretical lessons,

glossaries, videos), activities (e.g., discussion chats,

tests, forums, questionnaires, submission of

assignments) and the general learning environment

by both faculty and students; (b) provision of

materials; (c) collection of qualitative and

quantitative data on student action, use of materials

(whether a resource was used and how many times),

participation; (d) analysis and processing of collected

data to monitor skill development; (e) provision of

feedback on the activities carried out to both students

and teachers.

2.2 Formative Assessment

The term "formative assessment" was coined in 1967

by Michael Scriven in opposition to "summative

assessment" to denote a particular practice aimed at

gathering information about a course with the aim of

improving the program (Scriven, 1967). The

definition later given by Paul Black and Dylan

Wiliam and well accepted in the literature is the

following: "Classroom practice is formative to the

extent that evidence about student achievement is

collected, interpreted, and used by teachers, students,

or their peers to make decisions about next steps in

instruction" (Black & Wiliam, 2009). According to

Wiliam and Thompson (2007), formative assessment

can be characterized by five strategies: (1) the teacher

should clarify learning intentions and criteria for

success, peers should understand and share learning

intentions and criteria for success, the student should

understand learning intentions and criteria for

success; (2) the teacher should design effective

classroom discussions and other tasks that stimulate

learners' understanding; (3) the teacher should

provide feedback that prompts learners to progress;

(4) students should be activated as mutual teaching

resources; (5) students should be activated as the

owners of their learning.

CSEDU 2024 - 16th International Conference on Computer Supported Education


Feedback is probably the most distinctive and

characteristic element of formative assessment and

has been defined by John Hattie and Helen

Timperley as "the information provided by an agent

(teacher, peer, book, parent, personal experience,

etc.) regarding aspects of one's performance or

understanding" (Hattie & Timperley, 2007). To be

effective, feedback must answer three main

questions, "Where am I going?", "How am I going?",

and "Where to next?". In addition, feedback can work at 4 different levels: at the activity

level (how well the activity was performed); at the

process level (what process is involved in the

activity); at the self-regulation level (what

metacognitive processes it triggers); at the personal

level (what personal evaluations and affections

concern the student as a person) (Hattie & Timperley,

2007). According to Sadler (Sadler, 1989), for

feedback to be productive it must alter the gap

between current and desired performance because if

the information is not, or cannot be, processed by the

student to achieve an improvement it will have no

effect on his or her learning. In any curricular area where the grade or score given to a student is only a number, the student's attention is diverted from the underlying judgments and from the criteria used to formulate them, and the idea that learning is a product rather than an evolving process is built up, even if only on an unconscious level. In this sense, a grade

can therefore be counterproductive for educational

purposes (Sadler, 1989). The learner must be able to

judge the quality of what he or she is learning and

properly control what he or she puts into play as he or

she solves a problem, performs an action, or states an

opinion. If the learner generates relevant information,

the procedure is part of self-monitoring; if the source

of the information is external, it is associated with

feedback (Sadler, 1989). Formative assessment

includes both self-monitoring and feedback, and the

goal of many educational systems that use them is to facilitate the transition from feedback to self-monitoring so that it occurs as naturally as possible.

2.3 The Power of Feedback

First, it is important to distinguish between 2

categories of feedback (Shute, 2008). The first one is

elaborate feedback, which contains the explanation

leading to the correct solution, allows links to other

reading materials, cues, suggestions or combinations

thereof. In Mathematics, it often takes the form of a

guided solution proposal. It can be divided into more

manageable units to avoid cognitive overload. The

second one is corrective feedback, which simply says

whether the answer is right or wrong. According to

Shute (2008), the kind of feedback that really allows

for cognitive improvement and engagement is

elaborate feedback. According to Kluger and DeNisi

(1996) computerized feedback is more effective than

human-provided feedback because it helps the

student more to generate internal feedback to close

the gap between current performance and expected

performance. Corbalan, Paas, and Cuypers (2010)

compare 3 different levels of formative feedback:

feedback at the end of the solution, feedback on all

steps of the solution at the same time, and feedback

on all steps of the solution successively.

For those who have not managed to reach the

exact solution, feedback provides partial credit based

on the correctness of their step-by-step response and

acts as a motivational lever as well as providing more

complete knowledge to both the student and the

teacher about their skills (Barana et al., 2020).

However, it is good to keep in mind that too much

feedback within the same task can end up detracting

from performance, and overly specific feedback can

also be harmful because it leads the student to focus

excessively on the specific task and not on the general

strategy (Hattie & Timperley, 2007). Feedback has its greatest effect when students expect their answer to be correct and it instead turns out to be incorrect, because they study the item longer in the attempt to understand and correct the misunderstanding; conversely, if the answer is incorrect and the certainty was low, the feedback is often ignored (Ashford & Cummings, 1983). Black and Wiliam (1998) have shown that using feedback to praise the student's performance in a given context, rather than the student as a person, brings better results because it helps learners develop what Dweck called "incremental vision", that is, the ability to grasp learning as an evolving process and not as a static, defined product (Dweck, 2000).

Peer involvement is an important source of external feedback: dialogue with peers improves students’ sense of self-control over learning in several ways (Laurillard, 2002). First, students

understand better when a concept is explained by a

peer who has just learned the topic at hand, since they

happen to find language more accessible when it

comes from their peers. Second, peer discussion

exposes students to alternative perspectives that allow

them to revise, and possibly reject, their initial idea

and build new knowledge through dialogue and

comparison. Third, by commenting on peer work, students develop a detachment of judgment that they can transfer to the evaluation of their own work. Fourth, peer discussion


can be motivating because it encourages students to

persist by not giving up (Boyle & Nicol, 2003).

The current research challenge is to refine the

principles related to interactive feedback, identify

gaps and gather further evidence on the potential of

formative assessment and feedback to support self-regulation.

2.4 Adaptive Teaching and Adaptive Learning

According to Borich (2017) adaptive teaching means

"applying different instructional strategies for

different groups of students so that the natural

diversity prevailing in the classroom does not prevent

any student from achieving success." This way of teaching has gained importance because the purpose

of education has shifted from the static transmission

of knowledge to the building of skills that imply

"personal attitudes" that allow knowledge and skills

to be brought into play in solving certain situations or

problems within a student-centered educational

approach (European Commission, 2018). The

adaptive approach is particularly effective when

applied to small groups; it also helps students

overcome their individual difficulties (Mascarenhas

et al., 2016). It is supported by the use of technologies

that can also successfully replace the physical

presence of the teacher through the use of feedback

and suggestions that a teacher would not be able to

provide during execution to all students at the same

time. An adaptive teaching/learning system, i.e. one

that can adapt to the characteristics, needs and even

preferences of teachers and students, presupposes the

involvement of adaptive technologies that are

generally controlled by computational devices. The

information collected is stored within a learning

model that provides a starting point for deciding how

to deliver personalized content for each student

(Mascarenhas et al., 2016).

2.5 Automatic Formative Assessment

When formative assessment is offered through an

automatic assessment system that automatically

evaluates responses and returns feedback, it is called

automatic formative assessment (Barana et al., 2021).

Some automatic formative assessment systems

allow the creation of adaptive questions, namely

questions divided into sections that are shown based

on the answer given in the previous section. With

adaptive questions, problems can be proposed in the form of "guided activities" or of activities with interactive feedback, terms suggested in Barana et al. (2021). Guided activities are activities whose resolution is outlined step by step to show a possible solution procedure or an example of problem solving. These types of questions were extremely useful during the COVID-19 pandemic, when learners needed tools that could guide their cognitive processes and help them develop new skills even without the physical presence of a teacher. Activities with interactive feedback, on the other hand, offer an interactive process that follows a student's first independent attempt at solving a task. It is typically provided after an incorrect solution or to those who failed to answer the proposed question independently, but possibly also to those who answered correctly, to enable them to compare the solving strategy they used with the one suggested. In interactive feedback, the main question

is broken down into sub-questions that investigate

prerequisites, fundamental operations or other

representations of the initial proposed problem. In

each step, if an incorrect answer is still given, the

correct one will be shown so that it can be used in

subsequent steps. The DELTA research group of the

University of Turin has proposed a model for

designing activities for automatic formative

assessment using an automatic formative assessment

system enhanced by a mathematical engine that

contemplates the following features (Barana et al.,

2018): (a) always available activities, which can be

tackled independently without limitations in time,

duration and number of attempts; (b) algorithmic

questions in which the values, parameters, graphs or

formulas in the question text and feedback vary

randomly with each attempt by a student and for each

individual student thanks to teacher-defined algorithms; (c) open-ended answers,

evaluated for their mathematical correctness due to

the advanced computational capabilities of the

system, where students are invited to answer by

adopting the most suitable register among numerical,

graphical, symbolic, literal, etc.; (d) immediate

feedback, or at least, short-term feedback, that can be

provided, for instance, by creating tests with no more

than 5 questions and feedback at the end of the test,

in order to show it while the student is still focused on

the task; (e) contextualization of the task in the real

world or in applications that are relevant for students

in order to produce greater engagement and build deeper understanding; (f) proposing interactive

feedback, which has been shown to be particularly

effective in developing mathematical skills.
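Feature (b), algorithmic questions, can be illustrated with a minimal Python sketch. It is not the code used on the platform: the function names, the parameter ranges and the wording of the question are hypothetical and chosen only for illustration. Each call draws a new centre and radius, so the same question appears with different numbers at each attempt, and the expected answer is recomputed from the drawn values.

```python
import random


def circle_coefficients(a, b, r):
    """Expand (x - a)^2 + (y - b)^2 = r^2 into x^2 + y^2 + D*x + E*y + F = 0."""
    return (-2 * a, -2 * b, a * a + b * b - r * r)


def make_circle_question(rng):
    """Draw random parameters and build one instance of the question.

    The ranges below are assumptions made for this sketch.
    """
    a = rng.randint(-5, 5)  # x-coordinate of the centre
    b = rng.randint(-5, 5)  # y-coordinate of the centre
    r = rng.randint(1, 6)   # radius
    text = (
        f"Write the equation of the circle with centre ({a}, {b}) "
        f"and radius {r} in the form x^2 + y^2 + Dx + Ey + F = 0."
    )
    return {"text": text, "coeffs": circle_coefficients(a, b, r)}


def grade(question, answer):
    """Return True when the submitted (D, E, F) matches the expected coefficients."""
    return tuple(answer) == question["coeffs"]


rng = random.Random(2024)  # fixed seed only to make the sketch reproducible
first_attempt = make_circle_question(rng)
second_attempt = make_circle_question(rng)
print(first_attempt["text"])
print(second_attempt["text"])
```

A real automatic assessment system would compare answers for mathematical equivalence rather than by exact tuple equality; the exact comparison here keeps the sketch short.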

In this paper, we distinguish adaptive questions

(which can take the form of guided activities or

interactive feedback) from adaptive assignments,


which are tests composed of a set number of (simple)

items that are submitted to students one at a time; the

student's performance on one question determines the

level of the next question. Adaptive assignments are

often used to determine a student's level of knowledge

on a concept or topic, which can be determined

precisely by solving tasks of increasing difficulty

(Botta, 2021). Once the assignment is completed,

feedback is returned to students depicting the

progress profile of their performance from which they

can extract a final level of competence, facilitating

self-assessment through metacognitive effort.

Examples of adaptive questions and adaptive assignments will be given in the following sections.
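The logic of an adaptive assignment, in which the outcome of one item determines the level of the next, can be sketched as follows. The rule used here (one level up after a correct answer, one level down after an incorrect one, over 3 levels) is an assumption made for illustration; the text does not specify the rule applied by the platform, and the function name is hypothetical.

```python
def run_adaptive_assignment(answers_correct, n_levels=3, start_level=1):
    """Simulate an adaptive assignment.

    answers_correct: list of booleans, one per item, in the order answered.
    Returns the progress profile as (level, correct) pairs and the level
    reached after the last item.
    """
    level = start_level
    profile = []
    for correct in answers_correct:
        profile.append((level, correct))
        if correct:
            level = min(n_levels, level + 1)  # harder item next, capped at the top level
        else:
            level = max(1, level - 1)         # easier item next, never below level 1
    return profile, level


profile, final_level = run_adaptive_assignment([True, True, False, True, True])
print(profile)      # [(1, True), (2, True), (3, False), (2, True), (3, True)]
print(final_level)  # 3
```

The returned profile corresponds to the progress profile that is shown to students at the end of the assignment and from which they can read their final level.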

3 METHODOLOGY

3.1 Research Questions

The research activity was guided by some

fundamental questions that clearly identify and

outline the crucial aspects of the project: (RQ1) How

can the same adaptive questions and adaptive

assignments adapt to students with different

learning prerequisites? (RQ2) What results did the

designed adaptive path have in relation to the

disciplinary learning outcomes? (RQ3) How did

learners respond to the proposed adaptive teaching?

With the first research question (RQ1) we want to

investigate whether, and in what way, it is possible to

use and/or adapt the same didactic activities in even

decidedly diverse contexts in order to produce

meaningful, varied, and complete learning despite the

differences in the learning background and

prerequisites that distinguish the subjects constituting

the class group. With the second research question (RQ2) we examine the outcomes of the experimentation in terms of the learning outcomes achieved by the students involved, in order to understand whether the effect produced by the proposed activities over a short time interval, compared with the control classes not involved in the adaptive path, is positive, negative or null. Finally,

with the third research question (RQ3), we also focus

our attention on how learners perceive and receive

our adaptive path.

3.2 Experiment Organization

The method adopted is quasi-experimental. The participants are grade 11 students (in Italy, the third year of upper secondary school, age 16) from two scientific lyceums in the city of Turin (Italy). Specifically, 3 classes were involved in the treated group (54 students) and 2 classes in the control group (44 students), for a total of 98 students. The classes were recruited based on the voluntary enrollment of their Mathematics teachers in the project. Two of the three teachers in the treated group were used to proposing activities with automatic formative assessment to their classes, while the other had never used this method before, like the two teachers of the control group. The learning outcomes of the experimentation were: identifying the equation of a circle from its geometrical properties and vice versa; defining the mutual position of two circles in the Cartesian plane; solving real-world problems involving circles. The treated classes followed a course of 4 or 6 hours: 2 or 4 hours entitled "The circle as a geometric locus" and 2 hours entitled "The circle as a physical locus". During the class meetings, one of the researchers of the team was present in class to support the teacher and the students, especially with the use of the platform. The meetings were organized as follows: students worked in pairs of internally homogeneous level; an adaptive assignment and some adaptive questions with interactive feedback or guided activities were proposed; between one meeting and the next, other activities similar to those carried out in class were assigned to the students.
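The first two learning outcomes listed above rest on standard analytic geometry, which can be sketched in Python as follows. The function names and the numerical tolerance are hypothetical and serve only as an illustration of the mathematics involved, not of the activities themselves.

```python
import math


def centre_and_radius(D, E, F):
    """Recover centre and radius from x^2 + y^2 + D*x + E*y + F = 0."""
    a, b = -D / 2, -E / 2
    r_squared = a * a + b * b - F
    if r_squared <= 0:
        raise ValueError("the equation does not describe a real circle")
    return (a, b), math.sqrt(r_squared)


def mutual_position(c1, r1, c2, r2, tol=1e-9):
    """Classify two circles by comparing the distance between their centres
    with the sum and the difference of the radii."""
    d = math.dist(c1, c2)
    if d <= tol and abs(r1 - r2) <= tol:
        return "coincident"
    if d > r1 + r2 + tol:
        return "external"
    if abs(d - (r1 + r2)) <= tol:
        return "externally tangent"
    if abs(d - abs(r1 - r2)) <= tol:
        return "internally tangent"
    if d < abs(r1 - r2):
        return "one inside the other"
    return "secant"


print(centre_and_radius(-2, 4, -4))              # ((1.0, -2.0), 3.0)
print(mutual_position((0, 0), 2, (1, 0), 2))     # secant
```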

The choice of using internally homogeneous level

groups is motivated by the fact that if the students

have approximately the same starting level, the

adaptive activity can adapt to both at the same time.

In this way it was possible to activate collaboration

during adaptive activities. Moreover, the peer

collaboration could be even more effective, since it is

not influenced by a leader-follower relationship, and

both the students, having approximately the same

level of competence, need to actively reason on tasks

(Barana et al., 2023). The students’ initial level was

determined through the pre-test and discussed with

their teachers. Students could access the activities from the Moodle platform of the national PP&S Project (Brancaccio et al., 2015), which is integrated with the automatic assessment system Moebius Assessment, widely used for learning Mathematics thanks to its mathematical engine (Fahlgren & Brunström, 2023). Figure 1 shows an example of a guided activity, called “Cooling Towers”, which illustrates the peculiar features of this kind of activity. The learners are faced with an apparently unfamiliar situation in which, following the suggestions provided in the guided path, they are asked to extract the essential information from the prompts and carry out simple substitution operations.


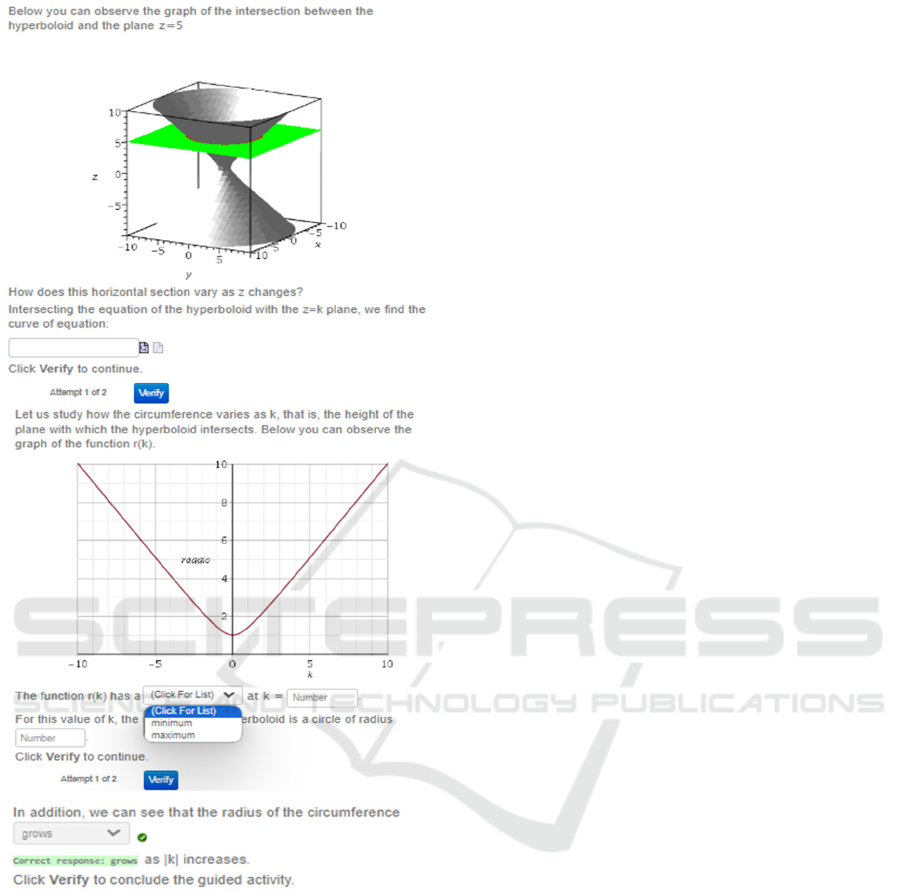

Figure 1: Guided activity “Cooling towers”.

The preview function (icon depicting a P) allows the

learners to visualize in a graphical register their

answer and thus be able to compare the graph

proposed in the text and the one generated by their

answer. This function provides students with some

initial feedback that allows them to self-assess their

answer before submitting it to the system, thus in a

moment in which they are still allowed to change it.

This makes it possible to activate the fifth strategy of

formative assessment conceived by Wiliam and

Thompson (2007): students have the opportunity to

develop responsibility in their learning path; they

should be able to take advantage of all the tools that

are made available to them in order to proceed

adequately in the resolution, and at the same time it

supports self-assessment since it makes the learners

able to establish the correctness of their answer

independently. Lastly, the preview also works on the

psychological-affective level since it enables the

learners to reassure themselves about the correctness

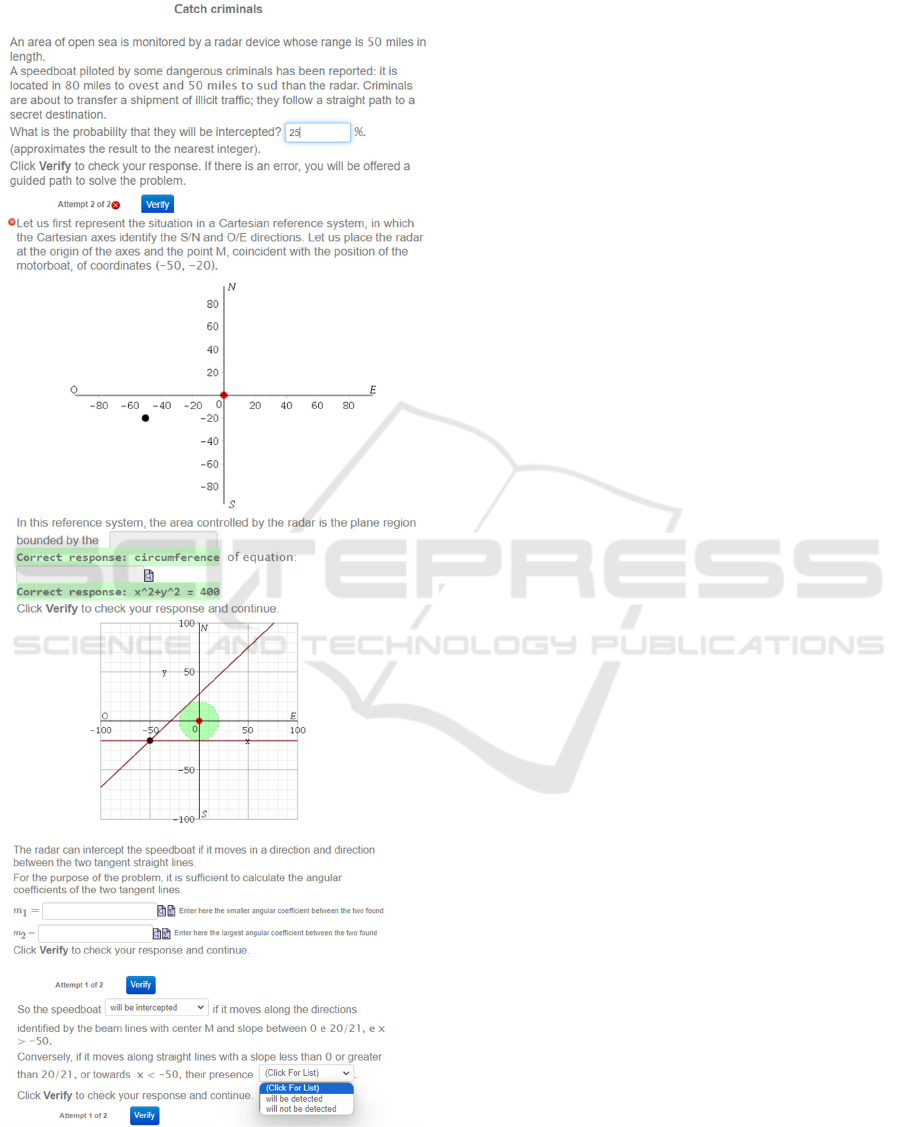

of their actions. Figure 2 shows an example of an

adaptive question with interactive feedback called

“Let's catch Criminals”. It allows a number of initial

autonomous attempts. In the case of a correct answer,

the task is successfully completed. In the case of an incorrect answer, once the few allowed attempts have been used, a guided path starts: at each step, the learner is led to

develop the intermediate results necessary to obtain

the final solution.
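The flow of an activity with interactive feedback described above can be summarized in a short Python sketch. It is a simplified model under stated assumptions: the number of allowed attempts (3 here), the function name and the way student answers are passed in are all hypothetical. The sketch also includes the behaviour described in Section 2.5, where the correct value of a step is shown after an incorrect answer so that it can be used in the following steps.

```python
def interactive_feedback(main_answer, attempts, steps, max_attempts=3):
    """Model one activity with interactive feedback.

    main_answer: expected answer to the main question.
    attempts: student's successive answers to the main question.
    steps: guided path as (prompt, expected, given) triples.
    Returns a log of what the student would see.
    """
    log = []
    # Phase 1: autonomous attempts on the main question.
    for n, answer in enumerate(attempts[:max_attempts], start=1):
        if answer == main_answer:
            log.append(f"attempt {n}: correct")
            return log  # task completed, no guided path needed
        log.append(f"attempt {n}: incorrect")
    # Phase 2: guided path through the intermediate results.
    for prompt, expected, given in steps:
        if given == expected:
            log.append(f"{prompt}: correct")
        else:
            # The correct value is revealed so that later steps can rely on it.
            log.append(f"{prompt}: incorrect, correct value {expected} shown")
    return log


guided = [("x-coordinate of the centre", 3, 3), ("radius", 5, 4)]
for line in interactive_feedback(25, [20, 24, 26], guided):
    print(line)
```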

The results of the experimentation were

evaluated through a pre-test and a post-test

administered to the control and treated group. The

treated classes took the pre-test before starting the

adaptive path; the control classes took it before

starting to work on the circle with their teachers in a

traditional way in order to compare this approach to

the one proposed in this experiment. Their teachers

were kept updated on the results of the research and

the adaptive activities developed for their future use

were shared with them. The post-test was administered after the topic of the circle had been completed, with or without the adaptive path, and after a few weeks, to give students time to study the topic and, for the treated group, to complete the online assignment. The pre-test included 10 questions, some of which included sub-items, for a total of 25 sub-items, about analytic geometry, mostly about the line and the parabola, which are usually studied before dealing with the circle. Four of them were contextualized in real-world situations, while the others concerned abstract mathematical situations. Most of them were adapted from the INVALSI tests of Mathematics for grade 10 or 13. The post-test included 7 questions with a total of 18 sub-items, all of them dealing with the circle. Some of them were adapted from INVALSI tests, while others were created specifically for this test. Nine items out of 18 were contextualized in real-world situations and almost all of them involved problem-solving processes, so the post-test had a higher level of difficulty than the pre-test. The experimentation was

also evaluated through the review of videos taken

with the aid of a camera during class meetings, with

the permission of the students' families and in

compliance with the rules on privacy and data

processing, in an attempt to monitor the reasoning and

communicative processes implemented by the


students while working in pairs to carry out the

activities.

Figure 2: Activity with interactive feedback “Catch criminals”.

Lastly, students in the treated classes completed a

final questionnaire. The student questionnaire was made up of 23 items that students could answer on a 5-value scale ranging from "not at all" to "very much", divided into 10 items to detect appreciation of the proposed activities and pair work and 13 items to test the expository clarity and effectiveness of the activities and the role of interactive feedback, plus 3 open-ended questions to record impressions, critical issues and strengths of the proposed educational offering. The project was carried out in compliance with the local, national and institutional ethical laws and requirements. All participants and their families gave signed permission for the use of their data for research purposes. The project obtained ethical approval from the Bioethical Committee of the University of Turin.

3.3 Data Collection and Analysis

The data obtained from our research were divided

into 4 macro categories: data from responses to

adaptive activities; data from the pre-test and post-test; data from the final questionnaire; data from

monitoring pairs of students. We analyzed the results

following different paths depending on the type of

data. For the results of the adaptive activities on the platform, we calculated the completion times of specific activities, the number of student pairs that obtained full scores on some questions, and the total scores in the adaptive assignment. The pre-test and post-test scores were rescaled to a maximum of 100, and we then performed an analysis based on 3 types of tests:

1. Analysis of covariance using the ANCOVA test. This test proved to be particularly suitable for examining whether the results of the post-test (dependent variable) depended on membership in the treated or the control group (categorical independent variable) while taking into account the results obtained at the pre-test (covariate), which identifies an initial stage common to all subjects involved in the survey (Creswell & Clark, 2017).

2. Chi-square test, which allowed us to check whether or not the correctness of the answers to single items was dependent on membership in the experimental or control group and to make observations related to the proposed learning path.

3. Cramer's V test, which allowed us to test whether the differences in scores obtained in specific post-test items by the two groups were significant and to make observations related to the proposed learning path.
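For a single item scored as correct or incorrect, the chi-square test and Cramér's V listed above can be computed from a 2x2 table of counts (group by correctness). The sketch below uses only the Python standard library and is an illustration, not the analysis code of the study; the function name is hypothetical, no continuity correction is applied, and the counts in the usage example are invented (only the group sizes, 54 and 44, come from the text). Cramér's V is commonly read as a measure of the strength of the association, alongside the p-value of the chi-square test.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test and Cramér's V for a 2x2 table.

    table: [[a, b], [c, d]], e.g. rows = groups, columns = correct/incorrect.
    Assumes every row and column total is positive.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom the upper-tail probability has a closed form.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    # For a 2x2 table min(rows - 1, cols - 1) = 1, so V = sqrt(chi2 / n).
    cramers_v = math.sqrt(chi2 / n)
    return chi2, p_value, cramers_v


# Hypothetical counts for one post-test item: [correct, incorrect] per group.
treated = [40, 14]   # 54 students
control = [22, 22]   # 44 students
chi2, p_value, v = chi_square_2x2([treated, control])
print(f"chi2 = {chi2:.3f}, p = {p_value:.4f}, Cramér's V = {v:.3f}")
```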

For the qualitative data collected from the

questionnaires, we made an initial distinction

between the answers given to the Likert-scale items

proposed in the questionnaire and the answers given

to the open-ended questions asking for strengths and

weaknesses. Response occurrence rates were calculated to highlight quantitatively which aspects of the proposed experimentation students found most successful, which were most problematic, which new aspects emerged, and which they considered desirable in their future daily teaching.
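
The response occurrence rates described above are simple percentages of each answer over the total number of respondents. A minimal sketch, in which the helper name `response_rates` and the list of answers are hypothetical and do not reproduce the questionnaire data:

```python
from collections import Counter
from typing import Dict, List


def response_rates(responses: List[str]) -> Dict[str, float]:
    """Return the percentage of respondents who chose each option."""
    counts = Counter(responses)
    total = len(responses)
    return {option: round(100 * count / total, 2)
            for option, count in counts.items()}


# Hypothetical answers to one Likert-scale item (NOT the study data).
answers = ["very much", "much", "very much", "little",
           "much", "very much", "not at all", "much"]

rates = response_rates(answers)
print(rates)
```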

4 RESULTS

4.1 Research Question 1

The educational experimentation was not carried out in the same way in all the 3 treated classes. This was due to the significantly different school contexts and classroom environments we encountered, each shaped by the specific characteristics of its students. The main factor that led to a clear distinction in methods was whether or not the circle had already been introduced before our meetings, a condition that affects the students’ learning background and prerequisites. For this reason, we have divided the description of the meetings into 2 sections, taking this fact into account. This enabled us to highlight the flexibility of this learning path.

4.1.1 Circle Already Introduced Before Starting the Adaptive Path

In two out of the three treated classes, the circle had

already been introduced to the students by their

teachers before starting the adaptive learning path. In

the first meeting, after creating the working pairs

based on the pre-test results, 2 activities were

proposed. Firstly, students were asked to complete the

adaptive assignment to get feedback about their

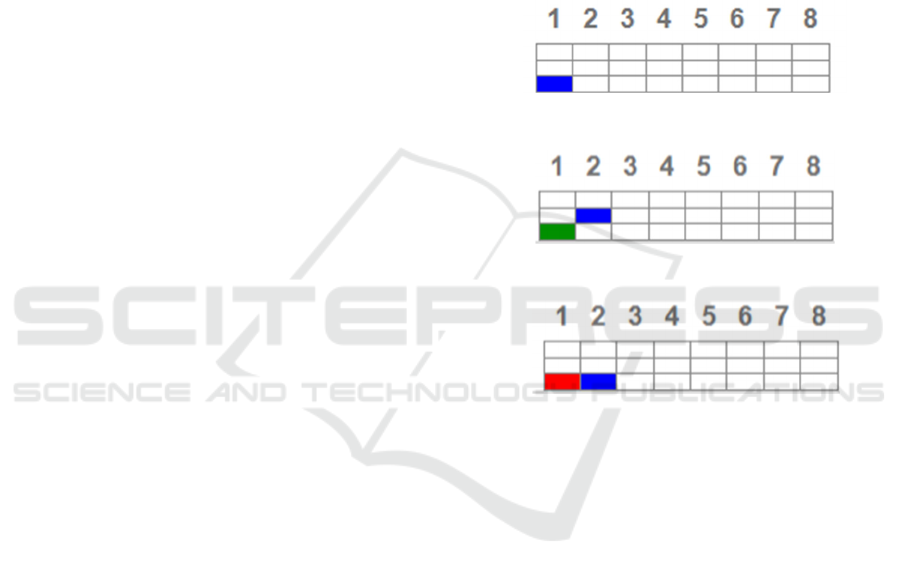

starting level. The adaptive assignment provides feedback that Shute (2008) calls corrective, since for each question the student pairs can see whether their answer was correct from the colour (green or red) that appears in the box corresponding to that question.

Secondly, adaptive questions split into three difficulty

levels were proposed. Students were asked to start

with the level they believed they belonged to based

on feedback from the adaptive assignment. Adaptive questions provide students with elaborated feedback (Shute, 2008): in the event of an error, guided processes help students to reach the correct solution. The adaptive assignment contained 8-10 questions, depending on the initial preparation of the class. Before starting the assignment, students could view the graph shown in Figure 3, in which the number of horizontal squares is the total number of questions in the test (the blue box corresponds to the question being asked), while the number of vertical squares refers to the difficulty level to which the question belongs. After answering a question and clicking on the Next button, the next question appears, and the graph shows students, as corrective feedback, the outcome of their answer: if the box turns green, the answer was correct (Figure 4) and they go up a level; if the box turns red, the answer was wrong (Figure 5) and they go down a level or, if they are already at the Initial level, continue with a question at that level.
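
The level-update rule just described can be sketched in a few lines. This is an illustration only, not the platform's implementation: the function name `level_path`, the numbering of levels from 1 (Initial), and the cap at a hypothetical `max_level` of 3 are assumptions, since the text does not say what happens after a correct answer at the highest level.

```python
from typing import Iterable, List


def level_path(answers: Iterable[bool], start_level: int = 1,
               max_level: int = 3) -> List[int]:
    """Return the level before each question, followed by the final level."""
    level = start_level
    path = [level]
    for correct in answers:
        if correct:
            # Correct answer (green box): go up one level, capped at the top.
            level = min(level + 1, max_level)
        else:
            # Wrong answer (red box): go down one level, or stay at level 1.
            level = max(level - 1, 1)
        path.append(level)
    return path


# Hypothetical sequence of outcomes for one pair: two correct, three wrong.
path = level_path([True, True, False, False, False])
print(path)
```

The last wrong answer leaves the pair at the Initial level, which corresponds to continuing with another question at that level.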

Figure 3: Adaptive assignment graph.

Figure 4: Correct answer.

Figure 5: Incorrect answer.

Learners anxiously await immediate feedback and, in

the case of a correct answer, express satisfaction and

enthusiasm demonstrating how immediate feedback

relating to the resolution of a question actually has an

impact on a personal level. In fact, Hattie and

Timperley (2007) state that it increases esteem, both

toward the partner and toward oneself. In the case of an incorrect answer, on the other hand, learners show disappointment, but also the intention to improve in subsequent questions: rather than giving up in despair or frustration, they proceed with greater attention, precision, and reflection. This attitude integrates well with the "incremental view" identified by Dweck (2000). In Figure 6 we report a graph depicting a

possible trend recorded by a pair performing the

adaptive assignment. It represents an example of what

Corbalan, Paas, and Cuypers (2010) call "formative

feedback on all the steps provided later", which fits

well into the third level identified by Hattie and

Timperley (2007), that of self-regulation, since it

CSEDU 2024 - 16th International Conference on Computer Supported Education

allows the subjects who receive it to trigger a personal

metacognitive reflection on their performance.

Figure 6: Hypothetical performance in adaptive

assignment.

In the second meeting, contextualized problems related to real-life situations were presented, engaging learners in exploring, contextualizing, extracting essential data, and modeling an actual situation. In one class 4 out of 8 pairs autonomously solved the first contextualized problem without needing the interactive feedback and within a reasonable time (from 12 min to 48 min), while in the second class 3 pairs gave the correct answer autonomously without the interactive feedback. The work of a video-recorded pair shows that allowing multiple attempts before starting the guided path is effective: after a first wrong answer (Figure 7), students appear more thoughtful and careful to grasp every single piece of information, so as to decipher the text well, obtain all the essential data, and reach the correct solution (Figure 8). In this case, it

was necessary to start by proposing the adaptive

questions to the pairs, and in particular the guided

activities, in which the learners had the opportunity to

receive elaborated feedback (Shute, 2008) in the form

of a guided path to help them proceed gradually in

solving the tasks. Thus, students were asked to go

through the adaptive questions starting from the

easiest task and proceed by increasing the level.

Figure 7: First attempt.

Figure 8: Second attempt.

In this context, we could observe how the pairs of

students, despite the possibility of guided pathways,

still attempted to reason through problem solving

independently, by using the knowledge of Euclidean

and Analytic Geometry they already possessed.

The adaptive assignment was proposed later to

help them self-assess the knowledge they had

acquired up to that point. We noticed that some pairs

of students, when acknowledging that the adaptive

assignment was too difficult for them, went back to

the guided activities and to the adaptive questions

with interactive feedback to revise the contents before

attempting the adaptive assignment again. Comparing the average percentage scores obtained by the 3 treated classes in the adaptive assignment, we observe that the two classes in which the circle had already been introduced averaged 52.5% and 55% respectively, while the best result, 69.62%, was obtained by the class that started the experimentation without knowing anything about the circle, and whose knowledge was gained solely through the adaptive questions. Therefore, we can assume that our adaptive activities were effective in helping students understand the circle in the time they had available.

4.2 Research Question 2

For the analyses of the pre-test and post-test results, one of the treated classes was excluded from the sample since it did not take the post-test due to time constraints. It was one of the two classes in which the circle had already been introduced before starting to work with the adaptive path. Moreover, we excluded from the sample those students who did not take one of the two tests.

Therefore, the sample considered for the analysis of

the results includes 40 students from the control

classes and 33 students from the treated classes. The

ANCOVA test, conducted to compare the post-test

ratings for the 2 groups, using the pre-test ratings as a

covariate, showed an 11-point gap out of 100 between

the 2 groups in favor of the treated group. Table 1

shows the estimates of the post-test ratings using the

pre-test ratings as a covariate. The ANCOVA test

statistic is 9.954 and its significance is 0.002, so we

can state that the difference between the averages of

the post-test ratings taking into account the pre-test as

covariate is significant. We also analyzed by means

of the chi-square test the scores obtained by the 2

groups in the individual items focusing our attention

on those items that report significant statistics on the

differences in the distribution of scores between the 2

groups. In particular, we focused on the results that

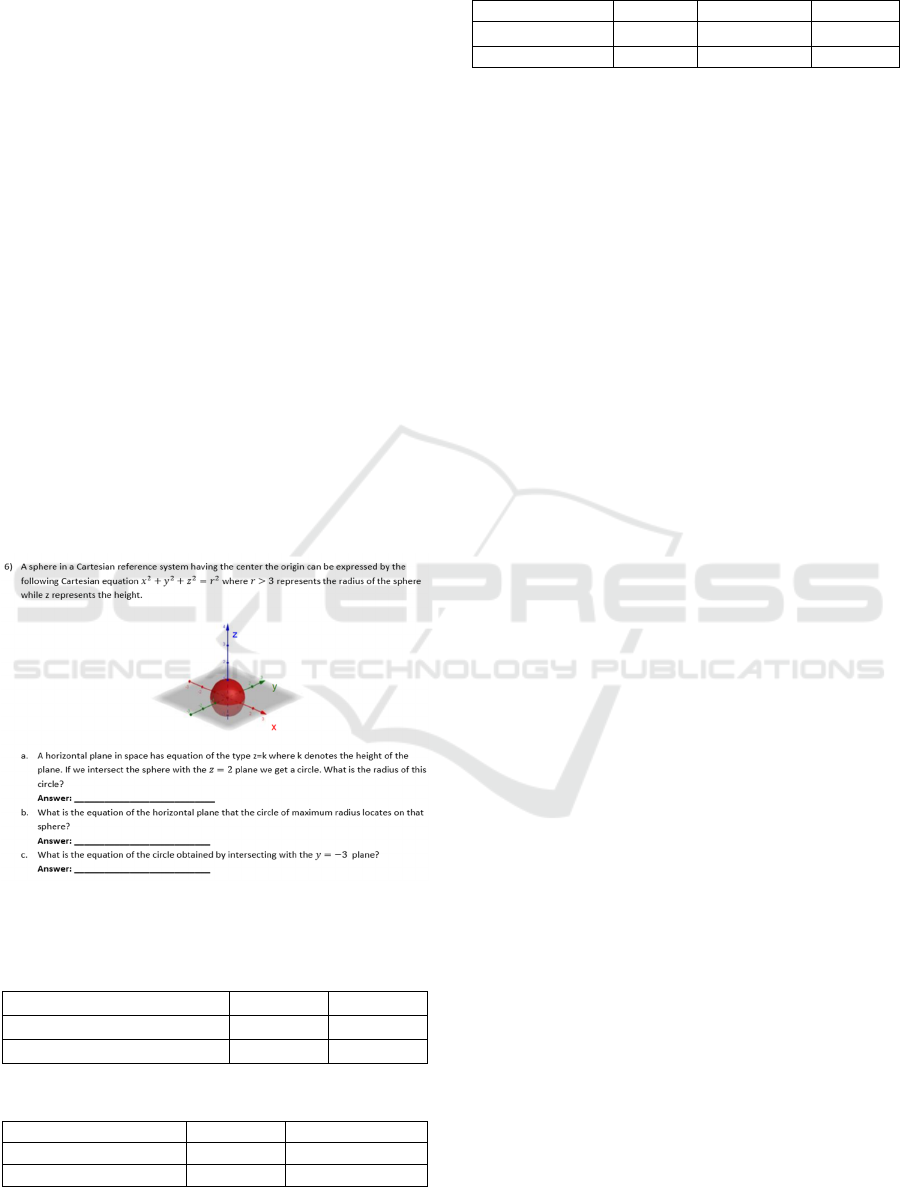

emerged from the analysis of question 6, items b and c (Figure 9). Table 2 and Table 3 report the results obtained by the 2 groups in item b and item c, respectively. Table 2 shows that 100% of the students in the control classes did not answer or answered incorrectly, while about 12% of the students in the experimental classes obtained the full score.

Figure 9: Question 6 in post-test.

Table 1: Difference between the averages of assessments at post-test taking into account the pre-test as a covariate.

| Mode | Average | Std. error |
|---|---|---|
| Control classes | 5.47 | 2.44 |
| Experimental classes | 66.94 | 2.69 |

Table 2: Distribution of the scores obtained by the 2 groups in question 6, item b.

| Mode | Score 0 | Score 1 |
|---|---|---|
| Control classes | 100.0% | 0.0% |
| Experimental classes | 87.9% | 12.1% |

Table 3: Distribution of the scores obtained by the 2 groups in question 6, item c.

| Mode | Score 0 | Score 0.75 | Score 1 |
|---|---|---|---|
| Control classes | 97.6% | 2.4% | 0.0% |
| Experimental classes | 72.7% | 3.0% | 24.2% |

For item b, the chi-square test shows a value of 5.378 with a p-value of 0.02, so the association between group and score is statistically significant. Cramer's V is moderate but significant (V=0.268 with significance of 0.02), indicating a moderate association between the treatment and the score obtained. From Table 3 (question 6, item c) we observe that more than 97% of the students in the control classes answered incorrectly and about 3% partially correctly, but none entirely correctly, while in the experimental classes about 24% of students achieved a full score. The chi-square test gives a value of 11.532 with significance of 0.003. Cramer's V is again significant (V=0.392 with p-value of 0.003), indicating a stronger association between the treatment and the score than for item b. These results

obtained in items b and c of question 6 can be explained by the fact that a similar problem was faced by the experimental classes in the guided activity “Cooling Towers”, which accustomed them to moving from the 2-dimensional plane to 3-dimensional space and to considering the circle as a curve obtained from the intersection of two 3-dimensional geometric loci. Becoming familiar with parametric and contextualized problems, which are not usually carried out in traditional lessons, helped students in the experimental classes to extract essential information from the text in order to model the situations described. However, the score obtained by the experimental classes is still low; this can be explained considering that the reference task is not a routine task and that analytic geometry in 3 dimensions is rarely used to deal with 2-dimensional objects, even if it might be effective to do so.
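
The chi-square statistic and Cramer's V used for the single items can be computed from a contingency table of counts as sketched below. This is a minimal illustration without continuity correction, not the authors' computation: the function name `chi_square_and_cramers_v` is hypothetical, and the counts are an assumption, back-computed from the percentages of Table 2 and from the group sizes of 40 and 33 students stated in the text, so the output need not reproduce the reported values exactly.

```python
import math
from typing import List, Tuple


def chi_square_and_cramers_v(table: List[List[int]]) -> Tuple[float, float]:
    """Pearson chi-square (no continuity correction) and Cramer's V."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of group and score.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # Cramer's V rescales chi-square to the range [0, 1].
    k = min(len(row_totals), len(col_totals)) - 1
    v = math.sqrt(chi2 / (n * k))
    return chi2, v


# Assumed counts (rows: control, experimental; columns: score 0, score 1).
table = [[40, 0],
         [29, 4]]

chi2, v = chi_square_and_cramers_v(table)
print(round(chi2, 3), round(v, 3))
```

Cramer's V is a measure of the strength of the association between the two variables, which is why it is reported alongside the chi-square value rather than in place of it.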

4.3 Research Question 3

4.3.1 Analysis of Video Recording

From the video recordings of some pairs of students

during classroom work, some relevant factors

emerged: the ability to divide the tasks to be

performed to reach the final solution; the crucial role

of external feedback coming from the other member

of the pair (Laurillard, 2002); the challenging attitude

towards interactive feedback (Ashford & Cummings,

1983); two possible responses to immediate

feedback: satisfaction and increased esteem, both

towards the partner and towards oneself (Hattie &

Timperley, 2007) in the case of a correct answer, and

disappointment in the case of a wrong answer but the

intention to improve in subsequent questions by not

indulging in despair, but proceeding with greater

attention, precision and reflection typical of an

"incremental view" (Dweck, 2000). Lastly, guided

pathways are not "addictive": on the contrary, students try to "do without" them and find the solutions on their own.

4.3.2 Analysis of the Questionnaire

From the response percentages for each item in the final questionnaire, we can see that students were generally satisfied with the proposed activities. The highest percentages for the "very much" answer are obtained for the items "It was helpful to have immediate feedback after each response" (54.72%) and "It was helpful to have the step-by-step guided resolutions" (50.94%), and in both cases no one answered "not at all". "Much" was the most frequent answer for the items "I enjoyed working actively during the meetings" (54.72%), "The activities allowed me to better understand what my preparation level is" (62.26%), and "The activities allowed me to improve my level of preparation" (52.83%), which thus call for teaching to be as engaging and stimulating as possible. The item with the largest percentages of "not at all" (13.21%) and "little" (49.06%) answers is the one related to the habit of working in these modalities in regular math lessons, showing that this methodology is unusual and still rarely proposed by teachers. 11.32% of students answered "not at all" to the item "I enjoyed solving problems contextualized in reality," while 13.21% of students answered "a little" to the item "It was useful to solve problems contextualized in reality", perhaps indicating that students are not used to this type of activity, which therefore puts them in greater difficulty. In support of this, 15% of students asked for easier exercises in the open-ended question "What aspect could be improved?".

5 CONCLUSIONS

In conclusion, with reference to the first research question (RQ1), we observed that the designed activities were able to adapt, albeit with some limitations, to learning environments that varied considerably because of the school context, the class and the students who compose it, as well as the different levels of preparation on the topic to be treated in the experimental classes. With regard to the second research question (RQ2), the analysis of the results shows that the students who participated in the adaptive activities scored higher on the post-test than the students in the control classes, recording a gap of 11 points out of 100 overall. Finally, from the students' responses to the proposed adaptive path, collected through the video recordings and the questionnaire, it was possible to highlight some foundational aspects of the work students carried out in pairs of homogeneous level and of their interaction with adaptive activities and interactive feedback. The

questionnaire revealed general satisfaction with the

activities, whose originality, versatility, clarity of

exposition and relevance to reality were recognized,

while the use of interactive feedback proved effective

in ensuring that students used the information

provided to them to improve their performance

(RQ3). Thanks to that questionnaire, numerous

valuable suggestions were collected to refine, expand,

and enrich the involvement of automatic formative

assessment in teaching Mathematics. Among them, one worth mentioning is the possibility of using a clearer and more functional platform and of designing, however complex this may be, not a single rigid path with interactive feedback, but one that can be differentiated and that takes into account the path taken independently by the students, regardless of its successful completion. This would prevent the effort of the students, whom we observed to be highly motivated and intent on solving the exercises and problems without help, from being wasted by a guide that does not take into account the variety of solution methods that a mathematical problem typically offers. It also emerged as necessary to train teachers pedagogically and professionally so that they can learn to independently design meaningful adaptive questions and adaptive assignments for their classes. Finally, further analyses that can be carried out starting from the data collected in our research include modeling the path followed by each individual student from pre-test to post-test through the adaptive activities carried out in class, and studying the impact of the activities on students' self-assessment skills.

ACKNOWLEDGEMENTS

The authors would like to thank the participating

teachers for their commitment during the project, and

all the students who took part in the experimentation. The authors would also like to thank the bank foundation Compagnia di San Paolo for

their support on the OPERA project (Open Program

for Education Research and Activities).

REFERENCES

Ashford, S. J., & Cummings, L. L. (1983). Feedback as an

individual resource: Personal strategies of creating

information. Organizational Behavior and Human

Performance, 32(3), 370–398.

Barana, A., Boetti, G., Marchisio, M., Perrotta, A., &

Sacchet, M. (2023). Investigating the Knowledge Co-

Construction Process in Homogeneous Ability Groups

during Computational Lab Activities in Financial

Mathematics. Sustainability, 15(18), 13466.

https://doi.org/10.3390/su151813466

Barana, A., Conte, A., Fioravera, M., Marchisio, M., &

Rabellino, S. (2018). A Model of Formative Automatic

Assessment and Interactive Feedback for STEM.

Proceedings of 2018 IEEE 42nd Annual Computer

Software and Applications Conference (COMPSAC),

1016–1025.

https://doi.org/10.1109/COMPSAC.2018.00178

Barana, A., Conte, A., Fissore, C., Marchisio, M., &

Rabellino, S. (2019). Learning Analytics to improve

Formative Assessment strategies. Journal of E-Learning

and Knowledge Society, 15(3), 75–88.

https://doi.org/10.20368/1971-8829/1135057

Barana, A., Fissore, C., & Marchisio, M. (2020). From

Standardized Assessment to Automatic Formative

Assessment for Adaptive Teaching. Proceedings of the

12th International Conference on Computer Supported

Education, 285–296. https://doi.org/10.5220/0009577302850296

Barana, A., & Marchisio, M. (2022). A Model for the

Analysis of the Interactions in a Digital Learning

Environment During Mathematical Activities. In B.

Csapó & J. Uhomoibhi (Eds.), Computer Supported

Education (Vol. 1624, pp. 429–448). Springer

International Publishing. https://doi.org/10.1007/978-3-031-14756-2_21

Barana, A., Marchisio, M., & Miori, R. (2019). MATE-

BOOSTER: Design of an e-Learning Course to Boost

Mathematical Competence. Proceedings of the 11th

International Conference on Computer Supported

Education (CSEDU 2019), 1, 280–291.

Barana, A., Marchisio, M., & Sacchet, M. (2021). Interactive

Feedback for Learning Mathematics in a Digital

Learning Environment. Education Sciences, 11(6), 279.

https://doi.org/10.3390/educsci11060279

Black, P., & Wiliam, D. (1998). Assessment and Classroom

Learning. Assessment in Education: Principles, Policy &

Practice, 5(1), 7–74.

Black, P., & Wiliam, D. (2009). Developing the theory of

formative assessment. Educational Assessment,

Evaluation and Accountability, 21(1), 5–31.

https://doi.org/10.1007/s11092-008-9068-5

Borich, G. (2017). Effective teaching methods (9th ed.).

Pearson.

Botta, E. (2021). Verification of the measuring properties and

content validity of a computer based MST test for the

estimation of mathematics skills in Grade 10. 7th

International Conference on Higher Education Advances

(HEAd’21). https://doi.org/10.4995/HEAd21.2021.13046

Boyle, J., & Nicol, D. (2003). Using classroom

communication systems to support interaction and

discussion in large class settings. Association for

Learning Technology Journal, 11(3), 43–57.

Brancaccio, A., Marchisio, M., Meneghini, C., & Pardini, C.

(2015). More SMART Mathematics and Science for

teaching and learning. Mondo Digitale, 14(58), 1–8.

Corbalan, G., Paas, F., & Cuypers, H. (2010). Computer-

based feedback in linear algebra: Effects on transfer

performance and motivation. Computers & Education,

55(2), 692–703. https://doi.org/10.1016/j.compedu.2010.03.002

Creswell, J. W., & Clark, V. L. P. (2017). Designing and

conducting mixed methods research. Sage publications.

Dweck, C. S. (2000). Self-theories: Their role in motivation,

personality, and development. Psychology Press.

European Parliament and Council. (2018). Council

Recommendation of 22 May 2018 on key competences

for lifelong learning. Official Journal of the European

Union, 1–13.

Fahlgren, M., & Brunström, M. (2023). Designing example-

generating tasks for a technology-rich mathematical

environment. International Journal of Mathematical

Education in Science and Technology, 1–17.

https://doi.org/10.1080/0020739X.2023.2255188

Hattie, J., & Timperley, H. (2007). The Power of Feedback.

Review of Educational Research, 77(1), 81–112.

https://doi.org/10.3102/003465430298487

Kluger, A. N., & DeNisi, A. (1996). The effects of feedback

interventions on performance: A historical review, a

meta-analysis, and a preliminary feedback intervention

theory. Psychological Bulletin, 119(2), 254–284.

https://doi.org/10.1037/0033-2909.119.2.254

Laurillard, D. (2002). Rethinking university teaching: A

conversational framework for the effective use of

learning technologies (2nd ed.). Routledge/Falmer.

Mascarenhas, A., Parsons, S., & Burrowbridge, S. C. (2016).

Preparing Teachers for High-Need Schools: A Focus on

Thoughtfully Adaptive Teaching. Occasional Paper

Series, 2011(25), 26.

Romano, A., Petruccioli, R., Rossi, S., Bulletti, F., & Puglisi,

A. (2023). Practices for adaptive teaching in STEAM

disciplines: The Project T.E.S.T. QTimes Journal of

Education Technology and Social Studies, 1(1), 312–

328. https://doi.org/10.14668/QTimes_15123

Sadler, D. R. (1989). Formative assessment and the design of

instructional systems. Instructional Science, 18(2), 119–

144.

Scriven, M. (1967). The methodology of evaluation.

Lafayette, Ind: Purdue University.

Shute, V. J. (2008). Focus on Formative Feedback. Review of

Educational Research, 78(1), 153–189.

Thompson, M., Wiliam, D., & Dwyer, C. A. (2007).

Integrating assessment with instruction: What will it take

to make it work? In The future of assessment: Shaping

teaching and learning (pp. 53–82). Erlbaum.
