Empowering Students: A Reflective Learning Analytics Approach to Enhance Academic Performance

Dynil Duch (1,2,a), Madeth May (1,b) and Sébastien George (1,c)

1 LIUM, Le Mans Université, 72085 Le Mans, Cedex 9, France

2 Institute of Digital Research & Innovation, Cambodia Academy of Digital Technology, Phnom Penh, Cambodia

Keywords:

Learning Analytics, Student Performance, Reflective Tools, Data Indicators, Data Visualization, Predictive

Learning, Empowering Students, Learning Patterns, Learning Behavior, Educational Dashboards.

Abstract:

The surge in online education has accentuated the importance of practical Learning Analytics (LA) tools,

traditionally designed to support educators. In the meantime, a notable gap exists in empowering students

directly through user progress insights and reflective components. This paper presents our research effort in

designing a novel approach: a Self-reflective Tool (SRT) with data indicators on student performance designed

to actively engage students in their learning journey. Our research explores the landscape of existing LA tools,

pinpointing the lack of technological supports for students, and the limitations in empowering students. The

methodology involves data extraction, and a comparative analysis of classifiers to predict student performance

(SP). Our reflective tool is therefore built, not only to support students in their learning activities, but also to

provide them with more relevant assistance according to their SP. Surveys were conducted to assess the proposed

SRT. The findings illustrate how students perceive it and how SRT-oriented data indicators increase awareness,

regulation, and motivation of individual learning patterns. Our qualitative analysis also demonstrates a positive

correlation between student engagement with the reflective tool and improvements in academic outcomes. This

research contributes to the discourse on LA by emphasizing the importance of reflective tools for students in

Metacognition Online Learning Environments (MOLE), providing valuable insights for future developments

in student-centric approaches to education.

1 INTRODUCTION

Learning Analytics (LA) is a powerful tool that sub-

stantially supports educators and content creators

in enhancing the teaching and learning experiences

(Banihashem et al., 2022; Hernández-de Menéndez et al., 2022). However, while existing tools and services in LA are mostly dedicated to instructors, there

is a lack of similar supports that directly empower stu-

dents (Arthars et al., 2019). Yet, it has been demon-

strated that students strongly need self-assessment

throughout their learning process to gain motivation

and higher achievement (McMillan and Hearn, 2008;

Andrade, 2019). Thus, providing reflective tools that

allow students to do so is not only crucial from a

pedagogical standpoint but also a significant research

challenge. Our paper delves into the necessity of addressing this gap by proposing a novel approach leveraging specific and selective tools to enable students through reflective LA, focusing on self-regulation and user progress insights.

a https://orcid.org/0000-0002-7857-5811

b https://orcid.org/0000-0002-8527-7345

c https://orcid.org/0000-0003-0812-0712

LA has traditionally emphasized data analysis, vi-

sualization, and the creation of indicators to aid edu-

cators in understanding and improving their teaching

methodologies (Ndukwe and Daniel, 2020; Silvola

et al., 2021). While these approaches have proven valuable, more emphasis is needed on tools that create and foster students’ motivation in their learning journey (Joksimović et al., 2019; Arthars et al., 2019). The absence of these tools becomes even more pronounced when considering the implications for student performance (SP). Our research is

motivated by the conviction that students, provided

with a better understanding of their learning behav-

iors, can significantly enhance their academic perfor-

mance and cultivate self-regulation skills.

Duch, D., May, M. and George, S.

Empowering Students: A Reflective Learning Analytics Approach to Enhance Academic Performance.

DOI: 10.5220/0012634600003693

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 2, pages 385-396

ISBN: 978-989-758-697-2; ISSN: 2184-5026

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

The research effort presented in this paper pinpoints shortcomings in existing practices and proposes a transformative solution with our self-reflective tool (SRT) that allows and incites students

to monitor their progress, analyze behavior, and ac-

tively participate in improving their learning perfor-

mance. We explore the landscape of existing LA

tools, highlighting their current focus and limitations,

particularly in the context of student empowerment.

We then introduce our design approach, which places

students at the center of the analytics process, provid-

ing them with indicators on a Learning Management

System (LMS).

The primary research questions guiding our stud-

ies include:

1. How can LA better support students in Metacog-

nition Online Learning Environments (MOLE)?

2. What role does SRT play in addressing SP, the risk

of failure, and motivational loss?

To contextualize our work, we examine the cur-

rent state of SP analysis and outline our approach to

data mining, drawing on existing methodologies. In

the context of our research, SP refers to the academic

achievements, learning outcomes, and overall success

of students within an educational setting. It encom-

passes a multifaceted evaluation beyond traditional

metrics such as grades and exam scores. As per our

context, SP involves a holistic assessment that con-

siders various factors, including attendance, interac-

tion with learning materials, engagement in quizzes,

assignments, and tasks on the LMS, and the ultimate

academic production and outcome.

For the experimental setup, the cohort of 160 stu-

dents, primarily associated with the CADT (Cam-

bodia Academy of Digital Technology), actively en-

gages in various educational activities on the Moodle

LMS. Throughout the year, these students participate

in courses, attend classes, submit assignments, and

interact with the learning materials available on the

platform. In the context of our research, the SP aspect

is the key focus. We aim to delve into the intricacies

of learning patterns exhibited by these students on the

Moodle LMS.

To identify key attributes that can predict SP,

we have conducted a literature review to understand

the factors influencing student outcomes. First, we

collected data from CADT’s Moodle platform and

Google Sheets, which provided insights into student

engagement and performance outcomes. Second, we

carefully selected a subset of crucial attributes from

the collected data to develop a predictive algorithm

using a Random Forest classifier. Third, we further re-

fined the algorithm by employing oversampling tech-

niques to handle imbalanced data. Last but not least,

we evaluated the algorithm’s performance and ad-

justed it to enhance accuracy. By adopting such an ap-

proach, we are able to not only effectively identify at-

tributes contributing to student performance, but also

to develop a reliable predictive model.
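The oversampling step in this pipeline can be illustrated with a minimal sketch. The paper does not state which oversampling technique was used, so the sketch assumes plain random oversampling (duplicating minority-class rows at random); the function name `random_oversample` and the toy rows are hypothetical.

```python
import random
from collections import Counter, defaultdict


def random_oversample(rows, labels, seed=0):
    """Duplicate minority-class rows at random until every class
    has as many samples as the largest class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for row, label in zip(rows, labels):
        by_class[label].append(row)
    target = max(len(members) for members in by_class.values())

    out_rows, out_labels = [], []
    for label, members in by_class.items():
        # Draw the missing samples (with replacement) from the same class.
        extra = [rng.choice(members) for _ in range(target - len(members))]
        for row in members + extra:
            out_rows.append(row)
            out_labels.append(label)
    return out_rows, out_labels


# Toy, made-up data: (attendance, interaction log), both scaled to [0, 1].
rows = [(0.28, 0.27), (0.38, 0.80), (0.40, 0.42), (0.40, 0.48), (0.16, 0.33)]
labels = ["F", "A", "B", "A", "A"]
balanced_rows, balanced_labels = random_oversample(rows, labels)
print(Counter(balanced_labels))
```

Oversampling is normally applied to the training split only, so that duplicated rows do not leak into the data used to evaluate the classifier.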

As for the reflective tools, they refer to tools

and mechanisms designed to help students reflect

on their learning processes, identify weaknesses and

strengths, and make informed decisions to improve

their academic performance. Specifically, the self-

reflective tool (SRT) is a user progress instrument tai-

lored to individual students, which includes personal

insight and a group-level overview feature that en-

ables students to gain insights into their performance

compared to their peers. SRT integrates our predictive model and key attributes to provide a dynamic and

supportive learning environment, fostering students’

self-awareness, self-regulation, self-evaluation, and

self-motivation. We aim to create a sophisticated SRT,

offering a novel approach to improve students’ educa-

tional practices at CADT.

With this experimental setup, we can work on de-

signing and implementing our SRT, including a dash-

board with program-level and user progress indica-

tors. The term "program-level" refers to an assessment or analysis conducted at the level of an entire academic program of study. Rather than focusing on individual

courses or specific components, a program-level per-

spective takes a holistic view, considering an aca-

demic program’s overall objectives, outcomes, and

performance.

The rest of the paper is structured as follows. Re-

lated works are presented in section 2. Sections 3

and 4 are dedicated to our SP analysis approach. The

design of our SRT is presented in section 5. The outcomes of our study are discussed in section 6, providing valuable insights into the perceptions of students

and the impact of our reflective learning analytics ap-

proach on their academic performance.

2 RELATED WORKS

In recent years, many studies have paid significant

attention to exploring the LA applications and their

impact on educational practice (Dawson et al., 2019;

Wong et al., 2018; Papamitsiou and Economides,

2014; Viberg et al., 2018; Wong and Li, 2020). In

this section, we review the existing literature to con-

textualize our research within the broader landscape

of LA, focusing on e-learning practices, support for

students, technological solutions, and the research is-

sues related to students and SRT.


2.1 Learning Analytics

Numerous studies covered the integration of LA into

MOLE. Systematic reviews by (Banihashem et al., 2018; Tepgeç and Ifenthaler, 2022; Banihashem et al., 2022) addressed LA’s crucial role in optimizing the online learning experience. The reviews highlighted

LA’s potential to improve student and overall satis-

faction in digital learning environments. Additionally,

(Mangaroska et al., 2021) focused on the specifics of

employing LA in online learning platforms, provid-

ing insights into its effectiveness in identifying pat-

terns (Khosravi and Cooper, 2017) and refining in-

structional design for virtual classrooms (Jovanovic

et al., 2017; Volungeviciene et al., 2019). A systematic mapping review by (Sghir et al., 2023) examined articles published between 2012 and 2022

that utilized LA for predicting students’ performance

and risk of failure or dropout. They found that LA

provides insights into the classroom by analyzing data

about learners, allowing for a deeper understanding of

the learning process and optimizing the learning envi-

ronment.

2.2 Supports for Online Learning

In the past decade, we have witnessed a growing number of both theoretical and technological solutions to support online teaching practices. (Bakharia et al., 2016;

Alowayr and Badii, 2014) formulated a conceptual

framework to assist teachers in evaluating learning ac-

tivities, learning performance, and making informed

decisions. Additionally, (Arthars et al., 2019; Dy-

ckhoff et al., 2012; Sergis and Sampson, 2016) have

developed dashboards with data indicators to support

teachers in their instructional roles. Moreover, (Vol-

ungeviciene et al., 2019) have designed a professional

monitoring tool for teachers to understand students’

different learning habits, recognize their behavior, as-

sess their thinking capacities and engagement, and de-

sign their curriculum.

Thus far, while witnessing prior studies that pre-

dominantly focused on designing monitoring and

evaluation tools for teachers, we also support the

claim of (Wong, 2023) and acknowledge the neces-

sity for customized technological solutions to answer

the unique needs of students. Therefore, our research

is motivated by the imperative to address the absence

of direct support that enhances students’ learning experiences. Our goal is not to imitate the existing supports for teachers and recreate new ones for students,

but to take a closer look at how we can provide them

with reflective tools, enabling them to gain insights

on their own behaviors, then adapt their learning pace

and strategy throughout their learning activities. The reflective tools become the primary and direct support for students, who then no longer rely solely on feedback from their teachers, which mostly arrives at the end of a learning session. Our proposal places a strong focus on an

innovative approach to design and implement reflec-

tive tools with data indicators on student performance

in order to foster student self-regulation and empow-

erment in MOLE.

2.3 Students and Reflective Tools

Research within MOLE has identified core issues

related to students and the integration of reflective

tools in online learning. (Ndukwe and Daniel, 2020)

conducted a study exploring the expectations of stu-

dents regarding LA tools in online courses. Their

findings indicated that students desired more user

progress feedback and a greater emphasis on real-

time progress tracking. These insights shed light

on specific areas for improvement in LA tools, sug-

gesting a need for enhancements in features related

to user progress learning experiences (Fatma Gizem

Karaoglan Yilmaz, 2020; Hegde et al., 2022; Fatma

Gizem Karaoglan Yilmaz, 2022; Karaoglan Yil-

maz, 2022) and continuous monitoring of academic

progress (QAZDAR et al., 2022). (Silvola et al.,

2021) examined the expectations of educators and on-

line learners concerning LA dashboards, emphasizing

the need for user progress insights in virtual class-

rooms.

In the context of online learning, the existing body

of literature unveils the potential of LA to provide

valuable understanding of student engagement and

performance. It emphasizes the need for tailored sup-

port and technological solutions in online education.

Furthermore, the research mentioned core issues re-

lated to students and integrating reflective tools in vir-

tual classrooms. Despite all that, a significant gap

persists in developing reflective tools that empower

students within online learning. Not to mention that

most existing supports are often designed to assess

the final outcomes of learning activities. Accordingly,

the data indicators provided are not exactly exploited

by the students as reflective tools during their learn-

ing process, but are mainly used at the end as feed-

back or report on their final academic outcomes. Our

work seeks to contribute to filling this gap by intro-

ducing a unique reflective tool designed to address re-

search challenges that cover two aspects: (i) the pre-

diction of student performance and (ii) the elabora-

tion of SRT oriented data indicators to enhance stu-

dent performance.


3 STUDENT PERFORMANCE

This section provides an overview of our current work

on understanding and enhancing SP from LA per-

spectives. The illustration in Figure 1 unfolds the

comprehensive research methodology adopted at the

CADT. The key attributes influencing learning pat-

terns are identified. These attributes seamlessly feed

into the SP Prediction Model, employing data mining

approaches. The predictions from the SP model drive

the development of SRT, which includes Performance

Evaluation, Progress Tracking, Recommendation En-

gine, and Personal Indicators. The ultimate goal is

to translate SRT utilization into tangible academic

outcomes, fostering self-awareness, self-regulation,

self-evaluation, and self-motivation for students at

CADT. The illustration encapsulates the intercon-

nected stages of data-driven predictions and the de-

velopment of tools, emphasizing the student-centric

approach adopted for enhancing academic success.

Figure 1: Unveiling the Iterative Journey: From Data Min-

ing to Self-Reflective Empowerment.

3.1 Current Work and Approach

Our study on SP involves a comprehensive analy-

sis of data gathered from students engaged in on-

line courses. Leveraging data mining techniques, we

explore existing approaches to SP analysis, seeking

to identify patterns, trends, and factors influencing

students’ learning outcomes (la Red Martínez and Gómez, 2014; Brahim, 2022). By understanding the

intricacies of SP, we can tailor our SRT to fulfill the

unique needs and challenges of online learning (Jag-

gars and Xu, 2016). Our approach strongly empha-

sizes empowering students to take an active role in

monitoring their progress and regulating their learning pace. Compared to traditional LA tools, which

primarily focus on providing retrospective insights

for educators, our framework shifts the paradigm by

directly involving students in analyzing their perfor-

mance data. This student-centric approach is pivotal

in fostering a sense of ownership and autonomy, con-

tributing to improved engagement and academic suc-

cess.

3.2 Necessity of a Reflective Tool

As our analysis progresses, it becomes evident that

SP analysis lacks a reflective component dedicated to

students. Reflective tools are essential for students

to learn and to improve learning (McKenna et al.,

2019). Yet these tools are not systematically included

in the basic set of tools for educational settings. As also pointed out by (Volungeviciene et al., 2019), reflective

tools often offer students the means to gain deep in-

sights into their learning patterns, preferences, and ar-

eas that may require additional attention. The absence

of those tools is particularly pronounced in online

learning, where students may face challenges related

to self-motivation (Ainley and Patrick, 2006). By in-

tegrating a reflective tool into the learning environ-

ment, we aim to incite students to actively shape their

educational experiences (Perrotta and Bohan, 2020),

identify areas of improvement (Talay-Ongan, 2003),

and optimize their learning strategies (Majeed et al.,

2021).

In conclusion, our research work on SP in the

context of LA addresses the current limitations in

SP analysis, especially in MOLE. By leveraging data

mining techniques and emphasizing a student-centric

approach, we aim to develop an SRT that enhances

SP analysis and encourages students to become active

participants in their learning journey. The following

sections detail the experimental setup, data analysis,

and the implementation of our SRT.

4 STUDENT PERFORMANCE ANALYSIS

4.1 Attributes and Learning Patterns

We comprehensively analyzed existing literature to

identify the attributes for predicting student perfor-

mance. To be accurate and objective, we consid-

ered the frequency of attribute appearance in the lit-

erature, their relevance, practicality, and importance

in our study, and the data available in the LMS. A

meta-study by (Felix et al., 2018) reviewed 42 pa-

pers, and (Namoun and Alshanqiti, 2020) examined

62 papers that used data mining techniques to predict

student outcomes, which mainly used attributes such

as assessment data/grade, interaction logs, quizzes

data, assignment data, access logs, resources logs, and

tasks data. Another study by (Felix et al., 2019) uti-

lized a dataset of 1,307 students’ activity logs in a

course, including variables related to quizzes submit-

ted, activities, time spent on the platform, and grades,

to build a predictive model of student outcomes.


In the same context as the previous study, (Hi-

rokawa, 2018) collected information from a Japanese

institution and used machine learning methods to

forecast academic achievement. The results showed that previous academic grades were essential for predicting

academic performance. Furthermore, (Gaftandzhieva

et al., 2022) used a machine learning algorithm to pre-

dict students’ final grades in an Object-Oriented Pro-

gramming course using data from Moodle LMS activ-

ities such as exam results, and online activities. Other

studies have focused on predicting various aspects of

student outcomes, such as the likelihood of dropout

(Quinn and Gray, 2020), the likelihood of success in

a course (Arizmendi et al., 2023) or predicting stu-

dent grades using both academic and non-academic

factors (Yağcı, 2022). In the meantime, some studies have also explored specific contexts, such as analyzing interaction logs (Brahim, 2022) and assessing grades and online activity data (Alhassan et al., 2020).

As a result of all these studies, we have selected

specific attributes and learning patterns that corre-

late with positive or negative SP outcomes at CADT.

By identifying these correlations, we have collected a dataset from CADT’s Moodle LMS and from Google Sheets, as shown in Table 1, covering over 160 students from three classes and eight separate courses including Linear Algebra, Discrete Mathematics, Probability and Statistics, C Programming Language, Visual Art, Soft Skills and Information Technology Essentials. This dataset includes two semesters and represents two program levels. It also incorporates Hypothesis Video Player (HVP) scores, which measure student engagement in interactive video activities. Below, we describe the attributes in our dataset that provide information on student engagement and performance.

1. attendance - This attribute represents the number

of modules in all courses that a student has com-

pleted.

2. number of interaction log - This attribute repre-

sents the number of interactions a student has had

with all courses.

3. total quiz submitted - This attribute represents

the number of quizzes a student has submitted in

all courses.

4. total assignment submitted - This attribute rep-

resents the number of assignments a student has

submitted in all courses.

5. total tasks submitted - This attribute represents

the number of tasks a student has submitted in all

courses.

6. outcome score - The outcome score is a numeric

measure of a student’s academic performance af-

ter completing a first-year program. It is typically

calculated by taking the weighted average of the

final scores of each course in the program, with

the weight assigned to each course based on vari-

ous factors such as credit hours, difficulty level, or

course importance. The outcome score is an im-

portant metric used in academic and employment

contexts to evaluate a student’s academic perfor-

mance and potential.
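As an illustration of the weighted average behind the outcome score, the following minimal sketch assumes that credit hours are the only weighting factor. The function name `outcome_score`, the course scores, and the credit values are hypothetical and are not taken from the CADT dataset.

```python
def outcome_score(final_scores, weights):
    """Weighted average of per-course final scores (0-100 scale).

    Both arguments map a course name to a number; the weights are
    assumed here to be credit hours.
    """
    if final_scores.keys() != weights.keys():
        raise ValueError("every course needs both a score and a weight")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(final_scores[c] * weights[c] for c in final_scores)
    return weighted_sum / total_weight


# Made-up final scores and credit hours for three courses.
scores = {"Linear Algebra": 80.0, "Discrete Mathematics": 70.0, "Soft Skills": 90.0}
credits = {"Linear Algebra": 3, "Discrete Mathematics": 3, "Soft Skills": 2}
print(round(outcome_score(scores, credits), 2))  # 78.75
```

Other weighting factors mentioned in the text, such as difficulty level or course importance, could be folded into the same `weights` mapping.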

4.2 Data Mining Approaches

Our research uses data mining techniques and predictive algorithms to forecast SP in the Moodle environment. To predict student outcomes and select the best classifier, we applied several classification methods to our dataset for comparison. (Felix et al., 2018) reviewed 42 studies that used data mining techniques to predict student outcomes; nine of the ten highest accuracies (95%-100%) found in the review were reached through classification methods. Similarly, another systematic review by (Namoun and Alshanqiti, 2020) examined 62 papers that used data mining and machine learning to predict student outcomes. General findings from the review show that machine learning algorithms, including Decision Trees, Neural Networks, Support Vector Machines, Naïve Bayes, and Random Forests, accurately predict student outcomes, with some studies reporting prediction accuracies of over 90%. In a specific study, (Felix et al., 2019) utilized a dataset of 1,307 students’ activity logs in a course, including variables related to student interactions in forums, chats, quizzes, activities, time spent on the platform, and grades. The study built a predictive model of student outcomes using Naïve Bayes, Decision Trees, Multilayer Perceptron, and Regression algorithms, with the Naïve Bayes model performing best with an accuracy of 87%. In the same context as the previous study, (Gaftandzhieva et al., 2022) used machine learning algorithms to predict students’ final grades in an Object-Oriented Programming course using data from Moodle LMS activities and online lectures. They found that the Random Forest algorithm had the highest prediction accuracy of 78%.

Thus, in our research, we have compared five classifiers (Decision Tree, Random Forest, Bayesian Classification (Naïve Bayes), Support Vector Machines, and Neural Network) on our dataset. Our comparison aimed to evaluate the performance and accuracy of these classifiers in predicting student outcomes based on our specific dataset. In this way, we have identified the most effective classifier for our research context by applying these classification algorithms to the collected data. Moreover, to fit our classification approach, a grading system was used to translate the outcome score, ranging from 0 to 100, into grades A to F.
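The translation from a numeric outcome score to a letter grade can be sketched as a simple threshold lookup. The cut-offs below are hypothetical, since the paper does not publish its grading scale, and intermediate grades such as B+ (which appear in Table 1) are left out for brevity.

```python
from bisect import bisect_right

# Hypothetical cut-offs: lower bounds of grades E, D, C, B and A.
CUTOFFS = [50, 60, 70, 80, 90]
GRADES = ["F", "E", "D", "C", "B", "A"]


def to_grade(score):
    """Map an outcome score in [0, 100] to a letter grade."""
    if not 0 <= score <= 100:
        raise ValueError("score must be in [0, 100]")
    # bisect_right counts how many lower bounds the score has reached.
    return GRADES[bisect_right(CUTOFFS, score)]


for s in (0, 49.9, 50, 89.9, 100):
    print(s, to_grade(s))
```

Turning the continuous score into a small set of classes in this way is what allows classifiers, rather than regressors, to be used for the prediction.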

This section has presented the foundation of our

SP analysis at CADT. The experimental setup, en-

compassing a diverse dataset from CADT, as shown

in Table 1, and an array of data mining techniques,

positions us to uncover valuable insights into the dy-

namics of student learning in online environments.

The subsequent section will introduce our SRT de-

sign with the SP prediction technique to create data

indicators for bridging the gap between analysis and

actionable student insights.

5 SRT AND DATA INDICATORS

In this section, we introduce the design and functionality of our SRT, emphasizing the incorporation of data indicators to provide students with a comprehensive view

of their academic progress. Our tool empowers stu-

dents at CADT by offering group-level overviews and

user progress insights, fostering a student-centric ap-

proach to LA.

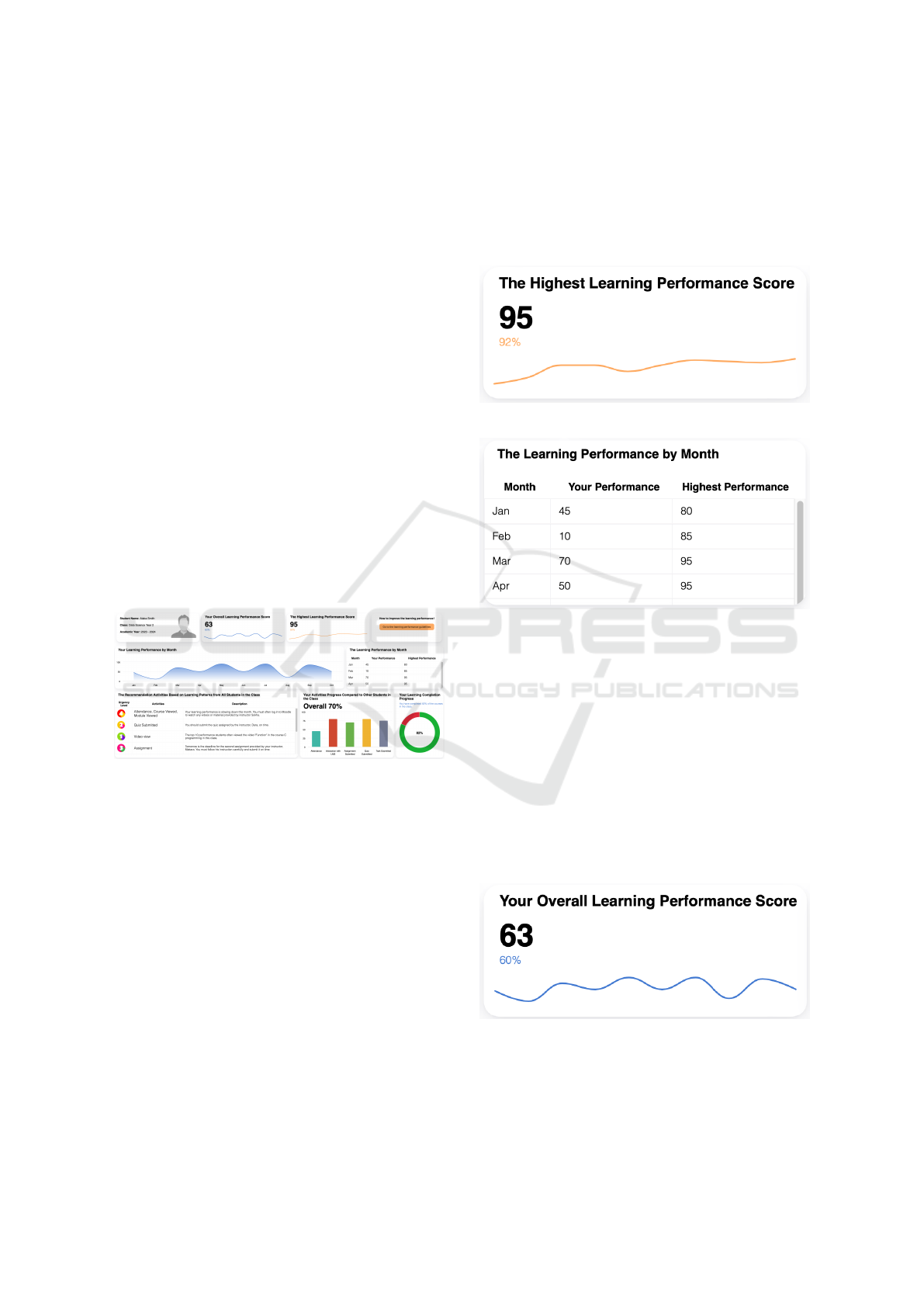

Figure 2: Dashboard of SRT and SP Data indicators.

5.1 Self-Reflective Tool Design

The dashboard in Figure 2 provides an intuitive inter-

face, offering students a visual representation of their

learning process in real time. Our design goal is to offer a friendly user experience, ensuring accessibility for students with varying levels of technological proficiency. Indeed, we would like to make sure that students spend less time understanding the dashboard and can start exploiting the SRT-oriented data indicators right away to enhance their SP.

5.1.1 Group-Level Overview

At the group level, our tool aggregates data to

present a comprehensive overview of class performance trends. Visualizations such as the distribution of the highest learning performance, as shown in Figure 3 and Figure 4, and engagement metrics allow students to gauge their standing relative to their peers (Figure 6), alongside learning guidelines for improving their learning performance. This group-level insight promotes a

sense of healthy competition, encouraging students to

set ambitious but achievable goals in metacognition.

Figure 3: The highest learning performance in the class.

Figure 4: The comparison of learning performance in the

class.

5.1.2 User Progress Indicators

The heart of our SRT lies in its ability to provide user

progress indicators for individual students, as shown

in Figure 5. These indicators are derived from a

nuanced analysis of each student’s learning patterns,

considering attendance, number of interactions with the LMS, assignment, quiz, and task scores, and participation in collaborative activities. By customizing feedback for each student’s progress according to their SP

score, our tool facilitates targeted interventions and

encourages proactive self-evaluation.

Figure 5: The overall learning performance.

Table 1: The CADT dataset.

| Attendance | Interaction log | Quiz submitted | Assignment submitted | Tasks submitted | Grade |
|---|---|---|---|---|---|
| 0.279412 | 0.265866 | 0.000000 | 0.166667 | 0.304348 | F |
| 0.382353 | 0.798456 | 0.333333 | 0.333333 | 0.521739 | A |
| 0.397059 | 0.421098 | 0.333333 | 0.309524 | 0.478261 | B+ |
| 0.397059 | 0.482847 | 0.333333 | 0.309524 | 0.478261 | A |
| 0.161765 | 0.325043 | 0.000000 | 0.214286 | 0.391304 | B |

Our learning performance dashboards provide individual learning performance scores (Figure 5) and highlight the highest learning performance scores within the class (Figure 3). Additionally, they present a percentage comparison to the maximum score attainable. For instance, if the maximum score is 110, a student with a learning performance score of 63 would be at approximately 57%, while the highest performance score in the class, say 95, would be around 86%. Our

dashboard’s individual learning performance scores,

class averages, and percentage comparisons are com-

prehensive metrics to gauge academic achievements.

These figures provide a clear overview of where a

student stands compared to peers and the maximum

achievable score. This comparative aspect fosters a

sense of self-awareness by allowing students to eval-

uate their performance relative to the class’s highest

achiever and the overall class average. The visual

representation of these scores not only offers transparency but also acts as a motivational tool. Knowing one’s standing in the class can catalyze self-regulation, prompting students to set personal goals

and enhance their learning strategies.
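The percentage comparisons described in this subsection amount to dividing a score by the maximum attainable score. The sketch below shows one way to compute the three dashboard figures (individual, class top, class average); the function names and all numbers are made up for illustration and do not come from the SRT implementation.

```python
def percent_of_max(score, max_score):
    """Express a score as a percentage of the maximum attainable score."""
    if max_score <= 0:
        raise ValueError("max_score must be positive")
    return 100.0 * score / max_score


def dashboard_summary(student_score, class_scores, max_score):
    """Collect the three percentages a student would see side by side."""
    class_average = sum(class_scores) / len(class_scores)
    return {
        "student_pct": round(percent_of_max(student_score, max_score), 1),
        "top_pct": round(percent_of_max(max(class_scores), max_score), 1),
        "class_avg_pct": round(percent_of_max(class_average, max_score), 1),
    }


# Made-up class of four students with a maximum attainable score of 120.
summary = dashboard_summary(90, [90, 108, 60, 78], 120)
print(summary)
```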

5.2 Dashboard Features

Our tool incorporates various features to enhance the

student experience:

5.2.1 Learning Patterns and Progress Tracking

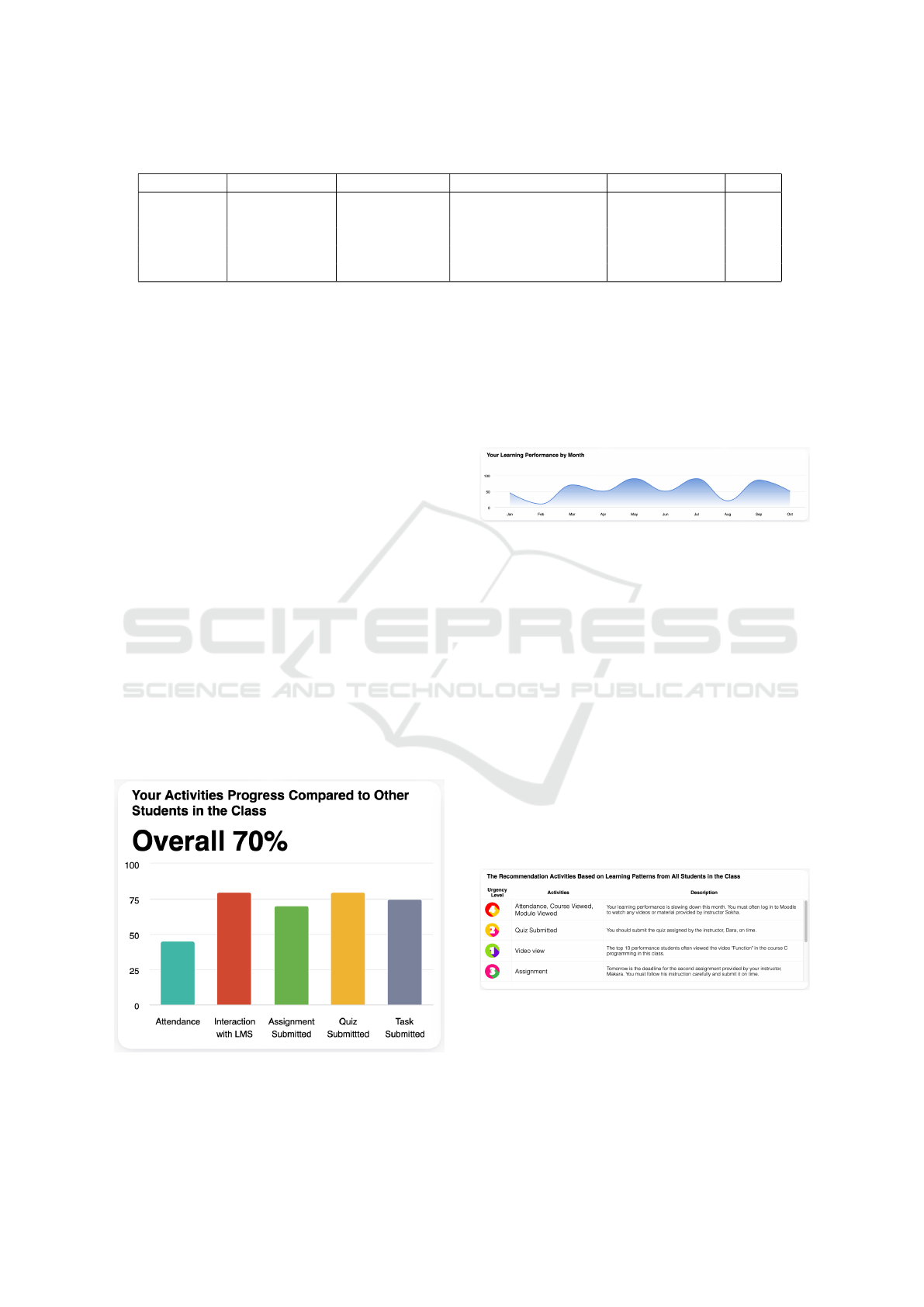

Figure 6: The learning activities progress.

The tool visualizes individual learning patterns, allowing students to identify their strengths and areas for improvement (Figure 6). Insights into preferred study times, resource utilization, and engagement peaks empower students to optimize their study habits. Progress tracking is dynamic, as shown in Figure 7, providing students with real-time

updates on their academic performance. This feature

aids in goal setting and time management, fostering a

sense of accountability and self-motivation.

Figure 7: The learning performance progress by month.

5.2.2 Recommendation Engine

Our SRT includes a recommendation engine, as shown in Figure 8, to assist students in maintaining

and achieving positive performance. Based on histor-

ical data and learning patterns, this engine provides

user progress suggestions for resources, study mate-

rials, and time management strategies to enhance the

learning experience, as well as to gain self-awareness

and self-regulation. Additionally, our recommendation engine assigns urgency levels ranging from 1 to 5, each with a highlight color, alerting students to take immediate action based on predictions generated by our

performance algorithm. The tool suggests individual-

ized actions by predicting SP, learning from their pat-

terns, fostering proactive engagement, and addressing

potential challenges before they escalate.
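A minimal sketch of how urgency levels could be derived from predicted performance is given below. The paper specifies the 1 to 5 range and the use of highlight colors, but not the assignment rule, so the grade-to-urgency mapping, the color names, and the function `build_recommendations` are all assumptions made for illustration.

```python
# Hypothetical mapping from a predicted grade to an urgency level (1-5)
# and from an urgency level to a highlight color.
URGENCY_BY_GRADE = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5, "F": 5}
COLOR_BY_URGENCY = {1: "green", 2: "yellowgreen", 3: "yellow", 4: "orange", 5: "red"}


def build_recommendations(predicted_grades):
    """Turn {course: predicted grade} into entries sorted most urgent first."""
    items = []
    for course, grade in predicted_grades.items():
        urgency = URGENCY_BY_GRADE[grade]
        items.append({
            "course": course,
            "predicted_grade": grade,
            "urgency": urgency,
            "color": COLOR_BY_URGENCY[urgency],
        })
    # Highest urgency first; ties are broken alphabetically by course name.
    return sorted(items, key=lambda item: (-item["urgency"], item["course"]))


predicted = {"Linear Algebra": "D", "Visual Art": "A", "Soft Skills": "C"}
recommendations = build_recommendations(predicted)
for item in recommendations:
    print(item["urgency"], item["color"], item["course"])
```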

Figure 8: The recommendation activities for each course in

the class.


5.3 Implementation and Integration

The SRT is seamlessly integrated into CADT’s online learning platform, ensuring a cohesive user experience for students. It operates in real time, allowing for continuous monitoring and adaptation to evolving learning patterns. Up to this point, we have implemented this tool with and for students, enabling them not only to take part in the design process, but also to naturally adopt the tool and use it for reflective analysis of their learning activities.

With the integration of group-level overviews and user progress insights, we are continuing our effort to make the tool a valuable resource for students. We also seek to improve our approach (both SP and SRT) and the way students use it to shape their academic experiences. To that end, we conducted a study focusing on how the SRT is perceived by students and on its impact on their overall SP. The following section presents the results of this study.

6 STUDY RESULTS & FINDINGS

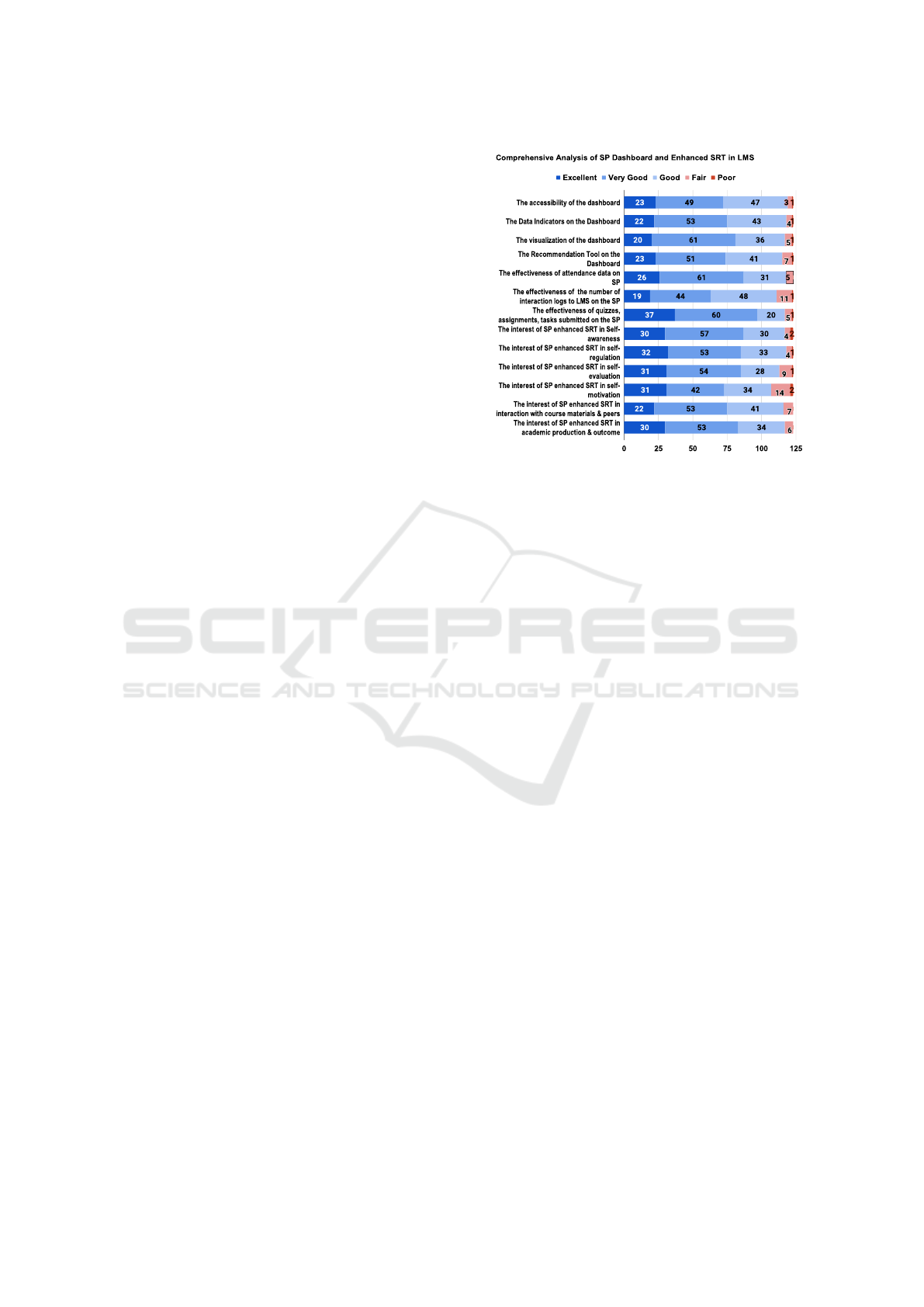

6.1 Data Analysis Protocol

The study employed a data analysis protocol to extract insights from the responses to a survey conducted over six days in December 2023, which reached 123 participants from CADT, as shown in Figure 9. Descriptive statistics were employed to summarize survey responses, and qualitative data were analyzed thematically to identify recurring patterns and trends.
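To make the descriptive step concrete, the sketch below summarizes the answers to a single survey item. It assumes a five-point scale whose end points match the "Excellent" and "Poor" labels used in the survey; the intermediate labels, the numeric coding, and the sample answers are assumptions for illustration and are not the study's data.

```python
from collections import Counter
from statistics import mean, median

# Assumed coding: only the end points ("Poor", "Excellent") come from the paper.
SCALE = {"Poor": 1, "Fair": 2, "Good": 3, "Very Good": 4, "Excellent": 5}


def summarize(responses):
    """Return count, mean, median, and percentage share per label for one item."""
    scores = [SCALE[r] for r in responses]
    counts = Counter(responses)
    n = len(responses)
    return {
        "n": n,
        "mean": round(mean(scores), 2),
        "median": median(scores),
        "share": {label: round(100 * counts[label] / n, 1) for label in SCALE},
    }


# Illustrative answers only.
sample = (["Excellent"] * 3 + ["Very Good"] * 4 + ["Good"] * 2 + ["Fair"])
summary = summarize(sample)
print(summary)
```

Reporting the share per label alongside the mean avoids reading too much into an average of ordinal ratings.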

Figure 9 shows the overall positive feedback and student perception of the SRT; the data indicators reflect a significant enhancement in the learning experience. Students consistently expressed satisfaction with the user progress insights and tools designed to support their academic performance. The tools dedicated to self-reflection, particularly those assessing performance and tracking progress, were praised for their effectiveness in enhancing students’ metacognition. The recommendation engine, which offers user progress insights aligned with individual learning patterns, was appreciated for its motivational impact on students’ commitment to academic tasks. Regarding progress indicators, students rated the monitoring of attendance data as highly effective, viewing it as a significant factor contributing to improved understanding and academic performance. They also acknowledged the value of the number of interaction logs with the LMS, with increased interactions indicating active engagement and enriching the learning experience. Similarly, tracking quizzes, assignments, and submitted tasks was praised for its effectiveness in self-assessment, helping students stay on course with their coursework and ensuring timely submissions.

Figure 9: The survey results from SP dashboard enhanced with SRT in LMS.

6.2 Unveiling Novel Findings

A closer look at the data from our study reveals three significant findings, which we select for discussion. These findings unveil not only the relevance of SRT in SP enhancement, but also the correlation among learning components, including students’ interactions and SRT-oriented data indicators, and the impacts of SRT on both individuals and the community.

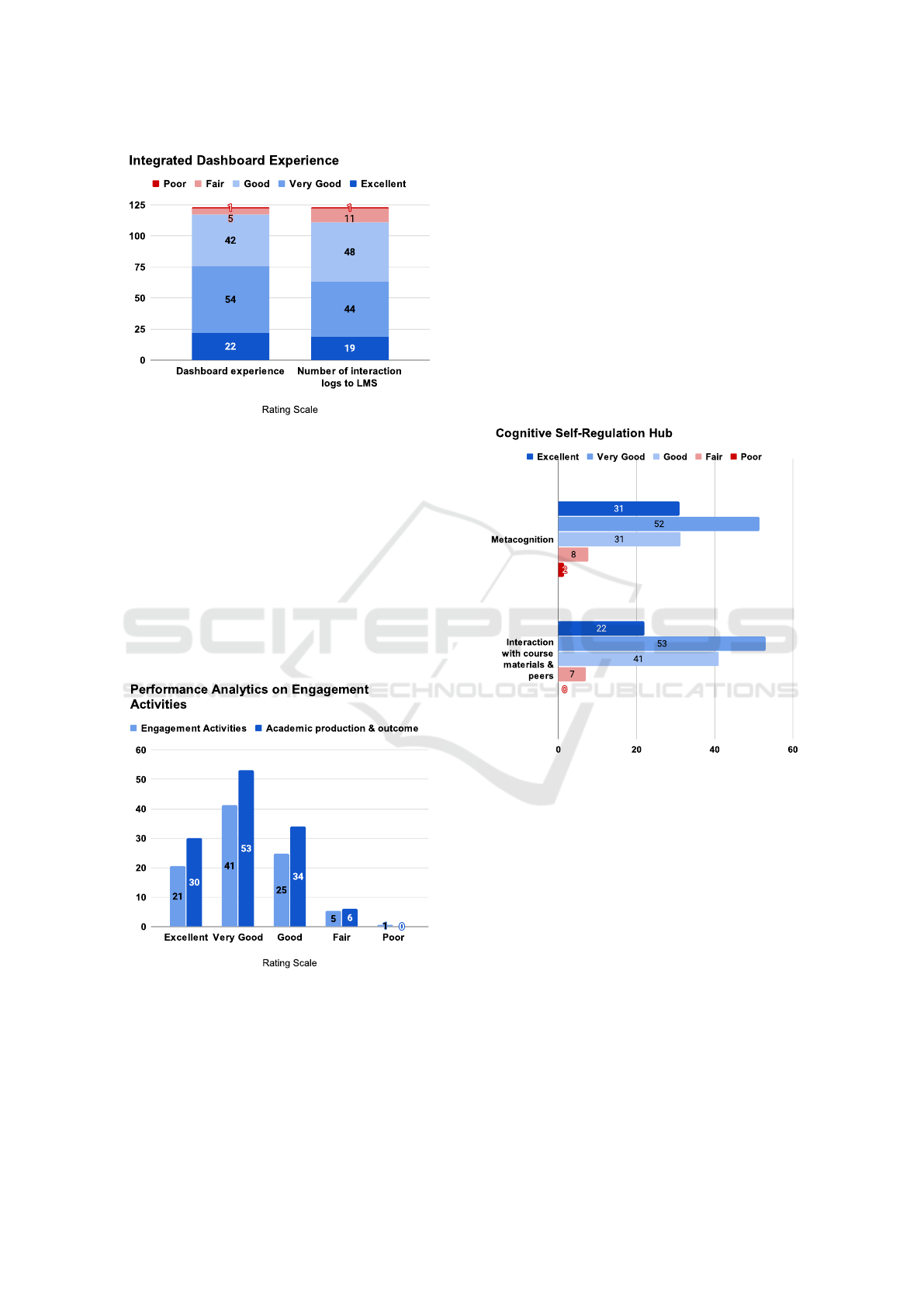

6.2.1 Integrated Dashboard Experience: A Symphony of Connectivity

As interactions are part of the learning process, a well-integrated SRT will prompt more interactions, thus leading to more active learning and better performance. The dashboard experiences of the SRT were analyzed, with participants providing ratings on a scale from Excellent to Poor. Figure 10 illustrates how these experiences encourage students to interact more with the LMS.

Figure 10 shows a notably positive SRT dashboard experience, in which accessibility, data indicators, visualization, and the recommendation tool collectively orchestrate a symphony of connectivity. Our findings indicate that when these elements are rated positively, the number of interaction logs in the LMS increases accordingly. This points to a compelling correlation, suggesting that a well-designed and informative dashboard not only enhances individual components but also creates a ripple effect, fostering increased engagement and interaction within the learning environment. This correlation reinforces the essential role of a cohesive dashboard with self-reflective data indicators in shaping and amplifying student interaction.

CSEDU 2024 - 16th International Conference on Computer Supported Education

Figure 10: The advantages of dashboard design encourage students to interact with LMS.
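The text does not state which statistic underlies this correlation. For ordinal ratings paired with interaction counts, one reasonable option is Spearman's rank correlation, sketched below with the standard library only. Spearman's coefficient is our choice for the example, not a method confirmed by the study, and the paired values are invented for illustration.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; raises ValueError if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Illustrative pairs: dashboard rating (1-5) and LMS interaction count per student.
ratings = [5, 4, 4, 3, 2, 1]
interactions = [120, 95, 100, 60, 40, 35]
rho = spearman(ratings, interactions)
print(round(rho, 3))
```

A rank-based coefficient only captures a monotonic association; it says nothing about causation or about proportionality between ratings and interactions.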

6.2.2 Performance Analytics and Engagement Mastery: A Virtuous Cycle

Figure 11: The effectiveness of engagement activities on academic production and outcome.

Our research also uncovered a virtuous cycle in performance analytics and engagement mastery. When students actively participate by attending classes, interacting more with the LMS, and diligently completing quizzes, assignments, and tasks, a cascade of positive outcomes follows, as shown in Figure 11. Academic production and overall outcomes align with these engaged behaviors. This intrinsic connection illustrates that student engagement is both a catalyst for immediate academic tasks and a predictor of broader academic success. It challenges conventional wisdom, emphasizing the dynamic relationship between consistent engagement and sustained high-level academic performance. The SRT plays a crucial role here by helping students become aware of their engagement, thus encouraging them to participate even more.

6.2.3 Cognitive Self-Regulation Hub: Empowering Holistic Growth

Figure 12: The empowering of metacognition on interaction with course materials and peers.

Viewed from a broader perspective, and particularly from that of the Cognitive Self-Regulation Hub, our findings show the relevance of SRT in cultivating metacognitive skills such as self-awareness, self-regulation, self-evaluation, and self-motivation, as shown in Figure 12. The tools that empower individual cognitive processes extend their influence, fostering increased interaction with course materials and peers. The data in Figure 12 reveal the profound impact of cognitive self-regulation both on individual introspection and as a catalyst for collaborative learning. They also demonstrate the positive impact of SRT on students’ self-assessment while they interact with others and with learning resources. This finding presents SRTs as tools for personal development and as contributing factors in creating a vibrant, interactive learning community. It marks a paradigm shift, positioning cognitive self-regulation as the foundation for a thriving and collaborative educational ecosystem.

In summary, these three key insights address part of the complex challenges in educational research, with a special focus on reflective analytics to help students enhance their academic performance. Our research efforts aim to provide a fresh perspective on the intricate dynamics that shape student experiences and outcomes. We hope they invite further exploration and a redefinition of established paradigms in the ever-evolving education landscape.

7 DATA PRIVACY

As we navigate the data privacy landscape within SRT, assessing the impact of stringent data privacy constraints becomes imperative. SRT uses granular data from detailed logs to construct meaningful data indicators. While this granularity enhances the relevance of the data indicators, it inevitably raises questions regarding user privacy. The compromise lies in striking a balance between data utility and privacy preservation. To that end, we use data anonymization and aggregation methods, so that SRT can continue to evolve and provide valuable insights without exposing individual user details. This involves implementing techniques that allow the extraction of meaningful patterns and trends without revealing sensitive information, thus respecting users’ privacy.
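The specific anonymization and aggregation techniques are not detailed in the text. The sketch below shows one common pattern under stated assumptions: identifiers are replaced with a keyed hash (HMAC-SHA256), and course-level averages are published only for groups above a minimum size. The key handling, the threshold of five, the row layout, and the function names are hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization, since whoever holds the key can re-link the records.

```python
import hashlib
import hmac
from collections import defaultdict
from statistics import mean

# Placeholder secret; in practice it would be stored outside the dataset.
SECRET_KEY = b"replace-with-a-secret-kept-outside-the-dataset"


def pseudonymize(student_id, key=SECRET_KEY):
    """Replace an identifier with a truncated keyed hash (HMAC-SHA256)."""
    digest = hmac.new(key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def aggregate(rows, min_group_size=5):
    """Mean interaction count per course, suppressing small groups.

    `rows` holds (course, student_id, interactions) tuples. Courses with fewer
    than `min_group_size` distinct students are omitted from the result.
    """
    groups = defaultdict(dict)
    for course, student_id, interactions in rows:
        groups[course][pseudonymize(student_id)] = interactions
    return {course: round(mean(members.values()), 1)
            for course, members in groups.items()
            if len(members) >= min_group_size}


# Illustrative rows only: five students in one course, two in another.
rows = [("Algorithms", f"s{i}", 10 * i) for i in range(1, 6)]
rows += [("Databases", "s1", 12), ("Databases", "s2", 30)]
summary = aggregate(rows)
print(summary)
```

Suppressing small groups limits the risk that a published average can be traced back to one or two identifiable students.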

Compliance with the General Data Protection Regulation (GDPR) is a cornerstone of our research methodology. Ethical considerations are at the forefront, with participants fully informed about the nature of their involvement, their rights, and the procedures in place for data management. Transparency is maintained through clear communication, and participants can request the deletion of their data. An ethics committee, including research team members, oversees and approves all aspects of our research design and execution.

8 CONCLUSION

The research efforts presented in this paper focus on a reflective learning analytics approach to empower students and improve their learning experiences. We have pointed out the lack of support in terms of reflective tools for students, even though reflective analytics is crucial to the learning process and has positive impacts on student self-regulation and self-evaluation. Our research was motivated by the identified gap in empowering students through LA tools, particularly in MOLE. Instead of imitating the existing supports for teachers to recreate new ones for students, we addressed the research challenge of how to provide students with reflective tools, enabling them to gain insights into their own behaviors and then adapt their learning pace and strategy throughout their learning activities. In addition, the originality of our work lies in the focus our proposal places on student performance. An experimental setup involved over 160 students from CADT participating in our study. The setup lasted over a year, during which students were invited to participate in the design process of SRT as well as in the evaluation of our proposal.

Our design approach covers two aspects: the prediction of SP and the implementation of SRT with data indicators on SP. Our goal is to provide students with personalized insights, real-time progress tracking, and reflective components, thus enabling them not only to conduct reflective analytics with specific data indicators on their academic performance, but also to interact and participate more in their learning environment. To achieve this, our research methodology involved a multifaceted approach, combining data extraction, classifier comparison, and performance evaluation. Our SP approach relies on selecting key attributes to predict student performance efficiently. As for the design of our SRT, we propose a set of data indicators based on SP computed data, featuring student attendance, participation, interaction, quizzes, assignments, and grading, among others. These SRT-oriented data indicators provide more than just feedback: they are pertinent information about SP on both individual and community scales. Moreover, they are accessible in real time, not only at the end of a learning session, making reflective analytics more prompt and suitable for different learning paces and strategies.

Our research effort also includes both quantitative and qualitative analyses of our SRT and its impact on SP. A total of 123 students participated in our study through a survey, allowing us to evaluate the correlation between student engagement with the SRT and improvements in SP. Students who actively used the tool reported statistically significant enhancements in their academic outcomes. Data from the survey highlighted the value of personalized data indicators regarding self-awareness, self-regulation, self-evaluation, and self-motivation of individual learning patterns. Moreover, qualitative insights demonstrated that the SRT contributed to students’ sense of empowerment, control over their learning journey, and proactive engagement with academic challenges.

To sum up, through this research work, we hope to contribute to the discourse on LA by emphasizing the importance of SRT designed explicitly for students in MOLE. Integrating personalized indicators in our SRT at CADT showcases its potential to empower students and actively shape their educational experiences. As we move forward, the implications of this research extend to the broader realm of online education, promoting student-centric approaches to enhance engagement, motivation, and academic success. This work serves as a foundation for future research endeavors, encouraging the continued exploration and development of tools that prioritize student empowerment and self-regulation in the evolving landscape of metacognition in online education.
