High-Fidelity Simulation Pre-Briefing with Digital Quizzes: Using INACSL Standards for Improving Effectiveness

Nazanin Sheykhmohammadi (a), Aryobarzan Atashpendar (b) and Denis Zampunieris (c)

Faculty of Science, Technology, and Medicine (FSTM), University of Luxembourg, Esch-sur-Alzette, Luxembourg

Keywords: Pre-Briefing, High-Fidelity Simulation, Medical Education, Digital Quiz.

Abstract:

High-fidelity simulations enable medical students to gain experience with typical clinical scenarios through the use of computerized manikins, though their learning outcomes and performance depend greatly on their preparation. To that end, a pre-briefing phase is typically set up to teach the students the necessary information, covering both the theory and the technical workings of the manikin. Our work explores a digital-first approach to pre-briefing, in which learners are provided quizzes through a mobile application, allowing them to identify gaps in their knowledge and reinforce their retention through repeated testing in their own time. Additionally, demonstrative videos of the manikin are offered to complement their learning. The quiz-based approach to pre-briefing was tested with a university class of medical students to prepare them for a basic life support simulation. We discuss our findings in terms of how the digital quizzes were perceived by students and evaluate our pre-briefing method against a set of best practices established by the International Nursing Association for Clinical Simulation and Learning (INACSL). Finally, recommendations to improve the quiz-based approach are outlined for future case studies.

1 INTRODUCTION

Lack of practice is a common complaint in medical education, and simulation is increasingly employed as a novel approach to address this concern. Research has shown that simulation-based education improves skill performance, knowledge, and patient outcomes (Zeng et al., 2023). High-fidelity patient simulation is an advanced teaching method that uses a computerized manikin to simulate real-life scenarios. It helps students integrate their knowledge and skills through their own clinical decisions. It has also been used to develop problem-solving and clinical reasoning abilities in educational training (Wong et al., 2023).

High-fidelity simulation involves three phases, which are referred to by different terms across sources, such as pre-briefing, simulation, and debriefing (Tong et al., 2022a). Alternatively, they may be defined as preparation, participation, and debriefing, with preparation being composed of a pre-briefing and a briefing (Tong et al., 2022b). In some contexts, the three stages are pre-briefing, clinical case scenarios, and debriefing (Duque et al., 2023).

This research focuses solely on the initial "pre-briefing" phase, which refers to "the activities PRIOR to the start of the simulation including the preparation and briefing aspects" according to the definition by the International Nursing Association for Clinical Simulation and Learning (INACSL) (McDermott et al., 2021). Students expect to develop their skills during the simulation, but the class time dedicated to the simulation can be limited. As the students need to be adequately prepared for these time-constrained simulations, the pre-briefing can equip them with the necessary knowledge to successfully perform the simulation (Tong et al., 2022b). While the debriefing phase has been widely studied and practiced, the significance of pre-briefing has recently been highlighted by studies showing that its combination with the participation and debriefing phases helps students "anticipate priorities, recognize and respond to changes in the condition, and reflect on prioritization choices" (Penn et al., 2023).

(a) https://orcid.org/0000-0002-0241-4034

(b) https://orcid.org/0000-0002-6652-4478

(c) https://orcid.org/0009-0008-8037-0656

Traditionally, preparation for simulations involved conventional methods such as lectures, textbook readings, and skills practice. More recently, alternative strategies have included web-based modules, mental rehearsal, and the development of cognitive aids or care plans. Furthermore, facilitating self-assessment through quizzes, self-reflections, or competency rubrics may allow learners to identify knowledge or skill gaps before engaging in simulations and foster self-regulated learning (Tyerman et al., 2019).


Sheykhmohammadi, N., Atashpendar, A. and Zampunieris, D.

High-Fidelity Simulation Pre-Briefing with Digital Quizzes: Using INACSL Standards for Improving Effectiveness.

DOI: 10.5220/0012682500003693

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 1, pages 412-419

ISBN: 978-989-758-697-2; ISSN: 2184-5026

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Recent studies and reviews (Silva et al., 2022; Tyerman et al., 2019) have highlighted alternative methods that have been used either alone or in combination with traditional methods. These include video-based resources for pretraining, such as healthcare professionals role-modeling patient care, informative reviews of concepts, and demonstrations of scenarios developed by expert nurses in a role-modeling capacity. Other resources include web-based guides, discussion forums, and team-building and communication exercises. In particular, the INACSL also recommends preparation activities such as "Assigned readings or audiovisual materials, Review of the patient health record/patient report, Observation of a model of a simulated case, Completion of a pretest or quiz" (McDermott et al., 2021).

Implementing a pre-briefing session through videotaping has been shown to be effective (Wheeler and Kuehn, 2023). Moreover, quizzes containing higher-order thinking questions can be an effective tool to prepare learners for simulation-based learning experiences, as they can prompt critical thinking and motivate students to study the relevant literature to find the answers. In this context, simulation experts may set a minimum score on the pre-quizzes that students must reach to be eligible to take part in the corresponding simulations (Leigh and Steuben, 2018).

The goal of practice through quizzes is to invoke a testing effect (Roediger III and Karpicke, 2006; Fernandez and Jamet, 2017; Van Gog and Sweller, 2015), which has been shown to improve the long-term retention of students compared to restudying (Eisenkraemer et al., 2013). Additionally, the indirect effects of testing can further help students with their learning: firstly, they can apply their theoretical knowledge to questions and consequently assess their current understanding without the aid of a teacher, which has also been shown to dispel the false confidence that they have already mastered the topics without practice. Secondly, they can improve their future restudying efforts (Arnold and McDermott, 2013; Izawa, 1971) by knowing which topics to focus on.

The efficacy of the testing effect also depends on several factors. Firstly, harder questions require a greater retrieval effort by the student, which in turn can strengthen the resulting testing effect (Greving and Richter, 2018; Rowland, 2014). One approach is to rely on free-form (recall) questions (Eisenkraemer et al., 2013; Greving and Richter, 2018; Van Gog and Sweller, 2015; Cranney et al., 2009), which are considered harder than multiple-choice questions because the latter rely on passive recognition, i.e., simply recognizing the correct answer as opposed to remembering and producing it from memory.

Next, testing needs to be performed regularly (Eisenkraemer et al., 2013; Cranney et al., 2009), as the student’s retention can decay over time. Lastly, students may also retain incorrect information and weaken their retention when they answer questions wrongly (Eisenkraemer et al., 2013; Cranney et al., 2009; Greving and Richter, 2018). To counteract this negative testing effect (Richland et al., 2009; Arnold and McDermott, 2013; Barber et al., 2011; Butler and Winne, 1995; Eisenkraemer et al., 2013; Cranney et al., 2009; Van Gog and Sweller, 2015; Roediger III and Karpicke, 2006; Fernandez and Jamet, 2017), it is important to provide immediate feedback to the learner, not only by informing them of the correct answer but also by providing an explanation so that they understand why their original answer was incorrect. Feedback can thus not only prevent a negative testing effect but even further reinforce the student’s learning and retention (Hattie and Timperley, 2007; Azevedo and Bernard, 1995).

The remainder of this paper is organized as follows. In Section 2, we lay out the objectives of our case study, and we describe our methodology in Section 3. Next, we present the results in Section 4 and discuss our findings alongside recommendations in Section 5. We conclude in Section 6 with the future work planned for our next case study.

2 OBJECTIVES

The literature generally lacks guidance on how to properly organize simulation pre-briefings, and there is no consistent approach to their implementation. Researchers have therefore been encouraged to focus on pre-briefing to help close these gaps (Wheeler and Kuehn, 2023).

A recent study (Sheykhmohammadi et al., 2023) formulated recommendations for improving high-fidelity simulation sessions using manikins in a university setting, based on the challenges and requirements faced by both the students and the trainers. These recommendations cover four areas:

1. Scenario design and implementation

2. Before simulation (Pre-briefing)

3. During simulation

4. After simulation (Debriefing and feedback)

The necessary steps and frameworks for developing valid and reliable scenarios were outlined for the given manikin’s software. Given these findings, our research focuses on reforming the pre-briefing by relying on purely digital means. In particular, we explore digital quizzes to prepare students for a specific simulation scenario; the quizzes are intended to test their knowledge so that they can identify gaps they need to study further, as well as to reinforce their retention through repeated quizzing.

The goal is to facilitate access to self-regulated testing by providing the quizzes through a mobile application that students can use in their own time, thus allowing them to align their testing with their personal study schedule. Additionally, the quizzes are intended to include questions both on the theory behind the upcoming simulation scenario and on technical aspects such as how to work with the manikin, while the pre-briefing also relies on recorded videos demonstrating the manikin’s functionality.

By applying our pre-briefing method to a university class of students preparing for a high-fidelity simulation, we aim to gauge both their interest and motivation in using our approach and its effect on their simulation performance.

3 METHOD

Pre-briefing activities are designed to provide a "psychologically safe learning environment" for the simulation-based experience through two key components (McDermott et al., 2021):

1. Preparation: the learners are aligned with a common mental model.

2. Briefing: essential ground rules are conveyed to the learners.

To that end, we relied on video-based resources and digital quizzes to realize our pre-briefing approach.

Firstly, we chose the mobile quiz app BEACON Q for several features that can help the students achieve an effective testing effect and thus improve both their studying efforts and their retention. Educators can schedule quizzes for specific periods, within which each student can align their testing with their personal study timing before the simulation session.

It also supports replaying quizzes, which enables the learner to practice regularly and reinforce their retention. Furthermore, BEACON Q adjusts the difficulty of questions to each user’s performance level, e.g., by changing the format of multiple-choice questions to free-form, with the latter potentially resulting in a stronger testing effect.

Finally, the application provides immediate feedback: users not only have their answers evaluated but are also given explanations that further describe the question and its possible answers, including the distractors (wrong answers), which also helps avoid a negative testing effect.

BEACON Q can thus cover the quizzing portion of the pre-briefing. In particular, the dynamic adjustment of the quizzes’ difficulty is important, given the best practice criterion "The experience and knowledge level of the simulation learner should be considered when planning the pre-briefing" (Miller et al., 2021). BEACON Q accommodates the simulation learner’s knowledge level over time as they replay quizzes, by collecting their performance data and rendering the same questions either easier or harder depending on their past attempts.
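The adaptive behavior described above can be illustrated with a minimal Python sketch. BEACON Q’s actual adaptation algorithm is not described in this paper, so the rule below is a hypothetical stand-in: after a fixed number of consecutive correct answers to the same question (the `streak_to_harden` parameter, an assumption), the question is replayed in the harder free-form (recall) format; otherwise it stays multiple-choice (recognition).

```python
from enum import Enum


class QuestionFormat(Enum):
    MULTIPLE_CHOICE = "multiple-choice"  # recognition: pick the answer among options
    FREE_FORM = "free-form"              # recall: produce the answer from memory


def choose_format(past_results: list[bool], streak_to_harden: int = 2) -> QuestionFormat:
    """Pick the format for the next replay of one question (hypothetical rule).

    past_results: this learner's earlier outcomes on the question, oldest first
    (True = answered correctly).
    """
    if streak_to_harden < 1:
        raise ValueError("streak_to_harden must be at least 1")
    recent = past_results[-streak_to_harden:]
    # Harden only after a full streak of correct answers; any recent mistake
    # (or too little history) keeps the easier recognition format.
    if len(recent) == streak_to_harden and all(recent):
        return QuestionFormat.FREE_FORM
    return QuestionFormat.MULTIPLE_CHOICE


if __name__ == "__main__":
    print(choose_format([]).value)                   # multiple-choice
    print(choose_format([False, True, True]).value)  # free-form
    print(choose_format([True, True, False]).value)  # multiple-choice
```

Basing the decision on recent outcomes rather than lifetime accuracy lets a question fall back to the easier format as soon as the learner slips, which is one simple way to keep the difficulty matched to the learner’s current knowledge level.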

To test our method of pre-briefing, we conducted a simulation session with third-year medical students at a university, based on a basic life support (BLS) scenario and using a high-fidelity manikin manufactured by MedVision (see footnote 1).

For the preparation phase, an initial quiz with 13 questions was provided through the BEACON Q app; the questions were prepared based on research (Spinelli et al., 2021) and the advice of a professional anesthesiologist who also acted as the simulation teacher. The questions covered both theoretical and guideline-based knowledge about the BLS scenario. To introduce the students to the BEACON Q app, an explanatory session was organized two weeks before the planned simulation, after which they gained access to the quiz. Each student was free to play the quiz on a day and at a time of their choosing until the simulation session.

Next, for the briefing phase, we relied on a second digital quiz, prepared by the simulation teacher and a high-fidelity simulation expert. The quiz contained 11 questions covering the manikin’s functionality, and several demonstrative videos were also provided to the students both through the second quiz and through their learning management system, Moodle. The second quiz became available slightly later, specifically six days before the simulation session. Playing both quizzes was a prerequisite for participating in the simulation session.

Following the pre-briefing and the simulation, we analyzed the quiz results, questionnaire responses and simulation performance of the students. Based on our findings and in accordance with the INACSL standards (McDermott et al., 2021), we sought to formulate recommendations for optimizing the incorporation of video and quiz-based training to enhance our pre-briefing process.

1 Manikin "Leonardo" by MedVision Group: https://www.medvisiongroup.com/leonardo.html


4 RESULTS

We present the results of the students’ performance on the digital quizzes, followed by their questionnaire responses.

4.1 Quizzes

Two quizzes were playable through the mobile quiz app BEACON Q. The first quiz, meant for the preparation step of the pre-briefing, was available from 26 October to 2 November and contained 13 questions. Each student could start their quiz attempt at any time during that period, but was limited to 30 minutes to finish it once started. The average success rate for this quiz was 85%, i.e., 85% of all the students’ answers to the 13 unique questions were correct. However, only 15% of the answers were followed by feedback reviews, i.e., instances where a student tapped on a given choice, either the correct one or a distractor, after submitting their answer in order to read the additional explanations provided by their simulation teacher.
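To make the two reported metrics concrete, the Python sketch below computes them from a quiz log. The record layout (`AnswerRecord` and its fields) is a hypothetical assumption rather than BEACON Q’s export format, and the toy log uses made-up values. The success rate is the share of all submitted answers that were correct; the feedback-review rate is the share of submitted answers after which the student opened at least one explanation.

```python
from dataclasses import dataclass


@dataclass
class AnswerRecord:
    """One submitted answer in a hypothetical quiz log (field names are assumptions)."""
    student_id: str
    question_id: int
    correct: bool
    reviewed_feedback: bool  # True if a choice was tapped afterwards to read its explanation


def _share(flags: list[bool]) -> float:
    """Fraction of True values; an empty log has no defined rate."""
    if not flags:
        raise ValueError("empty quiz log")
    return sum(flags) / len(flags)


def success_rate(log: list[AnswerRecord]) -> float:
    """Share of all submitted answers that were correct."""
    return _share([r.correct for r in log])


def feedback_review_rate(log: list[AnswerRecord]) -> float:
    """Share of submitted answers followed by at least one feedback review."""
    return _share([r.reviewed_feedback for r in log])


# Toy log with made-up values, only to show the computation.
log = [
    AnswerRecord("s1", 1, correct=True, reviewed_feedback=False),
    AnswerRecord("s1", 2, correct=False, reviewed_feedback=True),
    AnswerRecord("s2", 1, correct=True, reviewed_feedback=False),
    AnswerRecord("s2", 2, correct=True, reviewed_feedback=False),
]
print(f"success rate: {success_rate(log):.0%}")              # 75%
print(f"feedback reviews: {feedback_review_rate(log):.0%}")  # 25%
```

Because every replay adds records, both rates are computed over answers rather than over students, matching the per-answer definition used above.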

Next, the second quiz, meant for the briefing step of the pre-briefing, was available from 2 November to 6 November and contained 11 questions, expiring shortly before the simulation session. The students were granted more time to finish the second quiz once started, specifically 50 minutes, because some of its questions required them to watch videos before answering. As with the first quiz, the success rate was high, at 83%, though in this case there were no feedback reviews whatsoever.
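The availability windows and per-attempt time limits described above amount to two simple checks, sketched below in Python. The function names are hypothetical, the year (2023) and the exact opening and closing times of day are assumptions since only the dates are reported, and the sketch does not model whether an attempt still in progress is cut off when the window closes.

```python
from datetime import datetime, timedelta


def may_start(now: datetime, opens: datetime, closes: datetime) -> bool:
    """A new attempt can be started only while the quiz is available."""
    return opens <= now < closes


def submitted_in_time(started: datetime, submitted: datetime, limit: timedelta) -> bool:
    """Once started, an attempt must be submitted within its time limit."""
    return submitted - started <= limit


# Reported limits: 30 minutes for the preparation quiz, 50 for the briefing quiz.
PREPARATION_LIMIT = timedelta(minutes=30)
BRIEFING_LIMIT = timedelta(minutes=50)

# Assumed window for the preparation quiz (year and times of day are illustrative).
prep_opens = datetime(2023, 10, 26, 0, 0)
prep_closes = datetime(2023, 11, 2, 23, 59)

start = datetime(2023, 10, 27, 14, 0)
print(may_start(start, prep_opens, prep_closes))                                   # True
print(submitted_in_time(start, start + timedelta(minutes=35), PREPARATION_LIMIT))  # False
print(submitted_in_time(start, start + timedelta(minutes=35), BRIEFING_LIMIT))     # True
```

The same 35-minute attempt fails the preparation quiz’s limit but fits the briefing quiz’s longer limit, which is the reason given above for granting extra time when questions require watching videos.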

As a final note, the days on which the students played each quiz were spread out, especially for the second quiz. However, the first quiz was played most often on the day it became available or the day after, and no students played it over the weekend. In contrast, the second quiz was also played over the weekend, most likely because the simulation took place on the following Monday.

4.2 Questionnaire

After the simulation session, students were invited to fill out a questionnaire, shown in Figure 1, capturing their impressions of the BEACON Q quiz app and how prepared they felt for the simulation. The questions were designed primarily on the basis of expert insights and past research (Sharoff, 2015).

The aggregated answers to the choice-based questions 1 to 9 are given in Table 1, which first indicates that 89.66% of the 29 students had used the BEACON Q app. Next, 86.21% of the students had played the preparation quiz, but only 55.17% found it helpful in preparing them for the simulation. Furthermore, the briefing quiz, which included instructional videos in its questions, was completed by 82.76% of the students, though only 31.03% found it useful. In the same context, data from their learning management system, Moodle, showed that only 7 students had viewed the instructional videos made available outside of the mobile quiz app.
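As a consistency check on these figures, the Python sketch below converts whole-number counts into shares of the 29 respondents. The counts are back-calculated from the reported percentages and are not themselves reported in the study; they simply show that, for questions 1 to 5, each percentage corresponds to a whole number of students out of 29 (e.g., 26/29 = 89.66%).

```python
N_RESPONDENTS = 29  # number of students reported above

# Back-calculated counts (an assumption): only the percentages are reported.
inferred_counts = {
    "Q1 used the BEACON Q app": 26,
    "Q2 completed the preparation quiz": 25,
    "Q3 found the preparation quiz (very or somewhat) helpful": 16,
    "Q4 completed the briefing quiz": 24,
    "Q5 found the briefing (very or somewhat) helpful": 9,
}


def as_percentage(count: int, total: int = N_RESPONDENTS) -> float:
    """Share of respondents in percent, rounded to two decimals as in Table 1."""
    return round(100 * count / total, 2)


for label, count in inferred_counts.items():
    print(f"{label}: {count}/{N_RESPONDENTS} = {as_percentage(count):.2f}%")
```

Since the helpfulness shares for Q3 and Q5 also divide by 29 rather than by the number of students who completed each quiz, they appear to be shares of all respondents; this reading is an inference from the arithmetic, not a statement from the study.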

As for the students’ impression of whether our quiz-based pre-briefing approach improved how they notice, interpret and respond during the simulation session, 50.00% found it helpful. Regarding what they took away from both the pre-briefing and the simulation session itself, 20.69% thought they were better prepared for the future, while 15.52% felt more confident in themselves. Only 6.90% thought their overall skills had improved, while 29.31% believed that they had gained the opportunity to reflect on their actions. Additionally, 25.86% of the participants thought they had learned lessons both from their own errors and from their colleagues during the simulation. Lastly, most students reported low levels of anxiety about the simulation, with only 6.90% reporting high anxiety (Table 1, question 9).

Figure 1: Post-simulation questionnaire.

Regarding the last, free-form question of the questionnaire, 18 students provided additional comments, 6 of whom stated that the quizzes were not helpful, with comments such as "I do not think the quizzes were helpful in preparing us for the simulation". Their main concerns were about the content of the quizzes, though 1 student strongly opposed the mandatory nature of the pre-briefing quizzes.

Next, 5 students suggested that the quizzes could be beneficial if the quality of the content, pictures and videos were improved. For instance, one student mentioned "Clearer instructions, the photos taken for the quiz on the mobile app were not taken optimally". On the other hand, 2 students found the quizzes helpful, with one noting "The pre-briefing quiz and the videos were very helpful for today; I felt prepared before the simulation [...]".

Finally, 5 students mentioned that they would like to have a debriefing session, although this is beyond the scope of this research and questionnaire. For example, one student commented "Immediate feedback will be great, so we can know what we did wrong.", i.e., they wanted their simulation teacher or an expert to be present so that they could give the students individual feedback immediately after the simulation.

Table 1: Post-simulation questionnaire results for the choice-based questions.

| Question Title | Results |
|---|---|
| 1. Mobile Quiz App Usage | 89.66% |
| 2. Completion of Preparation Quiz | 86.21% |
| 3. Helpfulness of Preparation Quiz | Very helpful and Somewhat helpful: 55.17%; Slightly helpful and Not helpful: 44.83% |
| 4. Completion of Briefing with Instructional Videos | 82.76% |
| 5. Helpfulness of Briefing for Simulation Preparation | Very helpful and Somewhat helpful: 31.03%; Slightly helpful and Not helpful: 68.97% |
| 6. Overall Effectiveness of Quiz-Based Pre-Briefing | 50.00% |
| 7. Feelings After Simulation (Reflecting) | More confident: 15.52%; Better prepared: 20.69%; Overall skills improved: 6.90%; Reflected on own actions: 29.31%; Learned from errors and colleagues: 25.86% |
| 8. If did not review preparatory material | Yes: 0.00%; No: 50.00%; Not sure: 50.00% |
| 9. Anxiety Level for Simulation Experience (No Review) | Low: 100.00%; Medium: 0.00%; High: 0.00% |
| 9. Anxiety Level for Simulation Experience (Review) | Low: 55.17%; Medium: 37.93%; High: 6.90% |

5 DISCUSSION

Following our initial case study, we discuss recommendations for organizing a pre-briefing phase based on our own quiz-based approach. Afterwards, we evaluate our method against the INACSL standards (McDermott et al., 2021).

5.1 Recommendations

For our quiz-based method of pre-briefing, several key areas could benefit from enhancements.

Primarily, the two quizzes offered as part of the pre-briefing appear not to have covered enough content: the students’ success rate on them was very high (above 80%), and in the questionnaires, the students not only noted that the quizzes were not particularly helpful in preparing them for the simulation, but some of the additional comments explicitly stated that the quizzes did not cover enough and were too simple. This point can easily be addressed, as the students did not necessarily take issue with the mobile quiz app itself, but rather with the content of the quizzes. Hence, the pre-briefing quizzes should be extended with additional questions that go into more depth on both the theory of the simulation scenario and the technical know-how required for interacting with the manikin.

Furthermore, greater care should be taken when preparing the demonstrative pictures and videos of the manikin to be included both in the quizzes and on the learning management system, e.g., by paying attention to the camera angle and lighting.

Next, it is important that the students also master essential skills such as team-based communication (Livne, 2019), the lack of which we noted while observing the students trying to work together to operate the manikin. For example, these team-based skills would include how to efficiently distribute the tasks among the group members, as well as assigning a leader at the start of the simulation to ensure that someone manages the team as a whole and keeps track of the required steps and guidelines.

Lastly, certain students opposed the fact that the digital quizzes were prerequisites for participating in the simulation session. We likewise believe this is not the right approach, as the quizzes should be offered as an additional learning aid, i.e., the students themselves should be free to plan their preparation for the simulation based on all the resources we provide them during the pre-briefing.

The reason for a less restrictive approach is that intrinsic motivation (Cranney et al., 2009; Rodrigues et al., 2021) to test oneself can reinforce the testing effect, as opposed to extrinsic motivation, e.g., when the teacher forces their students to test themselves through quizzes. The purpose of testing is to improve the student’s long-term retention and to enhance their future restudying efforts, not to actually teach them the material. Hence, it is important that the students still study in addition to testing themselves.

As a result, we propose that the pre-briefing should not be based mainly on quizzes, but rather on a set of resources, such as written guides and demonstrative videos, which the students would first study before moving on to the quizzes to practice their knowledge. This would form a loop in which the students go back and forth between studying their material and testing what they have retained through the quizzes until they feel confident enough in being prepared for the simulation.

5.2 INACSL Standards

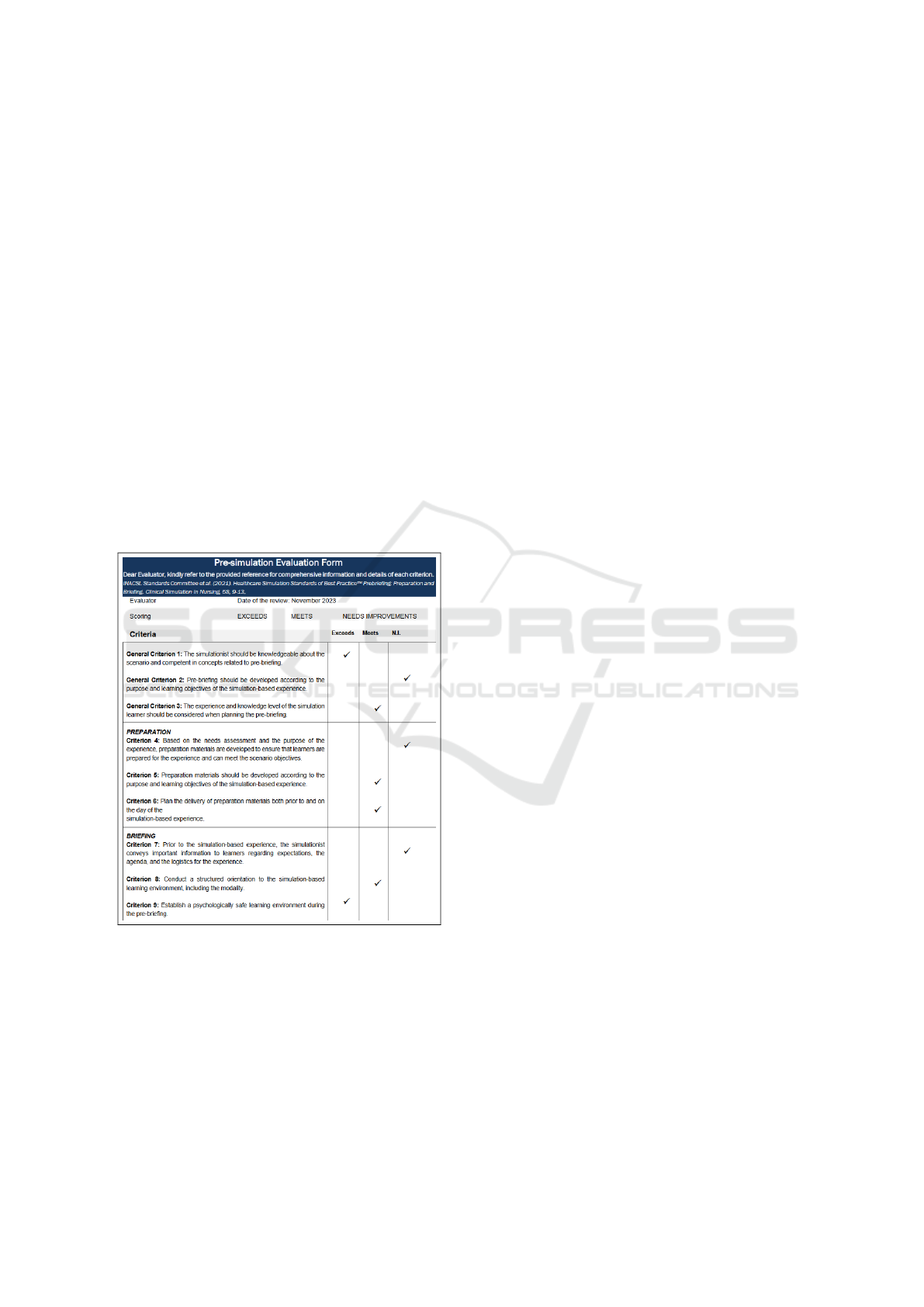

INACSL has established a set of standards for simulation under the title "Healthcare Simulation Standards of Best Practice" (HSSOBP), which cover aspects such as "professional development", "simulation design", "interprofessional education", "debriefing process" and "learning and performance evaluation" (Violato et al., 2023). In particular, pre-briefing (Penn et al., 2023; Miller et al., 2021) was included as one of the standards (McDermott et al., 2021), based on which we created a checklist for evaluating our method, as shown in Figure 2. A high-fidelity simulation expert, who was responsible for managing the simulation session, was asked to fill out the checklist. We believe the following set of recommendations to be particularly useful, as a recent review (Barlow et al., 2024) noted that few studies clearly articulate how their methods align with these standards.

Figure 2: Pre-briefing evaluation checklist based on the INACSL standards (McDermott et al., 2021).

Based on the expert’s feedback and our analysis of the case study results, we have compiled improvements for our pre-briefing method according to each criterion in the INACSL standards (McDermott et al., 2021):

• Criterion 1: for our case study, the simulation manager was an experienced anesthesiologist who, in collaboration with a medical informatics researcher, implemented the pre-briefing (videos, quizzes). However, we recommend involving more experts, particularly ones who specialize in high-fidelity simulation, e-learning and psychology, so that they can set up a comprehensive set of quizzes and resources to prepare the students for the simulation.

• Criterion 2: meeting the learning objectives becomes difficult when relying only on digital quizzes and videos. Rather, we think it is still necessary to rely on traditional methods, such as written learning material, to teach the required knowledge to the students during the pre-briefing. Quizzes can help the learners re-orient their studying efforts and improve their retention, as has been shown with the testing effect (Arnold and McDermott, 2013; Izawa, 1971), but they are not a substitute for other resources such as lectures meant to teach the content as a first step. Digitization can facilitate access to the learning material, e.g., with the help of a mobile quiz app, but the content itself should not be reduced in the process by relying purely on quizzes, which are not an ideal teaching medium.

• Criterion 3: although we designed the preparation quiz questions based on research, the students’ post-simulation feedback indicates that the pre-briefing quizzes would benefit from more in-depth questions, specifically in terms of the theory. To that end, the content of the quizzes needs to be better aligned with the students’ curriculum, so that the questions do not appear as simple repetitions of topics they have already studied recently. Rather, the quizzes should also fill gaps in knowledge regarding concepts not typically covered in their lectures and allow the students to test themselves with practical questions.

• Criterion 4: to aid the learning experience, more general resources regarding psychology should also be provided to the students, rather than only covering the simulation scenario’s topics.

• Criterion 5: a diverse set of formats for the pre-briefing material can be useful, as each student has their own preferences when it comes to how they study. As such, while digital quizzes and audiovisual material may seem helpful for some, traditional methods such as written guides should also be offered to support a broader range of learning modalities.

• Criterion 6: based on the students’ own complaints in our case study, we recommend offering the pre-briefing resources, in different formats as previously noted, but without requiring the students to complete a certain set of tasks before being allowed to participate in the simulation. The simulation itself is what the students are evaluated on, meaning that how they prepare themselves during the pre-briefing should not matter to the teacher. Providing the students with the learning material while still giving them the freedom to organize their preparation can best suit each student’s self-regulated learning.

• Criterion 7: based on our observations and results, we need to apply all steps of this criterion in our next study. We highly recommend preparing a comprehensive document covering all aspects, especially the students’ roles and team-based communication, in any suitable format.

• Criterion 8: we found that teaching the modalities of the high-fidelity manikin through the digital quizzes was difficult. Instead, we would suggest not only written tutorials explaining the manikin’s functionality but also a virtual tour of the simulation room before the actual session. The latter would not only help the students get hands-on experience with the general controls and monitoring displays of the manikin, but could also reduce their anxiety by familiarizing them with the environment.

• Criterion 9: an issue we faced in our case study was the limited amount of time we could dedicate to each simulation group. This lack of time not only made the students feel pressured to quickly learn how to handle the manikin before starting the basic life support scenario, but also prevented the simulation teacher from offering individual feedback to each student after the session. While debriefing was not the focus of our work, it is still an important aspect in reinforcing the students’ learning outcomes. As a result, we recommend allocating more hours for simulation in the curriculum to give teachers enough time to manage all phases of the simulation session and cover all aspects of this criterion.

6 CONCLUSION

Pre-briefing can greatly help in preparing students for high-fidelity simulations with manikins. Our work proposed a testing-based approach using digital quizzes whose difficulty adapts to each learner’s skill level, complemented by demonstrative videos. Our case study revealed that this testing focus is not enough, as the students still require learning material such as written guides and lectures to acquire the required knowledge. Testing can improve their retention and help them identify topics they have not yet mastered, but it cannot effectively teach the underlying content. Based on our findings and the INACSL pre-briefing criteria, we proposed several improvements for our method to achieve a comprehensive pre-briefing.

For future work, we intend to implement the proposed improvements and conduct additional simulations using our pre-briefing approach. Given the importance of debriefing, we are developing a plan to provide students with automatic and personalized debriefing reports by integrating proactive computing (Dobrican and Zampunieris, 2016) during and after simulations.

ACKNOWLEDGEMENTS

The researchers would like to express their gratitude

to the participants for their contribution to this study

and are grateful to the medical teaching team for their

support and guidance.

REFERENCES

Arnold, K. M. and McDermott, K. B. (2013). Free recall enhances subsequent learning. Psychonomic Bulletin & Review, 20:507–513.

Azevedo, R. and Bernard, R. M. (1995). A meta-analysis of the effects of feedback in computer-based instruction. Journal of Educational Computing Research, 13(2):111–127.

Barber, L. K., Bagsby, P. G., Grawitch, M. J., and Buerck, J. P. (2011). Facilitating self-regulated learning with technology: Evidence for student motivation and exam improvement. Teaching of Psychology, 38(4):303–308.

Barlow, M., Heaton, L., Ryan, C., Downer, T., Reid-Searl, K., Guinea, S., Dickie, R., Wordsworth, A., Hawes, P., Lamb, A., et al. (2024). The application and integration of evidence-based best practice standards to healthcare simulation design: A scoping review. Clinical Simulation in Nursing, 87:101495.

Butler, D. L. and Winne, P. H. (1995). Feedback and self-regulated learning: A theoretical synthesis. Review of Educational Research, 65(3):245–281.

Cranney, J., Ahn, M., McKinnon, R., Morris, S., and Watts, K. (2009). The testing effect, collaborative learning, and retrieval-induced facilitation in a classroom setting. European Journal of Cognitive Psychology, 21(6):919–940.

Dobrican, R. A. and Zampunieris, D. (2016). A proactive solution, using wearable and mobile applications, for closing the gap between the rehabilitation team and cardiac patients. In 2016 IEEE International Conference On Healthcare Informatics (ICHI), pages 146–155. IEEE.

Duque, P., Varela, J., Garrido, P., Valencia, O., and Terradillos, E. (2023). Impact of prebriefing on emotions in a high-fidelity simulation session: A randomized controlled study. Revista Española de Anestesiología y Reanimación (English Edition), 70(8):447–457.

Eisenkraemer, R. E., Jaeger, A., and Stein, L. M. (2013). A systematic review of the testing effect in learning. Paidéia (Ribeirão Preto), 23:397–406.

Fernandez, J. and Jamet, E. (2017). Extending the testing effect to self-regulated learning. Metacognition and Learning, 12:131–156.

Greving, S. and Richter, T. (2018). Examining the testing effect in university teaching: Retrievability and question format matter. Frontiers in Psychology, 9:2412.

Hattie, J. and Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1):81–112.

Izawa, C. (1971). The test trial potentiating model. Journal of Mathematical Psychology, 8(2):200–224.

Leigh, G. and Steuben, F. (2018). Setting learners up for success: Presimulation and prebriefing strategies. Teaching and Learning in Nursing, 13(3):185–189.

Livne, N. L. (2019). High-fidelity simulations offer a paradigm to develop personal and interprofessional competencies of health students: A review article. Internet Journal of Allied Health Sciences and Practice.

McDermott, D. S., Ludlow, J., Horsley, E., and Meakim, C. (2021). Healthcare simulation standards of best practice™ prebriefing: Preparation and briefing. Clinical Simulation in Nursing, 58:9–13.

Miller, C., Deckers, C., Jones, M., Wells-Beede, E., and McGee, E. (2021). Healthcare simulation standards of best practice™ outcomes and objectives. Clinical Simulation in Nursing, 58:40–44.

Penn, J., Voyce, C., Nadeau, J. W., Crocker, A. F., Ramirez, M. N., and Smith, S. N. (2023). Optimizing interprofessional simulation with intentional pre-briefing and debriefing. Advances in Medical Education and Practice, pages 1273–1277.

Richland, L. E., Kornell, N., and Kao, L. S. (2009). The pretesting effect: Do unsuccessful retrieval attempts enhance learning? Journal of Experimental Psychology: Applied, 15(3):243.

Rodrigues, L., Toda, A. M., Oliveira, W., Palomino, P. T., Avila-Santos, A. P., and Isotani, S. (2021). Gamification works, but how and to whom? An experimental study in the context of programming lessons. In Proceedings of the 52nd ACM Technical Symposium on Computer Science Education, pages 184–190.

Roediger III, H. L. and Karpicke, J. D. (2006). The power of testing memory: Basic research and implications for educational practice. Perspectives on Psychological Science, 1(3):181–210.

Rowland, C. A. (2014). The effect of testing versus restudy on retention: A meta-analytic review of the testing effect. Psychological Bulletin, 140(6):1432.

Sharoff, L. (2015). Simulation: Pre-briefing preparation, clinical judgment and reflection. What is the Connection, pages 88–101.

Sheykhmohammadi, N., Stammet, P., Grévisse, C., and Zampunieris, D. (2023). Challenges and requirements in initial high-fidelity simulation experiences for medical education: Highlighting scenario development. In 11th International Conference on University Learning and Teaching (InCULT 2023), In Press. IEEE.

Silva, C. C. d., Natarelli, T. R. P., Domingues, A. N., Fonseca, L. M. M., and Melo, L. d. L. (2022). Prebriefing in clinical simulation in nursing: Scoping review. Revista Gaúcha de Enfermagem, 43.

Spinelli, G., Brogi, E., Sidoti, A., Pagnucci, N., and Forfori, F. (2021). Assessment of the knowledge level and experience of healthcare personnel concerning CPR and early defibrillation: An internal survey. BMC Cardiovascular Disorders, 21:1–8.

Tong, L. K., Li, Y. Y., Au, M. L., Wang, S. C., and Ng, W. I. (2022a). High-fidelity simulation duration and learning outcomes among undergraduate nursing students: A systematic review and meta-analysis. Nurse Education Today, 116:105435.

Tong, L. K., Li, Y. Y., Au, M. L., Wang, S. C., and Ng, W. I. (2022b). Prebriefing for high-fidelity simulation in nursing education: A meta-analysis. Nurse Education Today, page 105609.

Tyerman, J., Luctkar-Flude, M., Graham, L., Coffey, S., and Olsen-Lynch, E. (2019). A systematic review of health care presimulation preparation and briefing effectiveness. Clinical Simulation in Nursing, 27:12–25.

Van Gog, T. and Sweller, J. (2015). Not new, but nearly forgotten: The testing effect decreases or even disappears as the complexity of learning materials increases. Educational Psychology Review, 27:247–264.

Violato, E., MacPherson, J., Edwards, M., MacPherson, C., and Renaud, M. (2023). The use of simulation best practices when investigating virtual simulation in health care: A scoping review. Clinical Simulation in Nursing, 79:28–39.

Wheeler, J. and Kuehn, J. (2023). Live versus video-taped prebriefing in nursing simulation. Teaching and Learning in Nursing.

Wong, F. M., Chan, A. M., and Lee, N. P. (2023). Can high-fidelity patient simulation be used for skill development in first-year undergraduate students after coronaviral pandemic.

Zeng, Q., Wang, K., Liu, W.-x., Zeng, J.-z., Li, X.-l., Zhang, Q.-f., Ren, S.-q., and Xu, W.-m. (2023). Efficacy of high-fidelity simulation in advanced life support training: A systematic review and meta-analysis of randomized controlled trials. BMC Medical Education, 23(1):664.
