A Pipeline for the Automatic Evaluation of Dental Surgery Gestures in Preclinical Training from Captured Motions

Mohamed Nail Hefied¹, Ludovic Hamon¹ and Sébastien George¹, Valériane Loison², Fabrice Pirolli², Serena Lopez² and Raphaëlle Crétin-Pirolli²

¹LIUM, Le Mans Université, France

²CREN, Le Mans Université, France

{mohamed nail.hefied, ludovic.hamon, sebastien.george, valeriane.loison, fabrice.pirolli, raphaelle.pirolli}@univ-lemans.fr

Keywords:

Automatic Gesture Evaluation, Motion Capture, Dental Surgery.

Abstract:

This work in progress proposes an automatic evaluation pipeline for dental surgery gestures based on teacher’s

demonstrations and observation needs. This pipeline aims at supporting learning in preclinical situations for

the first years of study in the dental school. It uses the Random Forest (RF) algorithm to train a model based

on specific descriptors for each gesture component, that are designed to cover the evolution of the observation

needs. The inputs are the captured motion parts whose labels are defined by the teachers with their own vocabulary, to represent expected or unwanted geometrical or kinematic features. The overall evaluation (for example, a weighted average of each component) and the component evaluation can be given to students to improve their postures and motor skills. In a preliminary test, the RF model correctly classifies a correct back posture and three main flaws (”Twisted Back and Bent Head”, ”Leaning Back”, ”Leaning Back and Bent Back”) for the posture component. This approach is designed to adapt to the expert’s evolving

observation needs while minimizing the need for a heavy re-engineering process and enhancing the system

acceptance.

1 INTRODUCTION

Training in dentistry begins with a preclinical period,

dedicated to the learning of the most common pro-

cedures such as clinical examination, cavity prepara-

tion, tooth preparation for the crown placement, etc.

It is also important to learn how to adopt the right

postures to preserve the practitioner health and pre-

vent pathologies, such as the development of Muscu-

loSkeletal Disorders (MSD) (FDI, 2021).

During the preclinical period, students train on

conventional simulators, also known as physical sim-

ulators or ”phantom”, consisting mainly of (i) a man-

nequin head (fig.3(a)) (ii) a model of jaws with ar-

tificial teeth (e.g., resin) that the students can insert

into the phantom mouth (iii) and various instruments,

including, mouth mirror, dental probe, rotative instru-

ments, etc. In the dental school of Nantes Univer-

sity (France), a practical session typically includes, at

least, twenty students. The assistance of teachers is

often required by a student, making them unavailable

to assist, assess and correct other students’ gestures.

Alongside conventional simulators, there are virtual and haptic environments for dentistry training such as the HRV Virteasy Dental or the NISSIN SIMODONT system (Bandiaky et al., 2023). These simulators use force-feedback arms to replicate the physical contacts of tools with the virtual teeth. The SIMTOCARE Dente training system includes a phantom head on which augmented pedagogical feedback is provided. In both cases, the simulators primarily track the instrument movements through the haptic arm or motion sensors. They therefore cannot capture the user’s body movements, which limits their ability to evaluate the full range of a student’s technical gesture.

Nowadays, with motion capture (mocap) systems,

one can record any motion-based activities to analyze

and evaluate them and/or to build a dedicated Virtual

Learning Environment (VLE) in which 3D avatars of

the teachers and learners can be displayed in real time

(Djadja et al., 2020; Le Naour et al., 2019). Mocap solutions also include those based on pose estimation in computer vision (e.g., OpenPose and MediaPipe). Movement data are often represented by a tree of joints (or skeleton) as shown in fig.3(e), each node


Hefied, M., Hamon, L., George, S., Loison, V., Pirolli, F., Lopez, S. and Crétin-Pirolli, R.

A Pipeline for the Automatic Evaluation of Dental Surgery Gestures in Preclinical Training from Captured Motions.

DOI: 10.5220/0012685000003693

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 1, pages 420-427

ISBN: 978-989-758-697-2; ISSN: 2184-5026

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

containing a time series made of the 3D positions and orientations of the joint over time.

Gesture learning can be considered according to three non-exclusive viewpoints: (a) the observation and imitation of the expert gestures (Liu et al., 2020; Oagaz et al., 2022), (b) the learning of geometric, kinematic and dynamic features, or (c) the learning of a sequence of actions (focusing on reaching specific discrete states of manipulated objects regardless of the user’s underlying movements) (Djadja et al., 2020). In this context, the pedagogical strategy can vary from one teacher to another for the same task. However, most existing evaluation systems or VLEs neglect either the motion-based evaluation process (viewpoints (a) and (b)) or the adaptation to the teacher’s expertise, which restricts the tool acceptance.

Consequently, the aim of this study is to propose

a method and an operationalizable architecture able

to: (a) automatically evaluate the dental surgery ges-

tures using mocap systems, (b) integrate the teacher’s

expertise, in terms of gesture execution, to assist the

students in their learning.

The main contributions of this work are the fol-

lowing ones:

- An analysis and decomposition of the dental techni-

cal gestures into evaluable motion-based components,

making it possible to provide feedback to the learner

and integrate the teacher’s expertise thanks to each

component.

- The proposal of an operationalizable approach for

the automatic evaluation that addresses the dental

surgery gesture at the component level and as a whole.

- The challenges and solutions to make this approach

adaptable to the teacher’s evolving observation and

analysis needs, independent of the simulator type

(conventional or virtual and haptic), independent of

the motion capture system used as long as it provides

skeletal data, and independent of the task to learn.

Although machine learning algorithms are used in this

work, this study does not aim at contributing to the

machine learning domain. AI is a technical solution to address our questions, not a goal in itself. Moreover, the automatic evaluation of the procedure (e.g., quality of the preparation, shape of the teeth, % of the removed dental cavity) is currently out of the scope of this work in progress.

The article is structured as follows. Section 2 re-

views automatic gesture evaluation in the literature.

Section 3 presents a survey on teacher practices and

the potential advantages of an automatic gesture eval-

uation system to assist them and preclinical students.

Section 4 breaks down dental surgery gestures. Sec-

tion 5 describes the proposed system architecture.

Section 6 depicts initial validation tests and discusses

the results and the proposal, while section 7 gives the

perspectives of this work.

2 RELATED WORKS

Many studies focus on specific aspects such as the

posture of oral health professionals (Bhatia et al.,

2020; Maurer-Grubinger et al., 2021; Pispero et al.,

2021; FDI, 2021). However, to our current knowl-

edge, it seems that there are no studies that fully ad-

dress the automatic evaluation of gestures in dental

surgery.

In other application domains, the automatic eval-

uation of gestures relies mainly on the analysis of

movement data based on the teacher expertise. For

instance, in the table tennis domain, the expert obser-

vation needs, related to forehand and backhand stroke

gestures, were translated into metrics computed from

movement data, while the gesture acceptability values

were extracted from the pre-recorded demonstrations

of the expert with a tolerance factor (Oagaz et al.,

2022). Another study focused on the performances

of novice salsa dancers compared to regular dancers

(Senecal et al., 2020). Following the suggestions of

dance experts, three criteria were proposed to repre-

sent the essential salsa skills (Rhythm, Guidance, and

Style). The gesture was not studied as a whole but as a

set of components, each of them associated with spe-

cific descriptors based on two popular motion analy-

sis systems (MMF and LMA). A review of expressive

motion descriptors, all based on kinematic, dynamic,

and geometric features, was conducted (Larboulette

and Gibet, 2015). However, other kinds of descrip-

tors exist. Regarding postures, several studies used

a score-type descriptor called the Rapid Upper Limb

Assessment (RULA) score (Maurer-Grubinger et al.,

2021; Bhatia et al., 2020; Manghisi et al., 2022). The

RULA score is an ergonomic measure that evaluates

the postural risk of the body during a task. It assigns

a rating on a scale (1 safe to 7 dangerous) to a pos-

ture. The RULA score was adapted to the practice of

oral health professionals, evaluating the postural risk

during a therapeutic act for approximately 60 seconds

(Maurer-Grubinger et al., 2021). In most of the cases,

the descriptors are specifically chosen or designed for

the task to learn, leading to significant engineering

challenges if the observation needs or the task evolve.

An approach adapted to the evolution of the expert’s needs while minimizing the reengineering process relies on the motion capture of the expert combined with spatial similarity techniques such as Dynamic Time Warping (DTW). DTW aims at comparing the shape of two time series without considering the temporal aspect. The lower the DTW score, the closer the two series are. An acceptance threshold must be empirically defined. For instance, a VLE

(Liu et al., 2020) used DTW to compare the Tai Chi

movements of a learner with a virtual coach replaying

the pre-recorded movements of the expert, while pro-

viding a similarity score. However, it is reasonable to

question the pedagogical effectiveness of such a kind

of score-based approach as it does not provide infor-

mation about specific incorrect aspects of the gesture.
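To make the DTW comparison concrete, the following minimal Python sketch computes the classic dynamic-programming DTW cost between two one-dimensional series. The function name `dtw_distance`, the absolute-difference local cost and the sample values are illustrative assumptions; this is not the implementation used in the cited VLE.

```python
from math import inf


def dtw_distance(a, b):
    """Return the DTW cost between two 1D sequences (illustrative sketch)."""
    n, m = len(a), len(b)
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances alone
                cost[i][j - 1],      # b advances alone
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


# Hypothetical data: the learner reproduces the expert's shape, but more slowly.
expert = [0.0, 1.0, 2.0, 1.0, 0.0]
slow_learner = [0.0, 0.0, 1.0, 1.0, 2.0, 2.0, 1.0, 0.0]
score = dtw_distance(expert, slow_learner)
```

Here the two series have the same shape at different speeds, so the score is zero. A single number of this kind says how far the learner is from the expert, not which aspect of the gesture is wrong, which is the limitation raised above.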

Another method consists in using supervised ma-

chine learning algorithms trained on motion-based

data. In the context of home-based physical therapies,

a study adopted a two-step machine learning classification approach that recognized the exercise among 10 types and then evaluated whether the exercise was correctly executed or not (García-de Villa, 2022).

They collected and used data from four IMUs placed

on volunteers’ limbs that performed each exercise se-

ries four times. Putting aside the complex process-

ing chain of motion capture, the expert can train the

model to evaluate new movements. However, the sys-

tem’s output is binary and does not provide relevant

feedback to correct or enhance the gesture. The IANB

gesture (Inferior Alveolar Nerve Block anesthesia)

was evaluated (Sallaberry et al., 2022). The VIDA

Odonto simulator collected the position and orienta-

tion of the syringe in the virtual environment. Sev-

eral features were extracted from the collected data

(e.g., mean jerk, penetration angle) and a compari-

son of the performances of different machine learn-

ing classification algorithms (Naive Bayes, Random

Forests, Multi-Layer Perceptrons, and Support Vec-

tor Machine) and feature selection/fusion algorithms

(ReliefF and PCA) was carried out. However, the out-

put only discriminated between expert and novice lev-

els without more information to guide the learners.

In a study aiming at assisting the rehabilitation process of stroke patients (Weiss Cohen and Regazzoni, 2020), the authors developed a system based on a Leap Motion as a hand-tracking device. The hand movements of the physiotherapist served to build a reference model. The gesture must be repeated 20-30

times. Joint angles were extracted and stored in a vec-

tor for each frame for each sample. A KNN algorithm

allowed averaging the vectors for each frame of an

exercise during the training phase. The system gen-

erated feedback for each finger separately, based on

the angle difference, indicating the gap with the ref-

erence movement. The output was divided into four

flexible segments that can be defined by users. An evaluation system based on ML is often designed as a proof of concept for the automatic evaluation performances. Although most of the existing systems limit the evaluation to an overall appreciation, the definition of output classes by the teachers can be relevant in terms of pedagogical feedback.

Systems only based on low-level descriptors are not adaptable to the evolution of tasks or observation needs. Existing DTW and ML approaches can counterbalance this issue, but often overlook pedagogical feedback. Regarding dental surgery techniques, there is not one perfect motion, i.e., various biomechanical approaches are viable as long as they meet the experts’ criteria for each gesture aspect. Consequently,

there is a challenge in designing a system adapted to

the teachers’ practices and their evolution, while pro-

viding feedback to the learner related to those criteria.

The expectations of such a system are discussed in the

next section.

3 EVALUATION EXPECTATIONS

To gather teaching practices, observation and analysis

needs in relation to technical gestures and their evalu-

ation, a qualitative survey was carried out using semi-

directive interviews with eleven teachers from several

disciplines (Prosthetics, Restorative Dentistry and En-

dodontics (DRE) and Pediatric Dentistry) at the den-

tal school of Nantes University. The semi-directive

interviews, lasting an average of thirty-five minutes,

were conducted by a pair made of a computer sci-

entist researcher and an educational researcher. The

interview recordings were anonymized and the audio

files transcribed. A thematic analysis of the verbatim,

by manually coding the discourse segments, was car-

ried out using the Nvivo software. The main topics of

the interview and their results are exposed below.

Work Session and Formative Evaluation. A Prac-

tical Work (PW) session, dedicated to working on

conventional simulators, generally includes about

”twenty” (ens1) students supervised by a teacher, as-

sisted either by monitors (students with a higher level

of study) or operating alone. A formative evaluation,

without grading, is carried out at each stage of the exercise by the teacher or monitors, based on their observations or at the student’s request: ”It’s to validate the steps as they are carried out” (ens3); ”each time, we give them a little advice on what was done well and what must be improved” (ens2).

High Demand on Teachers and Procedure Results

vs. Gesture Evaluation. The demands placed on

teachers and monitors are considerable, due to the

size of the student groups: ”when you’re managing

20 or 23 students, that’s a lot, and you’re not necessarily available at the right time” (ens1). Therefore, assessment primarily focuses on the result of the dental surgery procedure, rather than the technical gestures performed by students: ”after a while, even for

us, we’re human, so we end up looking mainly at the

clinical aspect, the final result (...) whereas the means

to achieve it, is very important” (ens2).

Dental Preparation vs. Gesture Concerns. A ses-

sion can last between 1h30 and 3h00, and the number

and duration of PW sessions are limited by the density

of the required teaching, ”the problem is that if at a

given session, the student hasn’t assimilated all infor-

mation (...) it’s a bit lost, given that at the next session

we’ll move on to another exercise” (ens7). In addition

to the preparation, ”for them, the working position,

ergonomics, are a secondary objective (...) and they

may find it easier or quicker to bend their neck to see

better and perform the gesture” (ens3) that is not rec-

ommended to avoid MSD.

Interests of Automatic Evaluation of Technical

Gestures. The interest of automatic evaluation of

technical gestures is to (i) ”help because it’s compli-

cated to manage all the students” (ens2) for whom

”you have to repeat over and over again (...) and who

regularly forget their work position” (ens3) (ii) cal-

culate metrics in real or near-real time, which can

be used to provide feedback to the student on the

technical gesture (e.g.: Your back is bent, stand

straight, lower your elbows...) ”if it’s something to

tell to students, it might be more educational for them,

and especially for all those we don’t see at a given

moment”(ens9) (iii) standardize practices further,

and ”perhaps smooth out the level between different

groups a little more, and ensure that certain trans-

missions of information are not teacher-dependent,

because certain choices of instruments, for example,

certain set-ups, certain working position will be a

matter of habit, a matter of personal feeling. (...)

we don’t practice in the same way (...) as long as it

remains within a framework where it’s done in good

conditions”(ens5).

An automatic system for evaluating students’ ges-

tures can help teachers, who face high demands and

repetitive gesture issues. This system allows students

to focus on procedures while being reminded of the

correct gestures. This is possible if the dental surgery gesture is formalized in a frame made of interpretable, operationalizable and evaluable components, allowing the integration of teachers’ observation needs, as discussed in the next section.

4 DENTAL SURGERY GESTURE

In addition to interviews with teachers, two visits

were made during PW sessions at the dental school of

Nantes University, to observe and perform a PW (i.e.,

preparing a tooth for the placement of a crown). Fur-

thermore, an analysis of the two PW notebooks of the

teachers, in Prosthetics, Restorative Dentistry and En-

dodontics was conducted, along with a review of the

2021 ergonomic recommendations for oral health pro-

fessionals (FDI, 2021) published by the FDI (World

Dental Federation). Based on the gathered pieces of information, the dental surgery gesture can be broken down as follows.

Posture. This component qualifies the body part configuration to adopt: (i) The natural curvatures of the spine must be respected (cervical lordosis, thoracic kyphosis, lumbar lordosis). The body must not lean forward (bust/leg angle ≥ 90°). No excessive bending or twisting of the spine (including back and head) must be observed. The head tilts slightly forward. (ii) Arms can be at rest or almost alongside the torso (20° between vertical and arms). There is no abduction of the shoulders. The practitioner’s elbows are close to the body and do not protrude. Forearms are in front of the body (elbow angle 60°). Wrists are held in a neutral and straight position. (iii) Legs must be apart and lower legs vertical (knee angle 90° to 100°). Feet must be flat on the floor.
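The angular recommendations above (bust/leg, elbow, knee) can all be read as the angle formed at one joint by its two neighbours. The sketch below shows one way to compute such an angle from three captured 3D joint positions. The function name `joint_angle` and the sample coordinates are hypothetical, and the paper does not state that angles are computed this way.

```python
from math import acos, degrees, sqrt


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    u = tuple(x - y for x, y in zip(a, b))
    v = tuple(x - y for x, y in zip(c, b))
    norm_u = sqrt(sum(x * x for x in u))
    norm_v = sqrt(sum(x * x for x in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("the three joints must be at distinct positions")
    cosine = sum(x * y for x, y in zip(u, v)) / (norm_u * norm_v)
    # Clamp to [-1, 1] to absorb floating-point rounding before acos.
    return degrees(acos(max(-1.0, min(1.0, cosine))))


# Hypothetical seated pose: shoulder above the hip, knee in front of the hip.
shoulder, hip, knee = (0.0, 0.0, 1.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
bust_leg_angle = joint_angle(shoulder, hip, knee)
```

With these sample positions the bust/leg angle is a right angle, the lower bound of the recommended range.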

Sitting Orientation. This component represents

the practitioners’ seated position around the patient’s

head, according to their dominant arm. The space occupied by a right-handed person must be between 9 and 12 o’clock (12 and 3 o’clock for a left-handed one). In this interval, and depending on the tooth to be treated, the practitioners must opt for a positioning that enables them to better see the tooth.

Instrument Holding and Fulcrum (Finger Rest).

Errors in holding rotative instruments are recurrent,

and difficult to detect in a PW context, requiring the

teacher to be close to each student’s workstation.

The instrument should be held like a pen by three

fingers (thumb, index and middle fingers) close to the

head of the instrument, to control the pressure applied

to the tooth. The other two fingers are positioned as

close as possible to the preparation, ideally on the

same working arch, acting as a fulcrum on the tooth

or gum. The objective is not working with a floating

hand but following the patient’s arch to have an ac-

curate motion, reduce muscular load and fatigue, and

avoid injuring the patient.

Asepsis. In addition to complying with general asep-

sis guidelines, such as wearing goggles, masks, the

gloves of the practitioner must not touch anything

other than the patient’s oral cavity (e.g., tooth, arch,

gum), and the instruments placed on the operating

field. The goal is to monitor parasitic movements e.g.,

scratching one’s nose or head, leaving a free hand on

A Pipeline for the Automatic Evaluation of Dental Surgery Gestures in Preclinical Training from Captured Motions

423

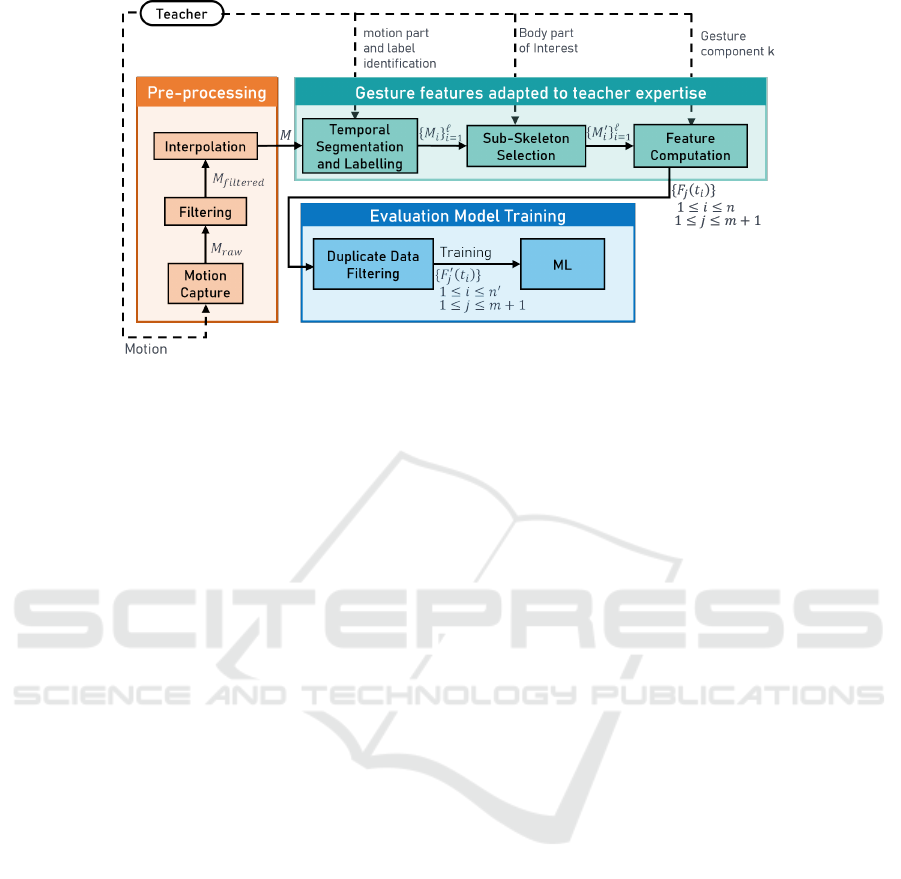

Figure 1: Training phase.

their own pants, etc., This kind of gestures leads to

hygiene faults.

All four components were validated by the three teachers involved in the visits. Despite the specific measurements and values provided by the aforementioned pedagogical documents, the automatic evaluation system should encompass all gesture aspects, while being adaptable to changes and tolerance (e.g., is 91°, 92°, etc., an acceptable value for the bust/leg angle?). Consequently, the next section outlines a pipeline based on gesture components, each analyzed with descriptors able to integrate the teachers’ expertise from their demonstrations.

5 PROPOSED EVALUATION

PIPELINE & METHOD

This section outlines the proposed evaluation

pipeline, which includes training individual Machine

Learning (ML) models for each gesture compo-

nent, identified in the previous section, to conduct

continuous learner assessments.

5.1 Training Phase

Fig.1 illustrates the initial phase of the ML model

training, applicable for each gesture component, and

using generic descriptors computed from labeled

teacher demonstrations. Therefore, for a given com-

ponent, this phase begins by asking the expert to pro-

vide good and/or bad demonstrations.

Motion Capture and Filtering. The expert’s movements are captured using a motion capture system such as the Qualisys infrared system. $M_{raw}$ is the raw motion data structure. This raw data can be noisy, containing inconsistent or missing values, and must therefore be manually filtered (linear, polynomial, Savitzky-Golay, relation filters, etc.) to obtain a clean motion $M_{filtered}$.

Interpolation. An interpolation process generates the motion $M$ with the desired frequency or frame number, as a mocap system with a high frequency can generate more data than the system can handle in a reasonable time.
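As an illustration of this step, the sketch below linearly resamples a sequence of joint positions to a target number of frames. It is a minimal example under stated assumptions: the function name `resample_linear` and the sample trajectory are hypothetical, and the paper does not specify which interpolation scheme is used.

```python
def resample_linear(frames, target_count):
    """Linearly resample a list of equal-length coordinate tuples to target_count frames."""
    if target_count < 2 or len(frames) < 2:
        raise ValueError("need at least two source frames and two target frames")
    last = len(frames) - 1
    resampled = []
    for k in range(target_count):
        # Fractional position of output frame k on the source frame axis.
        pos = k * last / (target_count - 1)
        lo = int(pos)
        hi = min(lo + 1, last)
        w = pos - lo
        resampled.append(
            tuple((1 - w) * x + w * y for x, y in zip(frames[lo], frames[hi]))
        )
    return resampled


# Hypothetical 5-frame trajectory of one joint position (x, y, z).
source = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
downsampled = resample_linear(source, 3)
```

Linear blending is only appropriate for positions. Joint orientations stored as quaternions would need a rotation-aware scheme such as spherical linear interpolation.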

Temporal Segmentation and Labeling. From $M$, the experts (or teachers) identify (non-)acceptable sequences $\{M_i\}_{i=1}^{\ell}$, with $\ell$ being the number of labels. They must visualize the 3D motions and give the corresponding time periods and labels. The teachers may define and identify as many (non-)acceptable motion parts as they want, in the way they want (e.g., correct, incorrect, almost correct, bending back, head leaning, weird shoulder position, etc.).
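The time periods and labels given by the teacher can be turned into per-frame labels as sketched below. The function name `label_frames`, the `(start_s, end_s, label)` tuple layout and the sample values are assumptions made for illustration; the paper does not describe the data structure used.

```python
def label_frames(n_frames, fps, segments, default=None):
    """Assign a teacher-defined label to each frame from (start_s, end_s, label) segments."""
    labels = [default] * n_frames
    for start_s, end_s, label in segments:
        first = max(0, round(start_s * fps))
        last = min(n_frames, round(end_s * fps))
        for i in range(first, last):
            labels[i] = label
    return labels


# Hypothetical one-second recording at 10 frames per second.
segments = [(0.0, 0.5, "Straight Back"), (0.7, 1.0, "Leaning Back")]
labels = label_frames(10, 10, segments)
```

Frames that fall outside every segment keep the default value and can be discarded before training.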

Sub-Skeleton Selection. From the complete joint tree, the teacher selects the branches (succession of joints) representing the body part of interest for a gesture component. This module returns $\{M'_i\}_{i=1}^{\ell}$, the labeled motion spatially trimmed to the desired set of joints.
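A minimal sketch of this spatial trimming follows, assuming each frame is stored as a dictionary mapping joint names to positions. The representation, the function name `select_sub_skeleton` and the joint names are hypothetical.

```python
def select_sub_skeleton(motion, joints_of_interest):
    """Return the motion restricted to the given joint names, frame by frame."""
    return [{name: frame[name] for name in joints_of_interest} for frame in motion]


# Hypothetical two-frame motion; joint names and coordinates are illustrative only.
motion = [
    {"pelvis": (0.0, 0.0, 1.0), "spine": (0.0, 0.0, 1.3), "head": (0.0, 0.1, 1.7), "left_knee": (0.2, 0.0, 0.5)},
    {"pelvis": (0.0, 0.0, 1.0), "spine": (0.0, 0.1, 1.3), "head": (0.0, 0.3, 1.6), "left_knee": (0.2, 0.0, 0.5)},
]
back = select_sub_skeleton(motion, ["pelvis", "spine", "head"])
```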

Feature Computation. This module computes pre-

defined features (or descriptors) for a given gesture

component. The challenge here is to find an appro-

priate set of features adapted to the evolution of the

teaching practices, i.e., any expert could integrate new

gestures to identify without changing the descriptors

for the component. The proposed features are the fol-

lowing ones:

- (i) Posture and instrument holding: the joint orien-

tation (quaternion) from a movement expressed in a

local coordinate system, plus normalized directional

CSEDU 2024 - 16th International Conference on Computer Supported Education

424

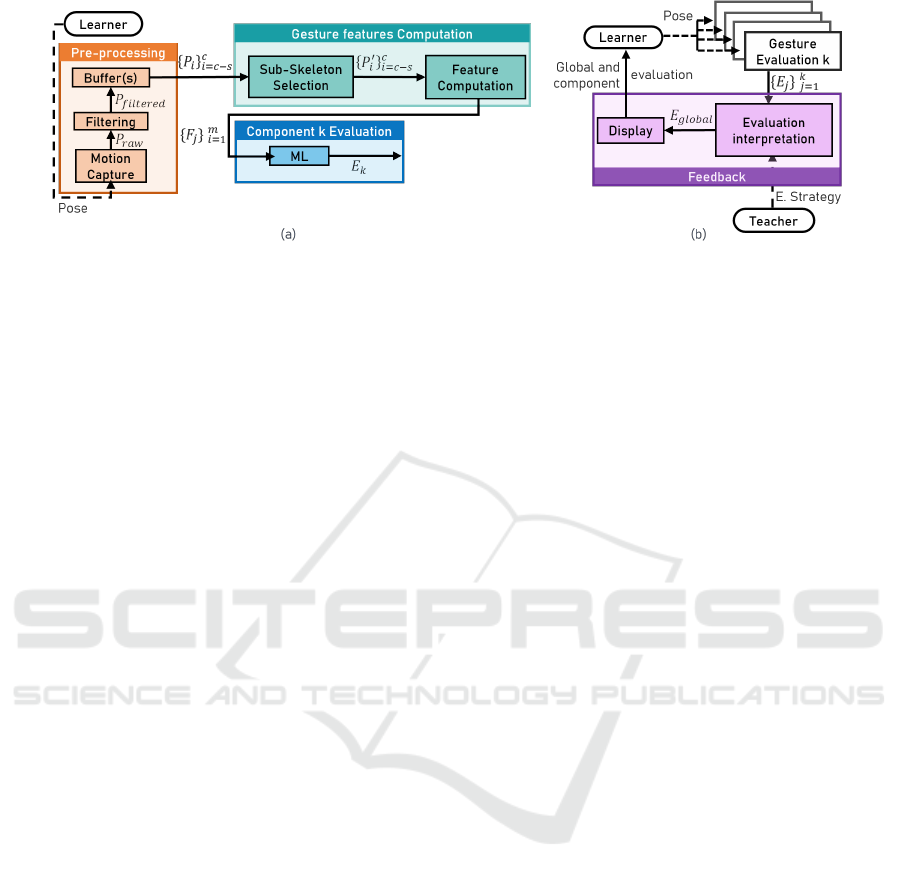

Figure 2: (a) Evaluation phase per gesture component (b) Evaluation feedback.

vectors from the root joint to each of the other joints

computed from a movement expressed in a global co-

ordinate system(fig. 3(e)).

- (ii) Sitting orientation: the angle between a straight

line connecting the root joint to a reference point on

the operating area, and a fixed reference line (e.g., 12

o’clock).

- (iii) Asepsis: distances between hand and wrist

joints to other body joints.

The fulcrum is currently being studied and features will be proposed in the future. The output $\{F_j(t_i)\}$ of this module is a data table with $n$ rows (frames) and $m + 1$ columns (the time series of $m$ features and a label column).
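The normalized directional vectors of the posture component, and the resulting table of $n$ rows and $m + 1$ columns, can be sketched as follows. This minimal example covers only the directional vectors and leaves out the quaternion part. The function names, the dictionary-based frame layout and the joint names are assumptions.

```python
from math import sqrt


def directional_features(frame, root="pelvis"):
    """Unit vectors from the root joint to every other joint, flattened into one row."""
    rx, ry, rz = frame[root]
    row = []
    for name in sorted(frame):  # fixed joint order keeps columns aligned across frames
        if name == root:
            continue
        x, y, z = frame[name]
        dx, dy, dz = x - rx, y - ry, z - rz
        norm = sqrt(dx * dx + dy * dy + dz * dz)
        # A joint coinciding with the root has no direction; use a zero vector.
        row.extend((dx / norm, dy / norm, dz / norm) if norm else (0.0, 0.0, 0.0))
    return row


def build_feature_table(motion, labels, root="pelvis"):
    """One row per frame: m feature values followed by the teacher's label."""
    return [directional_features(frame, root) + [label] for frame, label in zip(motion, labels)]


# Hypothetical single-frame motion with two joints besides the root.
motion = [{"pelvis": (0.0, 0.0, 0.0), "head": (0.0, 0.0, 2.0), "hand": (3.0, 4.0, 0.0)}]
table = build_feature_table(motion, ["Straight Back"])
```

Normalizing the vectors removes segment lengths from the features, which may help when expert and learners have different morphologies.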

Duplicate Data Filtering. This module parses $\{F_j(t_i)\}$ with a sliding window to compare rows and filter out duplicates, i.e., rows that do not differ from their neighbors by at least a threshold, and returns $\{F'_j(t_i)\}$ with $n' < n$ rows. The objective is to keep only the distinctive samples necessary for the ML training process.
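One possible reading of this module is sketched below: a row is kept only if its features differ by at least a threshold from the recently kept rows that carry the same label. The Euclidean comparison, the window size, the threshold value and the function name `filter_duplicates` are all assumptions, since the paper does not detail the comparison rule.

```python
from math import dist


def filter_duplicates(rows, window=3, threshold=0.05):
    """Drop rows too close to one of the last `window` kept rows with the same label."""
    kept = []
    for row in rows:
        features, label = row[:-1], row[-1]
        recent = kept[-window:]
        is_distinct = all(
            other[-1] != label or dist(features, other[:-1]) >= threshold
            for other in recent
        )
        if is_distinct:
            kept.append(row)
    return kept


# Hypothetical table with two feature columns and one label column.
rows = [
    [0.00, 0.0, "a"],
    [0.01, 0.0, "a"],  # near-duplicate of the first row: dropped
    [0.50, 0.0, "a"],
    [0.50, 0.0, "b"],  # same features, different label: kept
]
filtered = filter_duplicates(rows)
```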

ML. The machine learning module correlates the training samples with their expected labels defined by the teacher. The chosen algorithm is the Random Forest (RF). This algorithm does not depend on a distance function, which is convenient because heterogeneous component features may call for different kinds of distance functions (Euclidean, spherical, geodesic, etc.). The decision trees generated by a Random Forest identify the most informative data divisions by maximizing information gain or minimizing entropy. This algorithm is also known to perform well with few data, which matters as one cannot ask a teacher to make many demonstrations.
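The split criterion mentioned above can be illustrated on a single feature. The sketch below computes the Shannon entropy of a label set and the information gain of one threshold split, which is the quantity a decision tree maximizes when choosing a division. It is not a Random Forest implementation, and the feature values and labels are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a non-empty list of class labels."""
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())


def information_gain(values, labels, threshold):
    """Entropy reduction obtained by splitting the samples at `threshold`."""
    left = [lab for v, lab in zip(values, labels) if v <= threshold]
    right = [lab for v, lab in zip(values, labels) if v > threshold]
    if not left or not right:
        return 0.0  # a split that separates nothing brings no information
    n = len(labels)
    children = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(labels) - children


# Hypothetical feature: one component of a normalized directional vector.
values = [0.1, 0.2, 0.8, 0.9]
labels = ["Straight Back", "Straight Back", "Leaning Back", "Leaning Back"]
best = information_gain(values, labels, 0.5)
worse = information_gain(values, labels, 0.15)
```

The split at 0.5 separates the two classes completely and therefore recovers the full bit of entropy, whereas the split at 0.15 leaves one mixed subset and gains less.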

5.2 Evaluation Phase

Now that the ML model is trained with the teacher’s (non-)acceptable gesture demonstrations, it can be used to evaluate the learner’s gestures. Figure 2(a) describes the pipeline to evaluate a single gesture component based on a capture of the learner’s pose $P$ (i.e., the joint tree in a single frame) for (near) real-time evaluation. $\{P_i\}$ contains $s$ poses (i.e., a short motion part) stored by the buffer module for later computation of descriptors requiring several poses (e.g., speed), while $\{P'_i\}$ is the sub-skeleton trimmed according to the subset of joints of interest defined by the teacher. Finally, the ML block infers a class for the gesture component.
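The buffer module can be sketched with a fixed-size queue holding the last $s$ poses, from which a multi-pose descriptor such as speed is derived. The class name `PoseBuffer`, the dictionary-based pose layout and the sample values are assumptions for illustration.

```python
from collections import deque
from math import dist


class PoseBuffer:
    """Keeps the last `size` poses so that multi-pose descriptors can be computed."""

    def __init__(self, size, fps):
        self.poses = deque(maxlen=size)  # the oldest pose is dropped automatically
        self.fps = fps

    def push(self, pose):
        self.poses.append(pose)

    def mean_speed(self, joint):
        """Mean speed of one joint over the buffered poses, in position units per second."""
        if len(self.poses) < 2:
            return 0.0
        poses = list(self.poses)
        travelled = sum(dist(a[joint], b[joint]) for a, b in zip(poses, poses[1:]))
        return travelled * self.fps / (len(poses) - 1)


# Hypothetical stream at 100 Hz: the head moves 1 cm per frame along x.
buffer = PoseBuffer(size=3, fps=100)
for x in (0.00, 0.01, 0.02, 0.03):
    buffer.push({"head": (x, 0.0, 0.0)})
head_speed = buffer.mean_speed("head")
```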

Figure 2(b) shows the feedback information sent to the learner. This feedback is composed of two kinds of information. The first one is the inferred class corresponding to the teacher’s label for each gesture component. The second one, $E_{global}$, gathers the $\{E_j\}$ predictions to deliver a global evaluation, defined by the teacher’s evaluation strategy (e.g., a score based on the weighted average of each component once converted to a numerical value). The representation method of this evaluation (textual information? dashboard? more advanced visual artefacts?) is not defined at this stage of this work.
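The weighted-average strategy given as an example can be sketched as follows. The numeric value attached to each label, the weights and the component names are hypothetical choices that a teacher would have to provide; the paper leaves the actual strategy open.

```python
def global_score(predictions, class_scores, weights):
    """Weighted average of the per-component predictions once mapped to numbers."""
    total_weight = sum(weights[component] for component in predictions)
    weighted_sum = sum(
        weights[component] * class_scores[component][label]
        for component, label in predictions.items()
    )
    return weighted_sum / total_weight


# Hypothetical teacher-defined strategy: posture counts three times as much.
class_scores = {
    "posture": {"Straight Back": 1.0, "Leaning Back": 0.0},
    "sitting_orientation": {"correct": 1.0, "incorrect": 0.0},
}
weights = {"posture": 3.0, "sitting_orientation": 1.0}
predictions = {"posture": "Leaning Back", "sitting_orientation": "correct"}
e_global = global_score(predictions, class_scores, weights)
```

The per-component labels remain available next to the score, so the learner still sees which component lowered the result.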

6 PRELIMINARY TESTS &

DISCUSSION

The posture component based on the respect of the natural curvatures of the back was implemented. To this end, an installation was set up with a phantom attached to a table and a stool positioned at 10:30 from the head of the mannequin (fig. 3(a)). Surrounding this setup are 6 Qualisys Miqus M3 infrared cameras to capture the movements of the expert (at 100 Hz), who is equipped with an upper-body marker set. Fig. 3(e) illustrates a tree of joints. The expert simulates a therapeutic act on a tooth located on the upper arch, maintaining an acceptable posture (1 min.) and unacceptable ones (2 min.). Table 1 depicts the posture classes, the recorded sequence duration and the number of samples (i.e., number of frames) obtained after the duplicate data filtering. Figure 3 shows each posture class.

After training an RF model with 100 decision trees, based on an 80% training / 20% test split of the expert samples (all classes mixed), our architecture is able to perfectly recognize each good and bad posture (perfect accuracy score).

Figure 3: (a) Straight Back (b) Leaning Back (c) Leaning Back and Bent Back (d) Twisted Back and Bent Head (e) A skeleton from motion capture under Unreal Engine with directional vectors (red) and normalized ones (blue) starting from the pelvis joint, and pointing to the remaining ones.

Table 1: Posture class, recorded sequence duration (seconds) and number of frames obtained after temporal segmentation and duplicate data filtering.

| Posture Class | Time (s) | Samples |
|---|---|---|
| Straight Back | 60 | 3273 |
| Leaning Back | 4 | 232 |
| Leaning Back and Bent Back | 4 | 182 |
| Twisted Back and Bent Head | 8 | 428 |

The above first tests offer encouraging results, but

are not yet a proof of the system’s validity and per-

formance. Other experiments will be carried out with

several teachers, their observation needs and dental

students with different morphologies. Provided that

the system proves its effectiveness, it offers the fol-

lowing advantages:

Adaptation to the Teachers’ Needs. The teachers

are actively involved in the proposed pipeline. Their

expertise relies on several provided pieces of infor-

mation: (non)acceptable motions, body parts of in-

terest, labels linked to each aspect or skill related to

the gesture to learn and the evaluation strategy combining each component. Changing any of these pieces of information does not require re-engineering the system, provided that: (a) the proposed set of components to analyze the dental gesture is valid, and (b) the set of descriptors for each component is carefully chosen to cover different kinds of correct gestures and flaws for this component. In this way, the system becomes adaptable to each demonstrated gesture the teacher does or does not want to see.

Building Relevant Pedagogical Feedback. The sys-

tem architecture handles the gesture as a set of evalu-

able components. For each of these components, a

RF model is trained separately. This approach allows

for an evaluation of the overall gesture without ne-

glecting the assessment of each component represent-

ing the gesture aspect to acquire or to enhance. In

addition, the evaluation uses the teacher’s vocabulary

thanks to the labels.

System Independencies. The proposed architecture

is designed to be independent of any specific motion

capture system (as long as it provides a skeleton made

of the position and orientation of each joint), the used

simulator (conventional or haptic), the task to learn

and the pedagogical strategy (if based on the chosen

valid components and their descriptors).

Nevertheless, the following limitations and re-

maining challenges must be considered:

MoCap Process. The process of obtaining a clean time series can be tedious. Indeed, some motion capture devices (infrared camera-based ones) give a good precision but are costly and require heavy data pre-processing (marker re-identification, interpolation of lost data, etc.). Other systems (depth cameras, inertial units, etc.) are less costly and quicker to set up, but their signal quality is worse.

Proposed Architecture vs. ad-hoc Implementation. When the

descriptor and its range of acceptability are trivial to implement

(e.g., the sitting orientation around the phantom only requires

verifying that an angle lies within a well-defined range), one can

question the benefit of an ML training process that requires the

presence of a teacher, a capture session and a labeling process.
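
For comparison, an ad-hoc check of the sitting orientation could be as short as the sketch below. It assumes that the phantom and the practitioner's pelvis are given as 2D positions in the horizontal plane; the function names, the reference axis and the 60 to 120 degree range are hypothetical values chosen for illustration, not thresholds taken from this work.

```python
import math
from typing import Tuple


def sitting_angle_deg(phantom_xz: Tuple[float, float],
                      pelvis_xz: Tuple[float, float]) -> float:
    """Angle in degrees, in [0, 360), of the practitioner around the phantom,
    measured in the horizontal plane from the x axis."""
    dx = pelvis_xz[0] - phantom_xz[0]
    dz = pelvis_xz[1] - phantom_xz[1]
    return math.degrees(math.atan2(dz, dx)) % 360.0


def is_acceptable(angle_deg: float, low_deg: float, high_deg: float) -> bool:
    """True if the angle lies in [low_deg, high_deg].

    Ranges that wrap around 0 degrees are not handled in this sketch.
    """
    return low_deg <= angle_deg <= high_deg


angle = sitting_angle_deg((0.0, 0.0), (0.0, 1.0))
ok = is_acceptable(angle, 60.0, 120.0)
```

Such a rule needs no capture session or labeling, but every new observation need then requires new code, which is the trade-off raised in this paragraph.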

Learning Impact. The potential impacts of the sys-

tem on learning must be considered with caution and

must be tested. The observation needs are only formalized

through the labels associated with the demonstrated motion parts.

The system is not currently adapted to the formalization of the

underlying knowledge related to the overall healthcare procedure.

7 CONCLUSION

This work proposes a pipeline for the automatic eval-

uation of dental surgery gestures. The aim of this

system is to assist teachers and learners during prac-

tical sessions on simulators (conventional or virtual

and haptic). The expected long-term impacts are re-

lated to the improvement of motor skills in preclinical

situations, to prepare students for clinical ones, and

avoid learning motions leading to MSD. This first step

breaks down the gesture into components (posture,

sitting orientation, holding the instrument, fulcrum,

asepsis) and proposes generic descriptors for each

component. The proposed approach consists in train-

ing random forest models for each component, whose

inputs are the generic descriptors computed from the

teacher’s labeled and captured motions. Each label is

defined by teachers to integrate the observation needs

with their own vocabulary. The trained RF model can

be used to analyse the learners’ gestures by giving the

class label for each gesture component. This architecture aims to

tackle the challenges linked to the evaluation of the geometric

and kinematic aspects of the dental gesture, which are often

neglected in existing systems, while avoiding a heavy

reengineering process when the learning situation evolves. This

work will continue through an experiment with a dual objective:

(a) validating the pipeline in terms of evaluation performance

with teachers and (b) evaluating the impact of the evaluation on

students during practical sessions.

ACKNOWLEDGEMENTS

The authors would like to thank the French National Research

Agency for funding the ANR PRCE EVAGO project

(ANR-21-CE38-0010).

REFERENCES

Bandiaky, O. N., Lopez, S., Hamon, L., Clouet, R.,

Soueidan, A., and Le Guehennec, L. (2023).

Impact of haptic simulators in preclinical dental education:

A systematic review. Journal of Dental Education.

https://onlinelibrary.wiley.com/doi/pdf/10.1002/jdd.13426.

Bhatia, V., Randhawa, J. S., Jain, A., and Grover, V. (2020).

Comparative analysis of imaging and novel marker-

less approach for measurement of postural parameters

in dental seating tasks. Measurement and Control,

53(7-8):1059–1069.

Djadja, D., Hamon, L., and George, S. (2020). Design

of a Motion-based Evaluation Process in Any Unity

3D Simulation for Human Learning. In Proceedings

of the 15th International Joint Conference on Com-

puter Vision, Imaging and Computer Graphics The-

ory and Applications, pages 137–148, Valletta, Malta.

SCITEPRESS - Science and Technology Publications.

FDI (2021). Ergonomics and posture guidelines for oral

health professionals.

García-de Villa, S. (2022). Simultaneous exercise recognition

and evaluation in prescribed routines: Approach to virtual

coaches. Expert Systems With Applications.

Larboulette, C. and Gibet, S. (2015). A review of com-

putable expressive descriptors of human motion. In

Proceedings of the 2nd International Workshop on

Movement and Computing, pages 21–28, Vancouver,

British Columbia, Canada. ACM.

Le Naour, T., Hamon, L., and Bresciani, J.-P. (2019). Su-

perimposing 3D Virtual Self + Expert Modeling for

Motor Learning: Application to the Throw in Ameri-

can Football. Frontiers in ICT, 6:16.

Liu, J., Zheng, Y., Wang, K., Bian, Y., Gai, W., and Gao,

D. (2020). A Real-time Interactive Tai Chi Learning

System Based on VR and Motion Capture Technol-

ogy. Procedia Computer Science, 174:712–719.

Manghisi, V. M., Evangelista, A., and Uva, A. E. (2022).

A Virtual Reality Approach for Assisting Sustainable

Human-Centered Ergonomic Design: The ErgoVR

tool. Procedia Computer Science, 200:1338–1346.

Maurer-Grubinger, C., Holzgreve, F., Fraeulin, L., Betz,

W., Erbe, C., Brueggmann, D., Wanke, E. M., Nien-

haus, A., Groneberg, D. A., and Ohlendorf, D.

(2021). Combining Ergonomic Risk Assessment

(RULA) with Inertial Motion Capture Technology in

Dentistry—Using the Benefits from Two Worlds. Sensors,

21(12):4077.

Oagaz, H., Schoun, B., and Choi, M.-H. (2022). Real-time

posture feedback for effective motor learning in ta-

ble tennis in virtual reality. International Journal of

Human-Computer Studies, 158:102731.

Pispero, A., Marcon, M., Ghezzi, C., Massironi, D., Varoni,

E. M., Tubaro, S., and Lodi, G. (2021). Posture As-

sessment in Dentistry for Different Visual Aids Using

2D Markers. Sensors, 21(22):7717.

Sallaberry, L. H., Tori, R., and Nunes, F. L. S. (2022). Com-

parison of machine learning algorithms for automatic

assessment of performance in a virtual reality dental

simulator. In Symposium on Virtual and Augmented

Reality, SVR’21, pages 14–23, New York, NY, USA.

Association for Computing Machinery.

Senecal, S., Nijdam, N. A., Aristidou, A., and Magnenat-

Thalmann, N. (2020). Salsa dance learning evalu-

ation and motion analysis in gamified virtual real-

ity environment. Multimedia Tools and Applications,

79(33):24621–24643.

Weiss Cohen, M. and Regazzoni, D. (2020). Hand rehabili-

tation assessment system using leap motion controller.

AI & SOCIETY, 35(3):581–594.
