EduColl: A Collaborative Design Approach Based on Conflict Resolution for the Assessment of Learning Resources

Manel BenSassi (a) and Henda Ben Ghezala (b)

Univ. Manouba, ENSI, RIADI LR99ES26, Campus Universitaire Manouba, 2010, Tunisia

Keywords:

Collaboration Design, Conflict Resolution, Learning Materials Assessment.

Abstract:

To meet the expectations for education in the 21st century set by the OECD, educational systems are grappling with many challenges at different levels. As accrediting bodies consistently ask for evidence of the quality of educational programs, aligning learning materials with specific course or program curricula, as well as with broader educational standards and guidelines, becomes imperative. This requirement places an overwhelming burden on educational systems, which must carry out iterative evaluations from diverse perspectives. Because several multidisciplinary stakeholders are involved, conflicts may naturally arise in this intricate evaluation process. To address this complexity, we propose in this paper a collaborative approach to designing a criteria-based framework for evaluating learning materials. The approach allows criteria to be selected flexibly, without a predefined order, and it incorporates an automatic conflict resolution mechanism based on user preferences. Our objective is to streamline the evaluation process, enhance collaboration among stakeholders, and contribute to the overall improvement of educational materials in alignment with contemporary educational standards.

1 INTRODUCTION

Building strong education systems is fundamental to development and growth. Providing access to quality education not only fulfills a basic human right but also serves as a strategic development investment. At the individual level, while a diploma may open doors to employment, it is a person's skills that determine their productivity and ability to adapt to new technologies and opportunities (Rogers and Demas, 2013).

At the strategic level, to deliver quality education, education systems need to promote both schooling and learning by designing and adapting learning materials that anticipate learners' needs and contexts (Gottipati and Shankararaman, 2018).

Textbooks, learning materials, and activities play a significant role in shaping students' learning experience, and their quality can have a direct impact on the effectiveness of instruction (Morgan et al., 2013).

The ongoing evaluation of these materials contributes to the continuous improvement of educational practices and ensures that educational institutions and programs maintain high standards (Gottipati and Shankararaman, 2018). It fosters a culture of reflection and adaptability in response to evolving educational needs, contexts, and standards.

(a) https://orcid.org/0000-0002-0224-6165

(b) https://orcid.org/0000-0002-6874-1388

For the reasons cited above, and because the evaluation of learning activities is a multifaceted process, great effort has been devoted to proposing criteria and frameworks that ensure a systematic, fair, and comprehensive assessment of learning activities, ultimately contributing to the improvement of educational quality and effectiveness (McDonald and McDonald, 1999).

Multidisciplinary practitioners hold several meetings and exchanges to collaboratively design, redesign, and adapt such a multifaceted framework (Grover et al., 2014) (Dewan, 2022). This undertaking can become challenging, even overwhelming, because participants hold different perspectives and conflicting situations may emerge. Thus, although educational systems are interested in improving their programs and willing to embrace the evaluation process, they are sometimes discouraged by the perception that any meaningful assessment will likely require unreasonable amounts of time and effort (Dewan, 2022).

To address this complexity, we propose in this paper a collaborative design of a criteria-based framework to evaluate learning materials, called "EduColl". It relies on a free-order criteria selection process, in which stakeholders freely select their analysis criteria or metrics for competencies and learning activities without being constrained by the metrics selected by the other stakeholders.

BenSassi, M. and Ben Ghezala, H.

EduColl: A Collaborative Design Approach Based on Conflict Resolution for the Assessment of Learning Resources.

DOI: 10.5220/0012690600003693

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 1, pages 470-477

ISBN: 978-989-758-697-2; ISSN: 2184-5026

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Our main objective is to offer an approach, with supporting tools, that enhances the collaborative design of a consensus framework for assessing learning materials. This consensus framework, elaborated from the existing assessment grid and the opinions of experts, is intended to take into account the specificities of the educational system.

This paper is structured as follows. Section 2 gives an overview of related work on learning materials assessment and discusses the challenges of collaborative design. Section 3 presents background knowledge on conflicts in collaborative configuration, feature models, and minimal correction subsets (MCSs). The proposed approach is explained in Section 4. We present an illustrative example and the supporting tool in Section 5 before concluding in Section 6.

2 RELATED WORK

A number of studies and frameworks focus on strategies for analyzing learning materials. For example, Baker (Baker, 2003) developed a framework for the design and evaluation of Internet-based distance learning courses. Morgan et al. (Morgan et al., 2013) proposed a systematic tool for assessing learning materials along various dimensions. Bundsgaard and Hansen (Bundsgaard and Hansen, 2011) introduced a holistic framework to evaluate learning materials and learning design. Leacock and Nesbit (Leacock and Nesbit, 2007) contributed to this domain with the LORI (Learning Object Review Instrument) framework. LORI allows educators to create reviews that include ratings and comments on nine dimensions: content quality, learning goal alignment, feedback and adaptation, motivation, presentation design, interaction usability, accessibility, reusability, and standards compliance. Other research focuses on curriculum assessment, such as (Vivian and Falkner, 2018) and (Grover et al., 2014).

However, these studies, like others in the field, mainly aim to advance criteria, dimensions, and frameworks for assessing learning materials. They do not provide any information about how these frameworks are designed.

On the other hand, Piirainen et al. (Piirainen et al., 2009) assert that collaborative design not only forms the foundation for developing guidelines that achieve better design outcomes but is also an efficient approach for managing complexity in multi-actor systems. However, given the challenging nature of collaborative design, there is a recognized need for models of design processes that facilitate rather than prescribe (Maher, 1990). Bringing together diverse expertise can contribute to the development of a consensus framework, but the collaborative process is not without its challenges, as conflicts may emerge from divergent opinions.

In light of these considerations, this paper proposes an approach that offers a flexible and collaborative design process, empowering stakeholders to freely express their preferences and points of view, as described in the following sections.

3 BACKGROUND

Understanding our approach requires some background on conflicts in collaborative configuration, feature models, and minimal correction subsets (MCSs). These notions are briefly discussed in the following subsections.

3.1 Research Hypothesis and Conflict Definition

Research Hypothesis. Let us consider a scenario where practitioners are tasked with evaluating various learning materials (units and activities in a textbook) according to the learning objectives and competencies outlined in the curriculum. To facilitate this process, practitioners have at their disposal a cartography of criteria, referred to as a configuration, that encompasses a variety of criteria for different scenarios. Practitioners select the configuration of criteria for each unit, ensuring cohesive alignment with the intended educational outcomes.

In this context of collaborative learning materials assessment, where multidisciplinary stakeholders are involved, conflicting situations may emerge and will likely require unreasonable amounts of time and effort to resolve. Managing such situations is therefore important to save human time and effort.

Basically, according to (Mendonca et al., 2007), a conflict situation occurs when two or more characteristics (in our case, the evaluations of criteria) contain explicit or implicit dependencies on each other's decision state. Likewise, Elfaki et al. (Elfaki et al., 2009) point out that a conflict occurs when two or more configuration decisions assigned to different stakeholders cannot be true at the same time. Formally, a conflict can be defined as follows:

Definition 1. For a given configuration of criteria $C_c$ that comprises a set of configuration decisions $\{Cd_i\}$, a subset $C_s \subseteq C_c$ is a conflict if $C_s$ is unsatisfiable and $\forall Cd_i \in C_s$, $C_s \setminus \{Cd_i\}$ is satisfiable. In other words, a conflict is a minimal set of decisions that cannot hold together, that is, a minimal unsatisfiable subset (see Definition 2).

A conflict situation can be categorized in different ways. The following subsection outlines a classification of the different types of conflict.

3.2 Conflict Types

With regard to the classification proposed by (Edded

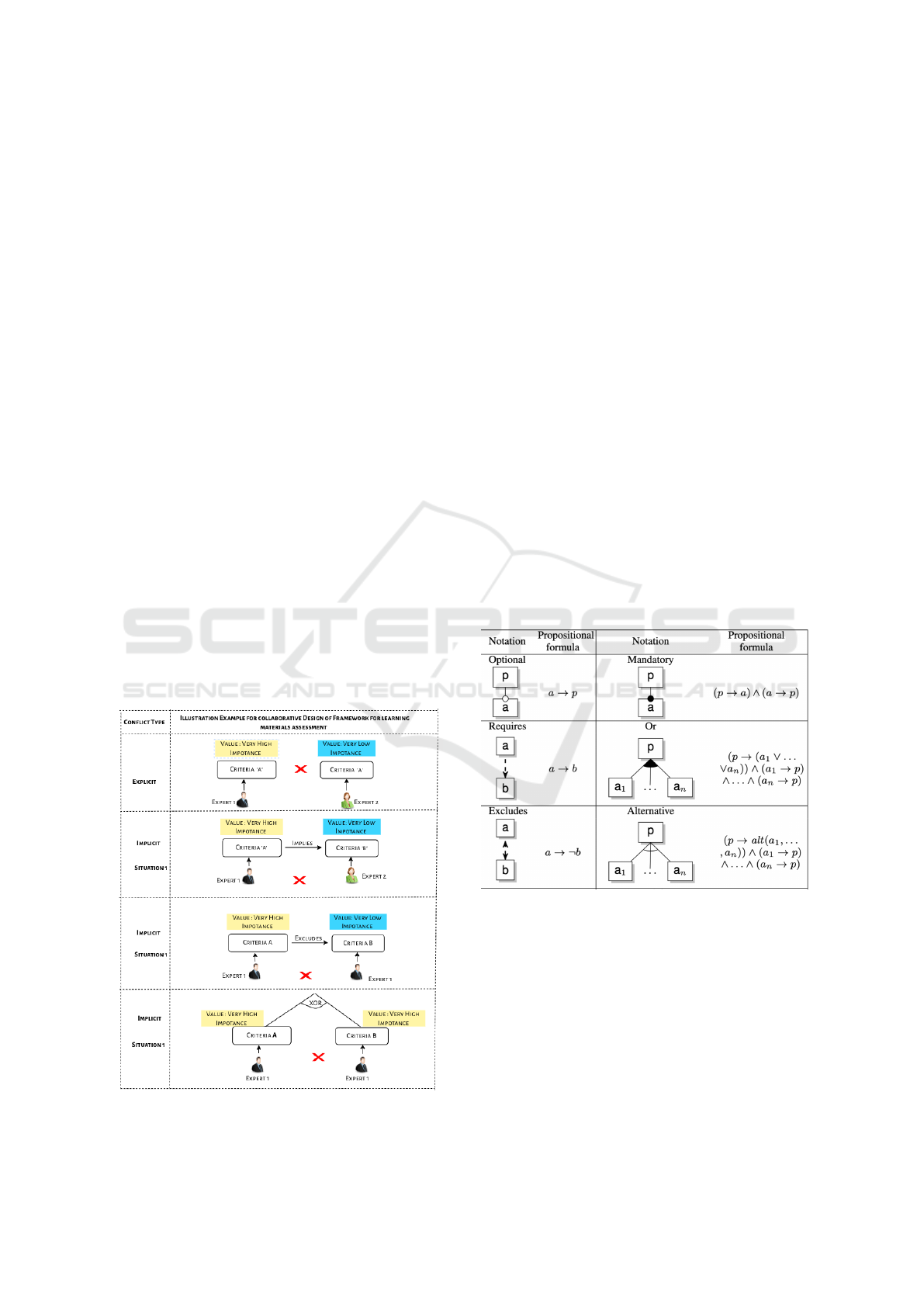

et al., 2020), conflict may be (see Fig.1):

• Explicit: the decisions made by two or more stakeholders about the same criterion are contradictory (for example, a criterion rated "Extremely High Importance" by one stakeholder and "Extremely Low Importance" by another).

• Implicit: the decisions of different experts do not respect the pedagogical constraints. Three situations are distinguished:

– Situation 1: a criterion A selected by expert 1 implies another criterion B that is undesired by expert 2.

– Situation 2: a criterion A excludes a criterion B, and both are rated "Very High Importance" by two experts.

– Situation 3: two or more criteria cannot coexist, and all are rated "Very High Importance" by different experts.

Figure 1: Conflict types, inspired by (Edded et al., 2020).
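The classification above can be made concrete with a small checker. This is a minimal sketch under stated assumptions, not the EduColl implementation: ratings use the seven-level scale of Section 4.2, a criterion counts as "selected" when rated H, VH, or EH and as "undesired" when rated EL, VL, or L (the F / ¬F split of Section 4.2), and the pedagogical constraints are given as hypothetical `requires` and `excludes` pairs. Situation 3 generalizes Situation 2 to groups of mutually incompatible criteria and is omitted for brevity.

```python
# Assumed grouping of the seven-level scale (mirrors F and ¬F in Section 4.2).
UNDESIRED = {"EL", "VL", "L"}
SELECTED = {"H", "VH", "EH"}


def find_conflicts(ratings, requires, excludes):
    """Detect explicit conflicts and implicit conflicts (situations 1 and 2).

    ratings:  {expert: {criterion: level}}
    requires: list of (A, B) pairs meaning "A requires B"
    excludes: list of (A, B) pairs meaning "A excludes B"
    """
    conflicts = []
    criteria = sorted({c for opinions in ratings.values() for c in opinions})

    # Explicit: one expert selects a criterion that another expert rejects.
    for c in criteria:
        pro = sorted(e for e, o in ratings.items() if o.get(c) in SELECTED)
        con = sorted(e for e, o in ratings.items() if o.get(c) in UNDESIRED)
        if pro and con:
            conflicts.append(("explicit", c, pro, con))

    # Ordered pairs of distinct experts.
    pairs = [(e1, o1, e2, o2) for e1, o1 in ratings.items()
             for e2, o2 in ratings.items() if e1 != e2]

    # Implicit, situation 1: A selected by one expert requires B, undesired by another.
    for a, b in requires:
        for e1, o1, e2, o2 in pairs:
            if o1.get(a) in SELECTED and o2.get(b) in UNDESIRED:
                conflicts.append(("implicit-1", (a, b), e1, e2))

    # Implicit, situation 2: A excludes B, yet two experts select A and B respectively.
    for a, b in excludes:
        for e1, o1, e2, o2 in pairs:
            if o1.get(a) in SELECTED and o2.get(b) in SELECTED:
                conflicts.append(("implicit-2", (a, b), e1, e2))
    return conflicts


# Hypothetical ratings by two experts.
ratings = {
    "E1": {"individual work": "VH", "collaborative work": "L"},
    "E2": {"collaborative work": "VH"},
}
conflicts = find_conflicts(ratings, requires=[],
                           excludes=[("individual work", "collaborative work")])
for conflict in conflicts:
    print(conflict)
```

Following the "by two experts" wording, the sketch does not report a single expert who selects both A and B; a stricter tool might.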

The criteria cartography is organized hierarchically and presented visually using the notation of feature models, explained in the following subsection.

3.3 Feature Models in Software Engineering

In software engineering, feature models (Apel et al., 2016) serve as specialized information models that comprehensively depict all possible scenarios in terms of features and their relationships. Specifically, a basic feature model organizes features hierarchically, with parent-child relationships categorized as OR, Alternative (XOR), and AND, where the children of an AND decomposition are either Mandatory or Optional. Fig. 2 illustrates the graphical notation corresponding to these relationship types (Arcaini et al., 2015).

In addition to these parent-child relations, cross-tree constraints can be introduced to express dependencies and incompatibilities between features, notably through expressions such as "A requires B" and "A excludes B" (Arcaini et al., 2015). Feature models have become a de facto standard for representing the commonalities and variability of configurable software systems (Feichtinger et al., 2021).

Figure 2: Conventional translation in propositional formulae (Apel et al., 2016).

In our context, we have chosen feature models, visually represented by feature diagrams, because their simplicity makes them a concise representation of these intricate scenarios.
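The translation of Fig. 2 into propositional formulae can be sketched in a few lines. This assumes the standard semantics used in the feature-model literature: a mandatory child is selected exactly when its parent is; an optional child implies its parent; an OR group is selected exactly when at least one child is; an XOR group needs exactly one child when its parent is selected and none otherwise; "A requires B" is A → B and "A excludes B" is ¬(A ∧ B). The criteria tree and all names are hypothetical, and valid configurations are enumerated by brute force rather than with a solver.

```python
from itertools import product


# Propositional semantics of feature-model relations (each returns a predicate).
def mandatory(parent, child):
    return lambda s: s[child] == s[parent]


def optional(parent, child):
    return lambda s: (not s[child]) or s[parent]


def or_group(parent, children):
    return lambda s: s[parent] == any(s[c] for c in children)


def xor_group(parent, children):
    def holds(s):
        chosen = sum(s[c] for c in children)
        return chosen == 1 if s[parent] else chosen == 0
    return holds


def requires(a, b):
    return lambda s: (not s[a]) or s[b]


def excludes(a, b):
    return lambda s: not (s[a] and s[b])


def valid_configurations(features, root, constraints):
    """Enumerate every selection that includes the root and satisfies all constraints."""
    configs = []
    for bits in product([False, True], repeat=len(features)):
        s = dict(zip(features, bits))
        if s[root] and all(holds(s) for holds in constraints):
            configs.append({f for f in features if s[f]})
    return configs


# Hypothetical fragment of a criteria tree.
features = ["Assessment", "Instructions", "Clarity", "Diversity",
            "Work", "Individual", "Collaborative"]
constraints = [
    mandatory("Assessment", "Instructions"),
    or_group("Instructions", ["Clarity", "Diversity"]),
    optional("Assessment", "Work"),
    xor_group("Work", ["Individual", "Collaborative"]),
    requires("Diversity", "Clarity"),  # hypothetical cross-tree constraint
]
configs = valid_configurations(features, "Assessment", constraints)
print(len(configs))  # 6
```

The OR group alone would allow three instruction choices; the hypothetical cross-tree constraint removes "Diversity without Clarity", leaving two, which combine with three options for the optional XOR group.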

3.4 Minimal Correction Subsets

A Minimal Correction Subset (MCS) is a subset of the constraints of an infeasible constraint system whose removal makes the remaining constraints satisfiable. The term "minimal" means that no proper subset has this corrective property. It is important to note that an infeasible constraint system may have several Minimal Unsatisfiable Subsets (MUSes) and several MCSes. Formally, given an unsatisfiable constraint system C, its MUSes and MCSes are defined as follows, according to (Liffiton and Sakallah, 2008).

Definition 2. A subset $N \subseteq C$ is an MUS if $N$ is unsatisfiable and $\forall C_i \in N$, $N \setminus \{C_i\}$ is satisfiable. We refer to individual clauses as $C_i$, where $i$ is the position of the clause in the formula and each literal $a_{ij}$ is either a positive or a negative instance of some Boolean variable:

$$C_i = \bigvee_{j=1}^{k_i} a_{ij}$$

Definition 3. A subset $F \subseteq C$ is an MCS if $C \setminus F$ is satisfiable and $\forall C_i \in F$, $C \setminus (F \setminus \{C_i\})$ is unsatisfiable.
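Definitions 2 and 3 can be checked mechanically on small formulas. The following is a brute-force sketch for illustration only (exponential, and not the algorithm of Liffiton and Sakallah): clauses are lists of non-zero integers in the DIMACS convention (k for a variable, -k for its negation), satisfiability is decided by enumerating assignments, and MUSes and MCSes are found by testing every subset of clause indices. All function names are hypothetical.

```python
from itertools import combinations, product


def satisfiable(clauses):
    """Brute-force SAT check; a clause is a list of non-zero ints (k or -k)."""
    variables = sorted({abs(lit) for cl in clauses for lit in cl})
    for bits in product([False, True], repeat=len(variables)):
        value = dict(zip(variables, bits))
        if all(any(value[abs(lit)] == (lit > 0) for lit in cl) for cl in clauses):
            return True
    return False


def pick(C, indices):
    return [C[i] for i in indices]


def is_mus(C, N):
    """Definition 2: N is unsatisfiable and dropping any one clause makes it satisfiable."""
    return not satisfiable(pick(C, N)) and all(satisfiable(pick(C, N - {i})) for i in N)


def is_mcs(C, F):
    """Definition 3: C minus F is satisfiable, and putting back any one clause of F is not."""
    rest = set(range(len(C))) - F
    return satisfiable(pick(C, rest)) and all(not satisfiable(pick(C, rest | {i})) for i in F)


def subsets(n):
    """All subsets of {0, ..., n-1}, by increasing size."""
    for k in range(n + 1):
        for combo in combinations(range(n), k):
            yield set(combo)


# Clauses x1, ¬x1, ¬x1 ∨ x2, ¬x2 (indices 0..3).
C = [[1], [-1], [-1, 2], [-2]]
muses = [N for N in subsets(len(C)) if is_mus(C, N)]
mcses = [F for F in subsets(len(C)) if is_mcs(C, F)]
print(muses)  # [{0, 1}, {0, 2, 3}]
print(mcses)  # [{0}, {1, 2}, {1, 3}]
```

Every MCS in this output intersects every MUS; this hitting-set duality between MUSes and MCSes is what (Liffiton and Sakallah, 2008) exploit to derive MUSes from MCSes.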

Much research on computing MCSs has been carried out in the fields of Boolean satisfiability (SAT) and constraint satisfaction problems. The objective is to identify minimal sets of clauses whose elimination transforms a given unsatisfiable Conjunctive Normal Form (CNF) formula into a satisfiable one. The underlying idea is to make iterative calls to a standard SAT solver to check the satisfiability of different sub-formulas. Generally, these algorithms handle a triplet {S, U, C} of the formula F, where S is a satisfiable sub-formula, C contains clauses that are inconsistent with S, and U contains the remaining clauses of F.
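One concrete instance of this scheme is the basic linear search for a single MCS discussed by Marques-Silva et al. (2013): S starts empty, each clause of U is tested in turn, and a clause joins C whenever adding it to S would make S unsatisfiable. The sketch below follows that scheme with a brute-force check standing in for the SAT solver; the DIMACS-style integer encoding of clauses and the function names are assumptions for illustration.

```python
from collections import deque
from itertools import product


def satisfiable(clauses):
    """Stand-in for a SAT solver call: brute-force search over all assignments."""
    variables = sorted({abs(lit) for cl in clauses for lit in cl})
    for bits in product([False, True], repeat=len(variables)):
        value = dict(zip(variables, bits))
        if all(any(value[abs(lit)] == (lit > 0) for lit in cl) for cl in clauses):
            return True
    return False


def linear_search_mcs(formula):
    """Return one MCS of the formula as a list of clause indices."""
    S, C = [], []                    # satisfiable part, correction set
    U = deque(range(len(formula)))   # clauses not yet examined
    while U:
        i = U.popleft()
        if satisfiable([formula[j] for j in S] + [formula[i]]):
            S.append(i)              # consistent with S: keep the clause
        else:
            C.append(i)              # conflicts with S: the clause must be removed
    return C


print(linear_search_mcs([[1], [-1], [-1, 2], [-2]]))  # [1, 3]
print(linear_search_mcs([[-2], [-1, 2], [-1], [1]]))  # [3]
```

The result depends on the order in which U is traversed: the two calls list the same four clauses in different orders and obtain the MCSs {¬x1, ¬x2} and {x1}, respectively. Because satisfiability is monotone, every clause moved to C still conflicts with the final S, which is why C is minimal.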

MaxSAT consists of finding an assignment that satisfies the maximum number of clauses of an unsatisfiable formula; as presented in (Liffiton and Sakallah, 2008), it stands as the most widely adopted basis for computing MCSs (Marques-Silva et al., 2013). Finding MCSs is therefore closely tied to the MaxSAT (or MaxCSP) problem: the clauses left unsatisfied by an optimal MaxSAT assignment form an MCS of minimum cardinality, since any smaller correction set would yield an assignment that satisfies more clauses. In this paper, we adopt the approach outlined by (Liffiton and Sakallah, 2008) to compute MCSs in the conflict resolution process within the collaborative design framework.
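The MaxSAT connection can be checked on a small formula: an assignment that falsifies as few clauses as possible leaves a set of falsified clauses that is a minimum-cardinality MCS. The exhaustive search below only illustrates this relationship; a practical implementation would call a MaxSAT solver, and the function name is hypothetical.

```python
from itertools import product


def max_sat_min_mcs(formula):
    """Return (best assignment, indices of the clauses it falsifies) by exhaustive search."""
    variables = sorted({abs(lit) for cl in formula for lit in cl})
    best, best_falsified = None, None
    for bits in product([False, True], repeat=len(variables)):
        value = dict(zip(variables, bits))
        falsified = [i for i, cl in enumerate(formula)
                     if not any(value[abs(lit)] == (lit > 0) for lit in cl)]
        if best_falsified is None or len(falsified) < len(best_falsified):
            best, best_falsified = value, falsified
    return best, best_falsified


# Clauses x1, ¬x1, ¬x1 ∨ x2, ¬x2: at least one clause must be falsified.
formula = [[1], [-1], [-1, 2], [-2]]
assignment, mcs = max_sat_min_mcs(formula)
print(assignment, mcs)  # {1: False, 2: False} [0]
```

Removing clause 0 (x1) is the smallest correction for this formula; the other MCSs of the same formula have two clauses and are therefore not MaxSAT optima.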

4 PROPOSED APPROACH

To deal with the issues of the collaborative design

framework, we propose a new approach where stake-

holders freely express their conception decisions and

conflicts are resolved based on their preferences and

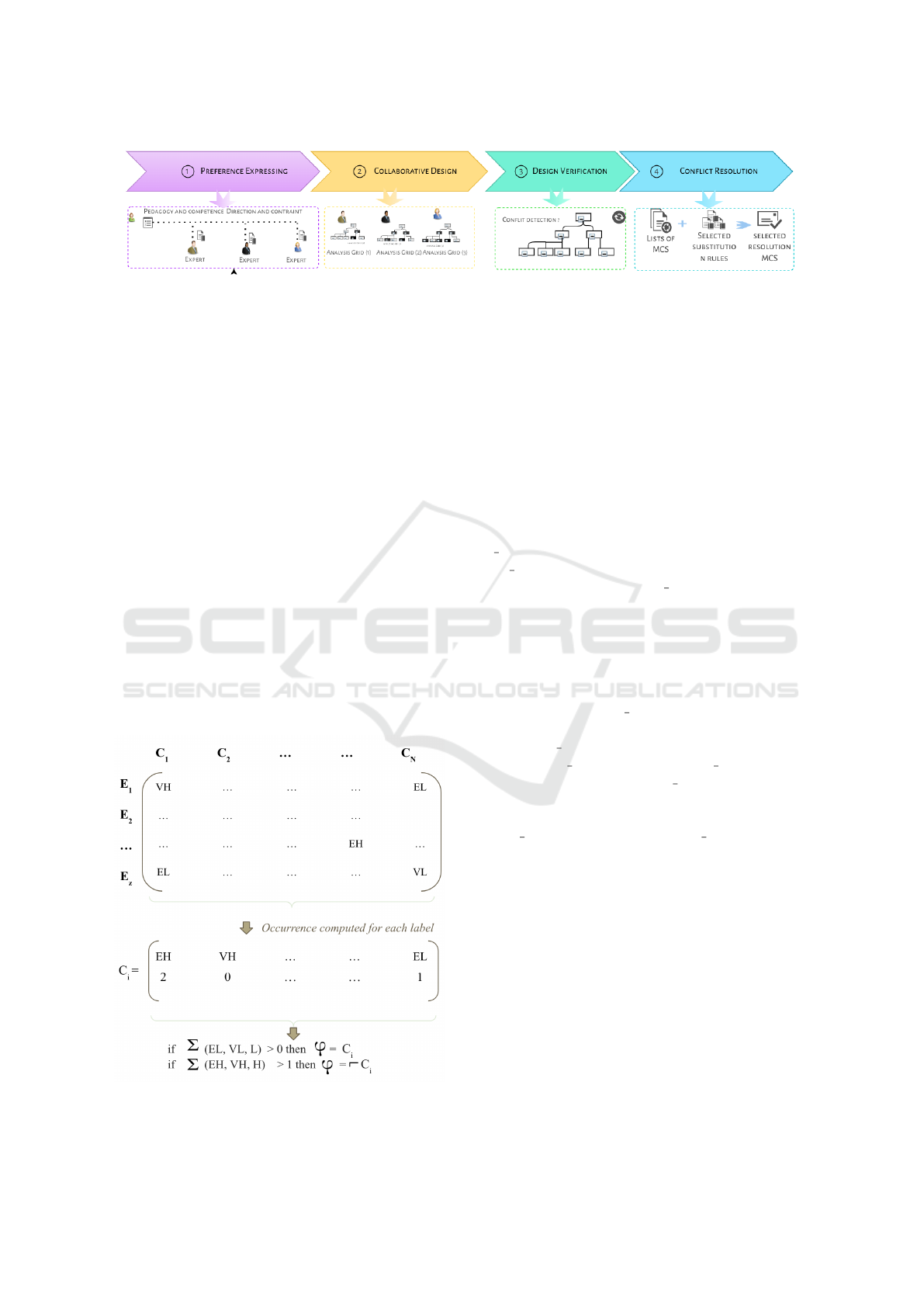

opinions. A summary of the proposed approach is

depicted in Fig.3 . The approach encompasses four

main steps: (1) the preference expression, (2) collab-

orative design, (3) design verification, and (4) conflict

resolution based on MUC and utility function.

4.1 Preference Expression

In our approach, the experts' preferences represent a recovery plan that allows collaborators to state what should happen if one or more of their configuration decisions cannot be retained because of a conflict.

Each preference corresponds to the removal of a specific MCS, as illustrated in the example (see Fig. 1).

In the context of the proposed approach, an MCS is a set of selected criteria of the framework for assessing the quality of learning materials whose removal makes the current framework satisfiable.

The proposed list, which could be enriched later, is composed of two preferences:

• Pref1. The clause selected by the most collaborators.

• Pref2. The decision made by the referent.

The referent is a collaborator chosen during the first step of the proposed approach. For each expert $E_p$ (where $E_p \in E = \{E_1, \dots, E_z\}$), a reference index is computed based on N features specified by the moderator. For each of these features, a scoring scale has been established to quantify its individual contribution to the overall index.

The referent is the expert with the highest reference index, and under Pref2 the alternative selected by the referent is retained.
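A minimal sketch of referent selection follows. The text does not specify how the feature scores are aggregated, so this assumes a plain sum; the feature names and scores are hypothetical and were chosen only so that the resulting indices match the values of Table 1 (2, 3, and 4.5).

```python
def reference_index(scores):
    """Assumed aggregation: the sum of the scores an expert obtains on each feature."""
    return sum(scores.values())


def select_referent(experts):
    """Return the expert with the highest reference index (Pref2 follows this expert)."""
    return max(experts, key=lambda e: reference_index(experts[e]))


# Hypothetical moderator-defined features and per-expert scores.
experts = {
    "E1": {"teaching_experience": 1, "domain_expertise": 1},
    "E2": {"teaching_experience": 2, "domain_expertise": 1},
    "E3": {"teaching_experience": 2, "domain_expertise": 2.5},
}
print({e: reference_index(s) for e, s in experts.items()})  # {'E1': 2, 'E2': 3, 'E3': 4.5}
print(select_referent(experts))  # E3
```

If two experts tie for the highest index, `max` returns the first one in insertion order; a real tool would need an explicit tie-breaking rule.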

In case of conflict, the selected preferences are applied to the list of computed MCSs to identify the resolution MCS, that is, the MCS that satisfies all of these preferences.

This approach systematically resolves conflicts by providing a structured and quantitative basis for decision-making, which can contribute to consensus building and to a better understanding of the collective decision.

4.2 Collaborative Design

During the collaborative design step, the different stakeholders freely express their preferences and select a set of criteria composing an assessment scenario for given learning materials, without being constrained by the scenarios chosen by the other collaborators.

In the context of the proposed approach, experts express their opinion about each criterion using the following scale:

1. Extremely Low importance (EL)

2. Very Low importance (VL)

3. Low importance (L)

4. Medium importance (M)

5. High importance (H)

6. Very High importance (VH)

7. Extremely High importance (EH)

Figure 3: Overview of the proposed approach.

A conflict situation may occur when a given criterion is labeled (EL, VL, or L) by some experts and (H, VH, or EH) by others, as illustrated in Fig. 1. To streamline the identification of these conflicts, we formally classify experts' opinions into two distinct sets: F = {EL, VL, L} and ¬F = {H, VH, EH}; the neutral level M belongs to neither set.

4.3 Design Verification

During the verification step, all the proposals are merged: the number of occurrences of each opinion is computed, as illustrated in Fig. 4, and a binary vector is constructed and checked against the list of conflict types described in Section 3.2.
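The merge step can be sketched as follows. The text does not spell out the vector layout, so this assumes one position per level of the seven-point scale, ordered from EL to EH, with the binary vector marking which levels were chosen at least once; an explicit conflict then shows up as a 1 among the F positions together with a 1 among the ¬F positions. The ratings and function names are hypothetical.

```python
LEVELS = ["EL", "VL", "L", "M", "H", "VH", "EH"]  # assumed vector order


def occurrence_vector(opinions):
    """Count how many experts chose each level for one criterion."""
    return [opinions.count(level) for level in LEVELS]


def binary_vector(counts):
    """Mark the levels chosen at least once."""
    return [1 if c > 0 else 0 for c in counts]


def has_explicit_conflict(bits):
    # Positions 0-2 are F = {EL, VL, L}; positions 4-6 are ¬F = {H, VH, EH}.
    return any(bits[0:3]) and any(bits[4:7])


opinions = ["VH", "VH", "L"]  # hypothetical ratings of one criterion by three experts
counts = occurrence_vector(opinions)
bits = binary_vector(counts)
print(counts, bits, has_explicit_conflict(bits))
# [0, 0, 1, 0, 0, 2, 0] [0, 0, 1, 0, 0, 1, 0] True
```

Position 3 (M) is ignored by the conflict test, so a criterion rated only M, H, or EH is not flagged.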

Figure 4: Computing and conversion process of experts' opinions to a CNF problem.

The proposal is formulated as a CNF formula in which each decision is represented as a single clause. When a conflict is detected, the MaxSAT-based algorithm of (Liffiton and Sakallah, 2008) is used to compute the list of all possible MCSs for the unsatisfiable configuration. Taking into account the set of preferences selected by the collaborators, the MCS that best meets these preferences is chosen to resolve the detected conflict(s).
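The encoding step can be sketched as follows, under assumptions the text leaves open: after merging, each criterion carries one decision (selected, i.e. in ¬F, or rejected, i.e. in F) that becomes a unit clause, and each "requires" or "excludes" dependency of the feature model becomes a hard two-literal clause. Criterion names and helper functions are hypothetical, and a brute-force check replaces the SAT solver.

```python
from itertools import product


def to_cnf(decisions, requires, excludes):
    """Encode merged decisions and feature-model constraints as integer CNF.

    decisions: {criterion: True if selected (¬F), False if rejected (F)}
    Returns (variable map, soft unit clauses, hard constraint clauses).
    """
    names = sorted(set(decisions) | {c for pair in requires + excludes for c in pair})
    var = {name: i + 1 for i, name in enumerate(names)}
    soft = [[var[c] if selected else -var[c]] for c, selected in decisions.items()]
    hard = [[-var[a], var[b]] for a, b in requires]     # A -> B
    hard += [[-var[a], -var[b]] for a, b in excludes]   # not (A and B)
    return var, soft, hard


def satisfiable(clauses):
    variables = sorted({abs(lit) for cl in clauses for lit in cl})
    for bits in product([False, True], repeat=len(variables)):
        value = dict(zip(variables, bits))
        if all(any(value[abs(lit)] == (lit > 0) for lit in cl) for cl in clauses):
            return True
    return False


# Hypothetical merged decisions: A and B selected, C rejected.
decisions = {"A": True, "B": True, "C": False}
var, soft, hard = to_cnf(decisions, requires=[("B", "C")], excludes=[("A", "B")])
print(soft, hard, satisfiable(soft + hard))
# [[1], [2], [-3]] [[-2, 3], [-1, -2]] False
```

Here the unit clauses for A and B clash with "A excludes B", and the clause for B clashes with "B requires C" because C is rejected, so the formula is unsatisfiable and MCS computation would be triggered.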

4.4 Conflict Resolution

The inputs of Algorithm 1 are the list of preferences selected by the stakeholders (S_preferences) and the list of computed MCSs (List_MCS). As output, the algorithm delivers the conflict resolution MCS (Result_MCS) according to the preferences selected by the collaborators.

In the first step, the algorithm selects the MCSs that eliminate the fewest metrics, so that the retained configuration encompasses the maximum number of metrics (MaxFeatures). This first step may return no MCS, a single MCS, or several MCSs, which are stored in Result_list. If Size(Result_list) = 1, this MCS is returned as the solution (Result_MCS). If Size(Result_list) > 1, the function GetPreference(S_preferences) returns the preference most selected by the collaborators (MPref). This preference is then applied by Apply(MPref, Result_list) to select the MCS (Result_MCS) that best respects the preferences of the different collaborators.

We provide an illustrative example in the following section.

We provide an illustrative example in the follow-

ing section.

5 ILLUSTRATIVE EXAMPLE

In the previous section, we introduced our collabora-

tive design framework for evaluating learning materi-

als. In this section, we describe a simple example that

illustrates the proposed approach, namely how con-

flicts can be resolved during the design process using

our algorithm. Firstly, we introduce the adopted car-

tography of criteria. Secondly, we showcase an illus-

trative example using the developed supporting tool.


Data: S_preferences: list of preferences selected by the stakeholders;
List_MCS: list of computed MCSs.
Result: Result_MCS: the conflict resolution MCS.
Result_list ← MaxFeatures(List_MCS);
if Size(Result_list) = 1 then
    Result_MCS ← the single MCS in Result_list;
else
    MPref ← GetPreference(S_preferences);
    Result_MCS ← Apply(MPref, Result_list);
end
return Result_MCS;

Algorithm 1: Algorithm of Conflict Resolution based on Experts' preferences.
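Algorithm 1 can be turned into runnable code once MaxFeatures, GetPreference, and Apply are given concrete meanings, which the text only describes informally. This sketch assumes that MaxFeatures keeps the smallest MCSs, that GetPreference returns the preference chosen by most stakeholders, and that Apply interprets Pref1 as "remove the MCS whose clauses were selected by the fewest collaborators" and Pref2 as "remove the MCS that contradicts the referent least". These rules, the vote counts, and the function names are hypothetical. The input MCSs are the four that the constraints of Table 2 admit, and the preferences are those of Table 1.

```python
from collections import Counter


def max_features(list_mcs):
    """Keep the MCSs that remove the fewest criteria (ties are all kept)."""
    if not list_mcs:
        return []
    fewest = min(len(m) for m in list_mcs)
    return [m for m in list_mcs if len(m) == fewest]


def get_preference(s_preferences):
    """Return the preference selected by the largest number of stakeholders."""
    return Counter(s_preferences).most_common(1)[0][0]


def apply_preference(pref, result_list, votes, referent_choices):
    """Hypothetical tie-breaking rules for Pref1 and Pref2."""
    if pref == "Pref1":  # keep the most selected clauses
        return min(result_list, key=lambda m: sum(votes.get(c, 0) for c in m))
    if pref == "Pref2":  # keep the referent's decisions
        return min(result_list, key=lambda m: len(m & referent_choices))
    raise ValueError(f"unknown preference: {pref}")


def resolve_conflict(s_preferences, list_mcs, votes, referent_choices):
    result_list = max_features(list_mcs)
    if not result_list:
        return None  # no MCS supplied: nothing to resolve
    if len(result_list) == 1:
        return result_list[0]
    mpref = get_preference(s_preferences)
    return apply_preference(mpref, result_list, votes, referent_choices)


list_mcs = [{"C1", "C5"}, {"C1", "C6"}, {"C2", "C4", "C5"}, {"C2", "C4", "C6"}]
s_preferences = ["Pref1", "Pref2", "Pref1"]  # E1, E2, E3 in Table 1
votes = {"C1": 1, "C5": 2, "C6": 1}          # hypothetical selection counts
result_mcs = resolve_conflict(s_preferences, list_mcs, votes, referent_choices=set())
print(sorted(result_mcs))  # ['C1', 'C6']
```

Removing {C1, C6} keeps C5, which matches the outcome reported in Section 5; with other vote counts the same code would keep C6 instead, so the result depends entirely on the assumed inputs.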

5.1 Learning Design Materials: Direction and Constraint of Pedagogy and Competence

The adopted framework for evaluating the quality of the designed learning materials is inspired by (Ferrell, 1992). Some common criteria are:

• Instructions of the Given Learning Material.

– Clarity of Instruction. Learning materials should be written in clear and accessible language, facilitating student comprehension.

– Diversity of Instruction. Learning materials should include effective pedagogical features, such as exercises, examples, and activities, to reinforce learning.

• Responsiveness to Learning Needs. The learning materials should align closely with the learning needs, the curriculum, and the learning objectives of a particular course or program, and, more generally, with educational standards and guidelines.

• Flexibility. Learning materials should cater to the needs of diverse learners, including those with different learning styles and abilities. Evaluation helps ensure that the textbook is inclusive and can be used effectively by a broad range of students.

In competency-based learning, the assessment of a learning activity is typically centred on a specific competence. In this paper, we take scientific reasoning competencies as an illustrative example. Scientific reasoning, considered an advanced skill, can be composed of complex combinations of practices, rudimentary skills, and intermediate competencies. It encompasses various types of thinking, such as computational, mathematical, engineering, design, and systems thinking, needed to enhance citizens' real-life experience. These experiences may involve activities such as critiquing a situation, solving problems, or proposing feasible solutions.

Problem-solving, a key facet of scientific reason-

ing, can manifest in both individual and collabora-

tive settings. Drawing upon this overarching frame-

work and leverage an existing criteria framework, we

extrapolate a non-exhaustive configuration model for

evaluating the quality of a learning activity.

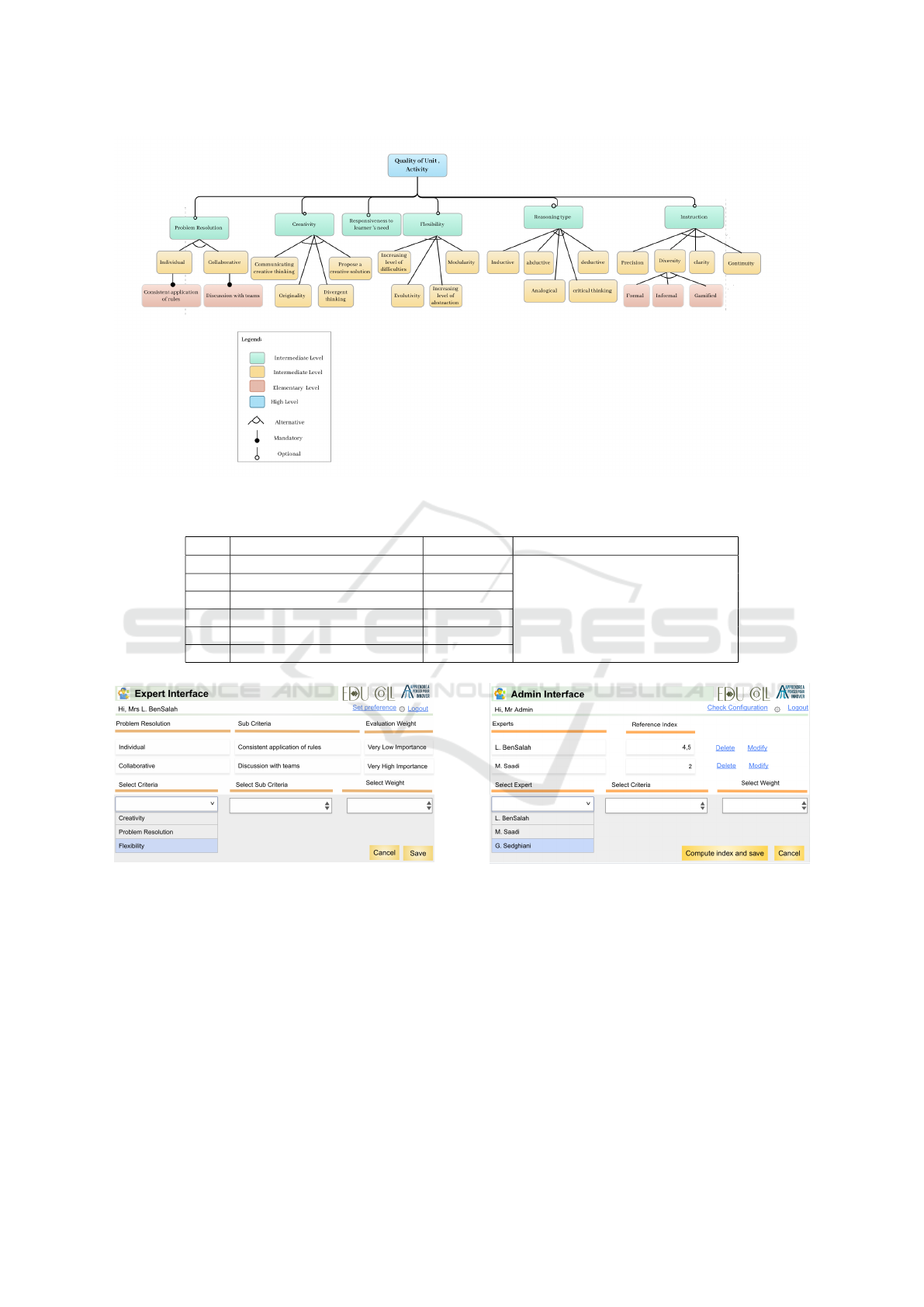

Fig.5 offers a snapshot of this cartography, offer-

ing a visual insight into the derived model as repre-

sented by feature diagrams.

5.2 Collaborative Design Using the Supporting Tool "EduColl"

Considering that three collaborators share this cartography of the assessment framework model, a popularity order is computed and assigned to the different stakeholders. Afterwards, each collaborator tags the framework criteria according to the learning activity to be assessed. Table 1 summarizes the preference and the reference index of each collaborator.

Table 1: Preferences of each collaborator.

| Expert | Reference index | Preference |
|---|---|---|
| E1 | 2 | Pref.1 |
| E2 | 3 | Pref.2 |
| E3 | 4.5 | Pref.1 |

The overall design encompasses all the labels assigned to the different criteria by the different stakeholders. Subsequently, the overall consistency of the design is checked against the dependencies of the feature model, listed in the third column of Table 2.

After verification of the obtained configuration, three conflicting situations are detected. The first conflict arises because the 'Discussion with teams' criterion in the 'problem resolution' category is labeled VH, which conflicts with the exclusionary label of 'individual work', marked as EH. The second conflict emerges because 'individual work' is labeled VH while 'collaborative work' is also marked VH. A third conflict materializes when both 'Divergent creativity' and 'analogical reasoning' are concurrently selected with the VH label.

Figure 5: Criteria framework modelled according to feature models.

Table 2: Clauses, constraints, and violated constraints of the example configuration.

| Ref. | Clauses | Constraints | Violated Constraints |
|---|---|---|---|
| C1 | Individual is VH | C1 → ¬C4 | ϕ = C1 ∧ C2 ∧ C3 ∧ C4 ∧ C5 ∧ C6 |
| C2 | Discussion with teams is VH | C1 → ¬C2 | |
| C3 | Critical thinking is EH | C2 → C3 | |
| C4 | Collaborative is VH | C4 → ¬C1 | |
| C5 | Divergent creativity is VH | C5 → ¬C6 | |
| C6 | Analogical reasoning is VH | | |

Figure 6: The expert interface.

Figure 7: The administrator interface.

To address these conflicts, Minimal Correction Subsets (MCSs) are calculated. In its first phase, our algorithm eliminates C1, which excludes both C2 and C4, so removing it resolves the first two conflicts at once. The algorithm then faces a choice between C5 and C6 and opts for the one that aligns with the most frequently chosen preference. In this scenario, stakeholders expressed a greater preference for Pref.1 (selected by E1 and E3). Based on the vectors C5 = (1, 1, 0, 0, 0, 1, 0) and C6 = (1, 0, 0, 0, 1, 1, 0), priority is assigned to C5.
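The first phase of this example can be reproduced from Table 2. The sketch below assumes that each expert decision Ck is a soft unit clause on variable k, that the constraints of the third column are hard clauses (C4 → ¬C1 is the same clause as C1 → ¬C4), and that MCSs range over soft clauses only. It enumerates MCSs by brute force rather than with the MaxSAT-based algorithm of (Liffiton and Sakallah, 2008), then keeps the smallest ones as MaxFeatures does.

```python
from itertools import combinations, product

SOFT = {f"C{k}": k for k in range(1, 7)}  # decision Ck is the unit clause [k]
HARD = [
    [-1, -4],  # C1 -> not C4 (also covers C4 -> not C1)
    [-1, -2],  # C1 -> not C2
    [-2, 3],   # C2 -> C3
    [-5, -6],  # C5 -> not C6
]


def satisfiable(clauses):
    """Brute-force SAT check over the six variables of the example."""
    for bits in product([False, True], repeat=6):
        value = dict(zip(range(1, 7), bits))
        if all(any(value[abs(lit)] == (lit > 0) for lit in cl) for cl in clauses):
            return True
    return False


def all_mcses():
    """Enumerate minimal sets of decisions whose removal restores consistency."""
    names = list(SOFT)
    found = []
    for k in range(len(names) + 1):
        for removed in map(set, combinations(names, k)):
            kept = [[SOFT[n]] for n in names if n not in removed]
            # Increasing size plus the superset test guarantees minimality.
            if satisfiable(HARD + kept) and not any(m <= removed for m in found):
                found.append(removed)
    return found


mcses = all_mcses()
fewest = min(len(m) for m in mcses)
max_features = [m for m in mcses if len(m) == fewest]
print([sorted(m) for m in mcses])
print([sorted(m) for m in max_features])
```

The two smallest MCSs, {C1, C5} and {C1, C6}, both remove C1, which matches the text: eliminating C1 resolves the conflicts involving C2 and C4, and the tie between C5 and C6 is left to the preference step.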

To assess the viability of the proposed approach, we implemented a tool named EduColl, based on a microservice web application architecture. EduColl provides several user interfaces to cater to different needs. The first interface is tailored to collaborators, allowing them to select preferences and express opinions about the various criteria, as illustrated in Fig. 6. The second interface is designed for the administrator, who manages the participating stakeholders by assigning reference indices and verifies the consistency of the overall configuration, as depicted in Fig. 7. The administrator is entrusted with overseeing conflict resolution and ensuring that the final validated configuration is delivered to stakeholders.


6 CONCLUSION AND FUTURE DIRECTIONS

The evaluation of learning materials used in learning situations provides valuable feedback to different stakeholders: practitioners, publishers, and educators. This feedback loop supports the iterative improvement of these materials, allowing for updates and revisions based on the evolving needs of learners and changes in the educational landscape.

In the future, several controlled experiments will need to be conducted to assess the usefulness of this work.

REFERENCES

Apel, S., Batory, D., Kästner, C., and Saake, G. (2016). Feature-oriented software product lines. Springer.

Arcaini, P., Gargantini, A., and Vavassori, P. (2015). Generating tests for detecting faults in feature models. In 2015 IEEE 8th International Conference on Software Testing, Verification and Validation (ICST), pages 1–10. IEEE.

Baker, R. K. (2003). A framework for design and evaluation of internet-based distance learning courses: Phase one–framework justification design and evaluation. Online Journal of Distance Learning Administration, 6(2):43–51.

Bundsgaard, J. and Hansen, T. I. (2011). Evaluation of learning materials: A holistic framework. Journal of learning design, 4(4):31–44.

Dewan, H. (2022). Reforms in curriculum and textbooks: Challenges and possibilities. Learning without Burden, pages 83–103.

Edded, S., Sassi, S. B., Mazo, R., Salinesi, C., and Ghezala, H. B. (2020). Preference-based conflict resolution for collaborative configuration of product lines. In International Conference on Evaluation of Novel Approaches to Software Engineering.

Elfaki, A. O., Phon-Amnuaisuk, S., and Ho, C. K. (2009). Investigating inconsistency detection as a validation operation in software product line. Software Engineering Research, Management and Applications 2009, pages 159–168.

Feichtinger, K., Hinterreiter, D., Linsbauer, L., Prähofer, H., and Grünbacher, P. (2021). Guiding feature model evolution by lifting code-level dependencies. Journal of Computer Languages, 63:101034.

Ferrell, B. G. (1992). Lesson plan analysis as a program evaluation tool. Gifted Child Quarterly, 36(1):23–26.

Gottipati, S. and Shankararaman, V. (2018). Competency analytics tool: Analyzing curriculum using course competencies. Education and Information Technologies, 23:41–60.

Grover, S., Pea, R., and Cooper, S. (2014). Promoting active learning & leveraging dashboards for curriculum assessment in an openedx introductory cs course for middle school. In Proceedings of the first ACM conference on Learning@ scale conference, pages 205–206.

Leacock, T. L. and Nesbit, J. C. (2007). A framework for evaluating the quality of multimedia learning resources. Journal of Educational Technology & Society, 10(2):44–59.

Liffiton, M. H. and Sakallah, K. A. (2008). Algorithms for computing minimal unsatisfiable subsets of constraints. Journal of Automated Reasoning, 40:1–33.

Maher, M. L. (1990). Process models for design synthesis. AI magazine, 11(4):49–49.

Marques-Silva, J., Heras, F., Janota, M., Previti, A., and Belov, A. (2013). On computing minimal correction subsets. In Twenty-Third International Joint Conference on Artificial Intelligence.

McDonald, M. and McDonald, G. (1999). Computer science curriculum assessment. Acm Sigcse Bulletin, 31(1):194–197.

Mendonca, M., Cowan, D., and Oliveira, T. (2007). A process-centric approach for coordinating product configuration decisions. In 2007 40th Annual Hawaii International Conference on System Sciences (HICSS'07), pages 283a–283a. IEEE.

Morgan, K. E., Henning, E., et al. (2013). Designing a tool for history textbook analysis. In Forum Qualitative Sozialforschung/Forum: Qualitative Social Research, volume 14.

Piirainen, K., Kolfschoten, G., and Lukosch, S. (2009). Unraveling challenges in collaborative design: a literature study. In Groupware: Design, Implementation, and Use: 15th International Workshop, CRIWG 2009, Peso da Régua, Douro, Portugal, September 13-17, 2009. Proceedings 15, pages 247–261. Springer.

Rogers, H. and Demas, A. (2013). The what, why, and how of the systems approach for better education results (saber). Technical report, The World Bank.

Vivian, R. and Falkner, K. (2018). A survey of australian teachers' self-efficacy and assessment approaches for the k-12 digital technologies curriculum. In Proceedings of the 13th Workshop in Primary and Secondary Computing Education, pages 1–10.
